- 1、API 概述:第一个端到端示例

- jy: 参考 “(1)—>(a)” 以上的函数定义

- Insert activity regularization as a layer

- The displayed loss will be much higher than before

- due to the regularization component.

- First, let’s create a training Dataset instance.

- For the sake of our example, we’ll use the same MNIST data as before.

- Shuffle and slice the dataset.

- Now we get a test dataset.

- Since the dataset already takes care of batching,

- we don’t pass a

batch_sizeargument. - You can also evaluate or predict on a dataset.

- Prepare the training dataset

- Only use the 100 batches per epoch (that’s 64 * 100 samples)

- Prepare the training dataset

- Prepare the validation dataset

- Prepare the training dataset

- Prepare the validation dataset

- Here,

filenamesis list of path to the images - and

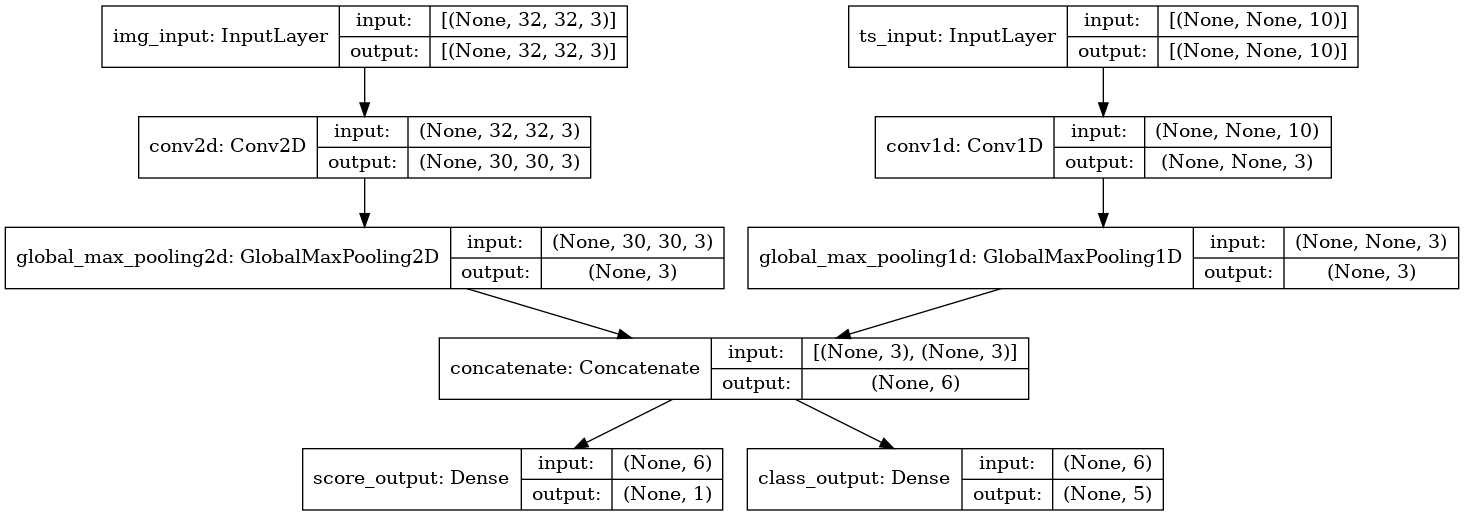

labelsare the associated labels. - (6)将数据传递到多输入、多输出模型

- jy: 绘制这个模型,以便清楚地看到在这里执行的操作(请注意,图中显示的形状是批次形状,而

- 不是每个样本的形状)

- List loss version

- Or dict loss version

- (8)为模型设置检查点

- (9)使用学习率时间表

- 本指南涵盖使用内置 API 进行训练和验证时的训练、评估和预测(推断)模型(例如

Model.fit()、Model.evaluate()和Model.predict())。 一般而言,无论您使用内置循环还是编写自己的循环,模型训练和评估都会在每种 Keras 模型(序贯模型、使用函数式 API 构建的模型以及通过模型子类化从头编写的模型)中严格按照相同的方式工作。

1、API 概述:第一个端到端示例

将数据传递到模型的内置训练循环时,应当使用 NumPy 数组(如果数据很小且适合装入内存)或

**tf.data Dataset**对象。- 以下将 MNIST 数据集用作 NumPy 数组,以演示如何使用优化器、损失和指标。

- 考虑以下模型(使用函数式 API 构建):

import tensorflow as tffrom tensorflow import kerasfrom tensorflow.keras import layersinputs = keras.Input(shape=(784,), name="digits")x = layers.Dense(64, activation="relu", name="dense_1")(inputs)x = layers.Dense(64, activation="relu", name="dense_2")(x)outputs = layers.Dense(10, activation="softmax", name="predictions")(x)model = keras.Model(inputs=inputs, outputs=outputs)(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()# Preprocess the data (these are NumPy arrays)x_train = x_train.reshape(60000, 784).astype("float32") / 255x_test = x_test.reshape(10000, 784).astype("float32") / 255y_train = y_train.astype("float32")y_test = y_test.astype("float32")# Reserve 10,000 samples for validationx_val = x_train[-10000:]y_val = y_train[-10000:]x_train = x_train[:-10000]y_train = y_train[:-10000]# jy: 通过 compile() 方法指定训练配置(优化器、损失、指标);# 使用 fit() 训练模型前需要指定损失函数、优化器以及一些要监视的指标(可选)model.compile(# Optimizeroptimizer=keras.optimizers.RMSprop(),# Loss function to minimizeloss=keras.losses.SparseCategoricalCrossentropy(),# jy: metrics 参数应当为列表(模型可以具有任意数量的指标)# List of metrics to monitormetrics=[keras.metrics.SparseCategoricalAccuracy()],)print("\n" + "=" * 88 + "\n")print("Fit model on training data")history = model.fit(x_train,y_train,batch_size=64,epochs=2,# We pass some validation for# monitoring validation loss and metrics# at the end of each epochvalidation_data=(x_val, y_val),)'''Fit model on training data2022-04-23 16:15:23.210193: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 156800000 exceeds 10% of free system memory.2022-04-23 16:15:23.359227: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:176] None of the MLIR Optimization Passes are enabled (registered 2)2022-04-23 16:15:23.359735: I tensorflow/core/platform/profile_utils/cpu_utils.cc:114] CPU Frequency: 2394445000 HzEpoch 1/2782/782 [==============================] - 2s 2ms/step - loss: 0.3498 - sparse_categorical_accuracy: 0.8998 - val_loss: 0.2226 - val_sparse_categorical_accuracy: 0.9328Epoch 2/2782/782 [==============================] - 1s 2ms/step - loss: 0.1642 - sparse_categorical_accuracy: 0.9507 - val_loss: 0.1311 - val_sparse_categorical_accuracy: 0.9620'''print("\n" + "=" * 88 + "\n")# jy: 返回的 history 对象保存训练期间的损失值和指标值记录print("show history.history ")print(history.history)'''show history.history{'loss': [0.34975022077560425, 0.1641906052827835],'sparse_categorical_accuracy': [0.8998000025749207, 0.9507200121879578],'val_loss': [0.2226019948720932, 0.1311493068933487],'val_sparse_categorical_accuracy': [0.9327999949455261, 0.9620000123977661]}'''print("\n" + "=" * 88 + "\n")# jy: 通过 evaluate() 在测试数据上评估模型# Evaluate the model on the test data using `evaluate`print("Evaluate on test data")results = model.evaluate(x_test, y_test, batch_size=128)print("test loss, test acc:", results)'''Evaluate on test data79/79 [==============================] - 0s 1ms/step - loss: 0.1314 - sparse_categorical_accuracy: 0.9599test loss, test acc: [0.1314447671175003, 0.9599000215530396]'''print("\n" + "=" * 88 + "\n")# Generate predictions (probabilities -- the output of the last layer)# on new data using `predict`print("Generate predictions for 3 samples")predictions = model.predict(x_test[:3])print("predictions shape:", predictions.shape)'''Generate predictions for 3 samplespredictions shape: (3, 10)'''

(1)compile() 方法:指定损失、指标和优化器

使用

fit()训练模型之前需要指定损失函数、优化器以及一些要监视的指标(可选)。通过compile()方法进行设置。model.compile( optimizer=keras.optimizers.RMSprop(learning_rate=1e-3), loss=keras.losses.SparseCategoricalCrossentropy(), # jy: metrics 参数应当为列表(模型可以具有任意数量的指标) metrics=[keras.metrics.SparseCategoricalAccuracy()], )如果模型具有多个输出,则可以为每个输出指定不同的损失和指标,并且可以调整每个输出对模型总损失的贡献。

- 参考【undo】:“将数据传递到多输入、多输出模型”

注意:在许多情况下可以通过字符串标识符将优化器、损失和指标指定如下(默认设置):

model.compile( optimizer="rmsprop", loss="sparse_categorical_crossentropy", metrics=["sparse_categorical_accuracy"], )将模型定义和编译步骤放入函数中,方便后续重用: ```python def get_uncompiled_model(): inputs = keras.Input(shape=(784,), name=”digits”) x = layers.Dense(64, activation=”relu”, name=”dense_1”)(inputs) x = layers.Dense(64, activation=”relu”, name=”dense_2”)(x) outputs = layers.Dense(10, activation=”softmax”, name=”predictions”)(x) model = keras.Model(inputs=inputs, outputs=outputs) return model

def get_compiled_model(): model = get_uncompiled_model() model.compile( optimizer=”rmsprop”, loss=”sparse_categorical_crossentropy”, metrics=[“sparse_categorical_accuracy”], ) return model

<a name="NycHG"></a>

### (a)内置优化器、损失和指标

- 优化器:

- `SGD()`(有或没有动量)

- `RMSprop()`

- `Adam()`

- 损失:

- `MeanSquaredError()`

- `KLDivergence()`

- `CosineSimilarity()`

- 指标:

- `AUC()`

- `Precision()`

- `Recall()`

<a name="jmMGu"></a>

### (b)自定义损失

- Keras 提供了两种方式创建自定义损失。

- 第一种方式:创建一个接受输入 `y_true` 和 `y_pred` 的函数。

- 示例:计算实际数据与预测值之间均方误差的损失函数:

```python

def custom_mean_squared_error(y_true, y_pred):

return tf.math.reduce_mean(tf.square(y_true - y_pred))

# jy: 参考 “(1)-->(a)” 以上的函数定义

model = get_uncompiled_model()

model.compile(optimizer=keras.optimizers.Adam(), loss=custom_mean_squared_error)

# We need to one-hot encode the labels to use MSE

y_train_one_hot = tf.one_hot(y_train, depth=10)

model.fit(x_train, y_train_one_hot, batch_size=64, epochs=1)

'''

782/782 [==============================] - 1s 2ms/step - loss: 0.0163

<keras.callbacks.History at 0x7fdd84118650>

'''

第二种方式:将

tf.keras.losses.Loss类子类化,并实现以下两种方法:__init__(self):接受要在调用损失函数期间传递的参数call(self, y_true, y_pred):使用目标 (y_true) 和模型预测 (y_pred) 来计算模型的损失- 损失函数的参数可以包含除

y_true和y_pred之外的其他参数的 如:假设要使用均方误差,但存在一个会抑制预测值远离 0.5(假设分类目标采用独热编码,且取值介于 0 和 1 之间)的附加项。这会为模型创建一个激励,使其不会对预测值过于自信,这可能有助于减轻过拟合(仅尝试,不确定是否有效!),则可以按以下方式处理: ```python class CustomMSE(keras.losses.Loss): def init(self, regularizationfactor=0.1, name=”custommse”): super().__init(name=name) self.regularization_factor = regularization_factor

def call(self, y_true, y_pred): mse = tf.math.reduce_mean(tf.square(y_true - y_pred)) reg = tf.math.reduce_mean(tf.square(0.5 - y_pred)) return mse + reg * self.regularization_factor

jy: 参考 “(1)—>(a)” 以上的函数定义

model = get_uncompiled_model() model.compile(optimizer=keras.optimizers.Adam(), loss=CustomMSE())

y_train_one_hot = tf.one_hot(y_train, depth=10) model.fit(x_train, y_train_one_hot, batch_size=64, epochs=1) “”” 782/782 [==============================] - 2s 2ms/step - loss: 0.0387

<a name="oRaqr"></a>

### (c)自定义指标

- 可以通过将 `tf.keras.metrics.Metric` 类子类化创建自定义指标,需要实现 4 个方法:

- `__init__(self)`:将在其中为指标创建状态变量。

- `update_state(self, y_true, y_pred, sample_weight=None)`:使用目标 `y_true` 和模型预测 `y_pred` 更新状态变量。

- `result(self)`:使用状态变量来计算最终结果。

- `reset_states(self)`:用于重新初始化指标的状态。

- 状态更新和结果计算分开处理(分别在 `update_state()` 和 `result()` 中),因为在某些情况下,结果计算的开销可能非常大,只能定期执行。

- 示例:实现 CategoricalTruePositives 指标(可以计算有多少样本被正确分类为属于给定类)

```python

class CategoricalTruePositives(keras.metrics.Metric):

def __init__(self, name="categorical_true_positives", **kwargs):

super(CategoricalTruePositives, self).__init__(name=name, **kwargs)

self.true_positives = self.add_weight(name="ctp", initializer="zeros")

def update_state(self, y_true, y_pred, sample_weight=None):

y_pred = tf.reshape(tf.argmax(y_pred, axis=1), shape=(-1, 1))

values = tf.cast(y_true, "int32") == tf.cast(y_pred, "int32")

values = tf.cast(values, "float32")

if sample_weight is not None:

sample_weight = tf.cast(sample_weight, "float32")

values = tf.multiply(values, sample_weight)

self.true_positives.assign_add(tf.reduce_sum(values))

def result(self):

return self.true_positives

def reset_states(self):

# The state of the metric will be reset at the start of each epoch.

self.true_positives.assign(0.0)

# jy: 参考 “(1)-->(a)” 以上的函数定义

model = get_uncompiled_model()

model.compile(

optimizer=keras.optimizers.RMSprop(learning_rate=1e-3),

loss=keras.losses.SparseCategoricalCrossentropy(),

metrics=[CategoricalTruePositives()],

)

model.fit(x_train, y_train, batch_size=64, epochs=3)

"""

Epoch 1/3

782/782 [==============================] - 2s 2ms/step - loss: 0.3386 - categorical_true_positives: 45176.0000

Epoch 2/3

75/782 [=>............................] - ETA: 1s - loss: 0.1818 - categorical_true_positives: 4547.0000

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/keras/metrics.py:257: UserWarning: Metric CategoricalTruePositives implements a `reset_states()` method; rename it to `reset_state()` (without the final "s"). The name `reset_states()` has been deprecated to improve API consistency.

'consistency.' % (self.__class__.__name__,))

782/782 [==============================] - 2s 2ms/step - loss: 0.1604 - categorical_true_positives: 47649.0000

Epoch 3/3

782/782 [==============================] - 2s 2ms/step - loss: 0.1187 - categorical_true_positives: 48270.0000

<keras.callbacks.History at 0x7fdd451996d0>

"""

(d)处理不适合标准签名的损失和指标

- 绝大多数损失和指标都可以通过

y_true和y_pred计算得出,其中y_pred是模型的输出,但不是全部。例如,正则化损失可能仅需要激活层(这种情况没有目标),并且这种激活可能不是模型输出。 - 在此类情况下可以从自定义层的调用方法内部调用

self.add_loss(loss_value)。以这种方式添加的损失会在训练期间添加到 “主要” 损失中(传递给compile()的损失)。 - 示例:添加激活正则化(注意:激活正则化内置于所有 Keras 层中,此层只是为了提供一个具体示例):

```python

class ActivityRegularizationLayer(layers.Layer):

def call(self, inputs):

self.add_loss(tf.reduce_sum(inputs) * 0.1) return inputs # Pass-through layer.

inputs = keras.Input(shape=(784,), name=”digits”) x = layers.Dense(64, activation=”relu”, name=”dense_1”)(inputs)

Insert activity regularization as a layer

x = ActivityRegularizationLayer()(x)

x = layers.Dense(64, activation=”relu”, name=”dense_2”)(x) outputs = layers.Dense(10, name=”predictions”)(x)

model = keras.Model(inputs=inputs, outputs=outputs) model.compile( optimizer=keras.optimizers.RMSprop(learning_rate=1e-3), loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True), )

The displayed loss will be much higher than before

due to the regularization component.

model.fit(x_train, y_train, batch_size=64, epochs=1) “”” 782/782 [==============================] - 2s 2ms/step - loss: 2.5069

- 可以使用 `add_metric()` 对记录指标值执行相同的操作:

```python

class MetricLoggingLayer(layers.Layer):

def call(self, inputs):

# The `aggregation` argument defines

# how to aggregate the per-batch values

# over each epoch:

# in this case we simply average them.

self.add_metric(

keras.backend.std(inputs), name="std_of_activation", aggregation="mean"

)

return inputs # Pass-through layer.

inputs = keras.Input(shape=(784,), name="digits")

x = layers.Dense(64, activation="relu", name="dense_1")(inputs)

# Insert std logging as a layer.

x = MetricLoggingLayer()(x)

x = layers.Dense(64, activation="relu", name="dense_2")(x)

outputs = layers.Dense(10, name="predictions")(x)

model = keras.Model(inputs=inputs, outputs=outputs)

model.compile(

optimizer=keras.optimizers.RMSprop(learning_rate=1e-3),

loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),

)

model.fit(x_train, y_train, batch_size=64, epochs=1)

"""

782/782 [==============================] - 2s 2ms/step - loss: 0.3483 - std_of_activation: 0.9733

<keras.callbacks.History at 0x7fdd44fdd150>

"""

- 在函数式 API 中还可以调用

model.add_loss(loss_tensor)或model.add_metric(metric_tensor, name, aggregation)。 - 示例: ```python inputs = keras.Input(shape=(784,), name=”digits”) x1 = layers.Dense(64, activation=”relu”, name=”dense_1”)(inputs) x2 = layers.Dense(64, activation=”relu”, name=”dense_2”)(x1) outputs = layers.Dense(10, name=”predictions”)(x2) model = keras.Model(inputs=inputs, outputs=outputs)

model.add_loss(tf.reduce_sum(x1) * 0.1)

model.add_metric(keras.backend.std(x1), name=”std_of_activation”, aggregation=”mean”)

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True), ) model.fit(x_train, y_train, batch_size=64, epochs=1) “”” 782/782 [==============================] - 2s 2ms/step - loss: 2.4717 - std_of_activation: 0.0018

- 注意:当通过 `add_loss()` 传递损失时,可以在没有损失函数的情况下调用 `compile()`,因为模型已经有损失要最小化。

- 考虑以下 `LogisticEndpoint` 层:它以目标和 logits 作为输入,并通过 `add_loss()` 跟踪交叉熵损失。另外,它还通过 `add_metric()` 跟踪分类准确率。

```python

class LogisticEndpoint(keras.layers.Layer):

def __init__(self, name=None):

super(LogisticEndpoint, self).__init__(name=name)

self.loss_fn = keras.losses.BinaryCrossentropy(from_logits=True)

self.accuracy_fn = keras.metrics.BinaryAccuracy()

def call(self, targets, logits, sample_weights=None):

# Compute the training-time loss value and add it

# to the layer using `self.add_loss()`.

loss = self.loss_fn(targets, logits, sample_weights)

self.add_loss(loss)

# Log accuracy as a metric and add it

# to the layer using `self.add_metric()`.

acc = self.accuracy_fn(targets, logits, sample_weights)

self.add_metric(acc, name="accuracy")

# Return the inference-time prediction tensor (for `.predict()`).

return tf.nn.softmax(logits)

- 可以在具有两个输入(输入数据和目标)的模型中使用它,编译时无需 loss 参数,如下所示: ```python import numpy as np

inputs = keras.Input(shape=(3,), name=”inputs”) targets = keras.Input(shape=(10,), name=”targets”) logits = keras.layers.Dense(10)(inputs) predictions = LogisticEndpoint(name=”predictions”)(logits, targets)

model = keras.Model(inputs=[inputs, targets], outputs=predictions) model.compile(optimizer=”adam”) # No loss argument!

data = { “inputs”: np.random.random((3, 3)), “targets”: np.random.random((3, 10)), } model.fit(data) “”” 1/1 [==============================] - 0s 234ms/step - loss: 0.9193 - binary_accuracy: 0.0000e+00

- 有关训练多输入模型的更多信息,请参考【undo】:**将数据传递到多输入、多输出模型**

<a name="Oexnd"></a>

### (e)自动分离验证预留集

- 以上端到端示例中使用了 `validation_data` 参数将 NumPy 数组 `(x_val, y_val)` 的元组传递给模型,用于在每个周期结束时评估验证损失和验证指标。

- 另一个参数 `validation_split` 允许您自动保留部分训练数据以供验证。参数值表示要保留用于验证的数据比例,因此应将其设置为大于 0 且小于 1 的数字。

- 例如`validation_split=0.2` 表示 “使用 20% 的数据进行验证”,而 `validation_split=0.6` 表示 “使用 60% 的数据进行验证”。

- 注意:仅在使用 NumPy 数据进行训练时才能使用 `validation_split`。

- 验证的计算方法是在进行任何打乱顺序之前,获取 `fit()` 调用接收到的数组的最后 x% 个样本。

```python

model = get_compiled_model()

model.fit(x_train, y_train, batch_size=64, validation_split=0.2, epochs=1)

"""

625/625 [==============================] - 2s 2ms/step - loss: 0.3639 - sparse_categorical_accuracy: 0.8978 - val_loss: 0.2249 - val_sparse_categorical_accuracy: 0.9324

<keras.callbacks.History at 0x7fdd44bf7b10>

"""

(2)通过 tf.data 数据集进行训练和评估

tf.dataAPI 是 TensorFlow 2.0 中的一组实用工具,用于以快速且可扩展的方式加载和预处理数据。- 有关创建

Datasets的完整指南,请参阅tf.data文档 - 可以将

Dataset实例直接传递给方法fit()、evaluate()和predict(): ```python model = get_compiled_model()

First, let’s create a training Dataset instance.

For the sake of our example, we’ll use the same MNIST data as before.

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))

Shuffle and slice the dataset.

train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

Now we get a test dataset.

test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test)) test_dataset = test_dataset.batch(64)

Since the dataset already takes care of batching,

we don’t pass a batch_size argument.

model.fit(train_dataset, epochs=3)

You can also evaluate or predict on a dataset.

print(“Evaluate”) result = model.evaluate(test_dataset) dict(zip(model.metrics_names, result))

- 输出示例:

```shell

Epoch 1/3

782/782 [==============================] - 2s 2ms/step - loss: 0.3431 - sparse_categorical_accuracy: 0.9028

Epoch 2/3

782/782 [==============================] - 2s 2ms/step - loss: 0.1608 - sparse_categorical_accuracy: 0.9532

Epoch 3/3

782/782 [==============================] - 2s 2ms/step - loss: 0.1149 - sparse_categorical_accuracy: 0.9653

Evaluate

157/157 [==============================] - 0s 2ms/step - loss: 0.1211 - sparse_categorical_accuracy: 0.9638

{'loss': 0.12111453711986542,

'sparse_categorical_accuracy': 0.9638000130653381}

- 请注意,数据集会在每个周期结束时重置,因此可以在下一个周期重复使用。

- 如果只想在来自此数据集的特定数量批次上进行训练,则可以传递

steps_per_epoch参数,此参数可以指定在继续下一个周期之前,模型应使用此数据集运行多少训练步骤。 - 如果执行此操作,则不会在每个周期结束时重置数据集,而是会继续绘制接下来的批次。数据集最终将用尽数据(除非它是无限循环的数据集)。 ```shell model = get_compiled_model()

Prepare the training dataset

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)) train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

Only use the 100 batches per epoch (that’s 64 * 100 samples)

model.fit(train_dataset, epochs=3, steps_per_epoch=100)

- 输出结果如下:

```shell

Epoch 1/3

100/100 [==============================] - 1s 2ms/step - loss: 0.7885 - sparse_categorical_accuracy: 0.7967

Epoch 2/3

100/100 [==============================] - 0s 2ms/step - loss: 0.3615 - sparse_categorical_accuracy: 0.8975

Epoch 3/3

100/100 [==============================] - 0s 2ms/step - loss: 0.3167 - sparse_categorical_accuracy: 0.9072

<keras.callbacks.History at 0x7fdd44a78150>

使用验证数据集

- 可以在

fit()中将Dataset实例作为validation_data参数传递: ```python model = get_compiled_model()

Prepare the training dataset

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)) train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

Prepare the validation dataset

val_dataset = tf.data.Dataset.from_tensor_slices((x_val, y_val)) val_dataset = val_dataset.batch(64)

model.fit(train_dataset, epochs=1, validation_data=val_dataset)

输出结果示例:

```shell

782/782 [==============================] - 2s 3ms/step - loss: 0.3325 - sparse_categorical_accuracy: 0.9062 - val_loss: 0.1897 - val_sparse_categorical_accuracy: 0.9451

<keras.callbacks.History at 0x7fdd447de650>

- 在每个周期结束时,模型将迭代验证数据集并计算验证损失和验证指标。

- 如果只想对此数据集中的特定数量批次运行验证,则可以传递

validation_steps参数,此参数可以指定在中断验证并进入下一个周期之前,模型应使用验证数据集运行多少个验证步骤: ```python model = get_compiled_model()

Prepare the training dataset

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)) train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

Prepare the validation dataset

val_dataset = tf.data.Dataset.from_tensor_slices((x_val, y_val)) val_dataset = val_dataset.batch(64)

model.fit( train_dataset, epochs=1,

# Only run validation using the first 10 batches of the dataset

# using the `validation_steps` argument

validation_data=val_dataset,

validation_steps=10,

)

- 输出示例如下:

```shell

782/782 [==============================] - 2s 2ms/step - loss: 0.3374 - sparse_categorical_accuracy: 0.9040 - val_loss: 0.3082 - val_sparse_categorical_accuracy: 0.9187

<keras.callbacks.History at 0x7fdd447d6b90>

- 注意:验证数据集将在每次使用后重置(因此可以在不同周期中始终根据相同的样本进行评估)。

通过

Dataset对象进行训练时,不支持参数validation_split(从训练数据生成预留集),因为此功能需要为数据集样本编制索引的能力,而DatasetAPI 通常无法做到这一点。(3)支持的其他输入格式

除 NumPy 数组、Eager 张量和 TensorFlow

Datasets外,还可以使用 Pandas 数据帧或通过产生批量数据和标签的 Python 生成器训练 Keras 模型。keras.utils.Sequence类提供了一个简单的接口来构建可感知多处理并且可以打乱顺序的 Python 数据生成器。通常建议使用:

keras.utils.Sequence是一个实用工具,可以将其子类化以获得具有两个重要属性的 Python 生成器:- 适用于多处理。

- 可以打乱它的顺序(如,在

fit()中传递shuffle=True)。

- Sequence 必须实现两个方法:

__getitem__- 该方法应返回完整的批次。如果要在各个周期之间修改数据集,可以实现

on_epoch_end。

- 该方法应返回完整的批次。如果要在各个周期之间修改数据集,可以实现

__len__

- 示例: ```python from skimage.io import imread from skimage.transform import resize import numpy as np

Here, filenames is list of path to the images

and labels are the associated labels.

class CIFAR10Sequence(Sequence): def init(self, filenames, labels, batch_size): self.filenames, self.labels = filenames, labels self.batch_size = batch_size

def __len__(self):

return int(np.ceil(len(self.filenames) / float(self.batch_size)))

def __getitem__(self, idx):

batch_x = self.filenames[idx * self.batch_size:(idx + 1) * self.batch_size]

batch_y = self.labels[idx * self.batch_size:(idx + 1) * self.batch_size]

return np.array([

resize(imread(filename), (200, 200))

for filename in batch_x]), np.array(batch_y)

sequence = CIFAR10Sequence(filenames, labels, batch_size) model.fit(sequence, epochs=10)

<a name="BpSQL"></a>

## (5)使用样本加权和类加权

- 默认设置下,样本的权重由其在数据集中出现的频率决定。可以通过两种方式独立于样本频率来加权数据:

- 类权重

- 样本权重

<a name="ly8mV"></a>

### (a)类权重

- 通过将字典传递给 `Model.fit()` 的 `class_weight` 参数来进行设置。此字典会将类索引映射到应当用于属于此类的样本的权重。

- 这可用于在不重采样的情况下平衡类,或者用于训练更重视特定类的模型。

- 如,在数据中如果类 “0” 表示类 “1” 的一半,则可以使用 `Model.fit(..., class_weight={0: 1., 1: 0.5})`。

- 下面是一个 NumPy 示例:使用类权重或样本权重来提高对类 #5(MNIST 数据集中的数字 “5”)进行正确分类的重要性。

```python

import numpy as np

class_weight = {

0: 1.0,

1: 1.0,

2: 1.0,

3: 1.0,

4: 1.0,

# Set weight "2" for class "5",

# making this class 2x more important

5: 2.0,

6: 1.0,

7: 1.0,

8: 1.0,

9: 1.0,

}

print("Fit with class weight")

model = get_compiled_model()

model.fit(x_train, y_train, class_weight=class_weight, batch_size=64, epochs=1)

输出示例:

Fit with class weight 782/782 [==============================] - 2s 2ms/step - loss: 0.3798 - sparse_categorical_accuracy: 0.8998 <keras.callbacks.History at 0x7fdd4453e650>(b)样本权重

对于细粒度控制,或者如果不构建分类器,则可以使用 “样本权重”。

- 通过 NumPy 数据进行训练时:将

sample_weight参数传递给Model.fit()。 - 通过

tf.data或任何其他类型的迭代器进行训练时:产生(input_batch, label_batch, sample_weight_batch)元组。

- 通过 NumPy 数据进行训练时:将

- “样本权重” 数组是一个由数字组成的数组,这些数字用于指定批次中每个样本在计算总损失时应当具有的权重。它通常用于不平衡的分类问题(理念是将更多权重分配给罕见类)。

- 当使用的权重为 1 和 0 时,此数组可用作损失函数的掩码(完全丢弃某些样本对总损失的贡献)。 ```python sample_weight = np.ones(shape=(len(y_train),)) sample_weight[y_train == 5] = 2.0

print(“Fit with sample weight”) model = get_compiled_model() model.fit(x_train, y_train, sample_weight=sample_weight, batch_size=64, epochs=1)

“”” Fit with sample weight 782/782 [==============================] - 2s 2ms/step - loss: 0.3774 - sparse_categorical_accuracy: 0.9030

- 下面是一个匹配的 `Dataset` 示例:

```python

sample_weight = np.ones(shape=(len(y_train),))

sample_weight[y_train == 5] = 2.0

# Create a Dataset that includes sample weights

# (3rd element in the return tuple).

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train, sample_weight))

# Shuffle and slice the dataset.

train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

model = get_compiled_model()

model.fit(train_dataset, epochs=1)

"""

782/782 [==============================] - 2s 2ms/step - loss: 0.3684 - sparse_categorical_accuracy: 0.9025

<keras.callbacks.History at 0x7fdd442c6d90>

"""

(6)将数据传递到多输入、多输出模型

- 前面的示例考虑的是具有单个输入(形状为

(764,)的张量)和单个输出(形状为(10,)的预测张量)的模型。但具有多个输入或输出的模型呢? - 考虑以下模型,该模型具有形状为

(32, 32, 3)的图像输入(即(height, width, channels))和形状为(None, 10)的时间序列输入(即(timesteps, features))。我们的模型将具有根据这些输入的组合计算出的两个输出:“得分”(形状为(1,))和在五个类上的概率分布(形状为(5,))。 ```python image_input = keras.Input(shape=(32, 32, 3), name=”img_input”) timeseries_input = keras.Input(shape=(None, 10), name=”ts_input”)

x1 = layers.Conv2D(3, 3)(image_input) x1 = layers.GlobalMaxPooling2D()(x1)

x2 = layers.Conv1D(3, 3)(timeseries_input) x2 = layers.GlobalMaxPooling1D()(x2)

x = layers.concatenate([x1, x2])

score_output = layers.Dense(1, name=”score_output”)(x) class_output = layers.Dense(5, name=”class_output”)(x)

model = keras.Model( inputs=[image_input, timeseries_input], outputs=[score_output, class_output] )

jy: 绘制这个模型,以便清楚地看到在这里执行的操作(请注意,图中显示的形状是批次形状,而

不是每个样本的形状)

keras.utils.plot_model(model, “multi_input_and_output_model.png”, show_shapes=True)

- 在编译时,通过将损失函数作为列表传递,可以为不同的输出指定不同的损失:

```python

model.compile(

optimizer=keras.optimizers.RMSprop(1e-3),

loss=[keras.losses.MeanSquaredError(), keras.losses.CategoricalCrossentropy()],

)

- 如果仅将单个损失函数传递给模型,则相同的损失函数将应用于每个输出(此处不合适)。

对于指标同样如此:

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss=[keras.losses.MeanSquaredError(), keras.losses.CategoricalCrossentropy()], metrics=[ [ keras.metrics.MeanAbsolutePercentageError(), keras.metrics.MeanAbsoluteError(), ], [keras.metrics.CategoricalAccuracy()], ], )由于已为输出层命名,因此还可以通过字典指定每个输出的损失和指标:

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss={ "score_output": keras.losses.MeanSquaredError(), "class_output": keras.losses.CategoricalCrossentropy(), }, metrics={ "score_output": [ keras.metrics.MeanAbsolutePercentageError(), keras.metrics.MeanAbsoluteError(), ], "class_output": [keras.metrics.CategoricalAccuracy()], }, )如果输出超过 2 个,建议使用显式名称和字典。

可以使用 loss_weights 参数为特定于输出的不同损失赋予不同的权重(如,示例中可能希望通过为类损失赋予 2 倍重要性来向 “得分” 损失赋予特权):

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss={ "score_output": keras.losses.MeanSquaredError(), "class_output": keras.losses.CategoricalCrossentropy(), }, metrics={ "score_output": [ keras.metrics.MeanAbsolutePercentageError(), keras.metrics.MeanAbsoluteError(), ], "class_output": [keras.metrics.CategoricalAccuracy()], }, loss_weights={"score_output": 2.0, "class_output": 1.0}, )如果这些输出用于预测而不是用于训练,也可以选择不计算某些输出的损失: ```python

List loss version

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss=[None, keras.losses.CategoricalCrossentropy()], )

Or dict loss version

model.compile( optimizer=keras.optimizers.RMSprop(1e-3), loss={“class_output”: keras.losses.CategoricalCrossentropy()}, )

- 将数据传递给 `fit()` 中的多输入或多输出模型的工作方式与在编译中指定损失函数的方式类似:可以传递 **NumPy 数组的列表**(1:1 映射到接收损失函数的输出),或者**通过字典将输出名称映射到 NumPy 数组**。

```python

model.compile(

optimizer=keras.optimizers.RMSprop(1e-3),

loss=[keras.losses.MeanSquaredError(), keras.losses.CategoricalCrossentropy()],

)

# Generate dummy NumPy data

img_data = np.random.random_sample(size=(100, 32, 32, 3))

ts_data = np.random.random_sample(size=(100, 20, 10))

score_targets = np.random.random_sample(size=(100, 1))

class_targets = np.random.random_sample(size=(100, 5))

# Fit on lists

model.fit([img_data, ts_data], [score_targets, class_targets], batch_size=32, epochs=1)

# Alternatively, fit on dicts

model.fit(

{"img_input": img_data, "ts_input": ts_data},

{"score_output": score_targets, "class_output": class_targets},

batch_size=32,

epochs=1,

)

"""

2021-08-13 20:04:07.853066: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8100

2021-08-13 20:04:08.378444: I tensorflow/core/platform/default/subprocess.cc:304] Start cannot spawn child process: No such file or directory

4/4 [==============================] - 2s 11ms/step - loss: 12.0460 - score_output_loss: 0.6335 - class_output_loss: 11.4124

4/4 [==============================] - 1s 5ms/step - loss: 10.5846 - score_output_loss: 0.3324 - class_output_loss: 10.2522

<keras.callbacks.History at 0x7fdeae7206d0>

"""

- 下面是

Dataset的用例:与对 NumPy 数组执行的操作类似,Dataset应返回一个字典元组。 ```python train_dataset = tf.data.Dataset.from_tensor_slices( (

) ) train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64){"img_input": img_data, "ts_input": ts_data}, {"score_output": score_targets, "class_output": class_targets},

model.fit(train_dataset, epochs=1)

“”” 2/2 [==============================] - 0s 24ms/step - loss: 10.2075 - score_output_loss: 0.2289 - class_output_loss: 9.9786

<a name="r7b9p"></a>

## (7)使用回调

- Keras 中的回调是在训练过程中的不同时间点(在某个周期开始时、在批次结束时、在某个周期结束时等)调用的对象。它们可用于实现特定行为,例如:

- 在训练期间的不同时间点进行验证(除了内置的按周期验证外)

- 定期或在超过一定准确率阈值时为模型设置检查点

- 当训练似乎停滞不前时,更改模型的学习率

- 当训练似乎停滞不前时,对顶层进行微调

- 在训练结束或超出特定性能阈值时发送电子邮件或即时消息通知

- 回调可以作为列表传递给您对 `fit()` 的调用:

```python

model = get_compiled_model()

callbacks = [

keras.callbacks.EarlyStopping(

# Stop training when `val_loss` is no longer improving

monitor="val_loss",

# "no longer improving" being defined as "no better than 1e-2 less"

min_delta=1e-2,

# "no longer improving" being further defined as "for at least 2 epochs"

patience=2,

verbose=1,

)

]

model.fit(

x_train,

y_train,

epochs=20,

batch_size=64,

callbacks=callbacks,

validation_split=0.2,

)

"""

Epoch 1/20

625/625 [==============================] - 2s 2ms/step - loss: 0.3625 - sparse_categorical_accuracy: 0.8984 - val_loss: 0.2146 - val_sparse_categorical_accuracy: 0.9345

Epoch 2/20

625/625 [==============================] - 1s 2ms/step - loss: 0.1608 - sparse_categorical_accuracy: 0.9526 - val_loss: 0.1695 - val_sparse_categorical_accuracy: 0.9469

Epoch 3/20

625/625 [==============================] - 1s 2ms/step - loss: 0.1171 - sparse_categorical_accuracy: 0.9647 - val_loss: 0.1473 - val_sparse_categorical_accuracy: 0.9561

Epoch 4/20

625/625 [==============================] - 1s 2ms/step - loss: 0.0922 - sparse_categorical_accuracy: 0.9724 - val_loss: 0.1409 - val_sparse_categorical_accuracy: 0.9572

Epoch 5/20

625/625 [==============================] - 1s 2ms/step - loss: 0.0765 - sparse_categorical_accuracy: 0.9769 - val_loss: 0.1520 - val_sparse_categorical_accuracy: 0.9578

Epoch 00005: early stopping

<keras.callbacks.History at 0x7fdd44bf3450>

"""

(a)提供多个内置回调

- Keras 中已经提供多个内置回调,如:

ModelCheckpoint:定期保存模型。EarlyStopping:当训练不再改善验证指标时,停止训练。TensorBoard:定期编写可在 TensorBoard 中可视化的模型日志- TensorBoard:https://tensorflow.google.cn/tensorboard

CSVLogger:将损失和指标数据流式传输到 CSV 文件。

有关完整列表,请参阅:

您可以通过扩展基类

keras.callbacks.Callback来创建自定义回调。回调可以通过类属性self.model访问其关联的模型。示例:在训练期间保存每个批次的损失值列表:

class LossHistory(keras.callbacks.Callback): def on_train_begin(self, logs): self.per_batch_losses = [] def on_batch_end(self, batch, logs): self.per_batch_losses.append(logs.get("loss"))(8)为模型设置检查点

根据相对较大的数据集训练模型时,经常保存模型的检查点至关重要。

- 实现此目标的最简单方式是使用

ModelCheckpoint回调: ```python model = get_compiled_model()

callbacks = [ keras.callbacks.ModelCheckpoint(

# Path where to save the model

# The two parameters below mean that we will overwrite

# the current checkpoint if and only if

# the `val_loss` score has improved.

# The saved model name will include the current epoch.

filepath="mymodel_{epoch}",

save_best_only=True, # Only save a model if `val_loss` has improved.

monitor="val_loss",

verbose=1,

)

] model.fit( x_train, y_train, epochs=2, batch_size=64, callbacks=callbacks, validation_split=0.2 )

“”” Epoch 1/2 625/625 [==============================] - 2s 2ms/step - loss: 0.3583 - sparse_categorical_accuracy: 0.8988 - val_loss: 0.2209 - val_sparse_categorical_accuracy: 0.9323

Epoch 00001: val_loss improved from inf to 0.22089, saving model to mymodel_1 2021-08-13 20:04:19.699738: W tensorflow/python/util/util.cc:348] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them. INFO:tensorflow:Assets written to: mymodel_1/assets Epoch 2/2 625/625 [==============================] - 1s 2ms/step - loss: 0.1639 - sparse_categorical_accuracy: 0.9507 - val_loss: 0.1713 - val_sparse_categorical_accuracy: 0.9466

Epoch 00002: val_loss improved from 0.22089 to 0.17128, saving model to mymodel_2 INFO:tensorflow:Assets written to: mymodel_2/assets

- `ModelCheckpoint` 回调可用于实现容错:在训练随机中断的情况下,从模型的最后保存状态重新开始训练的能力。示例:

```python

import os

# Prepare a directory to store all the checkpoints.

checkpoint_dir = "./ckpt"

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

def make_or_restore_model():

# Either restore the latest model, or create a fresh one

# if there is no checkpoint available.

checkpoints = [checkpoint_dir + "/" + name for name in os.listdir(checkpoint_dir)]

if checkpoints:

latest_checkpoint = max(checkpoints, key=os.path.getctime)

print("Restoring from", latest_checkpoint)

return keras.models.load_model(latest_checkpoint)

print("Creating a new model")

return get_compiled_model()

model = make_or_restore_model()

callbacks = [

# This callback saves a SavedModel every 100 batches.

# We include the training loss in the saved model name.

keras.callbacks.ModelCheckpoint(

filepath=checkpoint_dir + "/ckpt-loss={loss:.2f}", save_freq=100

)

]

model.fit(x_train, y_train, epochs=1, callbacks=callbacks)

"""

Creating a new model

81/1563 [>.............................] - ETA: 2s - loss: 1.0211 - sparse_categorical_accuracy: 0.7207INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.93/assets

178/1563 [==>...........................] - ETA: 5s - loss: 0.7294 - sparse_categorical_accuracy: 0.7960INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.70/assets

281/1563 [====>.........................] - ETA: 5s - loss: 0.6025 - sparse_categorical_accuracy: 0.8312INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.58/assets

380/1563 [======>.......................] - ETA: 5s - loss: 0.5305 - sparse_categorical_accuracy: 0.8512INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.52/assets

483/1563 [========>.....................] - ETA: 5s - loss: 0.4832 - sparse_categorical_accuracy: 0.8635INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.48/assets

578/1563 [==========>...................] - ETA: 5s - loss: 0.4472 - sparse_categorical_accuracy: 0.8727INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.44/assets

680/1563 [============>.................] - ETA: 4s - loss: 0.4189 - sparse_categorical_accuracy: 0.8801INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.41/assets

782/1563 [==============>...............] - ETA: 4s - loss: 0.3970 - sparse_categorical_accuracy: 0.8857INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.39/assets

881/1563 [===============>..............] - ETA: 3s - loss: 0.3831 - sparse_categorical_accuracy: 0.8894INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.38/assets

980/1563 [=================>............] - ETA: 3s - loss: 0.3664 - sparse_categorical_accuracy: 0.8944INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.36/assets

1100/1563 [====================>.........] - ETA: 2s - loss: 0.3512 - sparse_categorical_accuracy: 0.8985INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.35/assets

1179/1563 [=====================>........] - ETA: 2s - loss: 0.3403 - sparse_categorical_accuracy: 0.9013INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.34/assets

1282/1563 [=======================>......] - ETA: 1s - loss: 0.3279 - sparse_categorical_accuracy: 0.9048INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.33/assets

1378/1563 [=========================>....] - ETA: 1s - loss: 0.3195 - sparse_categorical_accuracy: 0.9072INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.32/assets

1481/1563 [===========================>..] - ETA: 0s - loss: 0.3098 - sparse_categorical_accuracy: 0.9100INFO:tensorflow:Assets written to: ./ckpt/ckpt-loss=0.31/assets

1563/1563 [==============================] - 9s 6ms/step - loss: 0.3011 - sparse_categorical_accuracy: 0.9124

<keras.callbacks.History at 0x7fdeaee0aa50>

"""

-

(9)使用学习率时间表

训练深度学习模型的常见模式是随着训练的进行逐渐减少学习。这通常称为 “学习率衰减”。

学习衰减时间表可以是静态的(根据当前周期或当前批次索引预先确定),也可以是动态的(响应模型的当前行为,尤其是验证损失)。

(a)将时间表传递给优化器

通过将时间表对象作为优化器中的

learning_rate参数传递,可以轻松使用静态学习率衰减时间表: ```python initial_learning_rate = 0.1 lr_schedule = keras.optimizers.schedules.ExponentialDecay( initial_learning_rate, decay_steps=100000, decay_rate=0.96, staircase=True )

optimizer = keras.optimizers.RMSprop(learning_rate=lr_schedule)

- 几个内置时间表:`ExponentialDecay`、`PiecewiseConstantDecay`、`PolynomialDecay` 和 `InverseTimeDecay`。

<a name="WnjO6"></a>

### (b)使用回调实现动态学习率时间表

- 由于优化器无法访问验证指标,因此无法使用这些时间表对象来实现动态学习率时间表(如,当验证损失不再改善时降低学习率)。

- 但回调确实可以访问所有指标,包括验证指标!因此可以通过使用可修改优化器上的当前学习率的回调来实现此模式。实际上,它甚至以 `ReduceLROnPlateau` 回调的形式内置。

<a name="LTmUI"></a>

## (10)可视化训练期间的损失和指标

- 在训练期间密切关注模型的最佳方式是使用 TensorBoard,这是一个基于浏览器的应用,它可以在本地运行,为您提供:

- 训练和评估的实时损失和指标图

- (可选)层激活直方图的可视化

- (可选)Embedding 层学习的嵌入向量空间的 3D 可视化

- 参考:[https://tensorflow.google.cn/tensorboard](https://tensorflow.google.cn/tensorboard)

- 如果已通过 pip 安装了 TensorFlow,则应当能够从命令行启动 TensorBoard:

- `tensorboard --logdir=/full_path_to_your_logs`

<a name="yVIMC"></a>

#### 使用 TensorBoard 回调

- 将 TensorBoard 与 Keras 模型和 `fit` 方法一起使用的最简单方式是 TensorBoard 回调。

- 在最简单的情况下,只需指定希望回调写入日志的位置即可:

```python

keras.callbacks.TensorBoard(

log_dir="/full_path_to_your_logs",

histogram_freq=0, # How often to log histogram visualizations

embeddings_freq=0, # How often to log embedding visualizations

update_freq="epoch",

) # How often to write logs (default: once per epoch)

"""

2021-08-13 20:04:31.241071: I tensorflow/core/profiler/lib/profiler_session.cc:131] Profiler session initializing.

2021-08-13 20:04:31.241103: I tensorflow/core/profiler/lib/profiler_session.cc:146] Profiler session started.

2021-08-13 20:04:31.241136: I tensorflow/core/profiler/internal/gpu/cupti_tracer.cc:1614] Profiler found 1 GPUs

2021-08-13 20:04:31.803364: I tensorflow/core/profiler/lib/profiler_session.cc:164] Profiler session tear down.

<keras.callbacks.TensorBoard at 0x7fde1b625790>

2021-08-13 20:04:31.806278: I tensorflow/core/profiler/internal/gpu/cupti_tracer.cc:1748] CUPTI activity buffer flushed

"""