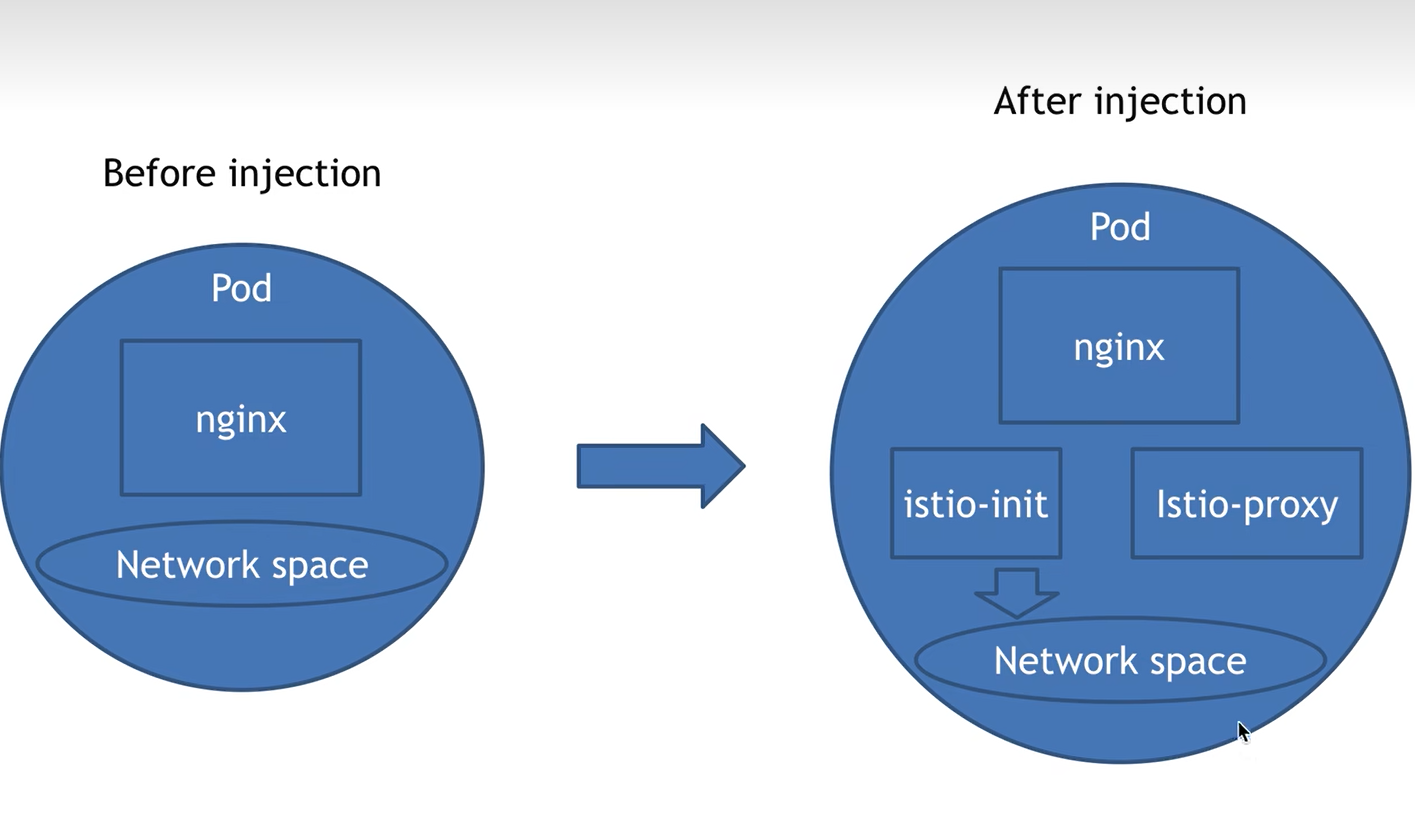

1. The nature and consequences of injection

2. Which resources can be injected

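Job, DaemonSet, ReplicaSet, Pod, Deployment (these produce Pods, so the sidecar is injected into them)

Service, ConfigMap, Secret (injection has no effect; they contain no Pod spec)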
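The rule behind this split can be sketched in Python. This is a minimal illustration that assumes the manifests are already loaded into plain dicts, for example by a YAML parser. The helpers `pod_spec_of` and `is_injectable` are hypothetical and are not part of istioctl. They only encode the rule of thumb above: a resource is affected if it is a Pod, or if it carries a Pod template under `spec.template`.

```python
from typing import Optional

# Hypothetical set: the workload kinds listed above that embed a Pod template.
POD_TEMPLATE_KINDS = {"Deployment", "ReplicaSet", "DaemonSet", "Job"}


def pod_spec_of(manifest: dict) -> Optional[dict]:
    """Return the Pod spec a sidecar would be added to, or None."""
    kind = manifest.get("kind")
    if kind == "Pod":
        return manifest.get("spec")
    if kind in POD_TEMPLATE_KINDS:
        return manifest.get("spec", {}).get("template", {}).get("spec")
    # Service, ConfigMap, Secret, ...: no Pod spec, nothing to inject into.
    return None


def is_injectable(manifest: dict) -> bool:
    return pod_spec_of(manifest) is not None


deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [{"name": "nginx", "image": "nginx"}]}}},
}
config_map = {"kind": "ConfigMap", "data": {"key": "value"}}

print(is_injectable(deployment))  # True
print(is_injectable(config_map))  # False
```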

3. Injection example

1. Simulating injection manually

- Create a Deployment

```shell
$ kubectl create deployment inject --image=nginx --replicas=1 --dry-run=client -o yaml > inject-deployment.yaml
$ kubectl create -f inject-deployment.yaml
```

Check the created resources:

```shell
$ kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
inject-994587885-fbxfk   1/1     Running   0          67s
```

- Inject manually using the generated manifest

```shell
$ istioctl kube-inject -f inject-deployment.yaml | kubectl apply -f - -n default
Warning: resource deployments/inject is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
deployment.apps/inject configured
```

This warning is expected. The Deployment was created with `kubectl create` without `--save-config` and is now being updated with `kubectl apply`, and kubectl adds the missing annotation automatically.

- Check again and observe how the Pod has changed

```shell
$ kubectl get pod
NAME                     READY   STATUS    RESTARTS   AGE
inject-8565f4c47-srwzd   2/2     Running   0          37s
```

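READY has changed from 1/1 to 2/2. `kubectl describe` shows that a container named `istio-proxy` has been injected into the Pod. An init container named `istio-init` is injected as well. It exits once it has finished its job, which is to initialize the Pod's network namespace (set up the traffic-redirection rules, etc.).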

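The Pod name has changed too, which shows that a brand-new Pod was created. Injection modified the Deployment's Pod template, so the Deployment rolled out a new ReplicaSet (note the different hash in the Pod name) and replaced the old Pod.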
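The before/after comparison can be checked mechanically. Below is a minimal Python sketch that takes the two `kubectl get pod` outputs shown above as input. It reads the READY column and splits the Pod name into `<deployment>-<pod-template-hash>-<suffix>`, which is the usual naming pattern for Deployment-managed Pods. A different hash means the Pod template changed. The function names are hypothetical, and the parser assumes column values contain no spaces.

```python
def parse_get_pods(output: str) -> list[dict]:
    """Parse `kubectl get pod` text into one dict per Pod row.

    Assumes no whitespace inside column values (e.g. a plain RESTARTS count).
    """
    lines = [line.split() for line in output.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def ready_containers(row: dict) -> tuple[int, int]:
    ready, total = row["READY"].split("/")
    return int(ready), int(total)


def template_hash(pod_name: str) -> str:
    # <deployment>-<pod-template-hash>-<random suffix>
    return pod_name.rsplit("-", 2)[1]


before = """NAME READY STATUS RESTARTS AGE
inject-994587885-fbxfk 1/1 Running 0 67s"""
after = """NAME READY STATUS RESTARTS AGE
inject-8565f4c47-srwzd 2/2 Running 0 37s"""

old, new = parse_get_pods(before)[0], parse_get_pods(after)[0]
print(ready_containers(old), ready_containers(new))  # (1, 1) (2, 2)
print(template_hash(old["NAME"]), "->", template_hash(new["NAME"]))  # 994587885 -> 8565f4c47
```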

- Print the injected manifest for analysis

```shell
$ istioctl kube-inject -f inject-deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: inject
  name: inject
spec:
  replicas: 1
  selector:
    matchLabels:
      app: inject
  strategy: {}
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/default-container: nginx
        kubectl.kubernetes.io/default-logs-container: nginx
        prometheus.io/path: /stats/prometheus
        prometheus.io/port: "15020"
        prometheus.io/scrape: "true"
        sidecar.istio.io/status: '{"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["workload-socket","credential-socket","workload-certs","istio-envoy","istio-data","istio-podinfo","istio-token","istiod-ca-cert"],"imagePullSecrets":null,"revision":"default"}'
      creationTimestamp: null
      labels:
        app: inject
        security.istio.io/tlsMode: istio
        service.istio.io/canonical-name: inject
        service.istio.io/canonical-revision: latest
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
      - args:
        - proxy
        - sidecar
        - --domain
        - $(POD_NAMESPACE).svc.cluster.local
        - --proxyLogLevel=warning
        - --proxyComponentLogLevel=misc:error
        - --log_output_level=default:info
        - --concurrency
        - "2"
        env:
        - name: JWT_POLICY
          value: third-party-jwt
        - name: PILOT_CERT_PROVIDER
          value: istiod
        - name: CA_ADDR
          value: istiod.istio-system.svc:15012
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: INSTANCE_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: HOST_IP
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        - name: PROXY_CONFIG
          value: |
            {}
        - name: ISTIO_META_POD_PORTS
          value: |-
            []
        - name: ISTIO_META_APP_CONTAINERS
          value: nginx
        - name: ISTIO_META_CLUSTER_ID
          value: Kubernetes
        - name: ISTIO_META_INTERCEPTION_MODE
          value: REDIRECT
        - name: ISTIO_META_MESH_ID
          value: cluster.local
        - name: TRUST_DOMAIN
          value: cluster.local
        image: docker.io/istio/proxyv2:1.16.2
        name: istio-proxy
        ports:
        - containerPort: 15090
          name: http-envoy-prom
          protocol: TCP
        readinessProbe:
          failureThreshold: 30
          httpGet:
            path: /healthz/ready
            port: 15021
          initialDelaySeconds: 1
          periodSeconds: 2
          timeoutSeconds: 3
        resources:
          limits:
            cpu: "2"
            memory: 1Gi
          requests:
            cpu: 10m
            memory: 40Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          privileged: false
          readOnlyRootFilesystem: true
          runAsGroup: 1337
          runAsNonRoot: true
          runAsUser: 1337
        volumeMounts:
        - mountPath: /var/run/secrets/workload-spiffe-uds
          name: workload-socket
        - mountPath: /var/run/secrets/credential-uds
          name: credential-socket
        - mountPath: /var/run/secrets/workload-spiffe-credentials
          name: workload-certs
        - mountPath: /var/run/secrets/istio
          name: istiod-ca-cert
        - mountPath: /var/lib/istio/data
          name: istio-data
        - mountPath: /etc/istio/proxy
          name: istio-envoy
        - mountPath: /var/run/secrets/tokens
          name: istio-token
        - mountPath: /etc/istio/pod
          name: istio-podinfo
      initContainers:
      - args:
        - istio-iptables
        - -p
        - "15001"
        - -z
        - "15006"
        - -u
        - "1337"
        - -m
        - REDIRECT
        - -i
        - '*'
        - -x
        - ""
        - -b
        - '*'
        - -d
        - 15090,15021,15020
        - --log_output_level=default:info
        image: docker.io/istio/proxyv2:1.16.2
        name: istio-init
        resources:
          limits:
            cpu: "2"
            memory: 1Gi
          requests:
            cpu: 10m
            memory: 40Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
            drop:
            - ALL
          privileged: false
          readOnlyRootFilesystem: false
          runAsGroup: 0
          runAsNonRoot: false
          runAsUser: 0
      volumes:
      - name: workload-socket
      - name: credential-socket
      - name: workload-certs
      - emptyDir:
          medium: Memory
        name: istio-envoy
      - emptyDir: {}
        name: istio-data
      - downwardAPI:
          items:
          - fieldRef:
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              fieldPath: metadata.annotations
            path: annotations
        name: istio-podinfo
      - name: istio-token
        projected:
          sources:
          - serviceAccountToken:
              audience: istio-ca
              expirationSeconds: 43200
              path: istio-token
      - configMap:
          name: istio-ca-root-cert
        name: istiod-ca-cert
status: {}
---
```
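The `sidecar.istio.io/status` annotation in the manifest above summarizes what injection added. The small Python sketch below decodes it using only the standard library. The annotation value is copied verbatim from that manifest, and the variable names are illustrative.

```python
import json

# Value of the sidecar.istio.io/status annotation from the injected manifest.
status_annotation = (
    '{"initContainers":["istio-init"],"containers":["istio-proxy"],'
    '"volumes":["workload-socket","credential-socket","workload-certs",'
    '"istio-envoy","istio-data","istio-podinfo","istio-token","istiod-ca-cert"],'
    '"imagePullSecrets":null,"revision":"default"}'
)

status = json.loads(status_annotation)

print("init containers:", status["initContainers"])  # ['istio-init']
print("containers:", status["containers"])  # ['istio-proxy']
print("volumes:", len(status["volumes"]))  # 8
print("revision:", status["revision"])  # default
```

Each name listed here should have a matching entry under `initContainers`, `containers` or `volumes` in the Pod template above.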

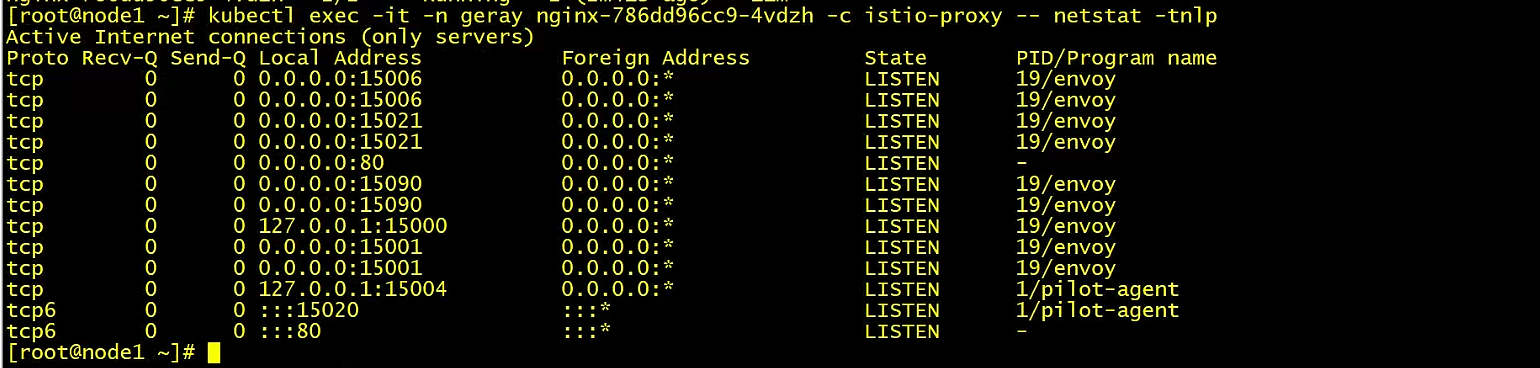

4. What changes after injection, from the process point of view (key point)

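The Pod now listens on additional ports. Earlier notes counted 5 (or 4) new ones; with the newer version, about 10 new listening entries were observed. In the output below, the new ports are 15000, 15001, 15004, 15006, 15020, 15021 and 15090. Several Envoy ports are listed twice, probably one socket per Envoy worker thread, since the sidecar runs with `--concurrency 2`.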

```shell
$ kubectl exec -it -n geray nginx-786dd96cc9-4vdzh -c istio-proxy -- netstat -tnlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 127.0.0.1:15000         0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      19/envoy
tcp        0      0 127.0.0.1:15004         0.0.0.0:*               LISTEN      1/pilot-agent
tcp6       0      0 :::15020                :::*                    LISTEN      1/pilot-agent
tcp6       0      0 :::80                   :::*                    LISTEN      -
```

Port 80 belongs to nginx. Its PID column shows `-` because the nginx process runs in another container and is not visible from `istio-proxy`, even though both containers share the Pod's network namespace.
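Reducing the netstat output to distinct ports per process avoids being misled by the duplicate lines when counting the new ports. The Python sketch below takes the listening lines above as input. The port descriptions follow Istio's documented "Ports used by Istio" list and are worth re-checking against your Istio version. `ports_by_program` is a hypothetical helper name.

```python
from collections import defaultdict

NETSTAT = """\
tcp 0 0 0.0.0.0:15006 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15006 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15021 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15021 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:15090 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15090 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 127.0.0.1:15000 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15001 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 0.0.0.0:15001 0.0.0.0:* LISTEN 19/envoy
tcp 0 0 127.0.0.1:15004 0.0.0.0:* LISTEN 1/pilot-agent
tcp6 0 0 :::15020 :::* LISTEN 1/pilot-agent
tcp6 0 0 :::80 :::* LISTEN -
"""

# Purposes as listed in Istio's "Ports used by Istio" documentation.
PORT_PURPOSE = {
    15000: "Envoy admin port",
    15001: "Envoy outbound",
    15004: "debug port (pilot-agent)",
    15006: "Envoy inbound",
    15020: "merged Prometheus telemetry (agent, Envoy, app)",
    15021: "health checks",
    15090: "Envoy Prometheus telemetry",
}


def ports_by_program(netstat_output: str) -> dict[str, set[int]]:
    """Map each program (last column) to the distinct ports it listens on."""
    result: dict[str, set[int]] = defaultdict(set)
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 7 or fields[5] != "LISTEN":
            continue  # skip headers and non-listening lines
        port = int(fields[3].rsplit(":", 1)[1])  # works for 0.0.0.0:80 and :::80
        program = fields[6].split("/", 1)[-1]  # "19/envoy" -> "envoy", "-" stays "-"
        result[program].add(port)
    return dict(result)


ports = ports_by_program(NETSTAT)
for program, program_ports in sorted(ports.items()):
    for port in sorted(program_ports):
        print(f"{program:12} {port:6} {PORT_PURPOSE.get(port, 'application')}")
```

On this output the sketch finds 7 distinct Istio ports (5 owned by Envoy, 2 by pilot-agent) plus nginx's port 80.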

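What istio-init does: it runs `istio-iptables` as root with `NET_ADMIN`/`NET_RAW` to install nat-table rules. These rules send inbound TCP traffic to Envoy on port 15006 and outbound TCP traffic to port 15001. There are exceptions for Envoy's own traffic (UID/GID 1337), localhost traffic, and the inbound ports 15008, 15090, 15021 and 15020. Its log: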

```shell
$ kubectl logs -f -n geray nginx-786dd96cc9-4vdzh -c istio-init
2023-02-10T02:01:12.144483Z info Istio iptables environment:
ENVOY_PORT=
INBOUND_CAPTURE_PORT=
ISTIO_INBOUND_INTERCEPTION_MODE=
ISTIO_INBOUND_TPROXY_ROUTE_TABLE=
ISTIO_INBOUND_PORTS=
ISTIO_OUTBOUND_PORTS=
ISTIO_LOCAL_EXCLUDE_PORTS=
ISTIO_EXCLUDE_INTERFACES=
ISTIO_SERVICE_CIDR=
ISTIO_SERVICE_EXCLUDE_CIDR=
ISTIO_META_DNS_CAPTURE=
INVALID_DROP=
2023-02-10T02:01:12.144575Z info Istio iptables variables:
PROXY_PORT=15001
PROXY_INBOUND_CAPTURE_PORT=15006
PROXY_TUNNEL_PORT=15008
PROXY_UID=1337
PROXY_GID=1337
INBOUND_INTERCEPTION_MODE=REDIRECT
INBOUND_TPROXY_MARK=1337
INBOUND_TPROXY_ROUTE_TABLE=133
INBOUND_PORTS_INCLUDE=*
INBOUND_PORTS_EXCLUDE=15090,15021,15020
OUTBOUND_OWNER_GROUPS_INCLUDE=*
OUTBOUND_OWNER_GROUPS_EXCLUDE=
OUTBOUND_IP_RANGES_INCLUDE=*
OUTBOUND_IP_RANGES_EXCLUDE=
OUTBOUND_PORTS_INCLUDE=
OUTBOUND_PORTS_EXCLUDE=
KUBE_VIRT_INTERFACES=
ENABLE_INBOUND_IPV6=false
DNS_CAPTURE=false
DROP_INVALID=false
CAPTURE_ALL_DNS=false
DNS_SERVERS=[],[]
OUTPUT_PATH=
NETWORK_NAMESPACE=
CNI_MODE=false
HOST_NSENTER_EXEC=false
EXCLUDE_INTERFACES=
2023-02-10T02:01:12.144905Z info Writing following contents to rules file: /tmp/iptables-rules-1675994472144631139.txt2300041476
* nat
-N ISTIO_INBOUND
-N ISTIO_REDIRECT
-N ISTIO_IN_REDIRECT
-N ISTIO_OUTPUT
-A ISTIO_INBOUND -p tcp --dport 15008 -j RETURN
-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
-A PREROUTING -p tcp -j ISTIO_INBOUND
-A ISTIO_INBOUND -p tcp --dport 15090 -j RETURN
-A ISTIO_INBOUND -p tcp --dport 15021 -j RETURN
-A ISTIO_INBOUND -p tcp --dport 15020 -j RETURN
-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
-A OUTPUT -p tcp -j ISTIO_OUTPUT
-A ISTIO_OUTPUT -o lo -s 127.0.0.6/32 -j RETURN
-A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
-A ISTIO_OUTPUT -j ISTIO_REDIRECT
COMMIT
2023-02-10T02:01:12.144996Z info Running command: iptables-restore --noflush /tmp/iptables-rules-1675994472144631139.txt2300041476
2023-02-10T02:01:12.150882Z info Writing following contents to rules file: /tmp/ip6tables-rules-1675994472150846159.txt1418190399
2023-02-10T02:01:12.150937Z info Running command: ip6tables-restore --noflush /tmp/ip6tables-rules-1675994472150846159.txt1418190399
2023-02-10T02:01:12.154122Z info Running command: iptables-save
2023-02-10T02:01:12.159165Z info Command output:
# Generated by iptables-save v1.8.7 on Fri Feb 10 02:01:12 2023
*raw
:PREROUTING ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
COMMIT
# Completed on Fri Feb 10 02:01:12 2023
# Generated by iptables-save v1.8.7 on Fri Feb 10 02:01:12 2023
*mangle
:PREROUTING ACCEPT [0:0]
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
:POSTROUTING ACCEPT [0:0]
COMMIT
# Completed on Fri Feb 10 02:01:12 2023
# Generated by iptables-save v1.8.7 on Fri Feb 10 02:01:12 2023
*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
COMMIT
# Completed on Fri Feb 10 02:01:12 2023
# Generated by iptables-save v1.8.7 on Fri Feb 10 02:01:12 2023
*nat
:PREROUTING ACCEPT [0:0]
:INPUT ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
:POSTROUTING ACCEPT [0:0]
:ISTIO_INBOUND - [0:0]
:ISTIO_IN_REDIRECT - [0:0]
:ISTIO_OUTPUT - [0:0]
:ISTIO_REDIRECT - [0:0]
-A PREROUTING -p tcp -j ISTIO_INBOUND
-A OUTPUT -p tcp -j ISTIO_OUTPUT
-A ISTIO_INBOUND -p tcp -m tcp --dport 15008 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15090 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15021 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15020 -j RETURN
-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
-A ISTIO_OUTPUT -s 127.0.0.6/32 -o lo -j RETURN
-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
-A ISTIO_OUTPUT -j ISTIO_REDIRECT
-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
COMMIT
# Completed on Fri Feb 10 02:01:12 2023
```
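The nat rules above can be read as a small decision procedure. The Python sketch below re-implements the `ISTIO_INBOUND` and `ISTIO_OUTPUT` chains for TCP only, using the values from this log: proxy UID/GID 1337, capture ports 15006/15001, and excluded inbound ports 15008/15090/15021/15020. It is a simplified model for reasoning about where a connection ends up, not how netfilter works internally. `Packet`, `inbound` and `outbound` are hypothetical names, and the example IP addresses are made up.

```python
from dataclasses import dataclass

PROXY_UID = 1337
PROXY_GID = 1337
INBOUND_CAPTURE_PORT = 15006  # target of ISTIO_IN_REDIRECT
OUTBOUND_CAPTURE_PORT = 15001  # target of ISTIO_REDIRECT
INBOUND_EXCLUDED_PORTS = {15008, 15090, 15021, 15020}


@dataclass
class Packet:
    """A TCP packet, reduced to the fields the Istio rules match on."""
    dport: int
    src: str = "10.0.0.5"  # made-up Pod IP
    dst: str = "10.0.0.9"  # made-up remote IP
    out_iface: str = "eth0"
    uid: int = 0  # owner of the sending socket (OUTPUT chain only)
    gid: int = 0


def inbound(pkt: Packet) -> str:
    """PREROUTING -> ISTIO_INBOUND: traffic arriving at the Pod."""
    if pkt.dport in INBOUND_EXCLUDED_PORTS:
        return "RETURN"  # delivered untouched
    return f"REDIRECT {INBOUND_CAPTURE_PORT}"


def outbound(pkt: Packet) -> str:
    """OUTPUT -> ISTIO_OUTPUT, rules evaluated in the order of the log."""
    lo = pkt.out_iface == "lo"
    to_localhost = pkt.dst == "127.0.0.1"
    if lo and pkt.src == "127.0.0.6":
        return "RETURN"
    if lo and not to_localhost and pkt.uid == PROXY_UID:
        return f"REDIRECT {INBOUND_CAPTURE_PORT}"
    if lo and pkt.uid != PROXY_UID:
        return "RETURN"
    if pkt.uid == PROXY_UID:
        return "RETURN"  # Envoy's own outbound traffic is not re-captured
    if lo and not to_localhost and pkt.gid == PROXY_GID:
        return f"REDIRECT {INBOUND_CAPTURE_PORT}"
    if lo and pkt.gid != PROXY_GID:
        return "RETURN"
    if pkt.gid == PROXY_GID:
        return "RETURN"
    if to_localhost:
        return "RETURN"
    return f"REDIRECT {OUTBOUND_CAPTURE_PORT}"


print(outbound(Packet(dport=80)))  # REDIRECT 15001: nginx calling another service
print(inbound(Packet(dport=80)))  # REDIRECT 15006: a client reaching nginx
print(inbound(Packet(dport=15021)))  # RETURN: health-check port is not redirected
```

Rule order is what matters here. Envoy's own connections (UID 1337) hit `RETURN` before the final jump to `ISTIO_REDIRECT`, which keeps Envoy from capturing its own outbound traffic in a loop.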

- Enter the container to view the iptables rules. First, find the sidecar container on the node:

```shell
$ crictl ps | grep proxy
851999992cdc5   0ed03fb4d64c2   28 minutes ago   Running   istio-proxy   1   39d2b59c0c6d1   nginx-786dd96cc9-4vdzh
```
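`istio-proxy` runs as UID 1337 with all capabilities dropped, so listing the rules from inside it is generally not possible. A common approach is to enter the Pod's network namespace from the node instead. The Python sketch below takes the container ID from the `crictl ps` line above and builds the two commands without running them. It assumes a containerd runtime, where `crictl inspect` exposes the container's PID as `.info.pid`. The helper names are hypothetical, and `12345` is only a placeholder PID.

```python
import shlex

CRICTL_LINE = (
    "851999992cdc5 0ed03fb4d64c2 28 minutes ago Running "
    "istio-proxy 1 39d2b59c0c6d1 nginx-786dd96cc9-4vdzh"
)


def container_id(crictl_ps_line: str) -> str:
    """The first column of `crictl ps` is the (truncated) container ID."""
    return crictl_ps_line.split()[0]


def pid_command(cid: str) -> list[str]:
    # Assumption: containerd, whose inspect output carries the PID in .info.pid.
    return ["crictl", "inspect", "--output", "go-template",
            "--template", "{{.info.pid}}", cid]


def iptables_command(pid: int) -> list[str]:
    # Enter only the network namespace of that PID and dump the nat table.
    return ["nsenter", "-t", str(pid), "-n", "iptables", "-t", "nat", "-S"]


cid = container_id(CRICTL_LINE)
print(shlex.join(pid_command(cid)))
print(shlex.join(iptables_command(12345)))  # 12345: placeholder PID
```

Run the first command on the node, then pass the PID it prints to the second. The resulting listing should contain the same `ISTIO_*` chains as the istio-init log. If the node's iptables backend (legacy vs. nft) differs from the one istio-init used, the listing may come back empty.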

2. Process-based analysis

```shell
$ kubectl exec -it -n geray nginx-786dd96cc9-4vdzh -c istio-proxy -- ps -ef
UID          PID    PPID  C STIME TTY          TIME CMD
istio-p+       1       0  0 02:01 ?        00:00:05 /usr/local/bin/pilot-agent proxy sidecar --domain geray.svc.cluster.local --proxyLogLevel=warning --proxyComponentLogL
istio-p+      19       1  0 02:01 ?        00:00:26 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev.json --drain-time-s 45 --drain-strategy immediate --parent-shutdown-
istio-p+      91       0  0 02:56 pts/0    00:00:00 ps -ef
```

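- pilot-agent process: PID 1 in the container and the parent of Envoy (Envoy's PPID is 1); it also listens on ports 15004 and 15020

- envoy process: PID 19, started by pilot-agent with the bootstrap config `etc/istio/proxy/envoy-rev.json`; it owns the listeners on ports 15000, 15001, 15006, 15021 and 15090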
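The parent/child relationship between the two processes comes from the PPID column of the `ps -ef` output above. The Python sketch below rebuilds it. Its input is that output with the (already truncated) command lines shortened, and `parse_ps` and `children_of` are hypothetical helper names.

```python
PS_OUTPUT = """\
UID PID PPID C STIME TTY TIME CMD
istio-p+ 1 0 0 02:01 ? 00:00:05 /usr/local/bin/pilot-agent proxy sidecar
istio-p+ 19 1 0 02:01 ? 00:00:26 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev.json
istio-p+ 91 0 0 02:56 pts/0 00:00:00 ps -ef
"""


def parse_ps(output: str) -> dict[int, dict]:
    """Map PID -> {"ppid": ..., "cmd": ...} from `ps -ef` text."""
    processes = {}
    for line in output.splitlines()[1:]:  # skip the header
        fields = line.split(maxsplit=7)  # CMD (field 8) may contain spaces
        pid, ppid, cmd = int(fields[1]), int(fields[2]), fields[7]
        processes[pid] = {"ppid": ppid, "cmd": cmd}
    return processes


def children_of(processes: dict[int, dict], pid: int) -> list[int]:
    return sorted(p for p, info in processes.items() if info["ppid"] == pid)


procs = parse_ps(PS_OUTPUT)
for child in children_of(procs, 1):
    # prints: /usr/local/bin/pilot-agent -> /usr/local/bin/envoy
    print(procs[1]["cmd"].split()[0], "->", procs[child]["cmd"].split()[0])
```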