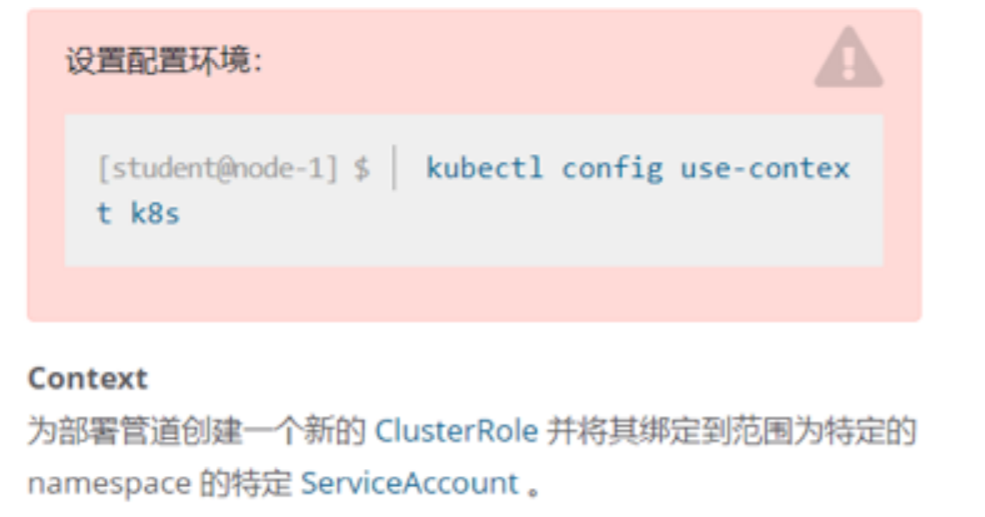

1、RBAC

    kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployments,statefulsets,daemonsets
    kubectl create serviceaccount cicd-token -n app-team1
    kubectl create rolebinding cicd-token-clusterrole --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token -n app-team1
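
To confirm that the binding took effect, `kubectl auth can-i` can impersonate the ServiceAccount. The following is a minimal sketch; the helper name `can_cicd_token` is an assumption made for illustration and is not part of the original notes.

```shell
# Hypothetical helper: ask the API server whether the cicd-token
# ServiceAccount may perform a verb on a resource in app-team1.
can_cicd_token() {
  # $1 = verb (e.g. create), $2 = resource (e.g. deployments)
  kubectl auth can-i "$1" "$2" \
    --as=system:serviceaccount:app-team1:cicd-token \
    -n app-team1
}
```

Calling `can_cicd_token create deployments` should answer `yes`, while a verb that was not granted, such as `can_cicd_token delete deployments`, should answer `no`.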

2、Mark a node unschedulable

    kubectl cordon ek8s-node-1
    kubectl drain ek8s-node-1 --ignore-daemonsets

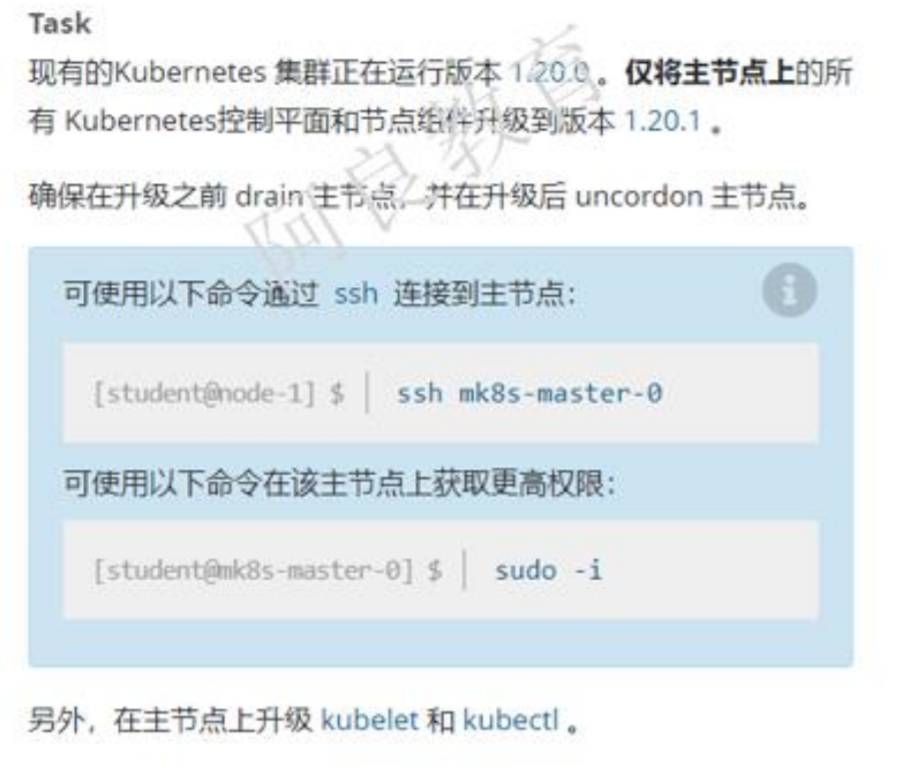

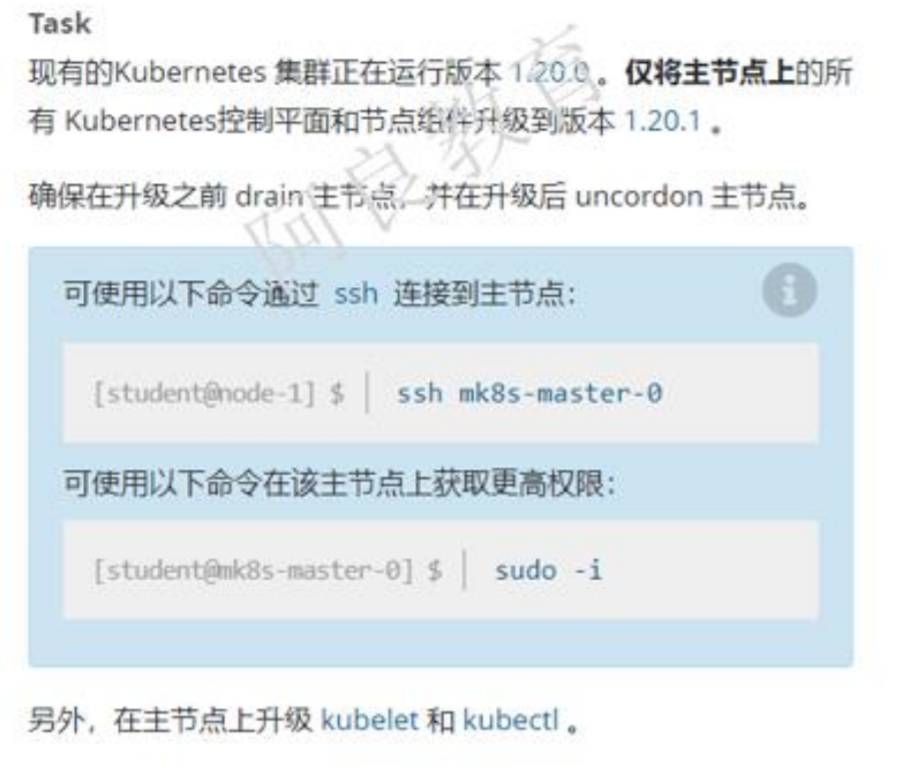

3、Upgrade the Kubernetes version

    kubectl drain mk8s-master-0 --ignore-daemonsets
    ssh mk8s-master-0
    sudo -i
    apt install kubeadm=1.21.1-00 -y
    kubeadm version
    kubeadm upgrade plan
    kubeadm upgrade apply v1.21.1 --etcd-upgrade=false
    apt install kubectl=1.21.1-00 kubelet=1.21.1-00 -y
    systemctl daemon-reload
    systemctl restart kubelet
    kubectl get nodes
    kubectl uncordon mk8s-master-0

4、etcd backup and restore

    # Backup
    ETCDCTL_API=3 etcdctl snapshot save /data/backup/etcd-snapshot.db --endpoints=https://127.0.0.1:2379 --cacert=ca.crt --cert=etcd-client.crt --key=etcd-client.key

    # Restore
    systemctl stop etcd
    systemctl cat etcd    # look up the data directory in the unit file
    mv <etcd-data-dir> etcd-bak
    ETCDCTL_API=3 etcdctl snapshot restore <snapshot-file> --data-dir=<etcd-data-dir>
    chown -R etcd:etcd <etcd-data-dir>
    systemctl start etcd
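
Before restoring, it can help to check that the snapshot file is readable. A minimal sketch, assuming `etcdctl` v3 is on the PATH; the function name `snapshot_status` is hypothetical.

```shell
# Hypothetical helper: print hash, revision, key count and size
# of a snapshot file as a table.
snapshot_status() {
  # $1 = path to the snapshot file
  ETCDCTL_API=3 etcdctl snapshot status "$1" --write-out=table
}
```

For the backup taken above this would be called as `snapshot_status /data/backup/etcd-snapshot.db`.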

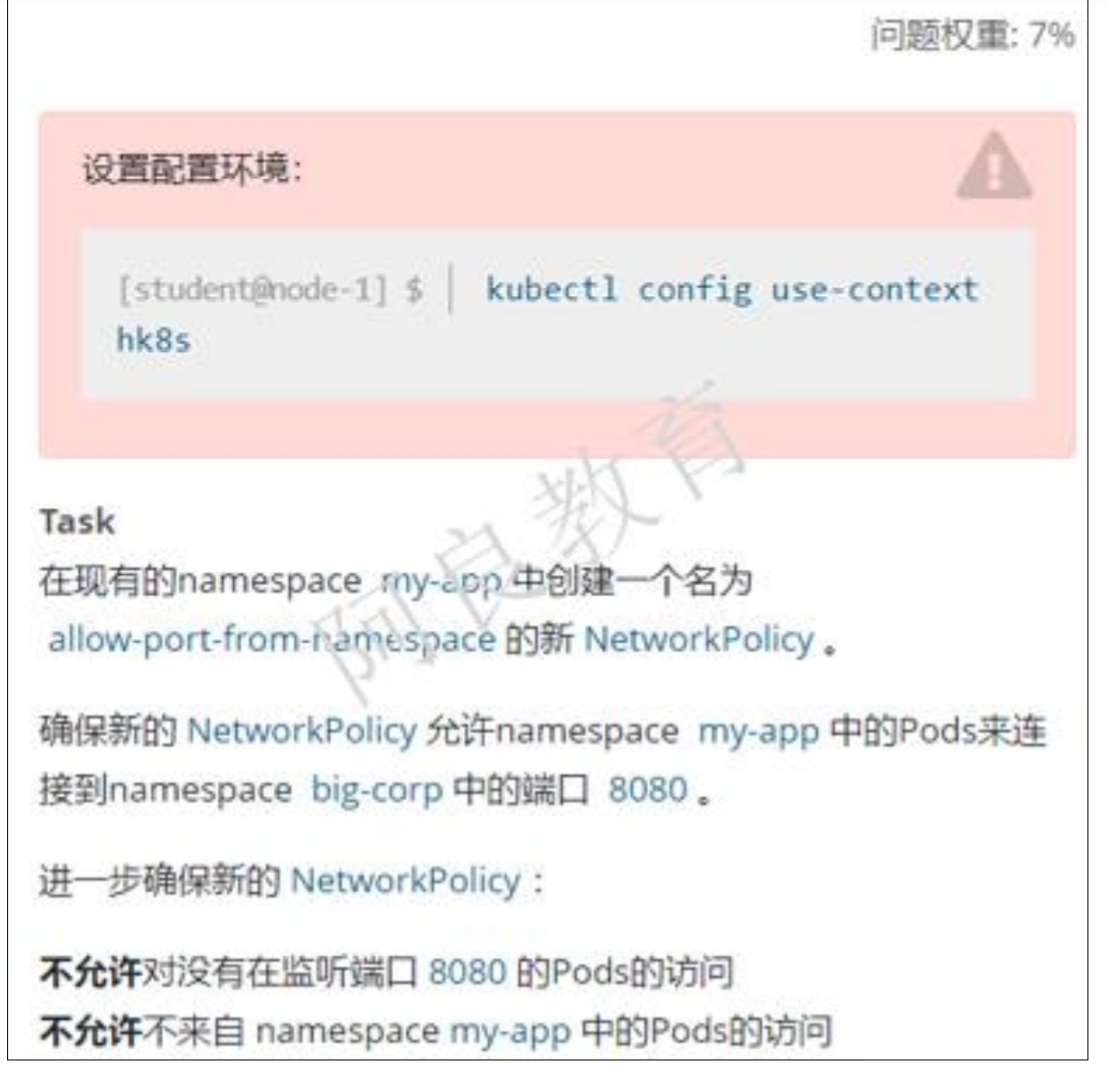

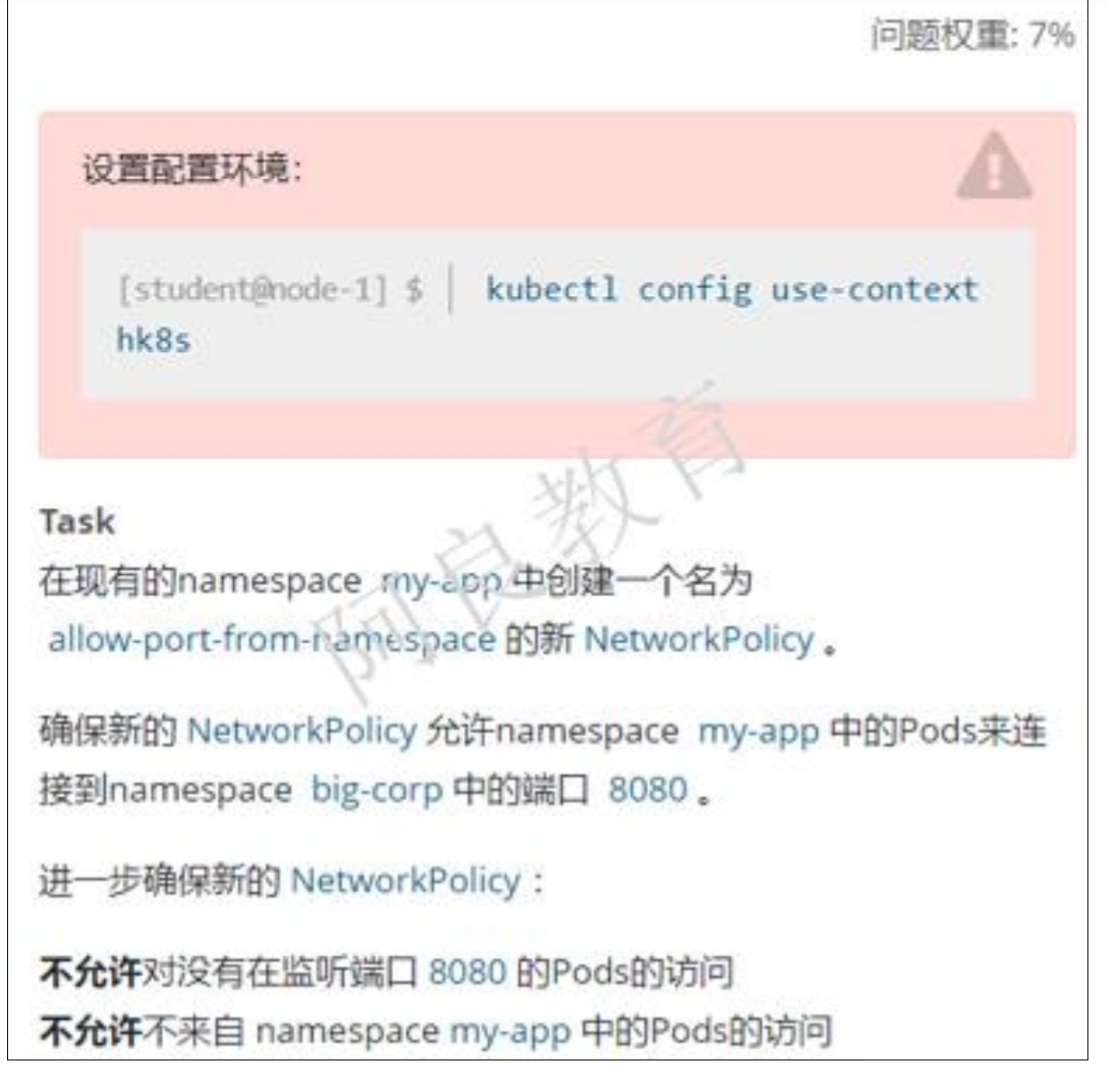

5、Network policy

    kubectl label ns big-corp name=big-corp

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-port-from-namespace
      namespace: my-app
    spec:
      podSelector: {}
      policyTypes:
      - Ingress
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              name: big-corp
        ports:
        - protocol: TCP
          port: 8080

6、Expose a Service

    kubectl edit deployment front-end

    ...
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
    ...

    kubectl expose deployment front-end --type=NodePort --port=80 --target-port=80 --name front-end-svc

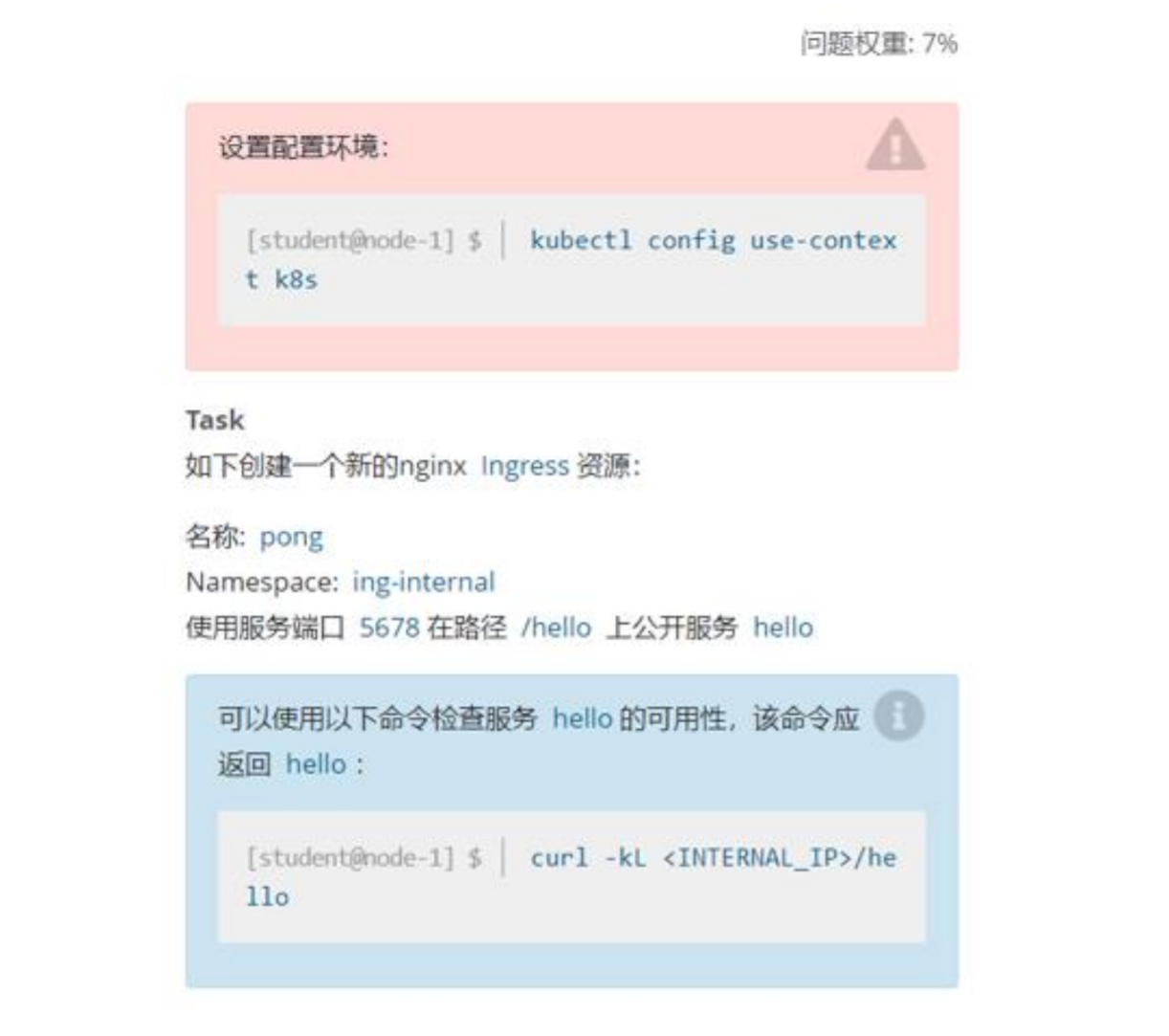

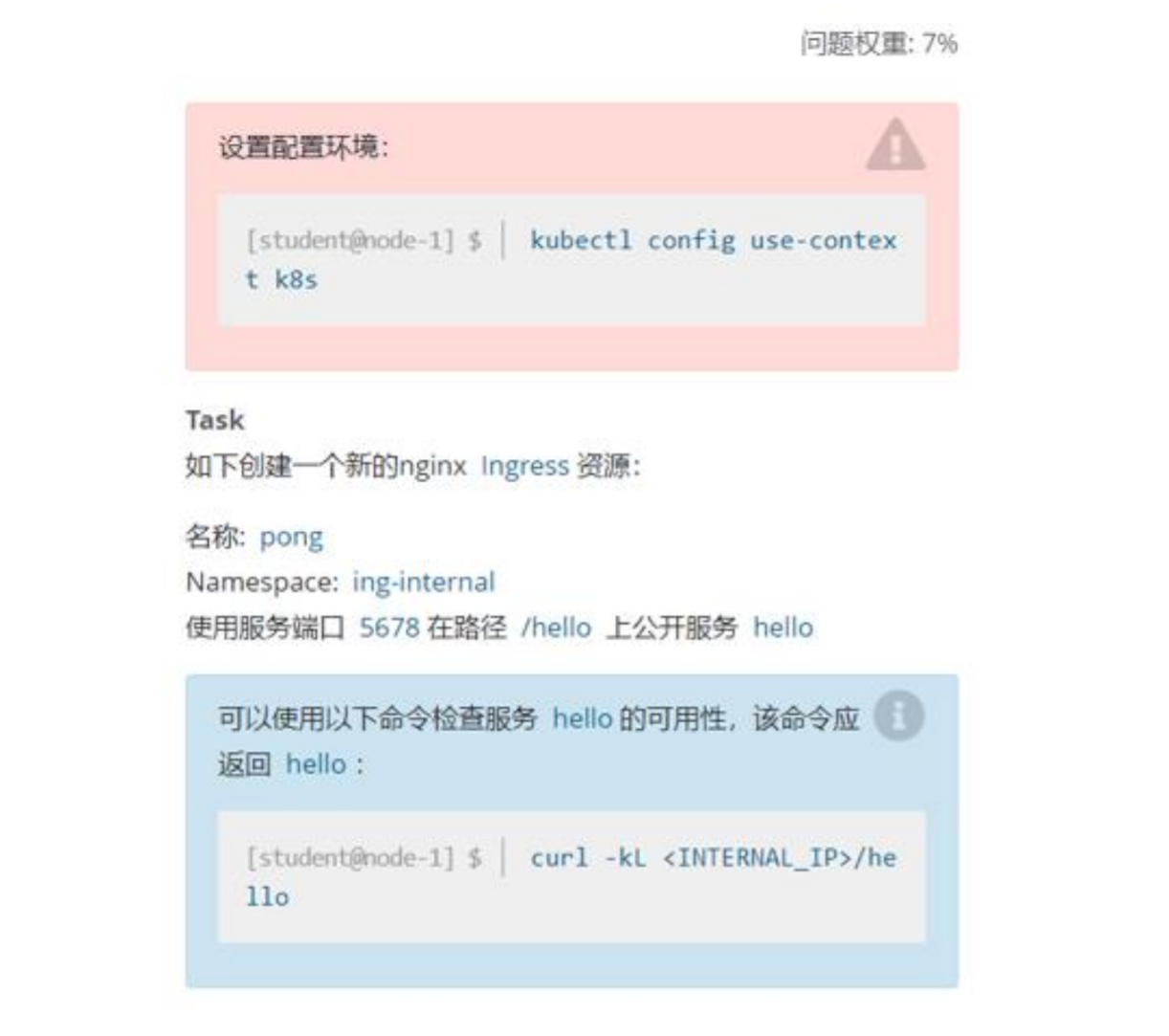

7、Ingress

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: pong
      namespace: ing-internal
    spec:
      rules:
      - http:
          paths:
          - pathType: Prefix
            path: "/hello"
            backend:
              service:
                name: hello
                port:
                  number: 5678

    kubectl get ingress -n ing-internal
    curl -kL <ingressIP>/hello

8、Scale up the number of Pods

kubectl scale deployment loadbalancer --replicas=5
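
After scaling, the number of ready replicas can be read back from the Deployment status. A minimal sketch; the function name `ready_replicas` is an assumption for illustration.

```shell
# Hypothetical helper: print how many replicas of a Deployment are ready.
ready_replicas() {
  # $1 = Deployment name
  kubectl get deployment "$1" -o jsonpath='{.status.readyReplicas}'
}
```

`ready_replicas loadbalancer` should print `5` once all new Pods are ready.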

9、nodeSelector

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-kusc0041
    spec:
      containers:
      - name: nginx
        image: nginx
      nodeSelector:
        disk: ssd

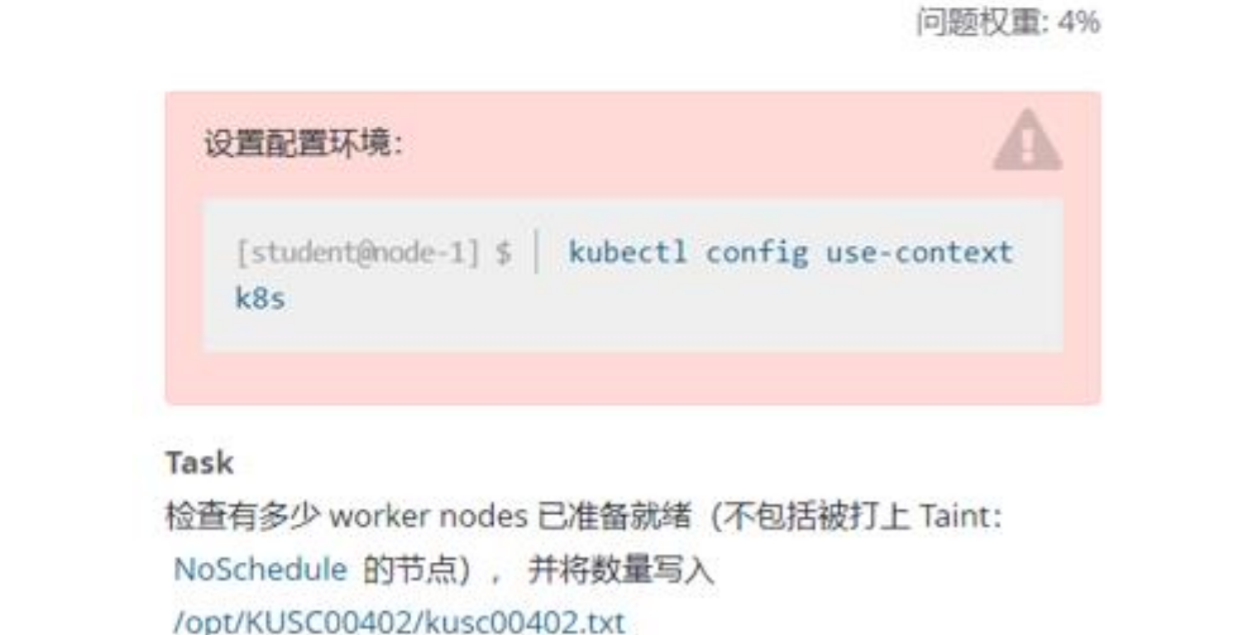

10、Count the Ready nodes

    kubectl describe node $(kubectl get nodes | grep -v NotReady | grep Ready | awk '{print $1}') | grep Taint | grep -vc NoSchedule > <output-file>
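
The one-liner above has two stages, which can be tried without a cluster. The sketch below runs the same filters on made-up sample text; the node names and the taint lines are hypothetical. Note that `grep -v NotReady` has to come first, because `grep Ready` alone would also match `NotReady`.

```shell
# Hypothetical `kubectl get nodes` output, used only for illustration.
sample_nodes='NAME     STATUS     ROLES    AGE   VERSION
master   Ready      master   10d   v1.21.1
node1    Ready      <none>   10d   v1.21.1
node2    NotReady   <none>   10d   v1.21.1'

# Stage 1: drop NotReady rows, keep Ready rows, print the node names.
ready_nodes=$(printf '%s\n' "$sample_nodes" | grep -v NotReady | grep Ready | awk '{print $1}')

# Hypothetical "Taints:" lines, as `kubectl describe node` might print
# them for the two Ready nodes.
sample_taints='Taints:             node-role.kubernetes.io/master:NoSchedule
Taints:             <none>'

# Stage 2: count the Taints lines that do not mention NoSchedule.
schedulable=$(printf '%s\n' "$sample_taints" | grep Taint | grep -vc NoSchedule)

echo "ready nodes: $ready_nodes"
echo "schedulable: $schedulable"
```

With this sample data, two nodes are Ready and one of them carries no `NoSchedule` taint, so the count written to the output file would be 1.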

11、Configure a multi-container Pod

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: kuc4
      name: kuc4
    spec:
      containers:
      - image: nginx
        name: kuc4
      - image: redis
        name: redis
      - image: memcached
        name: memcached

12、PV

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: app-data
    spec:
      capacity:
        storage: 2Gi
      accessModes:
      - ReadWriteOnce
      hostPath:
        path: "/srv/app-data"

13、PVC

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pv-volume
    spec:
      storageClassName: csi-hostpath-sc
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: web-server
    spec:
      volumes:
      - name: task-pv-storage
        persistentVolumeClaim:
          claimName: pv-volume
      containers:
      - name: web-server
        image: nginx
        volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage

    kubectl edit pvc pv-volume --save-config

14、Extract error logs

    kubectl logs footbar | grep file-not-found | tee <log-file>
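
The filter itself can be tried on sample text. A minimal sketch, with made-up log lines standing in for the Pod's output and a temporary file standing in for the target log file:

```shell
# Hypothetical log lines; a real run would pipe `kubectl logs` instead.
sample_log='2021-06-01 start
2021-06-01 error file-not-found /tmp/a
2021-06-01 ok
2021-06-01 error file-not-found /tmp/b'

out_file=$(mktemp)

# Keep only the matching lines; tee writes them to the file and to stdout.
printf '%s\n' "$sample_log" | grep file-not-found | tee "$out_file"

# Count the lines that ended up in the file, then clean up.
matches=$(wc -l < "$out_file" | tr -d ' ')
rm -f "$out_file"
echo "matching lines: $matches"
```

Using `tee` rather than a plain redirect shows the matched lines on the terminal as well, which makes it easy to see at a glance whether anything matched.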

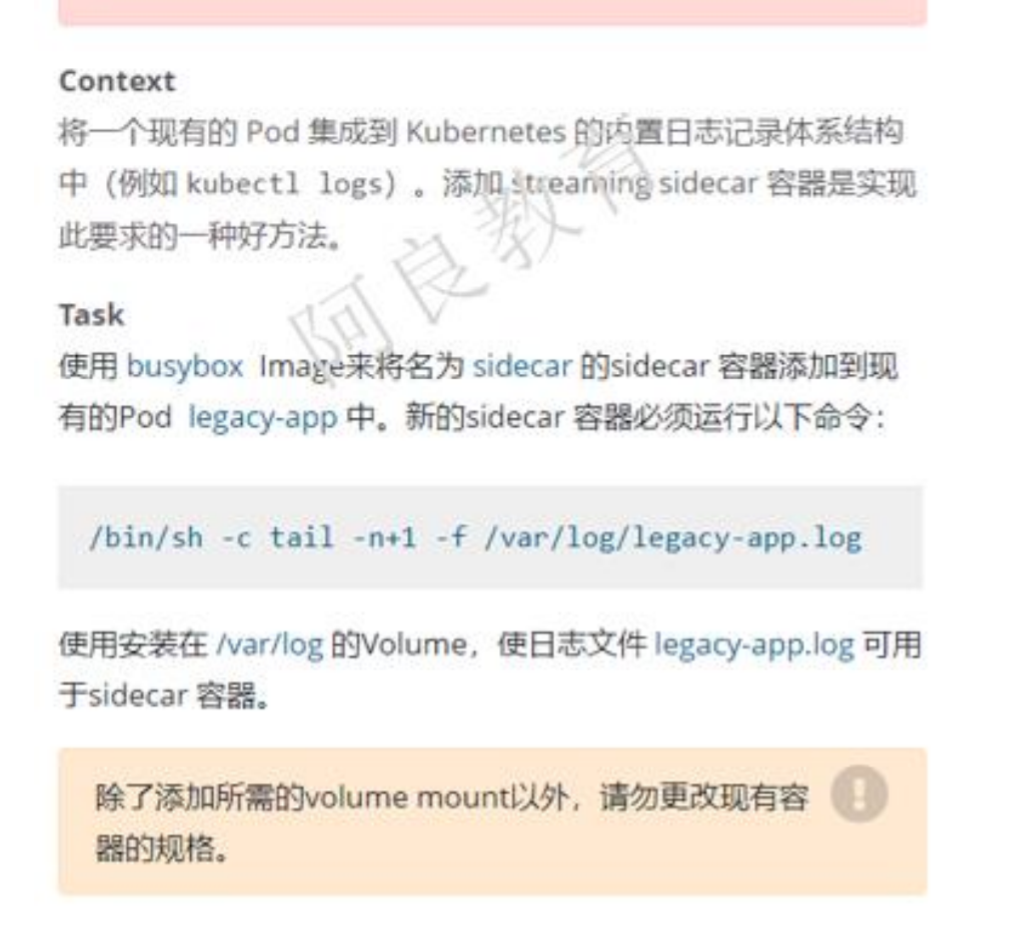

15、Sidecar container

    apiVersion: v1
    kind: Pod
    metadata:
      name: legacy-app
    spec:
      containers:
      - name: legacy-app
        image: busybox
        args:
        - /bin/sh
        - -c
        - >
          i=0;
          while true;
          do
            echo "$i: $(date)" >> /var/log/1.log;
            echo "$(date) INFO $i" >> /var/log/2.log;
            i=$((i+1));
            sleep 1;
          done
        volumeMounts:
        - name: varlog
          mountPath: /var/log
      - name: sidecar
        image: busybox
        # the tailed path must match the file the application actually writes
        args: [/bin/sh, -c, 'tail -n+1 -f /var/log/legacy-app.log']
        volumeMounts:
        - name: varlog
          mountPath: /var/log
      volumes:
      - name: varlog
        emptyDir: {}

16、Find the Pod with the highest CPU usage

    kubectl top pod -l name=cpu-utilizer --sort-by=cpu -A
    echo "<pod-name>" > <output-file>
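
Instead of copying the name by hand, the first data row of the sorted output can be extracted with `awk`. The sketch below works on made-up sample output; the Pod names and values are hypothetical. With `-A` the Pod name is in the second column, after the namespace.

```shell
# Hypothetical `kubectl top pod -A --sort-by=cpu` output (highest CPU first).
sample_top='NAMESPACE     NAME        CPU(cores)   MEMORY(bytes)
default       cpu-hog-2   950m         20Mi
default       cpu-hog-1   120m         18Mi
kube-system   cpu-hog-3   15m          10Mi'

# Row 1 is the header, so row 2 is the busiest Pod; column 2 is its name.
top_pod=$(printf '%s\n' "$sample_top" | awk 'NR==2 {print $2}')
echo "$top_pod"
```

Against a live cluster the same `awk` expression would be appended to the `kubectl top pod` command, and its output redirected to the answer file.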

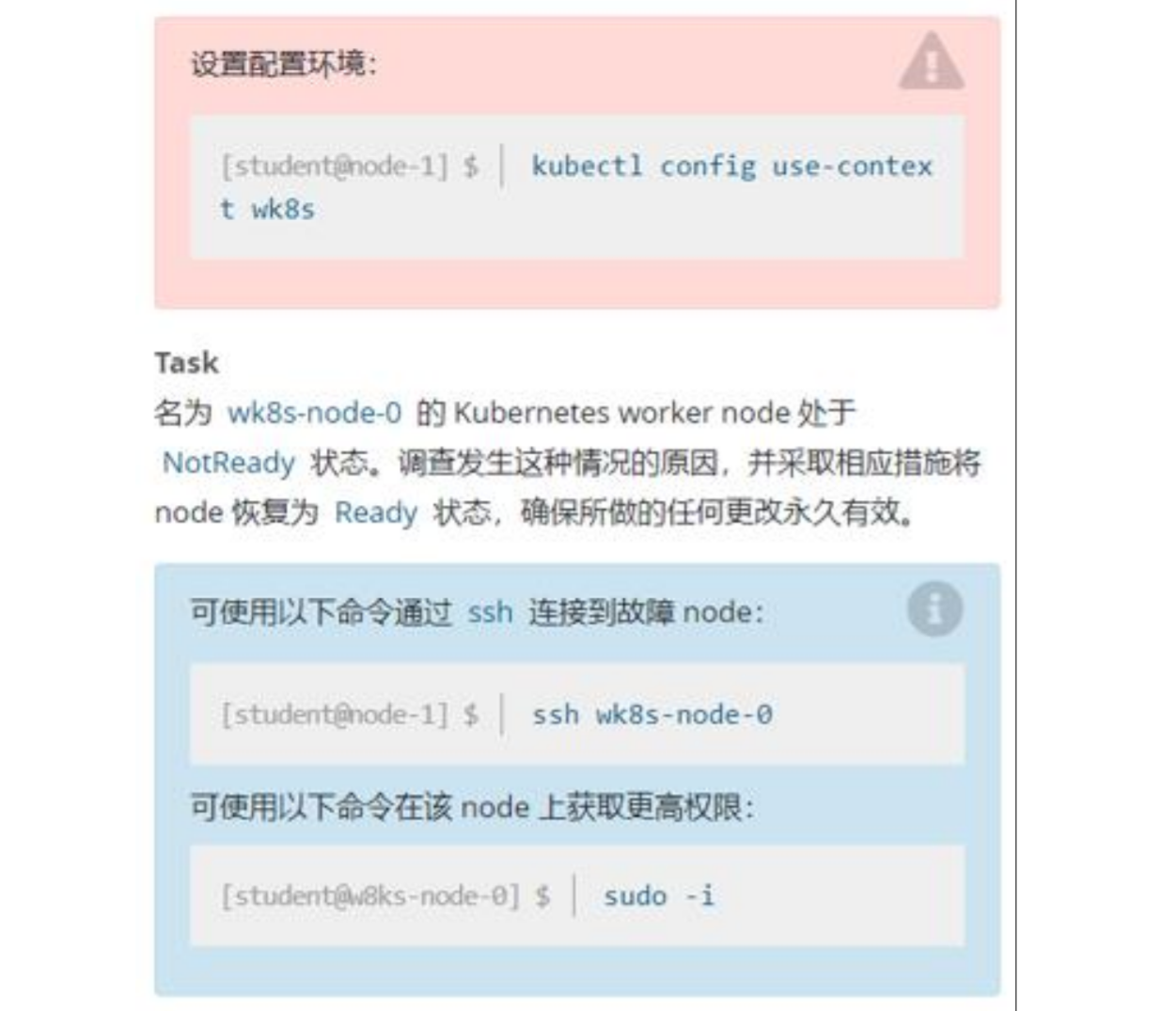

17、Fix a NotReady node

    ssh ..
    sudo -i
    systemctl status kubelet
    systemctl start kubelet
    kubectl get nodes