# 1. Ansible Components

○ Ansible

The Ansible command-line tool and core executor. One-off (ad-hoc) operations are all run through this command.

○ Ansible Playbook

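A playbook (also called a task set) is a YAML configuration file that defines and orchestrates a set of Ansible tasks. Ansible executes those tasks in order.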

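○ Inventory

The list of managed hosts and host groups. The default file is /etc/ansible/hosts, and a different file can be passed with `-i`.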

○ Modules

The functional modules Ansible uses to carry out work. As of Ansible 2.3 there were 1039 modules in total, and custom modules can also be written.

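○ Plugins

Plugins extend what modules can do. Common types are connection plugins, loop plugins, variable plugins and filter plugins. Plugins are used relatively rarely.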

○ API

An application programming interface that third-party programs can call.

# 2. Installation and Deployment

## 1. YUM

```bash
yum install -y epel-release
yum install -y ansible
```

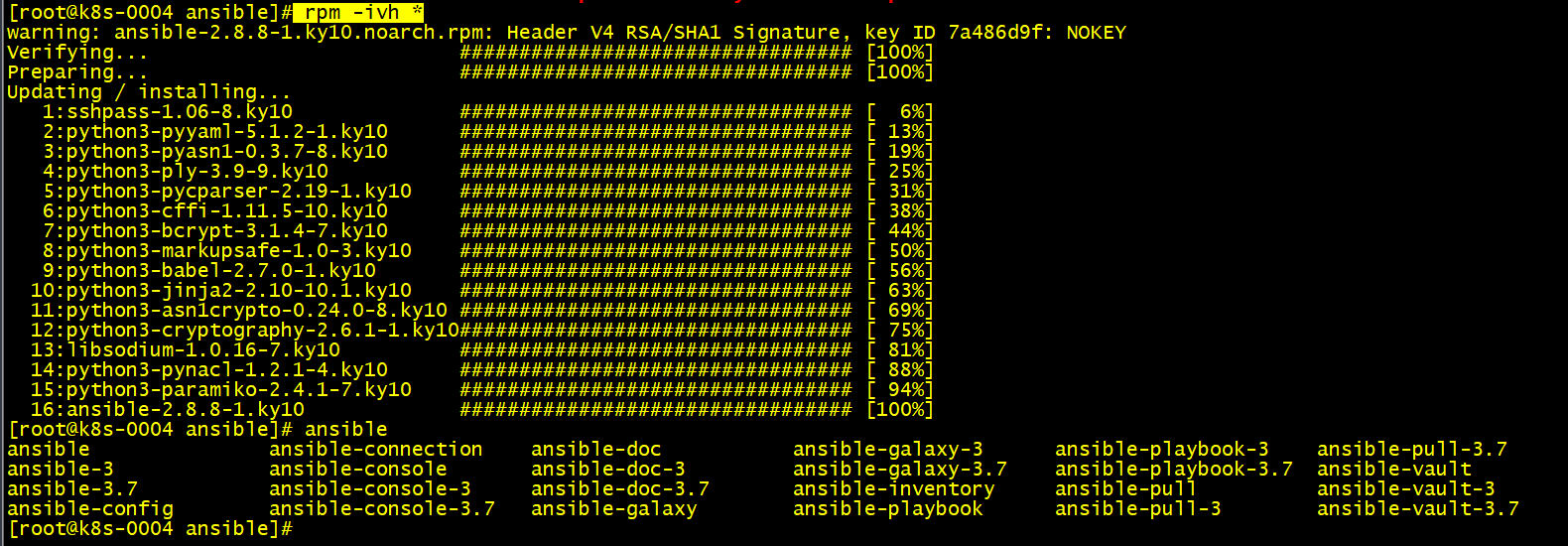

## 2. RPM (ARM architecture, Kylin V10)

> Download: https://github.com/geray-zsg/geray-zsg.github.io/tree/main/ansible-arm64
>
> On x86_64 (amd64) machines you can download the official Ansible release directly and upload it to the server:

https://releases.ansible.com/ansible/ansible-2.9.9.tar.gz

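```bash
# Install a single RPM
rpm -ivh <rpm package>
```

> When installing packages one at a time, the order has to be worked out from their dependencies. You can also install all of them in one command and let rpm order them:

```bash
rpm -ivh *
```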

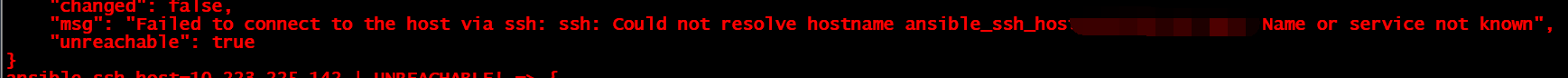

Testing connectivity with the ping module failed with:

```
Could not resolve hostname ansible_ssh_host=xxxxxxxx: Name or service not known
```

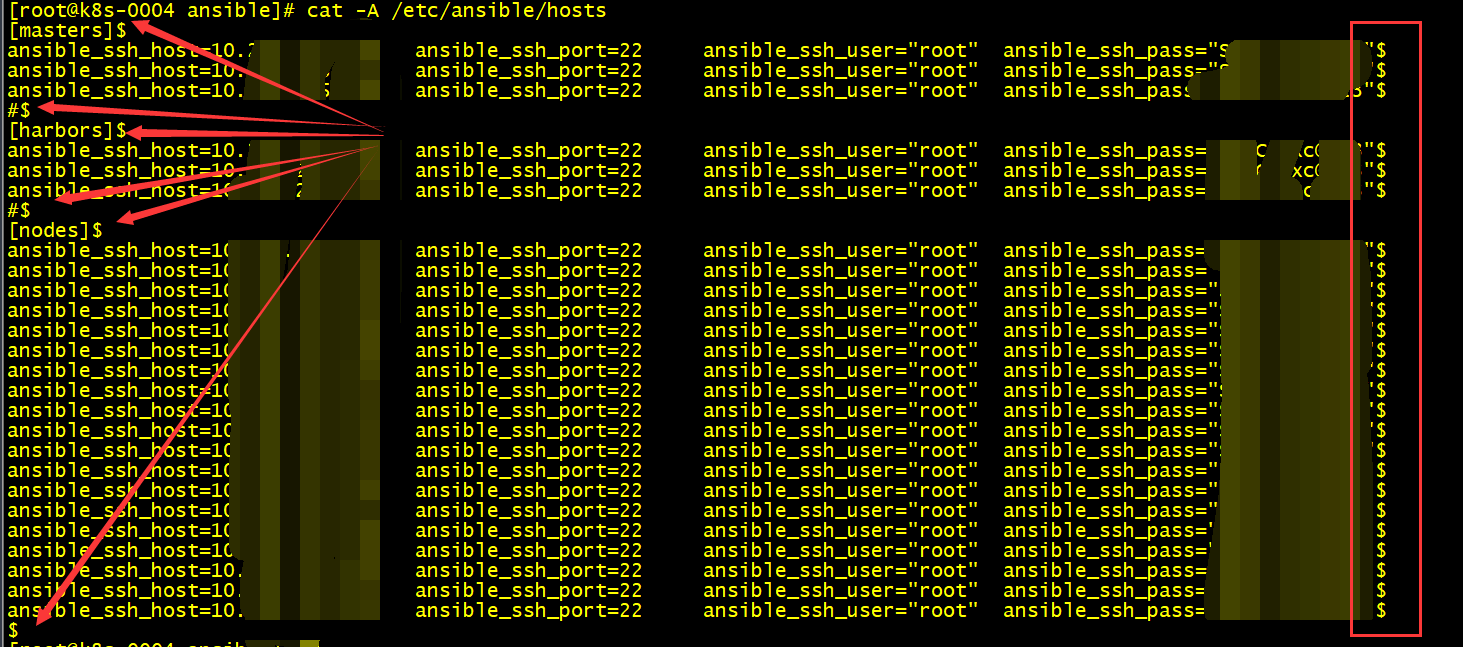

Running `cat -A /etc/ansible/hosts` showed a `$` at the end of every line.
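`cat -A` marks the end of every line with `$`, so that symbol by itself is expected. What typically breaks inventory parsing is whatever appears just before it. Look for `^M`, which comes from Windows CRLF line endings, or `M-` sequences, which indicate non-ASCII bytes such as a non-breaking space pasted from a web page. Below is a minimal sketch for finding such characters. It assumes GNU grep, and the function name `check_inventory` is hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: flag inventory lines that contain hidden characters:
#  - a carriage return (CR) left by Windows-style CRLF line endings
#  - non-ASCII or control bytes, e.g. the UTF-8 non-breaking space (0xC2 0xA0)
# Prints offending lines with their line numbers; returns 1 if any were found.
check_inventory() {
  local file="$1" status=0
  # LC_ALL=C makes grep compare raw bytes instead of decoding UTF-8
  if LC_ALL=C grep -n $'\r' "$file"; then
    echo "CRLF line endings in $file (strip with: sed -i 's/\r\$//' $file)" >&2
    status=1
  fi
  # In the C locale [:print:] is ASCII 0x20-0x7E, so bytes >= 0x80 match here
  if LC_ALL=C grep -n '[^[:print:][:space:]]' "$file"; then
    echo "non-ASCII or control bytes in $file (check for non-breaking spaces)" >&2
    status=1
  fi
  return "$status"
}
```

For example, `check_inventory /etc/ansible/hosts` prints each suspicious line with its number. A clean file produces no output and returns 0.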

# 3. Automated Batch Management

1. Write the hosts inventory, then run `ansible all --list` to check that it is configured correctly.

2. Encrypt the inventory

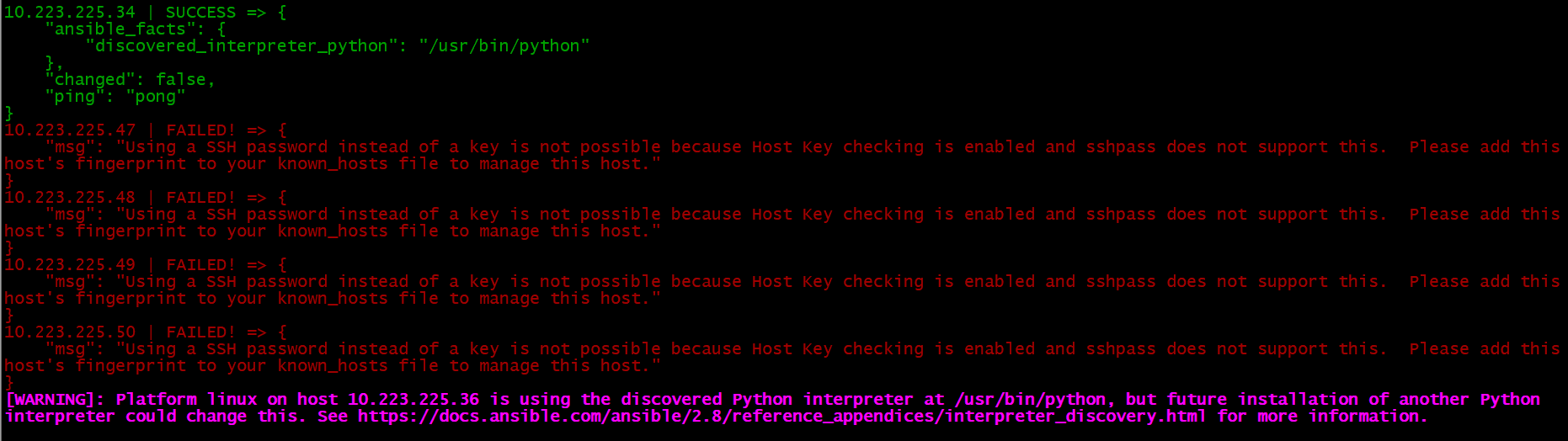

3. Test the inventory with the ping module: `ansible all -i /etc/ansible/hosts -m ping`

:::info

If the following error appears, try uncommenting `host_key_checking = False` in /etc/ansible/ansible.cfg:

```
10.223.225.47 | FAILED! => {
    "msg": "Using a SSH password instead of a key is not possible because Host Key checking is enabled and sshpass does not support this. Please add this host's fingerprint to your known_hosts file to manage this host."
}
```

:::
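Instead of editing ansible.cfg by hand, you can uncomment the setting with sed. The sketch below assumes the stock commented-out line `#host_key_checking = False` is present, as it is in the default /etc/ansible/ansible.cfg. The function name is hypothetical. For a single run, Ansible also honors the environment variable `ANSIBLE_HOST_KEY_CHECKING=False`.

```bash
#!/usr/bin/env bash
# Sketch: uncomment "host_key_checking = False" in an ansible.cfg.
# Assumes the stock line "#host_key_checking = False" exists; if it does not,
# the file is left unchanged (apart from the backup copy).
disable_host_key_checking() {
  local cfg="${1:-/etc/ansible/ansible.cfg}"
  cp -p "$cfg" "$cfg.bak"   # keep a backup before editing in place
  # Drop the leading '#' (and any spaces after it) only on this one setting
  sed -i 's/^#[[:space:]]*\(host_key_checking[[:space:]]*=[[:space:]]*False\)/\1/' "$cfg"
}
```

With this setting off, Ansible accepts whatever host key a machine presents. That is the trade-off being made for password-based batch management on a closed intranet.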

4. Write the playbook

> Reference: https://www.yuque.com/geray-alxoc/cl987x/detc5p#qlXMw

# 4. Playbook

## 1. Introduction

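A playbook is the script Ansible uses to configure, deploy and manage managed nodes.

A playbook describes a series of tasks in detail, and executing them brings the remote hosts to the desired state. It works like a to-do list that the Ansible control node hands to the managed nodes, and the managed nodes must complete every item.

As the name suggests, a playbook is a script. In real life, actors perform according to a script. In Ansible, the managed machines are the performers: they install and deploy applications, provide external services, and handle all kinds of other work.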

## 2. Format Overview

### 1) Example: installing and running MySQL

```yaml
---
- hosts: node1
  remote_user: root
  tasks:
    - name: install mysql-server package
      yum: name=mysql-server state=present
    - name: starting mysqld service
      service: name=mysqld state=started
```

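:::info

Taking the example above: the file name should end in .yml.

hosts:

`hosts` specifies which host or host group runs the tasks below. Every play must specify hosts, and wildcard patterns are allowed. Hosts and groups are defined in the inventory. You can use the system default /etc/ansible/hosts or your own file, which you pass with the `-i` option at run time. The `--list-hosts` option shows which hosts will take part in executing the tasks.

remote_user:

Specifies the user that logs in to the remote host and executes the tasks there. Any user can be specified, and sudo can also be used, but the user must have permission to perform the tasks.

tasks:

Specifies the series of actions the remote host will perform. The core of each task is an Ansible module, whose usage was covered earlier. A task contains a `name` and the module to execute. The `name` is optional and only aids readability. The module is required and must be given the appropriate arguments.

:::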

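> + When a playbook is run with ansible-playbook, the output (module results in JSON format) is color-coded for easy identification.
> + Green means the task succeeded and the system was left unchanged. Yellow means the system state was changed. Red means the task failed, and the error output is shown.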

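### 2) Core elements

+ Hosts: the host group; an inventory entry looks like `host_name="caas-kc1" ansible_ssh_host=10.223.225.7 ansible_ssh_port=22 ansible_ssh_user="root" ansible_ssh_pass="MeJYj9za5Hk9"`

+ Tasks: the task list

+ Variables: variables, which can be set in four ways

+ Templates: text files that contain template (Jinja2) syntax

+ Handlers: tasks that are triggered by specific conditions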

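### 3) Basic components

+ Hosts: the target hosts that run the specified tasks
+ remote_user: the user that executes the tasks on the remote hosts
+ sudo_user: the user to run tasks as via sudo (older syntax; newer Ansible versions use become/become_user)
+ tasks: the task list

The format of tasks:

```yaml
tasks:
  - name: TASK_NAME
    module: arguments
    notify: HANDLER_NAME
handlers:
  - name: HANDLER_NAME
    module: arguments
```

A module and its arguments can be written in two forms: (1) `action: module arguments` or (2) `module: arguments`. Note that the shell and command modules take the command directly, not a key=value argument list.

+ handlers: tasks triggered under specific conditions, i.e. when another task notifies them.

- When a task's status is `changed` after it runs, it can notify the corresponding handler with `notify`.
- Tasks can be labeled with `tags`, and you can then select those tags with `-t` on the ansible-playbook command line.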

### 4) Example: installing Nginx

```bash
[root@ansible ~]# cd /etc/ansible/
[root@ansible ansible]# ls
ansible.cfg  hosts  nginx.yml  roles
[root@ansible ansible]# cat nginx.yml
```

```yaml
---
- hosts: web
  remote_user: root
  tasks:
    - name: install nginx          # yum module; the managed hosts need an nginx repository configured
      yum: name=nginx state=present
    - name: copy nginx.conf        # copy the nginx config over; edit nginx.conf under /tmp on the control node first
      copy: src=/tmp/nginx.conf dest=/etc/nginx/nginx.conf backup=yes
      notify: reload               # notify the handler when nginx.conf changes
      tags: reloadnginx            # tag
    - name: start nginx service    # service module starts nginx
      service: name=nginx state=started
      tags: startnginx             # tag
  handlers:
    - name: reload
      service: name=nginx state=restarted
```

Run the test:

```bash
ansible-playbook nginx.yml
```

- Test the tags. nginx.yml is already tagged, so you can select the tags directly. First stop the service, then run the playbook with a tag:

```bash
# Stop nginx on the managed nodes
ansible web -m shell -a 'systemctl stop nginx'
# Re-run the playbook with the startnginx tag
ansible-playbook nginx.yml -t startnginx
# Re-run the playbook with the reloadnginx tag
ansible-playbook nginx.yml -t reloadnginx
```

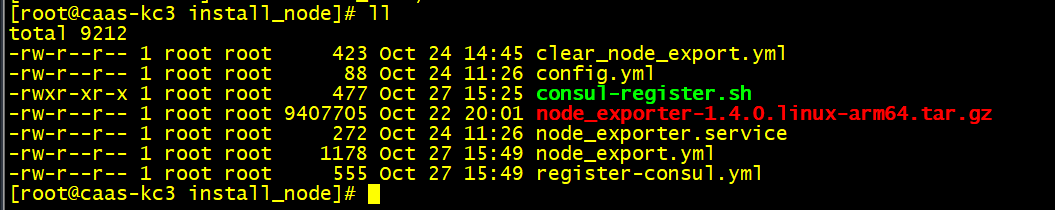

# 5. Deploying node_exporter Monitoring with a Playbook

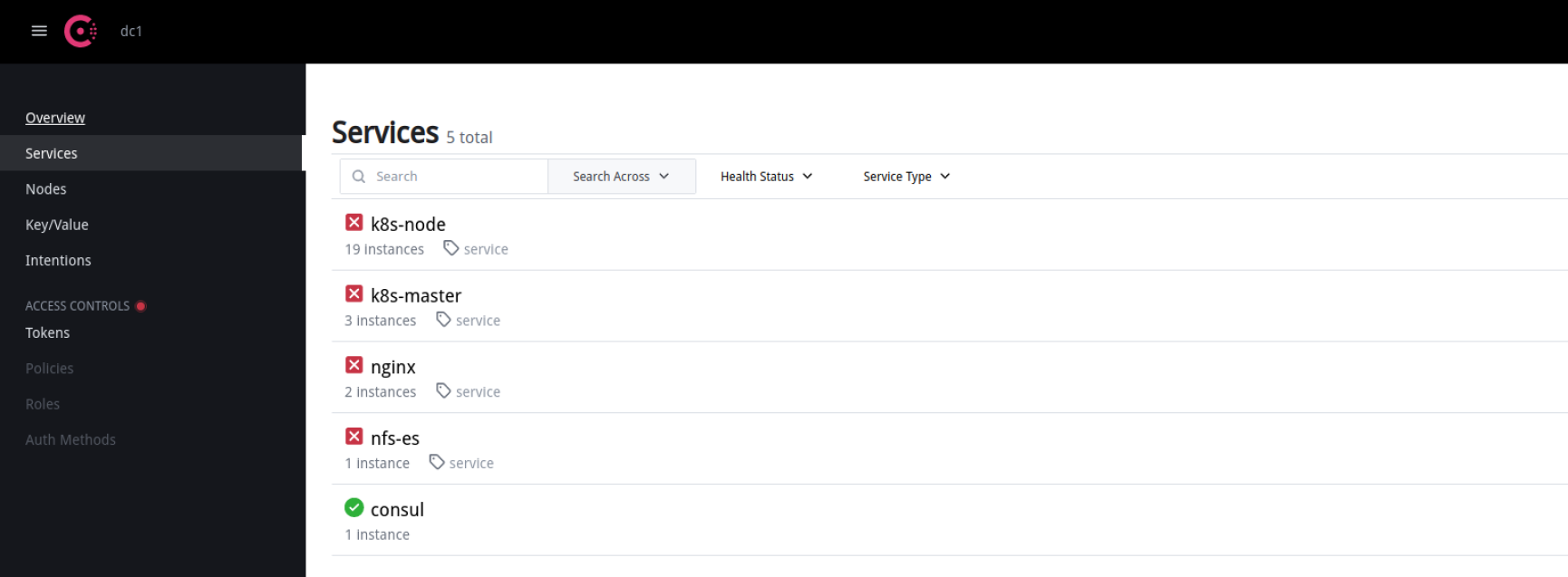

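0. Deploy Consul

Stateful service:

- Image: 10.223.225.56:32000/library/consul:1.13.3
- Container name: consul
- Persistence: /consul/data (read-write)
- Environment variable: TZ=Asia/Shanghai
- Port: 8500=30085 (container port 8500 exposed as 30085)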

1. playbook (deployment)

1. Consul service registration script

consul-register.sh:

```bash
#!/bin/bash
inventory_hostname=$1   # host name       arg 1: inventory_hostname[5:]
group_names=$2          # group name, e.g. [web] in hosts   arg 2: group_names[0]
ansible_ssh_host=$3     # IP address      arg 3: ansible_ssh_host
exporter_port=$4        # service port    arg 4: exporter_port
echo $inventory_hostname $group_names $ansible_ssh_host $exporter_port
curl -X PUT -d '{"id": "'"${inventory_hostname}"'","name": "'"${group_names}"'","address": "'"${ansible_ssh_host}"'","port": '"${exporter_port}"',"tags": ["service"],"checks": [{"http": "http://'"${ansible_ssh_host}"':'"${exporter_port}"'","interval": "5s"}]}' http://10.223.225.55:30085/v1/agent/service/register
```
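The nested single and double quotes in the curl payload are easy to break when editing. Below is a sketch that builds the same JSON with `printf` and rejects a non-numeric port. The function name `consul_payload` is hypothetical. Values are inserted verbatim, so they must not contain double quotes or backslashes. Host names, group names and IPv4 addresses are fine.

```bash
#!/usr/bin/env bash
# Sketch: build the Consul registration JSON used by consul-register.sh.
# Arguments: instance id, service name, address, port.
consul_payload() {
  local id="$1" name="$2" addr="$3" port="$4"
  # The port is emitted as a bare JSON number, so it must be all digits
  case "$port" in
    ''|*[!0-9]*) echo "port must be numeric: $port" >&2; return 1 ;;
  esac
  printf '{"id": "%s","name": "%s","address": "%s","port": %s,"tags": ["service"],"checks": [{"http": "http://%s:%s","interval": "5s"}]}\n' \
    "$id" "$name" "$addr" "$port" "$addr" "$port"
}
```

Usage, sending the payload on stdin to the endpoint from the script above: `consul_payload caas-kc1 k8s-master-hosts 10.223.225.7 9110 | curl -X PUT --data @- http://10.223.225.55:30085/v1/agent/service/register`.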

2. node_exporter.service

```ini
[Unit]
Description=node_exporter

[Service]
ExecStart=/opt/node_exporter/node_exporter --web.listen-address=0.0.0.0:9110 --web.config=/opt/node_exporter/config.yml
ExecReload=/bin/kill -HUP $MAINPID
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

3. node_exporter authentication config (bcrypt-hashed password)

config.yml:

```yaml
basic_auth_users:
  admin: $2y$12$Ny/LBvgnADZpCZzHIg1CQOOlvnCpPZweZd/ULT9BS159BUAQKijqq
```
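The value in config.yml is a bcrypt hash, not the password itself. Below is a sketch that writes the file from a user name and a hash. The function name is hypothetical. Producing the hash assumes Apache's `htpasswd` (package httpd-tools) is installed. Its `$2y$` output matches the format above.

```bash
#!/usr/bin/env bash
# Sketch: write a node_exporter web config (config.yml) for basic auth.
# Arguments: user name, bcrypt hash, output file.
write_web_config() {
  local user="$1" hash="$2" out="$3"
  # printf '%s' inserts the "$2y$12$..." hash literally (no shell expansion)
  printf 'basic_auth_users:\n  %s: %s\n' "$user" "$hash" > "$out"
}
```

Usage: `hash=$(htpasswd -nbBC 12 "" 'your-password' | tr -d ':\n')` followed by `write_web_config admin "$hash" config.yml`. Here `-B` selects bcrypt and `-C 12` sets the cost used in the hash above.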

4. Playbook for deploying node_exporter

- Playbook for batch-deploying node_exporter

node_export.yml:

```yaml
---
- hosts: k8s-master
  remote_user: root
  tasks:
    - name: "Upload node_exporter and extract it"
      unarchive: src=node_exporter-1.4.0.linux-arm64.tar.gz dest=/opt/ backup=yes
    - name: 'Rename the directory'
      # creates=: skip the mv on re-runs once /opt/node_exporter already exists
      shell: mv /opt/node_exporter-1.4.0.linux-arm64 /opt/node_exporter creates=/opt/node_exporter
    - name: 'Copy node_exporter.service'
      copy: src=node_exporter.service dest=/usr/lib/systemd/system/ backup=yes
    - name: 'Copy the config.yml authentication file'
      copy: src=config.yml dest=/opt/node_exporter/ backup=yes
    - name: 'Start the node_exporter service'
      systemd: name=node_exporter state=restarted enabled=yes daemon_reload=yes
    #- name: 'Start the node_exporter service'
    #  shell: systemctl daemon-reload && systemctl start node_exporter && systemctl enable node_exporter
    - name: 'Check the node_exporter status'
      shell: systemctl status node_exporter | grep running
      register: status_result
      ignore_errors: True
    - name: node_exporter is Running
      debug: msg="node_exporter is running, OK"
      when: status_result is success
    - name: node_exporter is Failed
      debug: msg="node_exporter is failed, Failed"
      when: status_result is failed
```

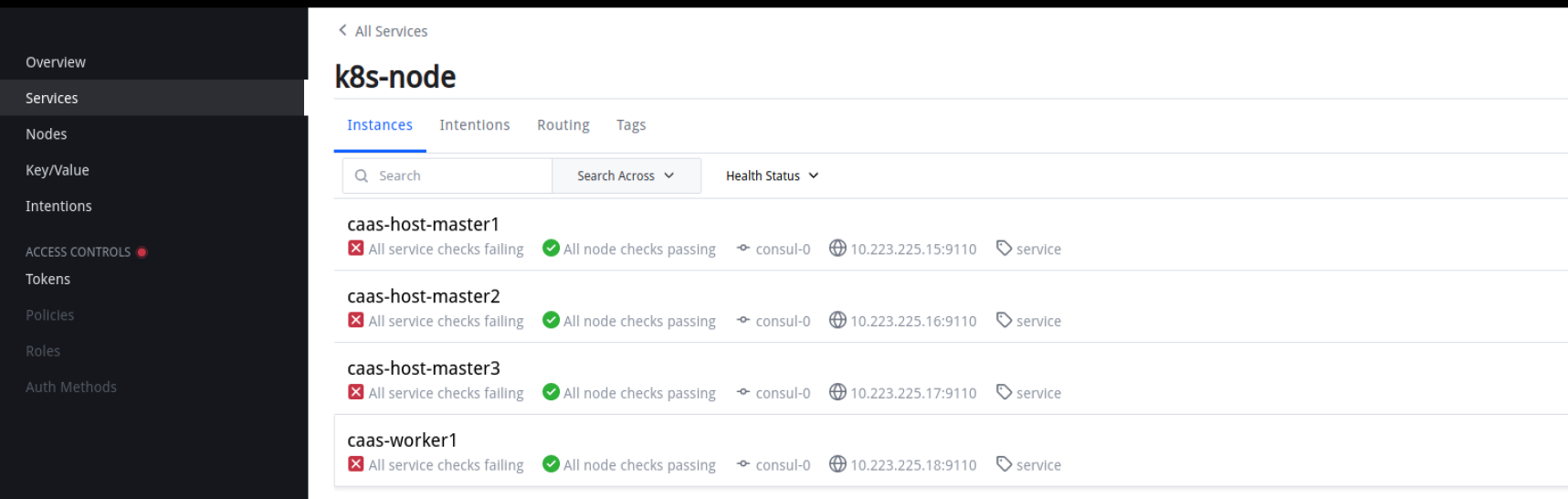

- Consul service registration API

Instances with different IDs but the same name are registered as instances of one service, for example:

```
"id": "web1", "name": "webservers"
"id": "web2", "name": "webservers"
```

```bash
#curl -X PUT -d '{"id": "<host name>","name": "<group name>","address": "<IP>","port": <PORT>,"tags": ["<tag>"],"checks": [{"http": "http://192.168.6.32:9100","interval": "5s"}]}' http://192.168.6.31:8500/v1/agent/service/register
```

- Ansible playbook that registers the services with Consul

register-consul.yml:

```yaml
---
#- hosts: k8s-master
- hosts: nfs-es
  remote_user: root
  vars:
    exporter_port: 9110
  tasks:
    - name: 'Push the registration script'
      copy: src=consul-register.sh dest=/usr/local/bin/ backup=yes
    - name: 'debug'
      debug: msg='{{ group_names[0] }} {{ inventory_hostname[5:] }} {{exporter_port}} {{ ansible_ssh_host }}'
    - name: 'Register'
      shell: |
        chmod +x /usr/local/bin/consul-register.sh
        /usr/local/bin/consul-register.sh {{ inventory_hostname[5:] }} {{ group_names[0] }} {{ ansible_ssh_host }} {{ exporter_port }}
```

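Deleting a service:

- A service cannot be deleted directly (at least I have not found a way).
- Instead, deregister every instance under the service (here, each host name).

```bash
# curl --request PUT http://10.223.225.55:30085/v1/agent/service/deregister/<instance name>
curl --request PUT http://10.223.225.55:30085/v1/agent/service/deregister/caas-kc1
```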
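Deregistering instances one by one gets tedious when a service has many hosts. The sketch below loops over instance IDs with the same deregister endpoint. It assumes `CONSUL_ADDR` defaults to the address used above, and `DRY_RUN=1` only prints the requests. Both variable names and the function name are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: deregister several Consul service instances in one call.
# CONSUL_ADDR overrides the agent address; DRY_RUN=1 prints instead of sending.
consul_deregister() {
  local addr="${CONSUL_ADDR:-http://10.223.225.55:30085}" id
  for id in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "PUT $addr/v1/agent/service/deregister/$id"
    else
      # --fail makes curl return non-zero on HTTP errors, stopping the loop
      curl --fail --silent --show-error --request PUT \
        "$addr/v1/agent/service/deregister/$id" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 consul_deregister caas-kc1 caas-kc2` shows both requests. Dropping `DRY_RUN=1` sends them.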

5. Check and run the playbooks

```bash
# Check (dry run)
#ansible-playbook -C <playbook.yaml>
# Execute
#ansible-playbook <playbook.yaml>
ansible-playbook -C node_export.yml
ansible-playbook -C register-consul.yml
ansible-playbook node_export.yml
ansible-playbook register-consul.yml

# Open the firewall
#firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="10.223.225.0/24" port protocol="tcp" port="9110" accept'
firewall-cmd --zone=public --add-port=9110/tcp --permanent
firewall-cmd --reload

# Open the firewall in batch with ansible
ansible mysql-1 -m shell -a "firewall-cmd --zone=public --add-port=9110/tcp --permanent"
ansible mysql-1 -m shell -a "firewall-cmd --reload"
```

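- The red crosses (failing health checks in Consul) appear mainly because node_exporter has username/password authentication enabled, so the health check cannot pass.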
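Consul's HTTP checks accept a `Header` map, so a check can send the basic-auth credentials that node_exporter now requires. The sketch below builds such a check object to use in place of the `checks` entry in the registration JSON. The function name is hypothetical and the credentials are placeholders. Keep in mind that the encoded credentials are then stored in Consul's check definition.

```bash
#!/usr/bin/env bash
# Sketch: Consul HTTP check object that sends an Authorization header.
# Arguments: address, port, basic-auth user, basic-auth password.
consul_auth_check() {
  local addr="$1" port="$2" user="$3" pass="$4" token
  # "Basic " + base64("user:pass"); -w0 (GNU coreutils) disables line wrapping
  token=$(printf '%s:%s' "$user" "$pass" | base64 -w0)
  printf '{"http": "http://%s:%s","interval": "5s","Header": {"Authorization": ["Basic %s"]}}\n' \
    "$addr" "$port" "$token"
}
```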

6. Add to Prometheus

```yaml
- job_name: 'nginx-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['nginx-hosts']
- job_name: 'nfs-es-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['nfs-es-hosts']
- job_name: 'k8s-master-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['k8s-master-hosts']
- job_name: 'k8s-node-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['k8s-node-hosts']
- job_name: 'mysql57-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['mysql57-hosts']
- job_name: 'mysql58-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['mysql58-hosts']
```
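These jobs differ only in the service name, so they can be generated rather than copied by hand. Below is a sketch that prints one job per Consul service. The server address and user name are taken from the jobs above. The function name is hypothetical, and the password is passed as the first argument.

```bash
#!/usr/bin/env bash
# Sketch: print one Prometheus scrape job per Consul service name.
# Arguments: basic-auth password, then one or more service names.
gen_consul_jobs() {
  local pass="$1" server="10.223.225.55:30085" svc
  shift
  for svc in "$@"; do
    # Unquoted EOF so ${svc}, ${pass} and ${server} are expanded
    cat <<EOF
- job_name: '${svc}'
  basic_auth:
    username: admin
    password: ${pass}
  consul_sd_configs:
    - server: ${server}
      services: ['${svc}']
EOF
  done
}
```

For example, `gen_consul_jobs 'your-password' nginx-hosts nfs-es-hosts k8s-master-hosts k8s-node-hosts mysql57-hosts mysql58-hosts` prints all six jobs, ready to paste under `scrape_configs:`.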

7. Add Grafana

https://grafana.com/grafana/dashboards/

Common Grafana monitoring dashboards (51CTO blog)

- Dashboard ID: 8919

- Overview of all nodes

2. playbook (cleanup)

```yaml
---
- hosts: web   # use all for every group
  remote_user: root
  tasks:
    - name: 'Stop the node_exporter service'
      shell: systemctl disable node_exporter && systemctl stop node_exporter
    - name: 'Remove node_exporter.service'
      file:
        path: /usr/lib/systemd/system/node_exporter.service
        state: absent
    - name: "Remove the extracted node_exporter files"
      file:
        path: /opt/node_exporter
        state: absent
```

# 5. Monitoring MySQL

1. MySQL monitoring service

1) mysqld_exporter.service

mysqld_exporter.service:

```ini
[Unit]
Description=mysqld_exporter

[Service]
ExecStart=/opt/mysqld_exporter/mysqld_exporter --web.config.file=/opt/mysqld_exporter/config.yml --config.my-cnf=/opt/mysqld_exporter/.my.cnf
ExecReload=/bin/kill -HUP $MAINPID
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

`--config.my-cnf` points to the file that holds the MySQL connection settings. Here it is /opt/mysqld_exporter/.my.cnf. The default is `$HOME/.my.cnf`, i.e. /root/.my.cnf when the exporter runs as root.

2) .my.cnf

```ini
[client]
host=127.0.0.1
port=3366
user=exporter
password=2@42AMFtnNY4
```
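Because .my.cnf holds a plaintext password, it is worth creating it readable by its owner only. In the playbook's copy task, the equivalent is adding `mode: '0600'`. Below is a sketch under those assumptions. The function name is hypothetical and the values mirror the file above.

```bash
#!/usr/bin/env bash
# Sketch: write the mysqld_exporter client config with owner-only permissions.
# Arguments: output file, MySQL user, password, port (default 3306).
write_my_cnf() {
  local out="$1" user="$2" pass="$3" port="${4:-3306}"
  # umask 077 in a subshell creates a new file as 0600 from the start;
  # chmod covers the case where the file already existed
  ( umask 077 && printf '[client]\nhost=127.0.0.1\nport=%s\nuser=%s\npassword=%s\n' \
      "$port" "$user" "$pass" > "$out" ) && chmod 600 "$out"
}
```

For example, `write_my_cnf /opt/mysqld_exporter/.my.cnf exporter 'your-password' 3366`.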

3) config.yml

```yaml
basic_auth_users:
  admin: $2y$12$Ny/LBvgnADZpCZzHIg1CQOOlvnCpPZweZd/ULT9BS159BUAQKijqq
```

4) mysqld_exporter.yml

```yaml
---
- hosts: dbservers
  remote_user: root
  tasks:
    - name: "Upload mysqld_exporter and extract it"
      unarchive: src=mysqld_exporter-0.14.0.linux-arm64.tar.gz dest=/opt/ backup=yes
    - name: 'Rename the directory'
      # creates=: skip the mv on re-runs once /opt/mysqld_exporter already exists
      shell: mv /opt/mysqld_exporter-0.14.0.linux-arm64 /opt/mysqld_exporter creates=/opt/mysqld_exporter
    - name: 'Copy mysqld_exporter.service'
      copy: src=mysqld_exporter.service dest=/usr/lib/systemd/system/ backup=yes
    - name: 'Copy the config.yml authentication file'
      copy: src=config.yml dest=/opt/mysqld_exporter/ backup=yes
    - name: 'Copy the .my.cnf file'
      copy: src=.my.cnf dest=/opt/mysqld_exporter/ backup=yes
    - name: 'Start the mysqld_exporter service'
      systemd: name=mysqld_exporter state=restarted enabled=yes daemon_reload=yes
    - name: 'Check the mysqld_exporter status'
      shell: systemctl status mysqld_exporter | grep running
      register: status_result
      ignore_errors: True
    - name: mysqld_exporter is Running
      debug: msg="mysqld_exporter is running, OK"
      when: status_result is success
    - name: mysqld_exporter is Failed
      debug: msg="mysqld_exporter is failed, Failed"
      when: status_result is failed
```

5) Run the deployment

```bash
ansible-playbook -C mysqld_exporter.yml
ansible-playbook mysqld_exporter.yml

firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="10.223.225.0/24" port protocol="tcp" port="9104" accept'
# firewall-cmd --zone=public --add-port=9104/tcp --permanent
firewall-cmd --reload

# Open the firewall in batch with ansible
ansible mysqlServer1 -m shell -a "firewall-cmd --zone=public --add-port=9104/tcp --permanent"
ansible mysqlServer1 -m shell -a "firewall-cmd --reload"
ansible mysqlServer2 -m shell -a "firewall-cmd --zone=public --add-port=9104/tcp --permanent"
ansible mysqlServer2 -m shell -a "firewall-cmd --reload"
```

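6) Create the account and grant privileges

```sql
# Create the account and password
create user 'exporter'@'127.0.0.1' identified by '2@42AMFtnNY4';
# Grant privileges
grant PROCESS, REPLICATION CLIENT, SELECT on *.* to 'exporter'@'127.0.0.1';
# Reload the privilege tables
FLUSH PRIVILEGES;
```

```bash
# Test (MySQL listens on port 3366)
systemctl restart mysqld_exporter.service
journalctl -u mysqld_exporter -f
```

- Privileges not yet granted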

7) Register the service

- consul-register.sh

```bash
#!/bin/bash
inventory_hostname=$1
group_names=$2
ansible_ssh_host=$3
exporter_port=$4
echo $inventory_hostname $group_names $ansible_ssh_host $exporter_port
curl -X PUT -d '{"id": "'"${inventory_hostname}"'","name": "'"${group_names}"'","address": "'"${ansible_ssh_host}"'","port": '"${exporter_port}"',"tags": ["service"],"checks": [{"http": "http://'"${ansible_ssh_host}"':'"${exporter_port}"'","interval": "5s"}]}' http://10.223.225.55:30085/v1/agent/service/register
```

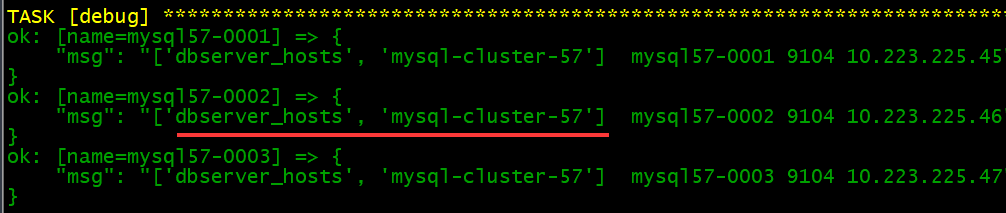

- register-consul.yml

```yaml
---
- hosts: mysql-cluster-57
  remote_user: root
  vars:
    exporter_port: 9104
  tasks:
    - name: 'Push the registration script'
      copy: src=consul-register.sh dest=/usr/local/bin/ backup=yes
    - name: 'debug'
      debug: msg='{{ group_names[1] }} {{ inventory_hostname[5:] }} {{exporter_port}} {{ ansible_ssh_host }}'
    - name: 'Register'
      shell: |
        chmod +x /usr/local/bin/consul-register.sh
        /usr/local/bin/consul-register.sh {{ inventory_hostname[5:] }} {{ group_names[1] }} {{ ansible_ssh_host }} {{ exporter_port }}
    - name: "Remove the consul-register.sh file"
      file:
        path: /usr/local/bin/consul-register.sh
        state: absent
```

`{{ group_names[1] }}`: the second value is used here because these hosts already belonged to another group when they were added earlier for host monitoring.

`{{ group_names }}` shows two group names. The first is the one used for host monitoring, and the second is the one for MySQL monitoring. Ansible sorts `group_names` alphabetically, so the index depends on the group names themselves.

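:::info

Note: the host-monitoring service was registered earlier. Registering again with the same instance ID (the host name here) overwrites that registration, so change the instance name and group name manually when registering.

:::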

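8) Add to Prometheus

```yaml
# job_name must be unique across scrape_configs; rename this job if the
# host-monitoring job of the same name is also configured
- job_name: 'mysql58-hosts'
  basic_auth:
    username: admin
    password: SZ_mssq@2022
  consul_sd_configs:
    - server: 10.223.225.55:30085
      services: ['mysql58-hosts']
```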

9) Add Grafana dashboards (IDs):

- 7362

- 7991

- 12826

# 6. Monitoring Kubernetes

1. K8S for Prometheus Dashboard 20211010 (Chinese edition) | Grafana Labs

- The dashboard comes from this author's repository:

https://github.com/starsliao/Prometheus/tree/master/kubernetes

- Add scrape jobs to Prometheus

```yaml
- job_name: 'k8s-cadvisor'
  metrics_path: /metrics/cadvisor
  kubernetes_sd_configs:
    - role: node
  relabel_configs:
    - source_labels: [__address__]
      regex: '(.*):10250'
      replacement: '${1}:10255'
      target_label: __address__
      action: replace
    - action: labelmap
      regex: __meta_kubernetes_node_label_(.+)
  metric_relabel_configs:
    - source_labels: [instance]
      separator: ;
      regex: (.+)
      target_label: node
      replacement: $1
      action: replace
    - source_labels: [pod_name]
      separator: ;
      regex: (.+)
      target_label: pod
      replacement: $1
      action: replace
    - source_labels: [container_name]
      separator: ;
      regex: (.+)
      target_label: container
      replacement: $1
      action: replace
- job_name: kube-state-metrics
  kubernetes_sd_configs:
    - role: endpoints
      namespaces:
        names:
          - ops-monit   # namespace where kube-state-metrics runs (default: kube-system)
  relabel_configs:
    - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
      regex: kube-state-metrics
      replacement: $1
      action: keep
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      target_label: k8s_namespace
    - source_labels: [__meta_kubernetes_service_name]
      action: replace
      target_label: k8s_sname
```

- Appeals (诉求) project configuration

```yaml
###--------------------------------------| k8s
- job_name: 'k8s-cadvisor'
  metrics_path: /metrics/cadvisor
  kubernetes_sd_configs:
    - role: node
      api_server: https://10.223.225.61:6443
      bearer_token_file: /dcos/prometheus/token.k8s
      tls_config:
        insecure_skip_verify: true
  bearer_token_file: /dcos/prometheus/token.k8s
  relabel_configs:
    - source_labels: [__address__]
      regex: '(.*):10250'
      replacement: '${1}:10255'
      target_label: __address__
      action: replace
    - action: labelmap
      regex: __meta_kubernetes_node_label_(.+)
  metric_relabel_configs:
    - source_labels: [instance]
      separator: ;
      regex: (.+)
      target_label: node
      replacement: $1
      action: replace
    - source_labels: [pod_name]
      separator: ;
      regex: (.+)
      target_label: pod
      replacement: $1
      action: replace
    - source_labels: [container_name]
      separator: ;
      regex: (.+)
      target_label: container
      replacement: $1
      action: replace
- job_name: kube-state-metrics
  kubernetes_sd_configs:
    # endpoints role: the __meta_kubernetes_service_label_* labels used in the
    # keep rule below exist only for endpoints/service targets, not for node
    - role: endpoints
      api_server: https://10.223.225.61:6443
      bearer_token_file: /dcos/prometheus/token.k8s
      tls_config:
        insecure_skip_verify: true
  bearer_token_file: /dcos/prometheus/token.k8s
  relabel_configs:
    - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
      regex: kube-state-metrics
      replacement: $1
      action: keep
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      target_label: k8s_namespace
    - source_labels: [__meta_kubernetes_service_name]
      action: replace
      target_label: k8s_sname
###--------------------------------------| k8s
```

- Getting the token

```bash
kubectl get sa prometheus -n kube-system -o yaml
kubectl describe secret <prometheus-token-xxx | secret name> -n kube-system
# For example (write it to the path configured as bearer_token_file)
kubectl describe secret prometheus-token-ghtr9 -n kube-system | grep token: | awk '{print $2}' > /opt/prometheus/token.k8s
```
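`grep token:` matches any line that contains that text. Matching the first field exactly is a little stricter. Below is a sketch of the same extraction as a filter. The function name is hypothetical. On Kubernetes 1.24 and later, token Secrets are no longer created automatically for service accounts. There, `kubectl create token prometheus -n kube-system` issues a short-lived token instead.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl describe secret` output on stdin and print the token.
# Only the line whose first field is exactly "token:" is used.
extract_sa_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

Usage: `kubectl describe secret prometheus-token-ghtr9 -n kube-system | extract_sa_token > /dcos/prometheus/token.k8s`.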

1. In-cluster Prometheus monitoring configuration for the Appeals (诉求) project

# 7. Time Synchronization

- ntpd_time.yml

```yaml
#- hosts: nginx
- hosts: all
  remote_user: root
  tasks:
    - name: 'Install the ntp service'
      shell: yum -y install ntp
    - name: 'Edit the configuration file'
      shell: |
        #cp /etc/ntp.conf /etc/ntp.conf-bak
        # Add a line after the "administrative" line
        #sed -i '/administrative/a\restrict 127.0.0.1 nomodify notrap nopeer noquery' /etc/ntp.conf
        sed -i '/administrative/a\restrict {{ ansible_ssh_host }} nomodify notrap nopeer noquery' /etc/ntp.conf
        echo "server 10.224.38.13" >> /etc/ntp.conf
    - name: 'Enable NTP synchronization'
      shell: timedatectl set-ntp yes
    - name: 'Restart the ntp service'
      shell: |
        systemctl restart ntpd
        systemctl enable ntpd
```
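The playbook's `sed ... a\` and `echo >>` add another copy of each line every time it runs. Below is a sketch that makes the same two edits idempotent by checking for the line first. The function name is hypothetical. Within Ansible, the `lineinfile` module with `insertafter` provides the same behavior natively.

```bash
#!/usr/bin/env bash
# Sketch: apply the playbook's two ntp.conf edits only if they are missing.
# Arguments: ntp.conf path, this host's IP, NTP server address.
ntp_conf_add() {
  local conf="$1" host_ip="$2" server="$3"
  local restrict="restrict ${host_ip} nomodify notrap nopeer noquery"
  # Same GNU sed "a\text" form as the playbook: append after the
  # line containing "administrative"
  if ! grep -qxF "$restrict" "$conf"; then
    sed -i "/administrative/a\\${restrict}" "$conf"
  fi
  if ! grep -qxF "server ${server}" "$conf"; then
    printf 'server %s\n' "$server" >> "$conf"
  fi
}
```

In the playbook's shell task, for example: `ntp_conf_add /etc/ntp.conf {{ ansible_ssh_host }} 10.224.38.13`.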

Time synchronization configuration for an intranet environment

```bash
# After changing the configuration above, also set up a scheduled task
cat > /etc/ntp.conf <<EOF
driftfile /var/lib/ntp/drift
restrict default nomodify notrap nopeer noquery
restrict 127.0.0.1
restrict ::1
server 0.centos.pool.ntp.org iburst
server 1.centos.pool.ntp.org iburst
server 2.centos.pool.ntp.org iburst
server 3.centos.pool.ntp.org iburst
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
disable monitor
server 10.18.104.165
fudge 10.18.104.165 stratum 0
EOF

# Add this host's own IP
export HOST_IPADDR=$(ip addr show ens160 | grep "inet " | cut -d '/' -f 1 | awk '{print $2}')
sed -i "/default/a\restrict ${HOST_IPADDR} nomodify notrap nopeer noquery" /etc/ntp.conf
```

Scheduled task (crontab entry): synchronize the time with 10.18.104.165 every 5 minutes.

```plain
*/5 * * * * /usr/sbin/ntpdate -u 10.18.104.165
```

# 8. Log Auditing

Log forwarding has to be configured over the syslog protocol with rsyslog. Kylin systems all use rsyslog.

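1) Find the rsyslog.conf file

```bash
#find / -name rsyslog.conf
```

Note: rsyslog.conf is usually located under /etc.

2) Edit /etc/rsyslog.conf and append this line at the end:

```
*.* @10.223.225.118
```

Note: the separator between `*.*` and `@` is traditionally a <tab>, so don't leave it out. The old sysklogd required a tab, while rsyslog also accepts spaces. `*.*` means all logs, and a single `@` forwards over UDP (`@@` would use TCP).

Finally, press ESC and type :wq to save.

3) Restart the rsyslog service

```bash
# systemctl restart rsyslog.service
```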

- playbook

```yaml
- hosts: all
  remote_user: root
  tasks:
    - name: 'Edit the configuration file'
      shell: |
        echo "*.* @10.223.225.118" >> /etc/rsyslog.conf
    - name: 'Restart the syslog service'
      shell: |
        systemctl restart rsyslog.service
```
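The `echo >>` task appends the rule again on every run. Each extra copy is a separate forwarding action, so messages would likely be sent more than once. Below is a sketch that adds the rule only when it is missing and reports whether anything changed. The function name is hypothetical, and the defaults mirror the playbook. Ansible's `lineinfile` module is the native alternative.

```bash
#!/usr/bin/env bash
# Sketch: append the rsyslog forwarding rule only if it is not present yet.
# Prints "changed" when the line was appended and "ok" when it already existed.
add_forward_rule() {
  local conf="${1:-/etc/rsyslog.conf}" target="${2:-10.223.225.118}"
  local rule="*.* @${target}"   # single @ = UDP; @@ would forward over TCP
  if grep -qxF "$rule" "$conf"; then
    echo ok
  else
    printf '%s\n' "$rule" >> "$conf"
    echo changed
  fi
}
```

In a playbook, the printed word can drive `changed_when: result.stdout == "changed"` on a registered shell task, so rsyslog is restarted only when the file actually changed.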

# 9. Installing OpenJDK and Mounting the Disk

install-jdk.yml:

```yaml
- hosts: all
  remote_user: root
  tasks:
    - name: 'Install the JDK'
      shell: yum -y install java-1.8.0-openjdk.aarch64
    - name: 'Create the /dcos directory'
      file:
        path: /dcos
        state: directory
    #- name: 'Mount the disk'
    #  shell: |
    #    mkfs.ext4 /dev/vdb
    #    #mkdir /dcos
    #    mount /dev/vdb /dcos
    #    echo "/dev/vdb /dcos ext4 defaults 0 0" >> /etc/fstab
```

```bash
# Mount everything listed in /etc/fstab, then check the mounts
ansible all -m shell -a 'mount -a'
# Create the directory
ansible all -m shell -a 'mkdir /dcos'
```

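# 10. Monitoring Dashboards for the Appeals (诉求) Project

- Includes Linux host and MySQL monitoring
- Includes Kubernetes monitoring (the default Prometheus configuration deployed inside the k8s cluster)

Dashboards

- k8s
  - 1-k8s-for-prometheus-dashboard-20211010_rev7.json
- linux
- mysql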