1. 导论

在前面的学习中,我们探讨了一系列简单而实用的回归和分类模型,同时也探讨了如何使用集成学习家族中的Bagging思想去优化最终的模型。Bagging思想的实质是:通过Bootstrap 的方式对全样本数据集进行抽样得到抽样子集,对不同的子集使用同一种基本模型进行拟合,然后投票得出最终的预测。我们也从前面的探讨知道:Bagging主要通过降低方差的方式减少预测误差。那么,本章介绍的Boosting是与Bagging截然不同的思想,Boosting方法是使用同一组数据集进行反复学习,得到一系列简单模型,然后组合这些模型构成一个预测性能十分强大的机器学习模型。显然,Boosting思想提高最终的预测效果是通过不断减少偏差的形式,与Bagging有着本质的不同。在Boosting这一大类方法中,笔者主要介绍两类常用的Boosting方式:Adaptive Boosting 和 Gradient Boosting 以及它们的变体Xgboost、LightGBM以及Catboost。

boosting的思想是通过重新给样本赋权重的方法训练出新的学习器,新的学习器会更关注之前预测错误的样本,然后再根据各个学习器的性能,将它们的结果结合起来。所以boosting的关键是,对于每个基学习器训练样本的分布是不一样的。

—— 感谢@三个诸葛亮-侍伟 大佬的分享!

2. Boosting方法的基本思路

在正式介绍Boosting思想之前,我想先介绍两个例子:

第一个例子:不知道大家有没有做过错题本,我们将每次测验的错的题目记录在错题本上,不停的翻阅,直到我们完全掌握(也就是能够在考试中能够举一反三)。

第二个例子:对于一个复杂任务来说,将多个专家的判断进行适当的综合所作出的判断,要比其中任何一个专家单独判断要好。实际上这是一种“三个臭皮匠顶个诸葛亮的道理”。

这两个例子都说明Boosting的道理,也就是不断地重复学习达到最终的要求。

Boosting的提出与发展离不开Valiant和 Kearns的努力,历史上正是Valiant和 Kearns提出了“强可学习”和”弱可学习”的概念。那什么是”强可学习”和”弱可学习”呢?在概率近似正确(probably approximately correct, PAC)学习的框架下:

- 弱学习:识别错误率小于1/2(即准确率仅比随机猜测略高的学习算法)

- 强学习:识别准确率很高并能在多项式时间内完成的学习算法

非常有趣的是,在PAC 学习的框架下,强可学习和弱可学习是等价的,也就是说一个概念是强可学习的充分必要条件是这个概念是弱可学习的(弱可学习是强可学习的充要条件?)。这样一来,问题便是:在学习中,如果已经发现了弱可学习算法,能否将他提升至强可学习算法。因为,弱可学习算法比强可学习算法容易得多。提升方法就是从弱学习算法出发,反复学习,得到一系列弱分类器(又称为基本分类器),然后通过一定的形式去组合这些弱分类器构成一个强分类器。大多数的Boosting方法都是通过改变训练数据集的概率分布(训练数据不同样本的权值),针对不同概率分布的数据调用弱分类算法学习一系列的弱分类器。

对于Boosting方法来说,有两个问题需要给出答案:

第一个是每一轮学习应该如何改变数据的概率分布,第二个是如何将各个弱分类器组合成一个强分类器。

关于这两个问题,不同的Boosting算法会有不同的答案,我们接下来介绍一种最经典的Boosting算法——Adaboost,我们需要理解Adaboost是怎么处理这两个问题以及为什么这么处理的。

3. Adaboost算法

3.1 Adaboost的基本原理

对于Adaboost来说,解决上述的两个问题的方式是:

(1) 提高那些被前一轮分类器错误分类的样本的权重,而降低那些被正确分类的样本的权重。这样一来,那些在上一轮分类器中没有得到正确分类的样本,由于其权重的增大而在后一轮的训练中“备受关注”。

(2) 各个弱分类器的组合是通过采取加权多数表决的方式,具体来说,加大分类错误率低的弱分类器的权重,因为这些分类器能更好地完成分类任务,而减小分类错误率较大的弱分类器的权重,使其在表决中起较小的作用。

3.2 Adaboost算法详解

现在,我们来具体介绍Adaboost算法:(参考李航老师的《统计学习方法》)

假设给定一个二分类的训练数据集:,其中每个样本点由特征与类别组成。特征,类别,是特征空间,是类别集合,输出最终分类器

。Adaboost算法如下:

(1) 初始化训练数据的分布:%2C%20%5Cquad%20w%7B1%20i%7D%3D%5Cfrac%7B1%7D%7BN%7D%2C%20%5Cquad%20i%3D1%2C2%2C%20%5Ccdots%2C%20N%3C%2Ftitle%3E%0A%3Cdefs%20aria-hidden%3D%22true%22%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-44%22%20d%3D%22M287%20628Q287%20635%20230%20637Q207%20637%20200%20638T193%20647Q193%20655%20197%20667T204%20682Q206%20683%20403%20683Q570%20682%20590%20682T630%20676Q702%20659%20752%20597T803%20431Q803%20275%20696%20151T444%203L430%201L236%200H125H72Q48%200%2041%202T33%2011Q33%2013%2036%2025Q40%2041%2044%2043T67%2046Q94%2046%20127%2049Q141%2052%20146%2061Q149%2065%20218%20339T287%20628ZM703%20469Q703%20507%20692%20537T666%20584T629%20613T590%20629T555%20636Q553%20636%20541%20636T512%20636T479%20637H436Q392%20637%20386%20627Q384%20623%20313%20339T242%2052Q242%2048%20253%2048T330%2047Q335%2047%20349%2047T373%2046Q499%2046%20581%20128Q617%20164%20640%20212T683%20339T703%20469Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-31%22%20d%3D%22M213%20578L200%20573Q186%20568%20160%20563T102%20556H83V602H102Q149%20604%20189%20617T245%20641T273%20663Q275%20666%20285%20666Q294%20666%20302%20660V361L303%2061Q310%2054%20315%2052T339%2048T401%2046H427V0H416Q395%203%20257%203Q121%203%20100%200H88V46H114Q136%2046%20152%2046T177%2047T193%2050T201%2052T207%2057T213%2061V578Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3D%22%20d%3D%22M56%20347Q56%20360%2070%20367H707Q722%20359%20722%20347Q722%20336%20708%20328L390%20327H72Q56%20332%2056%20347ZM56%20153Q56%20168%2072%20173H708Q722%20163%20722%20153Q722%20140%20707%20133H70Q56%20140%2056%20153Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-28%22%20d%3D%22M94%20250Q94%20319%20104%20381T127%20488T164%20576T202%20643T244%20695T277%20729T302%20750H315H319Q333%20750%20333%20741Q333%20738%20316%20720T275%20667T226%20581T184%20443T167%20250T184%2058T225%20-81T274%20-167T316%20-220T333%20-241Q333%20-250%20318%20-250H315H302L274%20-226Q180%20-141%20137%20-14T94%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-77%22%20d%3D%22M580%20385Q580%20406%20599%20424T641%20443Q659%20443%20674%20425T690%20368Q690%20339%20671%20253Q656%20197%20644%20161T609%2080T554%2012T482%20-11Q438%20-11%20404%205T355%2048Q354%2047%20352%2044Q311%20-11%20252%20-11Q226%20-11%20202%20-5T155%2014T118%2053T104%20116Q104%20170%20138%20262T173%20379Q173%20380%20173%20381Q173%20390%20173%20393T169%20400T158%20404H154Q131%20404%20112%20385T82%20344T65%20302T57%20280Q55%20278%2041%20278H27Q21%20284%2021%20287Q21%20293%2029%20315T52%20366T96%20418T161%20441Q204%20441%20227%20416T250%20358Q250%20340%20217%20250T184%20111Q184%2065%20205%2046T258%2026Q301%2026%20334%2087L339%2096V119Q339%20122%20339%20128T340%20136T341%20143T342%20152T345%20165T348%20182T354%20206T362%20238T373%20281Q402%20395%20406%20404Q419%20431%20449%20431Q468%20431%20475%20421T483%20402Q483%20389%20454%20274T422%20142Q420%20131%20420%20107V100Q420%2085%20423%2071T442%2042T487%2026Q558%2026%20600%20148Q609%20171%20620%20213T632%20273Q632%20306%20619%20325T593%20357T580%20385Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2C%22%20d%3D%22M78%2035T78%2060T94%20103T137%20121Q165%20121%20187%2096T210%208Q210%20-27%20201%20-60T180%20-117T154%20-158T130%20-185T117%20-194Q113%20-194%20104%20-185T95%20-172Q95%20-168%20106%20-156T131%20-126T157%20-76T173%20-3V9L172%208Q170%207%20167%206T161%203T152%201T140%200Q113%200%2096%2017Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-22EF%22%20d%3D%22M78%20250Q78%20274%2095%20292T138%20310Q162%20310%20180%20294T199%20251Q199%20226%20182%20208T139%20190T96%20207T78%20250ZM525%20250Q525%20274%20542%20292T585%20310Q609%20310%20627%20294T646%20251Q646%20226%20629%20208T586%20190T543%20207T525%20250ZM972%20250Q972%20274%20989%20292T1032%20310Q1056%20310%201074%20294T1093%20251Q1093%20226%201076%20208T1033%20190T990%20207T972%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-69%22%20d%3D%22M184%20600Q184%20624%20203%20642T247%20661Q265%20661%20277%20649T290%20619Q290%20596%20270%20577T226%20557Q211%20557%20198%20567T184%20600ZM21%20287Q21%20295%2030%20318T54%20369T98%20420T158%20442Q197%20442%20223%20419T250%20357Q250%20340%20236%20301T196%20196T154%2083Q149%2061%20149%2051Q149%2026%20166%2026Q175%2026%20185%2029T208%2043T235%2078T260%20137Q263%20149%20265%20151T282%20153Q302%20153%20302%20143Q302%20135%20293%20112T268%2061T223%2011T161%20-11Q129%20-11%20102%2010T74%2074Q74%2091%2079%20106T122%20220Q160%20321%20166%20341T173%20380Q173%20404%20156%20404H154Q124%20404%2099%20371T61%20287Q60%20286%2059%20284T58%20281T56%20279T53%20278T49%20278T41%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-4E%22%20d%3D%22M234%20637Q231%20637%20226%20637Q201%20637%20196%20638T191%20649Q191%20676%20202%20682Q204%20683%20299%20683Q376%20683%20387%20683T401%20677Q612%20181%20616%20168L670%20381Q723%20592%20723%20606Q723%20633%20659%20637Q635%20637%20635%20648Q635%20650%20637%20660Q641%20676%20643%20679T653%20683Q656%20683%20684%20682T767%20680Q817%20680%20843%20681T873%20682Q888%20682%20888%20672Q888%20650%20880%20642Q878%20637%20858%20637Q787%20633%20769%20597L620%207Q618%200%20599%200Q585%200%20582%202Q579%205%20453%20305L326%20604L261%20344Q196%2088%20196%2079Q201%2046%20268%2046H278Q284%2041%20284%2038T282%2019Q278%206%20272%200H259Q228%202%20151%202Q123%202%20100%202T63%202T46%201Q31%201%2031%2010Q31%2014%2034%2026T39%2040Q41%2046%2062%2046Q130%2049%20150%2085Q154%2091%20221%20362L289%20634Q287%20635%20234%20637Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-29%22%20d%3D%22M60%20749L64%20750Q69%20750%2074%20750H86L114%20726Q208%20641%20251%20514T294%20250Q294%20182%20284%20119T261%2012T224%20-76T186%20-143T145%20-194T113%20-227T90%20-246Q87%20-249%2086%20-250H74Q66%20-250%2063%20-250T58%20-247T55%20-238Q56%20-237%2066%20-225Q221%20-64%20221%20250T66%20725Q56%20737%2055%20738Q55%20746%2060%20749Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-32%22%20d%3D%22M109%20429Q82%20429%2066%20447T50%20491Q50%20562%20103%20614T235%20666Q326%20666%20387%20610T449%20465Q449%20422%20429%20383T381%20315T301%20241Q265%20210%20201%20149L142%2093L218%2092Q375%2092%20385%2097Q392%2099%20409%20186V189H449V186Q448%20183%20436%2095T421%203V0H50V19V31Q50%2038%2056%2046T86%2081Q115%20113%20136%20137Q145%20147%20170%20174T204%20211T233%20244T261%20278T284%20308T305%20340T320%20369T333%20401T340%20431T343%20464Q343%20527%20309%20573T212%20619Q179%20619%20154%20602T119%20569T109%20550Q109%20549%20114%20549Q132%20549%20151%20535T170%20489Q170%20464%20154%20447T109%20429Z%22%3E%3C%2Fpath%3E%0A%3C%2Fdefs%3E%0A%3Cg%20stroke%3D%22currentColor%22%20fill%3D%22currentColor%22%20stroke-width%3D%220%22%20transform%3D%22matrix(1%200%200%20-1%200%200)%22%20aria-hidden%3D%22true%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-44%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%221171%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%221560%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(2616%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(389%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-77%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(716%2C-150)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%221913%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-22EF%22%20x%3D%222358%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%223698%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(4143%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-77%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(716%2C-150)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%225558%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-22EF%22%20x%3D%226003%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%227342%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(7787%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-77%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(716%2C-150)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-4E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%229586%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2212758%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(14203%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-77%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(716%2C-150)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2215896%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(16674%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(397%2C0)%22%3E%0A%3Crect%20stroke%3D%22none%22%20width%3D%221008%22%20height%3D%2260%22%20x%3D%220%22%20y%3D%22220%22%3E%3C%2Frect%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22254%22%20y%3D%22676%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-4E%22%20x%3D%2260%22%20y%3D%22-704%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2218201%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%2219646%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2220269%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%2221325%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2221826%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%20x%3D%2222271%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2222772%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-22EF%22%20x%3D%2223217%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2224556%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-4E%22%20x%3D%2225001%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=D%7B1%7D%3D%5Cleft%28w%7B11%7D%2C%20%5Ccdots%2C%20w%7B1%20i%7D%2C%20%5Ccdots%2C%20w%7B1%20N%7D%5Cright%29%2C%20%5Cquad%20w%7B1%20i%7D%3D%5Cfrac%7B1%7D%7BN%7D%2C%20%5Cquad%20i%3D1%2C2%2C%20%5Ccdots%2C%20N&id=jVWF5)

(2) 对于m=1,2,…,M

- 使用具有权值分布的训练数据集进行学习,得到基本分类器:

- 计算

在训练集上的分类误差率

- 计算的系数,这里的log是自然对数ln

- 更新训练数据集的权重分布

这里的是规范化因子,使得成为一个概率分布,

(3) 构建基本分类器的线性组合,得到最终的分类器

下面对Adaboost算法做如下说明:

对于步骤(1),假设训练数据的权值分布是均匀分布,是为了使得第一次没有先验信息的条件下每个样本在基本分类器的学习中作用一样。

对于步骤(2),每一次迭代产生的基本分类器在加权训练数据集上的分类错误率代表了在中分类错误的样本权重和,这点直接说明了权重分布与的分类错误率有直接关系。同时,在步骤(2)中,计算基本分类器的系数,,它表示了在最终分类器的重要性程度,的取值由基本分类器的分类错误率有直接关系,当时,,并且随着的减少而增大,因此分类错误率越小的基本分类器在最终分类器的作用越大!

最重要的,对于步骤(2)中的样本权重的更新:

因此,从上式可以看到:被基本分类器错误分类的样本的权重扩大,被正确分类的样本权重减少,二者相比相差倍。

对于步骤(3),线性组合实现了将M个基本分类器的加权表决,系数标志了基本分类器的重要性,值得注意的是:所有的之和不为1。的符号决定了样本x属于哪一类。

3.3 Adaboost算法手动计算实例

下面,我们使用一组简单的数据来手动计算Adaboost算法的过程:(例子来源:http://www.csie.edu.tw)

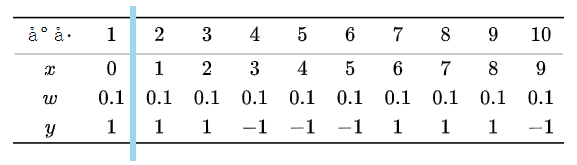

训练数据如下表,假设基本分类器的形式是一个分割或表示,阈值v由该基本分类器在训练数据集上分类错误率最低确定。

解:

初始化样本权值分布

我们首先认为的权重是一样的,即每一个数据同等重要。(权重是用来计算误差的)

%22%20aria-hidden%3D%22true%22%3E%0A%3Cg%20transform%3D%22translate(75%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(389%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(0%2C2137)%22%3E%0A%3Cg%20transform%3D%22translate(250%2C0)%22%3E%0A%3Ctext%20font-family%3D%22monospace%22%20stroke%3D%22none%22%20transform%3D%22scale(71.759)%20matrix(1%200%200%20-1%200%200)%22%3E%C3%A5%3C%2Ftext%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(859%2C0)%22%3E%0A%3Ctext%20font-family%3D%22monospace%22%20stroke%3D%22none%22%20transform%3D%22scale(71.759)%20matrix(1%200%200%20-1%200%200)%22%3E%C2%BA%3C%2Ftext%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(1469%2C0)%22%3E%0A%3Ctext%20font-family%3D%22monospace%22%20stroke%3D%22none%22%20transform%3D%22scale(71.759)%20matrix(1%200%200%20-1%200%200)%22%3E%C3%A5%3C%2Ftext%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-22C5%22%20x%3D%222079%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%221017%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-77%22%20x%3D%22945%22%20y%3D%22-893%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%221055%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(3997%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(6277%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(8556%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-33%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(10836%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-34%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-33%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-2293)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22778%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(13115%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-35%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-34%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-2293)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22778%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(15395%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-36%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-35%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-2293)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22778%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(17674%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-37%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-36%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(19954%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-38%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-37%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(22233%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-39%22%20x%3D%22389%22%20y%3D%222137%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-38%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22389%22%20y%3D%22-2293%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(24513%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(139%2C2137)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-39%22%20x%3D%22389%22%20y%3D%22507%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(0%2C-893)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-2293)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22778%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cline%20stroke-linecap%3D%22square%22%20stroke-width%3D%2220.9%22%20y2%3D%2210%22%20y1%3D%2210%22%20x1%3D%2210%22%20x2%3D%2226182%22%20transform%3D%22translate(0%2C1496)%22%3E%3C%2Fline%3E%0A%3C%2Fg%3E%0A%3Cline%20stroke-linecap%3D%22square%22%20stroke-width%3D%2275%22%20y2%3D%2237%22%20y1%3D%2237%22%20x1%3D%2237%22%20x2%3D%2226305%22%20transform%3D%22translate(0%2C3214)%22%3E%3C%2Fline%3E%0A%3Cline%20stroke-linecap%3D%22square%22%20stroke-width%3D%2275%22%20y2%3D%2237%22%20y1%3D%2237%22%20x1%3D%2237%22%20x2%3D%2226305%22%20transform%3D%22translate(0%2C-2790)%22%3E%3C%2Fline%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=%5Cbegin%7Barray%7D%7Bccccccccccc%7D%0A%5Chline%20%5Ctext%20%7B%20%C3%A5%C2%BA%C2%8F%C3%A5%C2%8F%C2%B7%20%7D%20%26%201%20%26%202%20%26%203%20%26%204%20%26%205%20%26%206%20%26%207%20%26%208%20%26%209%20%26%2010%20%5C%5C%0A%5Chline%20x%20%26%200%20%26%201%20%26%202%20%26%203%20%26%204%20%26%205%20%26%206%20%26%207%20%26%208%20%26%209%20%5C%5C%0Aw%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%26%200.1%20%5C%5C%0Ay%20%26%201%20%26%201%20%26%201%20%26%20-1%20%26%20-1%20%26%20-1%20%26%201%20%26%201%20%26%201%20%26%20-1%20%5C%5C%0A%5Chline%0A%5Cend%7Barray%7D%0A&id=TqwsX)

对m=1:

- 在权值分布的训练数据集上,遍历每个结点并计算分类误差率。

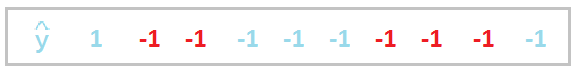

遍历到第一个划分节点,即阈值取v=(0+1)/2 = 0.5时:

分类器预测结果如下:

此时,样本分类错误,分类误差率为它们的权重之和,e=0.1+0.1+0.1+0.1+0.1=0.5。

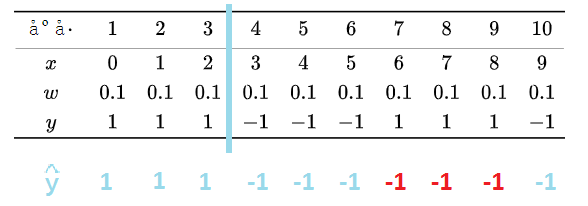

以此类推,遍历每个结点并计算分类误差率。当取v=2.5时(当v=8.5时误差率也一样)分类误差率最低(此时样本的数据被错分为反例,误差为它们的权重之和e=0.1+0.1+0.1=0.3,误差率小于0.5才有意义)

那么基本分类器为:%3D%5Cleft%5C%7B%5Cbegin%7Barray%7D%7Bll%7D%0A1%2C%20%26amp%3B%20x%26lt%3B2.5%20%5C%5C%0A-1%2C%20%26amp%3B%20x%26gt%3B2.5%0A%5Cend%7Barray%7D%5Cright.%3C%2Ftitle%3E%0A%3Cdefs%20aria-hidden%3D%22true%22%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-47%22%20d%3D%22M50%20252Q50%20367%20117%20473T286%20641T490%20704Q580%20704%20633%20653Q642%20643%20648%20636T656%20626L657%20623Q660%20623%20684%20649Q691%20655%20699%20663T715%20679T725%20690L740%20705H746Q760%20705%20760%20698Q760%20694%20728%20561Q692%20422%20692%20421Q690%20416%20687%20415T669%20413H653Q647%20419%20647%20422Q647%20423%20648%20429T650%20449T651%20481Q651%20552%20619%20605T510%20659Q492%20659%20471%20656T418%20643T357%20615T294%20567T236%20496T189%20394T158%20260Q156%20242%20156%20221Q156%20173%20170%20136T206%2079T256%2045T308%2028T353%2024Q407%2024%20452%2047T514%20106Q517%20114%20529%20161T541%20214Q541%20222%20528%20224T468%20227H431Q425%20233%20425%20235T427%20254Q431%20267%20437%20273H454Q494%20271%20594%20271Q634%20271%20659%20271T695%20272T707%20272Q721%20272%20721%20263Q721%20261%20719%20249Q714%20230%20709%20228Q706%20227%20694%20227Q674%20227%20653%20224Q646%20221%20643%20215T629%20164Q620%20131%20614%20108Q589%206%20586%203Q584%201%20581%201Q571%201%20553%2021T530%2052Q530%2053%20528%2052T522%2047Q448%20-22%20322%20-22Q201%20-22%20126%2055T50%20252Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-31%22%20d%3D%22M213%20578L200%20573Q186%20568%20160%20563T102%20556H83V602H102Q149%20604%20189%20617T245%20641T273%20663Q275%20666%20285%20666Q294%20666%20302%20660V361L303%2061Q310%2054%20315%2052T339%2048T401%2046H427V0H416Q395%203%20257%203Q121%203%20100%200H88V46H114Q136%2046%20152%2046T177%2047T193%2050T201%2052T207%2057T213%2061V578Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-28%22%20d%3D%22M94%20250Q94%20319%20104%20381T127%20488T164%20576T202%20643T244%20695T277%20729T302%20750H315H319Q333%20750%20333%20741Q333%20738%20316%20720T275%20667T226%20581T184%20443T167%20250T184%2058T225%20-81T274%20-167T316%20-220T333%20-241Q333%20-250%20318%20-250H315H302L274%20-226Q180%20-141%20137%20-14T94%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-78%22%20d%3D%22M52%20289Q59%20331%20106%20386T222%20442Q257%20442%20286%20424T329%20379Q371%20442%20430%20442Q467%20442%20494%20420T522%20361Q522%20332%20508%20314T481%20292T458%20288Q439%20288%20427%20299T415%20328Q415%20374%20465%20391Q454%20404%20425%20404Q412%20404%20406%20402Q368%20386%20350%20336Q290%20115%20290%2078Q290%2050%20306%2038T341%2026Q378%2026%20414%2059T463%20140Q466%20150%20469%20151T485%20153H489Q504%20153%20504%20145Q504%20144%20502%20134Q486%2077%20440%2033T333%20-11Q263%20-11%20227%2052Q186%20-10%20133%20-10H127Q78%20-10%2057%2016T35%2071Q35%20103%2054%20123T99%20143Q142%20143%20142%20101Q142%2081%20130%2066T107%2046T94%2041L91%2040Q91%2039%2097%2036T113%2029T132%2026Q168%2026%20194%2071Q203%2087%20217%20139T245%20247T261%20313Q266%20340%20266%20352Q266%20380%20251%20392T217%20404Q177%20404%20142%20372T93%20290Q91%20281%2088%20280T72%20278H58Q52%20284%2052%20289Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-29%22%20d%3D%22M60%20749L64%20750Q69%20750%2074%20750H86L114%20726Q208%20641%20251%20514T294%20250Q294%20182%20284%20119T261%2012T224%20-76T186%20-143T145%20-194T113%20-227T90%20-246Q87%20-249%2086%20-250H74Q66%20-250%2063%20-250T58%20-247T55%20-238Q56%20-237%2066%20-225Q221%20-64%20221%20250T66%20725Q56%20737%2055%20738Q55%20746%2060%20749Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3D%22%20d%3D%22M56%20347Q56%20360%2070%20367H707Q722%20359%20722%20347Q722%20336%20708%20328L390%20327H72Q56%20332%2056%20347ZM56%20153Q56%20168%2072%20173H708Q722%20163%20722%20153Q722%20140%20707%20133H70Q56%20140%2056%20153Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-7B%22%20d%3D%22M434%20-231Q434%20-244%20428%20-250H410Q281%20-250%20230%20-184Q225%20-177%20222%20-172T217%20-161T213%20-148T211%20-133T210%20-111T209%20-84T209%20-47T209%200Q209%2021%20209%2053Q208%20142%20204%20153Q203%20154%20203%20155Q189%20191%20153%20211T82%20231Q71%20231%2068%20234T65%20250T68%20266T82%20269Q116%20269%20152%20289T203%20345Q208%20356%20208%20377T209%20529V579Q209%20634%20215%20656T244%20698Q270%20724%20324%20740Q361%20748%20377%20749Q379%20749%20390%20749T408%20750H428Q434%20744%20434%20732Q434%20719%20431%20716Q429%20713%20415%20713Q362%20710%20332%20689T296%20647Q291%20634%20291%20499V417Q291%20370%20288%20353T271%20314Q240%20271%20184%20255L170%20250L184%20245Q202%20239%20220%20230T262%20196T290%20137Q291%20131%20291%201Q291%20-134%20296%20-147Q306%20-174%20339%20-192T415%20-213Q429%20-213%20431%20-216Q434%20-219%20434%20-231Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2C%22%20d%3D%22M78%2035T78%2060T94%20103T137%20121Q165%20121%20187%2096T210%208Q210%20-27%20201%20-60T180%20-117T154%20-158T130%20-185T117%20-194Q113%20-194%20104%20-185T95%20-172Q95%20-168%20106%20-156T131%20-126T157%20-76T173%20-3V9L172%208Q170%207%20167%206T161%203T152%201T140%200Q113%200%2096%2017Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3C%22%20d%3D%22M694%20-11T694%20-19T688%20-33T678%20-40Q671%20-40%20524%2029T234%20166L90%20235Q83%20240%2083%20250Q83%20261%2091%20266Q664%20540%20678%20540Q681%20540%20687%20534T694%20519T687%20505Q686%20504%20417%20376L151%20250L417%20124Q686%20-4%20687%20-5Q694%20-11%20694%20-19Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-32%22%20d%3D%22M109%20429Q82%20429%2066%20447T50%20491Q50%20562%20103%20614T235%20666Q326%20666%20387%20610T449%20465Q449%20422%20429%20383T381%20315T301%20241Q265%20210%20201%20149L142%2093L218%2092Q375%2092%20385%2097Q392%2099%20409%20186V189H449V186Q448%20183%20436%2095T421%203V0H50V19V31Q50%2038%2056%2046T86%2081Q115%20113%20136%20137Q145%20147%20170%20174T204%20211T233%20244T261%20278T284%20308T305%20340T320%20369T333%20401T340%20431T343%20464Q343%20527%20309%20573T212%20619Q179%20619%20154%20602T119%20569T109%20550Q109%20549%20114%20549Q132%20549%20151%20535T170%20489Q170%20464%20154%20447T109%20429Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2E%22%20d%3D%22M78%2060Q78%2084%2095%20102T138%20120Q162%20120%20180%20104T199%2061Q199%2036%20182%2018T139%200T96%2017T78%2060Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-35%22%20d%3D%22M164%20157Q164%20133%20148%20117T109%20101H102Q148%2022%20224%2022Q294%2022%20326%2082Q345%20115%20345%20210Q345%20313%20318%20349Q292%20382%20260%20382H254Q176%20382%20136%20314Q132%20307%20129%20306T114%20304Q97%20304%2095%20310Q93%20314%2093%20485V614Q93%20664%2098%20664Q100%20666%20102%20666Q103%20666%20123%20658T178%20642T253%20634Q324%20634%20389%20662Q397%20666%20402%20666Q410%20666%20410%20648V635Q328%20538%20205%20538Q174%20538%20149%20544L139%20546V374Q158%20388%20169%20396T205%20412T256%20420Q337%20420%20393%20355T449%20201Q449%20109%20385%2044T229%20-22Q148%20-22%2099%2032T50%20154Q50%20178%2061%20192T84%20210T107%20214Q132%20214%20148%20197T164%20157Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2212%22%20d%3D%22M84%20237T84%20250T98%20270H679Q694%20262%20694%20250T679%20230H98Q84%20237%2084%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3E%22%20d%3D%22M84%20520Q84%20528%2088%20533T96%20539L99%20540Q106%20540%20253%20471T544%20334L687%20265Q694%20260%20694%20250T687%20235Q685%20233%20395%2096L107%20-40H101Q83%20-38%2083%20-20Q83%20-19%2083%20-17Q82%20-10%2098%20-1Q117%209%20248%2071Q326%20108%20378%20132L626%20250L378%20368Q90%20504%2086%20509Q84%20513%2084%20520Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJSZ3-7B%22%20d%3D%22M618%20-943L612%20-949H582L568%20-943Q472%20-903%20411%20-841T332%20-703Q327%20-682%20327%20-653T325%20-350Q324%20-28%20323%20-18Q317%2024%20301%2061T264%20124T221%20171T179%20205T147%20225T132%20234Q130%20238%20130%20250Q130%20255%20130%20258T131%20264T132%20267T134%20269T139%20272T144%20275Q207%20308%20256%20367Q310%20436%20323%20519Q324%20529%20325%20851Q326%201124%20326%201154T332%201205Q369%201358%20566%201443L582%201450H612L618%201444V1429Q618%201413%20616%201411L608%201406Q599%201402%20585%201393T552%201372T515%201343T479%201305T449%201257T429%201200Q425%201180%20425%201152T423%20851Q422%20579%20422%20549T416%20498Q407%20459%20388%20424T346%20364T297%20318T250%20284T214%20264T197%20254L188%20251L205%20242Q290%20200%20345%20138T416%203Q421%20-18%20421%20-48T423%20-349Q423%20-397%20423%20-472Q424%20-677%20428%20-694Q429%20-697%20429%20-699Q434%20-722%20443%20-743T465%20-782T491%20-816T519%20-845T548%20-868T574%20-886T595%20-899T610%20-908L616%20-910Q618%20-912%20618%20-928V-943Z%22%3E%3C%2Fpath%3E%0A%3C%2Fdefs%3E%0A%3Cg%20stroke%3D%22currentColor%22%20fill%3D%22currentColor%22%20stroke-width%3D%220%22%20transform%3D%22matrix(1%200%200%20-1%200%200)%22%20aria-hidden%3D%22true%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-47%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%221112%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%221240%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%221629%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%222202%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%222869%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(3925%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJSZ3-7B%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(917%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(-11%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(0%2C650)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-750)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%22778%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%221279%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(2547%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(0%2C650)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3C%22%20x%3D%22850%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1906%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-35%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(0%2C-750)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3E%22%20x%3D%22850%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1906%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-35%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=G_%7B1%7D%28x%29%3D%5Cleft%5C%7B%5Cbegin%7Barray%7D%7Bll%7D%0A1%2C%20%26%20x%3C2.5%20%5C%5C%0A-1%2C%20%26%20x%3E2.5%0A%5Cend%7Barray%7D%5Cright.%0A&id=Dqfji)

- 在训练数据集上的误差率为。

- 计算的系数:

(由公式可知,只有时,

,这样个体学习器才是有意义的)

- 更新训练数据的权值分布:

(其中是为了保证每次权值总和为1。)

对于m=2:

在权值分布的训练数据集上,遍历每个结点并计算分类误差率,阈值取v=8.5时分类误差率最低,那么基本分类器为:

在训练数据集上的误差率为

- 计算的系数:

- 更新训练数据的权值分布:

对m=3:

在权值分布的训练数据集上,遍历每个结点并计算分类误差率,阈值取v=5.5时分类误差率最低,那么基本分类器为:

在训练数据集上的误差率为

- 计算的系数:

- 更新训练数据的权值分布:

于是得到:,分类器在训练数据集上的误分类点的个数为0。

于是得到最终分类器为:

3.4 sklearn中使用Adaboost算法进行建模:

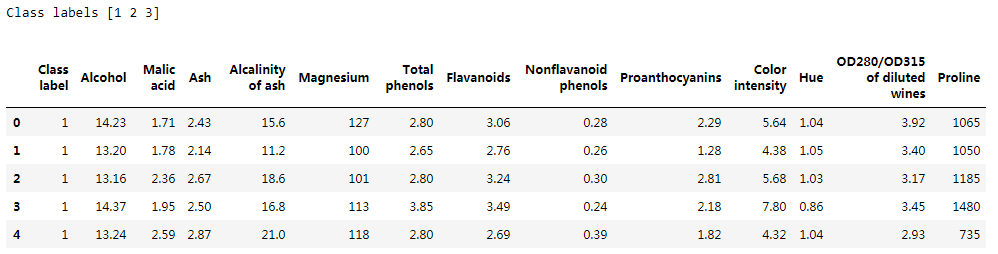

本次案例我们使用一份UCI的机器学习库里的开源数据集:葡萄酒数据集,该数据集可以在 ( https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data )上获得。该数据集包含了178个样本和13个特征,从不同的角度对不同的化学特性进行描述,我们的任务是根据这些数据预测红酒属于哪一个类别。(案例来源《python机器学习(第二版》)

# 引入数据科学相关工具包:import numpy as npimport pandas as pdimport matplotlib.pyplot as pltplt.style.use("ggplot")%matplotlib inlineimport seaborn as sns

加载训练数据:

wine = pd.read_csv("https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data",header=None)wine.columns = ['Class label', 'Alcohol', 'Malic acid', 'Ash', 'Alcalinity of ash','Magnesium', 'Total phenols','Flavanoids', 'Nonflavanoid phenols','Proanthocyanins','Color intensity', 'Hue','OD280/OD315 of diluted wines','Proline']

# 数据查看:print("Class labels",np.unique(wine["Class label"]))wine.head()

下面对数据做简单解读:

- Class label:分类标签

- Alcohol:酒精

- Malic acid:苹果酸

- Ash:灰

- Alcalinity of ash:灰的碱度

- Magnesium:镁

- Total phenols:总酚

- Flavanoids:黄酮类化合物

- Nonflavanoid phenols:非黄烷类酚类

- Proanthocyanins:原花青素

- Color intensity:色彩强度

- Hue:色调

- OD280/OD315 of diluted wines:稀释酒OD280 OD350

- Proline:脯氨酸

数据预处理

仅仅考虑2,3类葡萄酒,去除1类。

wine = wine[wine['Class label'] != 1]y = wine['Class label'].valuesX = wine[['Alcohol','OD280/OD315 of diluted wines']].values# 将分类标签变成二进制编码:from sklearn.preprocessing import LabelEncoderle = LabelEncoder()y = le.fit_transform(y)# 按8:2分割训练集和测试集from sklearn.model_selection import train_test_splitX_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.2,random_state=1,stratify=y) # stratify参数代表了按照y的类别等比例抽样

建模:

(1)使用单一决策树建模

from sklearn.tree import DecisionTreeClassifierfrom sklearn.metrics import accuracy_scoretree = DecisionTreeClassifier(criterion='entropy',random_state=1,max_depth=1)tree = tree.fit(X_train,y_train)y_train_pred = tree.predict(X_train)y_test_pred = tree.predict(X_test)tree_train = accuracy_score(y_train,y_train_pred)tree_test = accuracy_score(y_test,y_test_pred)print('Decision tree train/test accuracies %.3f/%.3f' % (tree_train,tree_test))

Decision tree train/test accuracies 0.916/0.875

(2)使用sklearn实现Adaboost(基分类器为决策树)

AdaBoostClassifier相关参数:

base_estimator:基本分类器,默认为DecisionTreeClassifier(max_depth=1)

n_estimators:终止迭代的次数

learning_rate:学习率

algorithm:训练的相关算法,{‘SAMME’,’SAMME.R’},默认=’SAMME.R’

random_state:随机种子

from sklearn.ensemble import AdaBoostClassifierada = AdaBoostClassifier(base_estimator=tree,n_estimators=500,learning_rate=0.1,random_state=1)ada = ada.fit(X_train,y_train)y_train_pred = ada.predict(X_train)y_test_pred = ada.predict(X_test)ada_train = accuracy_score(y_train,y_train_pred)ada_test = accuracy_score(y_test,y_test_pred)print('Adaboost train/test accuracies %.3f/%.3f' % (ada_train,ada_test))

Adaboost train/test accuracies 1.000/0.917

结果分析:

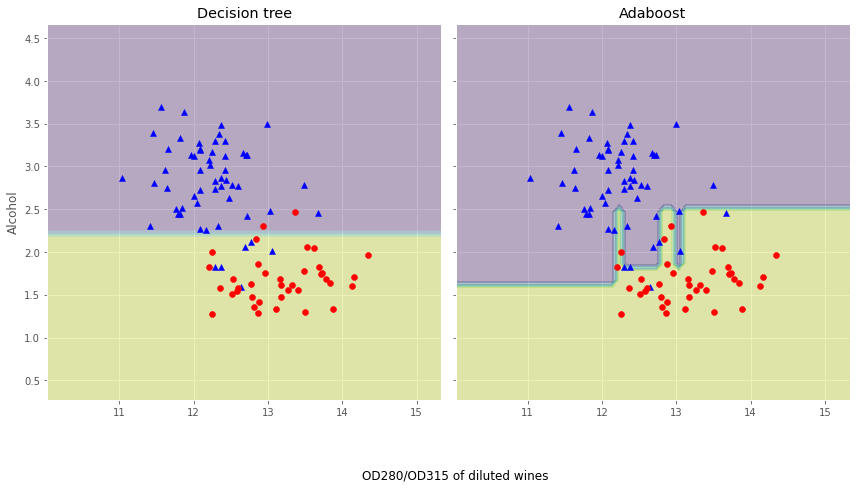

单层决策树似乎对训练数据欠拟合,而Adaboost模型正确地预测了训练数据的所有分类标签,而且与单层决策树相比,Adaboost的测试性能也略有提高。

然而,为什么模型在训练集和测试集的性能相差这么大呢?我们使用图像来简单说明下这个道理!

# 画出单层决策树与Adaboost的决策边界:

x_min = X_train[:, 0].min() - 1

x_max = X_train[:, 0].max() + 1

y_min = X_train[:, 1].min() - 1

y_max = X_train[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(nrows=1, ncols=2,sharex='col',sharey='row',figsize=(12, 6))

for idx, clf, tt in zip([0, 1],[tree, ada],['Decision tree', 'Adaboost']):

clf.fit(X_train, y_train)

Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

axarr[idx].contourf(xx, yy, Z, alpha=0.3)

axarr[idx].scatter(X_train[y_train==0, 0],X_train[y_train==0, 1],c='blue', marker='^')

axarr[idx].scatter(X_train[y_train==1, 0],X_train[y_train==1, 1],c='red', marker='o')

axarr[idx].set_title(tt)

axarr[0].set_ylabel('Alcohol', fontsize=12)

plt.tight_layout()

plt.text(0, -0.2,s='OD280/OD315 of diluted wines',ha='center',va='center',fontsize=12,transform=axarr[1].transAxes)

plt.show()

从上面的决策边界图可以看到:

Adaboost模型的决策边界比单层决策树的决策边界要复杂的多。也就是说,Adaboost试图用增加模型复杂度而降低偏差的方式去减少总误差,但是过程中引入了方差,可能出现过拟合,因此在训练集和测试集之间的性能存在较大的差距,这就简单地回答的刚刚问题。

值的注意的是:

与单个分类器相比,Adaboost等Boosting模型增加了计算的复杂度,在实践中需要仔细思考是否愿意为预测性能的相对改善而增加计算成本,而且Boosting方式无法做到现在流行的并行计算的方式进行训练,因为每一步迭代都要基于上一部的基本分类器。

refer:

- 本笔记整理自DataWhale开源内容:https://github.com/datawhalechina/team-learning-data-mining/tree/master/EnsembleLearning

- AdaBoost算法手动计算实例部分参考自:https://zhuanlan.zhihu.com/p/27126737