https://github.com/4Catalyzer/cyclegan/blob/master/losses.py

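```python
self.fake_B = self.netG_A(self.real_A)  # G_A: A -> B
self.rec_A = self.netG_B(self.fake_B)   # A -> B -> A, reconstruction of real_A
self.fake_A = self.netG_B(self.real_B)  # G_B: B -> A
self.rec_B = self.netG_A(self.fake_A)   # B -> A -> B, reconstruction of real_B
self.idt_A = self.netG_A(self.real_B)   # G_A fed a real B image (identity loss)
self.idt_B = self.netG_B(self.real_A)   # G_B fed a real A image (identity loss)
```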
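To make the data flow of these six calls concrete, here is a minimal plain-Python sketch. It assumes an "image" is just a flat list of floats and uses two hypothetical toy generators that happen to be exact inverses, so both reconstructions come back unchanged. `cycle_forward`, `toy_g_a`, and `toy_g_b` are illustrative names, not part of either linked repository.

```python
from typing import Callable, Dict, List

Image = List[float]                  # toy stand-in: a flat list of pixel values
Generator = Callable[[Image], Image]


def cycle_forward(g_a: Generator, g_b: Generator,
                  real_a: Image, real_b: Image) -> Dict[str, Image]:
    """Mirror the six generator calls (G_A: A -> B, G_B: B -> A)."""
    fake_b = g_a(real_a)   # translate A into B
    rec_a = g_b(fake_b)    # back again: A -> B -> A
    fake_a = g_b(real_b)   # translate B into A
    rec_b = g_a(fake_a)    # back again: B -> A -> B
    idt_a = g_a(real_b)    # G_A given an image already in its target domain
    idt_b = g_b(real_a)    # G_B given an image already in its target domain
    return {"fake_B": fake_b, "rec_A": rec_a, "fake_A": fake_a,
            "rec_B": rec_b, "idt_A": idt_a, "idt_B": idt_b}


def toy_g_a(img: Image) -> Image:
    # Hypothetical generator A -> B: brighten every pixel by 1
    return [p + 1.0 for p in img]


def toy_g_b(img: Image) -> Image:
    # Hypothetical generator B -> A: the exact inverse of toy_g_a
    return [p - 1.0 for p in img]


out = cycle_forward(toy_g_a, toy_g_b, real_a=[0.0, 0.5], real_b=[1.0, 2.0])
print(out["rec_A"], out["rec_B"])  # [0.0, 0.5] [1.0, 2.0]
```

Because the toy generators invert each other, the cycle reconstructions are perfect, while the identity outputs are not (toy_g_a still shifts a real B image), which is exactly what the identity loss would penalize.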

cycle_consistency_loss

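The cycle consistency loss is the L1 distance between the real images from each domain and their reconstructions after a full translation cycle (rec_A = G_B(G_A(real_A)) and rec_B = G_A(G_B(real_B))); the terms for the two domains are summed. For one domain, with generated_images being the reconstructed batch, it is the mean absolute difference:

```python
tf.reduce_mean(tf.abs(real_images - generated_images))
```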
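A framework-free sketch of the same computation, assuming flat lists of floats in place of tensors. `mean_abs_diff` mirrors the TensorFlow line for one domain, and `total_cycle_loss` sums both domains. The weight of 10 per domain is an assumption taken from the value used in the CycleGAN paper, not from losses.py.

```python
from typing import List


def mean_abs_diff(real: List[float], generated: List[float]) -> float:
    """Plain-Python analogue of tf.reduce_mean(tf.abs(real - generated))."""
    if len(real) != len(generated):
        raise ValueError("inputs must have the same length")
    return sum(abs(r - g) for r, g in zip(real, generated)) / len(real)


def total_cycle_loss(real_a: List[float], rec_a: List[float],
                     real_b: List[float], rec_b: List[float],
                     lambda_a: float = 10.0, lambda_b: float = 10.0) -> float:
    # Weighted sum of the per-domain L1 terms (weights of 10 are an assumption)
    return (lambda_a * mean_abs_diff(real_a, rec_a)
            + lambda_b * mean_abs_diff(real_b, rec_b))


loss = total_cycle_loss(real_a=[0.0, 1.0], rec_a=[0.5, 1.0],
                        real_b=[1.0, 2.0], rec_b=[1.0, 1.5])
print(loss)  # 5.0
```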

Identity loss

- This loss regularizes each generator to be close to an identity mapping when it is given real samples from its own target domain: idt_A = G_A(real_B) should match real_B, and idt_B = G_B(real_A) should match real_A. If an image already looks like it comes from the target domain, the generator should not turn it into a different image.

- As a result, the model is more conservative with content it does not know how to translate, tending to leave it unchanged.
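A minimal sketch of the identity term, again over flat lists of floats instead of tensors. It assumes the same L1 distance as the cycle loss. `identity_loss` and its `weight` parameter are hypothetical; in pytorch-CycleGAN-and-pix2pix this term is scaled relative to the reconstruction weight via `lambda_identity`.

```python
from typing import List


def mean_abs_diff(a: List[float], b: List[float]) -> float:
    """Mean absolute difference (L1), as in the cycle consistency loss."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def identity_loss(real_a: List[float], real_b: List[float],
                  idt_a: List[float], idt_b: List[float],
                  weight: float = 1.0) -> float:
    # idt_A = G_A(real_B) is compared with real_B,
    # idt_B = G_B(real_A) is compared with real_A.
    return weight * (mean_abs_diff(idt_a, real_b) + mean_abs_diff(idt_b, real_a))


real_a = [0.0, 1.0]
real_b = [1.0, 2.0]
# G_A left the B image untouched (ideal); G_B changed one pixel of the A image.
print(identity_loss(real_a, real_b, idt_a=[1.0, 2.0], idt_b=[0.0, 0.0]))  # 0.5
```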

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/322

LS-GAN loss

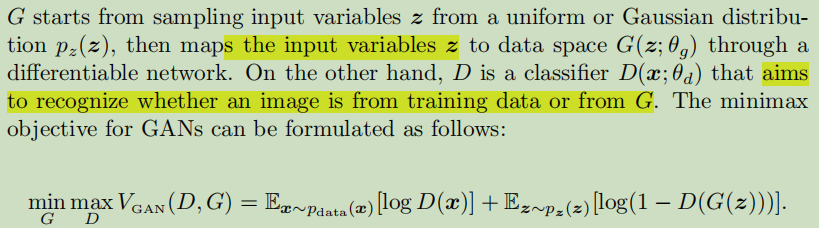

x: real data samples. The goal of the generator G is to learn a distribution p_g over the data x.
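For reference, a sketch of the general least-squares objectives from Mao et al. Here a and b are the targets for fake and real samples in the discriminator loss, and c is the value the generator wants the discriminator to output for fake samples. The losses below correspond to a = 0 and b = c = 1. In CycleGAN, G(z) is replaced by a translated image such as G_A(real_A).

$$
\min_D V_{\text{LSGAN}}(D) = \frac{1}{2}\,\mathbb{E}_{x \sim p_{\text{data}}(x)}\!\left[(D(x) - b)^2\right] + \frac{1}{2}\,\mathbb{E}_{z \sim p_z(z)}\!\left[(D(G(z)) - a)^2\right]
$$

$$
\min_G V_{\text{LSGAN}}(G) = \frac{1}{2}\,\mathbb{E}_{z \sim p_z(z)}\!\left[(D(G(z)) - c)^2\right]
$$

The generator code below omits the factor of 1/2, which only rescales the loss.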

lsgan_loss_generator

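Rather than computing the negative log-likelihood, the generator is optimized with a least-squares loss that pushes the discriminator's output on generated images toward 1 (the "real" label), as per Equation 2 in: Least Squares Generative Adversarial Networks, Xudong Mao, Qing Li, Haoran Xie, Raymond Y.K. Lau, Zhen Wang, and Stephen Paul Smolley.

```python
# TF1 API; in TF2 the equivalent op is tf.math.squared_difference
tf.reduce_mean(tf.squared_difference(prob_fake_is_real, 1))
```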
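A plain-Python sketch of the generator loss, assuming the discriminator outputs for a batch of generated images arrive as a flat list of floats. The function name matches the heading above, but this is an illustration, not the repository's code.

```python
from typing import List


def lsgan_loss_generator(prob_fake_is_real: List[float]) -> float:
    """Mean of (D(fake) - 1)^2, analogous to
    tf.reduce_mean(tf.squared_difference(prob_fake_is_real, 1))."""
    return sum((p - 1.0) ** 2 for p in prob_fake_is_real) / len(prob_fake_is_real)


# One fake scored as clearly fake (0.0), one scored as real (1.0)
print(lsgan_loss_generator([0.0, 1.0]))  # 0.5
```

The loss reaches zero only when the discriminator scores every generated image as real.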

lsgan_loss_discriminator

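Rather than computing the negative log-likelihood, the discriminators are optimized with a least-squares loss as per Equation 2 of the same paper: outputs on real images are pushed toward 1, outputs on generated images toward 0, and the two terms are averaged.

```python
# TF1 API; in TF2 the equivalent op is tf.math.squared_difference
(tf.reduce_mean(tf.squared_difference(prob_real_is_real, 1))
 + tf.reduce_mean(tf.squared_difference(prob_fake_is_real, 0))) * 0.5
```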
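A matching plain-Python sketch of the discriminator loss, assuming flat lists of discriminator outputs for a real batch and a generated batch. As with the generator sketch, this illustrates the formula rather than reproducing the repository's code.

```python
from typing import List


def _mean(values: List[float]) -> float:
    return sum(values) / len(values)


def lsgan_loss_discriminator(prob_real_is_real: List[float],
                             prob_fake_is_real: List[float]) -> float:
    # Real outputs are pulled toward 1, fake outputs toward 0
    real_term = _mean([(p - 1.0) ** 2 for p in prob_real_is_real])
    fake_term = _mean([(p - 0.0) ** 2 for p in prob_fake_is_real])
    return 0.5 * (real_term + fake_term)


# A perfect discriminator scores 0; a half-wrong one does not
print(lsgan_loss_discriminator([1.0, 1.0], [0.0, 0.0]))  # 0.0
print(lsgan_loss_discriminator([1.0, 0.0], [0.0, 1.0]))  # 0.5
```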