https://www.tensorflow.org/tutorials/generative/cyclegan#loss_functions

The official TensorFlow documentation explains CycleGAN clearly.

    import tensorflow as tf
    from tensorflow_examples.models.pix2pix import pix2pix

    OUTPUT_CHANNELS = 3

    # Two generators (G: X -> Y, F: Y -> X) and one discriminator per domain.
    generator_g = pix2pix.unet_generator(OUTPUT_CHANNELS, norm_type='instancenorm')
    generator_f = pix2pix.unet_generator(OUTPUT_CHANNELS, norm_type='instancenorm')

    discriminator_x = pix2pix.discriminator(norm_type='instancenorm', target=False)
    discriminator_y = pix2pix.discriminator(norm_type='instancenorm', target=False)

Loss

adversarial loss

    LAMBDA = 10

    loss_obj = tf.keras.losses.BinaryCrossentropy(from_logits=True)

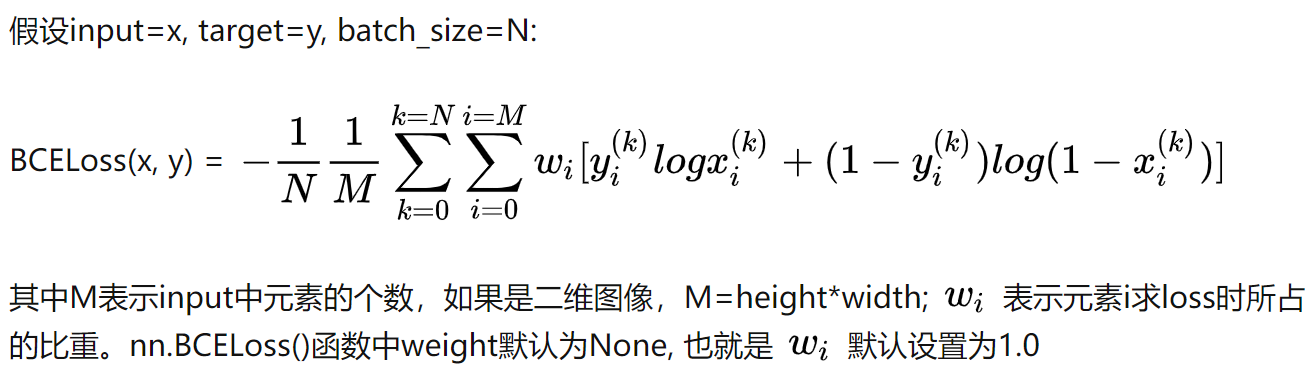

BinaryCrossentropy() computes the cross-entropy loss between true labels and predicted labels.

    bce = tf.keras.losses.BinaryCrossentropy()
    loss = bce([0., 0., 1., 1.], [1., 1., 1., 0.])
    print('Loss: ', loss.numpy())  # Loss: 11.522857

Call signature:

    __call__(y_true, y_pred, sample_weight=None)
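To make the arithmetic behind binary cross-entropy concrete, here is a minimal pure-Python sketch. It is not the Keras implementation; the helper name `binary_crossentropy` and the clipping constant `eps=1e-7` are assumptions made for illustration.

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over paired labels and probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip the probability so log() never receives 0 (eps is an assumed value).
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(y_true)

# Predictions of 0.5 carry no information: the loss is log(2) per element.
uncertain = binary_crossentropy([0.0, 1.0], [0.5, 0.5])

# Confident and correct predictions give a much smaller loss: -log(0.9).
confident = binary_crossentropy([0.0, 1.0], [0.1, 0.9])
```

With `from_logits=True`, as in `loss_obj`, the predictions are raw scores and a sigmoid is applied inside the loss before this formula.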

    def discriminator_loss(real, generated):
        real_loss = loss_obj(tf.ones_like(real), real)
        generated_loss = loss_obj(tf.zeros_like(generated), generated)
        total_disc_loss = real_loss + generated_loss
        return total_disc_loss * 0.5

    def generator_loss(generated):
        return loss_obj(tf.ones_like(generated), generated)
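The two adversarial losses pull in opposite directions, which is easier to see with numbers. The sketch below re-implements them in plain Python on a handful of made-up logits. The helpers `bce_from_logits` and `mean_bce` and the sample values are hypothetical stand-ins, not the TensorFlow code.

```python
import math

def bce_from_logits(label, logit):
    """Numerically stable sigmoid cross-entropy for a single logit."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def mean_bce(label, logits):
    """Average the per-logit loss against one constant label."""
    return sum(bce_from_logits(label, z) for z in logits) / len(logits)

def discriminator_loss(real_logits, generated_logits):
    real_loss = mean_bce(1.0, real_logits)            # real inputs should score "real"
    generated_loss = mean_bce(0.0, generated_logits)  # generated inputs should score "fake"
    return 0.5 * (real_loss + generated_loss)

def generator_loss(generated_logits):
    return mean_bce(1.0, generated_logits)            # generator wants "real" verdicts

# Hypothetical discriminator outputs: confident and correct on both kinds of input.
real_logits = [3.0, 2.5, 4.0]
fake_logits = [-3.0, -2.0, -4.0]

d_loss = discriminator_loss(real_logits, fake_logits)  # small: discriminator is winning
g_loss = generator_loss(fake_logits)                   # large: generator is being caught
```

When the discriminator is confidently right, its own loss is close to zero while the generator's loss is large, and the reverse holds when the generator fools it.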

cycle-consistency loss

    def calc_cycle_loss(real_image, cycled_image):
        loss1 = tf.reduce_mean(tf.abs(real_image - cycled_image))
        return LAMBDA * loss1

Identity loss

    def identity_loss(real_image, same_image):
        loss = tf.reduce_mean(tf.abs(real_image - same_image))
        return LAMBDA * 0.5 * loss
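Both the cycle-consistency loss and the identity loss are a scaled mean absolute error. They differ only in which image is compared and in the weight. A small pure-Python sketch on made-up four-pixel "images" shows the arithmetic; the helper `mean_abs_diff` and the pixel values are assumptions for illustration.

```python
LAMBDA = 10

def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized flat lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def calc_cycle_loss(real_image, cycled_image):
    return LAMBDA * mean_abs_diff(real_image, cycled_image)

def identity_loss(real_image, same_image):
    return LAMBDA * 0.5 * mean_abs_diff(real_image, same_image)

# Hypothetical flattened images with pixel values in [-1, 1].
real = [0.0, 0.5, -0.5, 1.0]
reconstructed = [0.1, 0.4, -0.5, 0.8]

cycle = calc_cycle_loss(real, reconstructed)   # LAMBDA * 0.1
identity = identity_loss(real, reconstructed)  # half the cycle weight
perfect = calc_cycle_loss(real, real)          # exact reconstruction costs nothing
```

For the same pixel error, the identity term contributes half as much as the cycle term because of the extra 0.5 factor.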

Optimizers

    generator_g_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)
    generator_f_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)

    discriminator_x_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)
    discriminator_y_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)

Training

Generator G translates X -> Y, and generator F translates Y -> X.

Horse and zebra images: real_x, real_y

Feeding the data into the generators

fake_y = generator_g(real_x)

cycle_x = generator_f(fake_y)

fake_x = generator_f(real_y)

cycle_y = generator_g(fake_x)

same_y = generator_g(real_y)

same_x = generator_f(real_x)
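The round trip is easier to follow with toy stand-ins for the two generators. In the sketch below, G and F are hypothetical exact inverses (shift up by 1, shift down by 1) rather than trained networks, so the cycled samples recover the originals perfectly. A trained CycleGAN only approximates this, and the cycle-consistency loss measures the gap.

```python
def generator_g(x):
    """Stand-in for G: X -> Y (here: shift every value up by 1)."""
    return [v + 1.0 for v in x]

def generator_f(y):
    """Stand-in for F: Y -> X (here: shift every value down by 1)."""
    return [v - 1.0 for v in y]

real_x = [0.0, 2.0, 4.0]  # a made-up sample from domain X
real_y = [1.0, 3.0, 5.0]  # a made-up sample from domain Y

fake_y = generator_g(real_x)   # X -> Y
cycle_x = generator_f(fake_y)  # X -> Y -> X, should recover real_x

fake_x = generator_f(real_y)   # Y -> X
cycle_y = generator_g(fake_x)  # Y -> X -> Y, should recover real_y
```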

Feeding the data into the discriminators

disc_real_x = discriminator_x(real_x)

disc_real_y = discriminator_y(real_y)

disc_fake_x = discriminator_x(fake_x)

disc_fake_y = discriminator_y(fake_y)

adversarial loss

Generator

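The generator wants to fool the discriminator: the higher the probability that the discriminator judges a generated image to be real, the better.

That is, the generator wishes to maximize log(D(G(z))).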

gen_g_loss = generator_loss( disc_fake_y )

gen_f_loss = generator_loss( disc_fake_x )

Discriminator

The discriminator wants to catch every generator output as fake while judging every real input to be real.

That is, we want to maximize log(D(x)) + log(1 - D(G(z))).

disc_x_loss = discriminator_loss(disc_real_x, disc_fake_x)

disc_y_loss = discriminator_loss(disc_real_y, disc_fake_y)
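The link between the discriminator's maximization objective and `discriminator_loss` can be written out as a short derivation. This is a sketch under stated assumptions: $D(\cdot)$ denotes the discriminator's raw logit output, $\sigma$ is the sigmoid applied inside the loss because of `from_logits=True`, $x$ is a real image, $G(z)$ is a generated one (in CycleGAN the generator's input is an image from the other domain), and the average over the batch is omitted.

```latex
\begin{aligned}
\mathcal{L}_D
  &= \tfrac{1}{2}\Big[\mathrm{BCE}\big(1,\ \sigma(D(x))\big)
     + \mathrm{BCE}\big(0,\ \sigma(D(G(z)))\big)\Big] \\
  &= -\tfrac{1}{2}\Big[\log \sigma(D(x))
     + \log\big(1 - \sigma(D(G(z)))\big)\Big]
\end{aligned}
```

Minimizing $\mathcal{L}_D$ is therefore the same as maximizing $\log \sigma(D(x)) + \log\big(1 - \sigma(D(G(z)))\big)$. The factor $\tfrac{1}{2}$ only rescales the gradient.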

CycleLoss

total_cycle_loss = calc_cycle_loss(real_x, cycle_x) + calc_cycle_loss(real_y, cycle_y)

Total generator loss

total_gen_g_loss = gen_g_loss + total_cycle_loss + identity_loss(real_y, same_y)

total_gen_f_loss = gen_f_loss + total_cycle_loss + identity_loss(real_x, same_x)

    EPOCHS = 40

    @tf.function
    def train_step(real_x, real_y):
        # persistent is set to True because the tape is used more than
        # once to calculate the gradients.
        with tf.GradientTape(persistent=True) as tape:
            # Generator G translates X -> Y
            # Generator F translates Y -> X.
            fake_y = generator_g(real_x, training=True)
            cycled_x = generator_f(fake_y, training=True)

            fake_x = generator_f(real_y, training=True)
            cycled_y = generator_g(fake_x, training=True)

            # same_x and same_y are used for identity loss.
            same_x = generator_f(real_x, training=True)
            same_y = generator_g(real_y, training=True)

            disc_real_x = discriminator_x(real_x, training=True)
            disc_real_y = discriminator_y(real_y, training=True)

            disc_fake_x = discriminator_x(fake_x, training=True)
            disc_fake_y = discriminator_y(fake_y, training=True)

            # calculate the loss
            gen_g_loss = generator_loss(disc_fake_y)
            gen_f_loss = generator_loss(disc_fake_x)

            total_cycle_loss = calc_cycle_loss(real_x, cycled_x) + calc_cycle_loss(real_y, cycled_y)

            # Total generator loss = adversarial loss + cycle loss + identity loss
            total_gen_g_loss = gen_g_loss + total_cycle_loss + identity_loss(real_y, same_y)
            total_gen_f_loss = gen_f_loss + total_cycle_loss + identity_loss(real_x, same_x)

            disc_x_loss = discriminator_loss(disc_real_x, disc_fake_x)
            disc_y_loss = discriminator_loss(disc_real_y, disc_fake_y)

        # Calculate the gradients for generator and discriminator
        generator_g_gradients = tape.gradient(total_gen_g_loss,
                                              generator_g.trainable_variables)
        generator_f_gradients = tape.gradient(total_gen_f_loss,
                                              generator_f.trainable_variables)

        discriminator_x_gradients = tape.gradient(disc_x_loss,
                                                  discriminator_x.trainable_variables)
        discriminator_y_gradients = tape.gradient(disc_y_loss,
                                                  discriminator_y.trainable_variables)

        # Apply the gradients to the optimizer
        generator_g_optimizer.apply_gradients(zip(generator_g_gradients,
                                                  generator_g.trainable_variables))
        generator_f_optimizer.apply_gradients(zip(generator_f_gradients,
                                                  generator_f.trainable_variables))

        discriminator_x_optimizer.apply_gradients(zip(discriminator_x_gradients,
                                                      discriminator_x.trainable_variables))
        discriminator_y_optimizer.apply_gradients(zip(discriminator_y_gradients,
                                                      discriminator_y.trainable_variables))