Network architecture

Generator

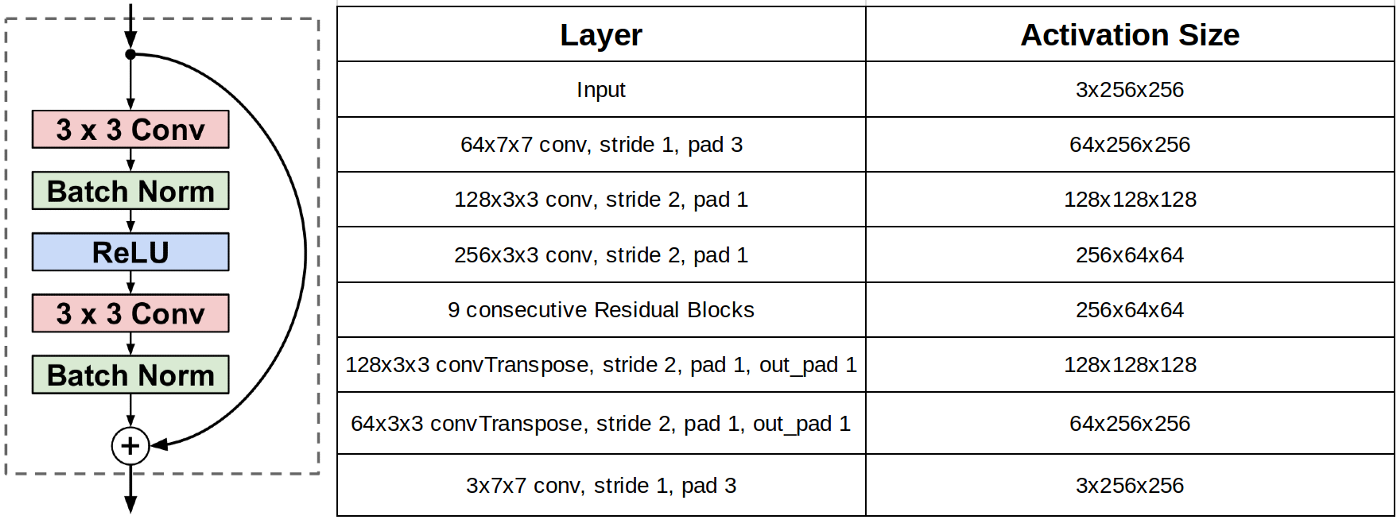

The architecture of the generator is:

c7s1-64, d128, d256, R256, R256, R256, R256, R256, R256, u128, u64, c7s1-3

c7s1-k denotes a 7×7 Convolution-InstanceNorm-ReLU layer with k filters and stride 1.

dk denotes a 3×3 Convolution-InstanceNorm-ReLU layer with k filters and stride 2.

Rk denotes a residual block that contains two 3×3 convolution layers, each with k filters.

uk denotes a 3×3 fractional-strided-Convolution-InstanceNorm-ReLU layer with k filters and stride 1/2 (i.e., a transposed convolution, sometimes called deconvolution).
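To see how the spec above fits together, the sketch below traces the feature-map shape (layer, channels, height, width) through each layer for a 256×256 RGB input. The padding values (3 for the 7×7 convolutions, 1 for the 3×3 convolutions, output padding 1 for the transposed convolutions) are assumptions not stated in the text; they are the choices that keep the output the same size as the input. `trace_generator` is a hypothetical helper, not part of any library.

```python
# Sketch: trace shapes through the generator spec.
# ASSUMED padding (not in the text): 3 for 7x7 convs, 1 for 3x3 convs,
# output_padding 1 for the stride-1/2 (transposed) convs.


def conv_out(size: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial size after a standard convolution."""
    return (size + 2 * pad - kernel) // stride + 1


def tconv_out(size: int, kernel: int, stride: int, pad: int, out_pad: int) -> int:
    """Spatial size after a transposed (fractionally-strided) convolution."""
    return (size - 1) * stride - 2 * pad + kernel + out_pad


def trace_generator(spec: str, channels: int = 3, size: int = 256):
    """Return a list of (layer, channels, height, width) after each layer."""
    shapes = []
    for layer in (name.strip() for name in spec.split(",")):
        if layer.startswith("c7s1-"):      # 7x7 conv, stride 1
            channels = int(layer.split("-")[1])
            size = conv_out(size, 7, 1, 3)
        elif layer.startswith("d"):        # 3x3 conv, stride 2 (downsample)
            channels = int(layer[1:])
            size = conv_out(size, 3, 2, 1)
        elif layer.startswith("R"):        # two 3x3 stride-1 convs plus skip add
            if int(layer[1:]) != channels:
                raise ValueError(f"{layer}: skip add needs {channels} channels")
            size = conv_out(conv_out(size, 3, 1, 1), 3, 1, 1)
        elif layer.startswith("u"):        # 3x3 transposed conv, stride 1/2 (upsample)
            channels = int(layer[1:])
            size = tconv_out(size, 3, 2, 1, 1)
        else:
            raise ValueError(f"unknown layer spec: {layer}")
        shapes.append((layer, channels, size, size))
    return shapes


SPEC = ("c7s1-64, d128, d256, R256, R256, R256, "
        "R256, R256, R256, u128, u64, c7s1-3")

for row in trace_generator(SPEC):
    print(row)
```

Under these assumptions the encoder halves the resolution twice (256 → 128 → 64) while raising the channel count to 256, the residual blocks keep that shape (which the skip addition requires), and the two upsampling layers restore a 3×256×256 output.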

Encoding:

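The encoding phase extracts features from the image and compresses them into a latent representation through the convolution layers c7s1-64, d128 and d256, which reduce the spatial resolution while increasing the number of channels.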

Transformation:

The transformation phase consists of six or nine ResNet blocks (six for 128×128 training images, nine for 256×256 and higher) and transforms the latent features captured in the encoding phase. The skip connections in the residual blocks also help avoid vanishing gradients in deep networks.
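Why the skip connection helps can be seen from one application of the chain rule. Write a residual block as y = x + H(x), where H (notation introduced here, to avoid clashing with the generator F) stands for the block's two 3×3 convolutions and L is any loss computed downstream:

```latex
y = x + \mathcal{H}(x)
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}
    \left( I + \frac{\partial \mathcal{H}}{\partial x} \right)
```

The identity term I gives the upstream gradient a direct path through every block, so the gradient reaching the encoder does not depend only on the product of the blocks' Jacobians, even when ∂H/∂x is small.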

Decoding:

The decoding phase reverses the encoding phase: the transposed convolutions u128 and u64 upsample the latent representation back to the input resolution, and the final c7s1-3 layer maps it to a 3-channel image.

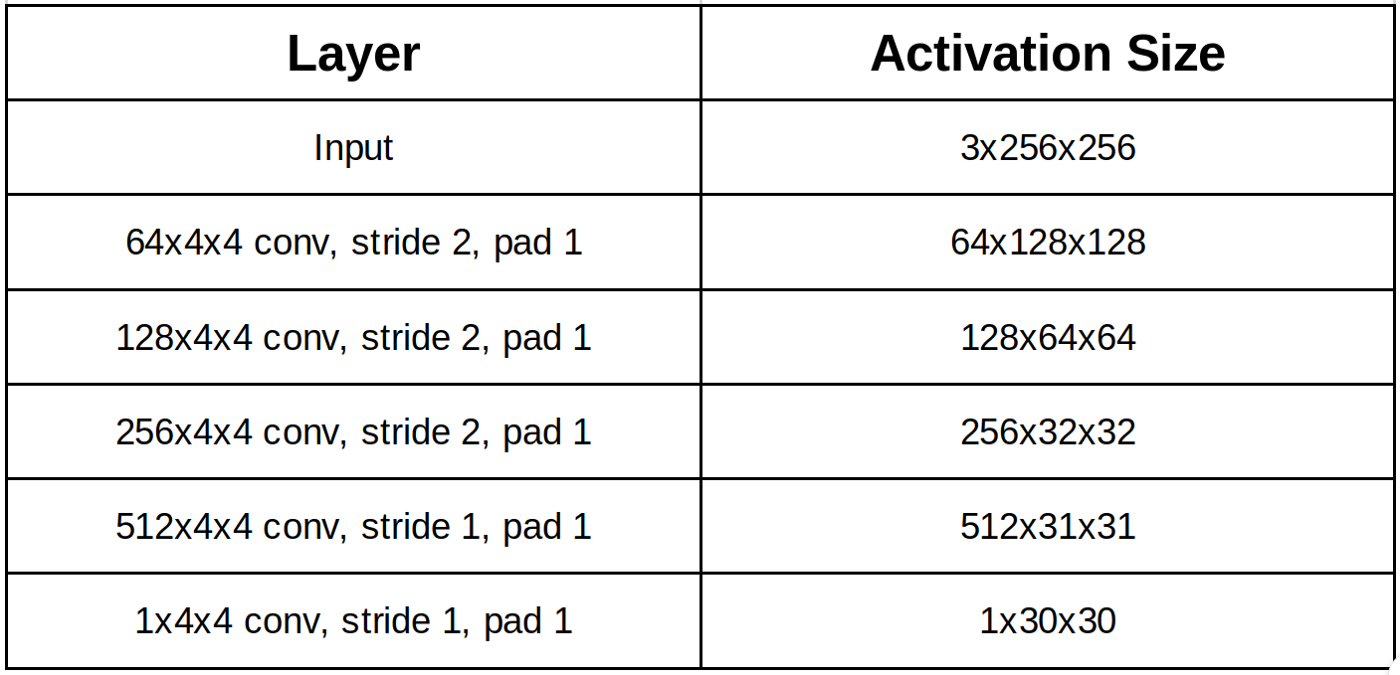

Discriminator

The architecture of the discriminator is:

C64-C128-C256-C512

where Ck denotes a 4×4 Convolution-InstanceNorm-LeakyReLU layer with k filters and stride 2. We don’t apply InstanceNorm to the first layer (C64). After the last layer, we apply a convolution to produce a one-channel (1-dimensional) output map, in which each value classifies an image patch as real or fake.
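The discriminator's layer list can be sanity-checked by computing the size of its output map and the receptive field of one output value, i.e. the image patch each score looks at. The sketch below assumes padding 1 on every 4×4 convolution and a final 4×4 stride-1 convolution. The per-layer strides are a parameter because the CycleGAN paper calls this network a 70×70 PatchGAN, and the widely used PyTorch reference code obtains that 70×70 field by running C512 at stride 1 rather than 2. `patchgan_geometry` is a hypothetical helper.

```python
# Sketch: output size and receptive field for C64-C128-C256-C512 + final conv.
# ASSUMED: every layer is a 4x4 conv with padding 1.


def patchgan_geometry(strides, size=256, kernel=4, pad=1):
    """Return (output_size, receptive_field) for a stack of kernel x kernel convs."""
    receptive, jump = 1, 1
    for stride in strides:
        size = (size + 2 * pad - kernel) // stride + 1
        receptive += (kernel - 1) * jump  # field grows by (k - 1) steps of the current jump
        jump *= stride                    # input-pixel distance between adjacent outputs
    return size, receptive


# Strides for C64, C128, C256, C512, final conv.
reference = [2, 2, 2, 1, 1]  # C512 at stride 1, as in the common reference code
literal = [2, 2, 2, 2, 1]    # every Ck at stride 2, as the text lists it

print(patchgan_geometry(reference))  # (30, 70)
print(patchgan_geometry(literal))    # (15, 94)
```

With the reference strides, a 256×256 input yields a 30×30 map of scores, each judging a 70×70 patch; this is why the output is a map rather than a single number.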

Loss

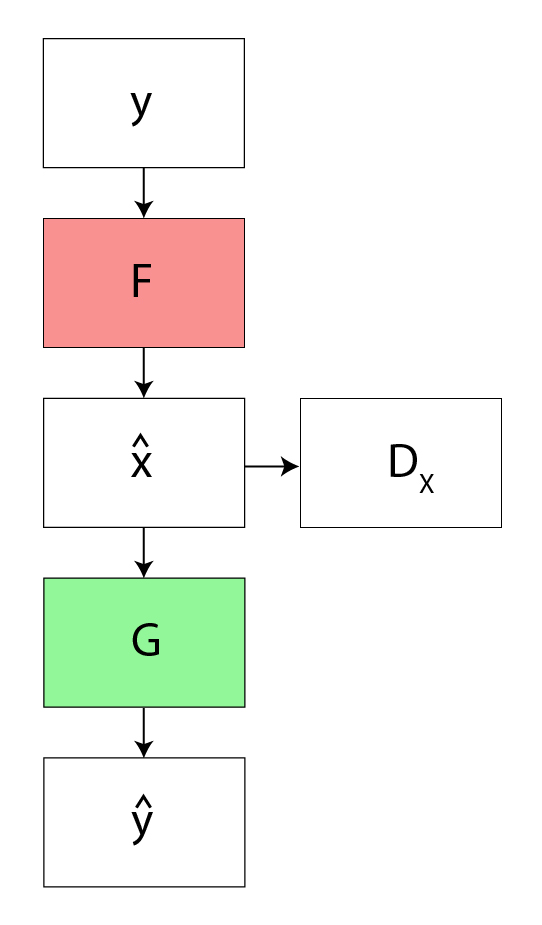

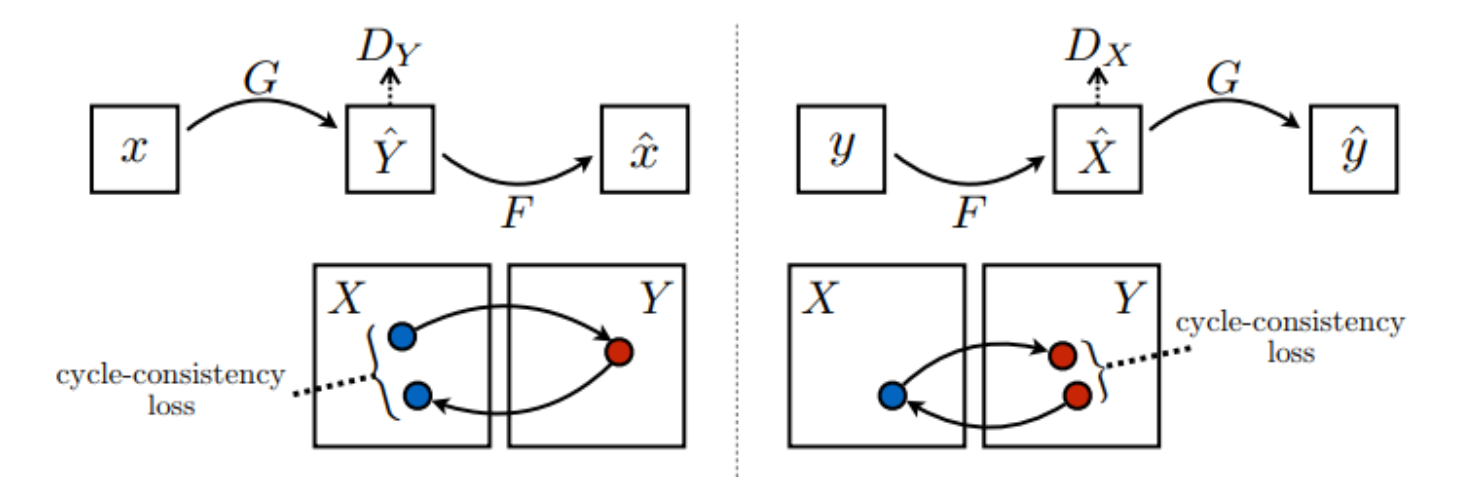

There are 2 generators (G and F) and 2 discriminators (D_X and D_Y) being trained here.

- Generator G learns to transform images from domain X into images of domain Y (G: X → Y).

- Generator F learns to transform images from domain Y into images of domain X (F: Y → X).

- Discriminator D_X learns to distinguish real images from domain X from translated images F(Y).

- Discriminator D_Y learns to distinguish real images from domain Y from translated images G(X).

The job of a generator is to produce samples from the desired distribution, and the job of a discriminator is to determine whether a sample comes from the actual distribution (real) or was produced by the generator (fake).

How CycleGAN differs from other GANs: it has two generators.

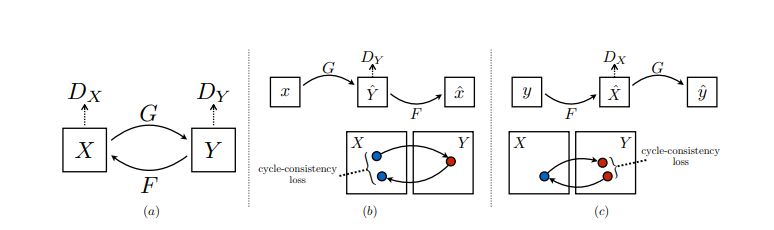

The CycleGAN architecture differs from other GANs in that it contains two mapping functions (G and F) that act as generators, together with their corresponding discriminators (D_X and D_Y). The generator mapping functions are:

G: X → Y

F: Y → X

where X is the input image distribution and Y is the desired output distribution (for example, paintings in Van Gogh's style).

To further regularize the mappings, the authors use two more loss terms in addition to the adversarial loss: the forward cycle consistency loss and the backward cycle consistency loss.

Adversarial loss

We apply the adversarial loss to both mappings: G is trained against D_Y, and F is trained against D_X.

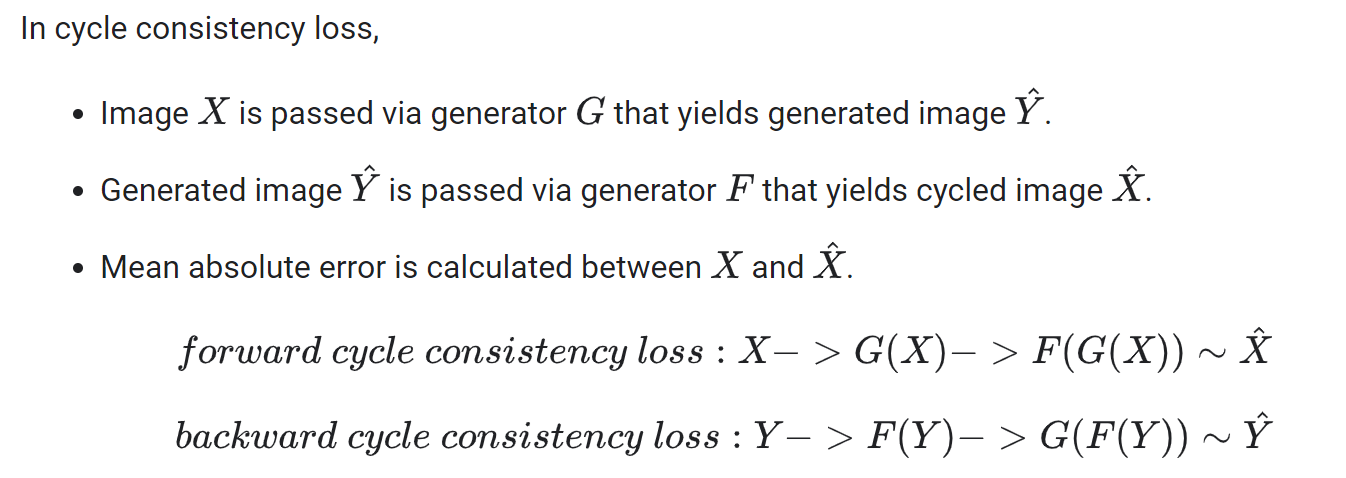
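In the CycleGAN paper the adversarial loss for G and D_Y is L_GAN(G, D_Y, X, Y) = E_y[log D_Y(y)] + E_x[log(1 − D_Y(G(x)))]: D_Y tries to maximize it and G tries to minimize it, and the same form with F and D_X gives the second adversarial term. For training, the paper replaces this log-likelihood objective with a least-squares loss for stability. The sketch below estimates both versions from batches of discriminator scores; it assumes each score is a single number (a probability in (0, 1) for the log form) rather than the patch map a real discriminator outputs, and all function names are hypothetical.

```python
import math
from typing import Sequence


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def gan_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Batch estimate of E[log D(y)] + E[log(1 - D(G(x)))].

    d_real: discriminator probabilities on real target-domain images.
    d_fake: discriminator probabilities on translated images G(x).
    The discriminator maximizes this value; the generator minimizes it.
    """
    return mean([math.log(p) for p in d_real]) + mean([math.log(1.0 - p) for p in d_fake])


def lsgan_discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Least-squares form for D (minimized): scores on real -> 1, on fakes -> 0."""
    return mean([(s - 1.0) ** 2 for s in d_real]) + mean([s ** 2 for s in d_fake])


def lsgan_generator_loss(d_fake: Sequence[float]) -> float:
    """Least-squares form for G (minimized): make D score translated images as 1."""
    return mean([(s - 1.0) ** 2 for s in d_fake])


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(gan_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * log(0.5) ≈ -1.386
print(lsgan_discriminator_loss([0.9], [0.1]))
print(lsgan_generator_loss([0.1]))
```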

Cycle Consistency loss

Cycle consistency means that translating an image to the other domain and back should yield a result close to the original input. For example, if one translates a sentence from English to French and then back from French to English, the resulting sentence should be the same as the original sentence.

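Forward cycle consistency loss

For each image x from domain X, translating it to Y and back should recover it: x → G(x) → F(G(x)) ≈ x.

Backward cycle consistency loss

For each image y from domain Y, the reverse cycle should also recover it: y → F(y) → G(F(y)) ≈ y.

Together, the two terms form the cycle consistency loss, which the paper measures with the L1 norm: L_cyc(G, F) = E_x[‖F(G(x)) − x‖₁] + E_y[‖G(F(y)) − y‖₁].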
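The forward and backward cycle terms can be sketched in a few lines. Images are modeled as flattened lists of floats, and the L1 distance is averaged per pixel (as common implementations do) rather than summed. The mappings `G`, `F` and `F_bad` are hypothetical toy functions chosen so the numbers are easy to check, not real generators.

```python
from typing import Callable, List, Sequence

Image = List[float]                 # a flattened image, for illustration only
Mapping = Callable[[Image], Image]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference per pixel between two flattened images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Mapping, F: Mapping,
                           xs: Sequence[Image], ys: Sequence[Image]) -> float:
    # Forward cycle: x -> G(x) -> F(G(x)) should return to x.
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    # Backward cycle: y -> F(y) -> G(F(y)) should return to y.
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy mappings: F exactly inverts G, F_bad does not.
def G(img: Image) -> Image:
    return [2.0 * v for v in img]


def F(img: Image) -> Image:
    return [v / 2.0 for v in img]


def F_bad(img: Image) -> Image:
    return [v / 4.0 for v in img]


xs, ys = [[1.0, 2.0]], [[4.0]]
print(cycle_consistency_loss(G, F, xs, ys))      # 0.0
print(cycle_consistency_loss(G, F_bad, xs, ys))  # 2.75
```

Note that the loss is zero for any pair of mutually inverse mappings; it constrains G and F to be consistent with each other, while the adversarial terms push their outputs toward the target domains.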

Identity loss

G : X → Y

When G is given an image from Y, its output should differ from that input as little as possible.

As shown above, generator G is responsible for translating images from X to Y. The identity loss says that if you feed an image from Y to G, it should return that image, or something close to it.

If you run the zebra-to-horse model on a horse or the horse-to-zebra model on a zebra, it should not modify the image much since the image already contains the target class.
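The CycleGAN paper writes this term as L_identity(G, F) = E_y[‖G(y) − y‖₁] + E_x[‖F(x) − x‖₁]. Below is a minimal sketch with a per-pixel mean absolute error; images are flattened lists of floats, and `keep` and `brighten` are hypothetical toy mappings standing in for a generator that leaves its input alone and one that alters it.

```python
from typing import Callable, List, Sequence

Image = List[float]
Mapping = Callable[[Image], Image]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference per pixel."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def identity_loss(G: Mapping, F: Mapping,
                  xs: Sequence[Image], ys: Sequence[Image]) -> float:
    # G maps X -> Y, so a real Y image fed to G should come back (almost) unchanged.
    g_term = sum(l1(G(y), y) for y in ys) / len(ys)
    # Likewise F maps Y -> X, so a real X image should pass through F unchanged.
    f_term = sum(l1(F(x), x) for x in xs) / len(xs)
    return g_term + f_term


def keep(img: Image) -> Image:
    return list(img)


def brighten(img: Image) -> Image:
    return [v + 0.25 for v in img]


xs, ys = [[0.0, 0.5]], [[1.0]]
print(identity_loss(keep, keep, xs, ys))      # 0.0
print(identity_loss(brighten, keep, xs, ys))  # 0.25
```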

Total loss

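The full objective is the sum of the two adversarial losses and the cycle consistency loss:

L(G, F, D_X, D_Y) = L_GAN(G, D_Y, X, Y) + L_GAN(F, D_X, Y, X) + λ L_cyc(G, F)

where λ controls the relative importance of the two objectives (the original paper uses λ = 10).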
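As a sketch of how the pieces combine on the generator side, the function below evaluates both adversarial terms plus λ times the cycle consistency loss for a batch. It assumes the least-squares generator form for the adversarial terms, discriminators that return a single score per image (a real PatchGAN returns a map), and flattened-list images; `generator_objective` and the toy mappings are hypothetical.

```python
from typing import Callable, List, Sequence

Image = List[float]
Mapping = Callable[[Image], Image]
Critic = Callable[[Image], float]


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    return mean([abs(p - q) for p, q in zip(a, b)])


def generator_objective(G: Mapping, F: Mapping, D_X: Critic, D_Y: Critic,
                        xs: Sequence[Image], ys: Sequence[Image],
                        lam: float = 10.0) -> float:
    """Generator-side value of the two adversarial terms + lam * L_cyc (least-squares form)."""
    adv_G = mean([(D_Y(G(x)) - 1.0) ** 2 for x in xs])  # G wants D_Y to call G(x) real
    adv_F = mean([(D_X(F(y)) - 1.0) ** 2 for y in ys])  # F wants D_X to call F(y) real
    cyc = mean([l1(F(G(x)), x) for x in xs]) + mean([l1(G(F(y)), y) for y in ys])
    return adv_G + adv_F + lam * cyc


# Hypothetical toy mappings and discriminators.
def G(img: Image) -> Image:
    return [2.0 * v for v in img]


def F(img: Image) -> Image:
    return [v / 2.0 for v in img]


def fooled(img: Image) -> float:
    return 1.0  # always says "real"


def unsure(img: Image) -> float:
    return 0.5  # cannot decide


xs, ys = [[1.0, 2.0]], [[4.0]]
print(generator_objective(G, F, fooled, fooled, xs, ys))  # 0.0
print(generator_objective(G, F, unsure, unsure, xs, ys))  # 0.5
```

The discriminators are updated separately with their own loss; λ only matters for the generator update, because the cycle term does not depend on D_X or D_Y.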

References

- https://www.geeksforgeeks.org/cycle-generative-adversarial-network-cyclegan-2/
- https://towardsdatascience.com/overview-of-cyclegan-architecture-and-training-afee31612a2f