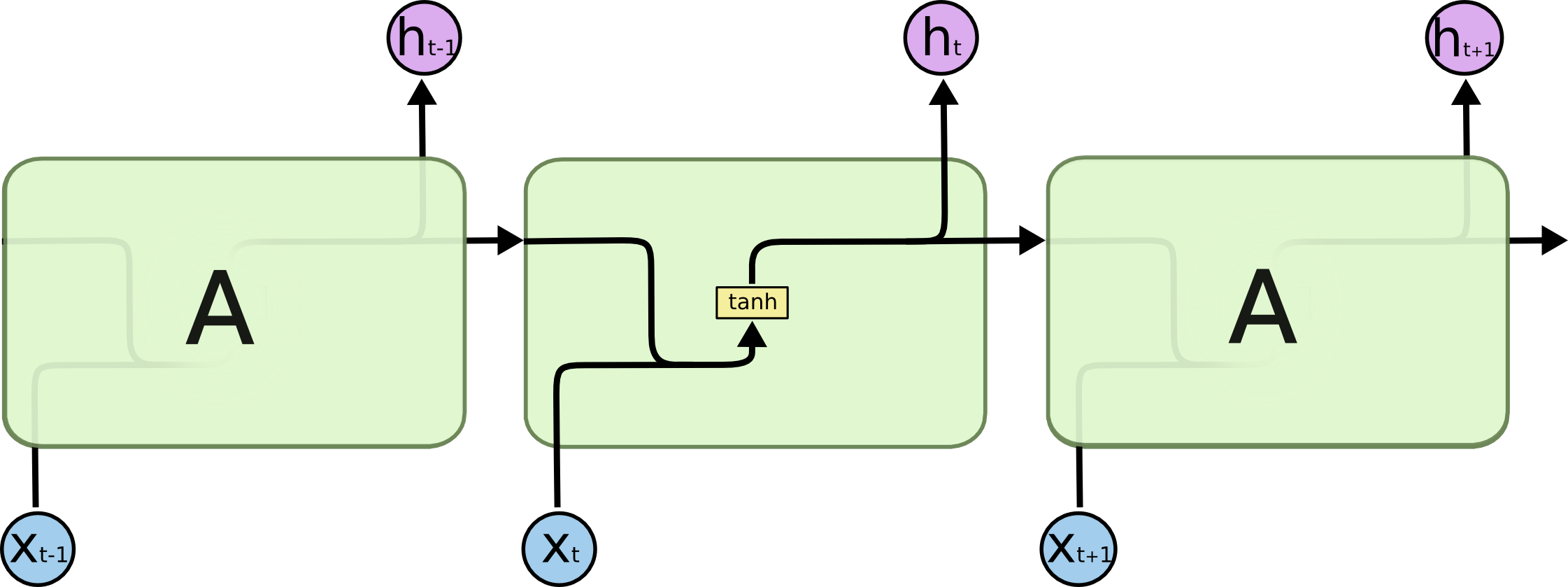

Standard RNN

https://pytorch.org/docs/stable/generated/torch.nn.RNN.html?highlight=rnn#torch.nn.RNN

API overview

where ht is the hidden state at time t, xt is the input at time t, and h(t−1) is the hidden state of the previous layer at time t−1 or the initial hidden state at time 0. If nonlinearity is ‘relu’, then ReLU is used instead of tanh.
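The linked documentation gives the per-step update as ht = tanh(xt W_ih^T + b_ih + h(t−1) W_hh^T + b_hh). As a minimal sketch, the loop below replays that update by hand for a single-layer RNN and compares it with the module's own output. The sizes (4 input features, 3 hidden features, 5 time steps, batch of 2) are arbitrary values chosen for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Single-layer tanh RNN; sizes are illustrative assumptions.
rnn = nn.RNN(input_size=4, hidden_size=3)
x = torch.randn(5, 2, 4)   # (seq, batch, input_size)

with torch.no_grad():
    # Replay the recurrence step by step, starting from a zero hidden state.
    h = torch.zeros(2, 3)  # (batch, hidden_size)
    manual_steps = []
    for t in range(x.size(0)):
        h = torch.tanh(
            x[t] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
            + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0
        )
        manual_steps.append(h)
    manual_output = torch.stack(manual_steps)  # (seq, batch, hidden_size)

    # The module defaults to a zero initial hidden state when h_0 is omitted.
    output, h_n = rnn(x)

max_diff = (output - manual_output).abs().max().item()
print(output.shape, h_n.shape, max_diff)
```

The difference should be at floating-point noise level, which suggests the module's output is just the stack of hidden states from every time step, and h_n is the last of them.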

torch.nn.RNN(*args, **kwargs)

- input_size – The number of expected features in the input x, i.e. the feature dimension of each xt

- hidden_size – The number of features in the hidden state h, i.e. the feature dimension of each ht

- num_layers – Number of recurrent layers, i.e. the depth of the network. E.g., setting num_layers=2 would mean stacking two RNNs together to form a stacked RNN, with the second RNN taking in outputs of the first RNN and computing the final results. Default: 1

- nonlinearity – The non-linearity to use. Can be either ‘tanh’ or ‘relu’. Default: ‘tanh’

- bias – If False, then the layer does not use bias weights b_ih and b_hh. Default: True
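To see how these arguments shape the module, the sketch below builds a two-layer RNN with ReLU and no bias, then lists its parameters. The specific sizes (20 and 50) are borrowed from the example later in these notes; the rest is only an illustration.

```python
from torch import nn

# Two stacked layers, ReLU instead of tanh, and no bias terms.
rnn = nn.RNN(input_size=20, hidden_size=50, num_layers=2,
             nonlinearity="relu", bias=False)

# Map each parameter name to its shape.
param_shapes = {name: tuple(p.shape) for name, p in rnn.named_parameters()}
for name, shape in param_shapes.items():
    print(name, shape)
```

With bias=False only the weight matrices remain. The first layer's input weights have shape (hidden_size, input_size), while the second layer's input weights have shape (hidden_size, hidden_size) because it consumes the first layer's hidden states.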

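Network inputs and outputs

The network takes a sequence input xt and an initial hidden state (memory) input h0.

xt has shape (seq, batch, input_size)

These are, respectively, the sequence length, the batch size, and the input feature dimension.

h0 has shape (num_layers * num_directions, batch, hidden_size), where num_directions is 2 for a bidirectional RNN and 1 otherwise

In convolutional networks the batch dimension comes first in the input; in recurrent networks it is the middle dimension by default (batch_first=False).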

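    import torch
    from torch import nn

    # Two stacked RNN layers: 20 input features, 50 hidden features
    basic_rnn = nn.RNN(input_size=20, hidden_size=50, num_layers=2)

    # The input is a tensor with sequence length 100, batch size 32, and feature dimension 20
    toy_input = torch.randn(100, 32, 20)
    # Initial hidden state: (num_layers * num_directions, batch, hidden_size)
    h_0 = torch.randn(2, 32, 50)
    toy_output, h_n = basic_rnn(toy_input, h_0)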
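The shape conventions above can be checked with a short sketch. It reuses the sizes from the example (sequence length 100, batch 32, 20 input features, 50 hidden features, 2 layers) and additionally tries the bidirectional and batch_first options of nn.RNN; the variable names are illustrative only.

```python
import torch
from torch import nn

seq_len, batch, input_size, hidden_size, num_layers = 100, 32, 20, 50, 2

# Default layout: input is (seq, batch, feature).
rnn = nn.RNN(input_size, hidden_size, num_layers)
out, h_n = rnn(torch.randn(seq_len, batch, input_size))

# Bidirectional: h_0 needs num_layers * 2 entries in its first dimension,
# and the output concatenates both directions along the feature axis.
bi_rnn = nn.RNN(input_size, hidden_size, num_layers, bidirectional=True)
bi_h_0 = torch.zeros(num_layers * 2, batch, hidden_size)
bi_out, bi_h_n = bi_rnn(torch.randn(seq_len, batch, input_size), bi_h_0)

# batch_first=True: input and output become (batch, seq, feature),
# but the hidden state keeps the (layers * directions, batch, hidden) layout.
bf_rnn = nn.RNN(input_size, hidden_size, num_layers, batch_first=True)
bf_out, bf_h_n = bf_rnn(torch.randn(batch, seq_len, input_size))

print(out.shape, h_n.shape)
print(bi_out.shape, bi_h_n.shape)
print(bf_out.shape, bf_h_n.shape)
```

Note that batch_first only changes the layout of the input and output tensors. The hidden state stays in the (num_layers * num_directions, batch, hidden_size) layout either way.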

LSTM

Example

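Convert the image data into sequence data: each MNIST handwritten-digit image is 28x28, so an image can be treated as a sequence of length 28 in which every element has a feature dimension of 28.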
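A minimal sketch of this idea follows, feeding each image row to an nn.LSTM as one time step. It uses a random tensor as a stand-in for a real MNIST batch, and the hidden size (128), layer count (2), batch size (64), and the linear classifier on the last time step are assumptions made for illustration, not choices stated in these notes.

```python
import torch
from torch import nn

# Random stand-in for a batch of MNIST images: (batch, channel, height, width).
images = torch.randn(64, 1, 28, 28)

# Drop the channel dimension so each image becomes 28 rows of 28 features:
# (batch, seq=28, feature=28).
sequences = images.squeeze(1)

# Assumed hyperparameters; batch_first=True matches the (batch, seq, feature) layout.
lstm = nn.LSTM(input_size=28, hidden_size=128, num_layers=2, batch_first=True)
classifier = nn.Linear(128, 10)  # 10 digit classes

# An LSTM returns the per-step outputs plus a (hidden state, cell state) pair.
output, (h_n, c_n) = lstm(sequences)

# Classify from the top layer's output at the final time step.
logits = classifier(output[:, -1, :])
print(output.shape, h_n.shape, c_n.shape, logits.shape)
```

Reading the image row by row is one possible ordering; treating columns as time steps would work the same way after transposing the last two dimensions.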