<dependency><groupId>org.springframework.cloud</groupId><artifactId>spring-cloud-starter-gateway</artifactId><version>2.2.3.RELEASE</version></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-webflux</artifactId><version>2.3.0.RELEASE</version></dependency>

背景及现象

线上有一个业务需要调用算法同学的python服务,然后每次算法同学上一个新的服务都需要和运维同学沟通半天,于是将算法的服务全部都集中到网关中,然后通过path来进行转发,以解决算法和运维之间的沟通问题。

在切换完以后,所有原来走Nginx直接转发到python的流量,由nginx转发到gw,再由gw转发到python服务。在切换到网关之后,网关开始频繁出现堆外OOM,由于该网关的代码是小程序曾经使用过的一套网关,因此是不存在BUG的。

同时,前台的dubbo服务在网关OOM的时候频繁出现调用超时。

处理问题

一开始看的时候看到机器的CPU很高,当时认为CPU影响到gw的运行了,于是部署了2个新的Tomcat到线上,此时由于重新启动了Tomcat,导致OOM消失并缓解了。一度认为是CPU的问题。

过了几周又出现了超时且服务器CPU低,同时观察到日志中出现了OOM的情况。

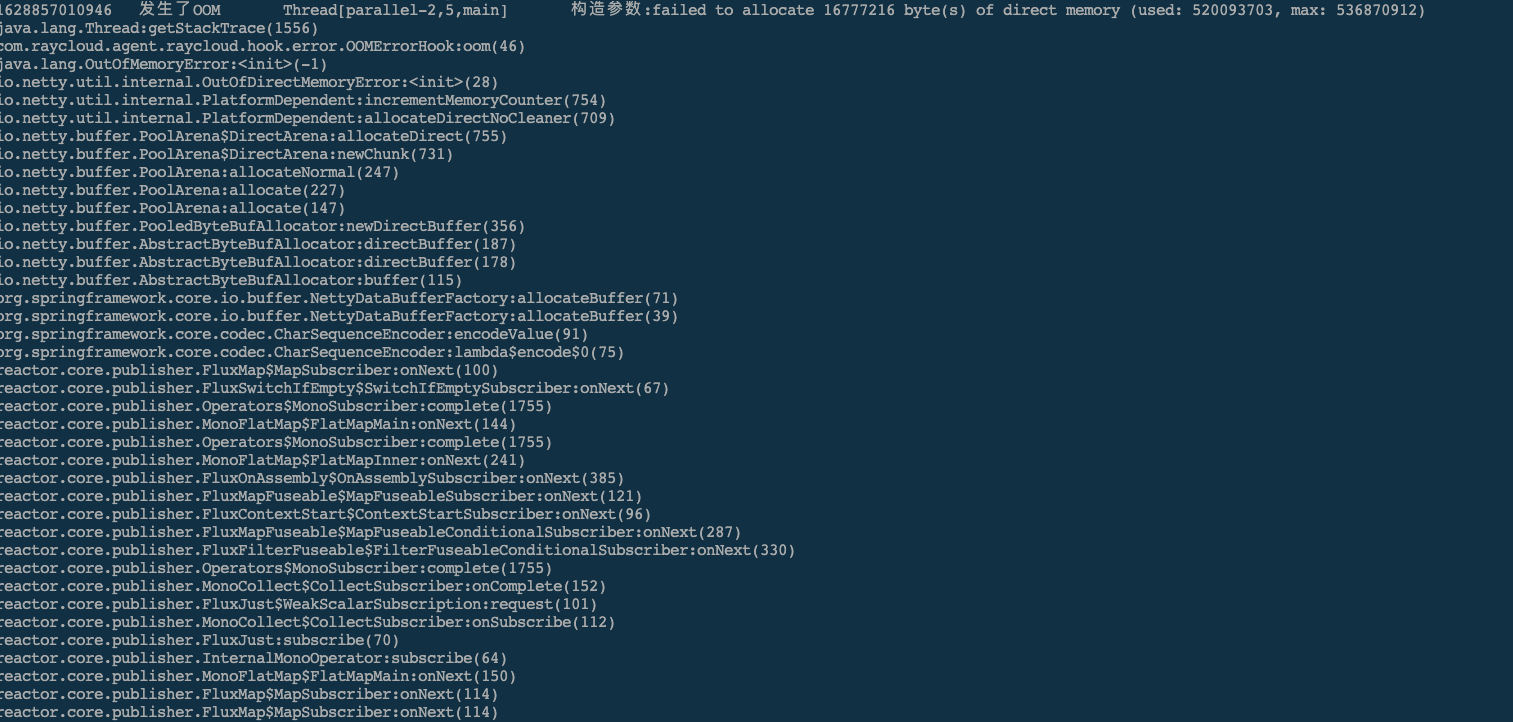

这个日志是字节码植入的,只是知道发生了OOM,但是并不知道是由于什么引发了堆外的OOM。

然后仔细检查日志的是发现了如下的数据

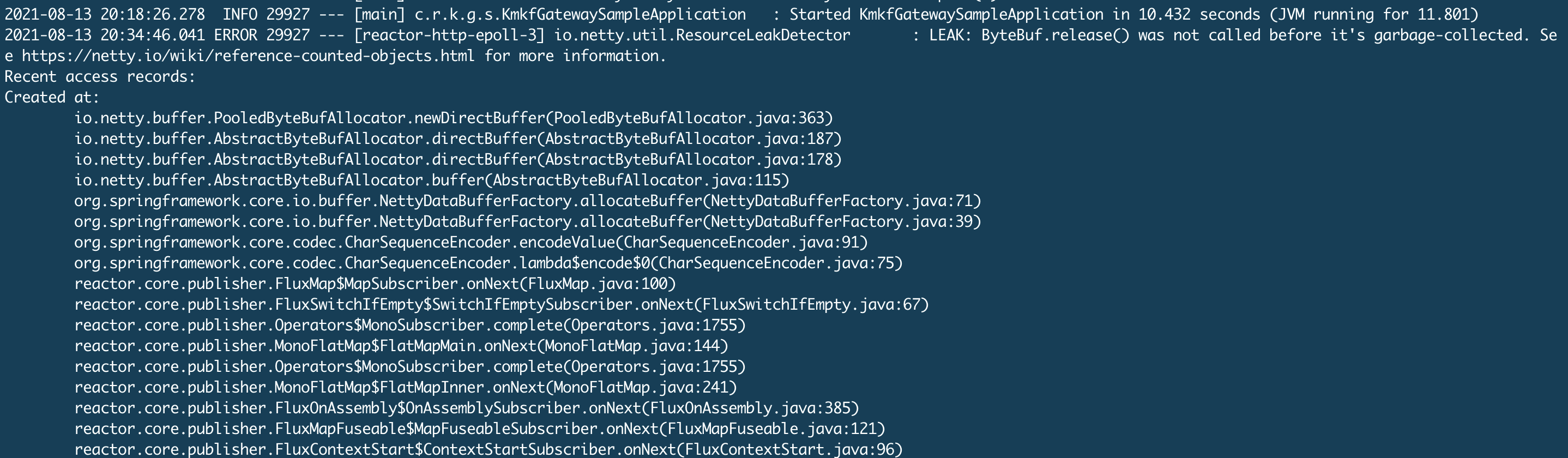

由于Netty的DataBuffer导致了堆外内存泄露。于是我添加了 -Dio.netty.leakDetectionLevel=advanced 让Netty帮忙进行内存监测,添加该操作,会在FGC的时候进行监测,何处可能存在内存溢出 Netty.

过来几天,观察到了如下情况

2021-08-14 14:24:11.042 ERROR 9797 --- [r-http-kqueue-6] io.netty.util.ResourceLeakDetector : LEAK: ByteBuf.release() was not called before it's garbage-collected. See https://netty.io/wiki/reference-counted-objects.html for more information.Recent access records:#1:io.netty.buffer.AdvancedLeakAwareByteBuf.readBytes(AdvancedLeakAwareByteBuf.java:502)io.netty.buffer.ByteBufInputStream.read(ByteBufInputStream.java:180)java.io.InputStream.read(InputStream.java:101)org.springframework.util.StreamUtils.copy(StreamUtils.java:165)org.springframework.util.FileCopyUtils.copy(FileCopyUtils.java:112)org.springframework.cloud.gateway.filter.factory.rewrite.GzipMessageBodyResolver.encode(GzipMessageBodyResolver.java:52)reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:100)reactor.core.publisher.MonoPublishOn$PublishOnSubscriber.run(MonoPublishOn.java:178)reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:68)reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:28)java.util.concurrent.FutureTask.run(FutureTask.java:266)java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)java.lang.Thread.run(Thread.java:748)Created at:io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:363)io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:178)io.netty.buffer.AbstractByteBufAllocator.buffer(AbstractByteBufAllocator.java:115)org.springframework.core.io.buffer.NettyDataBufferFactory.allocateBuffer(NettyDataBufferFactory.java:71)org.springframework.core.io.buffer.NettyDataBufferFactory.allocateBuffer(NettyDataBufferFactory.java:39)org.springframework.core.codec.CharSequenceEncoder.encodeValue(CharSequenceEncoder.java:91)org.springframework.core.codec.CharSequenceEncoder.lambda$encode$0(CharSequenceEncoder.java:75)reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:100)reactor.core.publisher.FluxSwitchIfEmpty$SwitchIfEmptySubscriber.onNext(FluxSwitchIfEmpty.java:67)reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1755)reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:144)reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1755)reactor.core.publisher.MonoFlatMap$FlatMapInner.onNext(MonoFlatMap.java:241)reactor.core.publisher.FluxOnAssembly$OnAssemblySubscriber.onNext(FluxOnAssembly.java:385)reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)reactor.core.publisher.FluxContextStart$ContextStartSubscriber.onNext(FluxContextStart.java:96)reactor.core.publisher.FluxMapFuseable$MapFuseableConditionalSubscriber.onNext(FluxMapFuseable.java:287)reactor.core.publisher.FluxFilterFuseable$FilterFuseableConditionalSubscriber.onNext(FluxFilterFuseable.java:330)reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1755)reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:152)reactor.core.publisher.FluxJust$WeakScalarSubscription.request(FluxJust.java:101)reactor.core.publisher.MonoCollect$CollectSubscriber.onSubscribe(MonoCollect.java:112)reactor.core.publisher.FluxJust.subscribe(FluxJust.java:70)reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:150)reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:114)reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:114)reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:114)reactor.core.publisher.MonoPublishOn$PublishOnSubscriber.run(MonoPublishOn.java:178)reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:68)reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:28)java.util.concurrent.FutureTask.run(FutureTask.java:266)java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)java.lang.Thread.run(Thread.java:748): 1 leak records were discarded because they were duplicates

GzipMessageBodyResolver

package org.springframework.cloud.gateway.filter.factory.rewrite;import org.springframework.core.io.buffer.DataBuffer;import org.springframework.core.io.buffer.DataBufferUtils;import org.springframework.util.FileCopyUtils;import java.io.ByteArrayInputStream;import java.io.ByteArrayOutputStream;import java.io.IOException;import java.io.InputStream;import java.util.zip.GZIPInputStream;import java.util.zip.GZIPOutputStream;public class GzipMessageBodyResolver implements MessageBodyDecoder, MessageBodyEncoder {@Overridepublic String encodingType() {return "gzip";}@Overridepublic byte[] decode(byte[] encoded) {try {ByteArrayInputStream bis = new ByteArrayInputStream(encoded);GZIPInputStream gis = new GZIPInputStream(bis);return FileCopyUtils.copyToByteArray(gis);} catch (IOException e) {throw new IllegalStateException("couldn't decode body from gzip", e);}}@Overridepublic byte[] encode(DataBuffer original) {try {ByteArrayOutputStream bis = new ByteArrayOutputStream();GZIPOutputStream gos = new GZIPOutputStream(bis);//带上所有信息,这里是第52行FileCopyUtils.copy(original.asInputStream(), gos);return bis.toByteArray();} catch (IOException e) {throw new IllegalStateException("couldn't encode body to gzip", e);}}}

调用这里的地方是 ModifyResponseBodyGatewayFilterFactory#writeBody , 如果存在 Content-Encoding 这个请求头,那么就进行encode。

使用Arthas查看返回的Content-Encoding,果然偶尔出现了 gzip的情况,然后运行的时间一长,就出现了OOM。

如何修复

明白这一层关系以后,我在本地重现了这个问题。

在 GzipMessageBodyResolver#encode 处,使用 DataBufferUtils#release 释放掉buffer即可,但是需要改动源码。为了修复问题,先拷贝一个类,改动发布上去。

降低-XX:MaxDirectMemorySize,由原来的512M降低到 128M2天过去并没有出现这个问题。

小结

- 使用

-Dio.netty.leakDetectionLevel=advanced让Netty监测可能是哪里出现了OOM,然后针对进行查看。 - Netty监测到了地方以后,使用Arthas进行佐证

- 本地模型进一步验证结论。

这里还使用jdk#bin目录下的一些工具,用于查看某些bean的实例化个数等等。

- jstat, 查看fgc次数

- jmap -histo 查看实例个数

- jmap dump文件