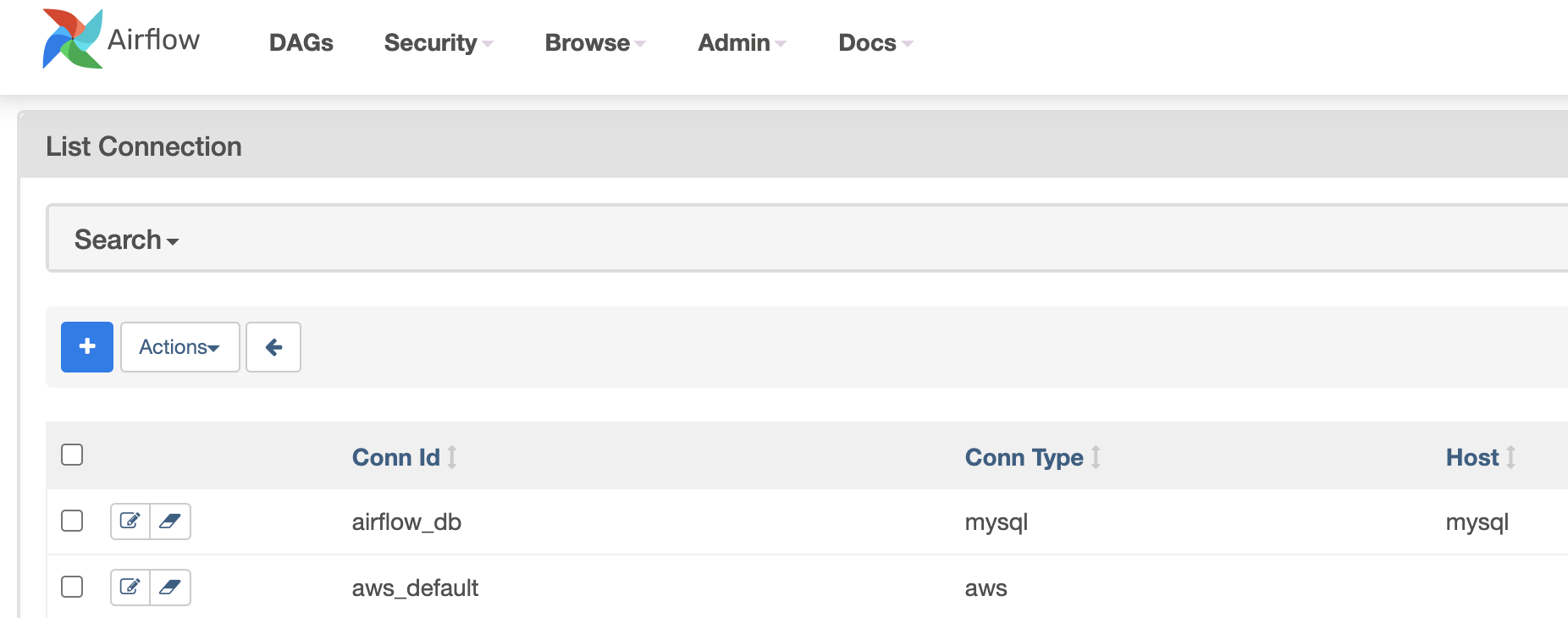

Airflow needs to know how to connect to your environment. Information such as hostname, port, login and passwords to other systems and services is handled in the Admin -> Connections section of the UI. The pipeline code you will author will reference the ‘conn_id’ of the Connection objects.

Connections can be created and managed using either the UI or environment variables.

See the Connections Concepts documentation for more information.

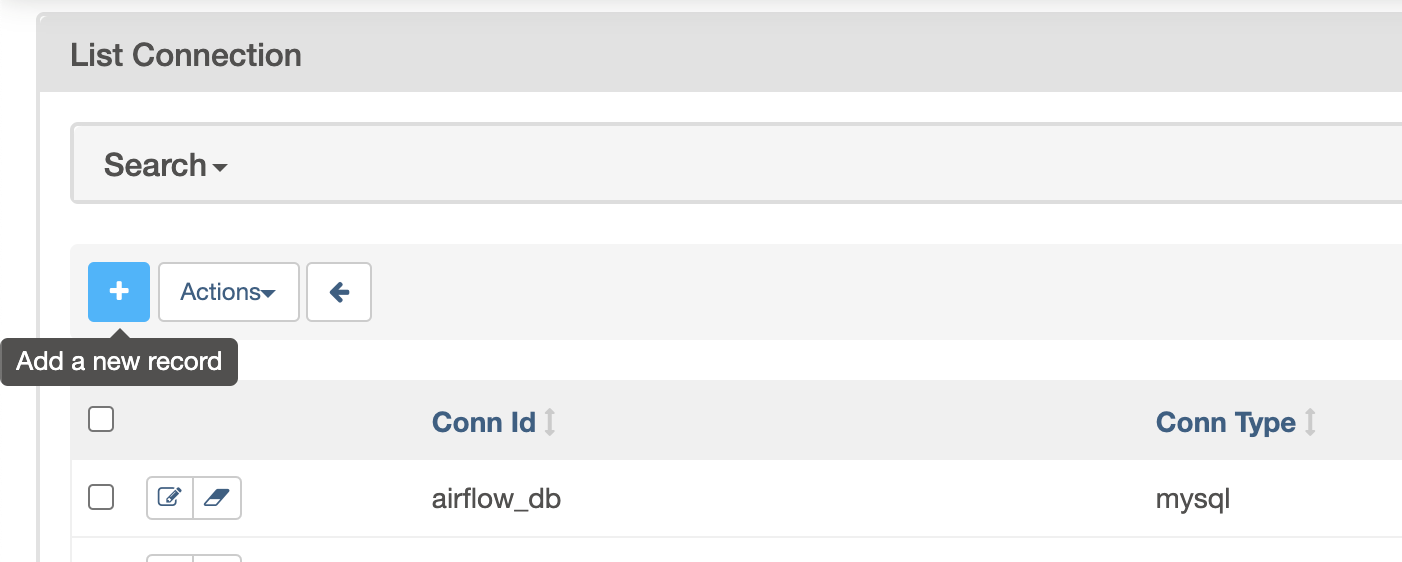

1. Creating a Connection with the UI

Open the Admin -> Connections section of the UI. Click the Create link to create a new connection.

- Fill in the Conn Id field with the desired connection ID. It is recommended that you use lower-case characters and separate words with underscores.

- Choose the connection type with the Conn Type field.

- Fill in the remaining fields. See Handling of special characters in connection params for a description of the fields belonging to the different connection types.

- Click the Save button to create the connection.

2. Editing a Connection with the UI

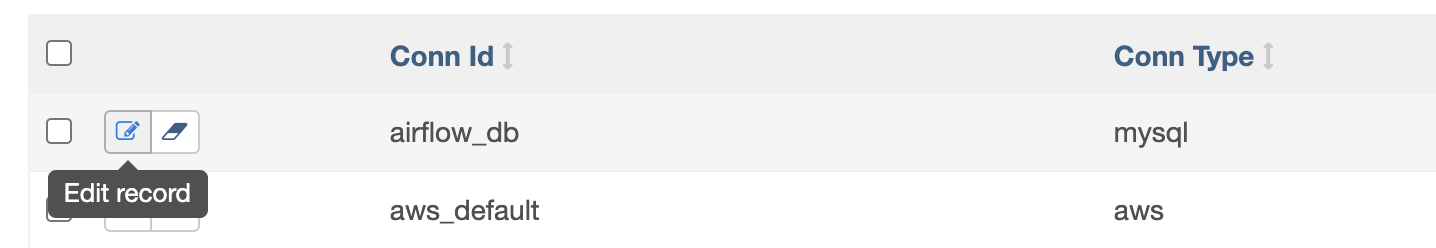

Open the Admin -> Connections section of the UI. Click the pencil icon next to the connection you wish to edit in the connection list.

Modify the connection properties and click the Save button to save your changes.

3. Creating a Connection from the CLI

You may add a connection to the database from the CLI.

Obtain the URI for your connection (see Generating a Connection URI).

Then add the connection like so:

    airflow connections add 'my_prod_db' \
        --conn-uri 'my-conn-type://login:password@host:port/schema?param1=val1&param2=val2'

Alternatively you may specify each parameter individually:

    $ airflow connections add 'my_prod_db' \
        --conn-type 'my-conn-type' \
        --conn-login 'login' \
        --conn-password 'password' \
        --conn-host 'host' \
        --conn-port 'port' \
        --conn-schema 'schema' \
        ...
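When the command is assembled by a script rather than typed by hand, building an argument list avoids shell-quoting mistakes. The sketch below is only an illustration: `build_add_command` and `ALLOWED_PARAMS` are hypothetical names, and the flags are limited to the ones shown in the command above.

```python
import shlex

# Parameters named in the command above; each maps to a --conn-<name> flag.
ALLOWED_PARAMS = ("type", "login", "password", "host", "port", "schema")


def build_add_command(conn_id, **params):
    """Return the argv list for `airflow connections add` (hypothetical helper)."""
    unknown = set(params) - set(ALLOWED_PARAMS)
    if unknown:
        raise ValueError(f"unsupported parameters: {sorted(unknown)}")
    argv = ["airflow", "connections", "add", conn_id]
    for name in ALLOWED_PARAMS:
        if name in params:
            argv += [f"--conn-{name}", str(params[name])]
    return argv


argv = build_add_command("my_prod_db", type="my-conn-type", host="host", port=5432)
# shlex.join quotes each argument so the line can be pasted into a shell.
print(shlex.join(argv))
```

The resulting list can also be handed to `subprocess.run` directly, in which case no shell quoting is involved at all.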

4. Exporting Connections from the CLI

You may export connections from the database using the CLI. The supported formats are json, yaml and env.

You may pass the target file as a parameter:

$ airflow connections export connections.json

Alternatively, you may use the --format parameter to override the format:

$ airflow connections export /tmp/connections --format yaml

You may also specify - for STDOUT:

$ airflow connections export -

The JSON format contains an object where the key contains the connection ID and the value contains the definition of the connection. In this format, the connection is defined as a JSON object. The following is a sample JSON file.

    {
      "airflow_db": {
        "conn_type": "mysql",
        "host": "mysql",
        "login": "root",
        "password": "plainpassword",
        "schema": "airflow",
        "port": null,
        "extra": null
      },
      "druid_broker_default": {
        "conn_type": "druid",
        "host": "druid-broker",
        "login": null,
        "password": null,
        "schema": null,
        "port": 8082,
        "extra": "{\"endpoint\": \"druid/v2/sql\"}"
      }
    }
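When a script consumes an exported JSON file, note that `extra` in the sample is itself a JSON-encoded string, so it needs a second decoding step. A minimal sketch using only the standard library, with part of the sample inlined as a string for illustration:

```python
import json

# One entry from the sample file, inlined instead of read from disk.
exported = r"""
{
  "druid_broker_default": {
    "conn_type": "druid",
    "host": "druid-broker",
    "login": null,
    "password": null,
    "schema": null,
    "port": 8082,
    "extra": "{\"endpoint\": \"druid/v2/sql\"}"
  }
}
"""

connections = json.loads(exported)

for conn_id, definition in connections.items():
    # "extra" is either null or a JSON document stored as a string.
    extra = json.loads(definition["extra"]) if definition["extra"] else {}
    print(conn_id, definition["conn_type"], definition["port"], extra)
```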

The YAML file structure is similar to that of the JSON file: a mapping from each connection ID to the definition of that connection. In this format, each connection is defined as a YAML object. The following is a sample YAML file.

    airflow_db:
      conn_type: mysql
      extra: null
      host: mysql
      login: root
      password: plainpassword
      port: null
      schema: airflow
    druid_broker_default:
      conn_type: druid
      extra: '{"endpoint": "druid/v2/sql"}'
      host: druid-broker
      login: null
      password: null
      port: 8082
      schema: null

You may also export connections in .env format. The key is the connection ID, and the value describes the connection using the URI. The following is a sample ENV file.

    airflow_db=mysql://root:plainpassword@mysql/airflow
    druid_broker_default=druid://druid-broker:8082?endpoint=druid%2Fv2%2Fsql
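A rough sketch of reading such a file back into a mapping of connection ID to URI. The helper name `parse_env_connections` is hypothetical, and skipping blank lines and `#` comments is an assumption about hand-edited files, not something the export is stated to produce.

```python
env_text = """\
airflow_db=mysql://root:plainpassword@mysql/airflow
druid_broker_default=druid://druid-broker:8082?endpoint=druid%2Fv2%2Fsql
"""


def parse_env_connections(text):
    """Map each connection ID to its URI (hypothetical helper)."""
    connections = {}
    for line in text.splitlines():
        line = line.strip()
        # Assumption: blank lines and '#' comments are ignored.
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only; the URI may contain '=' in its query.
        conn_id, _, uri = line.partition("=")
        connections[conn_id] = uri
    return connections


parsed = parse_env_connections(env_text)
print(sorted(parsed))
```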

5. Storing a Connection in Environment Variables

The environment variable naming convention is [AIRFLOW_CONN_{CONN_ID}](https://airflow.apache.org/docs/apache-airflow/stable/cli-and-env-variables-ref.html#envvar-AIRFLOW_CONN_-CONN_ID), all uppercase.

So if your connection id is my_prod_db then the variable name should be AIRFLOW_CONN_MY_PROD_DB.
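The naming rule is simple enough to express as a one-line helper. The function name `conn_env_var_name` is hypothetical and used only for illustration:

```python
def conn_env_var_name(conn_id: str) -> str:
    """Return the environment variable name for a connection ID."""
    # Convention from the text: AIRFLOW_CONN_ prefix plus the ID in upper case.
    return f"AIRFLOW_CONN_{conn_id.upper()}"


name = conn_env_var_name("my_prod_db")
print(name)
```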

:::tips

🔖 Note

:::

:::info

Single underscores surround CONN. This is in contrast with the way airflow.cfg parameters are stored, where double underscores surround the config section name. Connections set using environment variables do not appear in the Airflow UI, but you can still use them in your DAG files.

:::

The value of this environment variable must use Airflow’s URI format for connections. See the section Generating a Connection URI for more details.

5.1 Using .bashrc (or similar)

If storing the environment variable in something like ~/.bashrc, add as follows:

$ export AIRFLOW_CONN_MY_PROD_DATABASE='my-conn-type://login:password@host:port/schema?param1=val1&param2=val2'

5.2 Using docker .env

If using with a docker .env file, you may need to remove the single quotes.

AIRFLOW_CONN_MY_PROD_DATABASE=my-conn-type://login:password@host:port/schema?param1=val1&param2=val2

6. Connection URI format

In general, Airflow’s URI format is like so:

my-conn-type://my-login:my-password@my-host:5432/my-schema?param1=val1&param2=val2

:::tips

🔖 Note

:::

:::info

The params param1 and param2 are just examples; you may supply arbitrary urlencoded json-serializable data there.

:::

The above URI would produce a Connection object equivalent to the following:

    Connection(
        conn_id='',
        conn_type='my_conn_type',
        description=None,
        login='my-login',
        password='my-password',
        host='my-host',
        port=5432,
        schema='my-schema',
        extra=json.dumps(dict(param1='val1', param2='val2'))
    )
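To see roughly how each part of the URI lands in a field, the mapping can be approximated with `urllib.parse`. This is only an illustration, not Airflow's own parser: the function name `split_connection_uri` is hypothetical, the hyphen-to-underscore step simply mirrors the `conn_type` value shown above, and `extra` is returned as a plain dict rather than a JSON string.

```python
from urllib.parse import parse_qsl, unquote, urlsplit


def split_connection_uri(uri: str) -> dict:
    """Approximate how the URI pieces map onto connection fields."""
    parts = urlsplit(uri)
    return {
        # The example above shows hyphens in the scheme becoming underscores.
        "conn_type": parts.scheme.replace("-", "_"),
        "login": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
        "host": unquote(parts.hostname) if parts.hostname else None,
        "port": parts.port,
        "schema": unquote(parts.path.lstrip("/")) or None,
        # Query parameters become the key/value pairs stored in "extra".
        "extra": dict(parse_qsl(parts.query)),
    }


fields = split_connection_uri(
    "my-conn-type://my-login:my-password@my-host:5432/my-schema?param1=val1&param2=val2"
)
for key, value in fields.items():
    print(f"{key}: {value!r}")
```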

You can verify a URI is parsed correctly like so:

    >>> from airflow.models.connection import Connection
    >>> c = Connection(uri='my-conn-type://my-login:my-password@my-host:5432/my-schema?param1=val1&param2=val2')
    >>> print(c.login)
    my-login
    >>> print(c.password)
    my-password

6.1 Generating a connection URI

To make connection URI generation easier, the [Connection](https://airflow.apache.org/docs/apache-airflow/stable/_api/airflow/models/connection/index.html#airflow.models.connection.Connection) class has a convenience method [get_uri()](https://airflow.apache.org/docs/apache-airflow/stable/_api/airflow/models/connection/index.html#airflow.models.connection.Connection.get_uri). It can be used like so:

    import json

    from airflow.models.connection import Connection

    c = Connection(
        conn_id='some_conn',
        conn_type='mysql',
        description='connection description',
        host='myhost.com',
        login='myname',
        password='mypassword',
        extra=json.dumps(dict(this_param='some val', that_param='other val*')),
    )
    print(f"AIRFLOW_CONN_{c.conn_id.upper()}='{c.get_uri()}'")
    # AIRFLOW_CONN_SOME_CONN='mysql://myname:mypassword@myhost.com?this_param=some+val&that_param=other+val%2A'
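Where Airflow is not installed on the machine that assembles the variable, the same example URI can be reproduced with the standard library. This sketch is an assumption-laden stand-in, not a reimplementation of `get_uri()`: the helper `build_uri` is hypothetical and is only checked against the single example output shown above.

```python
from urllib.parse import quote, urlencode


def build_uri(conn_type, host, login=None, password=None, extra=None):
    """Assemble a connection URI by hand (hypothetical helper)."""
    auth = ""
    if login is not None:
        # Percent-encode every reserved character in the credentials.
        auth = quote(login, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    uri = f"{conn_type}://{auth}{quote(host, safe='')}"
    if extra:
        # urlencode applies quote_plus to each key and value.
        uri += "?" + urlencode(extra)
    return uri


uri = build_uri(
    "mysql",
    "myhost.com",
    login="myname",
    password="mypassword",
    extra={"this_param": "some val", "that_param": "other val*"},
)
print(f"AIRFLOW_CONN_SOME_CONN='{uri}'")
```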

Additionally, if you have created a connection, you can use the airflow connections get command.

    $ airflow connections get sqlite_default
    Id: 40
    Conn Id: sqlite_default
    Conn Type: sqlite
    Host: /tmp/sqlite_default.db
    Schema: null
    Login: null
    Password: null
    Port: null
    Is Encrypted: false
    Is Extra Encrypted: false
    Extra: {}
    URI: sqlite://%2Ftmp%2Fsqlite_default.db

6.2 Handling of special characters in connection params

:::tips

🔖 Note

This process is automated as described in section Generating a Connection URI.

:::

Special handling is required for certain characters when building a URI manually.

For example, if your password contains a /, this fails:

    >>> c = Connection(uri='my-conn-type://my-login:my-pa/ssword@my-host:5432/my-schema?param1=val1&param2=val2')
    ValueError: invalid literal for int() with base 10: 'my-pa'

To fix this, you can encode with [quote_plus()](https://docs.python.org/3/library/urllib.parse.html#urllib.parse.quote_plus):

    >>> c = Connection(uri='my-conn-type://my-login:my-pa%2Fssword@my-host:5432/my-schema?param1=val1&param2=val2')
    >>> print(c.password)
    my-pa/ssword
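The encoding step itself can be tried without Airflow. The sketch below encodes the example password with `quote_plus()`, places it in a URI, and then uses `urlsplit` as a stand-in parser to confirm that the value survives a round trip; Airflow's own parsing is not exercised here.

```python
from urllib.parse import quote_plus, unquote, urlsplit

password = "my-pa/ssword"

# Encode the password before interpolating it into the URI.
encoded = quote_plus(password)

uri = f"my-conn-type://my-login:{encoded}@my-host:5432/my-schema"

# With the slash encoded, the host and port are split correctly again.
parts = urlsplit(uri)
decoded = unquote(parts.password)

print(encoded)
print(parts.hostname, parts.port)
print(decoded)
```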

7. Securing Connections

Airflow uses Fernet to encrypt passwords in the connection configurations stored in the metastore database. It guarantees that without the encryption key, connection passwords cannot be manipulated or read. For information on configuring Fernet, look at Fernet.

In addition to retrieving connections from environment variables or the metastore database, you can enable a secrets backend to retrieve connections. For more details see Secrets backend.

8. Custom connection types

Airflow allows the definition of custom connection types, including modifications of the add/edit form for the connections. Custom connection types are defined in community-maintained providers, but you can also add a custom provider that adds custom connection types. See Provider packages for a description of how to add custom providers.

The custom connection types are defined via Hooks delivered by the providers. The Hooks can implement methods defined in the protocol class DiscoverableHook. Note that your custom Hook should not derive from this class; it is a dummy example that documents expectations about the class fields and methods that your Hook might define. Another good example is [JdbcHook](https://airflow.apache.org/docs/apache-airflow-providers-jdbc/stable/_api/airflow/providers/jdbc/hooks/jdbc/index.html#airflow.providers.jdbc.hooks.jdbc.JdbcHook).

By implementing those methods in your hooks and exposing them via the hook-class-names array in the provider meta-data, you can customize Airflow by:

- Adding custom connection types

- Adding automated Hook creation from the connection type

- Adding custom form widgets to display and edit custom “extra” parameters in your connection URL

- Hiding fields that are not used for your connection

- Adding placeholders showing examples of how fields should be formatted

You can read more about how to add custom provider packages in Provider packages.