k8s-centos8u2 cluster deployment 02 - core components: kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy

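After every shutdown and restart of this lab environment, check the following:

- Whether keepalived is running (systemctl status keepalived) and the VIP is up (use ip addr to confirm that 192.168.26.10 is present).

- Whether harbor started correctly: run docker-compose ps in its startup directory.

- supervisorctl status: check the state of each supervised process.

- Check docker and the k8s cluster.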
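The VIP check in the list above can be scripted. The following is a minimal sketch, not part of the original procedure: `has_vip` is a hypothetical helper name, and it only inspects `ip addr` output passed on stdin.

```shell
#!/bin/sh
# has_vip VIP: succeed if VIP appears as an "inet" entry in the
# `ip addr` output read from stdin. The trailing "/" keeps
# 192.168.26.10 from matching 192.168.26.100.
has_vip() {
    grep -qF "inet $1/"
}
```

On vms11 or vms12 it could be used as: ip addr | has_vip 192.168.26.10 && echo "VIP present".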

kube-apiserver

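1 Cluster planning

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | kube-apiserver | 192.168.26.21 |
| vms22.cos.com | kube-apiserver | 192.168.26.22 |
| vms11.cos.com | Layer-4 load balancer | 192.168.26.11 |
| vms12.cos.com | Layer-4 load balancer | 192.168.26.12 |

Note: 192.168.26.11 and 192.168.26.12 run nginx as a layer-4 load balancer, and keepalived provides a VIP, 192.168.26.10, in front of the two kube-apiserver instances for high availability.

This document uses vms21.cos.com as the example; the other compute node is deployed the same way.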

2 Download the software, unpack it, and create a symlink

On vms21.cos.com:

Download page: https://github.com/kubernetes/kubernetes/releases

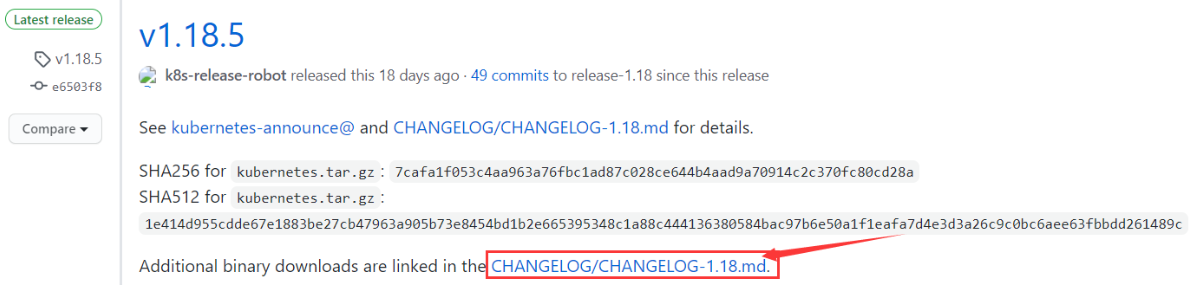

- Choose the version to download

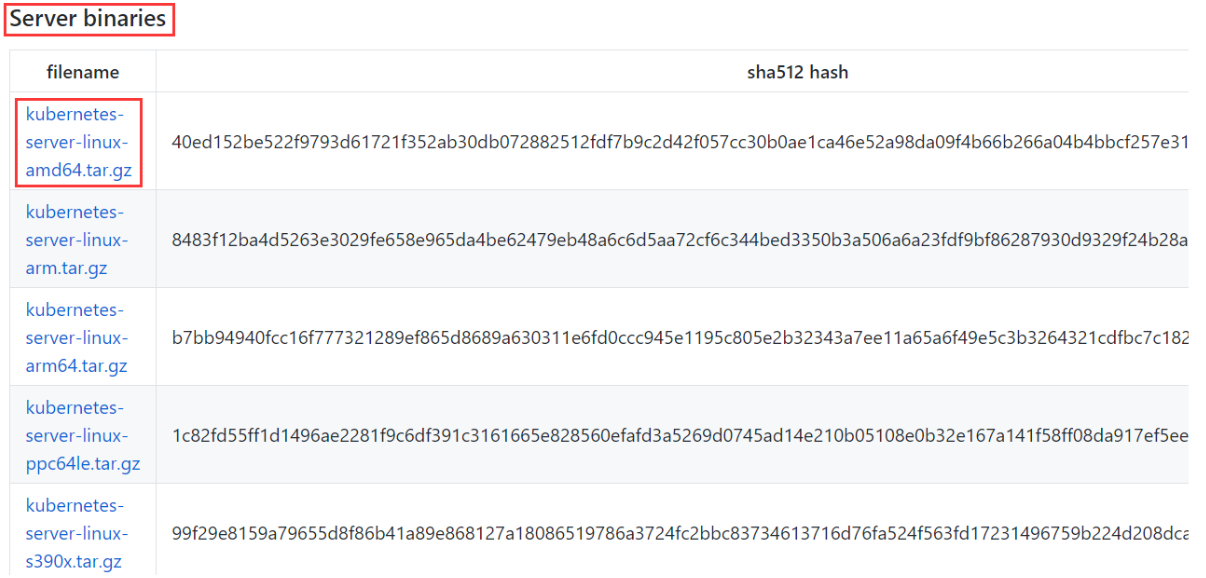

Under Downloads for v1.18.5, pick the package from the Server binaries list, then right-click and copy the download link: https://dl.k8s.io/v1.18.5/kubernetes-server-linux-amd64.tar.gz

    [root@vms21 ~]# mkdir /opt/src
    [root@vms21 ~]# cd /opt/src
    [root@vms21 src]# wget https://dl.k8s.io/v1.18.5/kubernetes-server-linux-amd64.tar.gz
    [root@vms21 src]# tar xf kubernetes-server-linux-amd64.tar.gz -C /opt
    [root@vms21 src]# mv /opt/kubernetes /opt/kubernetes-v1.18.5
    [root@vms21 src]# ln -s /opt/kubernetes-v1.18.5 /opt/kubernetes
    [root@vms21 src]# mkdir /opt/kubernetes/server/bin/{cert,conf}
    [root@vms21 src]# ls -l /opt|grep kubernetes
    lrwxrwxrwx 1 root root 23 Jul 15 10:46 kubernetes -> /opt/kubernetes-v1.18.5
    drwxr-xr-x 4 root root 79 Jun 26 12:26 kubernetes-v1.18.5

Delete the packages and files that are not needed, keeping only the following:

    [root@vms21 src]# ls -l /opt/kubernetes/server/bin
    total 458360
    drwxr-xr-x 2 root root         6 Jul 15 10:47 cert
    drwxr-xr-x 2 root root         6 Jul 15 10:47 conf
    -rwxr-xr-x 1 root root 120659968 Jun 26 12:26 kube-apiserver
    -rwxr-xr-x 1 root root 110059520 Jun 26 12:26 kube-controller-manager
    -rwxr-xr-x 1 root root  44027904 Jun 26 12:26 kubectl
    -rwxr-xr-x 1 root root 113283800 Jun 26 12:26 kubelet
    -rwxr-xr-x 1 root root  38379520 Jun 26 12:26 kube-proxy
    -rwxr-xr-x 1 root root  42946560 Jun 26 12:26 kube-scheduler

3 Issue the client certificate

This is the certificate apiserver uses to talk to etcd: apiserver is the client and etcd is the server.

On the ops host vms200.cos.com:

- Create the JSON configuration file for the certificate signing request (CSR)

    [root@vms200 certs]# vi /opt/certs/client-csr.json

    {
        "CN": "k8s-node",
        "hosts": [],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "op",
                "OU": "ops"
            }
        ]
    }

- Generate the client certificate and private key

    [root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client
    2020/07/16 11:20:54 [INFO] generate received request
    2020/07/16 11:20:54 [INFO] received CSR
    2020/07/16 11:20:54 [INFO] generating key: rsa-2048
    2020/07/16 11:20:54 [INFO] encoded CSR
    2020/07/16 11:20:54 [INFO] signed certificate with serial number 384811578816106154028165762700148655012765287575
    2020/07/16 11:20:54 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    [root@vms200 certs]# ls -l client*
    -rw-r--r-- 1 root root  993 Jul 16 11:20 client.csr
    -rw-r--r-- 1 root root  280 Jul 16 11:14 client-csr.json
    -rw------- 1 root root 1675 Jul 16 11:20 client-key.pem
    -rw-r--r-- 1 root root 1363 Jul 16 11:20 client.pem

4 Issue the server certificate

Used for communication between apiserver and the other k8s components.

On the ops host vms200.cos.com:

- Create the JSON configuration file for the certificate signing request (CSR)

    [root@vms200 certs]# vi /opt/certs/apiserver-csr.json

    {
        "CN": "k8s-apiserver",
        "hosts": [
            "127.0.0.1",
            "10.168.0.1",
            "kubernetes.default",
            "kubernetes.default.svc",
            "kubernetes.default.svc.cluster",
            "kubernetes.default.svc.cluster.local",
            "192.168.26.10",
            "192.168.26.21",
            "192.168.26.22",
            "192.168.26.23"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "op",
                "OU": "ops"
            }
        ]
    }

- Generate the kube-apiserver certificate and private key

    [root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver
    2020/07/16 14:04:16 [INFO] generate received request
    2020/07/16 14:04:16 [INFO] received CSR
    2020/07/16 14:04:16 [INFO] generating key: rsa-2048
    2020/07/16 14:04:17 [INFO] encoded CSR
    2020/07/16 14:04:17 [INFO] signed certificate with serial number 462064728215999941525650774614395532190617463799
    2020/07/16 14:04:17 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    [root@vms200 certs]# ls -l apiserver*
    -rw-r--r-- 1 root root 1249 Jul 16 14:04 apiserver.csr
    -rw-r--r-- 1 root root  581 Jul 16 11:33 apiserver-csr.json
    -rw------- 1 root root 1679 Jul 16 14:04 apiserver-key.pem
    -rw-r--r-- 1 root root 1594 Jul 16 14:04 apiserver.pem
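The 10.168.0.1 entry in the hosts list is the first address of the service range 10.168.0.0/16 used by this cluster: kube-apiserver assigns that first address to the built-in kubernetes service, so the server certificate has to cover it. The sketch below derives that address from a CIDR. `first_service_ip` is a hypothetical helper, and it assumes the CIDR is written as a network address whose last octet is below 255.

```shell
#!/bin/sh
# first_service_ip CIDR: print the first usable IPv4 address of CIDR,
# e.g. the ClusterIP that the "kubernetes" service receives.
# Assumption: CIDR is a network address such as 10.168.0.0/16.
first_service_ip() {
    cidr_base="${1%/*}"              # drop the "/16" prefix length
    # Split the dotted quad into its four octets.
    IFS=. read -r o1 o2 o3 o4 <<EOF
$cidr_base
EOF
    echo "$o1.$o2.$o3.$((o4 + 1))"
}
```

If the service range is changed, the value printed by first_service_ip for the new range is the address that should replace 10.168.0.1 in apiserver-csr.json before the certificate is issued.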

5 Copy the certificates

On vms21.cos.com:

    [root@vms21 cert]# cd /opt/kubernetes/server/bin/cert/
    [root@vms21 cert]# scp vms200:/opt/certs/ca.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/ca-key.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/client.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/client-key.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/apiserver.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/apiserver-key.pem .
    [root@vms21 cert]# ls -l
    total 24
    -rw------- 1 root root 1679 Jul 16 14:14 apiserver-key.pem
    -rw-r--r-- 1 root root 1594 Jul 16 14:14 apiserver.pem
    -rw------- 1 root root 1679 Jul 16 14:13 ca-key.pem
    -rw-r--r-- 1 root root 1338 Jul 16 14:12 ca.pem
    -rw------- 1 root root 1675 Jul 16 14:13 client-key.pem
    -rw-r--r-- 1 root root 1363 Jul 16 14:13 client.pem

6 Create the configuration

On vms21.cos.com:

    [root@vms21 bin]# cd /opt/kubernetes/server/bin/conf/
    [root@vms21 conf]# vi audit.yaml

    apiVersion: audit.k8s.io/v1beta1 # This is required.
    kind: Policy
    # Don't generate audit events for all requests in RequestReceived stage.
    omitStages:
      - "RequestReceived"
    rules:
      # Log pod changes at RequestResponse level
      - level: RequestResponse
        resources:
        - group: ""
          # Resource "pods" doesn't match requests to any subresource of pods,
          # which is consistent with the RBAC policy.
          resources: ["pods"]
      # Log "pods/log", "pods/status" at Metadata level
      - level: Metadata
        resources:
        - group: ""
          resources: ["pods/log", "pods/status"]
      # Don't log requests to a configmap called "controller-leader"
      - level: None
        resources:
        - group: ""
          resources: ["configmaps"]
          resourceNames: ["controller-leader"]
      # Don't log watch requests by the "system:kube-proxy" on endpoints or services
      - level: None
        users: ["system:kube-proxy"]
        verbs: ["watch"]
        resources:
        - group: "" # core API group
          resources: ["endpoints", "services"]
      # Don't log authenticated requests to certain non-resource URL paths.
      - level: None
        userGroups: ["system:authenticated"]
        nonResourceURLs:
        - "/api*" # Wildcard matching.
        - "/version"
      # Log the request body of configmap changes in kube-system.
      - level: Request
        resources:
        - group: "" # core API group
          resources: ["configmaps"]
        # This rule only applies to resources in the "kube-system" namespace.
        # The empty string "" can be used to select non-namespaced resources.
        namespaces: ["kube-system"]
      # Log configmap and secret changes in all other namespaces at the Metadata level.
      - level: Metadata
        resources:
        - group: "" # core API group
          resources: ["secrets", "configmaps"]
      # Log all other resources in core and extensions at the Request level.
      - level: Request
        resources:
        - group: "" # core API group
        - group: "extensions" # Version of group should NOT be included.
      # A catch-all rule to log all other requests at the Metadata level.
      - level: Metadata
        # Long-running requests like watches that fall under this rule will not
        # generate an audit event in RequestReceived.
        omitStages:
          - "RequestReceived"

7 Create the startup script

On vms21.cos.com:

    [root@vms21 bin]# vi /opt/kubernetes/server/bin/kube-apiserver.sh

    #!/bin/bash
    ./kube-apiserver \
      --apiserver-count 2 \
      --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
      --audit-policy-file ./conf/audit.yaml \
      --authorization-mode RBAC \
      --client-ca-file ./cert/ca.pem \
      --requestheader-client-ca-file ./cert/ca.pem \
      --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
      --etcd-cafile ./cert/ca.pem \
      --etcd-certfile ./cert/client.pem \
      --etcd-keyfile ./cert/client-key.pem \
      --etcd-servers https://192.168.26.12:2379,https://192.168.26.21:2379,https://192.168.26.22:2379 \
      --service-account-key-file ./cert/ca-key.pem \
      --service-cluster-ip-range 10.168.0.0/16 \
      --service-node-port-range 3000-29999 \
      --target-ram-mb=1024 \
      --kubelet-client-certificate ./cert/client.pem \
      --kubelet-client-key ./cert/client-key.pem \
      --log-dir /data/logs/kubernetes/kube-apiserver \
      --tls-cert-file ./cert/apiserver.pem \
      --tls-private-key-file ./cert/apiserver-key.pem \
      --v 2 \
      --enable-aggregator-routing=true \
      --requestheader-allowed-names=aggregator,metrics-server \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-username-headers=X-Remote-User \
      --proxy-client-cert-file=./cert/metrics-server.pem \
      --proxy-client-key-file=./cert/metrics-server-key.pem

- apiserver-count 2: the number of apiserver instances

- Adjust etcd-servers and service-cluster-ip-range to match your environment.

- The flags from --enable-aggregator-routing onward reference ./cert/metrics-server.pem and ./cert/metrics-server-key.pem, which are not issued in this section. The process listing in step 10 shows the script running without those flags, so add them only once those certificate files exist.

- Use the help output to look up what each flag means:

    [root@vms21 bin]# ./kube-apiserver --help|grep -A 5 target-ram-mb
          --target-ram-mb int    Memory limit for apiserver in MB (used to configure sizes of caches, etc.)

8 Set permissions and create directories

On vms21.cos.com:

    [root@vms21 bin]# chmod +x /opt/kubernetes/server/bin/kube-apiserver.sh
    [root@vms21 bin]# mkdir -p /data/logs/kubernetes/kube-apiserver

9 Create the supervisor configuration

On vms21.cos.com:

    [root@vms21 bin]# vi /etc/supervisord.d/kube-apiserver.ini

    ; change the 21 suffix to match the host's actual IP address
    [program:kube-apiserver-26-21]
    command=/opt/kubernetes/server/bin/kube-apiserver.sh                      ; the program (relative uses PATH, can take args)
    numprocs=1                                                                ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                                      ; directory to cwd to before exec (def no cwd)
    autostart=true                                                            ; start at supervisord start (default: true)
    autorestart=true                                                          ; restart at unexpected quit (default: true)
    startsecs=30                                                              ; number of secs prog must stay running (def. 1)
    startretries=3                                                            ; max # of serial start failures (default 3)
    exitcodes=0,2                                                             ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                           ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                           ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                                 ; setuid to this UNIX account to run the program
    redirect_stderr=true                                                      ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                              ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                                  ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                               ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                               ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

10 Start the service and check

On vms21.cos.com:

    [root@vms21 bin]# supervisorctl update
    kube-apiserver-26-21: added process group
    [root@vms21 bin]# supervisorctl status kube-apiserver-26-21
    kube-apiserver-26-21             RUNNING   pid 2016, uptime 0:00:57
    [root@vms21 bin]# tail /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log   # view the log
    [root@vms21 bin]# netstat -luntp | grep kube-api
    tcp        0      0 127.0.0.1:8080          0.0.0.0:*               LISTEN      2017/./kube-apiserv
    tcp6       0      0 :::6443                 :::*                    LISTEN      2017/./kube-apiserv
    [root@vms21 bin]# ps uax|grep kube-apiserver|grep -v grep
    root 2016 0.0 0.1 234904 3528 ? S 14:54 0:00 /bin/bash /opt/kubernetes/server/bin/kube-apiserver.sh
    root 2017 21.7 18.9 496864 380332 ? Sl 14:54 0:46 ./kube-apiserver --apiserver-count 2 --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log --audit-policy-file ./conf/audit.yaml --authorization-mode RBAC --client-ca-file ./cert/ca.pem --requestheader-client-ca-file ./cert/ca.pem --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --etcd-cafile ./cert/ca.pem --etcd-certfile ./cert/client.pem --etcd-keyfile ./cert/client-key.pem --etcd-servers https://192.168.26.12:2379,https://192.168.26.21:2379,https://192.168.26.22:2379 --service-account-key-file ./cert/ca-key.pem --service-cluster-ip-range 10.168.0.0/16 --service-node-port-range 3000-29999 --target-ram-mb=1024 --kubelet-client-certificate ./cert/client.pem --kubelet-client-key ./cert/client-key.pem --log-dir /data/logs/kubernetes/kube-apiserver --tls-cert-file ./cert/apiserver.pem --tls-private-key-file ./cert/apiserver-key.pem --v 2

Commands for starting and stopping apiserver:

    # supervisorctl start kube-apiserver-26-21
    # supervisorctl stop kube-apiserver-26-21
    # supervisorctl restart kube-apiserver-26-21
    # supervisorctl status kube-apiserver-26-21
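When several programs run under supervisor, scanning the status output by eye gets tedious. As a rough sketch (the helper name `not_running` is an assumption, not something supervisor provides), the status listing can be filtered for anything that is not in the RUNNING state:

```shell
#!/bin/sh
# not_running: read `supervisorctl status` output on stdin and print the
# name of every program whose state column is not RUNNING.
not_running() {
    awk 'NF && $2 != "RUNNING" { print $1 }'
}
```

Typical use would be: supervisorctl status | not_running. Empty output means every supervised program is up.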

11 Quick deployment to vms22

On vms22.cos.com:

- Copy the software and certificates, and create the directories

    [root@vms22 opt]# scp -r vms21:/opt/kubernetes-v1.18.5/ /opt
    [root@vms22 opt]# ln -s /opt/kubernetes-v1.18.5 /opt/kubernetes
    [root@vms22 opt]# ls -l /opt|grep kubernetes
    lrwxrwxrwx 1 root root 23 Jul 16 15:22 kubernetes -> /opt/kubernetes-v1.18.5
    drwxr-xr-x 4 root root 79 Jul 16 15:20 kubernetes-v1.18.5
    [root@vms22 opt]# ls -l /opt/kubernetes/server/bin
    total 458364
    drwxr-xr-x 2 root root       124 Jul 16 15:20 cert
    drwxr-xr-x 2 root root        43 Jul 16 15:20 conf
    -rwxr-xr-x 1 root root 120659968 Jul 16 15:20 kube-apiserver
    -rwxr-xr-x 1 root root      1089 Jul 16 15:20 kube-apiserver.sh
    -rwxr-xr-x 1 root root 110059520 Jul 16 15:20 kube-controller-manager
    -rwxr-xr-x 1 root root  44027904 Jul 16 15:20 kubectl
    -rwxr-xr-x 1 root root 113283800 Jul 16 15:20 kubelet
    -rwxr-xr-x 1 root root  38379520 Jul 16 15:20 kube-proxy
    -rwxr-xr-x 1 root root  42946560 Jul 16 15:20 kube-scheduler
    [root@vms22 opt]# mkdir -p /data/logs/kubernetes/kube-apiserver

- Create the supervisor configuration, using the program name kube-apiserver-26-22

    [root@vms22 opt]# vi /etc/supervisord.d/kube-apiserver.ini

    [program:kube-apiserver-26-22]
    command=/opt/kubernetes/server/bin/kube-apiserver.sh                      ; the program (relative uses PATH, can take args)
    numprocs=1                                                                ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                                      ; directory to cwd to before exec (def no cwd)
    autostart=true                                                            ; start at supervisord start (default: true)
    autorestart=true                                                          ; restart at unexpected quit (default: true)
    startsecs=30                                                              ; number of secs prog must stay running (def. 1)
    startretries=3                                                            ; max # of serial start failures (default 3)
    exitcodes=0,2                                                             ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                           ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                           ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                                 ; setuid to this UNIX account to run the program
    redirect_stderr=true                                                      ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                              ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                                  ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                               ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                               ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

- Start the service and check it, as in step 10.

12 Configure the layer-4 reverse proxy: nginx + keepalived

On vms11.cos.com and vms12.cos.com:

Install nginx and keepalived

Installing nginx

    [root@vms11 ~]# rpm -qa nginx
    [root@vms11 ~]# yum install -y nginx
    ...
    [root@vms11 ~]# rpm -qa nginx
    nginx-1.14.1-9.module_el8.0.0+184+e34fea82.x86_64

Installing keepalived

    [root@vms11 ~]# rpm -qa keepalived
    [root@vms11 ~]# yum install keepalived -y
    ...
    [root@vms11 ~]# rpm -qa keepalived
    keepalived-2.0.10-10.el8.x86_64

nginx configuration

    [root@vms11 ~]# vi /etc/nginx/nginx.conf

- Append the following at the end of the file; a stream block may only appear in the main context.

- This is only a simple nginx configuration; for production, a more carefully tuned configuration is recommended.

    stream {
        upstream kube-apiserver {
            server 192.168.26.21:6443     max_fails=3 fail_timeout=30s;
            server 192.168.26.22:6443     max_fails=3 fail_timeout=30s;
        }
        server {
            listen 8443;
            proxy_connect_timeout 2s;
            proxy_timeout 900s;
            proxy_pass kube-apiserver;
        }
    }

Check the configuration, start nginx, and test

    [root@vms11 ~]# nginx -t
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    [root@vms11 ~]# systemctl enable nginx
    Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.
    [root@vms11 ~]# systemctl start nginx
    [root@vms11 ~]# curl 127.0.0.1:8443   # repeat a few times
    Client sent an HTTP request to an HTTPS server.

keepalived configuration

Reference: https://www.cnblogs.com/zeq912/p/11065199.html

    [root@vms11 ~]# vi /etc/keepalived/check_port.sh   # vms12 needs this file as well

    #!/bin/bash
    # keepalived port-monitoring script
    # Usage: reference it from the keepalived configuration file
    #   vrrp_script check_port {                          # define a vrrp_script check
    #       script "/etc/keepalived/check_port.sh 6379"   # port to monitor
    #       interval 2                                    # check interval, in seconds
    #   }
    CHK_PORT=$1
    if [ -n "$CHK_PORT" ];then
        PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`
        if [ $PORT_PROCESS -eq 0 ];then
            echo "Port $CHK_PORT Is Not Used,End."
            #systemctl stop keepalived
            exit 1
        fi
    else
        echo "Check Port Cant Be Empty!"
    fi

With systemctl stop keepalived commented out, master and backup switch over automatically and the VIP floats on its own. With that line active, the VIP does not switch and float back automatically; an operator has to confirm and intervene.

Test:

    [root@vms11 ~]# ss -lnt|grep 8443|wc -l
    1
    [root@vms12 ~]# ss -lnt|grep 8443|wc -l
    1

Reference script (the port argument must be all digits):

    #!/bin/bash
    if [ $# -eq 1 ] && [[ $1 =~ ^[0-9]+$ ]];then
        [ $(netstat -lntp|grep ":$1 " |wc -l) -eq 0 ] && echo "[ERROR] nginx may be not running!" && exit 1 || exit 0
    else
        echo "[ERROR] need one port!"
        exit 1
    fi

chmod +x /etc/keepalived/check_port.sh
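One caveat with the `ss -lnt|grep $CHK_PORT` test is that it is a substring match, so a listener on 18443 would also satisfy a check for 8443. A stricter variant, sketched here under the assumption that the listening address is the fourth column of `ss -lnt` output, compares the port exactly. `port_listening` is a hypothetical name, not part of keepalived.

```shell
#!/bin/sh
# port_listening PORT: read `ss -lnt` output on stdin and succeed only if
# some LISTEN socket is bound to exactly PORT.
port_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            # The local address is column 4; the port is whatever follows
            # the last ":" (works for 0.0.0.0:8443, *:8443 and [::]:8443).
            n = split($4, parts, ":")
            if (parts[n] == port) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}
```

Inside a check script this could be called as: ss -lnt | port_listening "$CHK_PORT" || exit 1.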

Configure the master node (vms11):

    [root@vms11 ~]# vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id 192.168.26.11
    }
    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 8443"
        interval 2
        weight -20
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens160
        virtual_router_id 251
        priority 100
        advert_int 1
        mcast_src_ip 192.168.26.11
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            192.168.26.10
        }
    }

- interface: change this to match the actual NIC; check with ifconfig.

- On the master node, nopreempt must be added: once network jitter has caused the VIP to float away, it must not float back automatically. Analyze the cause first, then move the VIP back to the master manually. For example, after confirming the master is healthy, restart keepalived on the backup so that the VIP moves to the master.

- The keepalived log output configuration is omitted here; it needs to be handled in production.

Configure the backup node (vms12):

    [root@vms12 ~]# vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id 192.168.26.12
        script_user root
        enable_script_security
    }
    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 8443"
        interval 2
        weight -20
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 251
        mcast_src_ip 192.168.26.12
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            192.168.26.10
        }
    }
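The interplay of priority and weight in the two configurations decides which node holds the VIP. With a negative weight, keepalived lowers the instance priority by that amount while the tracked script is failing. The arithmetic can be sketched as follows; `effective_priority` is a hypothetical helper that models only the negative-weight case used here.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT STATE
# Print the VRRP priority of a node whose track_script has a negative
# WEIGHT: BASE while the check passes (STATE=ok), BASE+WEIGHT otherwise.
effective_priority() {
    if [ "$3" = "ok" ]; then
        echo "$1"
    else
        echo "$(( $1 + $2 ))"
    fi
}
```

With the values above, effective_priority 100 -20 fail gives 80 for vms11 when nginx is down, which is below the 90 configured on vms12, so the VIP moves to vms12. Once nginx is back, vms11 returns to 100.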

Start keepalived and check that the VIP (192.168.26.10) appears

    [root@vms11 ~]# systemctl start keepalived ; systemctl enable keepalived
    Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.
    [root@vms12 ~]# systemctl start keepalived ; systemctl enable keepalived
    Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.
    [root@vms11 ~]# netstat -luntp | grep 8443
    tcp        0      0 0.0.0.0:8443            0.0.0.0:*               LISTEN      24891/nginx: master
    [root@vms12 ~]# netstat -luntp | grep 8443
    tcp        0      0 0.0.0.0:8443            0.0.0.0:*               LISTEN      930/nginx: master p
    [root@vms11 ~]# ip addr show ens160
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:ce:4b:d4 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.11/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet 192.168.26.10/32 scope global ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fece:4bd4/64 scope link
           valid_lft forever preferred_lft forever

Test the (automatic) master/backup switchover and VIP floating

- On vms11 (master): stop nginx; the VIP (192.168.26.10) floats away

    [root@vms11 ~]# netstat -luntp | grep 8443
    tcp        0      0 0.0.0.0:8443            0.0.0.0:*               LISTEN      20526/nginx: master
    [root@vms11 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:ce:4b:d4 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.11/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet 192.168.26.10/32 scope global ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fece:4bd4/64 scope link
           valid_lft forever preferred_lft forever
    [root@vms11 ~]# nginx -s stop
    [root@vms11 ~]# netstat -luntp | grep 8443
    [root@vms11 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:ce:4b:d4 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.11/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fece:4bd4/64 scope link
           valid_lft forever preferred_lft forever

- On vms12 (backup): the VIP has floated to the backup

    [root@vms12 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:40:05:87 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.12/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet 192.168.26.10/32 scope global ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe40:587/64 scope link
           valid_lft forever preferred_lft forever

- On vms11 (master): start nginx; after a moment the VIP floats back automatically

    [root@vms11 ~]# nginx
    [root@vms11 ~]# netstat -luntp | grep 8443
    tcp        0      0 0.0.0.0:8443            0.0.0.0:*               LISTEN      21045/nginx: master
    [root@vms11 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:ce:4b:d4 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.11/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet 192.168.26.10/32 scope global ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fece:4bd4/64 scope link
           valid_lft forever preferred_lft forever

- On vms12 (backup): the VIP has automatically floated back to the master

    [root@vms12 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:40:05:87 brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.12/24 brd 192.168.26.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe40:587/64 scope link
           valid_lft forever preferred_lft forever

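After nginx on the master is killed, the VIP floats to the backup and the service stays reachable; once nginx on the master is started again, the VIP floats back to the master. This fail-back happens even though nopreempt is set on vms11: according to the keepalived documentation, nopreempt only takes effect when the instance's initial state is BACKUP, and vms11 is configured with state MASTER.

Production environments usually require the opposite: after the master recovers from a failure, keepalived must not reclaim the VIP automatically, and the switch happens only after an operator has confirmed it.

If the VIP has moved, then once the master has recovered, first confirm that the proxied port on the master is listening and the service is healthy, and only then restart keepalived (master and backup) so that the VIP returns to the master.

For that behavior the check script has to be changed. Reference script: /etc/keepalived/check_keepalived.sh

    #!/bin/bash
    NGINX_SBIN=`which nginx`
    NGINX_PORT=$1
    function check_nginx(){
        # Use the port passed as $1 rather than a hard-coded 8443
        NGINX_STATUS=`nmap localhost -p ${NGINX_PORT} | grep "${NGINX_PORT}/tcp open" | awk '{print $2}'`
        NGINX_PROCESS=`ps -ef | grep nginx|grep -v grep|wc -l`
    }
    check_nginx
    if [ "$NGINX_STATUS" != "open" -o $NGINX_PROCESS -lt 2 ]
    then
        # Try restarting nginx once before giving up
        ${NGINX_SBIN} -s stop
        ${NGINX_SBIN}
        sleep 3
        check_nginx
        if [ "$NGINX_STATUS" != "open" -o $NGINX_PROCESS -lt 2 ];then
            systemctl stop keepalived
        fi
    fi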

kube-controller-manager

1 Cluster planning

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | controller-manager | 192.168.26.21 |
| vms22.cos.com | controller-manager | 192.168.26.22 |

Note: this document uses vms21.cos.com as the example; the other compute node is deployed the same way.

controller-manager is configured to call only the apiserver on the local machine, through 127.0.0.1, so no SSL certificate is configured for it.

2 Create the startup script

On vms21.cos.com:

    [root@vms21 bin]# vi /opt/kubernetes/server/bin/kube-controller-manager.sh

    #!/bin/sh
    ./kube-controller-manager \
      --cluster-cidr 172.26.0.0/16 \
      --leader-elect=true \
      --log-dir /data/logs/kubernetes/kube-controller-manager \
      --master http://127.0.0.1:8080 \
      --service-account-private-key-file ./cert/ca-key.pem \
      --service-cluster-ip-range 10.168.0.0/16 \
      --root-ca-file ./cert/ca.pem \
      --v 2

[root@vms21 bin]# mkdir -p /data/logs/kubernetes/kube-controller-manager

[root@vms21 bin]# chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh

3 Create the supervisor configuration

    [root@vms21 bin]# vi /etc/supervisord.d/kube-controller-manager.ini

    [program:kube-controller-manager-26-21]
    command=/opt/kubernetes/server/bin/kube-controller-manager.sh                        ; the program (relative uses PATH, can take args)
    numprocs=1                                                                           ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                                                 ; directory to cwd to before exec (def no cwd)
    autostart=true                                                                       ; start at supervisord start (default: true)
    autorestart=true                                                                     ; restart at unexpected quit (default: true)
    startsecs=30                                                                         ; number of secs prog must stay running (def. 1)
    startretries=3                                                                       ; max # of serial start failures (default 3)
    exitcodes=0,2                                                                        ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                                      ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                                      ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                                            ; setuid to this UNIX account to run the program
    redirect_stderr=true                                                                 ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log   ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                                         ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                                             ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                                          ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                                          ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Note: on vms22, change the section header to [program:kube-controller-manager-26-22]

4 Start the service and check

    [root@vms21 bin]# supervisorctl update
    kube-controller-manager-26-21: added process group
    [root@vms21 bin]# supervisorctl status
    etcd-server-26-21                RUNNING   pid 1030, uptime 6:15:58
    kube-apiserver-26-21             RUNNING   pid 1031, uptime 6:15:58
    kube-controller-manager-26-21    RUNNING   pid 1659, uptime 0:00:35

    [root@vms22 bin]# supervisorctl update
    kube-controller-manager-26-22: added process group
    [root@vms22 bin]# supervisorctl status
    etcd-server-26-22                RUNNING   pid 1045, uptime 6:20:57
    kube-apiserver-26-22             RUNNING   pid 1046, uptime 6:20:57
    kube-controller-manager-26-22    RUNNING   pid 1660, uptime 0:00:37

kube-scheduler

1 Cluster planning

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | kube-scheduler | 192.168.26.21 |
| vms22.cos.com | kube-scheduler | 192.168.26.22 |

Note: this document uses vms21.cos.com as the example; the other compute node is deployed the same way.

kube-scheduler is configured to call only the apiserver on the local machine, through 127.0.0.1, so no SSL certificate is configured for it.

2 Create the startup script

On vms21.cos.com:

    [root@vms21 bin]# vi /opt/kubernetes/server/bin/kube-scheduler.sh

    #!/bin/sh
    ./kube-scheduler \
      --leader-elect \
      --log-dir /data/logs/kubernetes/kube-scheduler \
      --master http://127.0.0.1:8080 \
      --v 2

[root@vms21 bin]# chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

[root@vms21 bin]# mkdir -p /data/logs/kubernetes/kube-scheduler

3 Create the supervisor configuration

    [root@vms21 bin]# vi /etc/supervisord.d/kube-scheduler.ini

    [program:kube-scheduler-26-21]
    command=/opt/kubernetes/server/bin/kube-scheduler.sh                      ; the program (relative uses PATH, can take args)
    numprocs=1                                                                ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                                      ; directory to cwd to before exec (def no cwd)
    autostart=true                                                            ; start at supervisord start (default: true)
    autorestart=true                                                          ; restart at unexpected quit (default: true)
    startsecs=30                                                              ; number of secs prog must stay running (def. 1)
    startretries=3                                                            ; max # of serial start failures (default 3)
    exitcodes=0,2                                                             ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                           ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                           ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                                 ; setuid to this UNIX account to run the program
    redirect_stderr=true                                                      ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                              ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                                  ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                               ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                               ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Note: on vms22, change the section header to [program:kube-scheduler-26-22]

4 Start the service and check

    [root@vms21 bin]# supervisorctl update
    kube-scheduler-26-21: added process group
    [root@vms21 bin]# supervisorctl status
    etcd-server-26-21                RUNNING   pid 1030, uptime 6:36:44
    kube-apiserver-26-21             RUNNING   pid 1031, uptime 6:36:44
    kube-controller-manager-26-21    RUNNING   pid 1659, uptime 0:21:21
    kube-scheduler-26-21             RUNNING   pid 1726, uptime 0:00:32

    [root@vms22 bin]# supervisorctl update
    kube-scheduler-26-22: added process group
    [root@vms22 bin]# supervisorctl status
    etcd-server-26-22                RUNNING   pid 1045, uptime 6:38:26
    kube-apiserver-26-22             RUNNING   pid 1046, uptime 6:38:26
    kube-controller-manager-26-22    RUNNING   pid 1660, uptime 0:18:06
    kube-scheduler-26-22             RUNNING   pid 1688, uptime 0:00:50

5 Check the state of the control-plane nodes

At this point the control-plane components are fully deployed.

    [root@vms21 bin]# ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl   # create a symlink for kubectl
    [root@vms21 bin]# kubectl get cs
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
    etcd-2               Healthy   {"health":"true"}
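For scripted health checks, the component status table can be reduced to the list of components that are not Healthy. This is only a sketch: `unhealthy_components` is a hypothetical helper, and it assumes the column layout shown in the output above (NAME first, STATUS second, one header line).

```shell
#!/bin/sh
# unhealthy_components: read `kubectl get cs` output on stdin and print
# the NAME of every component whose STATUS column is not "Healthy".
unhealthy_components() {
    awk 'NR > 1 && NF && $2 != "Healthy" { print $1 }'
}
```

It could be used as: kubectl get cs | unhealthy_components. No output means all control-plane components and etcd members reported Healthy.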

kubelet

1 Cluster planning

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | kubelet | 192.168.26.21 |
| vms22.cos.com | kubelet | 192.168.26.22 |

Note: this document uses vms21.cos.com as the example; the other compute nodes are deployed the same way.

2 Issue the kubelet certificate

On the ops host vms200.cos.com:

Create the JSON configuration file for the certificate signing request (CSR)

In /opt/certs, add the IP of every machine that might run kubelet to hosts:

    [root@vms200 certs]# vi kubelet-csr.json

    {
        "CN": "k8s-kubelet",
        "hosts": [
            "127.0.0.1",
            "192.168.26.10",
            "192.168.26.21",
            "192.168.26.22",
            "192.168.26.23",
            "192.168.26.24",
            "192.168.26.25",
            "192.168.26.26"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "op",
                "OU": "ops"
            }
        ]
    }

Generate the kubelet certificate and private key

    [root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet
    2020/07/18 19:14:41 [INFO] generate received request
    2020/07/18 19:14:41 [INFO] received CSR
    2020/07/18 19:14:41 [INFO] generating key: rsa-2048
    2020/07/18 19:14:41 [INFO] encoded CSR
    2020/07/18 19:14:41 [INFO] signed certificate with serial number 308150770320539429254426692017160372666438070824
    2020/07/18 19:14:41 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

Check the generated certificate and private key

    [root@vms200 certs]# ls kubelet* -l
    -rw-r--r-- 1 root root 1098 Jul 18 19:14 kubelet.csr
    -rw-r--r-- 1 root root  446 Jul 18 19:09 kubelet-csr.json
    -rw------- 1 root root 1675 Jul 18 19:14 kubelet-key.pem
    -rw-r--r-- 1 root root 1448 Jul 18 19:14 kubelet.pem

3 Copy the certificates to each compute node and create the configuration

On vms21.cos.com:

Copy the certificate and private key; note that the private key file must have mode 600

    [root@vms21 ~]# cd /opt/kubernetes/server/bin/cert/
    [root@vms21 cert]# scp vms200:/opt/certs/kubelet.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/kubelet-key.pem .
    [root@vms21 cert]# ll
    total 32
    -rw------- 1 root root 1679 Jul 16 14:14 apiserver-key.pem
    -rw-r--r-- 1 root root 1594 Jul 16 14:14 apiserver.pem
    -rw------- 1 root root 1679 Jul 16 14:13 ca-key.pem
    -rw-r--r-- 1 root root 1338 Jul 16 14:12 ca.pem
    -rw------- 1 root root 1675 Jul 16 14:13 client-key.pem
    -rw-r--r-- 1 root root 1363 Jul 16 14:13 client.pem
    -rw------- 1 root root 1675 Jul 18 20:16 kubelet-key.pem
    -rw-r--r-- 1 root root 1448 Jul 18 20:16 kubelet.pem
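The mode-600 requirement on private keys can be verified mechanically after copying. A small sketch follows; `key_mode_ok` is a hypothetical helper, and it assumes GNU coreutils `stat` with the `-c` option, as found on CentOS 8.

```shell
#!/bin/sh
# key_mode_ok FILE: succeed only when FILE has exactly mode 600.
# Assumption: GNU stat, where -c '%a' prints the octal permission bits.
key_mode_ok() {
    [ "$(stat -c '%a' "$1")" = "600" ]
}
```

A loop such as: for f in /opt/kubernetes/server/bin/cert/*-key.pem; do key_mode_ok "$f" || echo "fix mode: $f"; done would flag any key that needs chmod 600.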

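Create the configuration

This step only needs to be done once. Its result is the local file kubelet.kubeconfig (it is not stored in etcd), which can then be copied to the other compute nodes.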

- set-cluster: define the cluster to connect to; several k8s clusters can be defined

      [root@vms21 ~]# cd /opt/kubernetes/server/bin/conf
      [root@vms21 conf]# kubectl config set-cluster myk8s \
        --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
        --embed-certs=true \
        --server=https://192.168.26.10:8443 \
        --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

Output: Cluster "myk8s" set.

- set-credentials: define the user account, i.e. the client certificate and private key used to log in; several sets of credentials can be defined

      [root@vms21 conf]# kubectl config set-credentials k8s-node \
        --client-certificate=/opt/kubernetes/server/bin/cert/client.pem \
        --client-key=/opt/kubernetes/server/bin/cert/client-key.pem \
        --embed-certs=true \
        --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

Output: User "k8s-node" set.

- set-context: define a context, i.e. which account is used with which cluster

      [root@vms21 conf]# kubectl config set-context myk8s-context \
        --cluster=myk8s \
        --user=k8s-node \
        --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

Output: Context "myk8s-context" created.

- use-context: select the context that is currently in use

      [root@vms21 conf]# kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

Output: Switched to context "myk8s-context".
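The four `kubectl config` steps are identical for every component; only the user name, the client certificate, and the output file change. The following is a minimal sketch, assuming a hypothetical helper named `kubeconfig_cmds` (not part of kubectl) that only prints the commands so they can be reviewed before being run. The certificate directory and the VIP are the ones used in this document.

```shell
#!/bin/sh
# Hypothetical helper: print the four kubectl config commands for one
# component. Nothing is executed; pipe the output to sh once reviewed.
CERT_DIR=/opt/kubernetes/server/bin/cert
SERVER=https://192.168.26.10:8443

kubeconfig_cmds() {
    # $1 = user name, $2 = certificate base name, $3 = kubeconfig file
    echo "kubectl config set-cluster myk8s --certificate-authority=${CERT_DIR}/ca.pem --embed-certs=true --server=${SERVER} --kubeconfig=$3"
    echo "kubectl config set-credentials $1 --client-certificate=${CERT_DIR}/$2.pem --client-key=${CERT_DIR}/$2-key.pem --embed-certs=true --kubeconfig=$3"
    echo "kubectl config set-context myk8s-context --cluster=myk8s --user=$1 --kubeconfig=$3"
    echo "kubectl config use-context myk8s-context --kubeconfig=$3"
}

# The kubelet case from this section:
kubeconfig_cmds k8s-node client kubelet.kubeconfig
```

Printing instead of executing keeps the helper safe to try on any machine; `kubeconfig_cmds k8s-node client kubelet.kubeconfig | sh` would run the commands for real.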

Authorize the k8s-node user

This step only needs to be run on one master node: the binding is stored in etcd, so it applies to the whole cluster no matter where it is created.

Bind the user k8s-node to the cluster role system:node so that k8s-node has the permissions of a compute node.

Create the resource manifest k8s-node.yaml

    [root@vms21 conf]# vim k8s-node.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: k8s-node
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:node
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: k8s-node

Apply the manifest and check the result

    [root@vms21 conf]# kubectl apply -f k8s-node.yaml
    clusterrolebinding.rbac.authorization.k8s.io/k8s-node created
    [root@vms21 conf]# kubectl get clusterrolebinding k8s-node
    NAME       ROLE                      AGE
    k8s-node   ClusterRole/system:node   21s

Prepare the pause image

Push the pause image to the private Harbor registry. Run this on vms200 only:

    [root@vms200 ~]# docker pull kubernetes/pause
    [root@vms200 ~]# docker image tag kubernetes/pause:latest harbor.op.com/public/pause:latest
    [root@vms200 ~]# docker login -u admin harbor.op.com
    Password:
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store

    Login Succeeded
    [root@vms200 ~]# docker image push harbor.op.com/public/pause:latest
    The push refers to repository [harbor.op.com/public/pause]
    5f70bf18a086: Mounted from public/nginx
    e16a89738269: Pushed
    latest: digest: sha256:b31bfb4d0213f254d361e0079deaaebefa4f82ba7aa76ef82e90b4935ad5b105 size: 938

Log in to harbor.op.com to confirm that the image is there.

Retrieve the login credentials of the private registry

    [root@vms200 ~]# ls /root/.docker/config.json
    /root/.docker/config.json
    [root@vms200 ~]# cat /root/.docker/config.json
    {
        "auths": {
            "harbor.op.com": {
                "auth": "YWRtaW46SGFyYm9yMTI1NDM="
            }
        },
        "HttpHeaders": {
            "User-Agent": "Docker-Client/19.03.12 (linux)"
        }
    }
    [root@vms200 ~]# echo "YWRtaW46SGFyYm9yMTI1NDM=" | base64 -d
    admin:Harbor12543

Note: alternatively, run docker login harbor.op.com on each compute node and enter the user name and password.
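The `auth` value in config.json is only base64-encoded, not encrypted, which is why the manual `base64 -d` step works. As a minimal sketch, the lookup and decoding can be combined in one function; `docker_auth_decode` is a hypothetical name, and the sed expression assumes the value contains no escaped quotes. It uses sed rather than jq to avoid an extra dependency.

```shell
#!/bin/sh
# Hypothetical helper: print the decoded "user:password" taken from the
# first line of a Docker config.json that carries an "auth" entry.
docker_auth_decode() {
    # $1 = path to config.json (defaults to root's Docker config)
    sed -n 's/.*"auth"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' \
        "${1:-/root/.docker/config.json}" | head -n 1 | base64 -d
}

# Usage on vms200: docker_auth_decode /root/.docker/config.json
```

Anyone who can read this file can recover the registry password, so keeping it at mode 600 (or configuring a credential helper, as the docker warning suggests) is worth considering.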

Create the kubelet startup script

Create the script and start kubelet on the node hosts vms21 and vms22.

On vms21.cos.com:

    [root@vms21 bin]# vi /opt/kubernetes/server/bin/kubelet.sh

    #!/bin/sh
    ./kubelet \
      --anonymous-auth=false \
      --cgroup-driver systemd \
      --cluster-dns 10.168.0.2 \
      --cluster-domain cluster.local \
      --runtime-cgroups=/systemd/system.slice \
      --kubelet-cgroups=/systemd/system.slice \
      --fail-swap-on="false" \
      --client-ca-file ./cert/ca.pem \
      --tls-cert-file ./cert/kubelet.pem \
      --tls-private-key-file ./cert/kubelet-key.pem \
      --hostname-override vms21.cos.com \
      --image-gc-high-threshold 20 \
      --image-gc-low-threshold 10 \
      --kubeconfig ./conf/kubelet.kubeconfig \
      --log-dir /data/logs/kubernetes/kube-kubelet \
      --pod-infra-container-image harbor.op.com/public/pause:latest \
      --root-dir /data/kubelet \
      --authentication-token-webhook=true

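Notes:

- The kubelet startup script differs slightly between hosts; adjust it when deploying the other nodes. In --hostname-override, the host name vms21.cos.com can be replaced by the IP 192.168.26.21.
- --authentication-token-webhook=true must be set if metrics-server is going to be deployed.

On vms22.cos.com, use: --hostname-override vms22.cos.com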
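Since the scripts for the two nodes differ only in the value after `--hostname-override`, the copy for the second node can be derived from the first instead of being edited by hand. A minimal sketch, assuming a hypothetical helper `retarget_host`; it writes to standard output and leaves the source file untouched.

```shell
#!/bin/sh
# Hypothetical helper: print a start script with the value that follows
# --hostname-override replaced by a new host name.
retarget_host() {
    # $1 = script file, $2 = new host name (must not contain "/" or "&")
    sed -E "s/(--hostname-override[ =])[^ ]+/\1$2/" "$1"
}

# Usage: retarget_host kubelet.sh vms22.cos.com > /tmp/kubelet.sh.vms22
```

The same call works for kube-proxy.sh, which uses the same flag.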

Check the configuration, set permissions, and create the directories

    [root@vms21 bin]# ls -l /opt/kubernetes/server/bin/conf/kubelet.kubeconfig
    -rw------- 1 root root 6187 Jul 18 20:46 /opt/kubernetes/server/bin/conf/kubelet.kubeconfig
    [root@vms21 bin]# chmod +x /opt/kubernetes/server/bin/kubelet.sh
    [root@vms21 bin]# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

4 Create the supervisor configuration

    [root@vms21 bin]# vi /etc/supervisord.d/kube-kubelet.ini

    [program:kube-kubelet-26-21]
    command=/opt/kubernetes/server/bin/kubelet.sh  ; the program (relative uses PATH, can take args)
    numprocs=1                                     ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin           ; directory to cwd to before exec (def no cwd)
    autostart=true                                 ; start at supervisord start (default: true)
    autorestart=true                               ; restart at unexpected quit (default: true)
    startsecs=30                                   ; number of secs prog must stay running (def. 1)
    startretries=3                                 ; max # of serial start failures (default 3)
    exitcodes=0,2                                  ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                ; signal used to kill process (default TERM)
    stopwaitsecs=10                                ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                      ; setuid to this UNIX account to run the program
    redirect_stderr=true                           ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                   ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                       ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                    ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                    ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

On vms22.cos.com, use: [program:kube-kubelet-26-22]
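The program names in this document follow one pattern: the component name plus the last two octets of the node IP (192.168.26.21 gives kube-kubelet-26-21). A minimal sketch of that naming rule, with `program_name` as a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: build the supervisor program name used in this
# document from a component name and the node's IPv4 address.
program_name() {
    # $1 = component (e.g. kube-kubelet), $2 = IPv4 address
    suffix=$(printf '%s\n' "$2" | awk -F. '{ print $3 "-" $4 }')
    printf '%s-%s\n' "$1" "$suffix"
}

program_name kube-kubelet 192.168.26.22   # prints kube-kubelet-26-22
```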

5 Start the service and check

Output on vms21.cos.com after a successful deployment:

    [root@vms21 bin]# supervisorctl update
    kube-kubelet-26-21: added process group
    [root@vms21 bin]# supervisorctl status
    etcd-server-26-21                RUNNING   pid 1040, uptime 3:38:37
    kube-apiserver-26-21             RUNNING   pid 1041, uptime 3:38:37
    kube-controller-manager-26-21    RUNNING   pid 1043, uptime 3:38:37
    kube-kubelet-26-21               RUNNING   pid 1685, uptime 0:00:43
    kube-scheduler-26-21             RUNNING   pid 1354, uptime 3:15:36
    [root@vms21 bin]# kubectl get nodes
    NAME            STATUS   ROLES    AGE    VERSION
    vms21.cos.com   Ready    <none>   107s   v1.18.5

Output on vms22.cos.com after a successful deployment:

    [root@vms22 conf]# supervisorctl update
    kube-kubelet-26-22: added process group
    [root@vms22 conf]# supervisorctl status
    etcd-server-26-22                RUNNING   pid 1038, uptime 4:21:23
    kube-apiserver-26-22             RUNNING   pid 1715, uptime 0:48:01
    kube-controller-manager-26-22    RUNNING   pid 1729, uptime 0:47:10
    kube-kubelet-26-22               STARTING  # wait 30s (startsecs=30)
    kube-scheduler-26-22             RUNNING   pid 1739, uptime 0:45:15
    [root@vms22 conf]# supervisorctl status
    etcd-server-26-22                RUNNING   pid 1038, uptime 4:21:37
    kube-apiserver-26-22             RUNNING   pid 1715, uptime 0:48:15
    kube-controller-manager-26-22    RUNNING   pid 1729, uptime 0:47:24
    kube-kubelet-26-22               RUNNING   pid 1833, uptime 0:00:39
    kube-scheduler-26-22             RUNNING   pid 1739, uptime 0:45:29
    [root@vms22 conf]# kubectl get nodes
    NAME            STATUS   ROLES    AGE   VERSION
    vms21.cos.com   Ready    <none>   18m   v1.18.5
    vms22.cos.com   Ready    <none>   48s   v1.18.5
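Because startsecs=30, a freshly added program stays in STARTING for about 30 seconds before supervisor reports RUNNING. Rather than rerunning `supervisorctl status` by hand, the wait can be scripted. A minimal sketch, with `wait_running` as a hypothetical helper name and an arbitrary default timeout of 60 seconds:

```shell
#!/bin/sh
# Hypothetical helper: poll `supervisorctl status <program>` once per
# second until it reports RUNNING. Returns 0 on success, 1 on timeout.
wait_running() {
    # $1 = program name, $2 = timeout in seconds (default 60)
    remaining=${2:-60}
    while [ "$remaining" -gt 0 ]; do
        if supervisorctl status "$1" | grep -q ' RUNNING '; then
            return 0
        fi
        sleep 1
        remaining=$((remaining - 1))
    done
    return 1
}

# Usage: wait_running kube-kubelet-26-22 90 && kubectl get nodes
```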

Check all nodes and label them

    [root@vms21 bin]# kubectl label nodes vms21.cos.com node-role.kubernetes.io/master=
    [root@vms21 bin]# kubectl label nodes vms21.cos.com node-role.kubernetes.io/worker=
    [root@vms21 bin]# kubectl label nodes vms22.cos.com node-role.kubernetes.io/worker=
    [root@vms21 bin]# kubectl label nodes vms22.cos.com node-role.kubernetes.io/master=
    [root@vms21 bin]# kubectl get nodes
    NAME            STATUS   ROLES           AGE   VERSION
    vms21.cos.com   Ready    master,worker   28m   v1.18.5
    vms22.cos.com   Ready    master,worker   11m   v1.18.5

kube-proxy

1 Cluster planning

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | kube-proxy | 192.168.26.21 |
| vms22.cos.com | kube-proxy | 192.168.26.22 |

Note: this document uses vms21.cos.com as the example; the other compute nodes are deployed in the same way.

2 Issue the kube-proxy certificate

On the ops host vms200.cos.com:

Create the JSON configuration file for the certificate signing request (CSR)

    [root@vms200 ~]# cd /opt/certs/
    [root@vms200 certs]# vi kube-proxy-csr.json

    {
        "CN": "system:kube-proxy",
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "op",
                "OU": "ops"
            }
        ]
    }

Note: Kubernetes uses the CN as the user name. system:kube-proxy is the user that the built-in system:node-proxier cluster role is bound to.

Generate the kube-proxy certificate and private key

    [root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client
    2020/07/18 23:44:36 [INFO] generate received request
    2020/07/18 23:44:36 [INFO] received CSR
    2020/07/18 23:44:36 [INFO] generating key: rsa-2048
    2020/07/18 23:44:36 [INFO] encoded CSR
    2020/07/18 23:44:36 [INFO] signed certificate with serial number 384299636728322155196765013879722654241403479614
    2020/07/18 23:44:36 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

Because kube-proxy authenticates as the user system:kube-proxy, it cannot reuse the generic client certificate; a certificate of its own has to be issued.

Check the generated certificate and private key

    [root@vms200 certs]# ls kube-proxy-c* -l
    -rw-r--r-- 1 root root 1005 Jul 18 23:44 kube-proxy-client.csr
    -rw------- 1 root root 1675 Jul 18 23:44 kube-proxy-client-key.pem
    -rw-r--r-- 1 root root 1375 Jul 18 23:44 kube-proxy-client.pem
    -rw-r--r-- 1 root root  267 Jul 18 23:39 kube-proxy-csr.json

3 Copy the certificates to each compute node and create the configuration

On vms21.cos.com:

Copy the certificate and private key. Make sure the private key file has mode 600.

    [root@vms21 ~]# cd /opt/kubernetes/server/bin/cert
    [root@vms21 cert]# scp vms200:/opt/certs/kube-proxy-client.pem .
    [root@vms21 cert]# scp vms200:/opt/certs/kube-proxy-client-key.pem .
    [root@vms21 cert]# ls -l
    total 40
    -rw------- 1 root root 1679 Jul 16 14:14 apiserver-key.pem
    -rw-r--r-- 1 root root 1594 Jul 16 14:14 apiserver.pem
    -rw------- 1 root root 1679 Jul 16 14:13 ca-key.pem
    -rw-r--r-- 1 root root 1338 Jul 16 14:12 ca.pem
    -rw------- 1 root root 1675 Jul 16 14:13 client-key.pem
    -rw-r--r-- 1 root root 1363 Jul 16 14:13 client.pem
    -rw------- 1 root root 1675 Jul 18 20:16 kubelet-key.pem
    -rw-r--r-- 1 root root 1448 Jul 18 20:16 kubelet.pem
    -rw------- 1 root root 1675 Jul 18 23:54 kube-proxy-client-key.pem
    -rw-r--r-- 1 root root 1375 Jul 18 23:53 kube-proxy-client.pem

Create the configuration

    [root@vms21 cert]# cd /opt/kubernetes/server/bin/conf

(1) set-cluster

    [root@vms21 conf]# kubectl config set-cluster myk8s \
      --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
      --embed-certs=true \
      --server=https://192.168.26.10:8443 \
      --kubeconfig=kube-proxy.kubeconfig

Adjust --server=https://192.168.26.10:8443 as needed; this IP is the keepalived VIP.

(2) set-credentials

    [root@vms21 conf]# kubectl config set-credentials kube-proxy \
      --client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \
      --client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig

(3) set-context

    [root@vms21 conf]# kubectl config set-context myk8s-context \
      --cluster=myk8s \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig

(4) use-context

    [root@vms21 conf]# kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

(5) Copy kube-proxy.kubeconfig to the conf directory on vms22

    [root@vms21 conf]# scp kube-proxy.kubeconfig vms22:/opt/kubernetes/server/bin/conf/

When deploying vms22, copy this file directly. Steps (1) to (4) do not have to be repeated, because the whole configuration, including the embedded certificates, is contained in the kubeconfig file.

4 Create the kube-proxy startup script

On vms21.cos.com:

    [root@vms21 bin]# vi /opt/kubernetes/server/bin/kube-proxy.sh

    #!/bin/sh
    ./kube-proxy \
      --cluster-cidr 172.26.0.0/16 \
      --hostname-override vms21.cos.com \
      --proxy-mode=ipvs \
      --ipvs-scheduler=nq \
      --kubeconfig ./conf/kube-proxy.kubeconfig

Note: the kube-proxy startup script differs slightly between hosts; adjust it when deploying the other nodes. The script selects ipvs mode; if no mode is set, iptables is used.

kube-proxy has three traffic-forwarding modes: userspace, iptables, and ipvs. Of these, ipvs performs best.

When deploying vms22, change the flag to --hostname-override vms22.cos.com

Load the ipvs modules

    [root@vms21 bin]# lsmod |grep ip_vs
    [root@vms21 bin]# vi /root/ipvs.sh

    #!/bin/bash
    ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
    for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
    do
      /sbin/modinfo -F filename $i &>/dev/null
      if [ $? -eq 0 ];then
        /sbin/modprobe $i
      fi
    done

    [root@vms21 bin]# chmod +x /root/ipvs.sh
    [root@vms21 bin]# sh /root/ipvs.sh
    [root@vms21 bin]# lsmod |grep ip_vs
    ip_vs_wrr              16384  0
    ip_vs_wlc              16384  0
    ip_vs_sh               16384  0
    ip_vs_sed              16384  0
    ip_vs_rr               16384  0
    ip_vs_pe_sip           16384  0
    nf_conntrack_sip       32768  1 ip_vs_pe_sip
    ip_vs_ovf              16384  0
    ip_vs_nq               16384  0
    ip_vs_lc               16384  0
    ip_vs_lblcr            16384  0
    ip_vs_lblc             16384  0
    ip_vs_ftp              16384  0
    ip_vs_fo               16384  0
    ip_vs_dh               16384  0
    ip_vs                 172032  28 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_ovf,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_pe_sip,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp
    nf_defrag_ipv6         20480  1 ip_vs
    nf_nat                 36864  2 nf_nat_ipv4,ip_vs_ftp
    nf_conntrack          155648  8 xt_conntrack,nf_conntrack_ipv4,nf_nat,ipt_MASQUERADE,nf_nat_ipv4,nf_conntrack_sip,nf_conntrack_netlink,ip_vs
    libcrc32c              16384  4 nf_conntrack,nf_nat,xfs,ip_vs

Alternatively, load the ipvs modules directly with a one-line loop:

    [root@vms22 cert]# for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done
    ip_vs_dh
    ip_vs_fo
    ip_vs_ftp
    ip_vs
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_lc
    ip_vs_nq
    ip_vs_ovf
    ip_vs_pe_sip
    ip_vs_rr
    ip_vs_sed
    ip_vs_sh
    ip_vs_wlc
    ip_vs_wrr
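Before kube-proxy is started in ipvs mode, it is worth confirming that the modules are actually loaded. A minimal sketch, with `missing_ipvs_mods` as a hypothetical helper that reads `lsmod` output on standard input. The module list is an assumption for this setup (ip_vs itself, the nq scheduler chosen in kube-proxy.sh, and nf_conntrack) and should be adjusted to the schedulers in use.

```shell
#!/bin/sh
# Hypothetical helper: read `lsmod` output on stdin and print every
# required module that is not loaded. Returns 0 if none is missing.
REQUIRED_MODS="ip_vs ip_vs_nq nf_conntrack"   # assumed list, adjust as needed

missing_ipvs_mods() {
    loaded=$(awk '{ print $1 }')   # first column of lsmod = module name
    rc=0
    for m in $REQUIRED_MODS; do
        if ! printf '%s\n' "$loaded" | grep -qx "$m"; then
            echo "$m"
            rc=1
        fi
    done
    return $rc
}

# Usage on a node: lsmod | missing_ipvs_mods || echo "load the modules first"
```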

5 Check the configuration and permissions, and create the log directory

On vms21.cos.com:

    [root@vms21 bin]# ls -l /opt/kubernetes/server/bin/conf/|grep kube-proxy
    -rw------- 1 root root 6207 Jul 19 00:03 kube-proxy.kubeconfig
    [root@vms21 bin]# chmod +x /opt/kubernetes/server/bin/kube-proxy.sh
    [root@vms21 bin]# mkdir -p /data/logs/kubernetes/kube-proxy

6 Create the supervisor configuration

On vms21.cos.com:

    [root@vms21 bin]# vi /etc/supervisord.d/kube-proxy.ini

    [program:kube-proxy-26-21]
    command=/opt/kubernetes/server/bin/kube-proxy.sh  ; the program (relative uses PATH, can take args)
    numprocs=1                                        ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin              ; directory to cwd to before exec (def no cwd)
    autostart=true                                    ; start at supervisord start (default: true)
    autorestart=true                                  ; restart at unexpected quit (default: true)
    startsecs=30                                      ; number of secs prog must stay running (def. 1)
    startretries=3                                    ; max # of serial start failures (default 3)
    exitcodes=0,2                                     ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                   ; signal used to kill process (default TERM)
    stopwaitsecs=10                                   ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                         ; setuid to this UNIX account to run the program
    redirect_stderr=true                              ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                      ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                          ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                       ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                       ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Note: on vms22, change the section name to [program:kube-proxy-26-22]

7 Start the service and check

Output on vms21.cos.com after a successful deployment:

    [root@vms21 bin]# supervisorctl update
    kube-proxy-26-21: added process group
    [root@vms21 bin]# supervisorctl status
    etcd-server-26-21                RUNNING   pid 1040, uptime 5:57:33
    kube-apiserver-26-21             RUNNING   pid 5472, uptime 2:02:14
    kube-controller-manager-26-21    RUNNING   pid 5695, uptime 2:01:09
    kube-kubelet-26-21               RUNNING   pid 14382, uptime 1:31:04
    kube-proxy-26-21                 RUNNING   pid 31874, uptime 0:01:30
    kube-scheduler-26-21             RUNNING   pid 5864, uptime 2:00:23

    [root@vms21 bin]# yum install ipvsadm -y
    ...
    [root@vms21 bin]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.168.0.1:443 nq
      -> 192.168.26.21:6443           Masq    1      0          0
      -> 192.168.26.22:6443           Masq    1      0          0

Output on vms22.cos.com after a successful deployment:

    [root@vms22 cert]# supervisorctl update
    kube-proxy-26-22: added process group
    [root@vms22 cert]# supervisorctl status
    etcd-server-26-22                RUNNING   pid 1038, uptime 12:44:43
    kube-apiserver-26-22             RUNNING   pid 1715, uptime 9:11:21
    kube-controller-manager-26-22    RUNNING   pid 1729, uptime 9:10:30
    kube-kubelet-26-22               RUNNING   pid 1833, uptime 8:23:45
    kube-proxy-26-22                 RUNNING   pid 97223, uptime 0:00:43
    kube-scheduler-26-22             RUNNING   pid 1739, uptime 9:08:35

    [root@vms22 cert]# yum install ipvsadm -y
    ...
    [root@vms22 cert]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.168.0.1:443 nq
      -> 192.168.26.21:6443           Masq    1      0          0
      -> 192.168.26.22:6443           Masq    1      0          0
    TCP  10.168.84.129:80 nq
      -> 172.26.22.2:80               Masq    1      0          0

    [root@vms22 cert]# cat /data/logs/kubernetes/kube-proxy/proxy.stdout.log
    W0719 07:38:13.317104   97224 server.go:225] WARNING: all flags other than --config, --write-config-to, and --cleanup are deprecated. Please begin using a config file ASAP.
    I0719 07:38:13.622938   97224 node.go:136] Successfully retrieved node IP: 192.168.26.22
    I0719 07:38:13.623274   97224 server_others.go:259] Using ipvs Proxier.
    I0719 07:38:13.624775   97224 server.go:583] Version: v1.18.5
    I0719 07:38:13.626035   97224 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072
    I0719 07:38:13.626071   97224 conntrack.go:52] Setting nf_conntrack_max to 131072
    I0719 07:38:13.738249   97224 conntrack.go:83] Setting conntrack hashsize to 32768
    I0719 07:38:13.744138   97224 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400
    I0719 07:38:13.744178   97224 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600
    I0719 07:38:13.745933   97224 config.go:133] Starting endpoints config controller
    I0719 07:38:13.745956   97224 shared_informer.go:223] Waiting for caches to sync for endpoints config
    I0719 07:38:13.745985   97224 config.go:315] Starting service config controller
    I0719 07:38:13.745990   97224 shared_informer.go:223] Waiting for caches to sync for service config
    I0719 07:38:13.946923   97224 shared_informer.go:230] Caches are synced for endpoints config
    I0719 07:38:14.046439   97224 shared_informer.go:230] Caches are synced for service config
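In the `ipvsadm -Ln` listings, each virtual service line (TCP 10.168.0.1:443) is followed by its real servers on lines starting with `->`. A minimal sketch for pulling out the backends of one virtual service, for example to confirm that both apiservers sit behind the kubernetes Service IP. `ipvs_backends` is a hypothetical helper name, and the parsing assumes the output layout shown in this document.

```shell
#!/bin/sh
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print the
# real servers listed under one virtual service.
ipvs_backends() {
    # $1 = virtual service as printed by ipvsadm, e.g. 10.168.0.1:443
    awk -v vip="$1" '
        $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vip); next }
        in_vs && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
    '
}

# Usage on a node: ipvsadm -Ln | ipvs_backends 10.168.0.1:443
```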

Verify the k8s cluster

Create a pod and a service for testing:

    [root@vms21 ~]# kubectl run pod-ng --image=harbor.op.com/public/nginx:v1.7.9 --dry-run=client -o yaml > pod-ng.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-ng
      name: pod-ng
    spec:
      containers:
      - image: harbor.op.com/public/nginx:v1.7.9
        name: pod-ng
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

    [root@vms21 ~]# kubectl apply -f pod-ng.yaml
    pod/pod-ng created
    [root@vms21 ~]# kubectl get po -o wide
    NAME     READY   STATUS    RESTARTS   AGE    IP            NODE            NOMINATED NODE   READINESS GATES
    pod-ng   1/1     Running   0          102s   172.26.22.2   vms22.cos.com   <none>           <none>

    [root@vms21 ~]# kubectl expose pod pod-ng --name=svc-ng --port=80

    [root@vms21 ~]# kubectl get svc svc-ng
    NAME     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    svc-ng   ClusterIP   10.168.84.129   <none>        80/TCP    27s

    [root@vms21 ~]# vi nginx-ds.yaml   # with type: NodePort set, a random port is allocated

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc
      labels:
        app: nginx-ds
    spec:
      type: NodePort
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-ds
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          app: nginx-ds
      template:
        metadata:
          labels:
            app: nginx-ds
        spec:
          containers:
          - name: my-nginx
            image: harbor.op.com/public/nginx:v1.7.9
            ports:
            - containerPort: 80

    [root@vms21 ~]# kubectl apply -f nginx-ds.yaml
    [root@vms21 ~]# kubectl get pod -o wide
    NAME             READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
    nginx-ds-j24hm   1/1     Running   1          4h27m   172.26.22.2   vms22.cos.com   <none>           <none>
    nginx-ds-zk2bg   1/1     Running   1          4h27m   172.26.21.2   vms21.cos.com   <none>           <none>
    [root@vms21 ~]# kubectl get svc -o wide
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE     SELECTOR
    kubernetes   ClusterIP   10.168.0.1      <none>        443/TCP        5h3m    <none>
    nginx-svc    NodePort    10.168.250.78   <none>        80:26604/TCP   4h30m   app=nginx-ds

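At this point, testing connectivity between nodes and pods on different hosts shows that ping and curl -I fail in the following cases (in this context "pod" and "container" refer to the same entity):

- from a node to a pod on another node
- from a pod to another node
- from a pod to a pod on another node

A CNI network plugin therefore has to be installed to connect pods and nodes across the cluster.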

    [root@vms21 ~]# vi nginx-svc.yaml   # create a service with a fixed port, nodePort: 26133

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc26133
      labels:
        app: nginx-ds
    spec:
      type: NodePort
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 26133

    [root@vms21 ~]# kubectl apply -f nginx-svc.yaml
    service/nginx-svc26133 created
    [root@vms21 ~]# kubectl get svc | grep 26133
    nginx-svc26133   NodePort    10.168.154.208   <none>        80:26133/TCP   3m19s

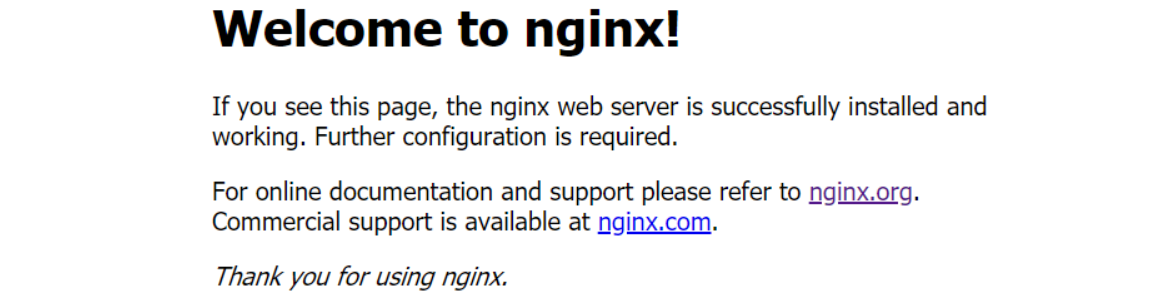

Open http://192.168.26.21:26133/ or http://192.168.26.22:26133/ in a browser.

This shows that the service is exposed through nodeIP:nodePort.
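In the PORT(S) column of `kubectl get svc`, a NodePort service is shown as port:nodePort/protocol (80:26133/TCP), while a ClusterIP service has no node port (80/TCP). A minimal sketch that extracts the node port with plain parameter expansion; `node_port` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: extract the node port from a PORT(S) value as
# printed by `kubectl get svc`. Prints nothing for values without one.
node_port() {
    # $1 = PORT(S) value, e.g. 80:26133/TCP
    case $1 in
        *:*/*)
            p=${1#*:}                  # drop "80:"  -> 26133/TCP
            printf '%s\n' "${p%%/*}"   # drop "/TCP" -> 26133
            ;;
    esac
}

node_port 80:26133/TCP   # prints 26133
```

The result can be combined with a node IP for a command-line check, for example `curl -I "http://192.168.26.21:$(node_port 80:26133/TCP)/"`.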

    [root@vms21 ~]# vi nginx-svc2.yaml   # without type: NodePort

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc2
      labels:
        app: nginx-ds
    spec:
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80

    [root@vms21 ~]# kubectl apply -f nginx-svc2.yaml
    service/nginx-svc2 created
    [root@vms21 ~]# kubectl get svc
    NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    kubernetes       ClusterIP   10.168.0.1       <none>        443/TCP        2d
    nginx-svc        NodePort    10.168.250.78    <none>        80:26604/TCP   47h
    nginx-svc2       ClusterIP   10.168.74.94     <none>        80/TCP         13s
    nginx-svc26133   NodePort    10.168.154.208   <none>        80:26133/TCP   9m11s

nginx-svc2 is a ClusterIP service: it can only be reached from inside the cluster and does not expose the service externally.

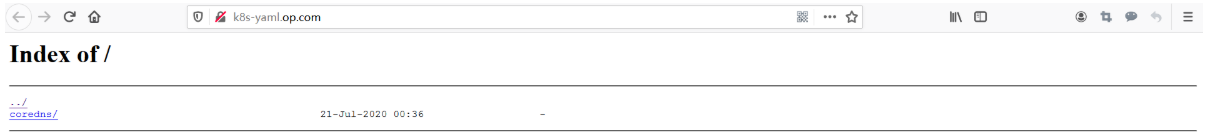

Deploy an internal HTTP service for the k8s resource manifests

1 Configure an nginx virtual host that serves as the single access point for the k8s resource manifests

On the ops host vms200.cos.com:

    [root@vms200 ~]# vi /etc/nginx/conf.d/k8s-yaml.op.com.conf

    server {
        listen       80;
        server_name  k8s-yaml.op.com;

        location / {
            autoindex on;
            default_type text/plain;
            root /data/k8s-yaml;
        }
    }

    [root@vms200 ~]# mkdir /data/k8s-yaml   # from now on, all resource manifests are kept under /data/k8s-yaml on the ops host
    [root@vms200 ~]# nginx -t
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    [root@vms200 ~]# nginx -s reload

2 Configure internal DNS resolution for k8s-yaml.op.com

On the DNS host vms11.cos.com:

    [root@vms11 ~]# vi /var/named/op.com.zone

    $ORIGIN op.com.
    $TTL 600    ; 10 minutes
    @           IN SOA  dns.op.com. dnsadmin.op.com. (
                        20200702   ; serial
                        10800      ; refresh (3 hours)
                        900        ; retry (15 minutes)
                        604800     ; expire (1 week)
                        86400      ; minimum (1 day)
                        )
                    NS   dns.op.com.
    $TTL 60 ; 1 minute
    dns                A    192.168.26.11
    harbor             A    192.168.26.200
    k8s-yaml           A    192.168.26.200

Advance the serial by one and append the line k8s-yaml A 192.168.26.200 at the end.

    [root@vms11 ~]# systemctl restart named
    [root@vms11 ~]# dig -t A k8s-yaml.op.com @192.168.26.11 +short
    192.168.26.200
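Forgetting to advance the serial is an easy mistake whenever a record is added. A minimal sketch that prints the zone file with the serial incremented by one; `bump_serial` is a hypothetical helper name, and it assumes the serial is the first field on the single line that carries a `; serial` comment, as in the zone file shown in this document. Very large serials may need an awk that prints big integers exactly.

```shell
#!/bin/sh
# Hypothetical helper: print a zone file with the SOA serial (the number
# on the line commented "; serial") incremented by one.
bump_serial() {
    # $1 = zone file; the result goes to stdout, the file is not modified
    awk '/;[ \t]*serial/ && !bumped { sub(/[0-9]+/, $1 + 1); bumped = 1 }
         { print }' "$1"
}

# Usage: bump_serial /var/named/op.com.zone > /tmp/op.com.zone
```

Writing to a temporary file first leaves room to review the result, for example with `named-checkzone op.com /tmp/op.com.zone`, before replacing the live zone file and restarting named.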

3 Usage: a single place to store and download the manifests

On the ops host vms200.cos.com:

    [root@vms200 ~]# cd /data/k8s-yaml
    [root@vms200 k8s-yaml]# ll
    total 0
    [root@vms200 k8s-yaml]# mkdir coredns
    [root@vms200 k8s-yaml]# ll
    total 0
    drwxr-xr-x 2 root root 6 Jul 21 08:36 coredns

Open http://k8s-yaml.op.com/ in a browser.

This completes the deployment of the core k8s components.