k8s-centos8u2 cluster: an enterprise-grade Kubernetes container cloud platform for automated operations

Overview

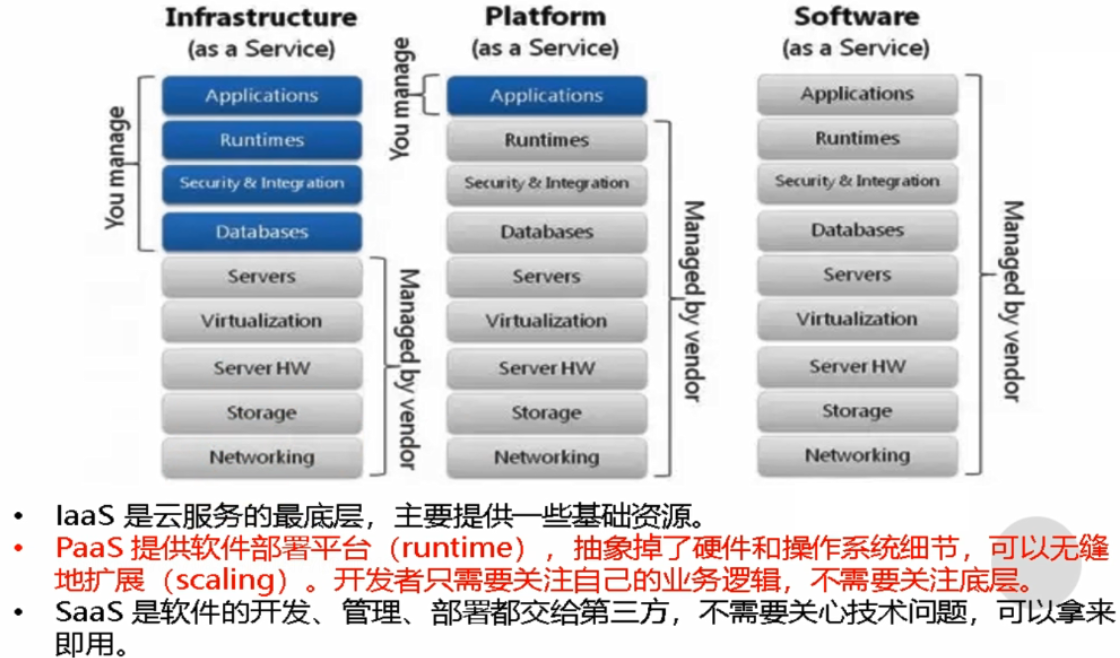

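About IaaS, PaaS, and SaaS

K8S is not a PaaS platform in the traditional sense, yet what many internet companies need is a PaaS platform rather than K8S alone. K8S together with its surrounding ecosystem (Logstash, Prometheus, and so on) is what forms a PaaS platform, and that is what enterprises need.

Prerequisites for gaining PaaS capability:

- A unified application runtime environment (Docker)

- IaaS capability (K8S)

- Reliable middleware and database clusters (mainly the DBA's job)

- A distributed storage cluster (mainly the storage engineer's job)

- A matching monitoring and logging stack (Prometheus, ELK)

- A complete CI/CD system (Jenkins, Spinnaker)

Vendors such as Alibaba Cloud and Tencent Cloud offer services built on K8S: buy a cluster and it comes with K8S. We should not depend entirely on a vendor and end up locked in, though, and we need to keep learning in order to understand and use the platform better. The larger the company, the stronger the case for building its own platform rather than relying on a vendor.

Spinnaker: repeatable automated deployments through flexible, configurable pipelines; a global view across all environments, so the state of an application in its deployment pipeline can be checked at any time; easy to configure, maintain, and extend; and more.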

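A closed loop built on the Kubernetes ecosystem

The goal of the Kubernetes cluster is to build a PaaS platform:

- Code commit: developers push code to a Git repository

- Continuous integration: a pipeline clones the committed code, compiles it, builds an image, and pushes it to the Docker image registry

- Continuous deployment: a pipeline configures the Pod controller, Service, Ingress, and so on in Kubernetes and deploys the Docker image to the test environment

- Production release: a pipeline configures the Pod controller, Service, Ingress, and so on in Kubernetes and deploys the tested Docker image to the production environment

Components involved:

- Continuous integration is handled by Jenkins

- Continuous deployment is handled by Spinnaker

- The service configuration center is Apollo

- Monitoring is handled by Prometheus + Grafana

- Log collection is handled by ELK

- Data persistence uses externally mounted storage; a StorageClass combined with PVs and PVCs can even provision and mount volumes automatically

- Databases are stateful services and are generally not run inside the Kubernetes cluster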

Spinnaker

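- Spinnaker (https://www.spinnaker.io) is an open-source, multi-cloud continuous delivery platform for releasing software changes quickly, reliably, and stably. It provides two main capabilities: cluster management and deployment management.

- GitHub: https://github.com/spinnaker

Cluster management / application management

- Cluster management is mainly about managing cloud resources. The "cloud" Spinnaker refers to can be understood as AWS-style IaaS resources, such as OpenStack, Google Cloud, and Microsoft Azure. Support for containers and Kubernetes was added later, but the management model is still designed around managing infrastructure.

- Spinnaker uses its application management feature to view and manage your cloud resources. Commonly used providers include Azure, AWS, and Kubernetes; the Chinese providers Alibaba Cloud and Tencent Cloud are not supported.

- Applications, clusters, and server groups are the key concepts Spinnaker uses to describe services. Load balancers and firewalls describe how your services are exposed to users.

Deployment management / application deployment

- The deployment management workflow is Spinnaker's core feature. It uses MinIO as the persistence layer, picks up the images built by Jenkins pipelines, and deploys them to the Kubernetes cluster so that the service actually runs.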

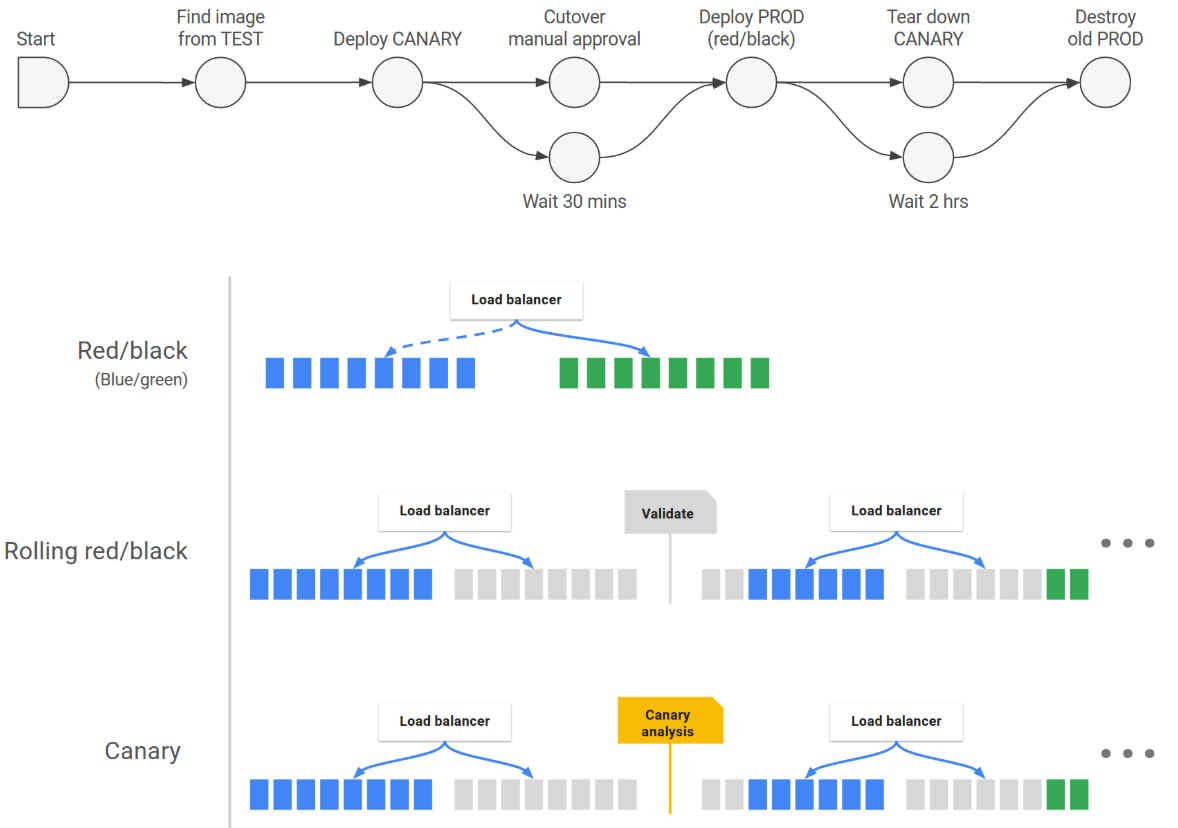

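- Application deployment has two core features: pipelines and deployment strategies. A pipeline chains the CI and CD stages together. Each project gets one pipeline which, given the parameters that change between runs (service name, version, image tag, and so on), calls the continuous integration job in Jenkins to do the build and then publishes the built image to the target environment using predefined deployment resources (such as a Kubernetes Deployment, Service, and Ingress). A deployment strategy is the upgrade strategy applied in production once testing has passed in the test environment; common ones are blue-green, canary, and rolling releases.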

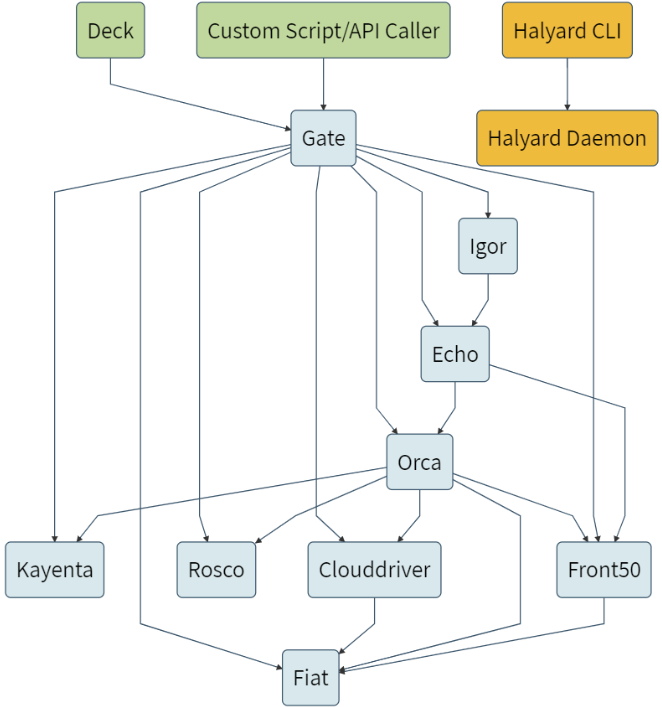

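Common Spinnaker components

Components

- Deck is the browser-based UI.

- Gate is the API gateway. The Spinnaker UI and all API callers communicate with Spinnaker through Gate.

- Clouddriver is the driver that operates on cloud resources. It manages the cloud platforms and indexes/caches all deployed resources.

- Front50 manages data persistence. It stores the metadata of applications, pipelines, projects, and notifications in a bucket. This lab uses MinIO (S3-like) as the store.

- Igor triggers pipelines from continuous integration jobs in systems such as Jenkins and Travis CI, and allows Jenkins/Travis stages to be used in pipelines.

- Orca is the orchestration engine. It handles all ad-hoc operations and pipelines.

- Rosco is the bakery: it produces VM images or image templates for cloud providers. It is not involved when deploying to a Kubernetes cluster.

- Kayenta provides automated canary analysis for Spinnaker. Not covered in this lab.

- Fiat is Spinnaker's authorization service, which controls user access permissions. Not covered in this lab; it can be considered later.

- Echo is the eventing and notification service. It sends notifications (for example Slack, email, SMS) and handles incoming webhooks from services such as GitHub.

- Halyard handles deploying, upgrading, and configuring a Spinnaker installation. Not covered in this lab.

Architecture diagram

Spinnaker is itself a microservice application made up of several components. The overall logical architecture is shown below: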

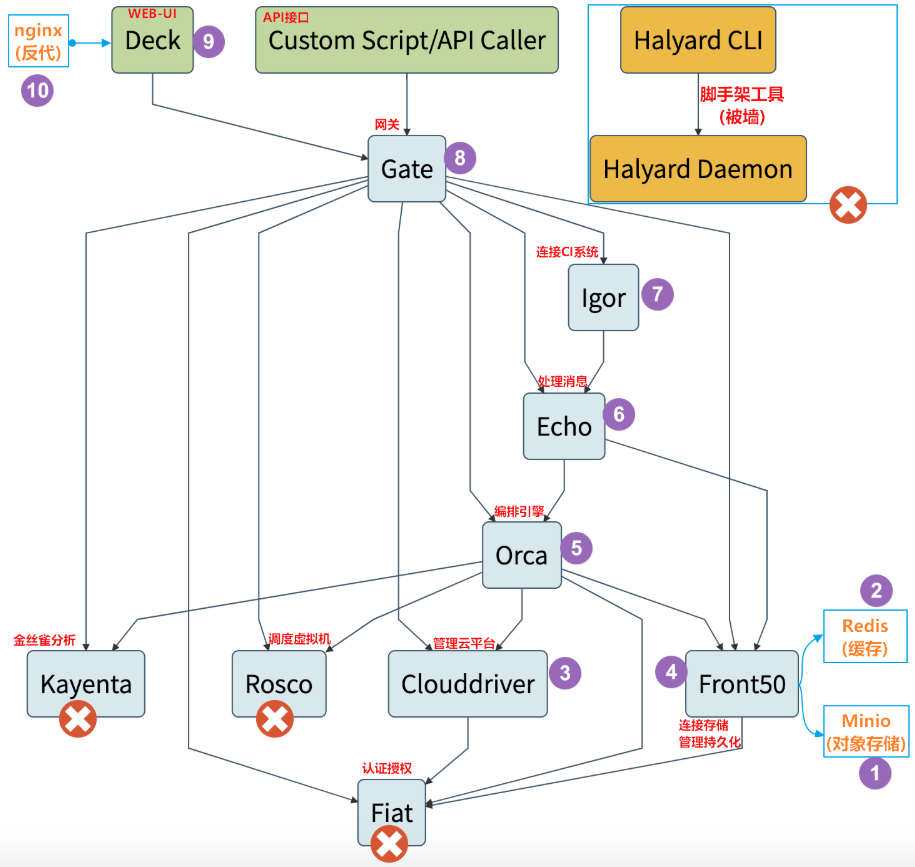

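Deployment options

- Spinnaker website: https://www.spinnaker.io/

Spinnaker consists of many components and is fairly complex to deploy, so the project provides a scaffolding tool, Halyard. Some of the image addresses it depends on are hard to access, however.

- Armory distribution: https://www.armory.io/

Many companies have built third-party distributions on top of Spinnaker to simplify deployment, such as the Armory distribution used here.

Armory also has its own scaffolding tool. It is simpler than Halyard but still hard to access.

The Armory distribution of Spinnaker is therefore delivered manually.

Deployment order: Minio > Redis > Clouddriver > Front50 > Orca > Echo > Igor > Gate > Deck > Nginx (needed because Deck is a set of static pages)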

Service port of each component:

| Service | Port |
|---|---|
| Clouddriver | 7002 |
| Deck | 9000 |
| Echo | 8089 |
| Fiat | 7003 |
| Front50 | 8080 |
| Gate | 8084 |
| Halyard | 8064 |
| Igor | 8088 |
| Kayenta | 8090 |
| Orca | 8083 |
| Rosco | 8087 |
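
The port table can be turned into a small lookup helper for the health checks used later in this walkthrough. This is only a sketch: the function names `spinnaker_port` and `health_url` are hypothetical, and the `armory-` prefix assumes the Service naming used in this walkthrough (armory-clouddriver, armory-front50, armory-orca).

```shell
#!/usr/bin/env bash
# Map a Spinnaker component name to its service port (values from the table above).
spinnaker_port() {
  case "$1" in
    clouddriver) echo 7002 ;;
    deck)        echo 9000 ;;
    echo)        echo 8089 ;;
    fiat)        echo 7003 ;;
    front50)     echo 8080 ;;
    gate)        echo 8084 ;;
    halyard)     echo 8064 ;;
    igor)        echo 8088 ;;
    kayenta)     echo 8090 ;;
    orca)        echo 8083 ;;
    rosco)       echo 8087 ;;
    *)           echo "unknown component: $1" >&2; return 1 ;;
  esac
}

# Build the in-cluster health URL for a component.
# Assumption: Services are named "armory-<component>".
health_url() {
  local port
  port="$(spinnaker_port "$1")" || return 1
  echo "http://armory-$1:${port}/health"
}

health_url clouddriver
```

A URL produced this way can be passed to curl from inside any pod in the armory namespace.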

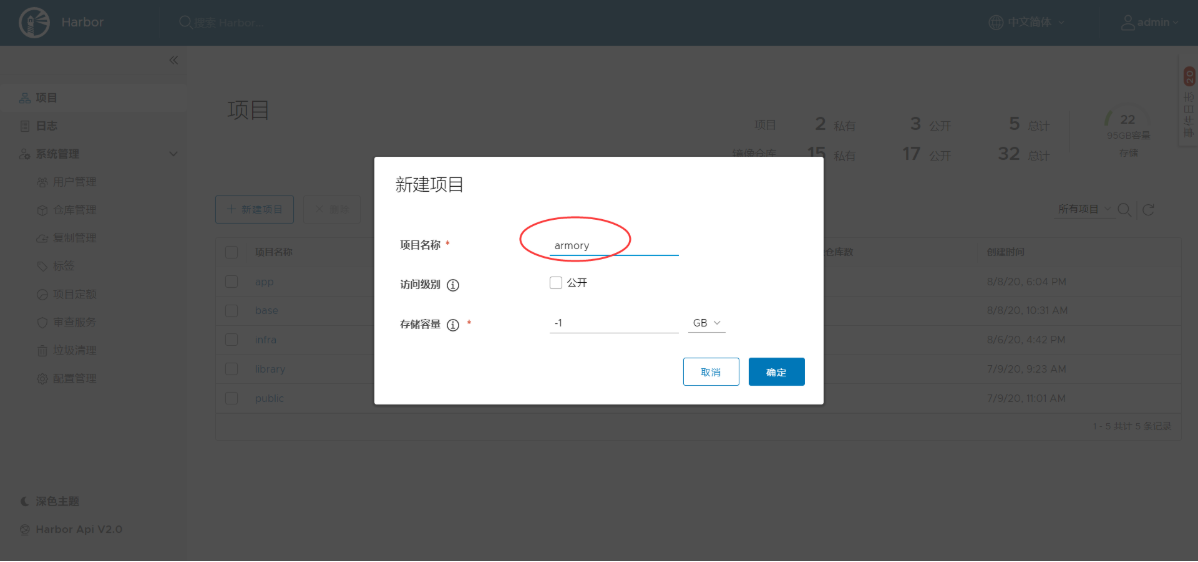

Create the private project harbor.op.com/armory

- Create the namespace on any compute node

[root@vms21 ~]# kubectl create ns armory
namespace/armory created
[root@vms21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.op.com --docker-username=admin --docker-password=Harbor12543 -n armory
secret/harbor created

The armory project is private, so a secret must be created; otherwise images cannot be pulled.
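
For orientation, a docker-registry secret stores the registry credentials as a JSON document under the key `.dockerconfigjson`. The sketch below rebuilds that payload locally so its structure is visible. The helper names are hypothetical, and the exact set of fields kubectl writes may differ slightly between versions.

```shell
#!/usr/bin/env bash
# The "auth" field is base64("user:password").
docker_auth_b64() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Print a .dockerconfigjson style payload for one registry server.
docker_config_json() {
  local server="$1" user="$2" pass="$3"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$(docker_auth_b64 "$user" "$pass")"
}

# Values taken from the kubectl command above.
docker_config_json harbor.op.com admin Harbor12543
```

Pods reference the secret through `imagePullSecrets`, which is what the Deployments in this walkthrough do with `name: harbor`.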

Deploy the MinIO object store

On the ops host vms200:

Prepare the Docker image

Image source: https://hub.docker.com/r/minio/minio

[root@vms200 ~]# docker pull minio/minio:latest
latest: Pulling from minio/minio
df20fa9351a1: Already exists
ebc4e9e74d67: Pull complete
Digest: sha256:a6c895f2037fb39c2a3151fcc675bd03882a807a00d3b53026076839f32472d2
Status: Downloaded newer image for minio/minio:latest
docker.io/minio/minio:latest
[root@vms200 ~]# docker tag minio/minio:latest harbor.op.com/armory/minio:latest
[root@vms200 ~]# docker push harbor.op.com/armory/minio:latest
The push refers to repository [harbor.op.com/armory/minio]
d2ea7b1fe80e: Pushed
50644c29ef5a: Mounted from infra/grafana
latest: digest: sha256:a6c895f2037fb39c2a3151fcc675bd03882a807a00d3b53026076839f32472d2 size: 740

Prepare the resource manifests

[root@vms200 ~]# mkdir -p /data/k8s-yaml/armory/minio && cd /data/k8s-yaml/armory/minio/

- Deployment

[root@vms200 minio]# vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: minio
  name: minio
  namespace: armory
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: minio
  template:
    metadata:
      labels:
        app: minio
        name: minio
    spec:
      containers:
      - name: minio
        image: harbor.op.com/armory/minio:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9000
          protocol: TCP
        args:
        - server
        - /data
        env:
        - name: MINIO_ACCESS_KEY
          value: admin
        - name: MINIO_SECRET_KEY
          value: admin123
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /minio/health/ready
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        volumeMounts:
        - mountPath: /data
          name: data
      imagePullSecrets:
      - name: harbor
      volumes:
      - nfs:
          server: vms200
          path: /data/nfs-volume/minio
        name: data

Create the corresponding storage directory

[root@vms200 minio]# mkdir /data/nfs-volume/minio

- Service

[root@vms200 minio]# vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: minio
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: minio

- Ingress

[root@vms200 minio]# vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: minio
  namespace: armory
spec:
  rules:
  - host: minio.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: minio
          servicePort: 80

Add the DNS record

On vms11:

[root@vms11 ~]# vi /var/named/op.com.zone

...
minio A 192.168.26.10

Remember to increment the zone serial number by one.

[root@vms11 ~]# systemctl restart named
[root@vms11 ~]# dig -t A minio.op.com +short
192.168.26.10
[root@vms11 ~]# host minio.op.com
minio.op.com has address 192.168.26.10
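
The serial bump mentioned above can also be scripted. The sketch below assumes the serial sits on a line annotated with a `; serial` comment inside the SOA record, which is a common layout but is not shown in this walkthrough; the function name `bump_serial` and the sample zone contents are hypothetical. It prints the updated zone to stdout rather than editing the file in place.

```shell
#!/usr/bin/env bash
# Print the zone file with the first number on the "; serial" line incremented by one.
bump_serial() {
  awk '/;[ \t]*serial/ && !bumped {
         if (match($0, /[0-9]+/)) {
           n = substr($0, RSTART, RLENGTH) + 1
           $0 = substr($0, 1, RSTART - 1) n substr($0, RSTART + RLENGTH)
           bumped = 1
         }
       }
       { print }' "$1"
}

# Demo on a throwaway copy; the zone contents here are illustrative only.
zone="$(mktemp)"
cat > "$zone" <<'EOF'
$ORIGIN op.com.
@ IN SOA dns.op.com. dnsadmin.op.com. (
        2020092901 ; serial
        10800      ; refresh
        900        ; retry
        604800     ; expire
        86400      ; minimum
        )
minio A 192.168.26.10
EOF
bump_serial "$zone"
rm -f "$zone"
```

After reviewing the output, it can be written back to the real zone file before restarting named.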

应用资源配置清单

任意运算节点上:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/minio/deployment.yamldeployment.apps/minio created[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/minio/svc.yamlservice/minio created[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/minio/ingress.yamlingress.extensions/minio created[root@hdss7-21 ~]# kubectl apply -f https://k8s-yaml.od.com/minio/deployment.yamldeployment.extensions/minio created[root@hdss7-21 ~]# kubectl apply -f https://k8s-yaml.od.com/minio/svc.yamlservice/minio created[root@hdss7-21 ~]# kubectl apply -f https://k8s-yaml.od.com/minio/ingress.yamlingress.extensions/minio created

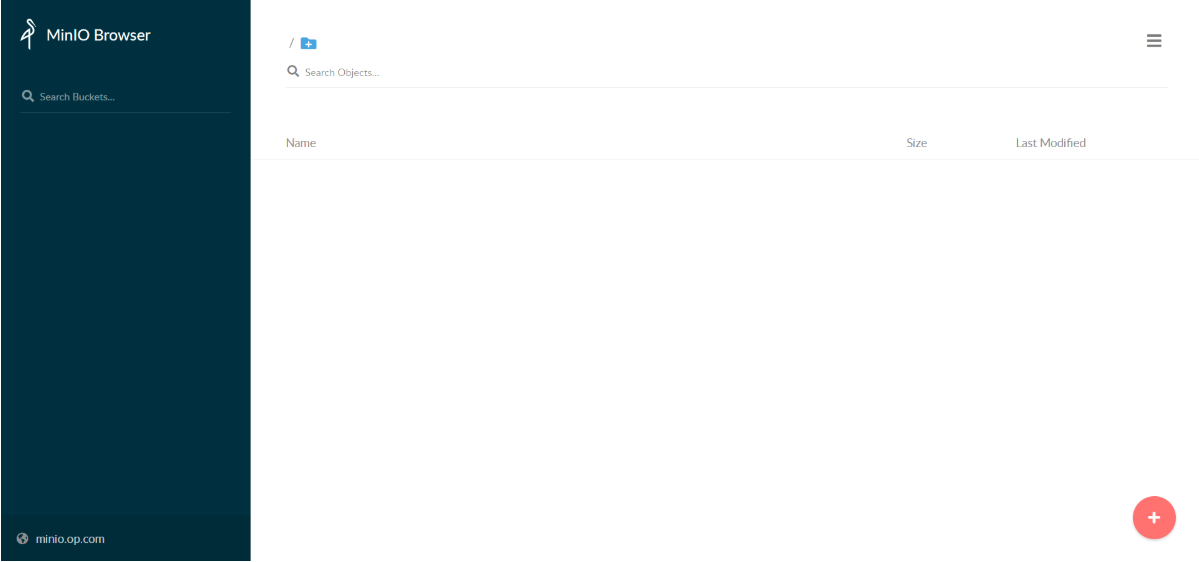

浏览器访问

http://minio.op.com (账户:admin 密码:admin123 即Deployment中设置的明文密码)

Deploy Redis

Redis only serves as a cache in Spinnaker and is not critical to it: a crash and restart does little harm, and concurrency is low. Given the limited resources available, Redis is deployed as a single replica without persistence. If persistence is needed, specify command and args when starting the container, for example: /usr/local/bin/redis-server /etc/myredis.conf

Prepare the Docker image

On the ops host vms200:

Image source: https://hub.docker.com/search?q=redis&type=image

[root@vms200 ~]# docker pull redis:6.0.8
6.0.8: Pulling from library/redis
d121f8d1c412: Pull complete
2f9874741855: Pull complete
d92da09ebfd4: Pull complete
bdfa64b72752: Pull complete
e748e6f663b9: Pull complete
eb1c8b66e2a1: Pull complete
Digest: sha256:1cfb205a988a9dae5f025c57b92e9643ec0e7ccff6e66bc639d8a5f95bba928c
Status: Downloaded newer image for redis:6.0.8
docker.io/library/redis:6.0.8
[root@vms200 ~]# docker images|grep redis
redis 6.0.8 84c5f6e03bf0 2 weeks ago 104MB
[root@vms200 ~]# docker tag redis:6.0.8 harbor.op.com/armory/redis:v6.0.8
[root@vms200 ~]# docker push harbor.op.com/armory/redis:v6.0.8
The push refers to repository [harbor.op.com/armory/redis]
2e9c060aef92: Pushed
ea96cbf71ac4: Pushed
47d8fadc6714: Pushed
7fb1fa4d4022: Pushed
45b5e221b672: Pushed
07cab4339852: Pushed
v6.0.8: digest: sha256:02d2467210e76794c98ae14c642b88ee047911c7e2ab4aa444b0bfe019a41892 size: 1572

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/redis && cd /data/k8s-yaml/armory/redis

- Deployment

[root@vms200 redis]# vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: redis
  name: redis
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: redis
  template:
    metadata:
      labels:
        app: redis
        name: redis
    spec:
      containers:
      - name: redis
        image: harbor.op.com/armory/redis:v6.0.8
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 6379
          protocol: TCP
      imagePullSecrets:
      - name: harbor

- Service

[root@vms200 redis]# vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: armory
spec:
  ports:
  - port: 6379
    protocol: TCP
    targetPort: 6379
  selector:
    app: redis

Apply the resource manifests

On any compute node:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/redis/deployment.yaml
deployment.apps/redis created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/redis/svc.yaml
service/redis created

[root@vms21 ~]# kubectl get pod -n armory -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
minio-5d6b989d46-wxh4c 1/1 Running 0 69m 172.26.21.3 vms21.cos.com <none> <none>
redis-5979d767cd-dp2sb 1/1 Running 0 2m12s 172.26.21.4 vms21.cos.com <none> <none>
[root@vms21 ~]# telnet 172.26.21.4 6379
Trying 172.26.21.4...
Connected to 172.26.21.4.
Escape character is '^]'.
^]
telnet> quit
Connection closed.

Deploy CloudDriver

CloudDriver is the hardest part of the whole Spinnaker deployment.

On the ops host vms200:

Prepare the image and directory

Image download address:

[root@vms200 ~]# docker pull armory/spinnaker-clouddriver-slim:release-1.11.x-bee52673a
release-1.11.x-bee52673a: Pulling from armory/spinnaker-clouddriver-slim
6c40cc604d8e: Pull complete
e78b80385239: Pull complete
47317d99e629: Pull complete
d81f37aa0a02: Pull complete
6d7a23031ae9: Pull complete
f18a770afc14: Pull complete
6bea2c559832: Pull complete
68654bc5bd90: Pull complete
5f28719fb892: Pull complete
Digest: sha256:1267bdc872c741ce28021d44d7c69f6eb04e7441fb1e3e475d584772de829df7
Status: Downloaded newer image for armory/spinnaker-clouddriver-slim:release-1.11.x-bee52673a
docker.io/armory/spinnaker-clouddriver-slim:release-1.11.x-bee52673a
[root@vms200 ~]# docker images | grep spinnaker-clouddriver-slim
armory/spinnaker-clouddriver-slim release-1.11.x-bee52673a f1d52d01e28d 19 months ago 1.05GB
[root@vms200 ~]# docker tag f1d52d01e28d harbor.op.com/armory/clouddriver:v1.11.x
[root@vms200 ~]# docker push harbor.op.com/armory/clouddriver:v1.11.x
The push refers to repository [harbor.op.com/armory/clouddriver]
be305dda3fe4: Pushed
acceb5d68f45: Pushed
e405e67c8e60: Pushed
0f59f260abd3: Pushed
43f1d24bca51: Pushed
820b438c3358: Pushed
4c6899b75fdb: Pushed
744b4cd8cf79: Pushed
503e53e365f3: Pushed
v1.11.x: digest: sha256:1267bdc872c741ce28021d44d7c69f6eb04e7441fb1e3e475d584772de829df7 size: 2216

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/clouddriver
[root@vms200 ~]# cd /data/k8s-yaml/armory/clouddriver

Prepare the secret for MinIO

[root@vms200 clouddriver]# vi credentials

[default]
aws_access_key_id=admin
aws_secret_access_key=admin123

Create the secret on any compute node

[root@vms21 ~]# wget http://k8s-yaml.op.com/armory/clouddriver/credentials
...
[root@vms21 ~]# kubectl create secret generic credentials --from-file=./credentials -n armory
secret/credentials created

The secret can also be created directly from the command line:

kubectl create secret generic credentials \
  --from-literal=credentials=$'[default]\naws_access_key_id=admin\naws_secret_access_key=admin123\n' \
  -n armory

Issue the certificate and private key

On the ops host vms200:

[root@vms200 ~]# cd /opt/certs/
[root@vms200 certs]# cp client-csr.json admin-csr.json
[root@vms200 certs]# vi admin-csr.json

Change "CN": "k8s-node" to "CN": "cluster-admin"

{
    "CN": "cluster-admin",
    "hosts": [],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "op",
            "OU": "ops"
        }
    ]
}

[root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client admin-csr.json | cfssl-json -bare admin
2020/09/29 17:37:54 [INFO] generate received request
2020/09/29 17:37:54 [INFO] received CSR
2020/09/29 17:37:54 [INFO] generating key: rsa-2048
2020/09/29 17:37:54 [INFO] encoded CSR
2020/09/29 17:37:54 [INFO] signed certificate with serial number 296902468778836911333325817747181254099240321779
2020/09/29 17:37:54 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
[root@vms200 certs]# ls -l admin*
-rw-r--r-- 1 root root 1001 Sep 29 17:37 admin.csr
-rw-r--r-- 1 root root 285 Sep 29 17:37 admin-csr.json
-rw------- 1 root root 1675 Sep 29 17:37 admin-key.pem
-rw-r--r-- 1 root root 1367 Sep 29 17:37 admin.pem

Prepare the cluster-admin user configuration

On vms21:

- Distribute the certificates

[root@vms21 ~]# mkdir /opt/certs
[root@vms21 ~]# cd /opt/certs
[root@vms21 certs]# scp vms200:/opt/certs/ca.pem .
... 100% 1338 292.8KB/s 00:00
[root@vms21 certs]# scp vms200:/opt/certs/admin.pem .
... 100% 1367 583.9KB/s 00:00
[root@vms21 certs]# scp vms200:/opt/certs/admin-key.pem .
... 100% 1675 718.7KB/s 00:00
[root@vms21 certs]# ll
total 12
-rw------- 1 root root 1675 Sep 29 18:09 admin-key.pem
-rw-r--r-- 1 root root 1367 Sep 29 18:09 admin.pem
-rw-r--r-- 1 root root 1338 Sep 29 18:08 ca.pem

- Create the user in four steps, then bind the cluster role

[root@vms21 certs]# kubectl config set-cluster myk8s --certificate-authority=./ca.pem --embed-certs=true --server=https://192.168.26.10:8443 --kubeconfig=config
Cluster "myk8s" set.
[root@vms21 certs]# kubectl config set-credentials cluster-admin --client-certificate=./admin.pem --client-key=./admin-key.pem --embed-certs=true --kubeconfig=config
User "cluster-admin" set.
[root@vms21 certs]# kubectl config set-context myk8s-context --cluster=myk8s --user=cluster-admin --kubeconfig=config
Context "myk8s-context" created.
[root@vms21 certs]# kubectl config use-context myk8s-context --kubeconfig=config
Switched to context "myk8s-context".
[root@vms21 certs]# kubectl create clusterrolebinding myk8s-admin --clusterrole=cluster-admin --user=cluster-admin
clusterrolebinding.rbac.authorization.k8s.io/myk8s-admin created
[root@vms21 certs]# ls -l config
-rw------- 1 root root 6201 Sep 29 18:17 config

At this point kubectl config view still shows an empty configuration, because the kubeconfig was written to ./config rather than to the default location /root/.kube/config. Copy it there:

[root@vms21 certs]# ls -l /root/.kube/
total 8
drwxr-x--- 3 root root 23 Jul 17 16:03 cache
drwxr-x--- 3 root root 4096 Sep 29 18:40 http-cache
[root@vms21 certs]# cp config /root/.kube/
[root@vms21 certs]# ls -l /root/.kube/
total 16
drwxr-x--- 3 root root 23 Jul 17 16:03 cache
-rw------- 1 root root 6201 Sep 29 18:41 config
drwxr-x--- 3 root root 4096 Sep 29 18:40 http-cache
[root@vms21 certs]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.26.10:8443
  name: myk8s
contexts:
- context:
    cluster: myk8s
    user: cluster-admin
  name: myk8s-context
current-context: myk8s-context
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

- On vms200, use kubectl to verify that the kubeconfig works

[root@vms200 ~]# mkdir /root/.kube
[root@vms200 ~]# cd /root/.kube
[root@vms200 .kube]# scp vms21:/opt/certs/config .
...
[root@vms200 .kube]# scp vms21:/opt/kubernetes/server/bin/kubectl .
...
[root@vms200 .kube]# mv kubectl /usr/bin/
[root@vms200 .kube]# kubectl config view
...
[root@vms200 .kube]# kubectl get pod -n armory
NAME READY STATUS RESTARTS AGE
minio-5d6b989d46-wxh4c 1/1 Running 0 3h9m
redis-5979d767cd-dp2sb 1/1 Running 0 122m

If the config file is not placed in the default directory /root/.kube, pass its path on the command line with --kubeconfig:

kubectl get pod -n armory --kubeconfig=/xxx/config

- Create a ConfigMap from the config file

On vms21:

[root@vms21 certs]# cd /root/.kube/
[root@vms21 .kube]# mv config default-kubeconfig
[root@vms21 .kube]# kubectl create configmap default-kubeconfig --from-file=./default-kubeconfig -n armory
configmap/default-kubeconfig created

Create and apply the resource manifests

Spinnaker's configuration is fairly tedious. One ConfigMap in particular, default-config.yaml, is very complex, but it generally does not need to be modified.

On the ops host vms200, in /data/k8s-yaml/armory/clouddriver:

- Create the environment variable configuration: init-env.yaml (includes the Redis address, the externally exposed API domain, and so on)

kind: ConfigMap
apiVersion: v1
metadata:
  name: init-env
  namespace: armory
data:
  API_HOST: http://spinnaker.op.com/api
  ARMORY_ID: c02f0781-92f5-4e80-86db-0ba8fe7b8544
  ARMORYSPINNAKER_CONF_STORE_BUCKET: armory-platform
  ARMORYSPINNAKER_CONF_STORE_PREFIX: front50
  ARMORYSPINNAKER_GCS_ENABLED: "false"
  ARMORYSPINNAKER_S3_ENABLED: "true"
  AUTH_ENABLED: "false"
  AWS_REGION: us-east-1
  BASE_IP: 127.0.0.1
  CLOUDDRIVER_OPTS: -Dspring.profiles.active=armory,configurator,local
  CONFIGURATOR_ENABLED: "false"
  DECK_HOST: http://spinnaker.op.com
  ECHO_OPTS: -Dspring.profiles.active=armory,configurator,local
  GATE_OPTS: -Dspring.profiles.active=armory,configurator,local
  IGOR_OPTS: -Dspring.profiles.active=armory,configurator,local
  PLATFORM_ARCHITECTURE: k8s
  REDIS_HOST: redis://redis:6379
  SERVER_ADDRESS: 0.0.0.0
  SPINNAKER_AWS_DEFAULT_REGION: us-east-1
  SPINNAKER_AWS_ENABLED: "false"
  SPINNAKER_CONFIG_DIR: /home/spinnaker/config
  SPINNAKER_GOOGLE_PROJECT_CREDENTIALS_PATH: ""
  SPINNAKER_HOME: /home/spinnaker
  SPRING_PROFILES_ACTIVE: armory,configurator,local

- Create the component configuration file: custom-config.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: custom-config
  namespace: armory
data:
  clouddriver-local.yml: |
    kubernetes:
      enabled: true
      accounts:
        - name: cluster-admin
          serviceAccount: false
          dockerRegistries:
            - accountName: harbor
              namespace: []
          namespaces:
            - test
            - prod
          kubeconfigFile: /opt/spinnaker/credentials/custom/default-kubeconfig
      primaryAccount: cluster-admin
    dockerRegistry:
      enabled: true
      accounts:
        - name: harbor
          requiredGroupMembership: []
          providerVersion: V1
          insecureRegistry: true
          address: http://harbor.op.com
          username: admin
          password: Harbor12543
      primaryAccount: harbor
    artifacts:
      s3:
        enabled: true
        accounts:
        - name: armory-config-s3-account
          apiEndpoint: http://minio
          apiRegion: us-east-1
      gcs:
        enabled: false
        accounts:
        - name: armory-config-gcs-account
  custom-config.json: ""
  echo-configurator.yml: |
    diagnostics:
      enabled: true
  front50-local.yml: |
    spinnaker:
      s3:
        endpoint: http://minio
  igor-local.yml: |
    jenkins:
      enabled: true
      masters:
        - name: jenkins-admin
          address: http://jenkins.op.com
          username: admin
          password: admin123
      primaryAccount: jenkins-admin
  nginx.conf: |
    gzip on;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/vnd.ms-fontobject application/x-font-ttf font/opentype image/svg+xml image/x-icon;
    server {
           listen 80;
           location / {
                proxy_pass http://armory-deck/;
           }
           location /api/ {
                proxy_pass http://armory-gate:8084/;
           }
           rewrite ^/login(.*)$ /api/login$1 last;
           rewrite ^/auth(.*)$ /api/auth$1 last;
    }
  spinnaker-local.yml: |
    services:
      igor:
        enabled: true

This configuration file specifies how Spinnaker reaches K8S, Harbor, MinIO, and Jenkins. For some of these addresses, whether a short name can be used depends on whether the target runs inside the K8S cluster and whether it is in the same namespace.
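
To make the short-name rule concrete, the sketch below prints the name forms under which a Service is usually resolvable from inside the cluster. The function name `service_names` is hypothetical, and `cluster.local` is the common default cluster domain, assumed here because this walkthrough does not state the cluster's actual domain.

```shell
#!/usr/bin/env bash
# Print the DNS name forms for a Service, from shortest to fully qualified.
# $1 = service name, $2 = namespace, $3 = cluster domain (assumed default: cluster.local)
service_names() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  echo "$svc"                   # resolvable only from pods in the same namespace
  echo "$svc.$ns"               # resolvable from pods in any namespace
  echo "$svc.$ns.svc.$domain"   # fully qualified in-cluster name
}

service_names minio armory
```

This is why `http://minio` works in custom-config.yaml: Spinnaker's components run in the same armory namespace as MinIO. Jenkins and Harbor are addressed through their op.com domain names instead.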

- Create the default configuration file: default-config.yaml

This file is very long (it is placed at the end of the article). It was produced by deploying with the Armory installer and rarely needs changes. Other components reference it as well.

- Deployment: dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-clouddriver
  name: armory-clouddriver
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-clouddriver
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-clouddriver"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"clouddriver"'
      labels:
        app: armory-clouddriver
    spec:
      containers:
      - name: armory-clouddriver
        image: harbor.op.com/armory/clouddriver:v1.11.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/clouddriver/bin/clouddriver
        ports:
        - containerPort: 7002
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        securityContext:
          runAsUser: 0
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/credentials/custom
          name: default-kubeconfig
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: default-kubeconfig
        name: default-kubeconfig
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service: svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-clouddriver
  namespace: armory
spec:
  ports:
  - port: 7002
    protocol: TCP
    targetPort: 7002
  selector:
    app: armory-clouddriver

- Apply the manifests: on any compute node, or on vms200 (kubectl was set up there earlier)

vms200:/data/k8s-yaml/armory/clouddriver

[root@vms200 clouddriver]# kubectl apply -f ./init-env.yaml
configmap/init-env created
[root@vms200 clouddriver]# kubectl apply -f ./default-config.yaml
configmap/default-config created
[root@vms200 clouddriver]# kubectl apply -f ./custom-config.yaml
configmap/custom-config created
[root@vms200 clouddriver]# kubectl apply -f ./dp.yaml
deployment.apps/armory-clouddriver created
[root@vms200 clouddriver]# kubectl apply -f ./svc.yaml
service/armory-clouddriver created

Check

- View the pods from vms200

[root@vms200 clouddriver]# kubectl get pod -n armory -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
armory-clouddriver-5fdb54ffbd-gwtzh 0/1 Running 0 3m34s 172.26.21.5 vms21.cos.com <none> <none>
minio-5d6b989d46-wxh4c 1/1 Running 0 4h10m 172.26.21.3 vms21.cos.com <none> <none>
redis-5979d767cd-dp2sb 1/1 Running 0 3h3m 172.26.21.4 vms21.cos.com <none> <none>
[root@vms200 clouddriver]# kubectl exec -n armory minio-5d6b989d46-wxh4c -- curl -s armory-clouddriver:7002/health
{"status":"UP","kubernetes":{"status":"UP"},"dockerRegistry":{"status":"UP"},"redisHealth":{"status":"UP","maxIdle":100,"minIdle":25,"numActive":0,"numIdle":3,"numWaiters":0},"diskSpace":{"status":"UP","total":101954621440,"free":83474780160,"threshold":10485760}}

- The output above shows that MinIO runs on vms21; enter its container there to verify

[root@vms21 .kube]# docker ps -a|grep minio
748c44a5333c harbor.op.com/armory/minio "/usr/bin/docker-ent…" 4 hours ago Up 4 hours k8s_minio_minio-5d6b989d46-wxh4c_armory_d482fdee-351b-44d0-87ae-c40a70978633_0
22088e51e681 harbor.op.com/public/pause:latest "/pause" 4 hours ago Up 4 hours k8s_POD_minio-5d6b989d46-wxh4c_armory_d482fdee-351b-44d0-87ae-c40a70978633_0
[root@vms21 .kube]# docker exec -it 748c44a5333c /bin/sh
/ # curl armory-clouddriver:7002/health

This prints the following (formatted):

{
  "status": "UP",
  "kubernetes": {
    "status": "UP"
  },
  "dockerRegistry": {
    "status": "UP"
  },
  "redisHealth": {
    "status": "UP",
    "maxIdle": 100,
    "minIdle": 25,
    "numActive": 0,
    "numIdle": 3,
    "numWaiters": 0
  },
  "diskSpace": {
    "status": "UP",
    "total": 101954621440,
    "free": 83473608704,
    "threshold": 10485760
  }
}

/ # curl armory-clouddriver:7002
{"_links" : {"profile" : {"href" : "http://armory-clouddriver:7002/profile"}}}

- Verify using the pod/container IP

[root@vms22 ~]# curl 172.26.21.5:7002/health
{"status":"UP","kubernetes":{"status":"UP"},"dockerRegistry":{"status":"UP"},"redisHealth":{"status":"UP","maxIdle":100,"minIdle":25,"numActive":0,"numIdle":3,"numWaiters":0},"diskSpace":{"status":"UP","total":101954621440,"free":83475595264,"threshold":10485760}}
[root@vms22 ~]# curl 172.26.21.5:7002
{"_links" : {"profile" : {"href" : "http://172.26.21.5:7002/profile"}}}

"status":"UP" means the component is healthy.

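
The health check can be wrapped in a small helper so later components can be verified in the same way. This is a sketch only: the function name `health_is_up` is hypothetical, and it relies on the top-level "status" being the first key of the payload, as it is in the responses shown in this walkthrough. A JSON-aware tool would be more robust.

```shell
#!/usr/bin/env bash
# Return 0 when the top-level status of a /health payload is UP.
health_is_up() {
  # Strip spaces and newlines so compact and pretty-printed payloads both match,
  # then anchor at the start so nested "status" keys are not mistaken for the top-level one.
  printf '%s' "$1" | tr -d ' \n' | grep -q '^{"status":"UP"'
}

# Demo with a literal payload; in the cluster the argument would come from curl, e.g.
#   health_is_up "$(curl -s armory-clouddriver:7002/health)"
payload='{"status":"UP","kubernetes":{"status":"UP"}}'
if health_is_up "$payload"; then
  echo "healthy"
else
  echo "not healthy"
fi
```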
Deploy Front50

Deploy Front50, the data persistence component

On the ops host vms200:

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/front50
[root@vms200 ~]# cd /data/k8s-yaml/armory/front50

Prepare the Docker image

Image download address:

[root@vms200 front50]# docker pull armory/spinnaker-front50-slim:release-1.10.x-98b4ab9
release-1.10.x-98b4ab9: Pulling from armory/spinnaker-front50-slim
6c40cc604d8e: Already exists
e78b80385239: Already exists
f41fe1b6eee3: Pull complete
43986b0e233e: Pull complete
bbee873f8a25: Pull complete
f9e1630d99d3: Pull complete
Digest: sha256:893442d2ccc55ad9ea1e48651b19a89228516012de8e7618260b2e35cbeab77f
Status: Downloaded newer image for armory/spinnaker-front50-slim:release-1.10.x-98b4ab9
docker.io/armory/spinnaker-front50-slim:release-1.10.x-98b4ab9
[root@vms200 front50]# docker images|grep front50
armory/spinnaker-front50-slim release-1.10.x-98b4ab9 97d161022d93 20 months ago 276MB
[root@vms200 front50]# docker tag 97d161022d93 harbor.op.com/armory/front50:v1.10.x
[root@vms200 front50]# docker push harbor.op.com/armory/front50:v1.10.x
...
[root@vms200 front50]# docker pull armory/spinnaker-front50-slim:release-1.8.x-93febf2
...
[root@vms200 front50]# docker tag armory/spinnaker-front50-slim:release-1.8.x-93febf2 harbor.op.com/armory/front50:v1.8.x
[root@vms200 front50]# docker push harbor.op.com/armory/front50:v1.8.x
...

Prepare the resource manifests

- Deployment: dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-front50
  name: armory-front50
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-front50
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-front50"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"front50"'
      labels:
        app: armory-front50
    spec:
      containers:
      - name: armory-front50
        image: harbor.op.com/armory/front50:v1.10.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/front50/bin/front50
        ports:
        - containerPort: 8080
          protocol: TCP
        # env:
        # - name: JAVA_OPTS
        #   value: -javaagent:/opt/front50/lib/jamm-0.2.5.jar -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 8
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

When using the 1.10.x image, comment out the three env lines. The Deployment relies on the default-config.yaml ConfigMap; fetch.sh, for example, comes from that ConfigMap.

- Service: svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-front50
  namespace: armory
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: armory-front50

Apply the resource manifests

On any compute node:

[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/front50/dp.yaml
deployment.apps/armory-front50 created
[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/armory/front50/svc.yaml
service/armory-front50 created

Check:

[root@vms22 ~]# kubectl get po -n armory -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
armory-clouddriver-5fdb54ffbd-gwtzh 1/1 Running 0 95m 172.26.21.5 vms21.cos.com <none> <none>
armory-front50-c74947d8b-dtv5f 0/1 Running 0 5s 172.26.22.6 vms22.cos.com <none> <none>
minio-5d6b989d46-wxh4c 1/1 Running 0 5h42m 172.26.21.3 vms21.cos.com <none> <none>
redis-5979d767cd-dp2sb 1/1 Running 0 4h35m 172.26.21.4 vms21.cos.com <none> <none>
[root@vms22 ~]# kubectl exec -n armory minio-5d6b989d46-wxh4c -- curl -s http://armory-front50:8080/health
{"status":"UP"}

[root@vms21 ~]# docker ps -a|grep minio

748c44a5333c harbor.op.com/armory/minio "/usr/bin/docker-ent…" 6 hours ago Up 6 hours k8s_minio_minio-5d6b989d46-wxh4c_armory_d482fdee-351b-44d0-87ae-c40a70978633_0

22088e51e681 harbor.op.com/public/pause:latest "/pause" 6 hours ago Up 6 hours k8s_POD_minio-5d6b989d46-wxh4c_armory_d482fdee-351b-44d0-87ae-c40a70978633_0

[root@vms21 ~]# docker exec -it 748c44a5333c sh

/ # curl armory-front50:8080/health

{"status":"UP"}

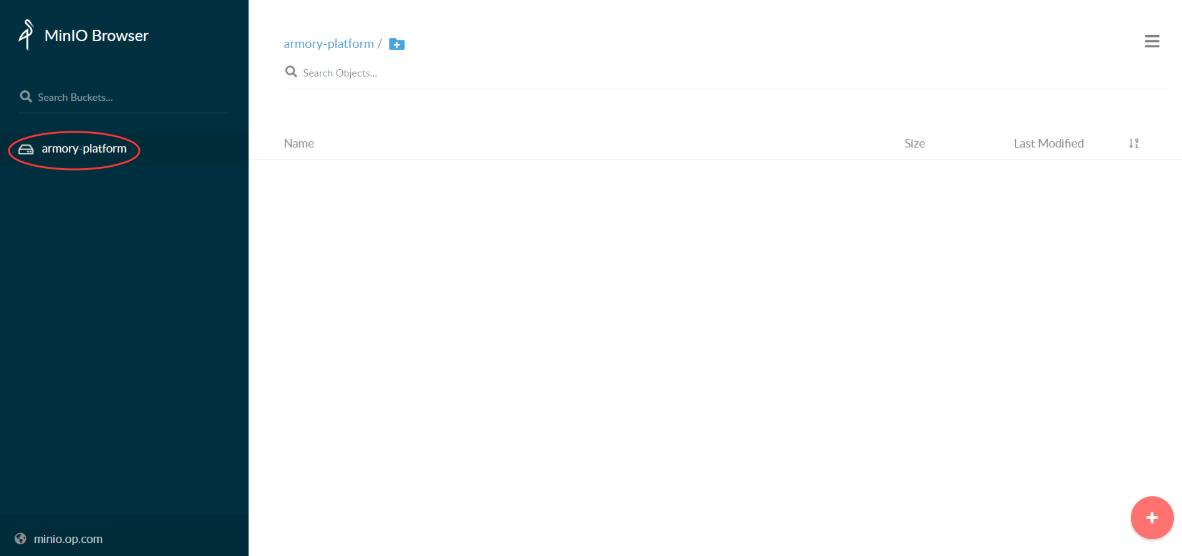

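Access from a browser

Open http://minio.op.com, log in, and check whether the storage bucket has been created.

Scaling the pods down before powering the hosts off speeds up both shutdown and startup.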

[root@vms22 ~]# kubectl -n armory get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

armory-clouddriver 1/1 1 1 111m

armory-front50 1/1 1 1 15m

minio 1/1 1 1 5h58m

redis 1/1 1 1 4h51m

[root@vms22 ~]# kubectl -n armory scale deployment armory-clouddriver --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-front50 --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment redis --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment minio --replicas=0

[root@vms22 ~]# kubectl -n armory get pod

No resources found in armory namespace.

[root@vms22 ~]# kubectl -n armory get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

armory-clouddriver 0/0 0 0 114m

armory-front50 0/0 0 0 18m

minio 0/0 0 0 6h1m

redis 0/0 0 0 4h54m
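
The scale-down sequence above can be folded into a small helper. The sketch below defaults to a dry run that only prints the kubectl commands; the function name `scale_armory` and the `DRY_RUN` switch are hypothetical, while the Deployment names are the ones created in this walkthrough.

```shell
#!/usr/bin/env bash
# Scale the given Deployments in the armory namespace to a replica count.
# With DRY_RUN=1 (the default here) the commands are printed instead of executed.
scale_armory() {
  local replicas="$1"; shift
  local d
  for d in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "kubectl -n armory scale deployment $d --replicas=$replicas"
    else
      kubectl -n armory scale deployment "$d" --replicas="$replicas"
    fi
  done
}

# Before shutdown: stop the Spinnaker components first, then the backing stores.
scale_armory 0 armory-clouddriver armory-front50 redis minio

# After startup, reverse the order so MinIO and Redis are back first:
#   DRY_RUN=0 scale_armory 1 minio redis armory-clouddriver armory-front50
```

Restoring in the reverse order mirrors the deployment order used in this walkthrough, where MinIO and Redis come up before the components that depend on them.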

Deploy Orca

On the ops host vms200:

Prepare the Docker image

Pull the image

[root@vms200 ~]# docker pull docker.io/armory/spinnaker-orca-slim:release-1.8.x-de4ab55

release-1.8.x-de4ab55: Pulling from armory/spinnaker-orca-slim

...

docker.io/armory/spinnaker-orca-slim:release-1.8.x-de4ab55

[root@vms200 ~]# docker images|grep orca

armory/spinnaker-orca-slim release-1.8.x-de4ab55 5103b1f73e04 2 years ago 141MB

[root@vms200 ~]# docker tag 5103b1f73e04 harbor.op.com/armory/orca:v1.8.x

[root@vms200 ~]# docker push harbor.op.com/armory/orca:v1.8.x

[root@vms200 ~]# docker pull armory/spinnaker-orca-slim:release-1.10.x-769f4e5

release-1.10.x-769f4e5: Pulling from armory/spinnaker-orca-slim

...

Status: Downloaded newer image for armory/spinnaker-orca-slim:release-1.10.x-769f4e5

docker.io/armory/spinnaker-orca-slim:release-1.10.x-769f4e5

[root@vms200 ~]# docker tag armory/spinnaker-orca-slim:release-1.10.x-769f4e5 harbor.op.com/armory/orca:v1.10.x

[root@vms200 ~]# docker push harbor.op.com/armory/orca:v1.10.x

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/orca

[root@vms200 ~]# cd /data/k8s-yaml/armory/orca

- Deployment: dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-orca
  name: armory-orca
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-orca
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-orca"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"orca"'
      labels:
        app: armory-orca
    spec:
      containers:
      - name: armory-orca
        image: harbor.op.com/armory/orca:v1.10.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/orca/bin/orca
        ports:
        - containerPort: 8083
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service: svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-orca
  namespace: armory
spec:
  ports:
  - port: 8083
    protocol: TCP
    targetPort: 8083
  selector:
    app: armory-orca

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/orca

[root@vms200 orca]# kubectl apply -f ./dp.yaml

deployment.apps/armory-orca created

[root@vms200 orca]# kubectl apply -f ./svc.yaml

service/armory-orca created
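The apply step repeats for every component in this guide, so it can be wrapped in a small helper. The following is only a sketch: `deploy_component`, the `KUBECTL` override, and the 900s default timeout are assumptions introduced here, not part of the original procedure. It applies `dp.yaml` and `svc.yaml` from a component directory and then waits for the rollout with `kubectl rollout status`.

```shell
#!/usr/bin/env bash
# KUBECTL can be pointed at a stub for dry runs (assumption for illustration).
KUBECTL="${KUBECTL:-kubectl}"

# deploy_component DIR NAME [NAMESPACE] [TIMEOUT]
# Applies DIR/dp.yaml and DIR/svc.yaml, then blocks until the Deployment
# NAME has finished rolling out or TIMEOUT expires.
deploy_component() {
  local dir="$1" name="$2" namespace="${3:-armory}" timeout="${4:-900s}"
  if [ -z "$dir" ] || [ -z "$name" ]; then
    echo "usage: deploy_component DIR NAME [NAMESPACE] [TIMEOUT]" >&2
    return 2
  fi
  $KUBECTL apply -f "$dir/dp.yaml" &&
    $KUBECTL apply -f "$dir/svc.yaml" &&
    $KUBECTL --namespace "$namespace" rollout status "deployment/$name" --timeout="$timeout"
}
```

For example, `deploy_component /data/k8s-yaml/armory/orca armory-orca` would cover the two `kubectl apply` commands above. The readiness probes in these manifests wait at least 180 seconds before the first check, so a generous timeout is advisable.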

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 6m22s 172.26.21.6 vms21.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 3m50s 172.26.22.6 vms22.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 11m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 7m39s 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 7m12s 172.26.21.5 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl -n armory exec minio-5d6b989d46-t24js -- curl -s 'http://armory-orca:8083/health'

{"status":"UP"}

Alternatively, enter the minio pod/container and run curl armory-orca:8083/health from there.
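The same health check is repeated for each service, so the response can be evaluated by a script instead of by eye. This is a minimal sketch: `health_status` and `is_healthy` are hypothetical helper names, and the parsing assumes the flat `{"status":"UP"}` payload shown above (with nested payloads it would pick up the last `status` field).

```shell
#!/usr/bin/env bash
# Print the value of the "status" field from a health payload.
# Uses sed rather than jq so that no extra tool is required.
health_status() {
  printf '%s\n' "$1" |
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Succeed only when the payload reports status UP.
is_healthy() {
  [ "$(health_status "$1")" = "UP" ]
}
```

A possible use: capture the output of the `kubectl -n armory exec ... curl -s` command in a variable and pass it to `is_healthy` before moving on to the next component.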

Deploying Echo

On the operations host vms200:

Prepare the Docker image

Download the image:

[root@vms200 ~]# docker pull docker.io/armory/echo-armory:c36d576-release-1.8.x-617c567

c36d576-release-1.8.x-617c567: Pulling from armory/echo-armory

...

Digest: sha256:33f6d25aa536d245bc1181a9d6f42eceb8ce59c9daa954fa9e4a64095acf8356

Status: Downloaded newer image for armory/echo-armory:c36d576-release-1.8.x-617c567

docker.io/armory/echo-armory:c36d576-release-1.8.x-617c567

[root@vms200 ~]# docker images |grep echo

armory/echo-armory c36d576-release-1.8.x-617c567 415efd46f474 2 years ago 287MB

[root@vms200 ~]# docker tag 415efd46f474 harbor.op.com/armory/echo:v1.8.x

[root@vms200 ~]# docker push harbor.op.com/armory/echo:v1.8.x

[root@vms200 ~]# docker pull armory/echo-armory:5891816-release-1.10.x-a568cf9

5891816-release-1.10.x-a568cf9: Pulling from armory/echo-armory

...

Digest: sha256:cd60f8af39079a3e943ddae03e468d76ef6964b3becc71c607554c356453574e

Status: Downloaded newer image for armory/echo-armory:5891816-release-1.10.x-a568cf9

docker.io/armory/echo-armory:5891816-release-1.10.x-a568cf9

[root@vms200 ~]# docker tag armory/echo-armory:5891816-release-1.10.x-a568cf9 harbor.op.com/armory/echo:v1.10.x

[root@vms200 ~]# docker push harbor.op.com/armory/echo:v1.10.x
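Each component follows the same pull, tag, push sequence, which can be captured in one function. The sketch below assumes a hypothetical helper named `mirror_image` and a `DOCKER` override variable. Neither appears in the original steps.

```shell
#!/usr/bin/env bash
# DOCKER can be replaced with a stub to preview the commands (assumption).
DOCKER="${DOCKER:-docker}"

# mirror_image SOURCE TARGET
# Pulls SOURCE, tags it as TARGET and pushes TARGET to the private registry.
# Stops at the first failing step.
mirror_image() {
  local src="$1" dst="$2"
  if [ -z "$src" ] || [ -z "$dst" ]; then
    echo "usage: mirror_image SOURCE TARGET" >&2
    return 2
  fi
  $DOCKER pull "$src" && $DOCKER tag "$src" "$dst" && $DOCKER push "$dst"
}
```

For instance, `mirror_image armory/echo-armory:5891816-release-1.10.x-a568cf9 harbor.op.com/armory/echo:v1.10.x` corresponds to the last three commands above. Tagging by image name rather than by image ID avoids having to look the ID up with `docker images`.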

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/echo

[root@vms200 ~]# cd /data/k8s-yaml/armory/echo

- Deployment:dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-echo
  name: armory-echo
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-echo
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-echo"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"echo"'
      labels:
        app: armory-echo
    spec:
      containers:
      - name: armory-echo
        image: harbor.op.com/armory/echo:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/echo/bin/echo
        ports:
        - containerPort: 8089
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -javaagent:/opt/echo/lib/jamm-0.2.5.jar -Xmx512M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service:svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-echo
  namespace: armory
spec:
  ports:
  - port: 8089
    protocol: TCP
    targetPort: 8089
  selector:
    app: armory-echo

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/echo

[root@vms200 echo]# kubectl apply -f ./dp.yaml

deployment.apps/armory-echo created

[root@vms200 echo]# kubectl apply -f ./svc.yaml

service/armory-echo created

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 37m 172.26.21.6 vms21.cos.com <none> <none>

armory-echo-774875cc4-sxtcs 1/1 Running 0 5m22s 172.26.22.7 vms22.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 34m 172.26.22.6 vms22.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 41m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 38m 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 37m 172.26.21.5 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl -n armory exec minio-5d6b989d46-t24js -- curl -s 'http://armory-echo:8089/health'

{"status":"UP"}

Deploying Igor

On the operations host vms200:

Prepare the Docker image

Download the image:

[root@vms200 ~]# docker pull docker.io/armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

release-1.8-x-new-install-healthy-ae2b329: Pulling from armory/spinnaker-igor-slim

...

Digest: sha256:2a487385908647f24ffa6cd11071ad571bec717008b7f16bc470ba754a7ad258

Status: Downloaded newer image for armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

docker.io/armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

[root@vms200 ~]# docker images | grep igor

armory/spinnaker-igor-slim release-1.8-x-new-install-healthy-ae2b329 23984f5b43f6 2 years ago 135MB

[root@vms200 ~]# docker tag 23984f5b43f6 harbor.op.com/armory/igor:v1.8.x

[root@vms200 ~]# docker push harbor.op.com/armory/igor:v1.8.x

[root@vms200 ~]# docker pull armory/spinnaker-igor-slim:release-1.10.x-a4fd897

release-1.10.x-a4fd897: Pulling from armory/spinnaker-igor-slim

...

Digest: sha256:99cb6d52d8585bf736b00ec52d4d2c460f6cdac181bc32a08ecd40c1241f977a

Status: Downloaded newer image for armory/spinnaker-igor-slim:release-1.10.x-a4fd897

docker.io/armory/spinnaker-igor-slim:release-1.10.x-a4fd897

[root@vms200 ~]# docker tag armory/spinnaker-igor-slim:release-1.10.x-a4fd897 harbor.op.com/armory/igor:v1.10.x

[root@vms200 ~]# docker push harbor.op.com/armory/igor:v1.10.x

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/igor

[root@vms200 ~]# cd /data/k8s-yaml/armory/igor

- Deployment:dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-igor
  name: armory-igor
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-igor
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-igor"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"igor"'
      labels:
        app: armory-igor
    spec:
      containers:
      - name: armory-igor
        image: harbor.op.com/armory/igor:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/igor/bin/igor
        ports:
        - containerPort: 8088
          protocol: TCP
        env:
        - name: IGOR_PORT_MAPPING
          value: -8088:8088
        - name: JAVA_OPTS
          value: -Xmx512M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service:svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-igor
  namespace: armory
spec:
  ports:
  - port: 8088
    protocol: TCP
    targetPort: 8088
  selector:
    app: armory-igor

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/igor

[root@vms200 igor]# kubectl apply -f ./dp.yaml

deployment.apps/armory-igor created

[root@vms200 igor]# kubectl apply -f ./svc.yaml

service/armory-igor created

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 58m 172.26.21.6 vms21.cos.com <none> <none>

armory-echo-774875cc4-sxtcs 1/1 Running 0 26m 172.26.22.7 vms22.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 56m 172.26.22.6 vms22.cos.com <none> <none>

armory-igor-7689f5cc96-v6g9v 1/1 Running 0 8m41s 172.26.21.7 vms21.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 63m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 59m 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 59m 172.26.21.5 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl -n armory exec minio-5d6b989d46-t24js -- curl -s 'http://armory-igor:8088/health'

{"status":"UP"}

Deploying Gate

On the operations host vms200:

Prepare the Docker image

Download the image:

[root@vms200 ~]# docker pull docker.io/armory/gate-armory:dfafe73-release-1.8.x-5d505ca

dfafe73-release-1.8.x-5d505ca: Pulling from armory/gate-armory

...

Digest: sha256:e3ea88c29023bce211a1b0772cc6cb631f3db45c81a4c0394c4fc9999a417c1f

Status: Downloaded newer image for armory/gate-armory:dfafe73-release-1.8.x-5d505ca

docker.io/armory/gate-armory:dfafe73-release-1.8.x-5d505ca

[root@vms200 ~]# docker images | grep gate

armory/gate-armory dfafe73-release-1.8.x-5d505ca b092d4665301 2 years ago 179MB

[root@vms200 ~]# docker tag b092d4665301 harbor.op.com/armory/gate:v1.8.x

[root@vms200 ~]# docker push harbor.op.com/armory/gate:v1.8.x

[root@vms200 ~]# docker pull armory/gate-armory:0d6729c-release-1.10.x-a8bb998

0d6729c-release-1.10.x-a8bb998: Pulling from armory/gate-armory

...

Digest: sha256:6d06a597a9d8c98362230c6d4aa1c13944673702f433f504fd9eadf90b91d0e0

Status: Downloaded newer image for armory/gate-armory:0d6729c-release-1.10.x-a8bb998

docker.io/armory/gate-armory:0d6729c-release-1.10.x-a8bb998

[root@vms200 ~]# docker tag armory/gate-armory:0d6729c-release-1.10.x-a8bb998 harbor.op.com/armory/gate:v1.10.x

[root@vms200 ~]# docker push harbor.op.com/armory/gate:v1.10.x

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/gate

[root@vms200 ~]# cd /data/k8s-yaml/armory/gate

- Deployment:dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-gate
  name: armory-gate
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-gate
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-gate"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"gate"'
      labels:
        app: armory-gate
    spec:
      containers:
      - name: armory-gate
        image: harbor.op.com/armory/gate:v1.10.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh gate && cd /home/spinnaker/config
          && /opt/gate/bin/gate
        ports:
        - containerPort: 8084
          name: gate-port
          protocol: TCP
        - containerPort: 8085
          name: gate-api-port
          protocol: TCP
        env:
        - name: GATE_PORT_MAPPING
          value: -8084:8084
        - name: GATE_API_PORT_MAPPING
          value: -8085:8085
        - name: JAVA_OPTS
          value: -Xmx512M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health || wget -O - https://localhost:8084/health
          failureThreshold: 5
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true
              || wget -O - https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true
          failureThreshold: 3
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 10
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service:svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-gate
  namespace: armory
spec:
  ports:
  - name: gate-port
    port: 8084
    protocol: TCP
    targetPort: 8084
  - name: gate-api-port
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-gate

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/gate

[root@vms200 gate]# kubectl apply -f ./dp.yaml

deployment.apps/armory-gate created

[root@vms200 gate]# kubectl apply -f ./svc.yaml

service/armory-gate created

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 74m 172.26.21.6 vms21.cos.com <none> <none>

armory-echo-774875cc4-sxtcs 1/1 Running 0 42m 172.26.22.7 vms22.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 71m 172.26.22.6 vms22.cos.com <none> <none>

armory-gate-68bbb98cb9-gblp6 1/1 Running 0 4m10s 172.26.22.8 vms22.cos.com <none> <none>

armory-igor-7689f5cc96-v6g9v 1/1 Running 0 24m 172.26.21.7 vms21.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 78m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 75m 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 75m 172.26.21.5 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl -n armory exec minio-5d6b989d46-t24js -- curl -s 'http://armory-gate:8084/health'

{"status":"UP"}

Deploying Deck

On the operations host vms200:

Prepare the Docker image

Download the image:

[root@vms200 ~]# docker pull docker.io/armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

d4bf0cf-release-1.8.x-0a33f94: Pulling from armory/deck-armory

...

Digest: sha256:ad85eb8e1ada327ab0b98471d10ed2a4e5eada3c154a2f17b6b23a089c74839f

Status: Downloaded newer image for armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

docker.io/armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

[root@vms200 ~]# docker images | grep deck

armory/deck-armory d4bf0cf-release-1.8.x-0a33f94 9a87ba3b319f 2 years ago 518MB

[root@vms200 ~]# docker tag 9a87ba3b319f harbor.op.com/armory/deck:v1.8.x

[root@vms200 ~]# docker push harbor.op.com/armory/deck:v1.8.x

[root@vms200 ~]# docker pull armory/deck-armory:12927b8-release-1.10.x-c9abb38e5

12927b8-release-1.10.x-c9abb38e5: Pulling from armory/deck-armory

...

Digest: sha256:f6421f5a3bae09f0ea78fb915fe2269ce5ec60fb4ed1737d0e534ddf8149c69d

Status: Downloaded newer image for armory/deck-armory:12927b8-release-1.10.x-c9abb38e5

docker.io/armory/deck-armory:12927b8-release-1.10.x-c9abb38e5

[root@vms200 ~]# docker tag armory/deck-armory:12927b8-release-1.10.x-c9abb38e5 harbor.op.com/armory/deck:v1.10.x

[root@vms200 ~]# docker push harbor.op.com/armory/deck:v1.10.x

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/deck

[root@vms200 ~]# cd /data/k8s-yaml/armory/deck

- Deployment:dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-deck
  name: armory-deck
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-deck
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-deck"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"deck"'
      labels:
        app: armory-deck
    spec:
      containers:
      - name: armory-deck
        image: harbor.op.com/armory/deck:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && /entrypoint.sh
        ports:
        - containerPort: 9000
          protocol: TCP
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

- Service:svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-deck
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: armory-deck

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/deck

[root@vms200 deck]# kubectl apply -f ./dp.yaml

deployment.apps/armory-deck created

[root@vms200 deck]# kubectl apply -f ./svc.yaml

service/armory-deck created

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 89m 172.26.21.6 vms21.cos.com <none> <none>

armory-deck-8688b588d5-mjbgm 1/1 Running 0 3m22s 172.26.21.8 vms21.cos.com <none> <none>

armory-echo-774875cc4-sxtcs 1/1 Running 0 57m 172.26.22.7 vms22.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 86m 172.26.22.6 vms22.cos.com <none> <none>

armory-gate-68bbb98cb9-gblp6 1/1 Running 0 19m 172.26.22.8 vms22.cos.com <none> <none>

armory-igor-7689f5cc96-v6g9v 1/1 Running 0 39m 172.26.21.7 vms21.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 93m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 90m 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 90m 172.26.21.5 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl -n armory exec minio-5d6b989d46-t24js -- curl -Is 'http://armory-deck'

HTTP/1.1 200 OK

Server: nginx/1.4.6 (Ubuntu)

Date: Thu, 01 Oct 2020 01:21:16 GMT

Content-Type: text/html

Content-Length: 22031

Last-Modified: Tue, 17 Jul 2018 17:42:20 GMT

Connection: keep-alive

ETag: "5b4e2a7c-560f"

Accept-Ranges: bytes

Deploying the Nginx front-end proxy

Prepare the Docker image

Download the image:

[root@vms200 ~]# docker pull nginx:1.12.2

1.12.2: Pulling from library/nginx

...

Digest: sha256:72daaf46f11cc753c4eab981cbf869919bd1fee3d2170a2adeac12400f494728

Status: Downloaded newer image for nginx:1.12.2

docker.io/library/nginx:1.12.2

[root@vms200 ~]# docker tag nginx:1.12.2 harbor.op.com/armory/nginx:v1.12.2

[root@vms200 ~]# docker push harbor.op.com/armory/nginx:v1.12.2

Prepare the resource manifests

[root@vms200 ~]# mkdir /data/k8s-yaml/armory/nginx

[root@vms200 ~]# cd /data/k8s-yaml/armory/nginx

- Deployment:dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-nginx
  name: armory-nginx
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-nginx
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-nginx"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"nginx"'
      labels:
        app: armory-nginx
    spec:
      containers:
      - name: armory-nginx
        image: harbor.op.com/armory/nginx:v1.12.2
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh nginx && nginx -g 'daemon off;'
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8085
          name: api
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /etc/nginx/conf.d
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config

- Service:svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-nginx
  namespace: armory
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  - name: api
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-nginx

- ingress.yaml

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  labels:
    app: spinnaker
    web: spinnaker.op.com
  name: spinnaker-route
  namespace: armory
spec:
  entryPoints:
  - web
  routes:
  - match: Host(`spinnaker.op.com`)
    kind: Rule
    services:
    - name: armory-nginx
      port: 80

To look up the IngressRoute API version:

[root@vms22 ~]# kubectl explain ingressroute

KIND: IngressRoute

VERSION: traefik.containo.us/v1alpha1

Alternatively, use an Ingress:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    app: spinnaker
    web: spinnaker.op.com
  name: armory-nginx
  namespace: armory
spec:
  rules:
  - host: spinnaker.op.com
    http:
      paths:
      - backend:
          serviceName: armory-nginx
          servicePort: 80
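The `extensions/v1beta1` Ingress API used above was removed in Kubernetes 1.22, and `networking.k8s.io/v1` has been available since 1.19. This guide does not state which version the cluster runs, so the following is only a sketch for newer clusters. `render_ingress_v1` is a hypothetical helper that prints the equivalent manifest, and the `path: /` with `pathType: Prefix` is an assumption, since the original rule specifies no path.

```shell
#!/usr/bin/env bash
# render_ingress_v1 NAME NAMESPACE HOST PORT
# Prints a networking.k8s.io/v1 Ingress that routes HOST to Service NAME:PORT.
render_ingress_v1() {
  local name="$1" namespace="$2" host="$3" port="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  labels:
    app: spinnaker
    web: ${host}
  name: ${name}
  namespace: ${namespace}
spec:
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${name}
            port:
              number: ${port}
EOF
}
```

The output can be reviewed first and then piped to the cluster, for example `render_ingress_v1 armory-nginx armory spinnaker.op.com 80 | kubectl apply -f -`.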

Apply the resource manifests

On any compute node or on vms200:

- vms200:/data/k8s-yaml/armory/nginx

[root@vms200 nginx]# kubectl apply -f dp.yaml

deployment.apps/armory-nginx created

[root@vms200 nginx]# kubectl apply -f svc.yaml

service/armory-nginx created

[root@vms200 nginx]# kubectl apply -f ./ingress.yaml

ingressroute.traefik.containo.us/spinnaker-route created

- vms21

[root@vms21 ~]# kubectl -n armory get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

armory-clouddriver-5fdb54ffbd-mbmpv 1/1 Running 0 120m 172.26.21.6 vms21.cos.com <none> <none>

armory-deck-8688b588d5-mjbgm 1/1 Running 0 34m 172.26.21.8 vms21.cos.com <none> <none>

armory-echo-774875cc4-sxtcs 1/1 Running 0 88m 172.26.22.7 vms22.cos.com <none> <none>

armory-front50-c74947d8b-8vpxt 1/1 Running 0 117m 172.26.22.6 vms22.cos.com <none> <none>

armory-gate-68bbb98cb9-gblp6 1/1 Running 0 50m 172.26.22.8 vms22.cos.com <none> <none>

armory-igor-7689f5cc96-v6g9v 1/1 Running 0 70m 172.26.21.7 vms21.cos.com <none> <none>

armory-nginx-64f88b4bc8-542nl 1/1 Running 0 4m24s 172.26.22.9 vms22.cos.com <none> <none>

armory-orca-59cf846bf9-hrtjc 1/1 Running 4 124m 172.26.21.3 vms21.cos.com <none> <none>

minio-5d6b989d46-t24js 1/1 Running 0 121m 172.26.21.4 vms21.cos.com <none> <none>

redis-5979d767cd-jxkrt 1/1 Running 0 120m 172.26.21.5 vms21.cos.com <none> <none>

Resolve the domain name

On vms11:

[root@vms11 ~]# vi /var/named/op.com.zone

...

spinnaker A 192.168.26.10

Remember to increment the serial number in the zone file.

[root@vms11 ~]# systemctl restart named

[root@vms11 ~]# dig -t A spinnaker.op.com +short

192.168.26.10

[root@vms11 ~]# host spinnaker.op.com

spinnaker.op.com has address 192.168.26.10
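The DNS check can also be scripted so that a wrong or missing record fails loudly. This is a sketch only: `check_a_record` and the `DIG` override are names invented here. It compares the first answer from `dig +short` with the expected address.

```shell
#!/usr/bin/env bash
# DIG can be pointed at a stub for testing (assumption for illustration).
DIG="${DIG:-dig}"

# check_a_record NAME EXPECTED_IP
# Succeeds when the first A record returned for NAME equals EXPECTED_IP.
check_a_record() {
  local name="$1" expected="$2" actual
  actual="$($DIG -t A "$name" +short | head -n 1)"
  if [ "$actual" = "$expected" ]; then
    echo "OK $name -> $actual"
  else
    echo "MISMATCH $name -> ${actual:-no answer} (expected $expected)" >&2
    return 1
  fi
}
```

With the record above in place, `check_a_record spinnaker.op.com 192.168.26.10` should succeed on vms11.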

Open http://spinnaker.op.com in a browser.

At this point, **spinnaker** has been deployed successfully!

Starting/stopping spinnaker

[root@vms22 ~]# kubectl -n armory get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

armory-clouddriver 1/1 1 0 44h

armory-deck 1/1 1 1 7h2m

armory-echo 1/1 1 0 8h

armory-front50 1/1 1 0 42h

armory-gate 1/1 1 1 7h15m

armory-igor 1/1 1 1 7h38m

armory-nginx 1/1 1 1 6h29m

armory-orca 1/1 1 1 8h

minio 1/1 1 1 2d

redis 1/1 1 1 47h

[root@vms22 ~]# kubectl -n armory scale deployment armory-clouddriver --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-deck --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-echo --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-front50 --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-gate --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-igor --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-nginx --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment armory-orca --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment minio --replicas=0

[root@vms22 ~]# kubectl -n armory scale deployment redis --replicas=0
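The ten scale commands differ only in the deployment name, so a loop can stop or start the whole stack. The sketch below is an assumption-laden convenience: `scale_spinnaker`, `SPINNAKER_DEPLOYMENTS`, and the `KUBECTL` override are names introduced here, and the loop follows the alphabetical order of the listing above rather than any required startup order.

```shell
#!/usr/bin/env bash
# KUBECTL can be pointed at a stub for dry runs (assumption for illustration).
KUBECTL="${KUBECTL:-kubectl}"

# Deployment names taken from the `kubectl -n armory get deploy` listing.
SPINNAKER_DEPLOYMENTS="armory-clouddriver armory-deck armory-echo armory-front50 armory-gate armory-igor armory-nginx armory-orca minio redis"

# scale_spinnaker REPLICAS [NAMESPACE]
# Scales every deployment in the list to REPLICAS; stops at the first error.
scale_spinnaker() {
  local replicas="$1" namespace="${2:-armory}" d
  case "$replicas" in
    ''|*[!0-9]*)
      echo "usage: scale_spinnaker REPLICAS [NAMESPACE]" >&2
      return 2
      ;;
  esac
  for d in $SPINNAKER_DEPLOYMENTS; do
    $KUBECTL --namespace "$namespace" scale deployment "$d" --replicas="$replicas" || return 1
  done
}
```

`scale_spinnaker 0` shuts everything down and `scale_spinnaker 1` brings it back. When starting up, it may be worth scaling minio and redis first, since the other services depend on them.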

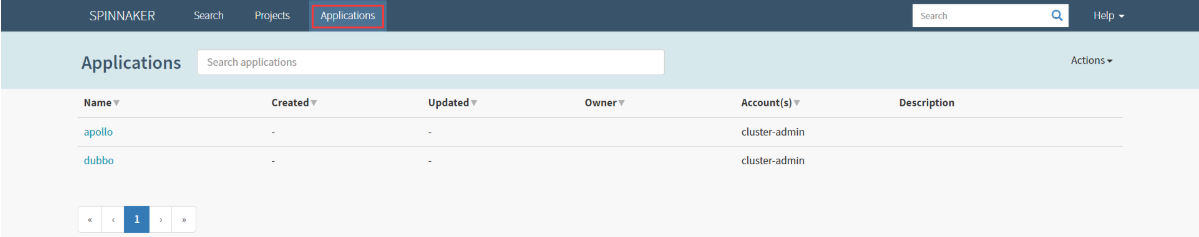

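Using Spinnaker

Spinnaker provides a graphical interface and automated operations for the whole workflow. All of the earlier experiments can be carried out from one centralized management platform:

- Build images with spinnaker and jenkins

- Use spinnaker to configure and release the dubbo service provider to K8S

- Use spinnaker to configure the dubbo service consumer on K8S

- Use spinnaker for releases and production environment configuration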

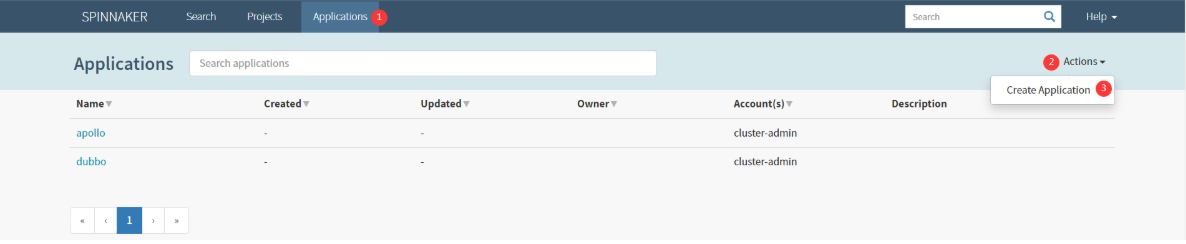

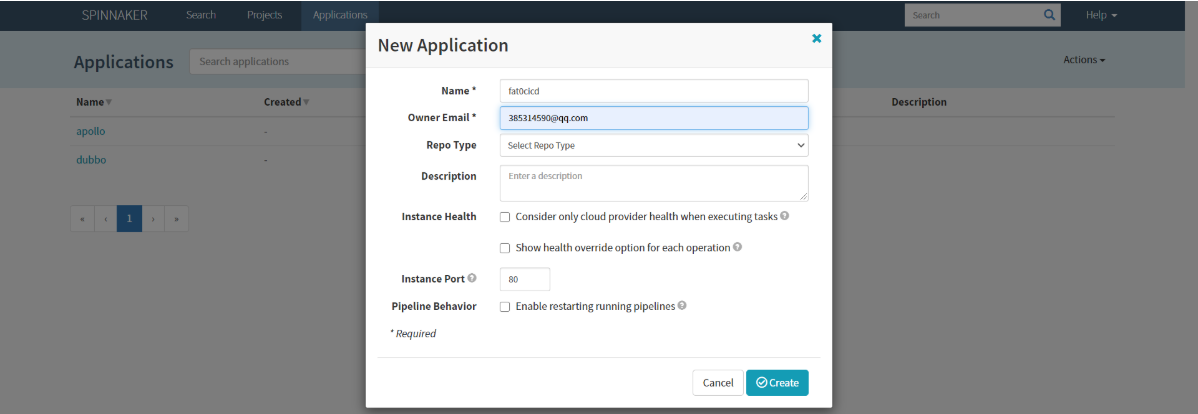

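Create an application

Applications > Actions : Create Application

New Application : fat0cicd

Keep the application name short. Naming convention: environment name + 0 + application/project/department.

Create : (if the page flickers, adjust the browser zoom level)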

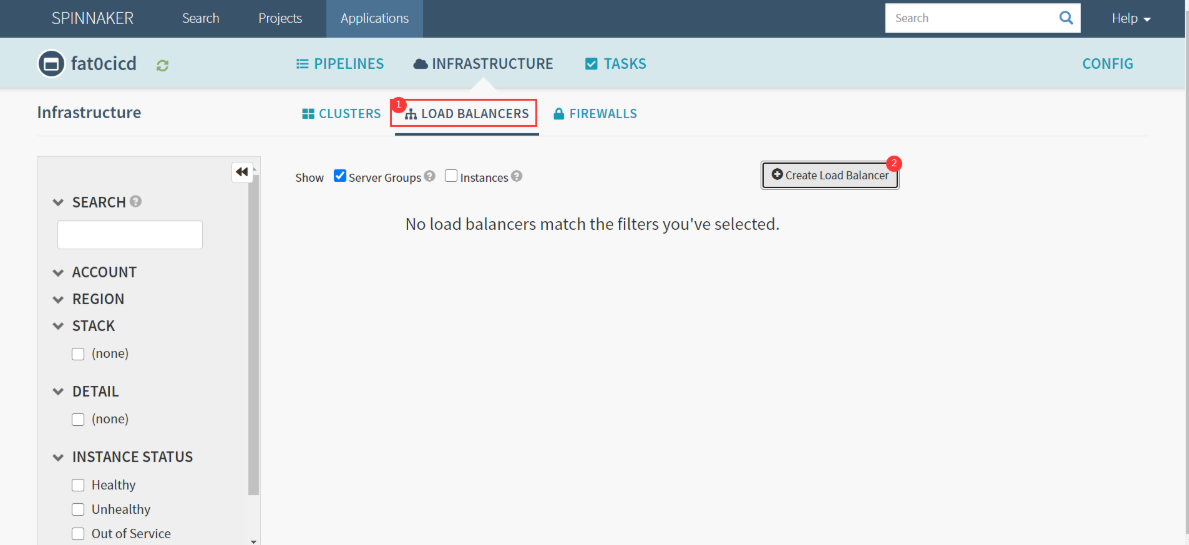

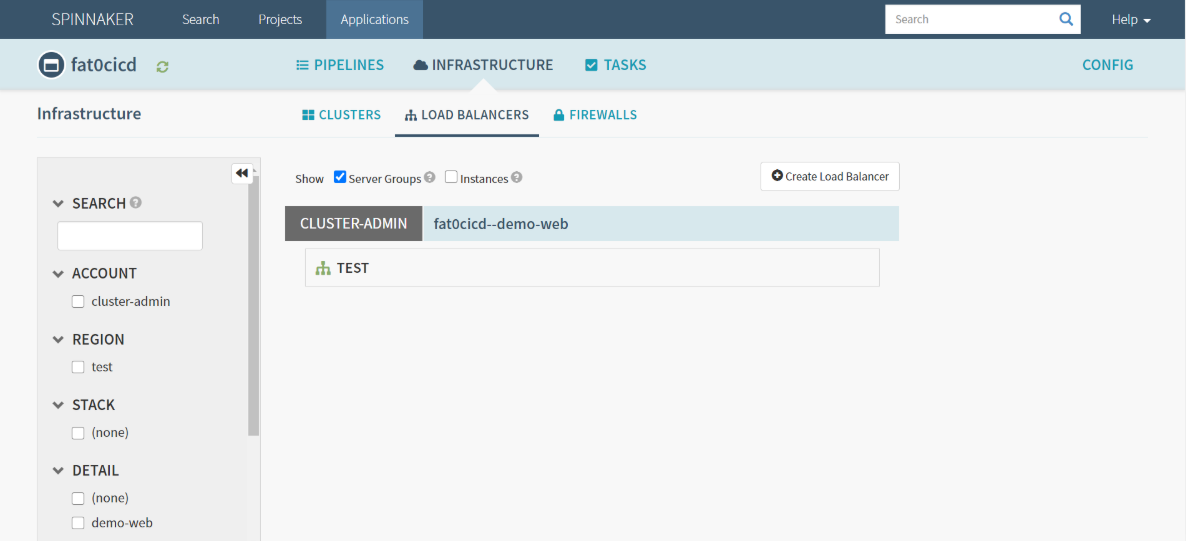

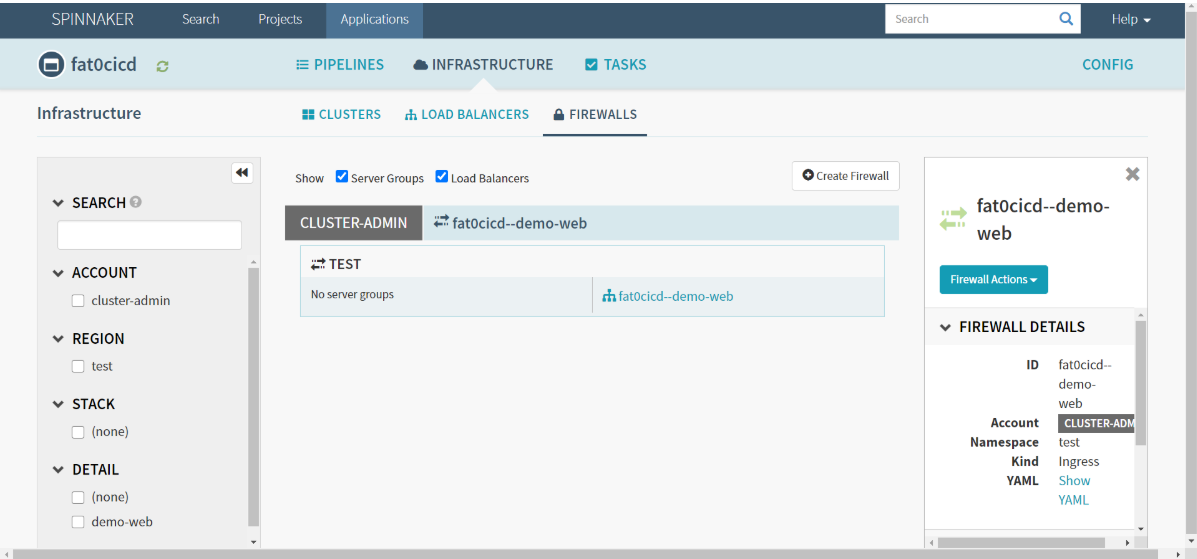

Create a service

- fat0cicd :LOAD BALANCERS

- Create Load Balancer

- Account : cluster-admin

- Namespace : test

- Detail : demo-web

- Target Port : 8080

- Create : then refresh the page

Create an ingress

- FIREWALLS : Create Firewall

- Create New Firewall

- Account : cluster-admin

- Namespace : test

- Detail : demo-web

- Rules : Add New Rule

- Host : demo-fat.op.com

- Add New Path

- Load Balancer : fat0cicd--demo-web

Path : /

Port : 80

- Create : then refresh the page

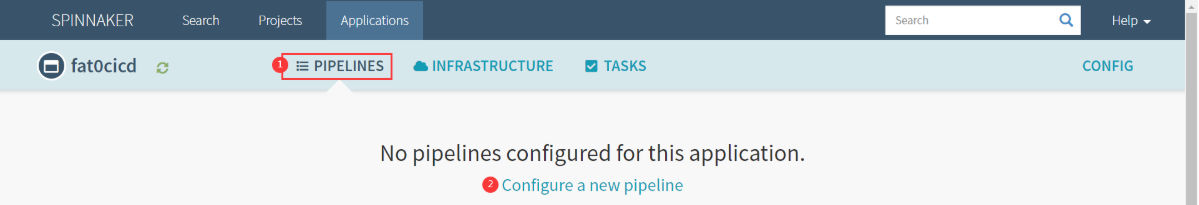

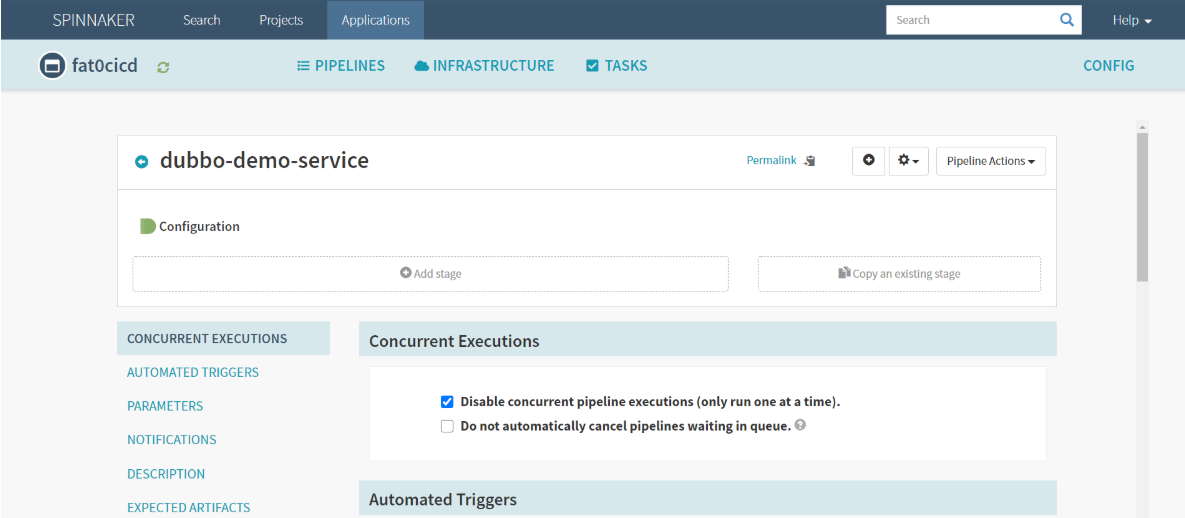

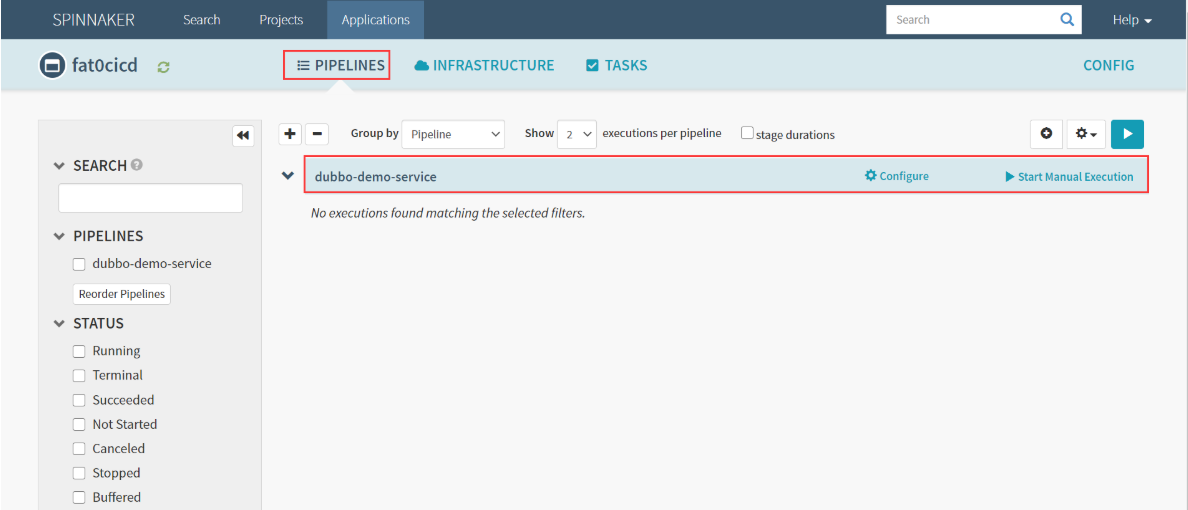

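Create a Pipeline

- PIPELINES

- Configure a new pipeline

- Type : Pipeline

Pipeline Name : dubbo-demo-service

- Create

- Parameters : Add Parameters (add four parameters; the pipeline is driven by them)

Add the following four parameters. This run builds and releases dubbo-demo-service, so the default project name and image name are essentially fixed.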

- name: app_name
  required: true
  default: dubbo-demo-service
  description: name of the project in the Git repository
- name: git_ver
  required: true
  description: project version, commit ID, or branch
- name: image_name
  required: true
  default: app/dubbo-demo-service
  description: image name, in the form repository/image
- name: add_tag
  required: true
  description: part of the tag, appended after git_ver, in the format YYYYmmdd_HHMM

Save : the saved parameters are written into jenkins.
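The `add_tag` value has to be typed in the `YYYYmmdd_HHMM` format, which `date` can produce directly. The sketch below also shows how the pieces could combine into a full image reference. The underscore between `git_ver` and `add_tag` and the helper names `make_add_tag` and `image_ref` are assumptions, since the text above only says that `add_tag` is appended after `git_ver`.

```shell
#!/usr/bin/env bash
# Print the current time as YYYYmmdd_HHMM, the format expected for add_tag.
make_add_tag() {
  date +%Y%m%d_%H%M
}

# image_ref REGISTRY IMAGE_NAME GIT_VER ADD_TAG
# Joins the pipeline parameters into REGISTRY/IMAGE_NAME:GIT_VER_ADD_TAG.
# The "_" separator is an assumption for illustration.
image_ref() {
  printf '%s/%s:%s_%s\n' "$1" "$2" "$3" "$4"
}
```

For example, `image_ref harbor.op.com app/dubbo-demo-service master "$(make_add_tag)"` prints the reference that a build started at that moment would be expected to produce under these assumptions.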

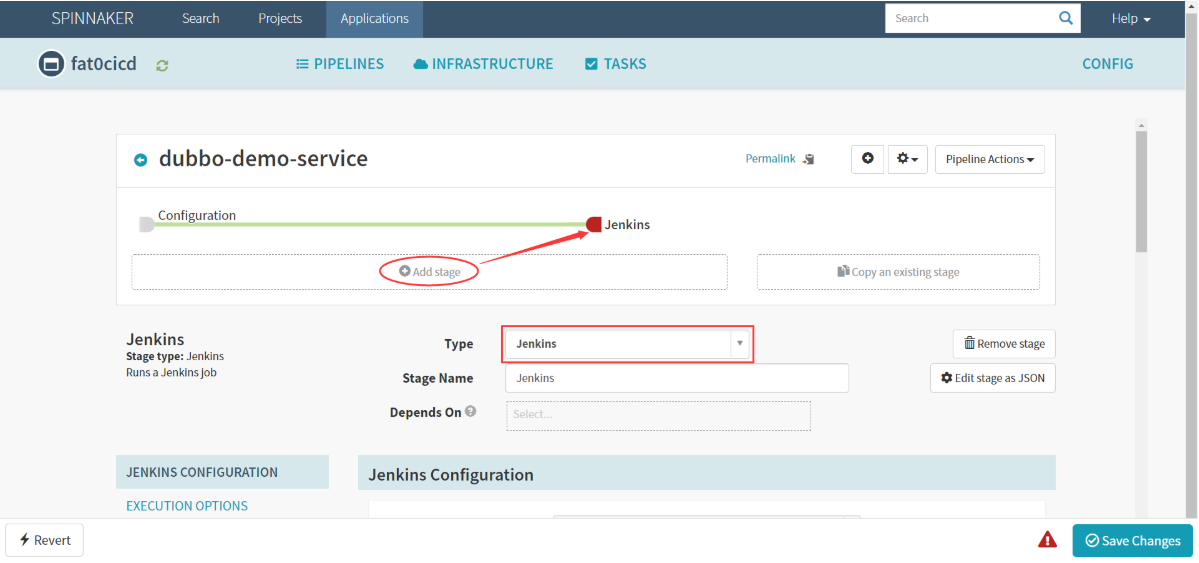

Create the Jenkins build stage

In a test environment, Jenkins is normally part of the pipeline; in production, the Jenkins stage is usually skipped.

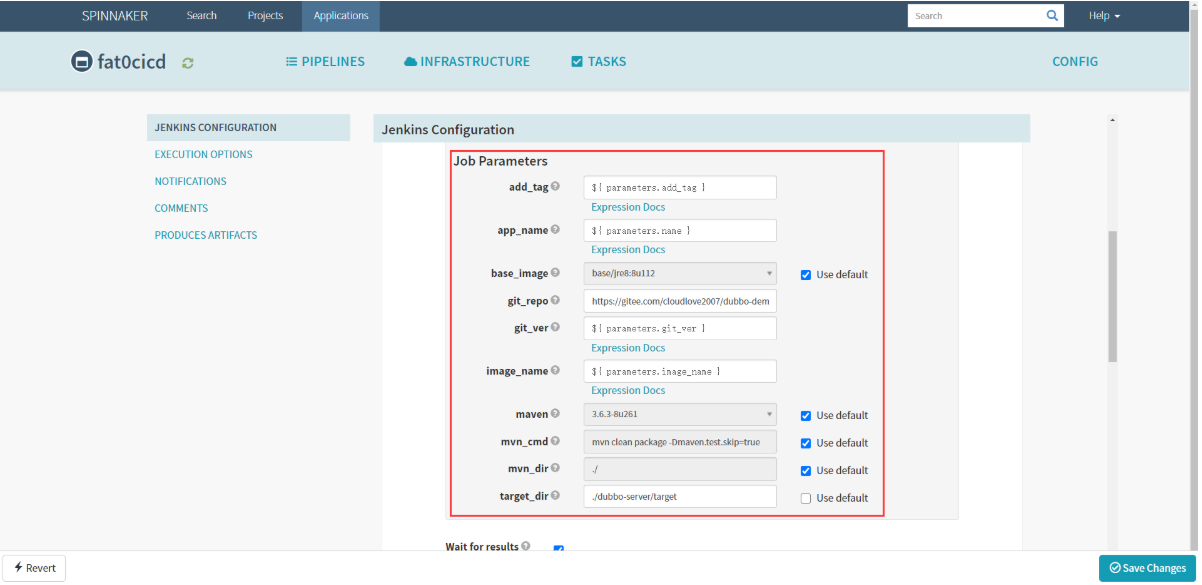

- Add stage : Jenkins

- Jenkins Configuration

- Specify the jenkins login user; Jenkins has now been loaded, and the parameterized-build options below it have been loaded as well

- Link the pipeline defined in jenkins

- Create the pipeline per service; each service only needs a few parameters changed at build time

| Option | Value |
| ---- | ---- |
| add_tag | ${ parameters.add_tag } |
| app_name | ${ parameters.app_name } |
| base_image | base/jre8:8u112 |
| git_repo | https://gitee.com/cloudlove2007/dubbo-demo-service.git |
| git_ver | ${ parameters.git_ver } |
| image_name | ${ parameters.image_name } |
| maven | 3.6.3-8u261 |
| mvn_cmd | check "Use default" |
| mvn_dir | ./ |
| target_dir | ./dubbo-server/target |

Save Changes

- Click PIPELINES

Run the pipeline

Omitted.

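Miscellaneous

- How is Spinnaker's account authentication system implemented?

- How are gray releases, canary releases, and blue-green releases currently implemented?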

default-config.yaml

kind: ConfigMap

apiVersion: v1

metadata:

name: default-config

namespace: armory

data:

barometer.yml: |

server:

port: 9092

spinnaker:

redis:

host: ${services.redis.host}

port: ${services.redis.port}

clouddriver-armory.yml: |

aws:

defaultAssumeRole: role/${SPINNAKER_AWS_DEFAULT_ASSUME_ROLE:SpinnakerManagedProfile}

accounts:

- name: default-aws-account

accountId: ${SPINNAKER_AWS_DEFAULT_ACCOUNT_ID:none}

client:

maxErrorRetry: 20

serviceLimits:

cloudProviderOverrides:

aws:

rateLimit: 15.0

implementationLimits:

AmazonAutoScaling:

defaults:

rateLimit: 3.0

AmazonElasticLoadBalancing:

defaults:

rateLimit: 5.0

security.basic.enabled: false

management.security.enabled: false

clouddriver-dev.yml: |

serviceLimits:

defaults:

rateLimit: 2

clouddriver.yml: |

server:

port: ${services.clouddriver.port:7002}

address: ${services.clouddriver.host:localhost}

redis:

connection: ${REDIS_HOST:redis://localhost:6379}

udf:

enabled: ${services.clouddriver.aws.udf.enabled:true}

udfRoot: /opt/spinnaker/config/udf

defaultLegacyUdf: false

default:

account:

env: ${providers.aws.primaryCredentials.name}

aws:

enabled: ${providers.aws.enabled:false}

defaults:

iamRole: ${providers.aws.defaultIAMRole:BaseIAMRole}

defaultRegions:

- name: ${providers.aws.defaultRegion:us-east-1}

defaultFront50Template: ${services.front50.baseUrl}

defaultKeyPairTemplate: ${providers.aws.defaultKeyPairTemplate}

azure:

enabled: ${providers.azure.enabled:false}

accounts:

- name: ${providers.azure.primaryCredentials.name}

clientId: ${providers.azure.primaryCredentials.clientId}

appKey: ${providers.azure.primaryCredentials.appKey}

tenantId: ${providers.azure.primaryCredentials.tenantId}

subscriptionId: ${providers.azure.primaryCredentials.subscriptionId}

google:

enabled: ${providers.google.enabled:false}

accounts:

- name: ${providers.google.primaryCredentials.name}

project: ${providers.google.primaryCredentials.project}

jsonPath: ${providers.google.primaryCredentials.jsonPath}

consul:

enabled: ${providers.google.primaryCredentials.consul.enabled:false}

cf:

enabled: ${providers.cf.enabled:false}

accounts:

- name: ${providers.cf.primaryCredentials.name}

api: ${providers.cf.primaryCredentials.api}

console: ${providers.cf.primaryCredentials.console}

org: ${providers.cf.defaultOrg}

space: ${providers.cf.defaultSpace}

username: ${providers.cf.account.name:}

password: ${providers.cf.account.password:}

kubernetes:

enabled: ${providers.kubernetes.enabled:false}

accounts:

- name: ${providers.kubernetes.primaryCredentials.name}

dockerRegistries:

- accountName: ${providers.kubernetes.primaryCredentials.dockerRegistryAccount}

openstack:

enabled: ${providers.openstack.enabled:false}

accounts:

- name: ${providers.openstack.primaryCredentials.name}

authUrl: ${providers.openstack.primaryCredentials.authUrl}

username: ${providers.openstack.primaryCredentials.username}

password: ${providers.openstack.primaryCredentials.password}

projectName: ${providers.openstack.primaryCredentials.projectName}

domainName: ${providers.openstack.primaryCredentials.domainName:Default}

regions: ${providers.openstack.primaryCredentials.regions}

insecure: ${providers.openstack.primaryCredentials.insecure:false}

userDataFile: ${providers.openstack.primaryCredentials.userDataFile:}

lbaas:

pollTimeout: 60

pollInterval: 5

dockerRegistry:

enabled: ${providers.dockerRegistry.enabled:false}

accounts:

- name: ${providers.dockerRegistry.primaryCredentials.name}

address: ${providers.dockerRegistry.primaryCredentials.address}

username: ${providers.dockerRegistry.primaryCredentials.username:}

passwordFile: ${providers.dockerRegistry.primaryCredentials.passwordFile}

    credentials:
      primaryAccountTypes: ${providers.aws.primaryCredentials.name}, ${providers.google.primaryCredentials.name}, ${providers.cf.primaryCredentials.name}, ${providers.azure.primaryCredentials.name}
      challengeDestructiveActionsEnvironments: ${providers.aws.primaryCredentials.name}, ${providers.google.primaryCredentials.name}, ${providers.cf.primaryCredentials.name}, ${providers.azure.primaryCredentials.name}

    spectator:
      applicationName: ${spring.application.name}
      webEndpoint:
        enabled: ${services.spectator.webEndpoint.enabled:false}
        prototypeFilter:
          path: ${services.spectator.webEndpoint.prototypeFilter.path:}
      stackdriver:
        enabled: ${services.stackdriver.enabled}
        projectName: ${services.stackdriver.projectName}
        credentialsPath: ${services.stackdriver.credentialsPath}

    stackdriver:
      hints:
        - name: controller.invocations
          labels:
            - account
            - region

dinghy.yml: ""

echo-armory.yml: |

    diagnostics:
      enabled: true
      id: ${ARMORY_ID:unknown}
    armorywebhooks:
      enabled: false
      forwarding:
        baseUrl: http://armory-dinghy:8081
        endpoint: v1/webhooks

echo-noncron.yml: |

    scheduler:
      enabled: false

echo.yml: |

    server:
      port: ${services.echo.port:8089}
      address: ${services.echo.host:localhost}

    cassandra:
      enabled: ${services.echo.cassandra.enabled:false}
      embedded: ${services.cassandra.embedded:false}
      host: ${services.cassandra.host:localhost}

    spinnaker:
      baseUrl: ${services.deck.baseUrl}
      cassandra:
        enabled: ${services.echo.cassandra.enabled:false}
      inMemory:
        enabled: ${services.echo.inMemory.enabled:true}

    front50:
      baseUrl: ${services.front50.baseUrl:http://localhost:8080}

    orca:
      baseUrl: ${services.orca.baseUrl:http://localhost:8083}

    endpoints.health.sensitive: false

    slack:
      enabled: ${services.echo.notifications.slack.enabled:false}
      token: ${services.echo.notifications.slack.token}

    spring:
      mail:
        host: ${mail.host}

    mail:
      enabled: ${services.echo.notifications.mail.enabled:false}
      host: ${services.echo.notifications.mail.host}
      from: ${services.echo.notifications.mail.fromAddress}

    hipchat:
      enabled: ${services.echo.notifications.hipchat.enabled:false}
      baseUrl: ${services.echo.notifications.hipchat.url}
      token: ${services.echo.notifications.hipchat.token}

    twilio:
      enabled: ${services.echo.notifications.sms.enabled:false}
      baseUrl: ${services.echo.notifications.sms.url:https://api.twilio.com/}
      account: ${services.echo.notifications.sms.account}
      token: ${services.echo.notifications.sms.token}
      from: ${services.echo.notifications.sms.from}

    scheduler:
      enabled: ${services.echo.cron.enabled:true}
      threadPoolSize: 20
      triggeringEnabled: true
      pipelineConfigsPoller:
        enabled: true
        pollingIntervalMs: 30000
      cron:
        timezone: ${services.echo.cron.timezone}

    spectator:
      applicationName: ${spring.application.name}
      webEndpoint:
        enabled: ${services.spectator.webEndpoint.enabled:false}
        prototypeFilter:
          path: ${services.spectator.webEndpoint.prototypeFilter.path:}
      stackdriver:
        enabled: ${services.stackdriver.enabled}
        projectName: ${services.stackdriver.projectName}
        credentialsPath: ${services.stackdriver.credentialsPath}

    webhooks:
      artifacts:
        enabled: true

fetch.sh: |+

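    # Directory that the Spinnaker service reads its configuration from.
    CONFIG_LOCATION=${SPINNAKER_HOME:-"/opt/spinnaker"}/config
    # Container name passed as the first argument; the script checks for "gate" and "nginx".
    CONTAINER=$1

    # Remove old *.yml files, then copy the defaults followed by any custom overrides.
    rm -f /opt/spinnaker/config/*.yml
    mkdir -p ${CONFIG_LOCATION}

    for filename in /opt/spinnaker/config/default/*.yml; do
        cp $filename ${CONFIG_LOCATION}
    done

    if [ -d /opt/spinnaker/config/custom ]; then
        for filename in /opt/spinnaker/config/custom/*; do
            cp $filename ${CONFIG_LOCATION}
        done
    fi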

    # Import a certificate into a Java keystore under the given alias.
    # Arguments: <certificate path> <keystore path> <alias>
    add_ca_certs() {
        ca_cert_path="$1"
        jks_path="$2"
        alias="$3"

        # Skip silently when not running as root.
        if [[ "$(whoami)" != "root" ]]; then
            echo "INFO: I do not have proper permissions to add CA roots"
            return
        fi

        if [[ ! -f ${ca_cert_path} ]]; then
            echo "INFO: No CA cert found at ${ca_cert_path}"
            return
        fi

        keytool -importcert \
            -file ${ca_cert_path} \
            -keystore ${jks_path} \
            -alias ${alias} \
            -storepass changeit \
            -noprompt
    }

    if [ `which keytool` ]; then
        echo "INFO: Keytool found adding certs where appropriate"
        add_ca_certs "${CONFIG_LOCATION}/ca.crt" "/etc/ssl/certs/java/cacerts" "custom-ca"
    else
        echo "INFO: Keytool not found, not adding any certs/private keys"
    fi

    saml_pem_path="/opt/spinnaker/config/custom/saml.pem"
    saml_pkcs12_path="/tmp/saml.pkcs12"
    saml_jks_path="${CONFIG_LOCATION}/saml.jks"
    x509_ca_cert_path="/opt/spinnaker/config/custom/x509ca.crt"
    x509_client_cert_path="/opt/spinnaker/config/custom/x509client.crt"
    x509_jks_path="${CONFIG_LOCATION}/x509.jks"
    x509_nginx_cert_path="/opt/nginx/certs/ssl.crt"

    if [ "${CONTAINER}" == "gate" ]; then
        # SAML: convert the PEM file to PKCS12, then import it into a JKS keystore.
        if [ -f ${saml_pem_path} ]; then
            echo "Loading ${saml_pem_path} into ${saml_jks_path}"
            openssl pkcs12 -export -out ${saml_pkcs12_path} -in ${saml_pem_path} -password pass:changeit -name saml
            keytool -genkey -v -keystore ${saml_jks_path} -alias saml \
                -keyalg RSA -keysize 2048 -validity 10000 \
                -storepass changeit -keypass changeit -dname "CN=armory"
            keytool -importkeystore \
                -srckeystore ${saml_pkcs12_path} \
                -srcstoretype PKCS12 \
                -srcstorepass changeit \
                -destkeystore ${saml_jks_path} \
                -deststoretype JKS \
                -storepass changeit \
                -alias saml \
                -destalias saml \
                -noprompt
        else
            echo "No SAML IDP pemfile found at ${saml_pem_path}"
        fi

        # x509: import the CA certificate under the alias "ca".
        if [ -f ${x509_ca_cert_path} ]; then
            echo "Loading ${x509_ca_cert_path} into ${x509_jks_path}"
            add_ca_certs ${x509_ca_cert_path} ${x509_jks_path} "ca"
        else
            echo "No x509 CA cert found at ${x509_ca_cert_path}"
        fi

        # x509: import the client certificate under the alias "client".
        if [ -f ${x509_client_cert_path} ]; then
            echo "Loading ${x509_client_cert_path} into ${x509_jks_path}"
            add_ca_certs ${x509_client_cert_path} ${x509_jks_path} "client"
        else
            echo "No x509 Client cert found at ${x509_client_cert_path}"
        fi

        # If an nginx certificate exists, generate a key pair valid for 360 days
        # in keystore.jks and import it into the x509 keystore under the alias "server".
        if [ -f ${x509_nginx_cert_path} ]; then
            echo "Creating a self-signed CA (EXPIRES IN 360 DAYS) with java keystore: ${x509_jks_path}"
            echo -e "\n\n\n\n\n\ny\n" | keytool -genkey -keyalg RSA -alias server -keystore keystore.jks -storepass changeit -validity 360 -keysize 2048
            keytool -importkeystore \
                -srckeystore keystore.jks \
                -srcstorepass changeit \
                -destkeystore "${x509_jks_path}" \
                -storepass changeit \
                -srcalias server \
                -destalias server \
                -noprompt
        else
            echo "No x509 nginx cert found at ${x509_nginx_cert_path}"
        fi
    fi

    # For the nginx container, install the bundled nginx.conf if one is provided.
    if [ "${CONTAINER}" == "nginx" ]; then
        nginx_conf_path="/opt/spinnaker/config/default/nginx.conf"
        if [ -f ${nginx_conf_path} ]; then
            cp ${nginx_conf_path} /etc/nginx/nginx.conf
        fi
    fi

fiat.yml: |-

    server:
      port: ${services.fiat.port:7003}
      address: ${services.fiat.host:localhost}

    redis:
      connection: ${services.redis.connection:redis://localhost:6379}

    spectator:
      applicationName: ${spring.application.name}
      webEndpoint:
        enabled: ${services.spectator.webEndpoint.enabled:false}
        prototypeFilter:
          path: ${services.spectator.webEndpoint.prototypeFilter.path:}
      stackdriver:
        enabled: ${services.stackdriver.enabled}
        projectName: ${services.stackdriver.projectName}
        credentialsPath: ${services.stackdriver.credentialsPath}

    hystrix:
      command:
        default.execution.isolation.thread.timeoutInMilliseconds: 20000

    logging:
      level:
        com.netflix.spinnaker.fiat: DEBUG

front50-armory.yml: |

    spinnaker:
      redis:
        enabled: true
        host: redis

front50.yml: |

    server:
      port: ${services.front50.port:8080}
      address: ${services.front50.host:localhost}

    hystrix:
      command:
        default.execution.isolation.thread.timeoutInMilliseconds: 15000

    cassandra:
      enabled: ${services.front50.cassandra.enabled:false}
      embedded: ${services.cassandra.embedded:false}
      host: ${services.cassandra.host:localhost}

    aws:
      simpleDBEnabled: ${providers.aws.simpleDBEnabled:false}
      defaultSimpleDBDomain: ${providers.aws.defaultSimpleDBDomain}

spinnaker:

cassandra: