k8s-centos8u2 cluster: delivering dubbo microservices in practice

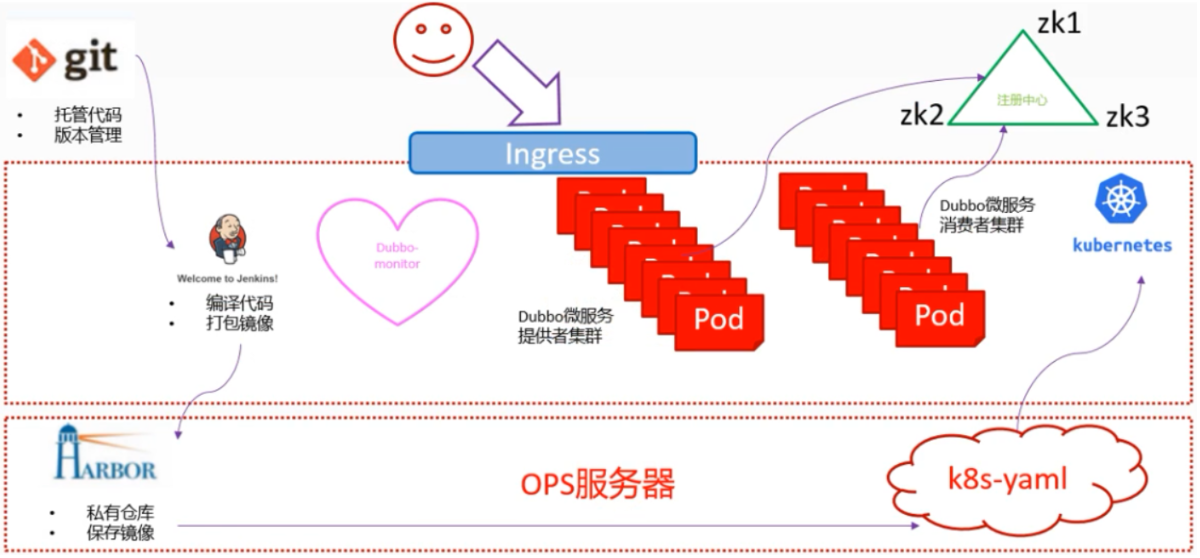

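Lab architecture

This diagram is for learning purposes only.

- The top row shows the services outside the K8S cluster

1.1 The code repository is gitee, which is git-based

1.2 The registry is a cluster of 3 zk nodes

1.3 Users access the services that ingress exposes

- The middle layer shows the services inside the K8S cluster

2.1 jenkins runs as a container; its data directory is persisted on a shared disk

2.2 The whole set of dubbo microservices is delivered as PODs that communicate through the zk cluster

2.3 Services that need external access are exposed through ingress

- The bottom layer is the ops host layer

3.1 harbor is the private docker registry that stores the docker images

3.2 The POD-related yaml files are created in a dedicated directory on the ops host

3.3 Inside the K8S cluster, the yaml configuration is applied through download links served by nginx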

| Hostname | Role | IP |
|---|---|---|
| vms11.cos.com | k8s proxy node 1, zk1 | 192.168.26.11 |
| vms12.cos.com | k8s proxy node 2, zk2 | 192.168.26.12 |
| vms21.cos.com | k8s compute node 1, zk3 | 192.168.26.21 |
| vms22.cos.com | k8s compute node 2, jenkins | 192.168.26.22 |
| vms200.cos.com | k8s ops node (docker registry) | 192.168.26.200 |

zookeeper

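A ZK cluster is a stateful service. It elects its leader by majority vote, much as etcd does, so the cluster should have an odd number of nodes, and at least 3.

| Host | IP address | Role |
|---|---|---|
| vms11 | 192.168.26.11 | zk1 |
| vms12 | 192.168.26.12 | zk2 |
| vms21 | 192.168.26.21 | zk3 |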
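The odd-node recommendation follows from majority voting: an ensemble stays available only while more than half of its nodes are up. The sketch below is plain arithmetic, not part of ZooKeeper itself, and the function names `zk_quorum` and `zk_tolerated_failures` are made up for illustration. It shows that a fourth node raises the quorum size without letting the cluster survive any additional failure.

```shell
#!/bin/sh
# Majority quorum for an ensemble of N voting nodes: more than half of them.
zk_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of node failures the ensemble can survive while keeping a quorum.
zk_tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

# Compare a few ensemble sizes.
for n in 3 4 5; do
    echo "nodes=$n quorum=$(zk_quorum "$n") tolerated_failures=$(zk_tolerated_failures "$n")"
done
```

With 3 nodes the cluster tolerates 1 failure, and with 4 nodes it still tolerates only 1, which is why the three-host layout in the table is the smallest useful size.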

Install jdk8 (on the 3 zk hosts)

jdk download page: https://www.oracle.com/java/technologies/javase-downloads.html

The steps use vms11 as the example; the other nodes are identical.

    ##1 Download, extract, create a symlink
    [root@vms11 ~]# cd /opt/src
    [root@vms11 src]# ls -l|grep jdk
    -rw-r--r-- 1 root root 143111803 Jul 28 16:09 jdk-8u261-linux-x64.tar.gz
    [root@vms11 src]# mkdir /usr/java
    [root@vms11 src]# tar xf jdk-8u261-linux-x64.tar.gz -C /usr/java
    [root@vms11 src]# ls -l /usr/java
    total 0
    drwxr-xr-x 8 10143 10143 273 Jun 18 14:59 jdk1.8.0_261
    [root@vms11 src]# ln -s /usr/java/jdk1.8.0_261 /usr/java/jdk
    ##2 Configure
    [root@vms11 src]# vi /etc/profile   # append the following 3 lines at the end
    export JAVA_HOME=/usr/java/jdk
    export PATH=$JAVA_HOME/bin:$PATH
    export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar
    ##3 Apply and verify
    [root@vms11 src]# source /etc/profile   # make the environment variables take effect
    [root@vms11 src]# java -version
    java version "1.8.0_261"
    Java(TM) SE Runtime Environment (build 1.8.0_261-b12)
    Java HotSpot(TM) 64-Bit Server VM (build 25.261-b12, mixed mode)

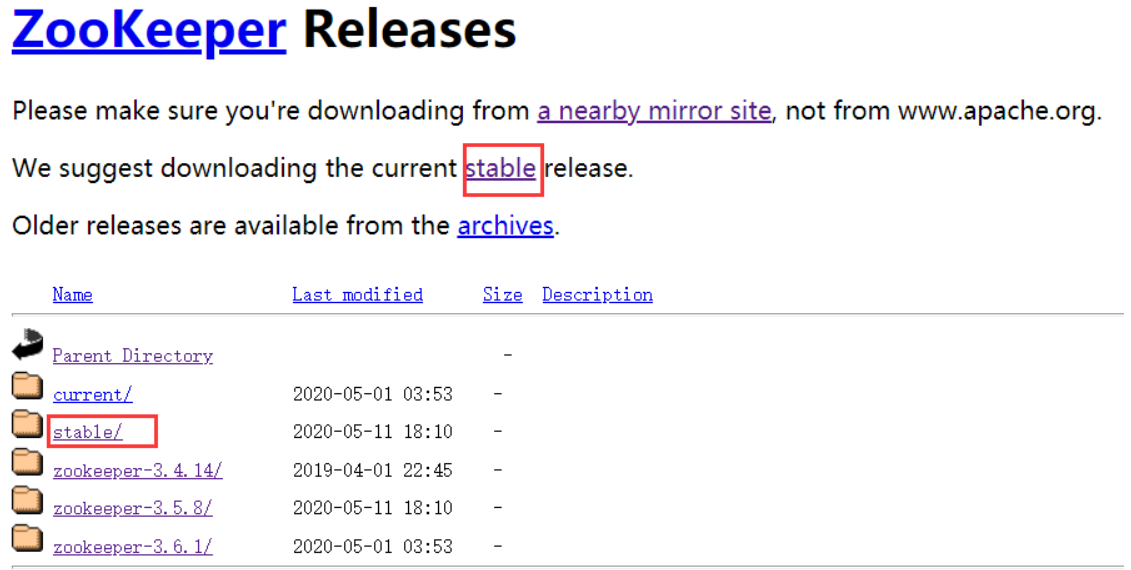

Install zookeeper (on the 3 zk hosts)

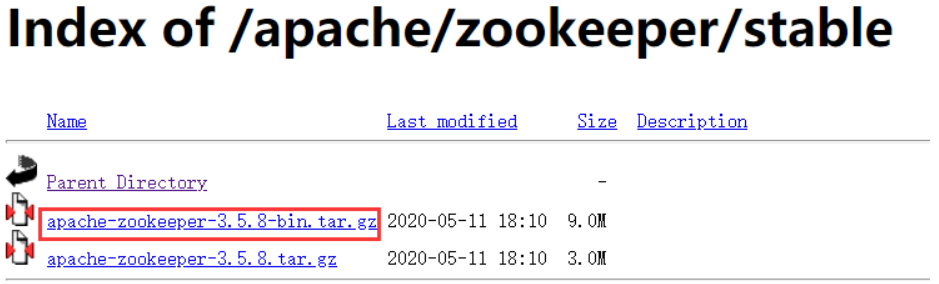

Download the software

- Choose a mirror site
- Choose `stable`
- Choose `apache-zookeeper-3.5.8-bin.tar.gz`

Extract and configure

The steps use vms11 as the example; the other nodes are identical.

    ##1 Put the downloaded archive in /opt/src, extract it, create a symlink
    [root@vms11 ~]# cd /opt/src
    [root@vms11 src]# ls -l|grep zoo
    -rw-r--r-- 1 root root 9394700 Jul 28 16:35 apache-zookeeper-3.5.8-bin.tar.gz
    [root@vms11 src]# tar xf apache-zookeeper-3.5.8-bin.tar.gz -C /opt
    [root@vms11 src]# ls -l /opt
    total 0
    drwxr-xr-x 6 root root 134 Jul 28 16:36 apache-zookeeper-3.5.8-bin
    drwxr-xr-x 2 root root 81 Jul 28 16:35 src
    [root@vms11 src]# ln -s /opt/apache-zookeeper-3.5.8-bin /opt/zookeeper
    ##2 Create the directories
    [root@vms11 src]# mkdir -pv /data/zookeeper/data /data/zookeeper/logs
    mkdir: created directory '/data'
    mkdir: created directory '/data/zookeeper'
    mkdir: created directory '/data/zookeeper/data'
    mkdir: created directory '/data/zookeeper/logs'
    ##3 Create the configuration file /opt/zookeeper/conf/zoo.cfg
    [root@vms11 src]# vi /opt/zookeeper/conf/zoo.cfg
    [root@vms11 src]# cat /opt/zookeeper/conf/zoo.cfg
    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data/zookeeper/data
    dataLogDir=/data/zookeeper/logs
    clientPort=2181
    server.1=zk1.op.com:2888:3888
    server.2=zk2.op.com:2888:3888
    server.3=zk3.op.com:2888:3888
    ##4 Set myid: 1 on vms11, 2 on vms12, 3 on vms21
    [root@vms11 src]# vi /data/zookeeper/data/myid
    [root@vms11 src]# cat /data/zookeeper/data/myid
    1

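Note: zoo.cfg is identical on every node. Only myid differs: 1 on vms11, 2 on vms12, 3 on vms21. Each value must match the number N of that host's server.N line in zoo.cfg.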
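Because myid is the only per-host difference, it is easy to get wrong when the file is typed by hand on three machines. A minimal sketch of deriving it from the short hostname follows; the helper names `myid_for_host` and `write_myid` are hypothetical, and the host-to-id mapping is simply the one used in this lab.

```shell
#!/bin/sh
# Map a short hostname to its ZooKeeper server id (mapping taken from this lab).
myid_for_host() {
    case "$1" in
        vms11) echo 1 ;;
        vms12) echo 2 ;;
        vms21) echo 3 ;;
        *) echo "unknown zk host: $1" >&2; return 1 ;;
    esac
}

# Write the id into <data_dir>/myid. The directory defaults to the dataDir
# configured in zoo.cfg above.
write_myid() {
    wm_host=$1
    wm_dir=${2:-/data/zookeeper/data}
    wm_id=$(myid_for_host "$wm_host") || return 1
    printf '%s\n' "$wm_id" > "$wm_dir/myid"
}
```

On each zk host this could be called as `write_myid "$(hostname -s)"`, assuming the short hostname matches the names in the table.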

Set up dns resolution

On vms11.cos.com

    ##1 Add the records
    [root@vms11 ~]# vi /var/named/op.com.zone   # append the following 3 lines at the end, and remember to increment the serial number `serial`
    ...
    zk1 A 192.168.26.11
    zk2 A 192.168.26.12
    zk3 A 192.168.26.21
    ##2 Restart and verify
    [root@vms11 ~]# systemctl restart named
    [root@vms11 ~]# dig -t A zk1.op.com +short
    192.168.26.11
    [root@vms11 ~]# dig -t A zk3.op.com @192.168.26.11 +short
    192.168.26.21
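The serial number has to grow with every zone edit. The zone file itself is not shown here, so the following is only a sketch under the assumption that the serial follows the common YYYYMMDDNN convention; `next_serial` is a hypothetical helper, not a BIND tool.

```shell
#!/bin/sh
# Compute the next zone serial, assuming the YYYYMMDDNN convention.
#   $1 = current serial, $2 = today's date as YYYYMMDD
# Uses <today>01 when that is larger than the current serial,
# otherwise just increments the current serial by one.
next_serial() {
    ns_current=$1
    ns_candidate="${2}01"
    if [ "$ns_candidate" -gt "$ns_current" ]; then
        echo "$ns_candidate"
    else
        echo $(( ns_current + 1 ))
    fi
}

# Second edit on the same day, then the first edit on a later day.
next_serial 2020080601 20200806
next_serial 2020072803 20200806
```

In practice the second argument would come from `date +%Y%m%d`. Whatever scheme is used, the only hard requirement is that the new value is larger than the old one.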

Start zk on each node in turn

    ##1 Start on vms11
    [root@vms11 ~]# /opt/zookeeper/bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ##2 Start on vms12
    [root@vms12 src]# /opt/zookeeper/bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ##3 Start on vms21
    [root@vms21 src]# /opt/zookeeper/bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

Verify that zk started

Check the three nodes: exactly one should report leader and the others follower.

    [root@vms11 ~]# /opt/zookeeper/bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: follower
    [root@vms12 src]# /opt/zookeeper/bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: leader
    [root@vms21 src]# /opt/zookeeper/bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: follower
    [root@vms11 ~]# ss -ln|grep 2181
    tcp LISTEN 0 50 *:2181 *:*
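The "one leader, the rest followers" check can be scripted by parsing the `Mode:` line of the status output shown above. This is a sketch only: `zk_mode` and `exactly_one_leader` are hypothetical helper names, and the parsing assumes the output format printed by this zookeeper version.

```shell
#!/bin/sh
# Read `zkServer.sh status` output on stdin and print the value of "Mode:".
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Succeed only if exactly one of the given modes is "leader".
exactly_one_leader() {
    eol_leaders=0
    for eol_mode in "$@"; do
        if [ "$eol_mode" = "leader" ]; then
            eol_leaders=$(( eol_leaders + 1 ))
        fi
    done
    [ "$eol_leaders" -eq 1 ]
}
```

On a zk host the mode could be captured with `/opt/zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode`, and the three collected values passed to `exactly_one_leader`.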

jenkins

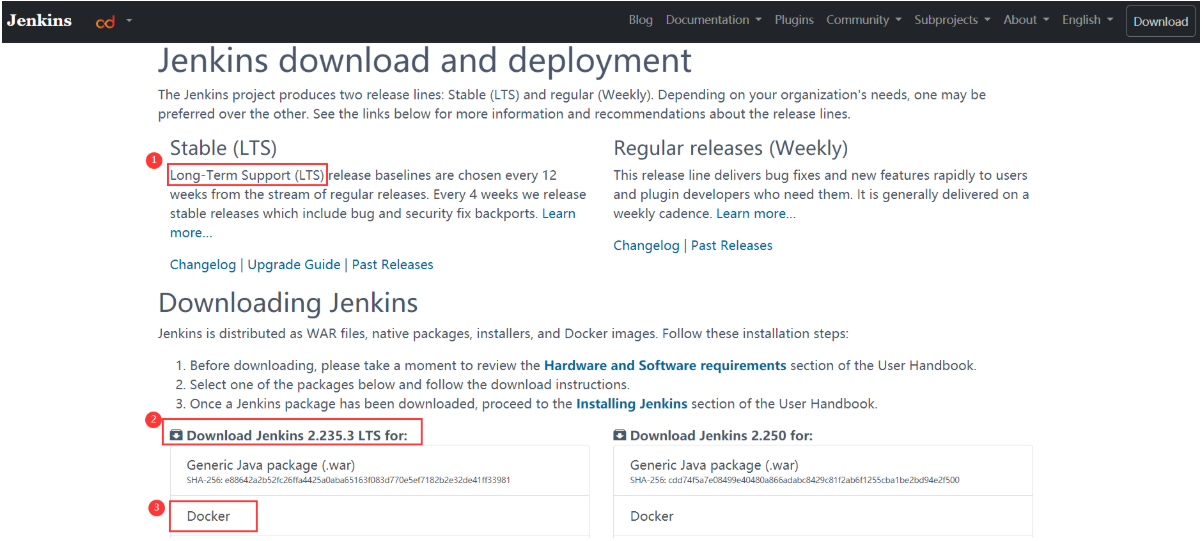

Download the base image

jenkins official site: https://www.jenkins.io/download/

On the ops host vms200, download the stable Long-Term Support (LTS) release listed on the official site.

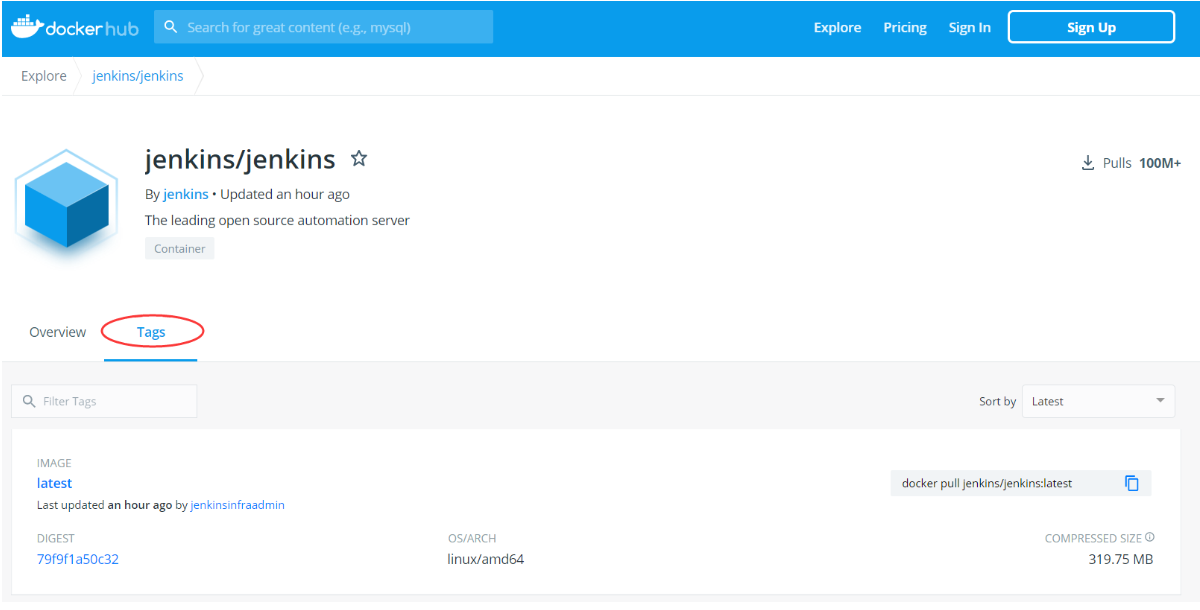

Click Docker to jump to the image page: https://hub.docker.com/r/jenkins/jenkins

Select Tags.

Download 2.235.3 (the latest version at the time of writing). Enter 2.235 in the search box to find it.

    docker pull jenkins/jenkins:2.235.3

Push the image to the private registry:

    [root@vms200 ~]# docker images |grep jenkins
    jenkins/jenkins 2.235.3 135a0d19f757 35 hours ago 667MB
    [root@vms200 ~]# docker tag jenkins/jenkins:2.235.3 harbor.op.com/public/jenkins:2.235.3
    [root@vms200 ~]# docker push harbor.op.com/public/jenkins:2.235.3

This lab builds a custom jenkins Docker image on top of the jenkins base image.

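Custom Dockerfile

Edit the custom Dockerfile on the ops host vms200.cos.com

Dockerfile

    [root@vms200 ~]# mkdir -p /data/dockerfile/jenkins/
    [root@vms200 ~]# cd /data/dockerfile/jenkins/
    [root@vms200 jenkins]# vi Dockerfile

    FROM harbor.op.com/public/jenkins:2.235.3
    # Run jenkins as the root user
    USER root
    # Set the time zone to UTC+8
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
        echo 'Asia/Shanghai' >/etc/timezone
    # Add the user's private key; needed to pull the dubbo code over ssh
    ADD id_rsa /root/.ssh/id_rsa
    # Add the ops host's docker config file, which holds the harbor login credentials
    ADD config.json /root/.docker/config.json
    # Install the docker client inside the jenkins container; the docker engine in use is the host's
    ADD get-docker.sh /get-docker.sh
    # Skip the interactive "yes" prompt of ssh, then run the docker install
    RUN echo " StrictHostKeyChecking no" >/etc/ssh/ssh_config &&\
        /get-docker.sh

This Dockerfile mainly does the following:

- Sets root as the user the container runs as
- Sets the time zone inside the container to UTC+8
- Adds the ssh private key (needed to pull git code over ssh; the matching public key must be registered in gitee)
- Adds the config file that holds the login for the self-hosted harbor registry
- Changes the ssh client configuration
- Installs a docker client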

ssh key id_rsa

Generate the ssh key pair:

    [root@vms200 jenkins]# ssh-keygen -t rsa -b 2048 -C "swbook@189.cn" -N "" -f /root/.ssh/id_rsa
    Generating public/private rsa key pair.
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:3gOWhpb2assSyD9ajkAsEq+SvHYTYIh5euGBHB/VoQ8 swbook@189.cn
    The key's randomart image is:
    +---[RSA 2048]----+
    | ..... |
    | . . .. |
    |=oo .E |
    |**+. oo . |
    |+Bo+ =.S |
    |*o=..o = o |
    |=o .o. o o |
    |.o.*+... . |
    |..+.o++. |
    +----[SHA256]-----+
    [root@vms200 jenkins]# ls -l /root/.ssh/id_rsa
    -rw------- 1 root root 1823 Aug 6 16:00 /root/.ssh/id_rsa
    [root@vms200 jenkins]# cp /root/.ssh/id_rsa /data/dockerfile/jenkins/
    [root@vms200 jenkins]# ls -l
    total 8
    -rw-r--r-- 1 root root 728 Aug 6 15:17 Dockerfile
    -rw------- 1 root root 1823 Aug 6 16:00 id_rsa

- Replace the e-mail address with your own
- After creating the key pair, remember to add the public key to the trusted keys in gitee

Print the public key:

    [root@vms200 ~]# cat /root/.ssh/id_rsa.pub
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDHcFe00ZIjBwckjJ1pIlUJZZESb1WQ8bdeLGZ6+OFBZHyOcsEU9iBLgXsDYqaxWzF/Eb8GR5GnQnEhaKYnxPj81sUJoE8JmVgTgThevm6UZMZblEy/KZtMCB2Y42SJwkfDCm0tGScetnP8IcZLZ2mYyt308mJPnZu61JlhwHIdUKqcy4HfO4sRoKlh+Fh67tjpM/1snmA0RlJ7bXmWV02i1G5j1muT2ObEHO4nVHeCstIPgIgPtVHR8Mndl9f5ambVaB+jPUKHfkQIl2kk+4fQ/8xwSYTQ+oowWiAkm8hIxQeix4pqtsW91qHfHxnbbKNID6bynemxopesV9+Olzex swbook@189.cn

config.json

Copy the config.json file (into the directory /data/dockerfile/jenkins):

    [root@vms200 jenkins]# cp ~/.docker/config.json ./   # docker login credentials

get-docker.sh

Get the get-docker.sh script from https://get.docker.com (into the directory /data/dockerfile/jenkins).

If neither of the two methods below can download it, open the URL in a browser and copy the content.

- Download method 1: wget -O get-docker.sh https://get.docker.com
- Download method 2: curl -fsSL get.docker.com -o /data/dockerfile/jenkins/get-docker.sh

The content of get-docker.sh is as follows:

    #!/bin/sh
    set -e
    # Docker CE for Linux installation script
    #
    # See https://docs.docker.com/install/ for the installation steps.
    #
    # This script is meant for quick & easy install via:
    # $ curl -fsSL https://get.docker.com -o get-docker.sh
    # $ sh get-docker.sh
    #
    # For test builds (ie. release candidates):
    # $ curl -fsSL https://test.docker.com -o test-docker.sh
    # $ sh test-docker.sh
    #
    # NOTE: Make sure to verify the contents of the script
    # you downloaded matches the contents of install.sh
    # located at https://github.com/docker/docker-install
    # before executing.
    #
    # Git commit from https://github.com/docker/docker-install when
    # the script was uploaded (Should only be modified by upload job):
    SCRIPT_COMMIT_SHA="26ff363bcf3b3f5a00498ac43694bf1c7d9ce16c"

    # The channel to install from:
    # * nightly
    # * test
    # * stable
    # * edge (deprecated)
    DEFAULT_CHANNEL_VALUE="stable"
    if [ -z "$CHANNEL" ]; then
      CHANNEL=$DEFAULT_CHANNEL_VALUE
    fi

    DEFAULT_DOWNLOAD_URL="https://download.docker.com"
    if [ -z "$DOWNLOAD_URL" ]; then
      DOWNLOAD_URL=$DEFAULT_DOWNLOAD_URL
    fi

    DEFAULT_REPO_FILE="docker-ce.repo"
    if [ -z "$REPO_FILE" ]; then
      REPO_FILE="$DEFAULT_REPO_FILE"
    fi

    mirror=''
    DRY_RUN=${DRY_RUN:-}
    while [ $# -gt 0 ]; do
      case "$1" in
        --mirror)
          mirror="$2"
          shift
          ;;
        --dry-run)
          DRY_RUN=1
          ;;
        --*)
          echo "Illegal option $1"
          ;;
      esac
      shift $(( $# > 0 ? 1 : 0 ))
    done

    case "$mirror" in
      Aliyun)
        DOWNLOAD_URL="https://mirrors.aliyun.com/docker-ce"
        ;;
      AzureChinaCloud)
        DOWNLOAD_URL="https://mirror.azure.cn/docker-ce"
        ;;
    esac

    command_exists() {
      command -v "$@" > /dev/null 2>&1
    }

    is_dry_run() {
      if [ -z "$DRY_RUN" ]; then
        return 1
      else
        return 0
      fi
    }

    is_wsl() {
      case "$(uname -r)" in
      *microsoft* ) true ;; # WSL 2
      *Microsoft* ) true ;; # WSL 1
      * ) false;;
      esac
    }

    is_darwin() {
      case "$(uname -s)" in
      *darwin* ) true ;;
      *Darwin* ) true ;;
      * ) false;;
      esac
    }

    deprecation_notice() {
      distro=$1
      date=$2
      echo
      echo "DEPRECATION WARNING:"
      echo " The distribution, $distro, will no longer be supported in this script as of $date."
      echo " If you feel this is a mistake please submit an issue at https://github.com/docker/docker-install/issues/new"
      echo
      sleep 10
    }

    get_distribution() {
      lsb_dist=""
      # Every system that we officially support has /etc/os-release
      if [ -r /etc/os-release ]; then
        lsb_dist="$(. /etc/os-release && echo "$ID")"
      fi
      # Returning an empty string here should be alright since the
      # case statements don't act unless you provide an actual value
      echo "$lsb_dist"
    }

    add_debian_backport_repo() {
      debian_version="$1"
      backports="deb http://ftp.debian.org/debian $debian_version-backports main"
      if ! grep -Fxq "$backports" /etc/apt/sources.list; then
        (set -x; $sh_c "echo \"$backports\" >> /etc/apt/sources.list")
      fi
    }

    echo_docker_as_nonroot() {
      if is_dry_run; then
        return
      fi
      if command_exists docker && [ -e /var/run/docker.sock ]; then
        (
          set -x
          $sh_c 'docker version'
        ) || true
      fi
      your_user=your-user
      [ "$user" != 'root' ] && your_user="$user"
      # intentionally mixed spaces and tabs here -- tabs are stripped by "<<-EOF", spaces are kept in the output
      echo "If you would like to use Docker as a non-root user, you should now consider"
      echo "adding your user to the \"docker\" group with something like:"
      echo
      echo " sudo usermod -aG docker $your_user"
      echo
      echo "Remember that you will have to log out and back in for this to take effect!"
      echo
      echo "WARNING: Adding a user to the \"docker\" group will grant the ability to run"
      echo " containers which can be used to obtain root privileges on the"
      echo " docker host."
      echo " Refer to https://docs.docker.com/engine/security/security/#docker-daemon-attack-surface"
      echo " for more information."
    }

    # Check if this is a forked Linux distro
    check_forked() {
      # Check for lsb_release command existence, it usually exists in forked distros
      if command_exists lsb_release; then
        # Check if the `-u` option is supported
        set +e
        lsb_release -a -u > /dev/null 2>&1
        lsb_release_exit_code=$?
        set -e
        # Check if the command has exited successfully, it means we're in a forked distro
        if [ "$lsb_release_exit_code" = "0" ]; then
          # Print info about current distro
          cat <<-EOF
    You're using '$lsb_dist' version '$dist_version'.
    EOF
          # Get the upstream release info
          lsb_dist=$(lsb_release -a -u 2>&1 | tr '[:upper:]' '[:lower:]' | grep -E 'id' | cut -d ':' -f 2 | tr -d '[:space:]')
          dist_version=$(lsb_release -a -u 2>&1 | tr '[:upper:]' '[:lower:]' | grep -E 'codename' | cut -d ':' -f 2 | tr -d '[:space:]')
          # Print info about upstream distro
          cat <<-EOF
    Upstream release is '$lsb_dist' version '$dist_version'.
    EOF
        else
          if [ -r /etc/debian_version ] && [ "$lsb_dist" != "ubuntu" ] && [ "$lsb_dist" != "raspbian" ]; then
            if [ "$lsb_dist" = "osmc" ]; then
              # OSMC runs Raspbian
              lsb_dist=raspbian
            else
              # We're Debian and don't even know it!
              lsb_dist=debian
            fi
            dist_version="$(sed 's/\/.*//' /etc/debian_version | sed 's/\..*//')"
            case "$dist_version" in
              10)
                dist_version="buster"
                ;;
              9)
                dist_version="stretch"
                ;;
              8|'Kali Linux 2')
                dist_version="jessie"
                ;;
            esac
          fi
        fi
      fi
    }

    semverParse() {
      major="${1%%.*}"
      minor="${1#$major.}"
      minor="${minor%%.*}"
      patch="${1#$major.$minor.}"
      patch="${patch%%[-.]*}"
    }

    do_install() {
      echo "# Executing docker install script, commit: $SCRIPT_COMMIT_SHA"

      if command_exists docker; then
        docker_version="$(docker -v | cut -d ' ' -f3 | cut -d ',' -f1)"
        MAJOR_W=1
        MINOR_W=10
        semverParse "$docker_version"
        shouldWarn=0
        if [ "$major" -lt "$MAJOR_W" ]; then
          shouldWarn=1
        fi
        if [ "$major" -le "$MAJOR_W" ] && [ "$minor" -lt "$MINOR_W" ]; then
          shouldWarn=1
        fi
        cat >&2 <<-'EOF'
    Warning: the "docker" command appears to already exist on this system.
    If you already have Docker installed, this script can cause trouble, which is
    why we're displaying this warning and provide the opportunity to cancel the
    installation.
    If you installed the current Docker package using this script and are using it
    EOF
        if [ $shouldWarn -eq 1 ]; then
          cat >&2 <<-'EOF'
    again to update Docker, we urge you to migrate your image store before upgrading
    to v1.10+.
    You can find instructions for this here:
    https://github.com/docker/docker/wiki/Engine-v1.10.0-content-addressability-migration
    EOF
        else
          cat >&2 <<-'EOF'
    again to update Docker, you can safely ignore this message.
    EOF
        fi
        cat >&2 <<-'EOF'
    You may press Ctrl+C now to abort this script.
    EOF
        ( set -x; sleep 20 )
      fi

      user="$(id -un 2>/dev/null || true)"

      sh_c='sh -c'
      if [ "$user" != 'root' ]; then
        if command_exists sudo; then
          sh_c='sudo -E sh -c'
        elif command_exists su; then
          sh_c='su -c'
        else
          cat >&2 <<-'EOF'
    Error: this installer needs the ability to run commands as root.
    We are unable to find either "sudo" or "su" available to make this happen.
    EOF
          exit 1
        fi
      fi

      if is_dry_run; then
        sh_c="echo"
      fi

      # perform some very rudimentary platform detection
      lsb_dist=$( get_distribution )
      lsb_dist="$(echo "$lsb_dist" | tr '[:upper:]' '[:lower:]')"

      if is_wsl; then
        echo
        echo "WSL DETECTED: We recommend using Docker Desktop for Windows."
        echo "Please get Docker Desktop from https://www.docker.com/products/docker-desktop"
        echo
        cat >&2 <<-'EOF'
    You may press Ctrl+C now to abort this script.
    EOF
        ( set -x; sleep 20 )
      fi

      case "$lsb_dist" in
        ubuntu)
          if command_exists lsb_release; then
            dist_version="$(lsb_release --codename | cut -f2)"
          fi
          if [ -z "$dist_version" ] && [ -r /etc/lsb-release ]; then
            dist_version="$(. /etc/lsb-release && echo "$DISTRIB_CODENAME")"
          fi
          ;;
        debian|raspbian)
          dist_version="$(sed 's/\/.*//' /etc/debian_version | sed 's/\..*//')"
          case "$dist_version" in
            10)
              dist_version="buster"
              ;;
            9)
              dist_version="stretch"
              ;;
            8)
              dist_version="jessie"
              ;;
          esac
          ;;
        centos|rhel)
          if [ -z "$dist_version" ] && [ -r /etc/os-release ]; then
            dist_version="$(. /etc/os-release && echo "$VERSION_ID")"
          fi
          ;;
        *)
          if command_exists lsb_release; then
            dist_version="$(lsb_release --release | cut -f2)"
          fi
          if [ -z "$dist_version" ] && [ -r /etc/os-release ]; then
            dist_version="$(. /etc/os-release && echo "$VERSION_ID")"
          fi
          ;;
      esac

      # Check if this is a forked Linux distro
      check_forked

      # Run setup for each distro accordingly
      case "$lsb_dist" in
        ubuntu|debian|raspbian)
          pre_reqs="apt-transport-https ca-certificates curl"
          if [ "$lsb_dist" = "debian" ]; then
            # libseccomp2 does not exist for debian jessie main repos for aarch64
            if [ "$(uname -m)" = "aarch64" ] && [ "$dist_version" = "jessie" ]; then
              add_debian_backport_repo "$dist_version"
            fi
          fi
          if ! command -v gpg > /dev/null; then
            pre_reqs="$pre_reqs gnupg"
          fi
          apt_repo="deb [arch=$(dpkg --print-architecture)] $DOWNLOAD_URL/linux/$lsb_dist $dist_version $CHANNEL"
          (
            if ! is_dry_run; then
              set -x
            fi
            $sh_c 'apt-get update -qq >/dev/null'
            $sh_c "DEBIAN_FRONTEND=noninteractive apt-get install -y -qq $pre_reqs >/dev/null"
            $sh_c "curl -fsSL \"$DOWNLOAD_URL/linux/$lsb_dist/gpg\" | apt-key add -qq - >/dev/null"
            $sh_c "echo \"$apt_repo\" > /etc/apt/sources.list.d/docker.list"
            $sh_c 'apt-get update -qq >/dev/null'
          )
          pkg_version=""
          if [ -n "$VERSION" ]; then
            if is_dry_run; then
              echo "# WARNING: VERSION pinning is not supported in DRY_RUN"
            else
              # Will work for incomplete versions IE (17.12), but may not actually grab the "latest" if in the test channel
              pkg_pattern="$(echo "$VERSION" | sed "s/-ce-/~ce~.*/g" | sed "s/-/.*/g").*-0~$lsb_dist"
              search_command="apt-cache madison 'docker-ce' | grep '$pkg_pattern' | head -1 | awk '{\$1=\$1};1' | cut -d' ' -f 3"
              pkg_version="$($sh_c "$search_command")"
              echo "INFO: Searching repository for VERSION '$VERSION'"
              echo "INFO: $search_command"
              if [ -z "$pkg_version" ]; then
                echo
                echo "ERROR: '$VERSION' not found amongst apt-cache madison results"
                echo
                exit 1
              fi
              search_command="apt-cache madison 'docker-ce-cli' | grep '$pkg_pattern' | head -1 | awk '{\$1=\$1};1' | cut -d' ' -f 3"
              # Don't insert an = for cli_pkg_version, we'll just include it later
              cli_pkg_version="$($sh_c "$search_command")"
              pkg_version="=$pkg_version"
            fi
          fi
          (
            if ! is_dry_run; then
              set -x
            fi
            if [ -n "$cli_pkg_version" ]; then
              $sh_c "apt-get install -y -qq --no-install-recommends docker-ce-cli=$cli_pkg_version >/dev/null"
            fi
            $sh_c "apt-get install -y -qq --no-install-recommends docker-ce$pkg_version >/dev/null"
          )
          echo_docker_as_nonroot
          exit 0
          ;;
        centos|fedora|rhel)
          yum_repo="$DOWNLOAD_URL/linux/$lsb_dist/$REPO_FILE"
          if ! curl -Ifs "$yum_repo" > /dev/null; then
            echo "Error: Unable to curl repository file $yum_repo, is it valid?"
            exit 1
          fi
          if [ "$lsb_dist" = "fedora" ]; then
            pkg_manager="dnf"
            config_manager="dnf config-manager"
            enable_channel_flag="--set-enabled"
            disable_channel_flag="--set-disabled"
            pre_reqs="dnf-plugins-core"
            pkg_suffix="fc$dist_version"
          else
            pkg_manager="yum"
            config_manager="yum-config-manager"
            enable_channel_flag="--enable"
            disable_channel_flag="--disable"
            pre_reqs="yum-utils"
            pkg_suffix="el"
          fi
          (
            if ! is_dry_run; then
              set -x
            fi
            $sh_c "$pkg_manager install -y -q $pre_reqs"
            $sh_c "$config_manager --add-repo $yum_repo"
            if [ "$CHANNEL" != "stable" ]; then
              $sh_c "$config_manager $disable_channel_flag docker-ce-*"
              $sh_c "$config_manager $enable_channel_flag docker-ce-$CHANNEL"
            fi
            $sh_c "$pkg_manager makecache"
          )
          pkg_version=""
          if [ -n "$VERSION" ]; then
            if is_dry_run; then
              echo "# WARNING: VERSION pinning is not supported in DRY_RUN"
            else
              pkg_pattern="$(echo "$VERSION" | sed "s/-ce-/\\\\.ce.*/g" | sed "s/-/.*/g").*$pkg_suffix"
              search_command="$pkg_manager list --showduplicates 'docker-ce' | grep '$pkg_pattern' | tail -1 | awk '{print \$2}'"
              pkg_version="$($sh_c "$search_command")"
              echo "INFO: Searching repository for VERSION '$VERSION'"
              echo "INFO: $search_command"
              if [ -z "$pkg_version" ]; then
                echo
                echo "ERROR: '$VERSION' not found amongst $pkg_manager list results"
                echo
                exit 1
              fi
              search_command="$pkg_manager list --showduplicates 'docker-ce-cli' | grep '$pkg_pattern' | tail -1 | awk '{print \$2}'"
              # It's okay for cli_pkg_version to be blank, since older versions don't support a cli package
              cli_pkg_version="$($sh_c "$search_command" | cut -d':' -f 2)"
              # Cut out the epoch and prefix with a '-'
              pkg_version="-$(echo "$pkg_version" | cut -d':' -f 2)"
            fi
          fi
          (
            if ! is_dry_run; then
              set -x
            fi
            # install the correct cli version first
            if [ -n "$cli_pkg_version" ]; then
              $sh_c "$pkg_manager install -y -q docker-ce-cli-$cli_pkg_version"
            fi
            $sh_c "$pkg_manager install -y -q docker-ce$pkg_version"
          )
          echo_docker_as_nonroot
          exit 0
          ;;
        *)
          if [ -z "$lsb_dist" ]; then
            if is_darwin; then
              echo
              echo "ERROR: Unsupported operating system 'macOS'"
              echo "Please get Docker Desktop from https://www.docker.com/products/docker-desktop"
              echo
              exit 1
            fi
          fi
          echo
          echo "ERROR: Unsupported distribution '$lsb_dist'"
          echo
          exit 1
          ;;
      esac
      exit 1
    }

    # wrapped up in a function so that we have some protection against only getting
    # half the file during "curl | sh"
    do_install

    [root@vms200 jenkins]# chmod +x /data/dockerfile/jenkins/get-docker.sh

The script installs docker-ce from the docker-ce package repository. The jenkins container only needs the docker client that comes with it.

Check the files

    [root@vms200 jenkins]# pwd
    /data/dockerfile/jenkins
    [root@vms200 jenkins]# ll
    total 28
    -rw------- 1 root root 152 Aug 6 16:28 config.json
    -rw-r--r-- 1 root root 727 Aug 6 17:00 Dockerfile
    -rwxr-xr-x 1 root root 13857 Aug 6 16:10 get-docker.sh
    -rw------- 1 root root 1823 Aug 6 16:00 id_rsa
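The same check can be scripted so that a missing file is caught before `docker build` fails halfway. This is a minimal sketch; `check_build_context` is a hypothetical helper name, and the file list is simply the four files this Dockerfile relies on.

```shell
#!/bin/sh
# Verify that a build context directory contains every file the Dockerfile
# uses, and that get-docker.sh is executable. Returns non-zero on any problem.
check_build_context() {
    cbc_dir=$1
    cbc_bad=0
    for cbc_file in Dockerfile id_rsa config.json get-docker.sh; do
        if [ ! -f "$cbc_dir/$cbc_file" ]; then
            echo "missing: $cbc_dir/$cbc_file" >&2
            cbc_bad=1
        fi
    done
    if [ -f "$cbc_dir/get-docker.sh" ] && [ ! -x "$cbc_dir/get-docker.sh" ]; then
        echo "not executable: $cbc_dir/get-docker.sh" >&2
        cbc_bad=1
    fi
    return "$cbc_bad"
}
```

For this lab it would be called as `check_build_context /data/dockerfile/jenkins` right before building the image.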

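Create the private repository infra in harbor

Create a private repository named infra in harbor. The name is short for infrastructure.

Build the custom image

On the ops host vms200.cos.com (in the directory /data/dockerfile/jenkins)

Build the custom jenkins image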

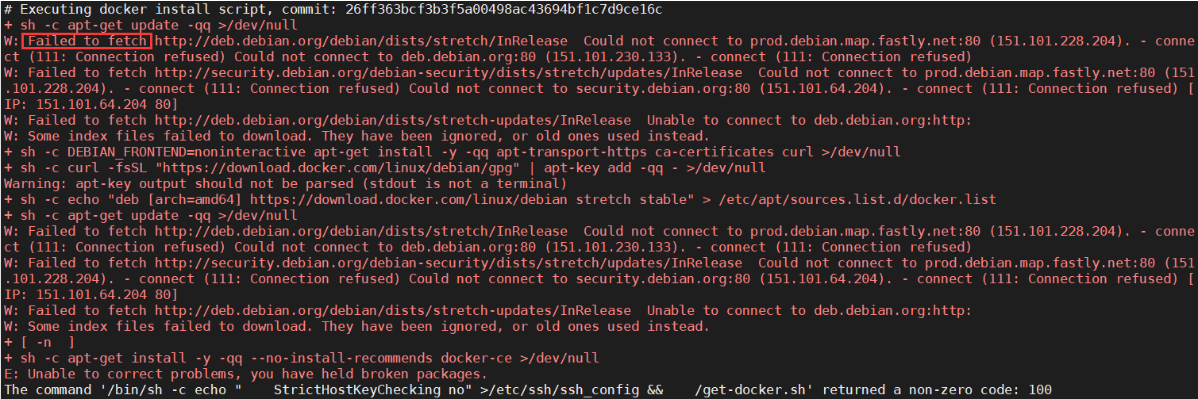

    [root@vms200 jenkins]# docker build . -t harbor.op.com/infra/jenkins:v2.235.3
    Sending build context to Docker daemon 20.99kB
    Step 1/7 : FROM harbor.op.com/public/jenkins:2.235.3
    ---> 135a0d19f757
    Step 2/7 : USER root
    ---> Using cache
    ---> b1eff4e3e0da
    Step 3/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
    ---> Using cache
    ---> eab764c9255c
    Step 4/7 : ADD id_rsa /root/.ssh/id_rsa
    ---> Using cache
    ---> a0d3f87bdb18
    Step 5/7 : ADD config.json /root/.docker/config.json
    ---> Using cache
    ---> 95df846a34b5
    Step 6/7 : ADD get-docker.sh /get-docker.sh
    ---> Using cache
    ---> 22996ce3b3ae
    Step 7/7 : RUN echo " StrictHostKeyChecking no" >/etc/ssh/ssh_config && /get-docker.sh --mirror Aliyun
    ---> Running in ba9b845ef6ff
    # Executing docker install script, commit: 26ff363bcf3b3f5a00498ac43694bf1c7d9ce16c
    + sh -c apt-get update -qq >/dev/null
    W: Failed to fetch http://deb.debian.org/debian/dists/stretch/InRelease Could not connect to prod.debian.map.fastly.net:80 (151.101.228.204). - connect (111: Connection refused) Could not connect to deb.debian.org:80 (151.101.230.133). - connect (111: Connection refused)
    W: Failed to fetch http://deb.debian.org/debian/dists/stretch-updates/InRelease Unable to connect to deb.debian.org:http:
    W: Some index files failed to download. They have been ignored, or old ones used instead.
    + sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq apt-transport-https ca-certificates curl >/dev/null
    debconf: delaying package configuration, since apt-utils is not installed
    + sh -c curl -fsSL "https://mirrors.aliyun.com/docker-ce/linux/debian/gpg" | apt-key add -qq - >/dev/null
    Warning: apt-key output should not be parsed (stdout is not a terminal)
    + sh -c echo "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/debian stretch stable" > /etc/apt/sources.list.d/docker.list
    + sh -c apt-get update -qq >/dev/null
    + [ -n ]
    + sh -c apt-get install -y -qq --no-install-recommends docker-ce >/dev/null
    debconf: delaying package configuration, since apt-utils is not installed
    If you would like to use Docker as a non-root user, you should now consider
    adding your user to the "docker" group with something like:
    sudo usermod -aG docker your-user
    Remember that you will have to log out and back in for this to take effect!
    WARNING: Adding a user to the "docker" group will grant the ability to run
    containers which can be used to obtain root privileges on the
    docker host.
    Refer to https://docs.docker.com/engine/security/security/#docker-daemon-attack-surface
    for more information.
    Removing intermediate container ba9b845ef6ff
    ---> feccbc6793c9
    Successfully built feccbc6793c9
    Successfully tagged harbor.op.com/infra/jenkins:v2.235.3

If the build fails:

Edit the Dockerfile and run the build again, adding --mirror Aliyun so that the script uses the Aliyun repo

    ...
    RUN echo " StrictHostKeyChecking no" >/etc/ssh/ssh_config &&\
        /get-docker.sh --mirror Aliyun

Push the custom jenkins image to the harbor registry

    [root@vms200 jenkins]# docker images |grep infra
    harbor.op.com/infra/jenkins v2.235.3 feccbc6793c9 9 minutes ago 1.07GB
    [root@vms200 jenkins]# docker push harbor.op.com/infra/jenkins:v2.235.3
    The push refers to repository [harbor.op.com/infra/jenkins]
    85087a5c3775: Pushed
    6e63c5039dbb: Pushed
    04d0d0f4981f: Pushed
    0f14261940a8: Pushed
    2958cac9061e: Pushed
    127451aea177: Mounted from public/jenkins
    f5bcfae65e8c: Mounted from public/jenkins
    687e70c08de0: Mounted from public/jenkins
    97228bebcea6: Mounted from public/jenkins
    5ea28e96a7c4: Mounted from public/jenkins
    09df571b2d1a: Mounted from public/jenkins
    1621b831e01c: Mounted from public/jenkins
    c2210d8051b3: Mounted from public/jenkins
    96706081cc19: Mounted from public/jenkins
    053d23f0bdb8: Mounted from public/jenkins
    a18cfc771ac0: Mounted from public/jenkins
    9cebc9e5d610: Mounted from public/jenkins
    d81d8fa6dfd4: Mounted from public/jenkins
    bd76253da83a: Mounted from public/jenkins
    e43c0c41b833: Mounted from public/jenkins
    01727b1a72df: Mounted from public/jenkins
    69dfa7bd7a92: Mounted from public/jenkins
    4d1ab3827f6b: Mounted from public/jenkins
    7948c3e5790c: Mounted from public/jenkins
    v2.235.3: digest: sha256:25227f09225d5bebcead8abd0207aeaf0d2dc01973c6c24c08a44710aa089dc6 size: 5342

Log in to harbor to confirm that the image is there.

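Prepare the runtime environment

Create the NFS shared storage

The NFS shared storage lives on vms200.cos.com and holds the persistent Jenkins files.

Install nfs-utils on all compute nodes (vms21, vms22) and on vms200:

    [root@vms200 ~]# yum install nfs-utils -y
    [root@vms21 ~]# yum install nfs-utils -y
    [root@vms22 ~]# yum install nfs-utils -y

Configure NFS on the ops host vms200.cos.com: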

Create the directory and edit /etc/exports:

    [root@vms200 ~]# mkdir -p /data/nfs-volume
    [root@vms200 ~]# vi /etc/exports

    /data/nfs-volume 192.168.26.0/24(rw,no_root_squash)
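When this step is scripted rather than done in vi, the export line should be added only once, however often the script runs. The sketch below shows one way to do that; `ensure_line` is a hypothetical helper, not part of nfs-utils.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
ensure_line() {
    el_file=$1
    el_line=$2
    touch "$el_file"
    # -x matches whole lines only, -F treats the text literally (no regex).
    if ! grep -qxF -- "$el_line" "$el_file"; then
        printf '%s\n' "$el_line" >> "$el_file"
    fi
}
```

For this lab the call would be `ensure_line /etc/exports '/data/nfs-volume 192.168.26.0/24(rw,no_root_squash)'`. If the NFS server is already running, `exportfs -r` re-reads /etc/exports without a restart.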

Start the NFS service, then check the shared directory from each node and test it:

    [root@vms200 ~]# systemctl start nfs-server; systemctl enable nfs-server
    [root@vms200 ~]# showmount -e
    [root@vms21 ~]# systemctl start nfs-server; systemctl enable nfs-server
    [root@vms21 ~]# showmount -e vms200
    [root@vms22 ~]# systemctl start nfs-server; systemctl enable nfs-server
    [root@vms22 ~]# showmount -e vms200
    # Mount the share once on each node as a test, to make sure it works
    [root@vms22 ~]# mount 192.168.26.200:/data/nfs-volume /mnt
    [root@vms22 ~]# df | grep '192\.'   # check the mount; no node should keep it mounted afterwards, so umount it if it is still there
    192.168.26.200:/data/nfs-volume 99565568 9588736 89976832 10% /mnt
    [root@vms22 ~]# umount /mnt

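Create the namespace and the secret

Run this once on any k8s master node (vms21 or vms22).

Create the namespace

    [root@vms21 ~]# kubectl create ns infra
    namespace/infra created

The dedicated namespace infra keeps jenkins and other ops-related software together in one namespace, which makes them easier to manage and separates them from other resources.

Create the secret for accessing harbor, used to pull images from the private registry

A Secret stores sensitive information such as passwords, OAuth tokens and ssh keys. Three commonly used types are:

- Opaque: a Secret whose values are base64-encoded, used for passwords, keys and the like. base64 is an encoding that anyone can reverse, not encryption, so it offers very little protection.
- kubernetes.io/dockerconfigjson: stores the credentials for a private docker registry.
- kubernetes.io/service-account-token: referenced by a serviceaccount. When a serviceaccount is created, Kubernetes creates the matching secret by default. This type was already used in the earlier dashboard section.

Pulling from a private docker registry requires a dedicated secret; without it the image pull fails. Create it as follows:

    kubectl create secret docker-registry harbor \
      --docker-server=harbor.op.com \
      --docker-username=admin \
      --docker-password=Harbor12543 \
      -n infra

This creates a secret of type docker-registry named harbor, and specifies the registry address, the user, the password and the namespace.

    [root@vms21 ~]# kubectl -n infra get secrets | grep harbor
    harbor kubernetes.io/dockerconfigjson 1 2m44s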
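To make the "base64 is not encryption" point concrete: the docker credentials end up as an `auth` value that is just base64 of `user:password`, the same format docker uses in config.json. The sketch below uses made-up credentials and hypothetical helper names (`docker_auth`, `decode_auth`), and assumes a `base64` command that accepts `-d` for decoding, as GNU coreutils does.

```shell
#!/bin/sh
# Build the "auth" value stored for a registry: base64 of "user:password".
docker_auth() {
    printf '%s:%s' "$1" "$2" | base64
}

# Decoding needs no key at all, which is why base64 alone protects nothing.
decode_auth() {
    printf '%s' "$1" | base64 -d
}

# Round trip with example credentials (not the ones from this lab).
encoded=$(docker_auth admin secret)
echo "encoded: $encoded"
echo "decoded: $(decode_auth "$encoded")"
```

Anyone who can read the secret can therefore recover the password, so access to secrets in the infra namespace should be limited accordingly.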

Create the jenkins resource manifests

On the ops host vms200.cos.com

    [root@vms200 ~]# mkdir /data/k8s-yaml/jenkins
    [root@vms200 ~]# cd /data/k8s-yaml/jenkins

    [root@vms200 jenkins]# vi deployment.yaml   # deployment

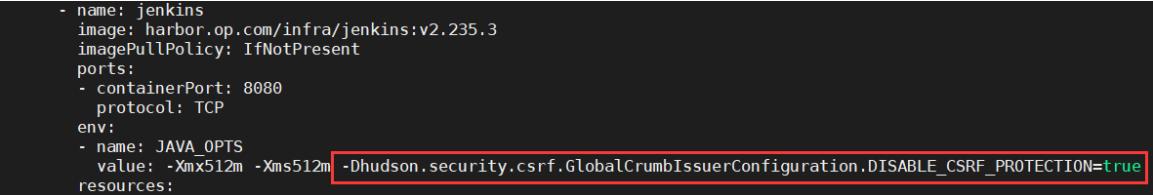

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: jenkins
      namespace: infra
      labels:
        name: jenkins
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: jenkins
      template:
        metadata:
          labels:
            app: jenkins
            name: jenkins
        spec:
          volumes:
          - name: data
            nfs:
              server: vms200
              path: /data/nfs-volume/jenkins_home
          - name: docker
            hostPath:
              path: /run/docker.sock
              type: ''
          containers:
          - name: jenkins
            image: harbor.op.com/infra/jenkins:v2.235.3
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 8080
              protocol: TCP
            env:
            - name: JAVA_OPTS
              value: -Xmx512m -Xms512m
            resources:
              limits:
                cpu: 500m
                memory: 1Gi
              requests:
                cpu: 500m
                memory: 1Gi
            volumeMounts:
            - name: data
              mountPath: /var/jenkins_home
            - name: docker
              mountPath: /run/docker.sock
          imagePullSecrets:
          - name: harbor
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

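Create the directory to be mounted (it lives on the NFS shared storage):

    [root@vms200 jenkins]# mkdir /data/nfs-volume/jenkins_home

Newer Jenkins versions no longer offer an option on the management page to turn off CSRF protection. To turn it off, pass the corresponding parameter when Jenkins starts. Update the matching part of deployment.yaml as follows:

    ...
            env:
            - name: JAVA_OPTS
              value: -Xmx512m -Xms512m -Dhudson.security.csrf.GlobalCrumbIssuerConfiguration.DISABLE_CSRF_PROTECTION=true
    ...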

    [root@vms200 jenkins]# vi svc.yaml   # service

    kind: Service
    apiVersion: v1
    metadata:
      name: jenkins
      namespace: infra
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      selector:
        app: jenkins

    [root@vms200 jenkins]# vi ingress.yaml   # ingress

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: jenkins
      namespace: infra
    spec:
      rules:
      - host: jenkins.op.com
        http:
          paths:
          - path: /
            backend:
              serviceName: jenkins
              servicePort: 80

Note: servicePort in the ingress must be the same as port in the service.
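The port rule can be checked mechanically before the manifests are applied. The sketch below is deliberately naive: it reads the first matching `key: value` line from each file instead of parsing YAML properly, which is enough for the two small manifests in this section. `yaml_value` and `ports_match` are hypothetical helper names.

```shell
#!/bin/sh
# Print the value of the first "key: value" line in a file.
# Leading indentation and list dashes are ignored; this is not a YAML parser.
yaml_value() {
    awk -v key="$1" '{
        line = $0
        sub(/^[ \t-]*/, "", line)
        if (index(line, key ":") == 1) {
            sub(/^[^:]*:[ \t]*/, "", line)
            print line
            exit
        }
    }' "$2"
}

# Succeed if "port" in the service file equals "servicePort" in the ingress file.
ports_match() {
    [ "$(yaml_value port "$1")" = "$(yaml_value servicePort "$2")" ]
}
```

In /data/k8s-yaml/jenkins this would be run as `ports_match svc.yaml ingress.yaml && echo "ports agree"`.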

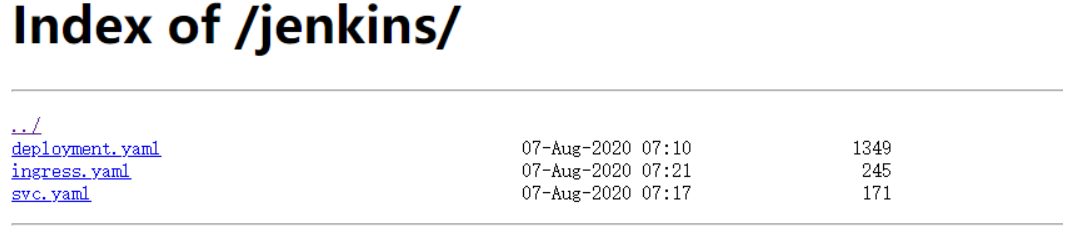

To view the yaml files, open http://k8s-yaml.op.com/jenkins/ in a browser (the file links can be copied from there)

Apply the resource manifests

Run this once on any k8s master node (vms21 or vms22).

    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/jenkins/deployment.yaml
    deployment.apps/jenkins created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/jenkins/svc.yaml
    service/jenkins created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/jenkins/ingress.yaml
    ingress.extensions/jenkins created

Check the resources created in infra (it takes a while for the pod to reach the Running state):

    [root@vms21 ~]# kubectl get all -n infra
    NAME READY STATUS RESTARTS AGE
    pod/jenkins-f4ff87ff7-jhjft 1/1 Running 0 8m18s
    NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
    service/jenkins ClusterIP 10.168.51.35 <none> 80/TCP 5m47s
    NAME READY UP-TO-DATE AVAILABLE AGE
    deployment.apps/jenkins 1/1 1 1 8m18s
    NAME DESIRED CURRENT READY AGE
    replicaset.apps/jenkins-f4ff87ff7 1 1 1 8m18s

The pod startup log can be viewed in the dashboard.

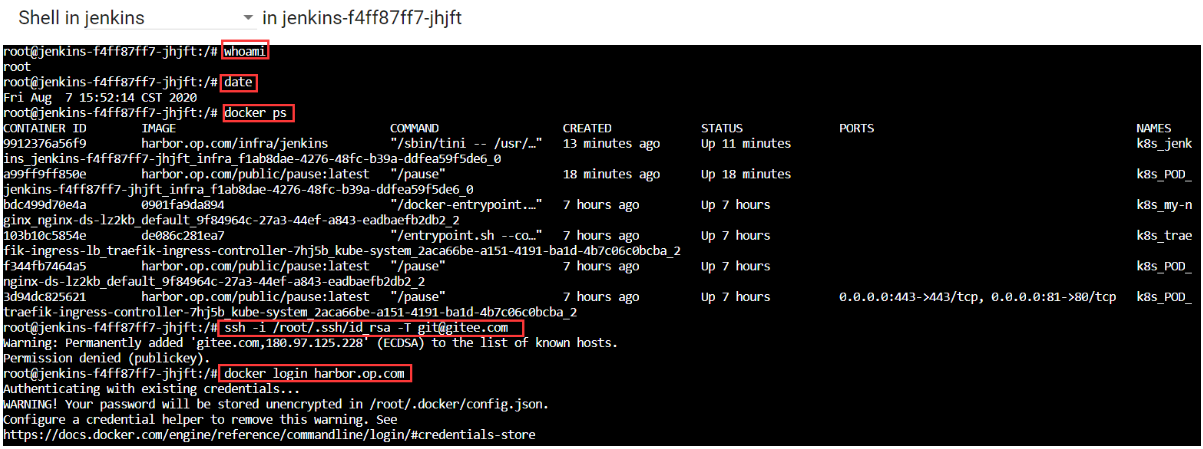

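Verify the state of the jenkins container

Enter the container and check the following: whether it runs as root, the time zone, whether it can reach the host's docker server, and whether it can log in to harbor.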

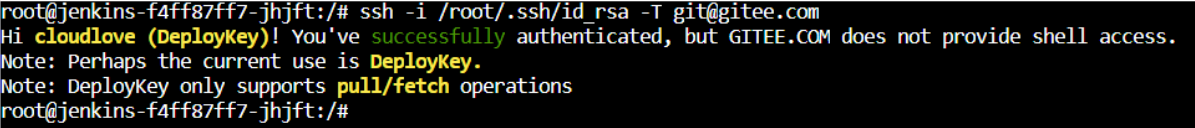

    # Check the user
    whoami
    # Check the time zone
    date
    # Check that the host's docker engine is usable
    docker ps
    # Check passwordless access to gitee, i.e. that the git repository is reachable over SSH
    ssh -i /root/.ssh/id_rsa -T git@gitee.com
    # Check that the harbor registry is reachable
    docker login harbor.op.com

Verification

    [root@vms21 ~]# kubectl get po -n infra
    NAME READY STATUS RESTARTS AGE
    jenkins-f4ff87ff7-jhjft 1/1 Running 0 35m
    [root@vms21 ~]# kubectl exec jenkins-f4ff87ff7-jhjft -n infra -it -- /bin/bash
    root@jenkins-f4ff87ff7-jhjft:/# whoami
    root
    root@jenkins-f4ff87ff7-jhjft:/# date
    Fri Aug 7 16:22:30 CST 2020
    root@jenkins-f4ff87ff7-jhjft:/# docker ps
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    9912376a56f9 harbor.op.com/infra/jenkins "/sbin/tini -- /usr/…" 43 minutes ago Up 41 minutes k8s_jenkins_jenkins-f4ff87ff7-jhjft_infra_f1ab8dae-4276-48fc-b39a-ddfea59f5de6_0
    a99ff9ff850e harbor.op.com/public/pause:latest "/pause" 48 minutes ago Up 48 minutes k8s_POD_jenkins-f4ff87ff7-jhjft_infra_f1ab8dae-4276-48fc-b39a-ddfea59f5de6_0
    bdc499d70e4a 0901fa9da894 "/docker-entrypoint.…" 7 hours ago Up 7 hours k8s_my-nginx_nginx-ds-lz2kb_default_9f84964c-27a3-44ef-a843-eadbaefb2db2_2
    103b10c5854e de086c281ea7 "/entrypoint.sh --co…" 7 hours ago Up 7 hours k8s_traefik-ingress-lb_traefik-ingress-controller-7hj5b_kube-system_2aca66be-a151-4191-ba1d-4b7c06c0bcba_2
    f344fb7464a5 harbor.op.com/public/pause:latest "/pause" 7 hours ago Up 7 hours k8s_POD_nginx-ds-lz2kb_default_9f84964c-27a3-44ef-a843-eadbaefb2db2_2
    3d94dc825621 harbor.op.com/public/pause:latest "/pause" 7 hours ago Up 7 hours 0.0.0.0:443->443/tcp, 0.0.0.0:81->80/tcp k8s_POD_traefik-ingress-controller-7hj5b_kube-system_2aca66be-a151-4191-ba1d-4b7c06c0bcba_2
    root@jenkins-f4ff87ff7-jhjft:/# ssh -i /root/.ssh/id_rsa -T git@gitee.com
    Hi cloudlove (DeployKey)! You've successfully authenticated, but GITEE.COM does not provide shell access.
    Note: Perhaps the current use is DeployKey.
    Note: DeployKey only supports pull/fetch operations
    root@jenkins-f4ff87ff7-jhjft:/# docker login harbor.op.com
    Authenticating with existing credentials...
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded

The same checks can also be run from a shell opened on the pod in the dashboard.

For passwordless access to gitee, first log in to https://gitee.com/ and upload the public key /root/.ssh/id_rsa.pub (copy and paste), then verify again.

Check the persisted data and the password

On the ops host vms200.cos.com

Check that the persistent data landed on the shared storage

    [root@vms200 ~]# ll /data/nfs-volume/jenkins_home
    total 36
    -rw-r--r-- 1 root root 1643 Aug 7 15:52 config.xml
    -rw-r--r-- 1 root root 50 Aug 7 15:41 copy_reference_file.log
    -rw-r--r-- 1 root root 156 Aug 7 15:46 hudson.model.UpdateCenter.xml
    -rw------- 1 root root 1712 Aug 7 15:46 identity.key.enc
    -rw-r--r-- 1 root root 7 Aug 7 15:46 jenkins.install.UpgradeWizard.state
    -rw-r--r-- 1 root root 171 Aug 7 15:46 jenkins.telemetry.Correlator.xml
    drwxr-xr-x 2 root root 6 Aug 7 15:46 jobs
    drwxr-xr-x 3 root root 19 Aug 7 15:46 logs
    -rw-r--r-- 1 root root 907 Aug 7 15:46 nodeMonitors.xml
    drwxr-xr-x 2 root root 6 Aug 7 15:46 nodes
    drwxr-xr-x 2 root root 6 Aug 7 15:46 plugins
    -rw-r--r-- 1 root root 64 Aug 7 15:46 secret.key
    -rw-r--r-- 1 root root 0 Aug 7 15:46 secret.key.not-so-secret
    drwx------ 4 root root 265 Aug 7 15:47 secrets
    drwxr-xr-x 2 root root 67 Aug 7 15:52 updates
    drwxr-xr-x 2 root root 24 Aug 7 15:46 userContent
    drwxr-xr-x 3 root root 56 Aug 7 15:47 users
    drwxr-xr-x 11 root root 4096 Aug 7 15:45 war

Check the initial jenkins password

    [root@vms200 ~]# cat /data/nfs-volume/jenkins_home/secrets/initialAdminPassword
    c38f1d97c8ba4630a205932c53a15f0d

Replace the Jenkins plugin source

On the operations host vms200.cos.com

    cd /data/nfs-volume/jenkins_home/updates
    sed -i 's#http:\/\/updates.jenkins-ci.org\/download#https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins#g' default.json
    sed -i 's#http:\/\/www.google.com#https:\/\/www.baidu.com#g' default.json
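To preview what the two substitutions do before touching default.json, the same rewrite can be run as a filter on a sample line. This is a minimal sketch: the function name `rewrite_update_urls` and the sample plugin path are illustrative assumptions, not part of the original setup.

```shell
#!/bin/sh
# Sketch: apply the same two URL rewrites as a stdin -> stdout filter.
# With '#' as the sed delimiter the slashes need no escaping; dots are
# escaped so they only match literal dots.
rewrite_update_urls() {
    sed -e 's#http://updates\.jenkins-ci\.org/download#https://mirrors.tuna.tsinghua.edu.cn/jenkins#g' \
        -e 's#http://www\.google\.com#https://www.baidu.com#g'
}

# Hypothetical sample entry, only to show the effect of the rewrite
printf '%s\n' '{"url":"http://updates.jenkins-ci.org/download/plugins/git/4.3.0/git.hpi"}' \
    | rewrite_update_urls
```

Once the output looks right, the in-place `sed -i` commands above can be applied to default.json.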

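Resolve the domain jenkins.op.com

After Jenkins is deployed successfully, add a DNS record for its external domain name.

On vms11

    [root@vms11 ~]# vi /var/named/op.com.zone   # append one line at the end of the file; roll the serial forward

    jenkins A 192.168.26.10

Restart and verify:

    [root@vms11 ~]# systemctl restart named
    [root@vms11 ~]# dig -t A jenkins.op.com @192.168.26.11 +short
    192.168.26.10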
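The zone edit (append an A record, roll the serial forward) is repeated for every new domain in this walkthrough, so it can be scripted. The sketch below is an assumption-laden helper, not the author's procedure: it assumes the serial is the first number on a line carrying a `; serial` comment, and the name `add_a_record` is hypothetical.

```shell
#!/bin/sh
# Sketch: bump the SOA serial by one and append an A record to a zone file.
# Assumption: the serial line looks like "    2020080701 ; serial".
add_a_record() {
    # $1: zone file, $2: host label, $3: IPv4 address
    zone_file=$1
    tmp=$(mktemp)
    # Replace the first digit run on the first "; serial" line with serial + 1
    awk '/;[ \t]*serial/ && !done { sub(/[0-9]+/, sprintf("%d", $1 + 1)); done = 1 } { print }' \
        "$zone_file" > "$tmp" && cat "$tmp" > "$zone_file"
    rm -f "$tmp"
    # Append the new record at the end of the file
    printf '%s\tA\t%s\n' "$2" "$3" >> "$zone_file"
}

# Usage (on the DNS host, followed by: systemctl restart named):
#   add_a_record /var/named/op.com.zone jenkins 192.168.26.10
```

Try it on a copy of the zone file first; a zone whose serial line is laid out differently will not be matched by the awk pattern.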

Open http://jenkins.op.com/ in a browser.

Configure Jenkins in the web UI

Create the user name and password

Create the administrator account as

admin:admin123

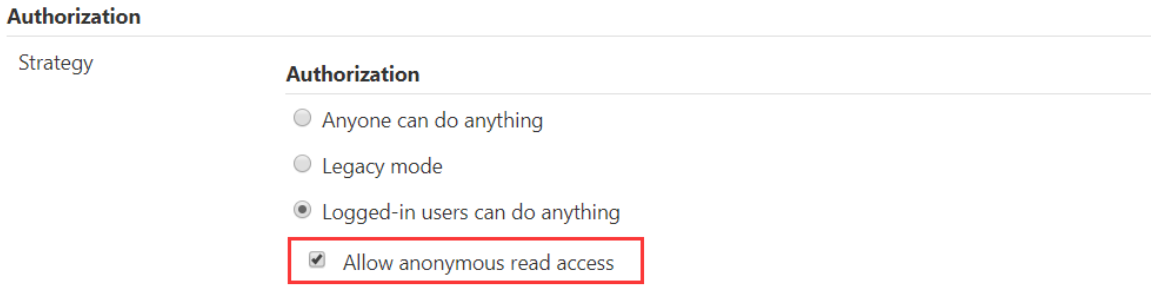

Allow anonymous read

Manage Jenkins > Security > Configure Global Security > Strategy: check Allow anonymous read access

Allow cross-site requests

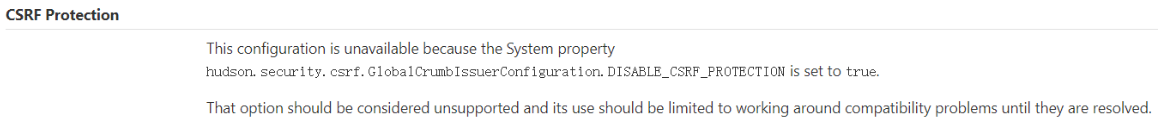

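Manage Jenkins > Security > Configure Global Security > CSRF Protection: newer versions no longer offer the option "prevent cross site request forgery exploits" (which used to be unchecked here). CSRF protection has to be disabled with a startup parameter instead. Because Jenkins runs in k8s, add the parameter to deployment.yaml and re-create the deployment: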

-Dhudson.security.csrf.GlobalCrumbIssuerConfiguration.DISABLE_CSRF_PROTECTION=true

    [root@vms21 ~]# kubectl delete -f http://k8s-yaml.op.com/jenkins/deployment.yaml
    deployment.apps "jenkins" deleted
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/jenkins/deployment.yaml
    deployment.apps/jenkins created
    [root@vms21 ~]# kubectl get po -n infra
    NAME                       READY   STATUS    RESTARTS   AGE
    jenkins-684d5f5d8b-5v7zj   1/1     Running   0          54s

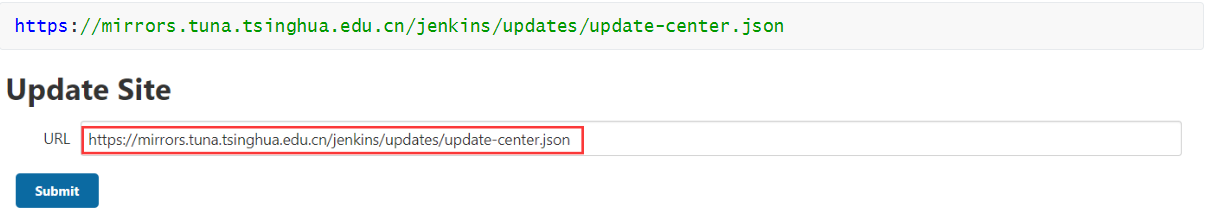

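Configure the plugin mirror

Manage Jenkins > System Configuration > Manage Plugins > Advanced > Update Site

Replace https://updates.jenkins.io/update-center.json with the Tsinghua University open source mirror:

https://mirrors.tuna.tsinghua.edu.cn/jenkins/updates/update-center.json

To rewrite the download URLs as well, see the earlier step that edits default.json on vms200.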

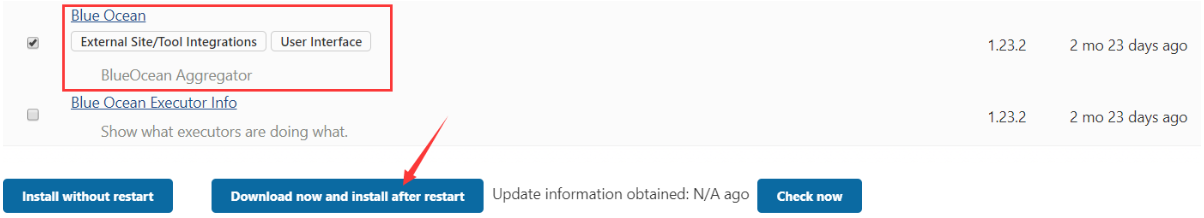

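Search for and install the Blue Ocean plugin

Manage Jenkins > System Configuration > Manage Plugins > Available: type blue ocean in the search box

After Jenkins restarts, the Blue Ocean menu appears.

Opening the Blue Ocean menu shows the page for creating a pipeline: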

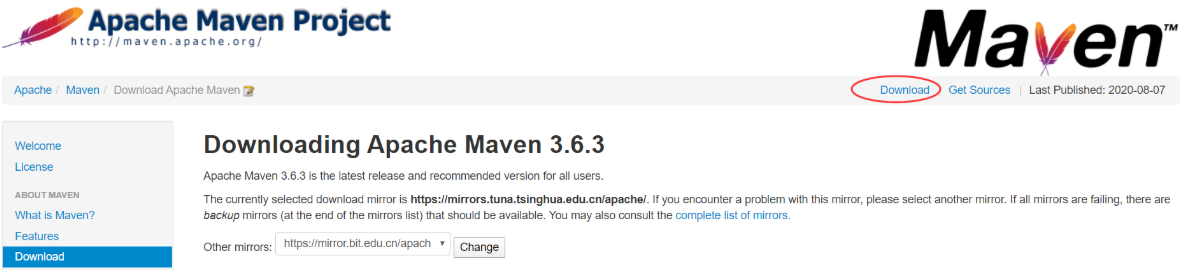

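Set up Maven for Jenkins

Maven is used by Jenkins, so it has to live in the Jenkins persistent directory. Copying the binary distribution of Maven into that directory is the simplest approach, so the installation is done directly on vms200.

Different projects may need different JDK and Maven versions to compile, so several JDK/Maven combinations may have to coexist. The Maven directory is therefore named in the form maven-${maven_version}-${jdk_version}. The JAVA_HOME environment variable can be set in Maven's bin/mvn file, so each Maven copy can use its own JAVA_HOME.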
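The naming convention can be derived mechanically from the version string printed by `java -version`. A minimal sketch, assuming JDK 8-style version strings such as `1.8.0_242`; the helper names `jdk_tag` and `maven_dir_name` are illustrative only.

```shell
#!/bin/sh
# Sketch: build the maven-${maven_version}-${jdk_version} directory name.
jdk_tag() {
    # "1.8.0_242" -> "8u242" (only handles the 1.N.0_U form)
    printf '%s\n' "$1" | sed -E 's/^1\.([0-9]+)\.0_([0-9]+)$/\1u\2/'
}

maven_dir_name() {
    # $1: Maven version, $2: Java version string
    printf 'maven-%s-%s\n' "$1" "$(jdk_tag "$2")"
}

maven_dir_name 3.6.3 1.8.0_242   # prints maven-3.6.3-8u242
```

Keeping the JDK update number in the directory name lets the Jenkins job select a Maven/JDK pair with a single choice parameter.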

The Jenkins data directory is mounted from NFS for persistence, so placing Maven in the NFS directory also deploys it into Jenkins.

Official Maven download page: http://maven.apache.org/docs/history.html

On vms200:

    [root@vms200 ~]# cd /opt/src
    [root@vms200 src]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz
    [root@vms200 src]# ls -l | grep maven
    -rw-r--r-- 1 root root 9506321 Nov 20 2019 apache-maven-3.6.3-bin.tar.gz

Scenario one

When Maven needs the same JDK version as Jenkins, JAVA_HOME does not have to be set in bin/mvn.

- Check the JDK version in Jenkins

    [root@vms21 ~]# kubectl get po -n infra
    NAME                       READY   STATUS    RESTARTS   AGE
    jenkins-684d5f5d8b-t2mvs   1/1     Running   0          16m
    [root@vms21 ~]# kubectl exec jenkins-684d5f5d8b-t2mvs -n infra -- java -version
    openjdk version "1.8.0_242"
    OpenJDK Runtime Environment (build 1.8.0_242-b08)
    OpenJDK 64-Bit Server VM (build 25.242-b08, mixed mode)

- Extract and rename the directory

    [root@vms200 src]# tar -xf apache-maven-3.6.3-bin.tar.gz
    [root@vms200 src]# mv apache-maven-3.6.3 /data/nfs-volume/jenkins_home/maven-3.6.3-8u242

8u242 corresponds to JDK version "1.8.0_242".

- Configure a mirror hosted in China

    [root@vms200 src]# vi /data/nfs-volume/jenkins_home/maven-3.6.3-8u242/conf/settings.xml

In settings.xml, add the mirror inside the <mirrors> element:

    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>*</mirrorOf>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </mirror>

Scenario two

When Maven needs jdk-8u261:

- Extract jdk-8u261-linux-x64.tar.gz into the target directory

    [root@vms200 src]# mkdir /data/nfs-volume/jenkins_home/jdk_versions
    [root@vms200 src]# tar -xf jdk-8u261-linux-x64.tar.gz -C /data/nfs-volume/jenkins_home/jdk_versions/
    [root@vms200 src]# ls -l /data/nfs-volume/jenkins_home/jdk_versions/
    total 0
    drwxr-xr-x 8 10143 10143 273 Jun 18 14:59 jdk1.8.0_261

- Copy maven (if scenario one was skipped, rename the extracted maven directory instead)

    [root@vms200 src]# cp -r /data/nfs-volume/jenkins_home/maven-3.6.3-8u242 /data/nfs-volume/jenkins_home/maven-3.6.3-8u261

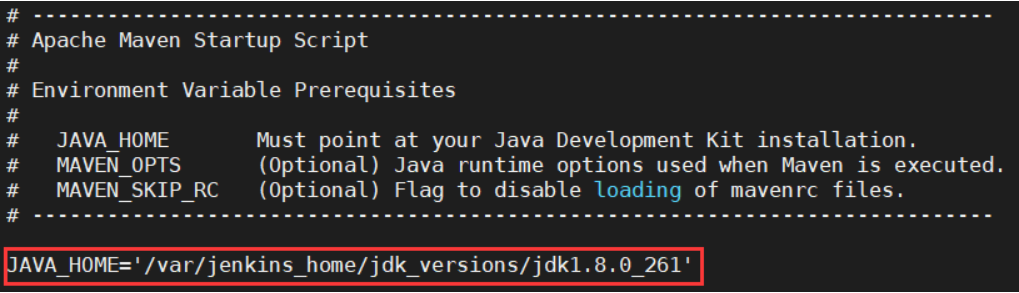

- Pin the JDK used by maven by setting JAVA_HOME in the bin/mvn file

    [root@vms200 src]# file /data/nfs-volume/jenkins_home/maven-3.6.3-8u261/bin/mvn
    /data/nfs-volume/jenkins_home/apache-maven-3.6.3-8u261/bin/mvn: POSIX shell script, ASCII text executable
    [root@vms200 src]# vi /data/nfs-volume/jenkins_home/maven-3.6.3-8u261/bin/mvn

    JAVA_HOME='/var/jenkins_home/jdk_versions/jdk1.8.0_261'

Use the absolute path as seen inside the Jenkins container (the NFS directory is mounted at /var/jenkins_home there), not the path on vms200.
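The manual vi edit can also be scripted. The sketch below inserts the assignment right after the first line (the shebang) of the script; that position is an assumption, since the text does not say where in bin/mvn the line was added, and the helper name `pin_java_home` is hypothetical. It relies on GNU sed's one-line `a` form.

```shell
#!/bin/sh
# Sketch: add a JAVA_HOME assignment after line 1 of a launcher script (GNU sed).
pin_java_home() {
    # $1: path to bin/mvn on the NFS host
    # $2: JAVA_HOME as seen inside the Jenkins container
    sed -i "1a JAVA_HOME='$2'" "$1"
}

# Usage on vms200:
#   pin_java_home /data/nfs-volume/jenkins_home/maven-3.6.3-8u261/bin/mvn \
#       /var/jenkins_home/jdk_versions/jdk1.8.0_261
```

Running it twice adds the line twice, so check the file with `head -n 3` afterwards.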

Build the base image for dubbo microservices

On the operations host vms200

Pull the JRE base image

    [root@vms200 ~]# docker pull docker.io/stanleyws/jre8:8u112
    [root@vms200 ~]# docker image tag stanleyws/jre8:8u112 harbor.op.com/public/jre:8u112
    [root@vms200 ~]# docker image push harbor.op.com/public/jre:8u112

Dockerfile

    [root@vms200 ~]# mkdir /data/dockerfile/jre8
    [root@vms200 ~]# cd /data/dockerfile/jre8
    [root@vms200 jre8]# vi Dockerfile

    FROM harbor.op.com/public/jre:8u112
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
        echo 'Asia/Shanghai' >/etc/timezone
    ADD config.yml /opt/prom/config.yml
    ADD jmx_javaagent-0.3.1.jar /opt/prom/
    ADD entrypoint.sh /entrypoint.sh
    WORKDIR /opt/project_dir
    CMD ["/entrypoint.sh"]

- Monitoring agent and its configuration (JVM monitoring, used later)

    [root@vms200 jre8]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar
    [root@vms200 jre8]# vi config.yml

    ---
    rules:
      - pattern: '.*'

- Default startup script entrypoint.sh

    [root@vms200 jre8]# vim entrypoint.sh

    #!/bin/sh
    M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"
    C_OPTS=${C_OPTS}
    JAR_BALL=${JAR_BALL}
    exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}
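The entrypoint builds the JMX agent option from the pod IP and an optional M_PORT variable that falls back to 12346. The sketch below reproduces only that string assembly so the default can be checked outside a container; `build_m_opts` is a hypothetical helper and the IP address is a made-up example.

```shell
#!/bin/sh
# Sketch: reproduce how entrypoint.sh assembles M_OPTS.
build_m_opts() {
    # $1: pod IP (the image itself uses `hostname -i`)
    # $2: optional metrics port, defaults to 12346 like ${M_PORT:-"12346"}
    printf '%s\n' "-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$1:${2:-12346}:/opt/prom/config.yml"
}

build_m_opts 172.26.21.5          # agent listens on 172.26.21.5:12346
build_m_opts 172.26.21.5 12347    # port overridden, as M_PORT=12347 would do
```

In a Deployment, the port would be overridden by adding an M_PORT entry to the container's env list, next to JAR_BALL.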

[root@vms200 jre8]# chmod +x entrypoint.sh

- In Harbor, create a project named base with the access level set to public.

Build the Docker base image for dubbo services

In the /data/dockerfile/jre8 directory:

    [root@vms200 jre8]# ll
    -rw-r--r-- 1 root root     29 Aug 8 10:07 config.yml
    -rw-r--r-- 1 root root    297 Aug 8 10:04 Dockerfile
    -rwxr-xr-x 1 root root    234 Aug 8 10:16 entrypoint.sh
    -rw-r--r-- 1 root root 367417 May 10 2018 jmx_javaagent-0.3.1.jar

    [root@vms200 jre8]# docker build . -t harbor.op.com/base/jre8:8u112
    Sending build context to Docker daemon 747.5kB
    Step 1/7 : FROM harbor.op.com/public/jre:8u112
     ---> fa3a085d6ef1
    Step 2/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
     ---> Running in c9b0eb0965b1
    Removing intermediate container c9b0eb0965b1
     ---> 3d2513af12bb
    Step 3/7 : ADD config.yml /opt/prom/config.yml
     ---> 6a1e662e81d4
    Step 4/7 : ADD jmx_javaagent-0.3.1.jar /opt/prom/
     ---> d9ff4a1faf7c
    Step 5/7 : ADD entrypoint.sh /entrypoint.sh
     ---> 79dd5fb64044
    Step 6/7 : WORKDIR /opt/project_dir
     ---> Running in b8792a9a1169
    Removing intermediate container b8792a9a1169
     ---> df9997cbd2f3
    Step 7/7 : CMD ["/entrypoint.sh"]
     ---> Running in e666dffb0716
    Removing intermediate container e666dffb0716
     ---> 9fa5bdd784cb
    Successfully built 9fa5bdd784cb
    Successfully tagged harbor.op.com/base/jre8:8u112
    [root@vms200 jre8]# docker push harbor.op.com/base/jre8:8u112

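Configure the Jenkins pipeline

Ten common parameters for a Java project in a Jenkins pipeline

| Parameter | Purpose | Example or note |
|---|---|---|
| app_name | Project name | dubbo_demo_service |
| image_name | Docker image name | app/dubbo-demo-service |
| git_repo | Git URL of the project | https://x.com/x/x.git |
| git_ver | Git branch or version of the project | master |
| add_tag | Image tag suffix, usually a timestamp | 200808_1830 |
| mvn_dir | Directory in which mvn is run | ./ |
| target_dir | Directory where the built package lands | ./target |
| mvn_cmd | Command used to build the maven project | mvn clean package -Dmaven.test.skip=true |
| base_image | Docker base image of the project | Differs per project; chosen from a drop-down |
| maven | Maven version | Projects may need different maven environments |

All parameters are String Parameters except base_image and maven, which are Choice Parameters.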

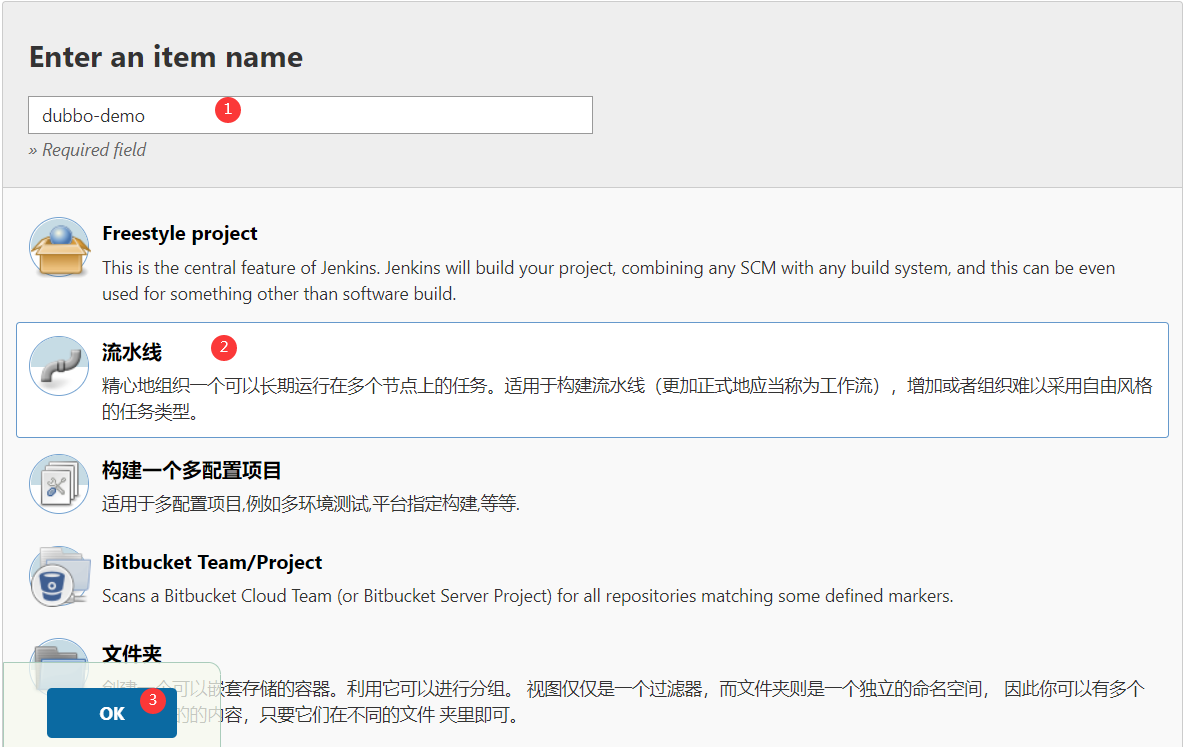

New Item

- On the Jenkins home page, click New Item

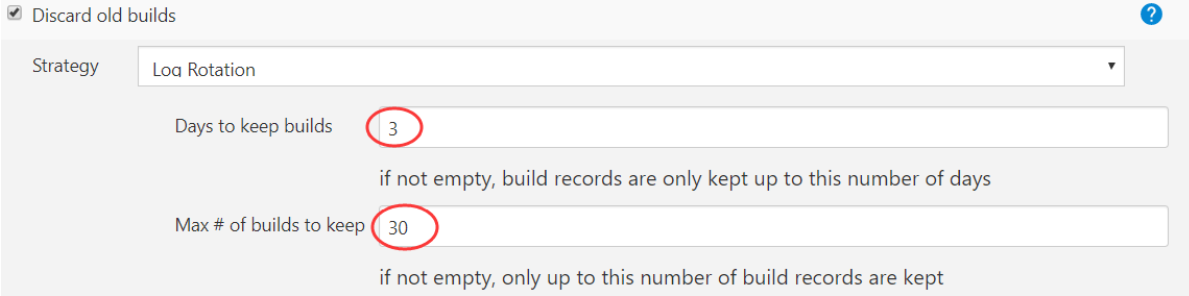

- On the General tab, set Discard old builds (how many builds to keep and for how long)

- On the General tab, check This project is parameterized, click Add Parameter, and add the following 10 parameters:

| No. | Parameter type | Name | Default Value/Choices | Description |
|:---:|:---:|---|---|---|
| 1 | String Parameter | app_name | | Project name, e.g. dubbo-demo-service |
| 2 | String Parameter | image_name | | Project Docker image name, e.g. app/dubbo-demo-service |
| 3 | String Parameter | git_repo | | Project git repository, e.g. https://gitee.com/xxx/xxx.git |
| 4 | String Parameter | git_ver | | Git branch or commit id of the project |
| 5 | String Parameter | add_tag | | Part of the Docker image tag (date-time); it is joined with git_ver to form the tag, e.g. git_ver=master and add_tag=200808_1830 give master_200808_1830 |
| 6 | String Parameter | mvn_dir | ./ | Directory in which mvn is run; defaults to the project root and is usually provided by the developers |
| 7 | String Parameter | target_dir | ./target | Relative path of the directory holding the built .jar or .war package, e.g. ./dubbo-server/target |
| 8 | String Parameter | mvn_cmd | mvn clean package -Dmaven.test.skip=true | Maven command; -e -q is often added to print errors only, e.g. mvn clean package -e -q -Dmaven.test.skip=true |
| 9 | Choice Parameter | base_image | base/jre7:7u80, base/jre8:8u112 (one per line) | Project base image list in harbor.op.com (the JRE base image) |
| 10 | Choice Parameter | maven | 3.6.3-8u261, 3.6.3-8u242, 2.2.1 (one per line) | Maven version used for the build |

When all parameters are added, click Save.

点击Build with Parameters,可以看到:

- 添加

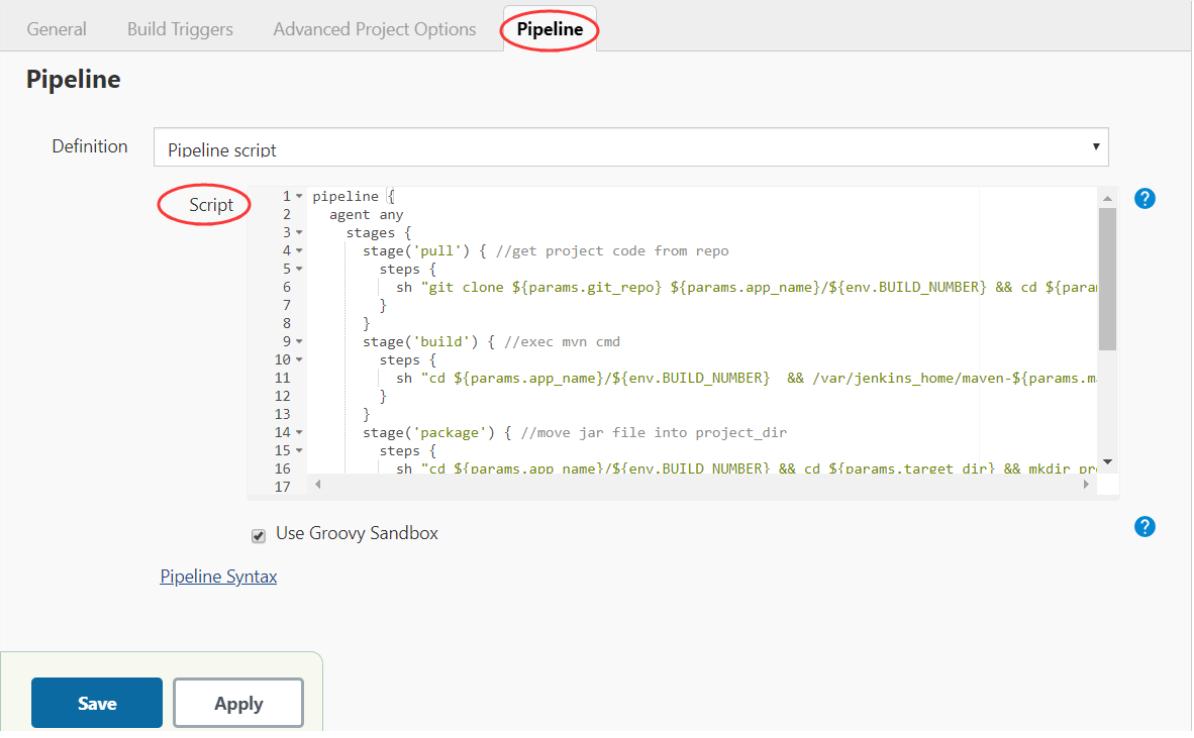

pipiline代码

点击Configure,在Pipeline选项卡,复制粘贴代码Pipeline Script:

流水线构建所用的pipiline代码语法比较有专门的生成工具

以下语句的作用大致是分为四步:拉代码pull > 构建包build >移动包package > 打docker镜像并推送image&push

    pipeline {
      agent any
      stages {
        stage('pull') { //get project code from repo
          steps {
            sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
          }
        }
        stage('build') { //exec mvn cmd
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
          }
        }
        stage('package') { //move jar file into project_dir
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"
          }
        }
        stage('image') { //build image and push to registry
          steps {
            writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.op.com/${params.base_image}
    ADD ${params.target_dir}/project_dir /opt/project_dir"""
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.op.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.op.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
          }
        }
      }
    }
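The image stage names the image harbor.op.com/${image_name}:${git_ver}_${add_tag}. The sketch below reproduces that naming in plain shell so the tag for a planned build can be predicted and copied into deployment.yaml. `image_ref` and `make_add_tag` are hypothetical helper names, and generating add_tag with `date` is an assumption based on the timestamp-style examples in the parameter table.

```shell
#!/bin/sh
# Sketch: compose the image reference the same way the pipeline's image stage does.
image_ref() {
    # $1: image_name, $2: git_ver, $3: add_tag
    printf 'harbor.op.com/%s:%s_%s\n' "$1" "$2" "$3"
}

make_add_tag() {
    # Timestamp in the yymmdd_HHMM style used by the examples, e.g. 200808_1800
    date +%y%m%d_%H%M
}

image_ref app/dubbo-demo-service master 200808_1800
# prints harbor.op.com/app/dubbo-demo-service:master_200808_1800
image_ref app/dubbo-demo-service master "$(make_add_tag)"
```

Because the tag embeds both the git ref and a timestamp, every build yields a distinct, traceable image instead of overwriting a shared tag.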

Build and deliver the dubbo microservices to the kubernetes cluster

dubbo service provider (dubbo-demo-service)

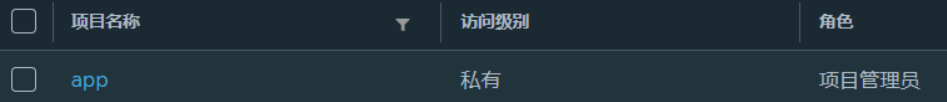

Create the private project app in Harbor

Run one CI build with Jenkins

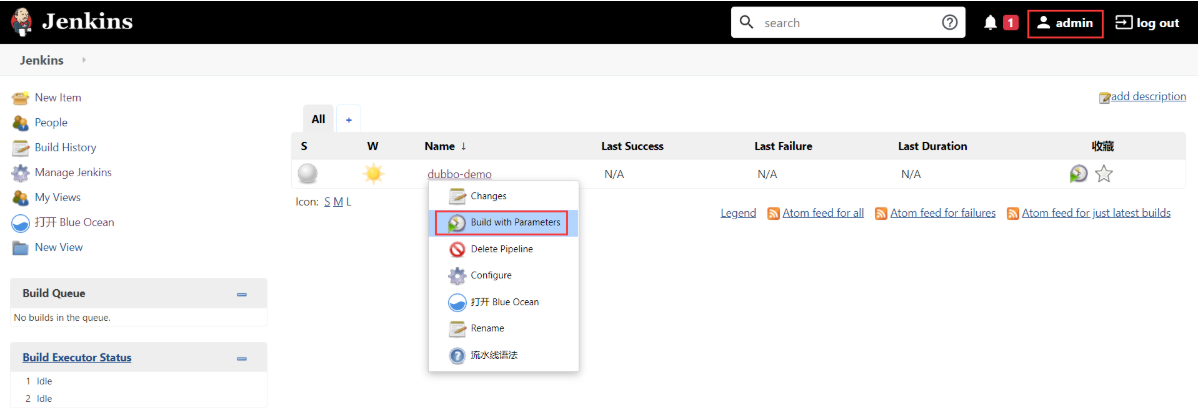

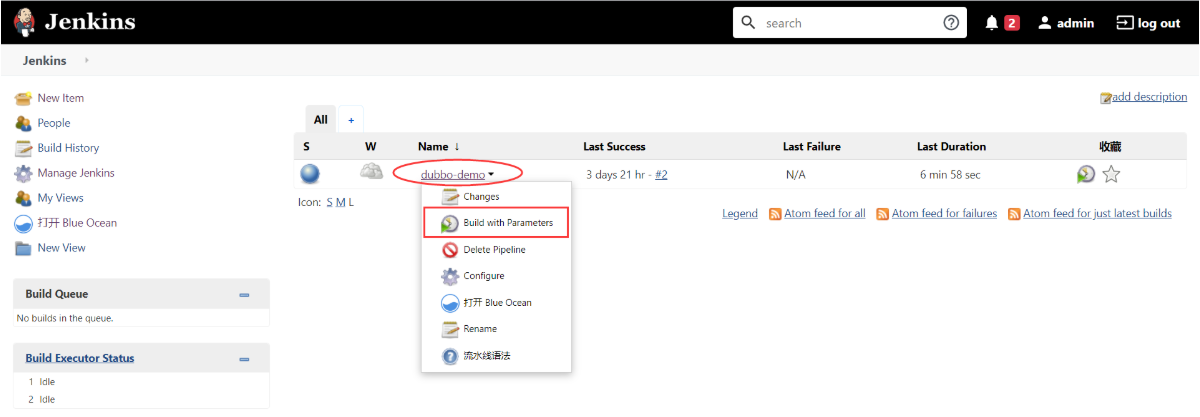

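Open the Jenkins page, log in as admin, and prepare to build the dubbo-demo project.

On the dubbo-demo project, click the drop-down arrow, choose Build with Parameters, and fill in the 10 build parameters:

| Parameter | Value |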

|---|---|

| app_name | dubbo-demo-service |

| image_name | app/dubbo-demo-service |

| git_repo | https://gitee.com/cloudlove2007/dubbo-demo-service.git |

| git_ver | master |

| add_tag | 200808_1800 |

| mvn_dir | ./ |

| target_dir | ./dubbo-server/target |

| mvn_cmd | mvn clean package -Dmaven.test.skip=true |

| base_image | base/jre8:8u112 |

| maven | 3.6.3-8u261 |

After filling in the parameters, click Build.

The first build has to download many dependencies and takes a while.

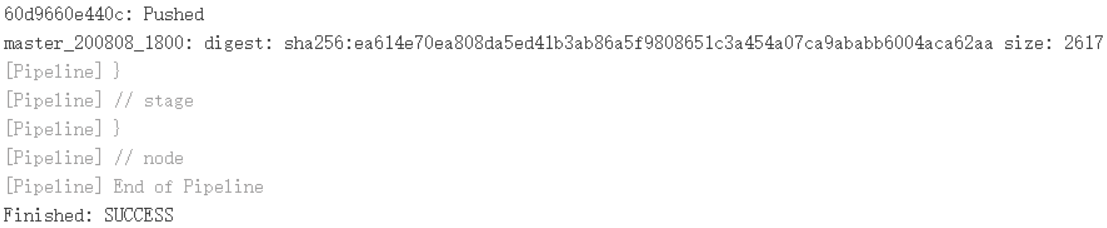

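- Click Console Output to follow the build output. A final line of Finished: SUCCESS means the build succeeded.

- Open Blue Ocean to view the build history and progress (click pull/build/package/image to see the history of each stage):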

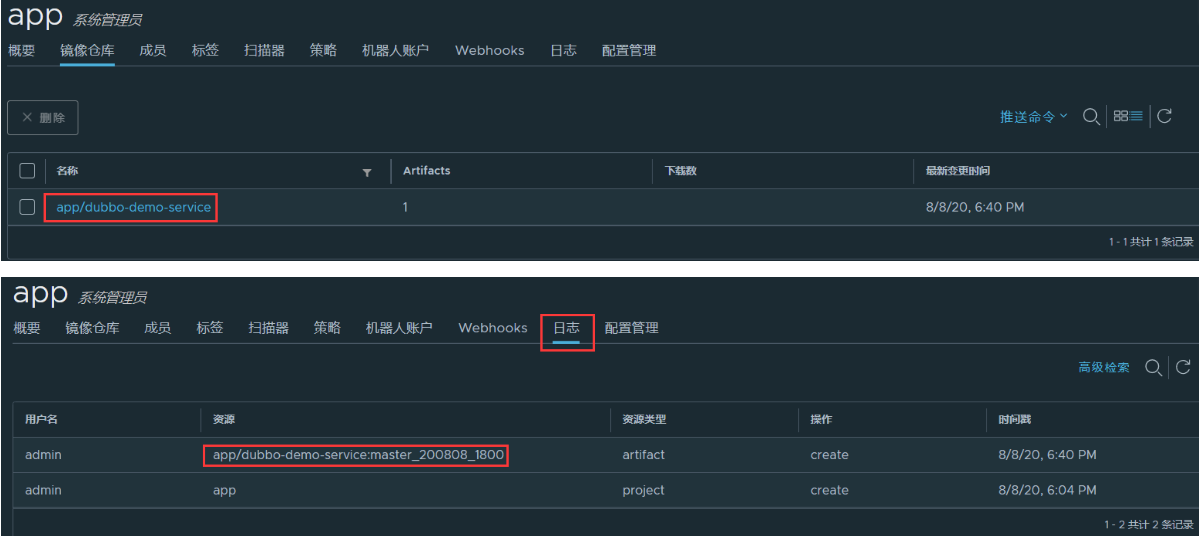

- Check that the Harbor registry holds an image with the expected tag

- Inspect the Jenkins container

    [root@vms21 ~]# kubectl get po -n infra
    NAME                       READY   STATUS    RESTARTS   AGE
    jenkins-684d5f5d8b-5x274   1/1     Running   0          6h18m
    [root@vms21 ~]# kubectl exec -n infra jenkins-684d5f5d8b-5x274 -- ls -l /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service
    total 0
    drwxr-xr-x 6 root root 101 Aug 8 18:12 1
    drwxr-xr-x 6 root root 119 Aug 8 18:39 2
    [root@vms21 ~]# kubectl exec -n infra jenkins-684d5f5d8b-5x274 -- ls -a /root/.m2/repository
    .
    ..
    aopalliance
    asm
    backport-util-concurrent
    ch
    classworlds
    com
    commons-cli
    commons-io
    commons-lang
    commons-logging
    io
    javax
    jline
    junit
    log4j
    org

This is where build output is stored. Each numbered directory is one build of the app, and each has its own Dockerfile.

The first build downloads many third-party libraries (Java dependencies) and is slow. The downloaded libraries can be persisted so that builds do not slow down again after the pod restarts. The cache directory is /root/.m2/repository.
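One way to persist the dependency cache, offered here as an option rather than something the original setup does, is to point Maven's local repository at a directory under the NFS-backed /var/jenkins_home with the standard `maven.repo.local` property. The helper name `mvn_cmd_with_repo` and the directory `m2-repository` are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: build a value for the mvn_cmd job parameter that keeps the local
# repository on persistent storage instead of /root/.m2/repository.
mvn_cmd_with_repo() {
    # $1: repository directory, remaining args: the usual mvn arguments
    repo=$1
    shift
    printf 'mvn -Dmaven.repo.local=%s %s\n' "$repo" "$*"
}

mvn_cmd_with_repo /var/jenkins_home/m2-repository clean package -Dmaven.test.skip=true
# prints mvn -Dmaven.repo.local=/var/jenkins_home/m2-repository clean package -Dmaven.test.skip=true
```

The printed string still starts with mvn, so it fits the pipeline's build stage, which prefixes the command with the selected Maven bin directory. Mounting a volume at /root/.m2 in the Jenkins deployment would be an alternative.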

Deliver dubbo-service to k8s

On the operations host vms200

    [root@vms200 ~]# mkdir /data/k8s-yaml/dubbo-demo-service
    [root@vms200 ~]# cd /data/k8s-yaml/dubbo-demo-service

Prepare the k8s manifest: /data/k8s-yaml/dubbo-demo-service/deployment.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-demo-service
      namespace: app
      labels:
        name: dubbo-demo-service
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-demo-service
      template:
        metadata:
          labels:
            app: dubbo-demo-service
            name: dubbo-demo-service
        spec:
          containers:
          - name: dubbo-demo-service
            image: harbor.op.com/app/dubbo-demo-service:master_200808_1800
            ports:
            - containerPort: 20880
              protocol: TCP
            env:
            - name: JAR_BALL
              value: dubbo-server.jar
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

- Change image to match the tag of the image you built.

- The dubbo server only registers with zk and talks to the dubbo web side through zk. It does not serve external traffic, so it needs no Service or Ingress resource.

Create the app namespace (run once on vms21 or vms22)

Business resources and operations resources should be isolated by namespace, so create a dedicated namespace app.

    [root@vms21 ~]# kubectl create ns app

Create the secret resource (run once on vms21 or vms22)

    kubectl -n app \
      create secret docker-registry harbor \
      --docker-server=harbor.op.com \
      --docker-username=admin \
      --docker-password=Harbor12543

Apply the manifest http://k8s-yaml.op.com/dubbo-demo-service/deployment.yaml (run once on vms21 or vms22):

    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-demo-service/deployment.yaml
    deployment.apps/dubbo-demo-service created

Check the running container and the information in zk

- Check the pod log

    [root@vms21 ~]# kubectl get po -n app
    NAME                                  READY   STATUS    RESTARTS   AGE
    dubbo-demo-service-564b47c8fd-4kkdg   1/1     Running   0          18s
    [root@vms21 ~]# kubectl -n app logs dubbo-demo-service-564b47c8fd-4kkdg --tail=2
    Dubbo server started
    Dubbo 服务端已经启动

- Check whether dubbo-demo-service has connected to ZK

    [root@vms21 ~]# sh /opt/zookeeper/bin/zkCli.sh   # or: /opt/zookeeper/bin/zkCli.sh -server localhost
    [zk: localhost:2181(CONNECTED) 0] ls /
    [dubbo, zookeeper]
    [zk: localhost:2181(CONNECTED) 1] ls /dubbo
    [com.od.dubbotest.api.HelloService]
    [zk: localhost:2181(CONNECTED) 2]

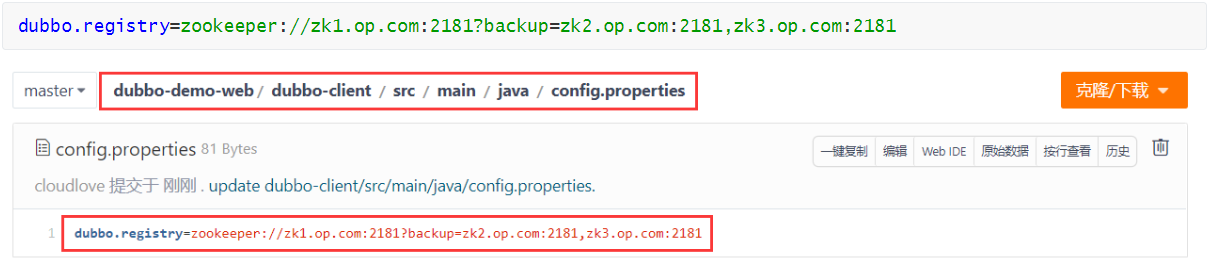

The zk address that dubbo-demo-service connects to is set in the configuration file dubbo-server/src/main/java/config.properties:

dubbo.registry=zookeeper://zk1.op.com:2181?backup=zk2.op.com:2181,zk3.op.com:2181
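The registry value names one primary ZooKeeper node and lists the others in the backup query parameter. A small sketch that assembles the same string from a host list, useful when the zk hostnames differ in another environment; `registry_url` is a hypothetical helper and the client port 2181 is taken from the zoo.cfg shown earlier.

```shell
#!/bin/sh
# Sketch: build a dubbo registry URL of the form
# zookeeper://<primary>:2181?backup=<host2>:2181,<host3>:2181
registry_url() {
    # $1: primary ZooKeeper host, remaining args: backup hosts
    primary=$1
    shift
    url="zookeeper://${primary}:2181"
    sep='?backup='
    for host in "$@"; do
        url="${url}${sep}${host}:2181"
        sep=','
    done
    printf '%s\n' "$url"
}

registry_url zk1.op.com zk2.op.com zk3.op.com
# prints zookeeper://zk1.op.com:2181?backup=zk2.op.com:2181,zk3.op.com:2181
```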

- 后续也可以在

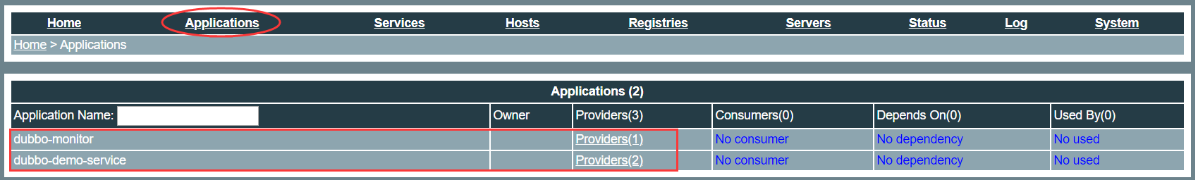

Monitor页面查看:Applications > dubbo-demo-service

The dubbo-monitor tool

GitHub: https://github.com/Jeromefromcn/dubbo-monitor

On vms200

Build the dubbo-monitor image

- Get the dubbo-monitor source

Directory: /opt/src (install git first: yum install git -y)

[root@vms200 src]# git clone https://github.com/Jeromefromcn/dubbo-monitor.git

Alternatively, download the archive with wget and unzip it:

    [root@vms200 src]# wget https://github.com/Jeromefromcn/dubbo-monitor/archive/master.zip
    [root@vms200 src]# unzip master.zip

    [root@vms200 src]# ls -l |grep monitor
    drwxr-xr-x 4 root root 81 Aug 9 00:51 dubbo-monitor
    drwxr-xr-x 3 root root 69 Jul 27 2016 dubbo-monitor-master
    [root@vms200 src]# ls -l dubbo-monitor
    total 8
    -rw-r--r-- 1 root root 155 Aug 9 00:51 Dockerfile
    drwxr-xr-x 5 root root  40 Aug 9 00:51 dubbo-monitor-simple
    -rw-r--r-- 1 root root  16 Aug 9 00:51 README.md

- Edit conf/dubbo_origin.properties (directory: /opt/src/dubbo-monitor/dubbo-monitor-simple)

    [root@vms200 src]# cd dubbo-monitor/dubbo-monitor-simple
    [root@vms200 dubbo-monitor-simple]# vi conf/dubbo_origin.properties

    dubbo.container=log4j,spring,registry,jetty
    dubbo.application.name=dubbo-monitor
    dubbo.application.owner=
    dubbo.registry.address=zookeeper://zk1.op.com:2181?backup=zk2.op.com:2181,zk3.op.com:2181
    dubbo.protocol.port=20880
    dubbo.jetty.port=8080
    dubbo.jetty.directory=/dubbo-monitor-simple/monitor
    dubbo.charts.directory=/dubbo-monitor-simple/charts
    dubbo.statistics.directory=/dubbo-monitor-simple/statistics
    dubbo.log4j.file=logs/dubbo-monitor-simple.log
    dubbo.log4j.level=WARN

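- Edit the startup script bin/start.sh (directory: /opt/src/dubbo-monitor/dubbo-monitor-simple)

    [root@vms200 dubbo-monitor-simple]# sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' ./bin/start.sh && sed -r -i -e "s%^nohup(.*)%exec \1%" ./bin/start.sh

The java process must run in the foreground: replace nohup with exec, remove the trailing &, and delete every line after the exec java line. The two sed commands above handle the line deletion and the nohup replacement. They leave a trailing & in place, so if the line still ends with &, remove it by hand.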
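The three edits can be rehearsed on a throwaway file before touching bin/start.sh. This is a sketch under assumptions: it needs GNU sed, the sample script content is invented, the helper name `foreground_launcher` is hypothetical, and the third sed expression (stripping the trailing &) is an addition that the original one-liner does not include.

```shell
#!/bin/sh
# Sketch: turn a "nohup java ... &" launcher into a foreground "exec java ..." (GNU sed).
foreground_launcher() {
    # $1: path to the start script (edited in place)
    # 1) print the nohup line once, then swallow it and every line after it
    sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' "$1"
    # 2) nohup -> exec, so java replaces the shell as the container's main process
    sed -r -i -e 's%^nohup(.*)%exec\1%' "$1"
    # 3) drop the trailing "&" so java is not sent to the background
    sed -r -i -e '/^exec /s%[[:space:]]*&[[:space:]]*$%%' "$1"
}

# Demo on a temporary file with made-up content
demo=$(mktemp)
printf '%s\n' '#!/bin/bash' 'nohup java -cp lib Main > out.log 2>&1 &' 'sleep 1' 'echo OK' > "$demo"
foreground_launcher "$demo"
cat "$demo"
rm -f "$demo"
```

After the call, the demo file keeps the shebang and a single `exec java -cp lib Main > out.log 2>&1` line. The `2>&1` redirection survives because step 3 only matches an ampersand at the very end of the line.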

    ...
    JAVA_MEM_OPTS=""
    BITS=`java -version 2>&1 | grep -i 64-bit`
    if [ -n "$BITS" ]; then
        JAVA_MEM_OPTS=" -server -Xmx128m -Xms128m -Xmn32m -XX:PermSize=16m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=16m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "
    else
        JAVA_MEM_OPTS=" -server -Xms128m -Xmx128m -XX:PermSize=16m -XX:SurvivorRatio=2 -XX:+UseParallelGC "
    fi
    echo -e "Starting the $SERVER_NAME ...\c"
    exec java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1

Adjust the JVM memory settings (this lab has little memory; tune them to actual needs in production).

- Create the directory /data/dockerfile/dubbo-monitor and copy the files into it (working directory: /opt/src)

    [root@vms200 src]# mkdir /data/dockerfile/dubbo-monitor
    [root@vms200 src]# cp -a dubbo-monitor/* /data/dockerfile/dubbo-monitor/
    [root@vms200 src]# cd /data/dockerfile/dubbo-monitor
    [root@vms200 dubbo-monitor]# ll
    total 8
    -rw-r--r-- 1 root root 155 Aug 9 00:51 Dockerfile
    drwxr-xr-x 5 root root  40 Aug 9 00:51 dubbo-monitor-simple
    -rw-r--r-- 1 root root  16 Aug 9 00:51 README.md
    [root@vms200 dubbo-monitor]# cat Dockerfile

    FROM jeromefromcn/docker-alpine-java-bash
    MAINTAINER Jerome Jiang
    COPY dubbo-monitor-simple/ /dubbo-monitor-simple/
    CMD /dubbo-monitor-simple/bin/start.sh

- Build the image

    [root@vms200 dubbo-monitor]# docker build . -t harbor.op.com/infra/dubbo-monitor:latest
    Sending build context to Docker daemon 26.21MB
    Step 1/4 : FROM jeromefromcn/docker-alpine-java-bash
    latest: Pulling from jeromefromcn/docker-alpine-java-bash
    Image docker.io/jeromefromcn/docker-alpine-java-bash:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/
    420890c9e918: Pull complete
    a3ed95caeb02: Pull complete
    4a5cf8bc2931: Pull complete
    6a17cae86292: Pull complete
    4729ccfc7091: Pull complete
    Digest: sha256:658f4a5a2f6dd06c4669f8f5baeb85ca823222cb938a15cfb7f6459c8cfe4f91
    Status: Downloaded newer image for jeromefromcn/docker-alpine-java-bash:latest
     ---> 3114623bb27b
    Step 2/4 : MAINTAINER Jerome Jiang
     ---> Running in c0bb9e3cc5bc
    Removing intermediate container c0bb9e3cc5bc
     ---> 1c50f76b9528
    Step 3/4 : COPY dubbo-monitor-simple/ /dubbo-monitor-simple/
     ---> 576fdd3573d0
    Step 4/4 : CMD /dubbo-monitor-simple/bin/start.sh
     ---> Running in 447c96bca8bd
    Removing intermediate container 447c96bca8bd
     ---> 76fb6a7a58f3
    Successfully built 76fb6a7a58f3
    Successfully tagged harbor.op.com/infra/dubbo-monitor:latest

- Push to the Harbor registry

    [root@vms200 dubbo-monitor]# docker push harbor.op.com/infra/dubbo-monitor:latest
    The push refers to repository [harbor.op.com/infra/dubbo-monitor]
    ea1f33d4dc16: Pushed
    6c05aa02bec9: Pushed
    1bdff01a06a9: Pushed
    5f70bf18a086: Mounted from public/pause
    e271a1fb1dfc: Pushed
    c56b7dabbc7a: Pushed
    latest: digest: sha256:f7d344f02a54594c4b3db6ab91cd023f6d1d0086f40471e807bfcd00b6f2f384 size: 2400

Create the k8s manifests

- Prepare the directory /data/k8s-yaml/dubbo-monitor

    [root@vms200 ~]# mkdir /data/k8s-yaml/dubbo-monitor
    [root@vms200 ~]# cd /data/k8s-yaml/dubbo-monitor

- Create the Deployment manifest: /data/k8s-yaml/dubbo-monitor/deployment.yaml

    [root@vms200 dubbo-monitor]# vi /data/k8s-yaml/dubbo-monitor/deployment.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-monitor
      namespace: infra
      labels:
        name: dubbo-monitor
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-monitor
      template:
        metadata:
          labels:
            app: dubbo-monitor
            name: dubbo-monitor
        spec:
          containers:
          - name: dubbo-monitor
            image: harbor.op.com/infra/dubbo-monitor:latest
            ports:
            - containerPort: 8080
              protocol: TCP
            - containerPort: 20880
              protocol: TCP
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

- Create the Service manifest: /data/k8s-yaml/dubbo-monitor/svc.yaml

    [root@vms200 dubbo-monitor]# vi /data/k8s-yaml/dubbo-monitor/svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: dubbo-monitor
      namespace: infra
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      selector:
        app: dubbo-monitor

- Create the Ingress manifest: /data/k8s-yaml/dubbo-monitor/ingress.yaml

    [root@vms200 dubbo-monitor]# vi /data/k8s-yaml/dubbo-monitor/ingress.yaml

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: dubbo-monitor
      namespace: infra
    spec:
      rules:
      - host: dubbo-monitor.op.com
        http:
          paths:
          - path: /
            backend:
              serviceName: dubbo-monitor
              servicePort: 80

The servicePort in the Ingress must match the port of the Service.

Apply the manifests

Run once on vms21 or vms22:

    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-monitor/deployment.yaml
    deployment.apps/dubbo-monitor created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-monitor/svc.yaml
    service/dubbo-monitor created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-monitor/ingress.yaml
    ingress.extensions/dubbo-monitor created
    [root@vms21 ~]# kubectl get po -n infra
    NAME                             READY   STATUS    RESTARTS   AGE
    dubbo-monitor-5fbd49ff49-wv6w7   1/1     Running   0          53s
    jenkins-684d5f5d8b-5x274         1/1     Running   0          21h

Add the DNS record

On the DNS host vms11:

    [root@vms11 ~]# vi /var/named/op.com.zone

Append one line at the end of the file:

    dubbo-monitor A 192.168.26.10

Remember to roll the serial forward.

Restart and verify

    [root@vms11 ~]# systemctl restart named
    [root@vms11 ~]# dig -t A dubbo-monitor.op.com @192.168.26.11 +short
    192.168.26.10

Open the monitor web page in a browser (http://dubbo-monitor.op.com/, the host defined in the Ingress)

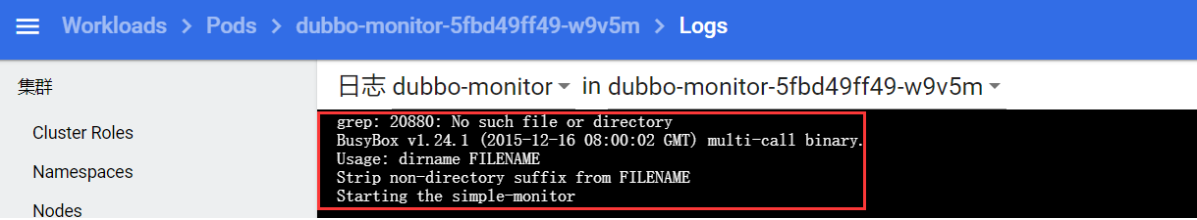

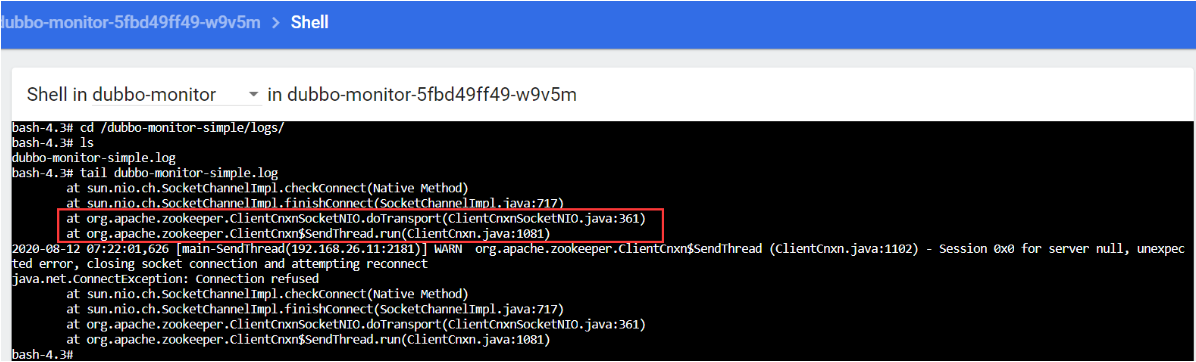

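If the browser reports Bad Gateway:

- Check the pod log

- Enter the pod and check the dubbo-monitor startup log

In this case the cause turned out to be that zk had not been started.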

dubbo service consumer (dubbo-demo-consumer)

Run one CI build with Jenkins

Get the code from the private repository

- The dubbo-service built earlier is the microservice provider. Now create a microservice consumer.

- First fork https://gitee.com/sunx66/dubbo-demo-service into your own account, make the fork private, and change the zk configuration:

    dubbo.registry=zookeeper://zk1.op.com:2181?backup=zk2.op.com:2181,zk3.op.com:2181

- Use the code in the private repository git@gitee.com:cloudlove2007/dubbo-demo-web.git to build the dubbo service consumer.

Open the Jenkins page, log in as admin, and prepare to build the dubbo-demo project.

- Click Build with Parameters and fill in or select the following values:

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-consumer |
| image_name | app/dubbo-demo-consumer |
| git_repo | git@gitee.com:cloudlove2007/dubbo-demo-web.git |
| git_ver | master |
| add_tag | 200812_1830 |
| mvn_dir | ./ |
| target_dir | ./dubbo-client/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/jre8:8u112 |
| maven | 3.6.3-8u261 |

- Click Build, switch to Console Output to follow the build output, and wait for the build to finish. (If it fails, troubleshoot until it succeeds.)

    ...
    [Pipeline] End of Pipeline
    Finished: SUCCESS

Open Blue Ocean:

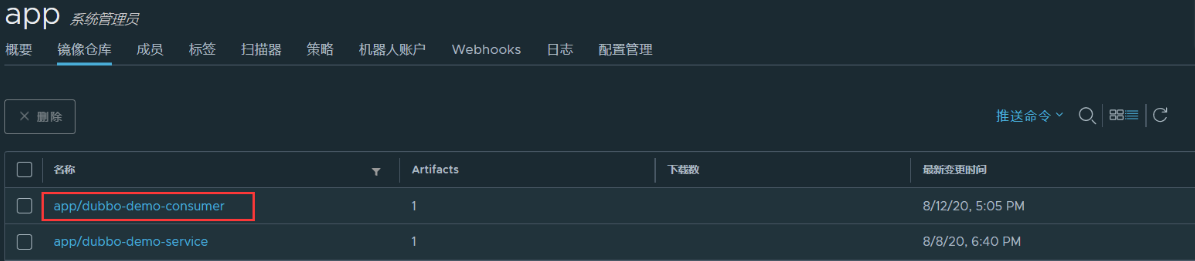

- Check the image in the Harbor registry

Prepare the k8s manifests

On the operations host vms200, prepare the manifests.

Prepare the directory

    [root@vms200 ~]# mkdir /data/k8s-yaml/dubbo-consumer
    [root@vms200 ~]# cd /data/k8s-yaml/dubbo-consumer
    [root@vms200 dubbo-consumer]#

Deployment: /data/k8s-yaml/dubbo-consumer/deployment.yaml (change the image tag to the one you built)

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
      labels:
        name: dubbo-demo-consumer
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-demo-consumer
      template:
        metadata:
          labels:
            app: dubbo-demo-consumer
            name: dubbo-demo-consumer
        spec:
          containers:
          - name: dubbo-demo-consumer
            image: harbor.op.com/app/dubbo-demo-consumer:master_200813_0909
            ports:
            - containerPort: 8080
              protocol: TCP
            - containerPort: 20880
              protocol: TCP
            env:
            - name: JAR_BALL
              value: dubbo-client.jar
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

Service: /data/k8s-yaml/dubbo-consumer/svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      selector:
        app: dubbo-demo-consumer

Ingress: /data/k8s-yaml/dubbo-consumer/ingress.yaml

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
    spec:
      rules:
      - host: dubbo-demo.op.com
        http:
          paths:
          - path: /
            backend:
              serviceName: dubbo-demo-consumer
              servicePort: 80

servicePort must match the port in the Service.

Apply the manifests

Run on any one k8s compute node (vms21 or vms22):

    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-consumer/deployment.yaml
    deployment.apps/dubbo-demo-consumer created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-consumer/svc.yaml
    service/dubbo-demo-consumer created
    [root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-consumer/ingress.yaml
    ingress.extensions/dubbo-demo-consumer created

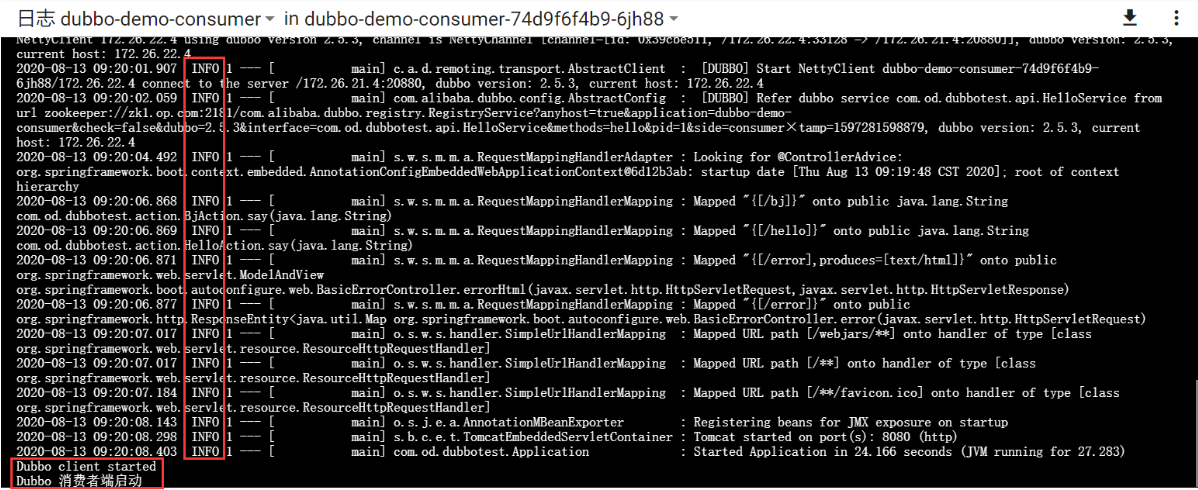

检查启动是否成功:

[root@vms21 ~]# kubectl get pod -n appNAME READY STATUS RESTARTS AGEdubbo-demo-consumer-74d9f6f4b9-6jh88 1/1 Running 0 10sdubbo-demo-service-564b47c8fd-4kkdg 1/1 Running 3 4d13h[root@vms21 ~]# kubectl logs dubbo-demo-consumer-74d9f6f4b9-6jh88 -n app --tail=2Dubbo client startedDubbo 消费者端启动

在dashboard查看pod日志:

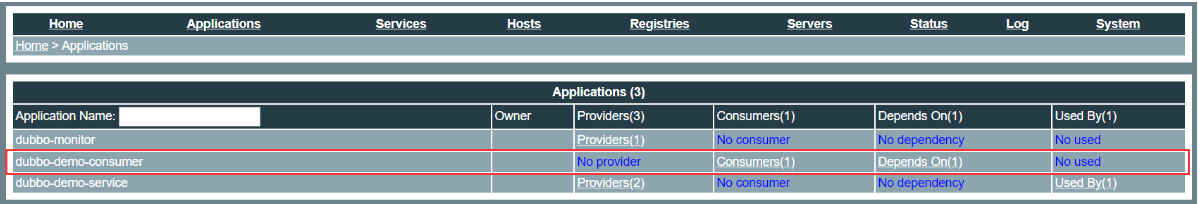

在dubbo-monitor检查是否已经注册成功:

解析域名

在DNS主机vms11上:

[root@vms11 ~]# vi /var/named/op.com.zone

dubbo-demo A 192.168.26.10

重启服务

[root@vms11 ~]# systemctl restart named[root@vms11 ~]# dig -t A dubbo-demo.op.com @192.168.26.11 +short192.168.26.10

Open in a browser

http://dubbo-demo.op.com/hello?name=k8s-dubbo

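Maintaining the dubbo microservice cluster in practice

Update (rolling update)

- Change the code and push it to git (release)

- Run CI with Jenkins (pull the code, compile with maven, build the image, push it to the registry)

- Edit and apply the k8s manifest, or do the update directly in the k8s dashboard

Scale out (scaling)

- Do it directly in the k8s dashboard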
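Both operations can also be done from the command line with kubectl, as an alternative to editing the manifest or using the dashboard. The sketch below only prints the commands so they can be reviewed before running. `rollout_cmd` and `scale_cmd` are hypothetical helpers, and the container name is assumed to equal the deployment name, as in the manifests shown earlier.

```shell
#!/bin/sh
# Sketch: print the kubectl commands for a rolling update and for scaling.
rollout_cmd() {
    # $1: namespace, $2: deployment (container assumed to share its name), $3: new image
    printf 'kubectl -n %s set image deployment/%s %s=%s\n' "$1" "$2" "$2" "$3"
}

scale_cmd() {
    # $1: namespace, $2: deployment, $3: desired replica count
    printf 'kubectl -n %s scale deployment/%s --replicas=%s\n' "$1" "$2" "$3"
}

rollout_cmd app dubbo-demo-service harbor.op.com/app/dubbo-demo-service:master_200808_1800
scale_cmd app dubbo-demo-service 2
```

Changes made this way are not written back to the YAML files under /data/k8s-yaml, so update the manifest as well if it is meant to remain the source of truth.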

With that, the dubbo microservices have been delivered to the k8s cluster.