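k8s-centos8u2 cluster: a log collection and analysis platform for Kubernetes clusters

Converting the dubbo-demo-web project to run on Tomcat

Tomcat produces a large volume of logs, so this conversion gives the log-collection experiments that follow a convenient source of Tomcat logs.

Tomcat website: http://tomcat.apache.org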

| Software | Host or registry | Notes |
|---|---|---|
| apache-tomcat-9.0.37 | vms200 | Software baked into the image |
| harbor.op.com/public/jre:8u112 | harbor.op.com/public | Base image |
| jmx_javaagent-0.14.0.jar | vms200 | Software baked into the image |

1 Prepare the Tomcat base image

Prepare the Tomcat binary package

On the ops host vms200:

Tomcat 9 download link: https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-9/v9.0.37/bin/apache-tomcat-9.0.37.tar.gz

Tomcat 8 download link: https://archive.apache.org/dist/tomcat/tomcat-8/v8.5.50/bin/apache-tomcat-8.5.50.tar.gz

- Download and extract

      [root@vms200 ~]# cd /opt/src
      [root@vms200 src]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-9/v9.0.37/bin/apache-tomcat-9.0.37.tar.gz
      --2020-09-07 14:33:33-- https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-9/v9.0.37/bin/apache-tomcat-9.0.37.tar.gz
      Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.8.193, 2402:f000:1:408:8100::1
      Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.8.193|:443... connected.
      HTTP request sent, awaiting response... 200 OK
      Length: 11211292 (11M) [application/octet-stream]
      Saving to: ‘apache-tomcat-9.0.37.tar.gz’
      apache-tomcat-9.0.37.tar.gz 100%[============================================================================>] 10.69M 1.36MB/s in 9.8s
      2020-09-07 14:33:46 (1.09 MB/s) - ‘apache-tomcat-9.0.37.tar.gz’ saved [11211292/11211292]
      [root@vms200 src]# ls -l|grep tomcat
      -rw-r--r-- 1 root root 11211292 Jul 1 04:34 apache-tomcat-9.0.37.tar.gz
      [root@vms200 src]# mkdir -p /data/dockerfile/tomcat9
      [root@vms200 src]# tar xf apache-tomcat-9.0.37.tar.gz -C /data/dockerfile/tomcat9
      [root@vms200 src]# cd /data/dockerfile/tomcat9

- Delete the bundled web apps. The ROOT path is needed at deployment: the command below empties webapps/, and the pipeline in section 3 later adds the application under webapps/ROOT

      [root@vms200 tomcat9]# rm -fr apache-tomcat-9.0.37/webapps/*

Basic Tomcat configuration

Disable the AJP port

    [root@vms200 tomcat9]# vi apache-tomcat-9.0.37/conf/server.xml

    <!-- Define an AJP 1.3 Connector on port 8009 -->
    <!--
    <Connector protocol="AJP/1.3"
               address="::1"
               port="8009"
               redirectPort="8443" />
    -->

Configure logging

- Remove the 3manager and 4host-manager handlers
- Comment out all logging configuration for 3manager and 4host-manager
- Change the log level to INFO

Edit conf/logging.properties accordingly:

    [root@vms200 tomcat9]# vi apache-tomcat-9.0.37/conf/logging.properties

    ...
    handlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler
    ...
    1catalina.org.apache.juli.AsyncFileHandler.level = INFO
    ...
    2localhost.org.apache.juli.AsyncFileHandler.level = INFO
    ...
    #3manager.org.apache.juli.AsyncFileHandler.level = FINE
    #3manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs
    #3manager.org.apache.juli.AsyncFileHandler.prefix = manager.
    #3manager.org.apache.juli.AsyncFileHandler.maxDays = 90
    #3manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8
    #4host-manager.org.apache.juli.AsyncFileHandler.level = FINE
    #4host-manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs
    #4host-manager.org.apache.juli.AsyncFileHandler.prefix = host-manager.
    #4host-manager.org.apache.juli.AsyncFileHandler.maxDays = 90
    #4host-manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8
    java.util.logging.ConsoleHandler.level = INFO
    ...
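One way to double-check the edit is to count the active (uncommented) lines that still mention the two removed handlers. This is only a sketch: the helper name `active_manager_lines` is hypothetical, and it assumes the handler prefixes 3manager and 4host-manager used in this file.

```shell
# Hypothetical helper (not part of Tomcat): print how many non-comment
# lines of a logging.properties file still reference the manager handlers.
active_manager_lines() {
    # Drop comment lines first, then count the remaining matches.
    grep -vE '^[[:space:]]*#' "$1" | grep -cE '3manager|4host-manager'
}

# Usage, with the path from the steps above; 0 means nothing is left active:
# active_manager_lines apache-tomcat-9.0.37/conf/logging.properties
```

A result other than 0 would suggest that the handlers line or one of the per-handler settings was missed.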

Prepare the Dockerfile

On the ops host vms200, in /data/dockerfile/tomcat9:

    [root@vms200 tomcat9]# vi Dockerfile

    FROM harbor.op.com/public/jre:8u112
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        echo 'Asia/Shanghai' >/etc/timezone
    ENV CATALINA_HOME /opt/tomcat
    ENV LANG zh_CN.UTF-8
    ADD apache-tomcat-9.0.37 /opt/tomcat
    ADD config.yml /opt/prom/config.yml
    ADD jmx_javaagent-0.14.0.jar /opt/prom/jmx_javaagent-0.14.0.jar
    WORKDIR /opt/tomcat
    ADD entrypoint.sh /entrypoint.sh
    CMD ["/entrypoint.sh"]

- config.yml

      [root@vms200 tomcat9]# vi config.yml

      ---
      rules:
        - pattern: '.*'

- jmx_javaagent-0.14.0.jar

  Download: https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/

      [root@vms200 tomcat9]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.14.0/jmx_prometheus_javaagent-0.14.0.jar
      ...
      [root@vms200 tomcat9]# ls -l jmx*
      -rw-r--r-- 1 root root 413862 Sep 4 20:20 jmx_prometheus_javaagent-0.14.0.jar
      [root@vms200 tomcat9]# mv jmx_prometheus_javaagent-0.14.0.jar jmx_javaagent-0.14.0.jar
      [root@vms200 tomcat9]# ls -l jmx*
      -rw-r--r-- 1 root root 413862 Sep 4 20:20 jmx_javaagent-0.14.0.jar

- entrypoint.sh

      [root@vms200 tomcat9]# vi entrypoint.sh

      #!/bin/bash
      # JMX exporter agent: listens on <pod IP>:<M_PORT, default 12346>
      M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.14.0.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"
      C_OPTS=${C_OPTS}
      MIN_HEAP=${MIN_HEAP:-"128m"}
      MAX_HEAP=${MAX_HEAP:-"128m"}
      JAVA_OPTS=${JAVA_OPTS:-"-Xmn384m -Xss256k -Duser.timezone=GMT+08 -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:CMSFullGCsBeforeCompaction=0 -XX:+CMSClassUnloadingEnabled -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+PrintClassHistogram -Dfile.encoding=UTF8 -Dsun.jnu.encoding=UTF8"}
      CATALINA_OPTS="${CATALINA_OPTS}"
      JAVA_OPTS="${M_OPTS} ${C_OPTS} -Xms${MIN_HEAP} -Xmx${MAX_HEAP} ${JAVA_OPTS}"
      # Inject both variables right after the first line of catalina.sh
      sed -i -e "1a\JAVA_OPTS=\"$JAVA_OPTS\"" -e "1a\CATALINA_OPTS=\"$CATALINA_OPTS\"" /opt/tomcat/bin/catalina.sh
      # With this redirection order, stdout is appended to stdout.log while stderr still goes to the container's stdout
      cd /opt/tomcat && /opt/tomcat/bin/catalina.sh run 2>&1 >> /opt/tomcat/logs/stdout.log

MIN_HEAP=${MIN_HEAP:-"128m"} # initial (minimum) JVM heap size, passed as -Xms

MAX_HEAP=${MAX_HEAP:-"128m"} # maximum JVM heap size, passed as -Xmx

    [root@vms200 tomcat9]# chmod +x entrypoint.sh
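The `${VAR:-default}` expansions in entrypoint.sh fall back to the default only when the variable is unset or empty, so the heap can be overridden per Deployment through environment variables. A minimal sketch of that behaviour follows; the function name `heap_flags` is hypothetical and is not part of the image.

```shell
# Hypothetical helper: print the heap flags entrypoint.sh would derive
# from MIN_HEAP / MAX_HEAP, using the same 128m defaults.
heap_flags() {
    echo "-Xms${MIN_HEAP:-128m} -Xmx${MAX_HEAP:-128m}"
}

# With neither variable set, the defaults apply:
#   heap_flags
# With overrides, e.g. from the container's env section:
#   MIN_HEAP=256m MAX_HEAP=512m heap_flags
```

Keeping the defaults inside the script means the same base image can serve small test pods and larger production pods without a rebuild.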

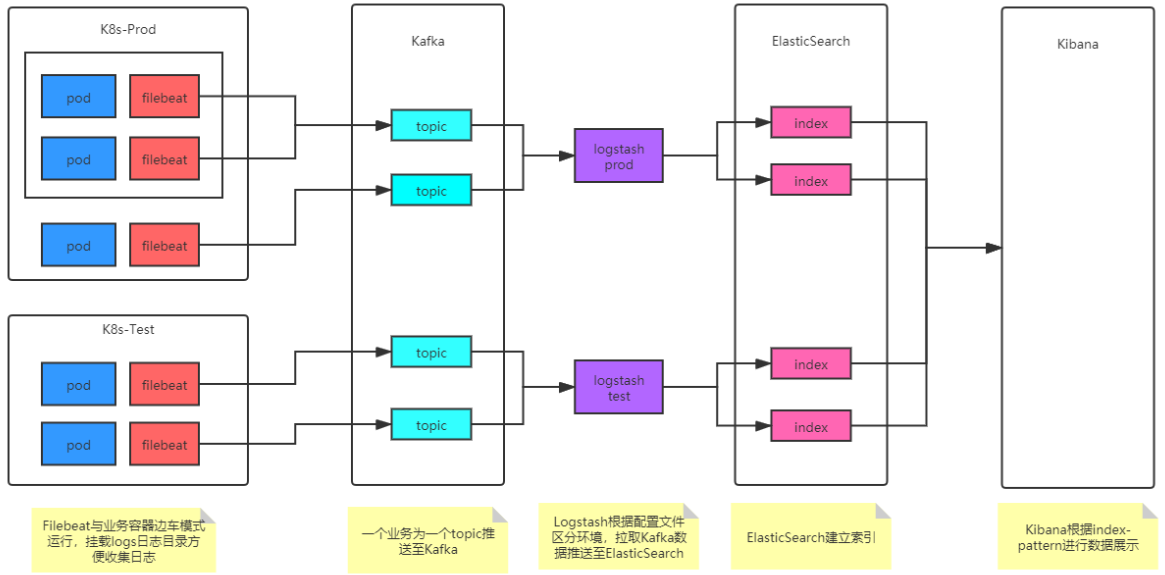

Build and push the image

    [root@vms200 tomcat9]# docker build . -t harbor.op.com/base/tomcat:v9.0.37
    Sending build context to Docker daemon 11.73MB
    Step 1/10 : From harbor.op.com/public/jre:8u112
    ---> fa3a085d6ef1
    Step 2/10 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
    ---> Using cache
    ---> 3d2513af12bb
    Step 3/10 : ENV CATALINA_HOME /opt/tomcat
    ---> Using cache
    ---> d888b03e2e39
    Step 4/10 : ENV LANG zh_CN.UTF-8
    ---> Using cache
    ---> 76529c3a3e5e
    Step 5/10 : ADD apache-tomcat-9.0.37 /opt/tomcat
    ---> 24939d226378
    Step 6/10 : ADD config.yml /opt/prom/config.yml
    ---> b5879071cddb
    Step 7/10 : ADD jmx_javaagent-0.14.0.jar /opt/prom/jmx_javaagent-0.14.0.jar
    ---> e61fe1aecd91
    Step 8/10 : WORKDIR /opt/tomcat
    ---> Running in 61c50f87b795
    Removing intermediate container 61c50f87b795
    ---> 57a7c35dca1b
    Step 9/10 : ADD entrypoint.sh /entrypoint.sh
    ---> 449f9c27e7c3
    Step 10/10 : CMD ["/entrypoint.sh"]
    ---> Running in ccb74ec8ae84
    Removing intermediate container ccb74ec8ae84
    ---> 2873d09a2a27
    Successfully built 2873d09a2a27
    Successfully tagged harbor.op.com/base/tomcat:v9.0.37
    [root@vms200 tomcat9]# docker images |grep tomcat
    harbor.op.com/base/tomcat v9.0.37 2873d09a2a27 51 seconds ago 374MB

    [root@vms200 tomcat9]# docker push harbor.op.com/base/tomcat:v9.0.37
    The push refers to repository [harbor.op.com/base/tomcat]
    ...
    v9.0.37: digest: sha256:bfb7ec490f68af0997691f0b4686d618f22ebe88412d6eba2e423fcef3ed9425 size: 2409

2 Convert the dubbo-demo-web project

The code changes below are for reference only. For this lab, simply use the tomcat branch.

Modify dubbo-client/pom.xml

/dubbo-demo-web/dubbo-client/pom.xml

    <packaging>war</packaging>

    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-tomcat</artifactId>
        </exclusion>
      </exclusions>
    </dependency>

    <dependency>
      <groupId>org.apache.tomcat</groupId>
      <artifactId>tomcat-servlet-api</artifactId>
      <version>8.0.36</version>
      <scope>provided</scope>
    </dependency>

Modify Application.java

/dubbo-demo-web/dubbo-client/src/main/java/com/od/dubbotest/Application.java

    import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
    import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
    import org.springframework.context.annotation.ImportResource;

    @ImportResource(value={"classpath*:spring-config.xml"})
    @EnableAutoConfiguration(exclude={DataSourceAutoConfiguration.class})

Create ServletInitializer.java

/dubbo-demo-web/dubbo-client/src/main/java/com/od/dubbotest/ServletInitializer.java

    package com.od.dubbotest;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.builder.SpringApplicationBuilder;
    import org.springframework.boot.context.web.SpringBootServletInitializer;
    import com.od.dubbotest.Application;

    public class ServletInitializer extends SpringBootServletInitializer {

        @Override
        protected SpringApplicationBuilder configure(SpringApplicationBuilder builder) {
            return builder.sources(Application.class);
        }
    }

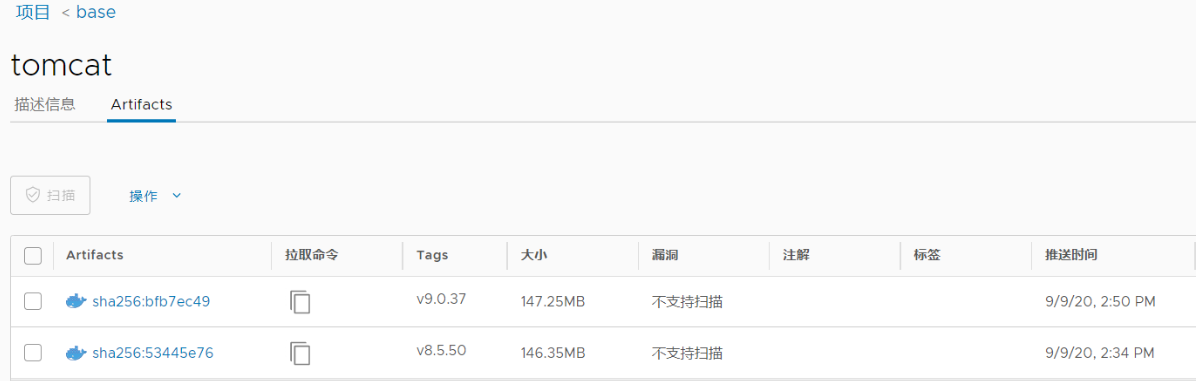

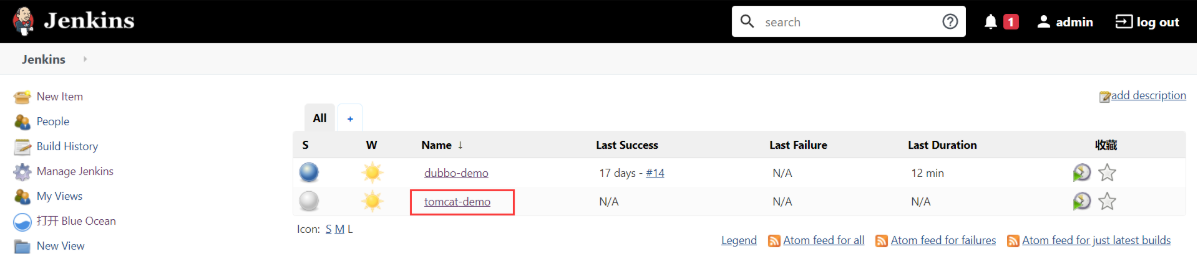

3 Create the Jenkins pipeline

Add and configure the pipeline

Log in as admin

- New Item

- Enter an item name: tomcat-demo

- Pipeline -> OK

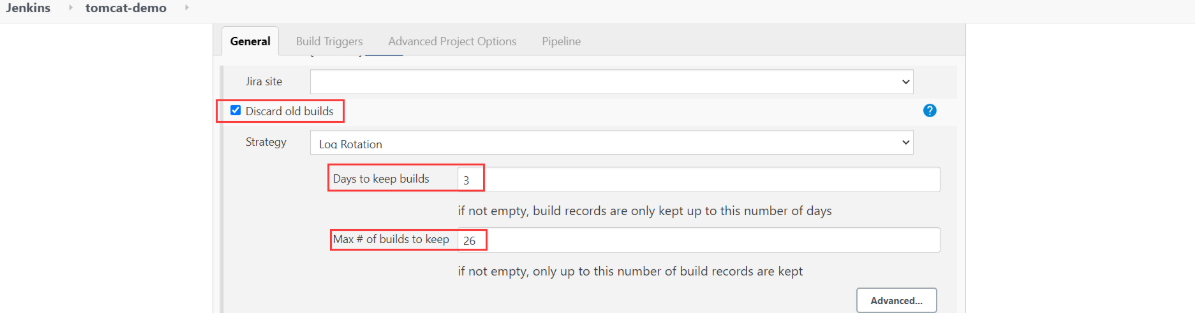

- Discard old builds
  - Days to keep builds : 3
  - Max # of builds to keep : 30

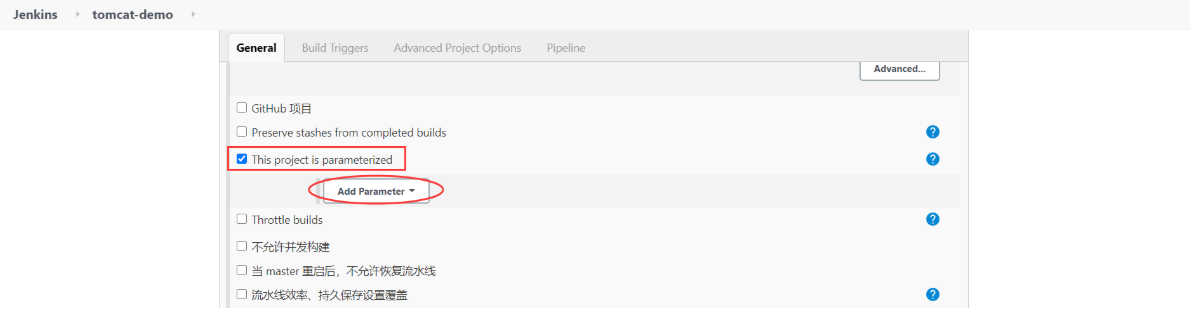

- This project is parameterized

- Add Parameter -> String Parameter
  - Name : app_name
  - Default Value :
  - Description : project name. e.g: dubbo-demo-web

- Add Parameter -> String Parameter
  - Name : image_name
  - Default Value :
  - Description : project docker image name. e.g: app/dubbo-demo-web

- Add Parameter -> String Parameter
  - Name : git_repo
  - Default Value :
  - Description : project git repository. e.g: git@gitee.com:cloudlove2007/dubbo-demo-web.git

- Add Parameter -> String Parameter
  - Name : git_ver
  - Default Value : tomcat
  - Description : git commit id of the project.

- Add Parameter -> String Parameter
  - Name : add_tag
  - Default Value :
  - Description : project docker image tag, date_timestamp recommended. e.g: 200907_1720

- Add Parameter -> String Parameter
  - Name : mvn_dir
  - Default Value : ./
  - Description : project maven directory. e.g: ./

- Add Parameter -> String Parameter
  - Name : target_dir
  - Default Value : ./dubbo-client/target
  - Description : the relative path of target file such as .jar or .war package. e.g: ./dubbo-client/target

- Add Parameter -> String Parameter
  - Name : mvn_cmd
  - Default Value : mvn clean package -Dmaven.test.skip=true
  - Description : maven command. e.g: mvn clean package -e -q -Dmaven.test.skip=true

- Add Parameter -> Choice Parameter
  - Name : base_image
  - Default Value (one choice per line):
    - base/tomcat:v9.0.37
    - base/tomcat:v8.5.57
    - base/tomcat:v8.5.50
  - Description : project base image list in harbor.op.com.

- Add Parameter -> Choice Parameter
  - Name : maven
  - Default Value (one choice per line):
    - 3.6.3-8u261
    - 3.2.5-6u025
    - 2.2.1-6u025
  - Description : different maven edition.

- Add Parameter -> String Parameter
  - Name : root_url
  - Default Value : ROOT
  - Description : webapp dir.

For every String Parameter, check Trim the string.

Pipeline Script

    pipeline {
      agent any
      stages {
        stage('pull') { //get project code from repo
          steps {
            sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
          }
        }
        stage('build') { //exec mvn cmd
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
          }
        }
        stage('unzip') { //unzip target/*.war -c target/project_dir
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && unzip *.war -d ./project_dir"
          }
        }
        stage('image') { //build image and push to registry
          steps {
            writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.op.com/${params.base_image}
    ADD ${params.target_dir}/project_dir /opt/tomcat/webapps/${params.root_url}"""
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.op.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.op.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
          }
        }
      }
    }
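The image stage names the image `harbor.op.com/<image_name>:<git_ver>_<add_tag>`. To see how the parameters combine before running a build, the composition can be sketched in shell. Both function names below are hypothetical helpers for illustration, not part of the pipeline.

```shell
# Hypothetical helper: compose the image reference that the 'image' stage
# builds and pushes. Arguments: registry, image_name, git_ver, add_tag.
image_ref() {
    echo "${1}/${2}:${3}_${4}"
}

# Hypothetical helper: produce a date_timestamp value for add_tag,
# in the same YYMMDD_HHMM style as the example 200907_1720.
default_add_tag() {
    date +%y%m%d_%H%M
}

# Example:
# image_ref harbor.op.com app/dubbo-demo-web tomcat "$(default_add_tag)"
```

Because the tag embeds both the git ref and a timestamp, each build produces a distinct image and a rollback only needs the earlier tag.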

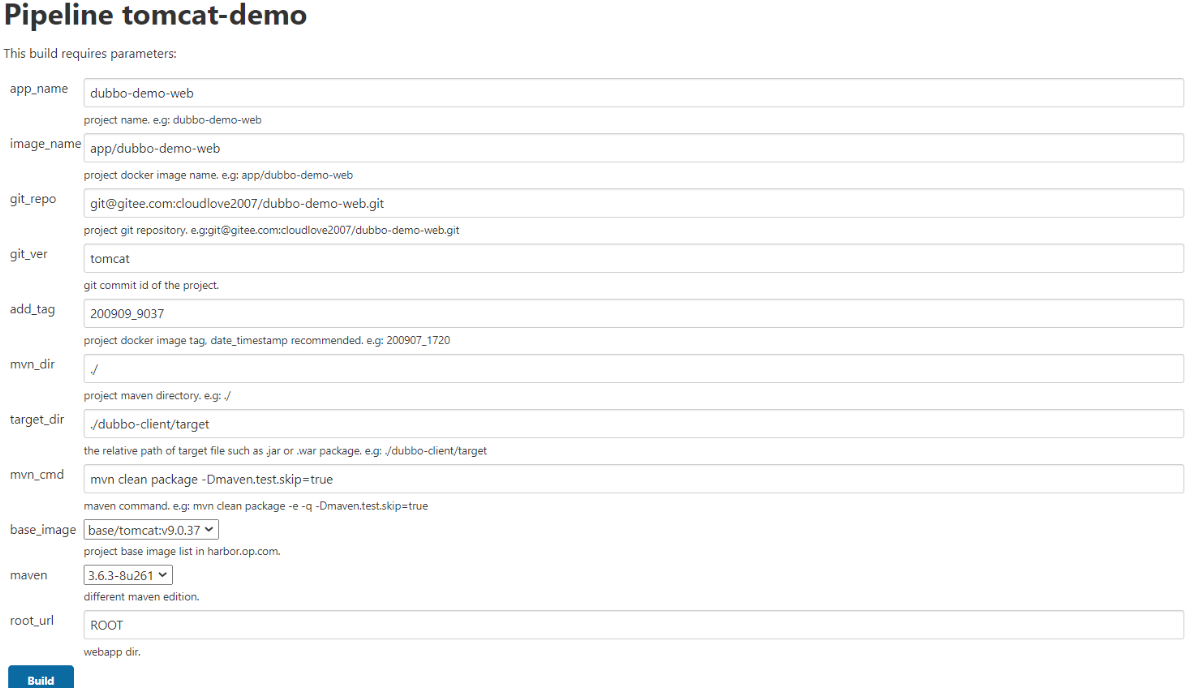

4 Build the application image

Run CI with Jenkins, then check the Harbor registry

- Build with Parameters, using these values:

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-web |
| image_name | app/dubbo-demo-web |
| git_repo | git@gitee.com:cloudlove2007/dubbo-demo-web.git |
| git_ver | tomcat |
| add_tag | 200909_9037 |
| mvn_dir | ./ |
| target_dir | ./dubbo-client/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/tomcat:v9.0.37 |
| maven | 3.6.3-8u261 |
| root_url | ROOT |

Keep app_name consistent with the git project name and with the image name in image_name; this makes cross-checking and lookup easier.


- Click Build, then switch to Console Output to follow the build

      ...
      [INFO] --- maven-war-plugin:3.0.0:war (default-war) @ dubbo-client ---
      [INFO] Packaging webapp
      [INFO] Assembling webapp [dubbo-client] in [/var/jenkins_home/workspace/tomcat-demo/dubbo-demo-web/16/dubbo-client/target/dubbo-client]
      [INFO] Processing war project
      [INFO] Webapp assembled in [5684 msecs]
      [INFO] Building war: /var/jenkins_home/workspace/tomcat-demo/dubbo-demo-web/16/dubbo-client/target/dubbo-client.war
      [INFO]
      [INFO] ------------------< com.od.dubbotest:dubbo-demo-web >-------------------
      [INFO] Building demo 0.0.1-SNAPSHOT [3/3]
      [INFO] --------------------------------[ pom ]---------------------------------
      [INFO]
      [INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ dubbo-demo-web ---
      [INFO] ------------------------------------------------------------------------
      [INFO] Reactor Summary for demo 0.0.1-SNAPSHOT:
      [INFO]
      [INFO] dubbotest-api ...................................... SUCCESS [ 11.742 s]
      [INFO] dubbotest-client ................................... SUCCESS [ 30.942 s]
      [INFO] demo ............................................... SUCCESS [ 0.032 s]
      [INFO] ------------------------------------------------------------------------
      [INFO] BUILD SUCCESS
      [INFO] ------------------------------------------------------------------------
      [INFO] Total time: 44.501 s
      [INFO] Finished at: 2020-09-09T14:56:05+08:00
      ...
      Successfully built 30005d9b0e21
      Successfully tagged harbor.op.com/app/dubbo-demo-web:tomcat_200909_9037
      + docker push harbor.op.com/app/dubbo-demo-web:tomcat_200909_9037
      The push refers to repository [harbor.op.com/app/dubbo-demo-web]
      ...
      [Pipeline] End of Pipeline
      Finished: SUCCESS

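5 Deploy dubbo-demo-web to k8s

Everything here is done in the dashboard. If resources are tight, stop services that are not needed, such as Jenkins.

Prepare the k8s resource manifests

No separate manifests need to be prepared this time.

- dubbo-demo-web (tomcat) needs Apollo configuration; this lab uses the Apollo test environment FAT
- Confirm that the zk used by the test environment is running (on vms11)
- In the test namespace
  - Start: apollo-configservice, apollo-adminservice
  - Start: dubbo-demo-service; stop: dubbo-demo-consumer
- In the infra namespace
  - Start: apollo-portal
  - Log in to http://portal.op.com/ to check the configuration and instances

Apply the resource manifests

In the k8s dashboard, change the image value directly to the image built by Jenkins:

In the test namespace, edit dubbo-demo-consumer and change the image (nothing else needs to change) to harbor.op.com/app/dubbo-demo-web:tomcat_200909_9037, then start it.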

Verify access and check

View the pod startup logs

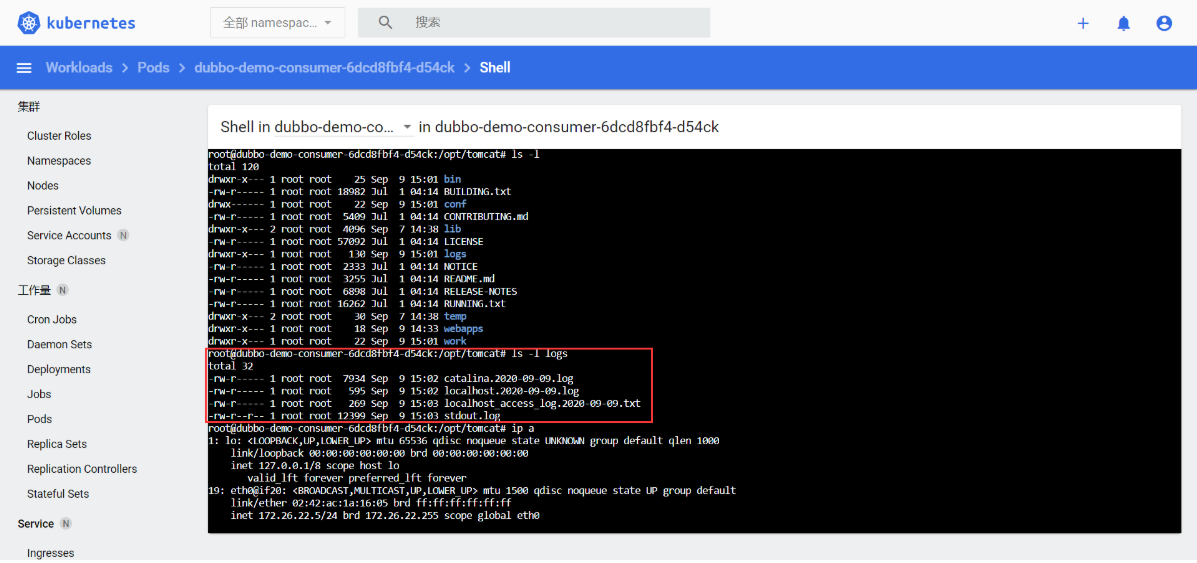

Check that Tomcat is running, on any compute node:

    [root@vms22 ~]# kubectl get pod -n test
    NAME                                   READY   STATUS    RESTARTS   AGE
    apollo-adminservice-7fcb487b58-h67lr   1/1     Running   0          4h40m
    apollo-configservice-8bb4dc678-qjj5m   1/1     Running   0          4h46m
    dubbo-demo-consumer-6dcd8fbf4-d54ck    1/1     Running   0          19m
    dubbo-demo-service-77f7c589fb-7kdbw    1/1     Running   0          4h38m
    [root@vms22 ~]# kubectl exec -ti dubbo-demo-consumer-6dcd8fbf4-d54ck -n test -- bash
    root@dubbo-demo-consumer-6dcd8fbf4-d54ck:/opt/tomcat# ls -lsr logs/
    total 32
    16 -rw-r--r-- 1 root root 12399 Sep 9 15:03 stdout.log
     4 -rw-r----- 1 root root   269 Sep 9 15:03 localhost_access_log.2020-09-09.txt
     4 -rw-r----- 1 root root   595 Sep 9 15:02 localhost.2020-09-09.log
     8 -rw-r----- 1 root root  7934 Sep 9 15:02 catalina.2020-09-09.log
    root@dubbo-demo-consumer-6dcd8fbf4-d54ck:/opt/tomcat# exit

You can also enter the pod from the dashboard to check.

View the Apollo instance list

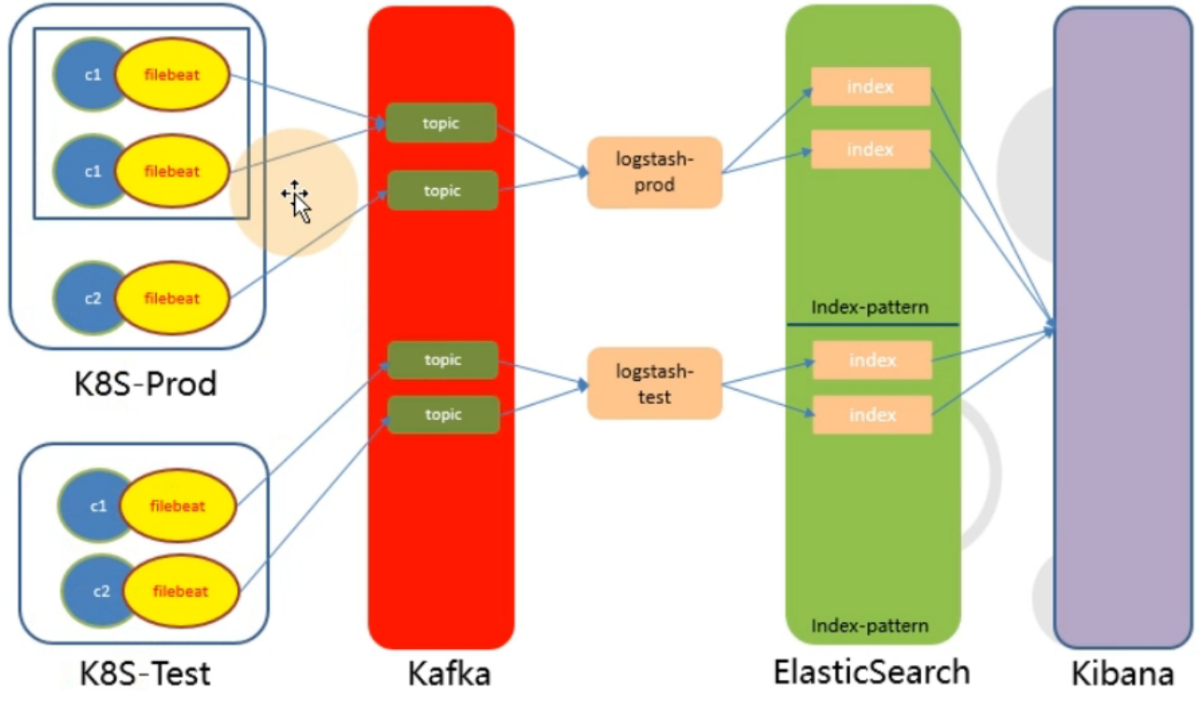

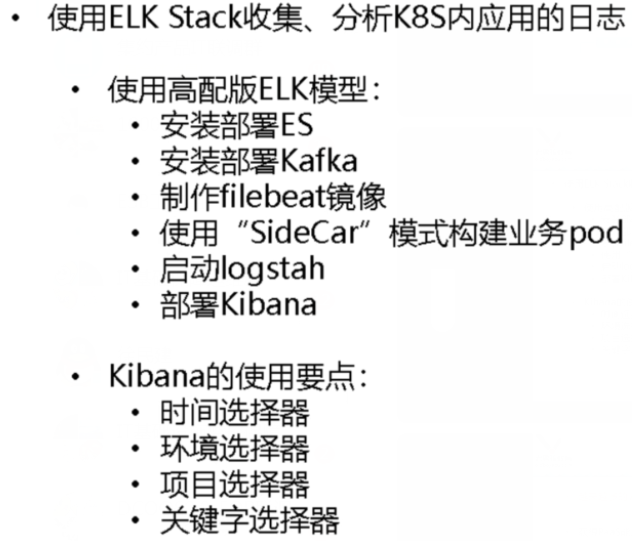

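Collecting application logs inside the Kubernetes cluster with the ELK Stack

Approach to collecting K8s logs

Business applications in a K8s system are highly dynamic: as container orchestration proceeds, business containers are continually created, destroyed, rescheduled, and scaled out or in.

We need a log collection and analysis system with these capabilities:

- Collection: gather log data from many kinds of sources (streaming log collector)

- Transport: deliver log data reliably to a central system (message queue)

- Storage: store logs as structured data (search engine)

- Analysis: support convenient analysis and search, ideally with a GUI (web)

- Alerting: provide error reporting and monitoring (monitoring system)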

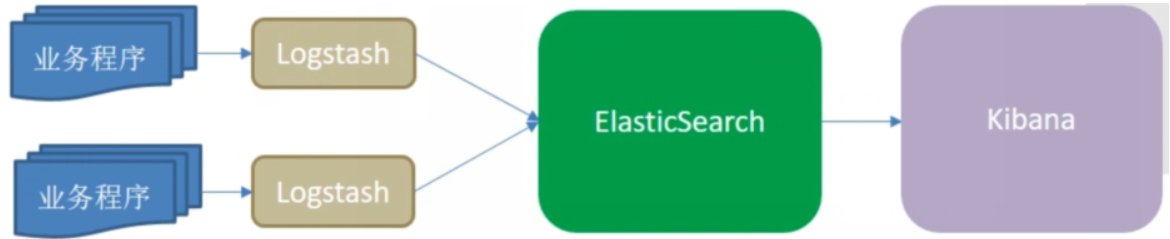

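Drawbacks of the traditional ELK model

This image is for study purposes only.

- Logstash is developed in JRuby and is resource-hungry; deploying it at scale is very costly

- Business applications and Logstash are too loosely coupled, which hinders migrating the applications

- Log collection is coupled too tightly to ES, which makes ES easy to overwhelm and prone to losing data

- In a container cloud environment, the traditional ELK model struggles to do the job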

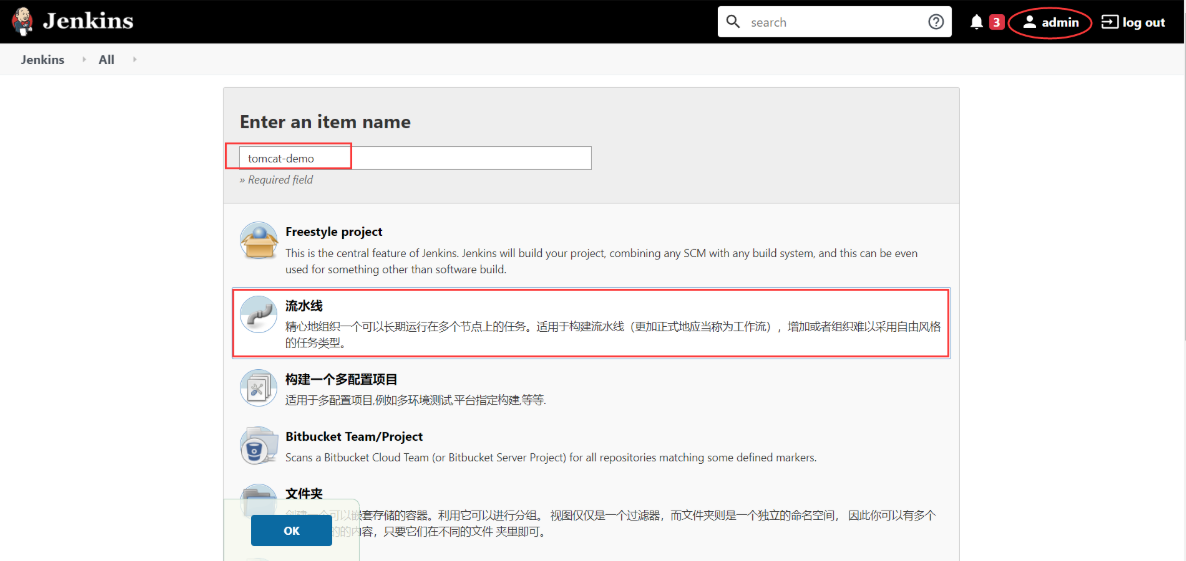

K8s container log collection model

Software environment

| Software | Host | Notes |
|---|---|---|
| JDK8 (1.8.0_261) | vms11, vms12 | Binary install |
| elasticsearch-6.8.12 | vms12 | Binary install |
| kafka_2.12-2.5.1 | vms11 | Binary install |
| zookeeper-3.5.8 | vms11 | Binary install |
| sheepkiller/kafka-manager:latest | k8s (vms21, vms22) | k8s: Deployment |
| filebeat-7.9.1 | k8s (vms21, vms22) | k8s: custom image + Deployment |
| logstash:6.8.12 | vms200 | Docker |
| kibana:6.8.12 | k8s (vms21, vms22) | k8s: Deployment |

Deploy Elasticsearch

Website: https://www.elastic.co/

Official GitHub repository: https://github.com/elastic/elasticsearch

On vms12 (JDK 8):

    [root@vms12 ~]# java -version
    java version "1.8.0_261"
    Java(TM) SE Runtime Environment (build 1.8.0_261-b12)
    Java HotSpot(TM) 64-Bit Server VM (build 25.261-b12, mixed mode)

Install

Choose a version that supports JDK 8.

Download: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.12.tar.gz (versions 7.0 and later use OpenJDK)

    [root@vms12 ~]# cd /opt/src
    [root@vms12 src]# ls -l | grep elasticsearch-6.8.12.tar.gz
    -rw-r--r-- 1 root root 149091869 Sep 19 17:58 elasticsearch-6.8.12.tar.gz
    [root@vms12 src]# tar xf elasticsearch-6.8.12.tar.gz -C /opt
    [root@vms12 src]# ln -s /opt/elasticsearch-6.8.12/ /opt/elasticsearch
    [root@vms12 src]# cd /opt/elasticsearch

Configure

elasticsearch.yml

    [root@vms12 elasticsearch]# mkdir -p /data/elasticsearch/{data,logs}
    [root@vms12 elasticsearch]# vi config/elasticsearch.yml

    cluster.name: es.op.com
    node.name: vms12.cos.com
    path.data: /data/elasticsearch/data
    path.logs: /data/elasticsearch/logs
    bootstrap.memory_lock: true
    network.host: 192.168.26.12
    http.port: 9200

jvm.options

    [root@vms12 elasticsearch]# vi config/jvm.options

Set these for your environment: give -Xms and -Xmx the same value, ideally about half of the machine's memory.

    ...
    -Xms512m
    -Xmx512m
    ...
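As a rough illustration of the "about half of the machine's memory" guideline, the value can be derived from the total memory in kB. This is a sketch only; `half_mem_mb` is a hypothetical helper, and the result is a starting point rather than a tuned setting.

```shell
# Hypothetical helper: given total memory in kB, print half of it in whole MB.
half_mem_mb() {
    echo $(( $1 / 2 / 1024 ))
}

# On Linux the total could be read from /proc/meminfo, for example:
# total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
# echo "-Xms$(half_mem_mb "$total_kb")m"
# echo "-Xmx$(half_mem_mb "$total_kb")m"
```

For a machine with 2 GB of memory (2097152 kB) this yields 1024, i.e. -Xms1024m and -Xmx1024m; the lab above uses a smaller 512m heap.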

Create an unprivileged user

    [root@vms12 elasticsearch]# useradd -s /bin/bash -M es
    [root@vms12 elasticsearch]# chown -R es.es /opt/elasticsearch-6.8.12
    [root@vms12 elasticsearch]# chown -R es.es /data/elasticsearch

File descriptors

    [root@vms12 elasticsearch]# vi /etc/security/limits.d/es.conf

    es hard nofile 65536
    es soft fsize unlimited
    es hard memlock unlimited
    es soft memlock unlimited

Tune kernel parameters

    [root@vms12 elasticsearch]# sysctl -w vm.max_map_count=262144
    vm.max_map_count = 262144

Or, to make the setting persistent:

    [root@vms12 elasticsearch]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf
    [root@vms12 elasticsearch]# sysctl -p
    vm.max_map_count = 262144

Start

    [root@vms12 elasticsearch]# su -c "/opt/elasticsearch/bin/elasticsearch -d" es
    [root@vms12 elasticsearch]# su es -c "ps aux|grep -v grep|grep java|grep elasticsearch"
    es 1401 33.2 50.7 3259508 1018588 ? SLl 19:00 0:49 /usr/java/jdk/bin/java -Xms512m -Xmx512m -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch-8592780966516530277 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/opt/elasticsearch -Des.path.conf=/opt/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -cp /opt/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d
    [root@vms12 elasticsearch]# netstat -luntp|grep 9200
    tcp6 0 0 192.168.26.12:9200 :::* LISTEN 1401/java
    [root@vms12 elasticsearch]# netstat -luntp|grep 1401
    tcp6 0 0 192.168.26.12:9200 :::* LISTEN 1401/java
    tcp6 0 0 192.168.26.12:9300 :::* LISTEN 1401/java

9200 is the ES port and 1401 is the PID of the ES process.

Add the index template for k8s logs:

    [root@vms12 elasticsearch]# curl -H "Content-Type:application/json" -XPUT http://192.168.26.12:9200/_template/k8s -d '{
      "template" : "k8s*",
      "index_patterns": ["k8s*"],
      "settings": {
        "number_of_shards": 5,
        "number_of_replicas": 0
      }
    }'

Response: {"acknowledged":true}

number_of_replicas: production would use 3 replicas; this ES is a single node, so replicas cannot be allocated and the value is set to 0.
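Since the replica count differs between this single-node lab and production, it can help to generate the template body from parameters instead of editing JSON by hand. The sketch below assumes the same `index_patterns` and settings as the command above; `template_body` is a hypothetical helper, and it leaves out the legacy `template` key.

```shell
# Hypothetical helper: print an index-template body.
# Arguments: index pattern, number of shards, number of replicas.
template_body() {
    printf '{"index_patterns": ["%s"], "settings": {"number_of_shards": %d, "number_of_replicas": %d}}\n' "$1" "$2" "$3"
}

# Single-node lab (no replicas), using the ES address from this section:
# curl -H "Content-Type:application/json" -XPUT http://192.168.26.12:9200/_template/k8s \
#      -d "$(template_body 'k8s*' 5 0)"
```

The template only affects indices created after it is stored, so it should be in place before the first k8s* index is written.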

Deploy Kafka

Website: http://kafka.apache.org

GitHub repository: https://github.com/apache/kafka

Download: https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.5.1/kafka_2.12-2.5.1.tgz

When choosing a Kafka version, consider the JDK version and the versions that kafka-manager supports.

On vms11:

Install

    [root@vms11 ~]# cd /opt/src/
    [root@vms11 src]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.5.1/kafka_2.12-2.5.1.tgz
    --2020-09-19 20:19:52-- https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.5.1/kafka_2.12-2.5.1.tgz
    Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.8.193, 2402:f000:1:408:8100::1
    Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.8.193|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 61709988 (59M) [application/octet-stream]
    Saving to: ‘kafka_2.12-2.5.1.tgz’
    kafka_2.12-2.5.1.tgz 100%[======================================================================>] 58.85M 1.07MB/s in 30s
    2020-09-19 20:20:25 (1.97 MB/s) - ‘kafka_2.12-2.5.1.tgz’ saved [61709988/61709988]
    [root@vms11 src]# ls -l kafka*
    -rw-r--r-- 1 root root 61709988 Aug 11 06:18 kafka_2.12-2.5.1.tgz

    [root@vms11 src]# tar xf kafka_2.12-2.5.1.tgz -C /opt
    [root@vms11 src]# ln -s /opt/kafka_2.12-2.5.1/ /opt/kafka

Configure

    [root@vms11 src]# vi /opt/kafka/config/server.properties

    log.dirs=/data/kafka/logs
    # Address of the zookeeper cluster to connect to; here, the local zk.
    zookeeper.connect=localhost:2181
    # Force a flush to disk after 10000 messages, or after 1000 ms
    log.flush.interval.messages=10000
    log.flush.interval.ms=1000
    # Add the following two settings
    delete.topic.enable=true
    host.name=vms11.cos.com

    [root@vms11 src]# mkdir -p /data/kafka/logs
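Before starting the broker, the effective values can be read back from the file to confirm the edits. The following is a minimal sketch; `get_prop` is a hypothetical helper that prints the last uncommented `key=value` entry for a key, on the assumption that a later duplicate overrides an earlier one.

```shell
# Hypothetical helper: print the value of a key from a .properties file.
# Arguments: key, file. Comment lines never match because their first
# '='-separated field is not exactly the key.
get_prop() {
    awk -F= -v k="$1" '
        $1 == k { sub(/^[^=]*=/, ""); v = $0 }
        END     { if (v != "") print v }
    ' "$2"
}

# Usage with the file edited above:
# get_prop log.dirs /opt/kafka/config/server.properties
# get_prop zookeeper.connect /opt/kafka/config/server.properties
```

Reading the file back this way catches typos in key names, since a misspelled key simply prints nothing.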

Start

    [root@vms11 src]# cd /opt/kafka

    [root@vms11 kafka]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties
    [root@vms11 kafka]# ps aux |grep kafka | grep -v grep
    root 8753 91.0 12.1 3749936 243548 pts/0 Sl 08:45 0:20 /usr/java/jdk/bin/java -Xmx1G -Xms1G -server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -XX:MaxInlineLevel=15 -Djava.awt.headless=true -Xloggc:/opt/kafka/bin/../logs/kafkaServer-gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dkafka.logs.dir=/opt/kafka/bin/../logs -Dlog4j.configuration=file:/opt/kafka/bin/../config/log4j.properties -cp /usr/java/jdk/lib:/usr/java/jdk/lib/tools.jar:/opt/kafka/bin/../libs/activation-1.1.1.jar:...:/opt/kafka/bin/../libs/zstd-jni-1.4.4-7.jar kafka.Kafka /opt/kafka/config/server.properties
    [root@vms11 kafka]# netstat -luntp|grep 9092
    tcp6 0 0 192.168.26.11:9092 :::* LISTEN 8753/java
    [root@vms11 kafka]# netstat -luntp|grep 8753 #PID
    tcp6 0 0 192.168.26.11:9092 :::* LISTEN 8753/java
    tcp6 0 0 :::40565 :::* LISTEN 8753/java

9092 is the Kafka port and 8753 is the PID of the Kafka process.

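Deploy kafka-manager

kafka-manager is a tool for managing Kafka clusters through a web UI. It is optional.

Official GitHub repository: https://github.com/yahoo/kafka-manager

Source download: https://github.com/yahoo/kafka-manager/archive/2.0.0.2.tar.gz

On the ops host vms200:

Method 1: build the Docker image yourself

Step 1. Prepare the Dockerfile: /data/dockerfile/kafka-manager/Dockerfile

    FROM hseeberger/scala-sbt
    ENV ZK_HOSTS=192.168.26.11:2181 \
        KM_VERSION=2.0.0.2
    RUN mkdir -p /tmp && \
        cd /tmp && \
        wget https://github.com/yahoo/kafka-manager/archive/${KM_VERSION}.tar.gz && \
        tar xf ${KM_VERSION}.tar.gz && \
        cd /tmp/kafka-manager-${KM_VERSION} && \
        sbt clean dist && \
        unzip -d / ./target/universal/kafka-manager-${KM_VERSION}.zip && \
        rm -fr /tmp/${KM_VERSION} /tmp/kafka-manager-${KM_VERSION}
    WORKDIR /kafka-manager-${KM_VERSION}
    EXPOSE 9000
    ENTRYPOINT ["./bin/kafka-manager","-Dconfig.file=conf/application.conf"]

Step 2. Build the Docker image in /data/dockerfile/kafka-manager

    [root@vms200 kafka-manager]# docker build . -t harbor.op.com/infra/kafka-manager:v2.0.0.2

(This takes a long time.)

There are several issues here:

- kafka-manager has been renamed CMAK, so the archive name and the directory name inside it have changed.

- The sbt build downloads many dependencies. The downloads are very slow on this network, and without a VPN the build is likely to fail.

- No VPN was available here, so the build failed. Because of the first point, this Dockerfile will most likely need changes as well.

- In production, be sure to build your own image!

- The build is extremely slow and likely to fail, so this lab uses Method 2 and downloads a prebuilt image.

- The prebuilt image hard-codes the zk address; remember to pass in an environment variable to override it.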

Method 2: download a prebuilt Docker image

Search https://www.docker.com/products/docker-hub for: kafka-manager

Image tags: https://hub.docker.com/r/sheepkiller/kafka-manager/tags

    [root@vms200 ~]# docker pull sheepkiller/kafka-manager:stable
    stable: Pulling from sheepkiller/kafka-manager
    ...
    Digest: sha256:77c652e080c5c4d1d37a6cd6a0838194cf6d5af9fa83660ef208280dea163b35
    Status: Downloaded newer image for sheepkiller/kafka-manager:stable
    docker.io/sheepkiller/kafka-manager:stable
    [root@vms200 ~]# docker pull sheepkiller/kafka-manager:latest
    latest: Pulling from sheepkiller/kafka-manager
    ...
    Digest: sha256:615f3b99d38aba2d5fdb3fb750a5990ba9260c8fb3fd29c7e776e8c150518b78
    Status: Downloaded newer image for sheepkiller/kafka-manager:latest
    docker.io/sheepkiller/kafka-manager:latest
    [root@vms200 ~]# docker tag sheepkiller/kafka-manager:latest harbor.op.com/infra/kafka-manager:latest
    [root@vms200 ~]# docker tag sheepkiller/kafka-manager:stable harbor.op.com/infra/kafka-manager:stable
    [root@vms200 ~]# docker push harbor.op.com/infra/kafka-manager:latest
    The push refers to repository [harbor.op.com/infra/kafka-manager]
    ...
    latest: digest: sha256:615f3b99d38aba2d5fdb3fb750a5990ba9260c8fb3fd29c7e776e8c150518b78 size: 1160
    [root@vms200 ~]# docker push harbor.op.com/infra/kafka-manager:stable
    The push refers to repository [harbor.op.com/infra/kafka-manager]
    ...
    stable: digest: sha256:df04c4f47039de97d5c4fd38064aa91153b2a65f69296faae6e474756af834eb size: 3056
    [root@vms200 ~]# docker pull zenko/kafka-manager:latest
    [root@vms200 ~]# docker tag zenko/kafka-manager:latest harbor.op.com/infra/kafka-manager:200920
    [root@vms200 ~]# docker push harbor.op.com/infra/kafka-manager:200920

Prepare the resource manifests

    [root@vms200 ~]# mkdir /data/k8s-yaml/kafka-manager && cd /data/k8s-yaml/kafka-manager

- Deployment

      [root@vms200 kafka-manager]# vi /data/k8s-yaml/kafka-manager/deployment.yaml

      kind: Deployment
      apiVersion: apps/v1
      metadata:
        name: kafka-manager
        namespace: infra
        labels:
          name: kafka-manager
      spec:
        replicas: 1
        selector:
          matchLabels:
            name: kafka-manager
        template:
          metadata:
            labels:
              app: kafka-manager
              name: kafka-manager
          spec:
            containers:
            - name: kafka-manager
              image: harbor.op.com/infra/kafka-manager:200920
              ports:
              - containerPort: 9000
                protocol: TCP
              env:
              - name: ZK_HOSTS
                value: zk1.op.com:2181
              - name: APPLICATION_SECRET
                value: letmein
              imagePullPolicy: IfNotPresent
            imagePullSecrets:
            - name: harbor
            restartPolicy: Always
            terminationGracePeriodSeconds: 30
            securityContext:
              runAsUser: 0
            schedulerName: default-scheduler
        strategy:
          type: RollingUpdate
          rollingUpdate:
            maxUnavailable: 1
            maxSurge: 1
        revisionHistoryLimit: 7
        progressDeadlineSeconds: 600

- Service

      [root@vms200 kafka-manager]# vi /data/k8s-yaml/kafka-manager/svc.yaml

      kind: Service
      apiVersion: v1
      metadata:
        name: kafka-manager
        namespace: infra
      spec:
        ports:
        - protocol: TCP
          port: 9000
          targetPort: 9000
        selector:
          app: kafka-manager

- Ingress

      [root@vms200 kafka-manager]# vi /data/k8s-yaml/kafka-manager/ingress.yaml

      kind: Ingress
      apiVersion: extensions/v1beta1
      metadata:
        name: kafka-manager
        namespace: infra
      spec:
        rules:
        - host: kafka-manager.op.com
          http:
            paths:
            - path: /
              backend:
                serviceName: kafka-manager
                servicePort: 9000

应用资源配置清单

任意一台运算节点上:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kafka-manager/deployment.yaml

deployment.apps/kafka-manager created

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kafka-manager/svc.yaml

service/kafka-manager created

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kafka-manager/ingress.yaml

ingress.extensions/kafka-manager created

Resolve the domain name

On vms11:

[root@vms11 ~]# vi /var/named/op.com.zone

...

kafka-manager A 192.168.26.10

Note: increment the zone serial by one.

[root@vms11 ~]# systemctl restart named

[root@vms11 ~]# host kafka-manager.op.com

kafka-manager.op.com has address 192.168.26.10

[root@vms11 ~]# dig -t A kafka-manager.op.com @192.168.26.11 +short

192.168.26.10
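The serial has to increase on every zone edit because secondaries that transfer the zone only notice a change when the serial goes up. A minimal sketch of the bump, assuming the common YYYYMMDDNN serial convention; the actual serial in op.com.zone is not shown in this walkthrough, and `next_serial` is a hypothetical helper name:

```shell
# next_serial CURRENT TODAY
# CURRENT: existing serial, assumed to follow the YYYYMMDDNN convention.
# TODAY:   today's date as YYYYMMDD (for example from `date +%Y%m%d`).
next_serial() (
  current="$1"
  today="$2"
  prefix="${current%??}"           # strip the two-digit counter
  if [ "$prefix" -lt "$today" ]; then
    echo "${today}01"              # first change on a new day
  else
    echo $(($current + 1))         # another change on the same day
  fi
)

next_serial 2020092001 20200920
```

If the zone uses a plain counter instead of a date-based serial, adding 1 by hand is all that is needed.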

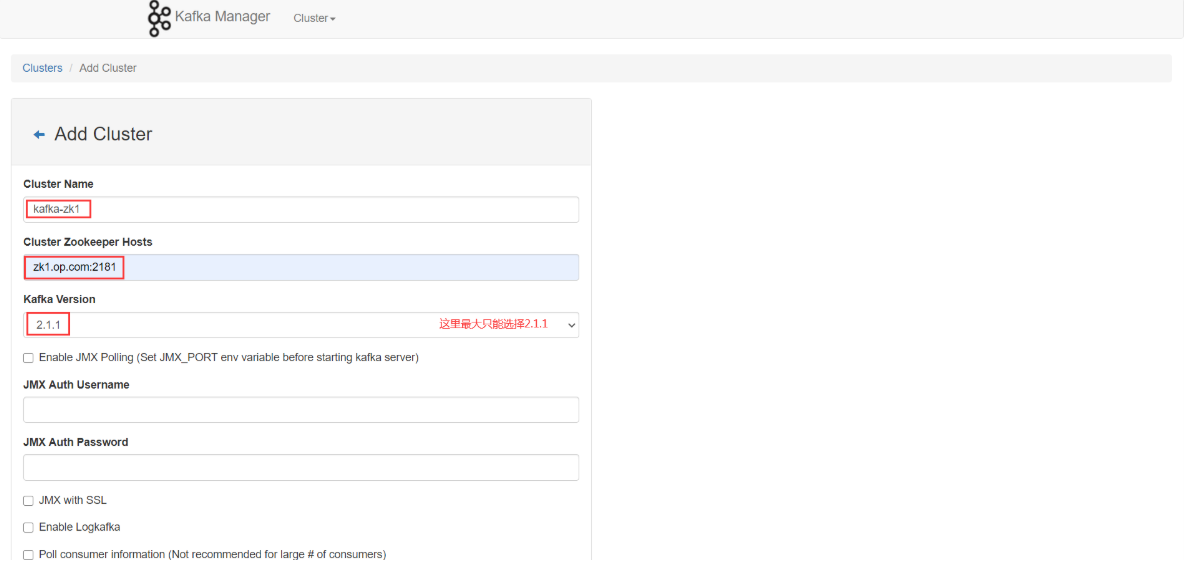

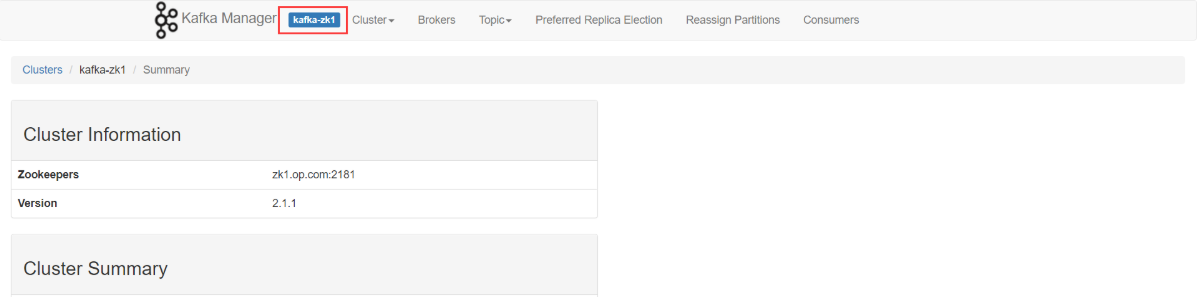

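Access in a browser

Cluster > Add Cluster

The highest Kafka Version offered is 2.1.1, while this lab runs 2.5.1.

Fill in the three fields shown in the screenshots, click Save, then click Go to cluster view.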

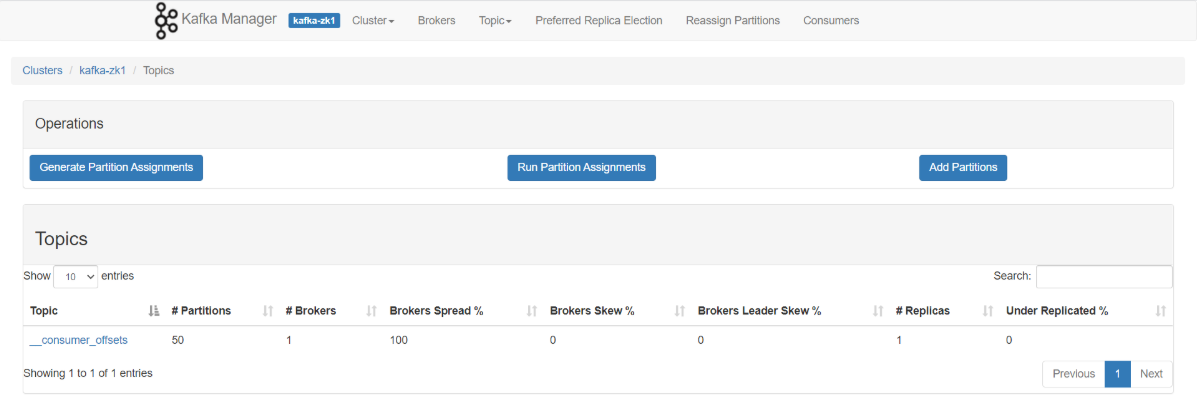

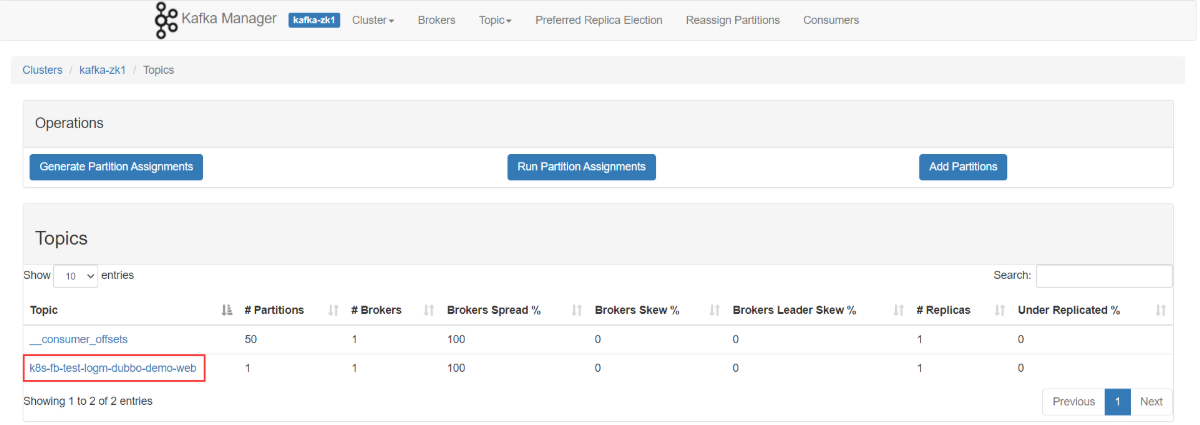

Topic > List

Deploy filebeat

On the ops host vms200:

Build the Docker image

Prepare the Dockerfile

[root@vms200 ~]# mkdir /data/dockerfile/filebeat && cd /data/dockerfile/filebeat

[root@vms200 filebeat]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.9.1-linux-x86_64.tar.gz

[root@vms200 filebeat]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.9.1-linux-x86_64.tar.gz.sha512

[root@vms200 filebeat]# ls -l fileb*

-rw-r--r-- 1 root root 31152914 Sep 20 15:22 filebeat-7.9.1-linux-x86_64.tar.gz

-rw-r--r-- 1 root root 164 Sep 20 15:22 filebeat-7.9.1-linux-x86_64.tar.gz.sha512

[root@vms200 filebeat]# cat filebeat-7.9.1-linux-x86_64.tar.gz.sha512

4ed8d0e43b0f7bed648ad902ae693cf024be7f2038bbb1a1c2af8c7000de4f188c490b11c0f65eafa228a2fe7052fd8e86a2c3783c1fc09ebc0c2c278fefce73 filebeat-7.9.1-linux-x86_64.tar.gz
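The `.sha512` file is in the format that `sha512sum -c` reads, so the tarball can be checked before it goes into an image. A small sketch; `verify_sha512` is a hypothetical helper name, not part of the original steps:

```shell
# verify_sha512 SUMFILE
# Runs in the directory of SUMFILE so that the relative file name
# recorded inside it resolves. Succeeds only if the checksum matches.
verify_sha512() (
  cd "$(dirname "$1")" || exit 1
  sha512sum -c "$(basename "$1")" >/dev/null 2>&1
)

# Example, run in /data/dockerfile/filebeat:
# verify_sha512 filebeat-7.9.1-linux-x86_64.tar.gz.sha512 && echo "checksum OK"
```

The function body runs in a subshell, so the `cd` does not change the caller's working directory.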

- Dockerfile

[root@vms200 filebeat]# vi /data/dockerfile/filebeat/Dockerfile

When changing versions, download the checksum of the matching Linux 64-bit package from the official site and replace FILEBEAT_SHA1 with it. Despite its name, the variable holds a SHA-512 value, which is what `sha512sum -c` checks.

FROM debian:jessie

ENV FILEBEAT_VERSION=7.9.1 \
    FILEBEAT_SHA1=4ed8d0e43b0f7bed648ad902ae693cf024be7f2038bbb1a1c2af8c7000de4f188c490b11c0f65eafa228a2fe7052fd8e86a2c3783c1fc09ebc0c2c278fefce73

RUN set -x && \
    apt-get update && \
    apt-get install -y wget && \
    wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-${FILEBEAT_VERSION}-linux-x86_64.tar.gz -O /opt/filebeat.tar.gz && \
    cd /opt && \
    echo "${FILEBEAT_SHA1} filebeat.tar.gz" | sha512sum -c - && \
    tar xzvf filebeat.tar.gz && \
    cd filebeat-* && \
    cp filebeat /bin && \
    cd /opt && \
    rm -rf filebeat* && \
    apt-get purge -y wget && \
    apt-get autoremove -y && \
    apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

COPY docker-entrypoint.sh /

ENTRYPOINT ["/docker-entrypoint.sh"]

If downloading filebeat with wget is too slow, ADD the already downloaded tarball into the image instead:

FROM debian:jessie

ADD filebeat-7.9.1-linux-x86_64.tar.gz /opt/

RUN set -x && cp /opt/filebeat-*/filebeat /bin && rm -fr /opt/filebeat*

COPY docker-entrypoint.sh /

ENTRYPOINT ["/docker-entrypoint.sh"]

- Entrypoint

[root@vms200 filebeat]# vi /data/dockerfile/filebeat/docker-entrypoint.sh

#!/bin/bash

ENV=${ENV:-"test"}
PROJ_NAME=${PROJ_NAME:-"no-define"}
MULTILINE=${MULTILINE:-"^\d{2}"}
KAFKA_ADDR=${KAFKA_ADDR:-'"192.168.26.11:9092"'}

cat > /etc/filebeat.yaml << EOF
filebeat.inputs:
- type: log
  fields_under_root: true
  fields:
    topic: logm-${PROJ_NAME}
  paths:
    - /logm/*.log
    - /logm/*/*.log
    - /logm/*/*/*.log
    - /logm/*/*/*/*.log
    - /logm/*/*/*/*/*.log
  scan_frequency: 120s
  max_bytes: 10485760
  multiline.pattern: '$MULTILINE'
  multiline.negate: true
  multiline.match: after
  multiline.max_lines: 100
- type: log
  fields_under_root: true
  fields:
    topic: logu-${PROJ_NAME}
  paths:
    - /logu/*.log
    - /logu/*/*.log
    - /logu/*/*/*.log
    - /logu/*/*/*/*.log
    - /logu/*/*/*/*/*.log
    - /logu/*/*/*/*/*/*.log
output.kafka:
  hosts: [${KAFKA_ADDR}]
  topic: k8s-fb-$ENV-%{[topic]}
  version: 2.0.0
  required_acks: 0
  max_message_bytes: 10485760
EOF

set -xe

# If the user doesn't provide any command,
# run filebeat
if [[ "$1" == "" ]]; then
    exec filebeat -c /etc/filebeat.yaml
else
    # Otherwise allow the user to run arbitrary commands like bash
    exec "$@"
fi

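PROJ_NAME=${PROJ_NAME:-"no-define"} # project name; it determines the topic

MULTILINE=${MULTILINE:-"^\d{2}"} # multiline pattern, chosen to fit the log format; here a line starting with two digits begins a new entry

version: 2.0.0 # for Kafka versions above 2.0, write 2.0.0 by default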
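With `multiline.negate: true` and `multiline.match: after`, every line that does not match the pattern is appended to the preceding line that does. The effect can be imitated with awk; this is only an illustration of the grouping rule, not how Filebeat is implemented, and it ignores `multiline.max_lines` and timeouts. `join_multiline` is a hypothetical name, and `[0-9][0-9]` stands in for `\d{2}`:

```shell
# join_multiline: reads log lines on stdin and prints one event per line.
# Continuation lines are joined to their event with a literal "\n" marker.
join_multiline() {
  awk '
    /^[0-9][0-9]/ { if (have) print event; event = $0; have = 1; next }
    { if (have) event = event "\\n" $0; else { event = $0; have = 1 } }
    END { if (have) print event }
  '
}

# A log line followed by a two-line stack trace, then another log line.
printf '%s\n' \
  '26-Sep-2020 20:15:07 SEVERE something failed' \
  'java.lang.IllegalStateException: boom' \
  '    at com.example.Foo.bar(Foo.java:1)' \
  '26-Sep-2020 20:15:08 INFO next entry' | join_multiline
```

The four input lines come out as two events, so a stack trace stays attached to the log line that produced it.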

[root@vms200 filebeat]# chmod +x docker-entrypoint.sh

Build the image

On vms200, in the /data/dockerfile/filebeat directory:

[root@vms200 filebeat]# docker build . -t harbor.op.com/infra/filebeat:v7.9.1

Sending build context to Docker daemon 31.16MB

Step 1/5 : FROM debian:jessie

jessie: Pulling from library/debian

290431e50016: Pull complete

Digest: sha256:e180975d5c1012518e711c92ab26a4ff98218f439a97d9adbcd503b0d3ad1c8a

Status: Downloaded newer image for debian:jessie

---> 8c9d595d91c1

Step 2/5 : ENV FILEBEAT_VERSION=7.9.1 FILEBEAT_SHA1=4ed8d0e43b0f7bed648ad902ae693cf024be7f2038bbb1a1c2af8c7000de4f188c490b11c0f65eafa228a2fe7052fd8e86a2c3783c1fc09ebc0c2c278fefce73

---> Running in a16448d98e29

Removing intermediate container a16448d98e29

---> 42d78322e2f6

Step 3/5 : RUN set -x && apt-get update && apt-get install -y wget && wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-${FILEBEAT_VERSION}-linux-x86_64.tar.gz -O /opt/filebeat.tar.gz && cd /opt && echo "${FILEBEAT_SHA1} filebeat.tar.gz" | sha512sum -c - && tar xzvf filebeat.tar.gz && cd filebeat-* && cp filebeat /bin && cd /opt && rm -rf filebeat* && apt-get purge -y wget && apt-get autoremove -y && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

---> Running in 47e9bb4a8b77

...

---> 42875bf545eb

Step 4/5 : COPY docker-entrypoint.sh /

---> 38fe7ca3711a

Step 5/5 : ENTRYPOINT ["/docker-entrypoint.sh"]

---> Running in a6a200b5de99

Removing intermediate container a6a200b5de99

---> 23268695e23b

Successfully built 23268695e23b

Successfully tagged harbor.op.com/infra/filebeat:v7.9.1

[root@vms200 filebeat]# docker images | grep filebeat

harbor.op.com/infra/filebeat v7.9.1 23268695e23b 6 minutes ago 226MB

[root@vms200 filebeat]# docker push harbor.op.com/infra/filebeat:v7.9.1

The push refers to repository [harbor.op.com/infra/filebeat]

5d66a6b47881: Pushed

9a00a13c1bd2: Pushed

1fdaddb01136: Pushed

v7.9.1: digest: sha256:d10bd5ccabec59ff52bb3730c78cf15a97a03bfd1a63cad325f362223e861d20 size: 948

Modify the resource manifests

- Use the Tomcat image of dubbo-demo-consumer and run filebeat as a sidecar

- On vms200: use the manifest files of the test environment

[root@vms200 ~]# cd /data/k8s-yaml/test/dubbo-demo-consumer

[root@vms200 dubbo-demo-consumer]# cp deployment-apollo.yaml deployment-apollo-fb.yaml

[root@vms200 dubbo-demo-consumer]# vi deployment-apollo-fb.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: dubbo-demo-consumer
  namespace: test
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.op.com/app/dubbo-demo-web:tomcat_200909_9037
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.op.com
        imagePullPolicy: IfNotPresent
        #--------added content--------
        volumeMounts:
        - mountPath: /opt/tomcat/logs
          name: logm
      - name: filebeat
        image: harbor.op.com/infra/filebeat:v7.9.1
        env:
        - name: ENV
          value: test
        - name: PROJ_NAME
          value: dubbo-demo-web
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /logm
          name: logm
      volumes:
      - emptyDir: {}
        name: logm
      #--------end of added content--------
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-consumer/deployment-apollo-fb.yaml

deployment.apps/dubbo-demo-consumer configured

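Using the test environment:

- Confirm that zk-test on vms11 is running, and that ES and kafka are running;

- Start apollo-configservice and apollo-adminservice in the test namespace;

- Start apollo-portal in the infra namespace;

- Start dubbo-demo-service and dubbo-demo-consumer in the test namespace.

Open http://kafka-manager.op.com/ in a browser.

Once the topic shows up in kafka-manager, the pipeline is working.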
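The topic name to look for follows from the template in docker-entrypoint.sh, `k8s-fb-$ENV-%{[topic]}`, where the `topic` field is `logm-${PROJ_NAME}` or `logu-${PROJ_NAME}`. A small sketch that spells this out; `fb_topic` is a hypothetical helper used only for illustration:

```shell
# fb_topic ENV KIND PROJ_NAME
# KIND is "logm" (multiline input) or "logu" (single-line input), matching
# the two inputs defined in docker-entrypoint.sh.
fb_topic() {
  echo "k8s-fb-$1-$2-$3"
}

# Values taken from the sidecar's env section (ENV=test, PROJ_NAME=dubbo-demo-web).
fb_topic test logm dubbo-demo-web
```

For the manifest above this yields k8s-fb-test-logm-dubbo-demo-web, since the Tomcat logs are mounted at /logm.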

Verify the data

Enter the filebeat container of dubbo-demo-consumer and check whether the logm directory contains logs:

[root@vms21 ~]# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

apollo-adminservice-7fcb487b58-4n9gb 1/1 Running 0 40m

apollo-configservice-8bb4dc678-bw9br 1/1 Running 0 42m

dubbo-demo-consumer-5cb47cc466-qlr7f 2/2 Running 0 26m

dubbo-demo-service-7b44d55879-7m8qx 1/1 Running 0 27m

[root@vms21 ~]# kubectl -n test exec -it dubbo-demo-consumer-5cb47cc466-qlr7f -c filebeat -- /bin/bash

root@dubbo-demo-consumer-5cb47cc466-qlr7f:/# ls -l /logm

total 36

-rw-r----- 1 root root 7934 Sep 26 12:32 catalina.2020-09-26.log

-rw-r----- 1 root root 595 Sep 26 12:32 localhost.2020-09-26.log

-rw-r----- 1 root root 2011 Sep 26 12:35 localhost_access_log.2020-09-26.txt

-rw-r--r-- 1 root root 17199 Sep 26 12:35 stdout.log

Check the kafka topic on vms11:

[root@vms11 ~]# cd /opt/kafka/bin/

[root@vms11 bin]# ./kafka-console-consumer.sh --bootstrap-server 192.168.26.11:9092 --topic k8s-fb-test-logm-dubbo-demo-web --from-beginning

Output:

{"@timestamp":"2020-09-26T12:17:35.096Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"log":{"offset":0,"file":{"path":"/logm/stdout.log"},"flags":["multiline"]},"message":" . ____ _ __ _ _\n /\\\\ / ___'_ __ _ _(_)_ __ __ _ \\ \\ \\ \\\n( ( )\\___ | '_ | '_| | '_ \\/ _` | \\ \\ \\ \\\n \\\\/ ___)| |_)| | | | | || (_| | ) ) ) )\n ' |____| .__|_| |_|_| |_\\__, | / / / /\n =========|_|==============|___/=/_/_/_/\n :: Spring Boot :: (v1.3.1.RELEASE)\n","input":{"type":"log"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"log":{"offset":0,"file":{"path":"/logm/catalina.2020-09-26.log"}},"message":"26-Sep-2020 20:15:07.609 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version name: Apache Tomcat/9.0.37","input":{"type":"log"},"topic":"logm-dubbo-demo-web","agent":{"id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat","version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f"},"ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"input":{"type":"log"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat","version":"7.9.1"},"log":{"offset":294,"file":{"path":"/logm/stdout.log"}},"message":"2020-09-26 20:16:41.640 INFO 8 --- [ main] com.od.dubbotest.ServletInitializer : Starting ServletInitializer v0.0.1-SNAPSHOT on dubbo-demo-consumer-5cb47cc466-ppgl7 with PID 8 (/opt/tomcat/webapps/ROOT/WEB-INF/classes started by root in /opt/tomcat)"}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"log":{"offset":560,"file":{"path":"/logm/stdout.log"}},"message":"2020-09-26 20:16:41.660 INFO 8 --- [ main] com.od.dubbotest.ServletInitializer : No active profile set, falling back to default profiles: default","input":{"type":"log"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat","version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"log":{"file":{"path":"/logm/catalina.2020-09-26.log"},"offset":135},"message":"26-Sep-2020 20:15:08.242 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server built: Jun 30 2020 20:09:49 UTC","topic":"logm-dubbo-demo-web","input":{"type":"log"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"log":{"offset":274,"file":{"path":"/logm/catalina.2020-09-26.log"}},"message":"26-Sep-2020 20:15:08.243 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version number: 9.0.37.0","input":{"type":"log"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"type":"filebeat","version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"log":{"file":{"path":"/logm/stdout.log"},"offset":722},"message":"2020-09-26 20:16:41.962 INFO 8 --- [ main] ationConfigEmbeddedWebApplicationContext : Refreshing org.springframework.boot.context.embedded.AnnotationConfigEmbeddedWebApplicationContext@32a541a8: startup date [Sat Sep 26 20:16:41 GMT+08:00 2020]; root of context hierarchy","input":{"type":"log"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"input":{"type":"log"},"topic":"logm-dubbo-demo-web","agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"log":{"offset":1005,"file":{"path":"/logm/stdout.log"}},"message":"2020-09-26 20:16:47.744 INFO 8 --- [ main] o.s.b.f.xml.XmlBeanDefinitionReader : Loading XML bean definitions from URL [file:/opt/tomcat/webapps/ROOT/WEB-INF/classes/spring-config.xml]"}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"log":{"offset":1206,"file":{"path":"/logm/stdout.log"}},"message":"2020-09-26 20:16:52.273 INFO 8 --- [ main] o.s.b.f.s.DefaultListableBeanFactory : Overriding bean definition for bean 'beanNameViewResolver' with a different definition: replacing [Root bean: class [null]; scope=; abstract=false; lazyInit=false; autowireMode=3; dependencyCheck=0; autowireCandidate=true; primary=false; factoryBeanName=org.springframework.boot.autoconfigure.web.ErrorMvcAutoConfiguration$WhitelabelErrorViewConfiguration; factoryMethodName=beanNameViewResolver; initMethodName=null; destroyMethodName=(inferred); defined in class path resource [org/springframework/boot/autoconfigure/web/ErrorMvcAutoConfiguration$WhitelabelErrorViewConfiguration.class]] with [Root bean: class [null]; scope=; abstract=false; lazyInit=false; autowireMode=3; dependencyCheck=0; autowireCandidate=true; primary=false; factoryBeanName=org.springframework.boot.autoconfigure.web.WebMvcAutoConfiguration$WebMvcAutoConfigurationAdapter; factoryMethodName=beanNameViewResolver; initMethodName=null; destroyMethodName=(inferred); defined in class path resource [org/springframework/boot/autoconfigure/web/WebMvcAutoConfiguration$WebMvcAutoConfigurationAdapter.class]]","input":{"type":"log"},"topic":"logm-dubbo-demo-web","host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"ecs":{"version":"1.5.0"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"log":{"offset":397,"file":{"path":"/logm/catalina.2020-09-26.log"}},"message":"26-Sep-2020 20:15:08.243 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Name: Linux","input":{"type":"log"},"topic":"logm-dubbo-demo-web","ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"log":{"offset":517,"file":{"path":"/logm/catalina.2020-09-26.log"}},"message":"26-Sep-2020 20:15:08.243 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Version: 4.18.0-193.el8.x86_64","topic":"logm-dubbo-demo-web","input":{"type":"log"},"ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"}}

{"@timestamp":"2020-09-26T12:17:35.097Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.9.1"},"ecs":{"version":"1.5.0"},"host":{"name":"dubbo-demo-consumer-5cb47cc466-ppgl7"},"agent":{"version":"7.9.1","hostname":"dubbo-demo-consumer-5cb47cc466-ppgl7","ephemeral_id":"40088248-f47c-4397-abac-34cc37b7ce2f","id":"7eda0e69-048a-441b-98e6-2c832c2a1235","name":"dubbo-demo-consumer-5cb47cc466-ppgl7","type":"filebeat"},"log":{"offset":653,"file":{"path":"/logm/catalina.2020-09-26.log"}},"message":"26-Sep-2020 20:15:08.244 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Architecture: amd64","input":{"type":"log"},"topic":"logm-dubbo-demo-web"}

...
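The raw JSON is hard to scan by eye. A quick way to confirm that every log file is being shipped is to count events per `log.file.path`. The sketch below relies only on grep, sed, sort and uniq; `count_by_path` is a hypothetical helper name:

```shell
# count_by_path: reads Filebeat JSON events on stdin (one per line) and
# prints how many events came from each log.file.path.
count_by_path() {
  grep -o '"file":{"path":"[^"]*"' | sed 's/.*"path":"//; s/"$//' | sort | uniq -c
}

# Possible usage on vms11 (in /opt/kafka/bin), stopping once the topic is idle for 10s:
# ./kafka-console-consumer.sh --bootstrap-server 192.168.26.11:9092 \
#   --topic k8s-fb-test-logm-dubbo-demo-web --from-beginning --timeout-ms 10000 | count_by_path
```

Quotes inside the `message` field are escaped in the JSON, so log text that happens to mention a path is not counted.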

Deploy Logstash

On the ops host vms200:

Choose a version

Logstash/Kibana version selection: https://www.elastic.co/support/matrix, under Product Compatibility. (The table below is a filtered excerpt.)

| Elasticsearch | Kibana | Logstash |

|---|---|---|

| 6.5.x | 6.5.x | 5.6.x-6.8.x |

| 6.6.x | 6.6.x | 5.6.x-6.8.x |

| 6.7.x | 6.7.x | 5.6.x-6.8.x |

| 6.8.x | 6.8.x | 5.6.x-6.8.x |

| 7.0.x | 7.0.x | 6.8.x-7.9.x |

| 7.1.x | 7.1.x | 6.8.x-7.9.x |

| 7.2.x | 7.2.x | 6.8.x-7.9.x |

| 7.3.x | 7.3.x | 6.8.x-7.9.x |

| 7.4.x | 7.4.x | 6.8.x-7.9.x |

| 7.5.x | 7.5.x | 6.8.x-7.9.x |

| 7.6.x | 7.6.x | 6.8.x-7.9.x |

| 7.7.x | 7.7.x | 6.8.x-7.9.x |

| 7.8.x | 7.8.x | 6.8.x-7.9.x |

| 7.9.x | 7.9.x | 6.8.x-7.9.x |

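Versions before elasticsearch 7 require JDK 8; from version 7 onward the official requirement is OpenJDK 11.

Choose the version that matches elasticsearch-6.8.12.

Prepare the Docker image

- Download the official image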

[root@vms200 ~]# docker pull logstash:6.8.12

6.8.12: Pulling from library/logstash

75f829a71a1c: Pull complete

e4e846c66bad: Pull complete

8f22cd1c1369: Pull complete

14dc7cfcce00: Pull complete

d6490ac03934: Pull complete

87885adde843: Pull complete

175711d143a8: Pull complete

213059750c3c: Pull complete

f9279d991509: Pull complete

ecc09681bba8: Pull complete

1ac8ee173592: Pull complete

Digest: sha256:c0c13f530fe0cba576b2627079f8105fc0f4f33c619d67f5d27367ebcda80353

Status: Downloaded newer image for logstash:6.8.12

docker.io/library/logstash:6.8.12

[root@vms200 ~]# docker tag logstash:6.8.12 harbor.op.com/infra/logstash:v6.8.12

[root@vms200 ~]# docker push harbor.op.com/infra/logstash:v6.8.12

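- Custom Dockerfile (this step has been dropped)

FROM harbor.op.com/infra/logstash:v6.8.12

ADD logstash.yml /usr/share/logstash/config

- logstash.yml (this step has been dropped)

http.host: "0.0.0.0"

path.config: /etc/logstash

xpack.monitoring.enabled: false

- Build the custom image (this step has been dropped)

No custom image is built here; the downloaded official image is used directly.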

Run the Docker image

[root@vms200 ~]# mkdir /etc/logstash/

[root@vms200 ~]# cd /etc/logstash/

- Create the configuration: /etc/logstash/logstash-test.conf (test environment)

input {
  kafka {
    bootstrap_servers => "192.168.26.11:9092"
    client_id => "192.168.26.200"
    consumer_threads => 4
    group_id => "k8s_test"
    topics_pattern => "k8s-fb-test-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.26.12:9200"]
    index => "k8s-test-%{+YYYY.MM}"
  }
}

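This defines the test consumer group, which collects only topics whose names start with k8s-fb-test. The index is named by YYYY.MM, so there is one index per month.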
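To make the routing concrete, the sketch below checks which topic names the test pipeline's `topics_pattern` would pick up and which monthly index an event lands in. Both helper names are hypothetical, and the date is passed in as a plain YYYY-MM-DD string rather than read from an event:

```shell
# matches_test_pattern TOPIC
# Succeeds if TOPIC matches the whole pattern "k8s-fb-test-.*".
matches_test_pattern() {
  printf '%s\n' "$1" | grep -Eq '^k8s-fb-test-.*$'
}

# monthly_index ENV DATE
# Prints the index name for an event dated DATE (YYYY-MM-DD),
# following the "k8s-<env>-%{+YYYY.MM}" naming used in the config.
monthly_index() {
  echo "k8s-$1-$(printf '%s\n' "$2" | sed 's/^\([0-9]*\)-\([0-9]*\)-.*/\1.\2/')"
}

matches_test_pattern k8s-fb-test-logm-dubbo-demo-web && echo "consumed by logstash-test"
monthly_index test 2020-09-26
```

Logstash formats the index date from the event's `@timestamp`, which is in UTC, so events logged near midnight local time at a month boundary can land in the neighbouring index.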

- Start the logstash container

[root@vms200 logstash]# docker run -d --name logstash-test -v /etc/logstash:/etc/logstash harbor.op.com/infra/logstash:v6.8.12 -f /etc/logstash/logstash-test.conf

bdc5bb2eb2c39c0cd80e78ecbd727c5035c78d23ee5807e020bd666b83b63217

[root@vms200 logstash]# docker ps -a|grep logstash

bdc5bb2eb2c3 harbor.op.com/infra/logstash:v6.8.12 "/usr/local/bin/dock…" 32 seconds ago Up 22 seconds 5044/tcp, 9600/tcp logstash-test

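Logstash can also be delivered into k8s, with its configuration mounted from a ConfigMap. Different project namespaces in k8s can each run their own logstash with their own index naming.

- Verify the index in ElasticSearch (this takes a little while to appear)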

[root@vms21 ~]# curl http://192.168.26.12:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

[root@vms21 ~]# curl http://192.168.26.12:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open k8s-test-2020.09 VsPqUv_4R9Cq4czbn3zPpw 5 0 58 0 100.7kb 100.7k
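Because the index only appears after Logstash has consumed its first batch, it can be handy to test for it in a script instead of rereading the table. A minimal sketch that parses the `_cat/indices?v` output shown above; `has_index` is a hypothetical helper name:

```shell
# has_index PREFIX
# Reads `_cat/indices?v` output on stdin and succeeds if any index name
# (third column, header row skipped) starts with PREFIX.
has_index() {
  awk -v p="$1" 'NR > 1 && index($3, p) == 1 { found = 1 } END { exit !found }'
}

# Possible usage:
# curl -s 'http://192.168.26.12:9200/_cat/indices?v' | has_index k8s-test- && echo "index present"
```

Wrapping the usage line in a loop with a short `sleep` gives a simple wait-until-ready check.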

- Production configuration: /etc/logstash/logstash-prod.conf

cat >/etc/logstash/logstash-prod.conf <<'EOF'
input {
  kafka {
    bootstrap_servers => "192.168.26.11:9092"
    client_id => "192.168.26.200"
    consumer_threads => 4
    group_id => "k8s_prod"
    topics_pattern => "k8s-fb-prod-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.26.12:9200"]
    index => "k8s-prod-%{+YYYY.MM.dd}"
  }
}
EOF

The index is named by YYYY.MM.dd, one index per day, which suits large data volumes. Note the lowercase dd: in this date format, uppercase DD means day of year, not day of month.

Because lab resources are limited, the production pipeline is not started or verified here.

Deploy Kibana

On the ops host vms200:

Prepare the Docker image

Official kibana image download page: https://hub.docker.com/_/kibana?tab=tags

[root@vms200 ~]# docker pull kibana:6.8.12

6.8.12: Pulling from library/kibana

75f829a71a1c: Already exists

7ae3692e2bce: Pull complete

932a0bf8c03a: Pull complete

6acea4293b25: Pull complete

9bd647c0da00: Pull complete

7c126949930c: Pull complete

d1319ec5a926: Pull complete

37183b6c21cc: Pull complete

Digest: sha256:b82ddf3ba69ea030702993a368306a744ca532347d2d00f21b4f6439ab64bb0e

Status: Downloaded newer image for kibana:6.8.12

docker.io/library/kibana:6.8.12

[root@vms200 ~]# docker tag kibana:6.8.12 harbor.op.com/infra/kibana:v6.8.12

[root@vms200 ~]# docker push harbor.op.com/infra/kibana:v6.8.12

Resolve the domain name

On vms11:

[root@vms11 ~]# vi /var/named/op.com.zone

...

kibana A 192.168.26.10

Note: increment the zone serial by one.

[root@vms11 ~]# systemctl restart named

[root@vms11 ~]# host kibana.op.com

kibana.op.com has address 192.168.26.10

[root@vms11 ~]# dig -t A kibana.op.com @192.168.26.11 +short

192.168.26.10

Prepare the resource manifests

On vms200:

[root@vms200 ~]# mkdir /data/k8s-yaml/kibana && cd /data/k8s-yaml/kibana

- Deployment

[root@vms200 kibana]# vi /data/k8s-yaml/kibana/deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: kibana
  namespace: infra
  labels:
    name: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kibana
  template:
    metadata:
      labels:
        app: kibana
        name: kibana
    spec:
      containers:
      - name: kibana
        image: harbor.op.com/infra/kibana:v6.8.12
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        - name: ELASTICSEARCH_URL
          value: http://192.168.26.12:9200
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

- Service

[root@vms200 kibana]# vi /data/k8s-yaml/kibana/svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: kibana
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 5601
    targetPort: 5601
  selector:
    app: kibana

- Ingress

[root@vms200 kibana]# vi /data/k8s-yaml/kibana/ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kibana
  namespace: infra
spec:
  rules:
  - host: kibana.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

Apply the resource manifests

On any compute node:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kibana/deployment.yaml

deployment.apps/kibana created

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kibana/svc.yaml

service/kibana created

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/kibana/ingress.yaml

ingress.extensions/kibana created

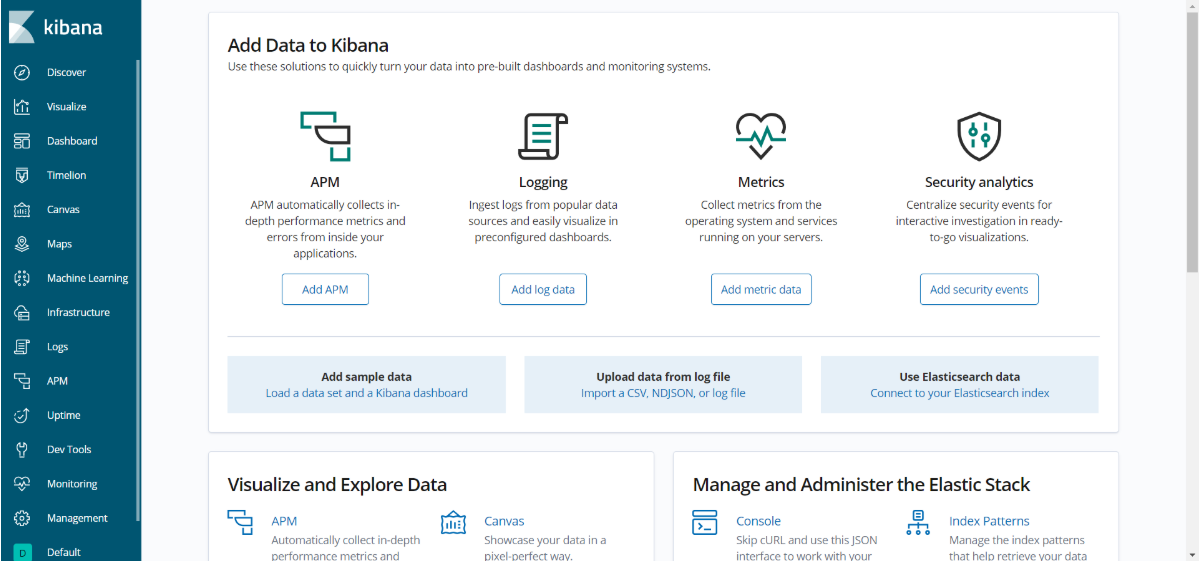

Open http://kibana.op.com in a browser

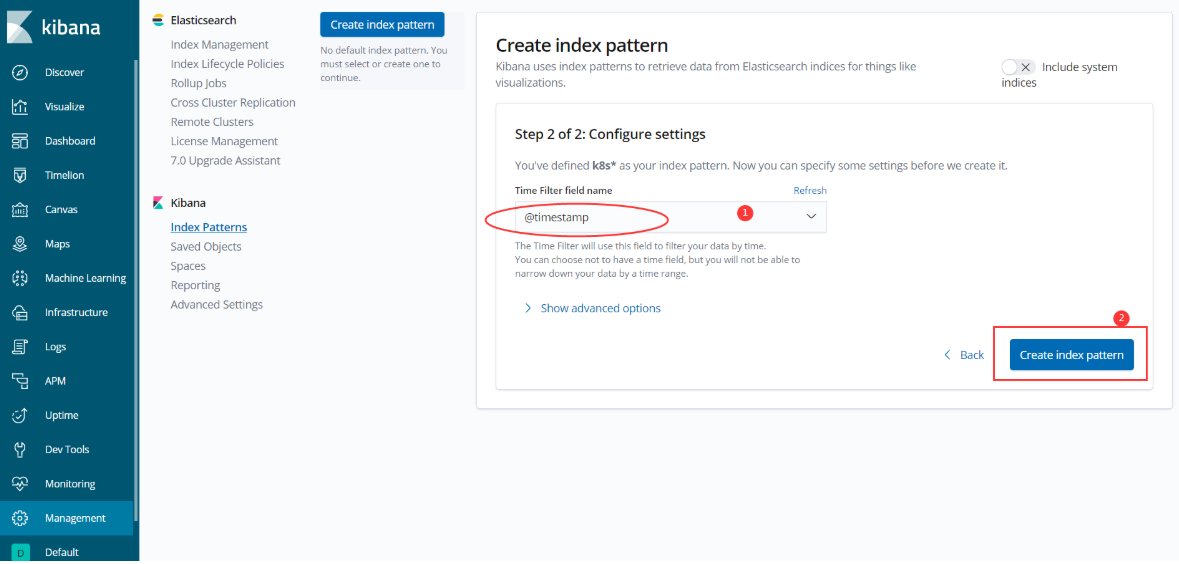

Configure Kibana index patterns

Management

Next step

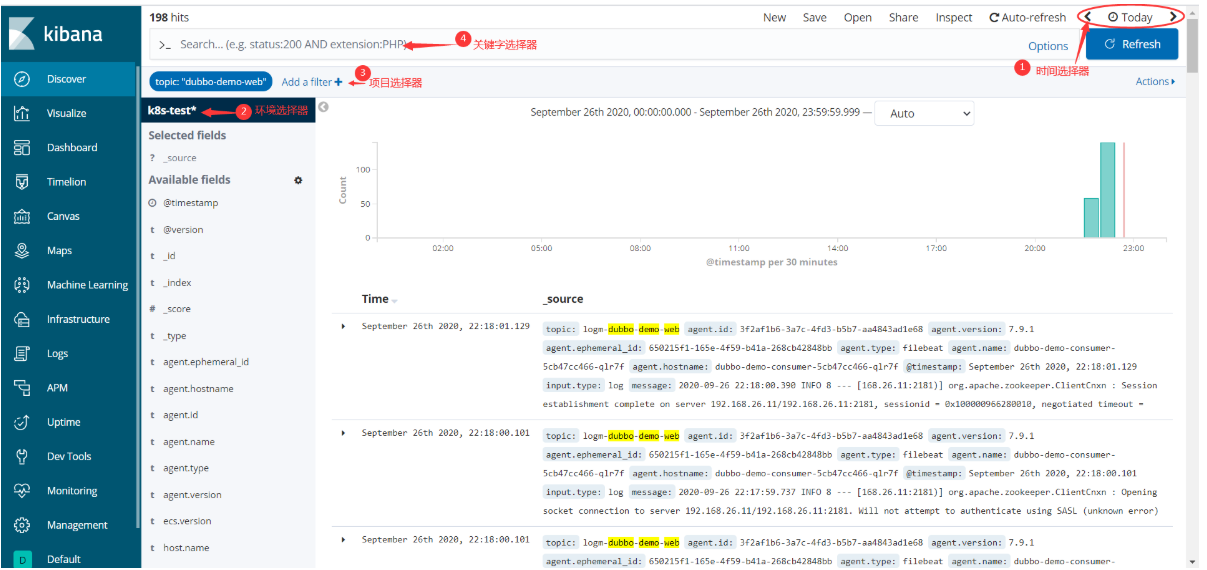

After clicking Create index pattern, open Discover and choose Today in the time picker

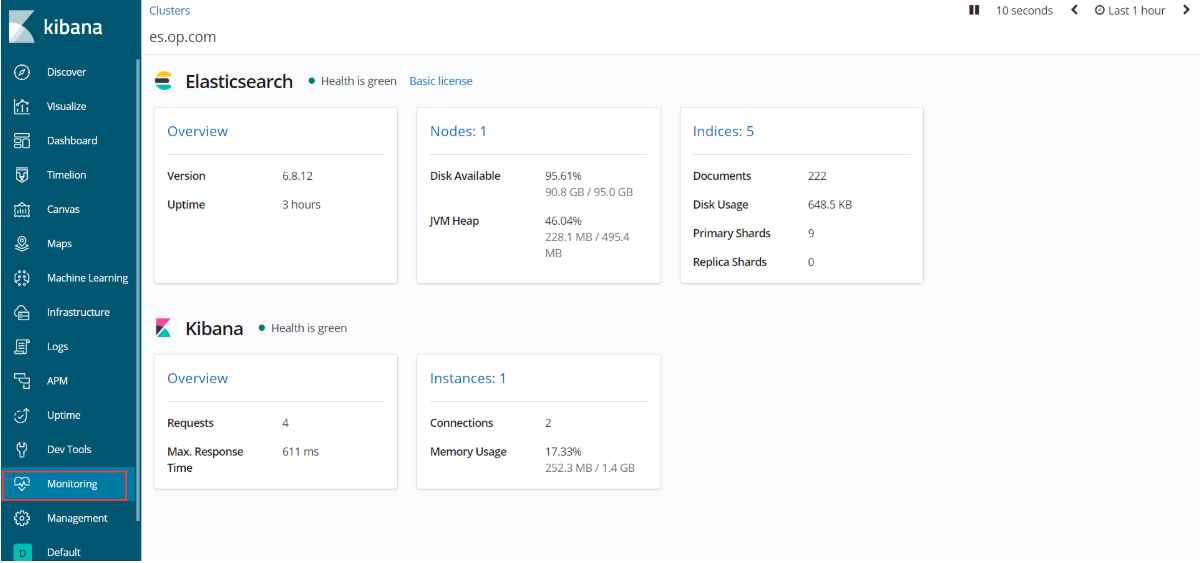

kibana的使用

- Monitoring

- 四大选择器

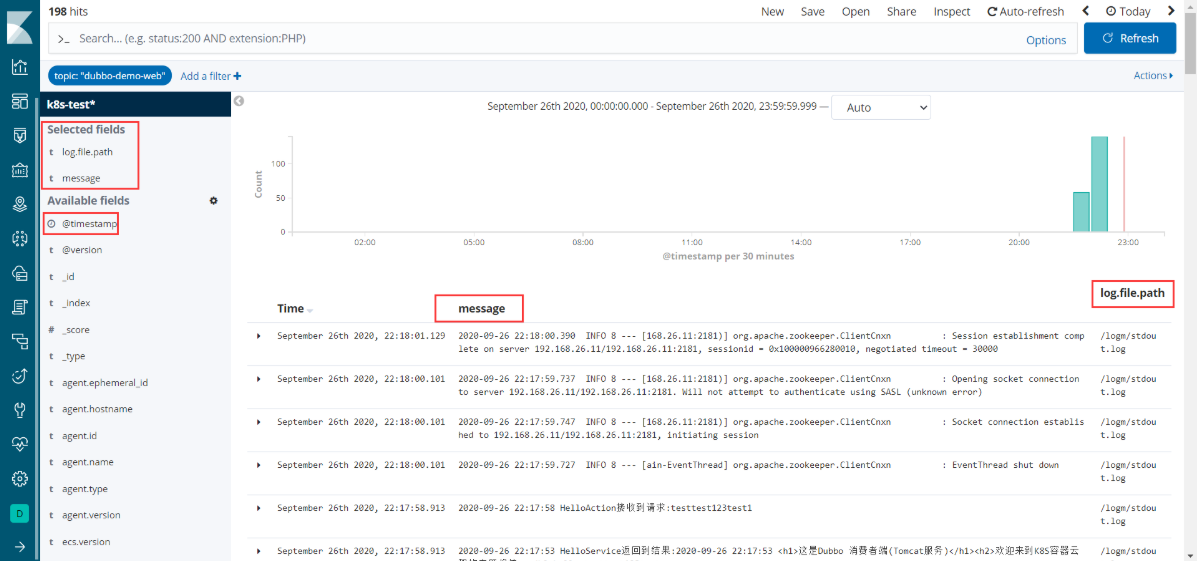

选择区域

| 项目 | 用途 |

|---|---|

| @timestamp | 对应日志的时间戳 |

| log.file.path | 对应日志路径/文件名 |

| message | 对应日志内容 |

时间选择器

选择日志时间:快速时间、绝对时间、相对时间

环境选择器

选择对应环境的日志

- k8s-test-*

- k8s-prod-*

项目选择器

- 对应

filebeat的PROJ_NAME值 - Add a fillter

- topic is ${PROJ_NAME}> dubbo-demo-service

dubbo-demo-web

关键字选择器

- exception

- error

至此,完美成功地部署了k8s集群日志管理平台。