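1. Create the virtual machine template

Reference: https://www.cnblogs.com/sw-blog/p/14394949.html

2. Clone the virtual machines

3. Prepare for the k8s cluster deployment

Official k8s site: https://kubernetes.io/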

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

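- The five Kubernetes components

Image source: https://kubernetes.io/docs/concepts/overview/components/

The three components on the master node

kube-apiserver: the single entry point to the whole cluster. It provides authentication, authorization, access control, and API registration and discovery.

kube-controller-manager: maintains the state of the cluster, for example failure detection, automatic scaling, and rolling updates, and drives resources toward their desired state.

kube-scheduler: places Pods on suitable nodes according to scheduling policy, in two phases: a filtering (predicate) phase and a scoring (priority) phase.

The two components on each node

kubelet: the agent that runs on every cluster node. It uses several mechanisms to make sure containers are running and healthy, and it does not manage containers that were not created by Kubernetes. The kubelet receives the desired state of a Pod (replicas, image, network, and so on) and calls the container runtime to reach it. It periodically reports node status to the apiserver, which the scheduler uses as input for its decisions. It also cleans up unused images and containers to avoid wasting disk space.

kube-proxy: the network proxy that runs on every node and one of the components that implement the Service resource. It connects the Pod network with the cluster (Service) network. kube-proxy on each node watches the apiserver (which reads from etcd) for Service changes and updates the local forwarding rules accordingly. Service traffic can be forwarded in three modes: userspace (deprecated, very poor performance), iptables (weaker performance and complex rule sets on large clusters), and ipvs (good performance, clearer forwarding model).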

- Node list

| Host | IP | Role | Spec | Components | Description |
| ---- | ---- | ---- | ---- | ---- | ---- |
| vm91 | 192.168.26.91 | k8s-master | 2 cores, 4 GB | kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, etcd | Control-plane node |
| vm92 | 192.168.26.92 | k8s-node1 | 2 cores, 4 GB | kubelet, kube-proxy | Worker node |

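Note: the master can also act as a worker node. Master and node are kept separate here only to make the k8s cluster architecture easier to verify.

Certificate signing and software download host: vm200 (192.168.26.200)

Add or update the following entries in /etc/hosts on all nodes:

    192.168.26.91 vm91.c83.com vm91
    192.168.26.92 vm92.c83.com vm92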
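Because the same entries have to be added on every node, and possibly more than once while experimenting, it can help to make the edit idempotent. The following is a minimal sketch, not part of the original procedure: `add_host_entry` is a hypothetical helper name, and the demo writes to a scratch file rather than to /etc/hosts.

```shell
# add_host_entry FILE IP NAME...
# Appends "IP NAME..." to FILE unless a line for that IP already exists.
add_host_entry() {
    hosts_file=$1; ip=$2; shift 2
    # Compare the first field exactly, so 192.168.26.9 never matches 192.168.26.91.
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$hosts_file"
}

# Demo against a scratch file; on a real node pass /etc/hosts instead.
demo_hosts=$(mktemp)
add_host_entry "$demo_hosts" 192.168.26.91 vm91.c83.com vm91
add_host_entry "$demo_hosts" 192.168.26.92 vm92.c83.com vm92
add_host_entry "$demo_hosts" 192.168.26.91 vm91.c83.com vm91   # second call is a no-op
cat "$demo_hosts"
```

Running the helper repeatedly leaves exactly one line per IP, so it is safe to include in a node bootstrap script.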

Node initialization

    ~]# modprobe br_netfilter
    ~]# cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
    ~]# sysctl -p /etc/sysctl.d/k8s.conf
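One thing the commands above do not cover: `modprobe` only loads br_netfilter for the current boot. On systemd-based systems, a file under /etc/modules-load.d/ is the usual way to load a module at boot. The sketch below writes both files under a scratch directory so it can be tried safely; `write_node_init`, `sysctl_conf_value`, and the `ROOT` prefix are hypothetical names introduced for this illustration.

```shell
# write_node_init ROOT: write the module and sysctl settings under ROOT.
# ROOT is a demo prefix; on a real node it would be the empty string.
write_node_init() {
    root=$1
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    # Read by systemd-modules-load at boot, so br_netfilter survives reboots.
    echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# sysctl_conf_value FILE KEY: print the value configured for KEY in FILE.
sysctl_conf_value() {
    awk -F'[ \t]*=[ \t]*' -v key="$2" '$1 == key { print $2 }' "$1"
}

demo_root=$(mktemp -d)
write_node_init "$demo_root"
sysctl_conf_value "$demo_root/etc/sysctl.d/k8s.conf" net.ipv4.ip_forward
```

On a real node, `sysctl -p /etc/sysctl.d/k8s.conf` still has to be run once to apply the values without rebooting.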

4. Download the cfssl certificate tools and sign the root certificate

Download the cfssl certificate tools

Download:

    [root@vm200 ~]# mkdir /opt/soft
    [root@vm200 ~]# cd /opt/soft
    [root@vm200 soft]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl
    ...
    [root@vm200 soft]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json
    ...
    [root@vm200 soft]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo
    ...

Make the binaries executable:

    [root@vm200 soft]# ls -l /usr/bin/cfssl*
    -rw-r--r-- 1 root root 10376657 Mar 30 2016 /usr/bin/cfssl
    -rw-r--r-- 1 root root 6595195 Mar 30 2016 /usr/bin/cfssl-certinfo
    -rw-r--r-- 1 root root 2277873 Mar 30 2016 /usr/bin/cfssl-json
    [root@vm200 soft]# chmod +x /usr/bin/cfssl*
    [root@vm200 soft]# ls -l /usr/bin/cfssl*
    -rwxr-xr-x 1 root root 10376657 Mar 30 2016 /usr/bin/cfssl
    -rwxr-xr-x 1 root root 6595195 Mar 30 2016 /usr/bin/cfssl-certinfo
    -rwxr-xr-x 1 root root 2277873 Mar 30 2016 /usr/bin/cfssl-json

Sign the root certificate

Create the certificate directory

    [root@vm200 soft]# mkdir /opt/certs/ ; cd /opt/certs/

Create the JSON configuration file used to generate the CA certificate

    [root@vm200 certs]# vim /opt/certs/ca-config.json

{"signing": {"default": {"expiry": "175200h"},"profiles": {"server": {"expiry": "175200h","usages": ["signing","key encipherment","server auth"]},"client": {"expiry": "175200h","usages": ["signing","key encipherment","client auth"]},"peer": {"expiry": "175200h","usages": ["signing","key encipherment","server auth","client auth"]}}}}
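The `expiry` values in this file are given in hours, which makes them hard to read at a glance. A quick arithmetic sketch (the helper names are made up for this example, and a year is approximated as 365 days):

```shell
# hours_to_days HOURS: convert a cfssl "expiry" value in hours to whole days.
hours_to_days() { echo $(( $1 / 24 )); }
# days_to_years DAYS: approximate years, using 365-day years.
days_to_years() { echo $(( $1 / 365 )); }

expiry_hours=175200
days=$(hours_to_days "$expiry_hours")
echo "${expiry_hours}h = ${days} days = $(days_to_years "$days") years"
# prints: 175200h = 7300 days = 20 years
```

So every profile in ca-config.json issues certificates that are valid for roughly 20 years.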

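Certificate types

client certificate: used by a client so that the server can authenticate it, for example etcdctl, etcd proxy, fleetctl, and the docker client.

server certificate: used by a server so that clients can verify its identity, for example the docker daemon and kube-apiserver.

peer certificate: a two-way certificate, used for communication between etcd cluster members.

Create the JSON configuration file for the CA certificate signing request (CSR)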

[root@vm200 certs]# vim /opt/certs/ca-csr.json

{"CN": "swcloud","hosts": [],"key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "beijing","L": "beijing","O": "sw","OU": "cloud"}],"ca": {"expiry": "175200h"}}

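CN: Common Name. Browsers use this field to check whether a site is legitimate; it is usually the domain name. Very important.

C: Country

ST: State or province

L: Locality (region or city)

O: Organization Name (company name)

OU: Organization Unit Name (company department)

Generate the CA certificate and private key

    [root@vm200 certs]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca -
    ...
    [root@vm200 certs]# ls ca* |grep -v json
    ca.csr
    ca-key.pem
    ca.pem

This produces ca.pem, ca.csr, and ca-key.pem (the CA private key, which must be kept safe).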

5. Install supervisor

Install it on all nodes. Only the session on vm91 is shown here.

    [root@vm91 ~]# yum install epel-release -y
    ...
    [root@vm91 ~]# yum install supervisor -y
    ...
    [root@vm91 ~]# rpm -qa supervisor
    supervisor-4.2.1-1.el8.noarch
    [root@vm91 ~]# systemctl start supervisord
    [root@vm91 ~]# systemctl enable supervisord
    Created symlink /etc/systemd/system/multi-user.target.wants/supervisord.service → /usr/lib/systemd/system/supervisord.service.
    [root@vm91 ~]# yum info supervisor
    Last metadata expiration check: 0:02:37 ago on Fri 29 Jan 2021 17:02:15.
    Installed Packages
    Name         : supervisor
    Version      : 4.2.1
    Release      : 1.el8
    Architecture : noarch
    Size         : 2.9 M
    Source       : supervisor-4.2.1-1.el8.src.rpm
    Repository   : @System
    From repo    : epel
    Summary      : A System for Allowing the Control of Process State on UNIX
    URL          : http://supervisord.org/
    License      : BSD and MIT
    Description  : The supervisor is a client/server system that allows its users to control a
                 : number of processes on UNIX-like operating systems.

Supervisor is a client/server service written in Python and a process management tool for Linux. It makes it easy to monitor, start, stop, and restart one or more processes. When a process managed by Supervisor is killed unexpectedly, supervisord detects the exit and restarts it automatically, so no hand-written shell script is needed to recover processes. Source download: https://github.com/Supervisor/supervisor/releases (Supervisor can also be installed from source).

6. docker

Install it on all nodes. Only the session on vm91 is shown here.

Download docker-20.10.3.tgz to vm200:/opt/soft/

    [root@vm200 ~]# cd /opt/soft/
    [root@vm200 soft]# wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.3.tgz

Copy docker-20.10.3.tgz to vm91 and vm92 and extract it

    [root@vm91 ~]# mkdir -p /opt/src && cd /opt/src
    [root@vm91 src]# scp 192.168.26.200:/opt/soft/docker-20.10.3.tgz .
    ...
    [root@vm91 src]# tar zxvf docker-20.10.3.tgz
    ...
    [root@vm91 src]# mv docker/* /usr/bin
    [root@vm91 src]# rm -rf docker

Create the configuration file

    [root@vm91 src]# mkdir /etc/docker /data/docker -p
    [root@vm91 src]# vi /etc/docker/daemon.json

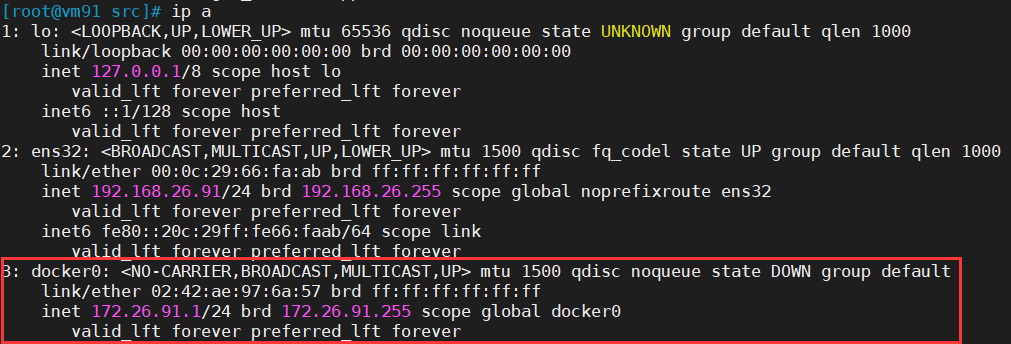

{"graph": "/data/docker","storage-driver": "overlay2","registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],"bip": "172.26.91.1/24","exec-opts": ["native.cgroupdriver=systemd"],"live-restore": true}

Note: each machine uses a different bip subnet. The two middle octets of bip are the same as the last two octets of the host IP, which makes problems easier to trace. bip changes with the host IP:

    vm91 bip 172.26.91.1/24 corresponds to 192.168.26.91
    vm92 bip 172.26.92.1/24 corresponds to 192.168.26.92

Manage docker with systemd

The heredoc delimiter is quoted ('EOF') so that the shell writes $MAINPID literally instead of expanding it to an empty string.

    cat > /usr/lib/systemd/system/docker.service << 'EOF'
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target
    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd
    ExecReload=/bin/kill -s HUP $MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s
    [Install]
    WantedBy=multi-user.target
    EOF
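The unit file contains `$MAINPID`, which systemd substitutes at runtime. When such a file is written through a shell heredoc, the quoting of the delimiter decides whether the shell touches that variable first. The small experiment below illustrates the difference; the function names are only for the demo.

```shell
# Unquoted delimiter: the shell expands $MAINPID while writing the text.
unquoted() {
    cat <<EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
}

# Quoted delimiter: the text is written literally, so systemd sees $MAINPID.
quoted() {
    cat <<'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
}

unset MAINPID
echo "unquoted: [$(unquoted)]"
echo "quoted:   [$(quoted)]"
```

With an unquoted delimiter the variable is replaced by an empty string, which leaves `ExecReload=/bin/kill -s HUP` without a PID. Quoting the delimiter (or escaping the dollar sign as `\$MAINPID`) avoids this.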

Start docker and enable it at boot

    [root@vm91 src]# systemctl start docker ; systemctl enable docker

After changing the unit file, run: systemctl daemon-reload

Check that docker started

    [root@vm91 src]# docker version
    Client: Docker Engine - Community
     Version: 20.10.3
     API version: 1.41
     Go version: go1.13.15
     Git commit: 48d30b5
     Built: Fri Jan 29 14:28:23 2021
     OS/Arch: linux/amd64
     Context: default
     Experimental: true
    Server: Docker Engine - Community
     Engine:
      Version: 20.10.3
      API version: 1.41 (minimum version 1.12)
      Go version: go1.13.15
      Git commit: 46229ca
      Built: Fri Jan 29 14:31:57 2021
      OS/Arch: linux/amd64
      Experimental: false
     containerd:
      Version: v1.4.3
      GitCommit: 269548fa27e0089a8b8278fc4fc781d7f65a939b
     runc:
      Version: 1.0.0-rc92
      GitCommit: ff819c7e9184c13b7c2607fe6c30ae19403a7aff
     docker-init:
      Version: 0.19.0
      GitCommit: de40ad0
    [root@vm91 src]# docker info
    ...
    [root@vm91 src]# ip a

Check that a running container matches the configuration

    [root@vm91 src]# docker pull busybox
    ...
    [root@vm91 src]# docker run -it --rm busybox /bin/sh
    / # ip add
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:1a:5b:02 brd ff:ff:ff:ff:ff:ff
        inet 172.26.91.2/24 brd 172.26.91.255 scope global eth0
           valid_lft forever preferred_lft forever

The container IP is 172.26.91.2, which matches the bip setting. With this scheme, the container IP alone is enough to tell which host node a container runs on.
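The bip naming rule described in this section is mechanical, so it can be written down as two small conversions. This is only an illustration of the convention (host 192.168.A.B uses bridge 172.A.B.1/24); the function names are invented here.

```shell
# bip_for_host HOST_IP: 192.168.A.B -> 172.A.B.1/24
bip_for_host() {
    echo "$1" | awk -F. '{ printf "172.%s.%s.1/24\n", $3, $4 }'
}

# host_for_container_ip CONTAINER_IP: 172.A.B.x -> 192.168.A.B
host_for_container_ip() {
    echo "$1" | awk -F. '{ printf "192.168.%s.%s\n", $2, $3 }'
}

bip_for_host 192.168.26.91          # prints 172.26.91.1/24
host_for_container_ip 172.26.91.2   # prints 192.168.26.91
```

The first function gives the value to put into `bip` in daemon.json on a new node; the second is the lookup used when tracing a container IP back to its host.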

7. etcd

Deploy etcd on vm91

Unless stated otherwise, all commands are run on vm91.

- Download the software

GitHub download page: https://github.com/etcd-io/etcd/

Choose release v3.4.14 and download it to vm200:/opt/soft/

If downloading from GitHub is too slow or fails, the Huawei Cloud mirror can be used instead:

    [root@vm200 ~]# cd /opt/soft/
    [root@vm200 soft]# wget https://repo.huaweicloud.com/etcd/v3.4.14/etcd-v3.4.14-linux-amd64.tar.gz
    ...

Copy etcd-v3.4.14-linux-amd64.tar.gz to vm91 and extract it

    [root@vm91 ~]# cd /opt/src
    [root@vm91 src]# scp 192.168.26.200:/opt/soft/etcd-v3.4.14-linux-amd64.tar.gz .
    ...
    [root@vm91 src]# tar zxvf etcd-v3.4.14-linux-amd64.tar.gz
    ...
    [root@vm91 src]# mv etcd-v3.4.14-linux-amd64 etcd-v3.4.14

Sign the certificate (on vm200)

    [root@vm200 soft]# cd /opt/certs/
    [root@vm200 certs]# vim /opt/certs/etcd-peer-csr.json

{"CN": "etcd-peer","hosts": ["192.168.26.91","192.168.26.81","192.168.26.82","192.168.26.83","192.168.26.84"],"key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "beijing","L": "beijing","O": "sw","OU": "cloud"}]}

Generate the etcd certificate and private key

    [root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer
    ...
    [root@vm200 certs]# ls |grep etcd
    etcd-peer.csr
    etcd-peer-csr.json
    etcd-peer-key.pem
    etcd-peer.pem
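A certificate is only accepted for the addresses listed under `hosts` in its CSR file, so it is worth checking that every etcd node IP is present before signing. The helper below is a rough text check, not a JSON parser: it only looks for the quoted IP anywhere in the file. `csr_has_host` and the scratch file are assumptions made for this sketch.

```shell
# csr_has_host FILE IP: succeed if IP appears as a quoted string in FILE.
csr_has_host() {
    grep -Fq "\"$2\"" "$1"
}

# Demo with a shortened copy of the hosts list used above.
demo_csr=$(mktemp)
cat > "$demo_csr" <<'EOF'
{"CN": "etcd-peer","hosts": ["192.168.26.91","192.168.26.81"]}
EOF

for ip in 192.168.26.91 192.168.26.92; do
    if csr_has_host "$demo_csr" "$ip"; then
        echo "$ip listed"
    else
        echo "$ip MISSING"
    fi
done
```

On vm200 the same function can be pointed at /opt/certs/etcd-peer-csr.json for each planned etcd member.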

Create the working directories

    [root@vm91 ~]# mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server /data/etcd/etcd-server

Create the symlink

    [root@vm91 ~]# ln -s /opt/src/etcd-v3.4.14 /opt/etcd/bin

Copy the certificates and private key

Copy the generated ca.pem, etcd-peer-key.pem, and etcd-peer.pem to /opt/etcd/certs. The private key file must have mode 600.

    [root@vm91 ~]# cd /opt/etcd/certs/
    [root@vm91 certs]# scp 192.168.26.200:/opt/certs/ca.pem .
    [root@vm91 certs]# scp 192.168.26.200:/opt/certs/etcd-peer-key.pem .
    [root@vm91 certs]# scp 192.168.26.200:/opt/certs/etcd-peer.pem .
    [root@vm91 certs]# ls /opt/etcd/certs
    ca.pem etcd-peer-key.pem etcd-peer.pem
    [root@vm91 certs]# chmod 600 /opt/etcd/certs/etcd-peer-key.pem
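Since the mode of the private key matters, a one-line check can confirm it after copying. This sketch assumes GNU coreutils `stat` (the `-c %a` format option), which is what CentOS ships; `key_mode_ok` is a hypothetical helper and the demo uses a scratch file rather than the real key.

```shell
# key_mode_ok FILE: succeed if FILE's permission bits are exactly 600.
key_mode_ok() {
    [ "$(stat -c %a "$1")" = "600" ]
}

demo_key=$(mktemp)
chmod 644 "$demo_key"
if key_mode_ok "$demo_key"; then echo "644: ok"; else echo "644: too open"; fi
chmod 600 "$demo_key"
if key_mode_ok "$demo_key"; then echo "600: ok"; else echo "600: too open"; fi
```

On vm91 the check would be run against /opt/etcd/certs/etcd-peer-key.pem.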

- Create the etcd startup script

    [root@vm91 ~]# cd /opt/etcd
    [root@vm91 etcd]# vi /opt/etcd/etcd-server-startup.sh
    #!/bin/sh
    /opt/etcd/bin/etcd --name=etcd-server-26-91 \
      --data-dir=/data/etcd/etcd-server \
      --listen-client-urls=https://192.168.26.91:2379,http://127.0.0.1:2379 \
      --advertise-client-urls=https://192.168.26.91:2379,http://127.0.0.1:2379 \
      --listen-peer-urls=https://192.168.26.91:2380 \
      --initial-advertise-peer-urls=https://192.168.26.91:2380 \
      --initial-cluster=etcd-server-26-91=https://192.168.26.91:2380 \
      --quota-backend-bytes=8000000000 \
      --cert-file=/opt/etcd/certs/etcd-peer.pem \
      --key-file=/opt/etcd/certs/etcd-peer-key.pem \
      --peer-cert-file=/opt/etcd/certs/etcd-peer.pem \
      --peer-key-file=/opt/etcd/certs/etcd-peer-key.pem \
      --trusted-ca-file=/opt/etcd/certs/ca.pem \
      --peer-trusted-ca-file=/opt/etcd/certs/ca.pem \
      --log-outputs=stdout \
      --logger=zap \
      --enable-v2=true

- The startup script differs slightly on each host of an etcd cluster (member name and the IP addresses in the URLs), so adjust it when deploying other nodes. The startup options also differ between etcd versions; refer to the documentation on GitHub.
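The per-node values follow a simple pattern in this setup: the member name is built from the last two octets of the host IP, and `--initial-cluster` lists every member as `name=https://ip:2380`. The sketch below derives both from a list of IPs. The helper names are invented, and 192.168.26.92 appears only as a hypothetical second member; the deployment in this article has a single etcd node.

```shell
# etcd_name_for_ip IP: 192.168.26.91 -> etcd-server-26-91
etcd_name_for_ip() {
    echo "$1" | awk -F. '{ printf "etcd-server-%s-%s\n", $3, $4 }'
}

# initial_cluster IP...: build the --initial-cluster value for all members.
initial_cluster() {
    out=""
    for ip in "$@"; do
        entry="$(etcd_name_for_ip "$ip")=https://${ip}:2380"
        out="${out:+$out,}$entry"     # join entries with commas
    done
    echo "$out"
}

etcd_name_for_ip 192.168.26.91
initial_cluster 192.168.26.91
# Hypothetical two-member cluster:
initial_cluster 192.168.26.91 192.168.26.92
```

The single-member call reproduces the value used in the script above, `etcd-server-26-91=https://192.168.26.91:2380`.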

--enable-v2=true is set because etcd 3.4 and later default to the v3 API (ETCDCTL_API=3 is now the default); the v2 API has to be enabled to work with the flannel plugin.

Adjust permissions and directories

    [root@vm91 etcd]# chmod +x /opt/etcd/etcd-server-startup.sh
    [root@vm91 etcd]# chmod 700 /data/etcd/etcd-server

Create the supervisor configuration for etcd-server

    [root@vm91 etcd]# vi /etc/supervisord.d/etcd-server.ini
    [program:etcd-server-26-91]
    command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)
    numprocs=1 ; number of processes copies to start (def 1)
    directory=/opt/etcd ; directory to cwd to before exec (def no cwd)
    autostart=true ; start at supervisord start (default: true)
    autorestart=true ; restart at unexpected quit (default: true)
    startsecs=30 ; number of secs prog must stay running (def. 1)
    startretries=3 ; max # of serial start failures (default 3)
    exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT ; signal used to kill process (default TERM)
    stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
    user=etcd ; setuid to this UNIX account to run the program
    redirect_stderr=false ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false ; emit events on stdout writes (default false)
    stderr_logfile=/data/logs/etcd-server/etcd.stderr.log ; stderr log path, NONE for none; default AUTO
    stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
    stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)
    stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
    stderr_events_enabled=false ; emit events on stderr writes (default false)
    killasgroup=true
    stopasgroup=true

Create the etcd user

    [root@vm91 ~]# useradd -s /sbin/nologin -M etcd
    [root@vm91 ~]# chown -R etcd:etcd /opt/etcd/certs /data/etcd /data/logs/etcd-server/ /data/etcd/etcd-server

Start the etcd service and check it

    [root@vm91 etcd]# supervisorctl update
    etcd-server-26-91: added process group
    [root@vm91 etcd]# supervisorctl status
    etcd-server-26-91 STARTING
    [root@vm91 etcd]# supervisorctl status
    etcd-server-26-91 RUNNING pid 1334, uptime 0:00:45
    [root@vm91 etcd]# netstat -luntp|grep etcd
    tcp 0 0 192.168.26.91:2379 0.0.0.0:* LISTEN 1335/etcd
    tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 1335/etcd
    tcp 0 0 192.168.26.91:2380 0.0.0.0:* LISTEN 1335/etcd
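Because `startsecs=30`, the program stays in STARTING for about half a minute before it is reported as RUNNING, which is why status is checked twice above. When scripting this, the state can be read from the second column of the `supervisorctl status` output. A small parsing sketch follows; `program_state` is a made-up helper, and the sample lines are taken from the session above.

```shell
# program_state NAME: read `supervisorctl status` output on stdin and print
# the state column (second field) for the program called NAME.
program_state() {
    awk -v name="$1" '$1 == name { print $2 }'
}

# Sample lines copied from the status output shown in this section.
starting='etcd-server-26-91 STARTING'
running='etcd-server-26-91 RUNNING pid 1334, uptime 0:00:45'

printf '%s\n' "$starting" | program_state etcd-server-26-91   # prints STARTING
printf '%s\n' "$running"  | program_state etcd-server-26-91   # prints RUNNING
```

On a live node the input would come from the real command, for example `supervisorctl status | program_state etcd-server-26-91`.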

View the log

    [root@vm91 etcd]# tail /var/log/supervisor/supervisord.log

On a successful start the log contains:

    …,275 INFO success: etcd-server-26-91 entered RUNNING state, process has stayed up for > than 30 seconds (startsecs)

View stdout and stderr

    [root@vm91 etcd]# supervisorctl tail etcd-server-26-91 stdout
    ...
    [root@vm91 etcd]# supervisorctl tail etcd-server-26-91 stderr

Also check the etcd logs under /data/logs/etcd-server for errors.

Check the cluster status

On any node, check with ETCDCTL_API=2

    [root@vm91 etcd]# ETCDCTL_API=2 /opt/etcd/bin/etcdctl member list
    cd5786668e34373a: name=etcd-server-26-91 peerURLs=https://192.168.26.91:2380 clientURLs=http://127.0.0.1:2379,https://192.168.26.91:2379 isLeader=true
    [root@vm91 etcd]# ETCDCTL_API=2 /opt/etcd/bin/etcdctl cluster-health
    member cd5786668e34373a is healthy: got healthy result from http://127.0.0.1:2379
    cluster is healthy

On any node, check with ETCDCTL_API=3

    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.26.91:2379" endpoint health
    https://192.168.26.91:2379 is healthy: successfully committed proposal: took = 8.881354ms
    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.26.91:2379" endpoint status
    https://192.168.26.91:2379, cd5786668e34373a, 3.4.14, 20 kB, true, false, 2, 5, 5,
    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.26.91:2379" endpoint health --write-out=table
    +----------------------------+--------+------------+-------+
    | ENDPOINT                   | HEALTH | TOOK       | ERROR |
    +----------------------------+--------+------------+-------+
    | https://192.168.26.91:2379 | true   | 8.993102ms |       |
    +----------------------------+--------+------------+-------+
    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.26.91:2379" endpoint status --write-out=table
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | ENDPOINT                   | ID               | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | https://192.168.26.91:2379 | cd5786668e34373a | 3.4.14  | 20 kB   | true      | false      | 2         | 5          | 5                  |        |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

--write-out=table formats the output as a table.

Check from the current node

    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --write-out=table endpoint status
    +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | ENDPOINT       | ID               | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | 127.0.0.1:2379 | cd5786668e34373a | 3.4.14  | 20 kB   | true      | false      | 2         | 5          | 5                  |        |
    +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    [root@vm91 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --write-out=table endpoint health
    +----------------+--------+------------+-------+
    | ENDPOINT       | HEALTH | TOOK       | ERROR |
    +----------------+--------+------------+-------+
    | 127.0.0.1:2379 | true   | 1.730553ms |       |
    +----------------+--------+------------+-------+

8. Deploy the k8s-master node on vm91 first

Deploy kube-apiserver

Download the k8s binary package

Choose Kubernetes v1.20.2 and download it (on vm200):

    [root@vm200 ~]# cd /opt/soft/
    [root@vm200 soft]# wget https://dl.k8s.io/v1.20.2/kubernetes-server-linux-amd64.tar.gz
    ...
    [root@vm200 soft]# mv kubernetes-server-linux-amd64.tar.gz kubernetes-server-v1.20.2.gz

Copy kubernetes-server-v1.20.2.gz to vm91 and extract it:

    [root@vm91 ~]# cd /opt/src
    [root@vm91 src]# scp 192.168.26.200:/opt/soft/kubernetes-server-v1.20.2.gz .
    [root@vm91 src]# tar zxvf kubernetes-server-v1.20.2.gz
    [root@vm91 src]# mv kubernetes kubernetes-v1.20.2
    [root@vm91 src]# cd /opt/src/kubernetes-v1.20.2/
    [root@vm91 kubernetes-v1.20.2]# rm -rf addons/ kubernetes-src.tar.gz LICENSES/

Create the directories and symlink

    [root@vm91 src]# mkdir /opt/kubernetes/{cert,conf} -p
    [root@vm91 src]# ln -s /opt/src/kubernetes-v1.20.2/server/bin /opt/kubernetes/bin

Sign the client certificate (on vm200)

This certificate is used for communication between the apiserver and etcd: the apiserver is the client and etcd is the server.

Create the JSON configuration file for the certificate signing request (CSR)

    [root@vm200 ~]# cd /opt/certs/
    [root@vm200 certs]# vi /opt/certs/client-csr.json

    {
      "CN": "k8s-node",
      "hosts": [
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "beijing",
          "L": "beijing",
          "O": "sw",
          "OU": "cloud"
        }
      ]
    }

Generate the client certificate and private key:

    [root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client
    ...
    [root@vm200 certs]# ls client*
    client.csr client-csr.json client-key.pem client.pem

- Sign the server certificate (on vm200)

This certificate is used for communication between the apiserver and the other k8s components.

Create the JSON configuration file for the certificate signing request (CSR)

    [root@vm200 certs]# vi /opt/certs/apiserver-csr.json

    {
      "CN": "k8s-apiserver",
      "hosts": [
        "127.0.0.1",
        "10.26.0.1",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local",
        "192.168.26.10",
        "192.168.26.81",
        "192.168.26.91",
        "192.168.26.92",
        "192.168.26.93",
        "192.168.26.94",
        "192.168.26.95"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "beijing",
          "L": "beijing",
          "O": "sw",
          "OU": "cloud"
        }
      ]
    }

Generate the kube-apiserver certificate and private key:

    [root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver
    ...
    [root@vm200 certs]# ls apiserver*
    apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem
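The `10.26.0.1` entry in the hosts list is not arbitrary: the built-in `kubernetes` Service receives the first address of the service range, and in-cluster clients reach the apiserver through it, so the certificate has to cover that address. The sketch below derives it from the range `10.26.0.0/16` used for the service network in this deployment. `first_service_ip` is an invented helper, and it assumes the range is written with its network address and a last octet below 255.

```shell
# first_service_ip CIDR: print the first usable address of a service range.
# Assumes CIDR is written with its network address, e.g. 10.26.0.0/16.
first_service_ip() {
    network=${1%/*}     # strip the "/16" prefix length
    echo "$network" | awk -F. '{ printf "%s.%s.%s.%d\n", $1, $2, $3, $4 + 1 }'
}

first_service_ip 10.26.0.0/16   # prints 10.26.0.1
```

If a different service range is chosen, the corresponding first address must replace 10.26.0.1 in apiserver-csr.json before the certificate is signed.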

Copy the certificates

    [root@vm91 ~]# cd /opt/kubernetes/cert/
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/ca.pem .
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/ca-key.pem .
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/client.pem .
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/client-key.pem .
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/apiserver.pem .
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/apiserver-key.pem .
    [root@vm91 certs]# ls /opt/kubernetes/cert
    apiserver-key.pem apiserver.pem ca-key.pem ca.pem client-key.pem client.pem

Create the configuration

    [root@vm91 ~]# cd /opt/kubernetes/conf/
    [root@vm91 conf]# vi audit.yaml
    apiVersion: audit.k8s.io/v1beta1 # This is required.
    kind: Policy
    # Don't generate audit events for all requests in RequestReceived stage.
    omitStages:
      - "RequestReceived"
    rules:
      # Log pod changes at RequestResponse level
      - level: RequestResponse
        resources:
        - group: ""
          # Resource "pods" doesn't match requests to any subresource of pods,
          # which is consistent with the RBAC policy.
          resources: ["pods"]
      # Log "pods/log", "pods/status" at Metadata level
      - level: Metadata
        resources:
        - group: ""
          resources: ["pods/log", "pods/status"]
      # Don't log requests to a configmap called "controller-leader"
      - level: None
        resources:
        - group: ""
          resources: ["configmaps"]
          resourceNames: ["controller-leader"]
      # Don't log watch requests by the "system:kube-proxy" on endpoints or services
      - level: None
        users: ["system:kube-proxy"]
        verbs: ["watch"]
        resources:
        - group: "" # core API group
          resources: ["endpoints", "services"]
      # Don't log authenticated requests to certain non-resource URL paths.
      - level: None
        userGroups: ["system:authenticated"]
        nonResourceURLs:
        - "/api*" # Wildcard matching.
        - "/version"
      # Log the request body of configmap changes in kube-system.
      - level: Request
        resources:
        - group: "" # core API group
          resources: ["configmaps"]
        # This rule only applies to resources in the "kube-system" namespace.
        # The empty string "" can be used to select non-namespaced resources.
        namespaces: ["kube-system"]
      # Log configmap and secret changes in all other namespaces at the Metadata level.
      - level: Metadata
        resources:
        - group: "" # core API group
          resources: ["secrets", "configmaps"]
      # Log all other resources in core and extensions at the Request level.
      - level: Request
        resources:
        - group: "" # core API group
        - group: "extensions" # Version of group should NOT be included.
      # A catch-all rule to log all other requests at the Metadata level.
      - level: Metadata
        # Long-running requests like watches that fall under this rule will not
        # generate an audit event in RequestReceived.
        omitStages:
          - "RequestReceived"

Create the startup script

    [root@vm91 kubernetes]# vi /opt/kubernetes/kube-apiserver.sh
    #!/bin/bash
    /opt/kubernetes/bin/kube-apiserver \
      --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
      --audit-policy-file /opt/kubernetes/conf/audit.yaml \
      --token-auth-file /opt/kubernetes/conf/token.csv \
      --authorization-mode RBAC \
      --client-ca-file /opt/kubernetes/cert/ca.pem \
      --requestheader-client-ca-file /opt/kubernetes/cert/ca.pem \
      --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
      --etcd-cafile /opt/kubernetes/cert/ca.pem \
      --etcd-certfile /opt/kubernetes/cert/client.pem \
      --etcd-keyfile /opt/kubernetes/cert/client-key.pem \
      --etcd-servers https://192.168.26.91:2379 \
      --service-account-key-file /opt/kubernetes/cert/ca-key.pem \
      --service-account-signing-key-file /opt/kubernetes/cert/ca-key.pem \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --service-cluster-ip-range 10.26.0.0/16 \
      --service-node-port-range 3000-29999 \
      --target-ram-mb=1024 \
      --kubelet-client-certificate /opt/kubernetes/cert/client.pem \
      --kubelet-client-key /opt/kubernetes/cert/client-key.pem \
      --log-dir /data/logs/kubernetes/kube-apiserver \
      --tls-cert-file /opt/kubernetes/cert/apiserver.pem \
      --tls-private-key-file /opt/kubernetes/cert/apiserver-key.pem \
      --v 2

Create /opt/kubernetes/conf/token.csv

    [root@vm91 ~]# cd /opt/kubernetes/conf
    [root@vm91 conf]# cat > token.csv << EOF
    $(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    EOF

Create the log directory and make the script executable

    [root@vm91 kubernetes]# mkdir -p /data/logs/kubernetes/kube-apiserver
    [root@vm91 kubernetes]# chmod +x /opt/kubernetes/kube-apiserver.sh

Create the supervisor configuration

    [root@vm91 kubernetes]# vi /etc/supervisord.d/kube-apiserver.ini
    [program:kube-apiserver-26-91]
    command=/opt/kubernetes/kube-apiserver.sh ; the program (relative uses PATH, can take args)
    numprocs=1 ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
    autostart=true ; start at supervisord start (default: true)
    autorestart=true ; restart at unexpected quit (default: true)
    startsecs=30 ; number of secs prog must stay running (def. 1)
    startretries=3 ; max # of serial start failures (default 3)
    exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT ; signal used to kill process (default TERM)
    stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
    user=root ; setuid to this UNIX account to run the program
    redirect_stderr=true ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Change the program name to match the actual IP address of the node.

Start the service and check it

    [root@vm91 kubernetes]# supervisorctl update
    kube-apiserver-26-91: added process group
    [root@vm91 kubernetes]# supervisorctl status
    etcd-server-26-91 RUNNING pid 1334, uptime 1:07:28
    kube-apiserver-26-91 RUNNING pid 14003, uptime 0:02:21

Other operations:

    [root@vm91 kubernetes]# supervisorctl start kube-apiserver-26-91 # start
    kube-apiserver-26-91: started
    [root@vm91 kubernetes]# supervisorctl status kube-apiserver-26-91
    kube-apiserver-26-91 RUNNING pid 1765, uptime 0:01:31
    [root@vm91 kubernetes]# tail /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log # view the log
    [root@vm91 kubernetes]# netstat -luntp | grep kube-api
    tcp6 0 0 :::6443 :::* LISTEN 1766/./bin/kube-api
    [root@vm91 kubernetes]# ps uax|grep kube-apiserver|grep -v grep
    root 1765 0.0 0.0 26164 3340 ? S 10:05 0:00 /bin/bash /opt/kubernetes/kube-apiserver.sh
    root 1766 12.6 10.9 1097740 416360 ? Sl 10:05 0:59 ./bin/kube-apiserver --apiserver-count 2 --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log --audit-policy-file ./conf/audit.yaml --authorization-mode RBAC --client-ca-file ./cert/ca.pem --requestheader-client-ca-file ./cert/ca.pem --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --etcd-cafile ./cert/ca.pem --etcd-certfile ./cert/client.pem --etcd-keyfile ./cert/client-key.pem --etcd-servers https://192.168.26.91:2379,https://192.168.26.92:2379,https://192.168.26.93:2379 --service-account-key-file ./cert/ca-key.pem --service-account-signing-key-file ./cert/ca-key.pem --service-account-issuer=https://kubernetes.default.svc.cluster.local
    [root@vm91 kubernetes]# supervisorctl stop kube-apiserver-26-91 # stop
    [root@vm91 kubernetes]# supervisorctl restart kube-apiserver-26-91 # restart

Deploy kube-controller-manager

Sign the certificate (on vm200)

Create the CSR file

    [root@vm200 ~]# cd /opt/certs/
    [root@vm200 certs]# vim kube-controller-manager-csr.json
    {
      "CN": "system:kube-controller-manager",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "hosts": [
        "127.0.0.1",
        "192.168.26.10",
        "192.168.26.81",
        "192.168.26.91",
        "192.168.26.92",
        "192.168.26.93",
        "192.168.26.94",
        "192.168.26.95"
      ],
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "system:kube-controller-manager",
          "OU": "system"
        }
      ]
    }

Note: the hosts list contains the IPs of all kube-controller-manager nodes. CN is system:kube-controller-manager and O is system:kube-controller-manager; the built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs to do its work.
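The reason CN and O matter here is that, with client-certificate authentication, the apiserver takes the user name from the certificate's CN and the group names from its O fields, and RBAC bindings are then matched against those. The sketch below extracts the two fields from a subject string. The subject text is written by hand from the CSR above (not captured from a tool), and `subject_field` is a hypothetical helper.

```shell
# subject_field SUBJECT KEY: print the value of KEY (for example CN or O)
# from a comma-separated certificate subject string.
subject_field() {
    echo "$1" | tr ',' '\n' | sed 's/^ *//' | awk -F= -v key="$2" '$1 == key { print $2 }'
}

# Subject assembled by hand from kube-controller-manager-csr.json.
subject='C=CN, ST=Beijing, L=Beijing, O=system:kube-controller-manager, OU=system, CN=system:kube-controller-manager'

echo "user:  $(subject_field "$subject" CN)"
echo "group: $(subject_field "$subject" O)"
```

On a real certificate, the subject can be displayed with the `cfssl-certinfo -cert kube-controller-manager.pem` tool installed earlier.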

Generate the certificate

    [root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer kube-controller-manager-csr.json | cfssl-json -bare kube-controller-manager
    [root@vm200 certs]# ls kube-controller-manager*.pem -l
    -rw------- 1 root root 1679 Feb 16 08:23 kube-controller-manager-key.pem
    -rw-r--r-- 1 root root 1533 Feb 16 08:23 kube-controller-manager.pem

- Create the kubeconfig for kube-controller-manager

Copy the certificates

    [root@vm91 ~]# cd /opt/kubernetes/cert/
    [root@vm91 cert]# scp 192.168.26.200:/opt/certs/kube-controller-manager*.pem .

Set the cluster parameters

    [root@vm91 cert]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.26.91:6443 --kubeconfig=kube-controller-manager.kubeconfig

Set the client credentials

    [root@vm91 cert]# kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

Set the context parameters

    [root@vm91 cert]# kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Set the default context

    [root@vm91 cert]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
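The four `kubectl config` steps are identical for every component except for the user name and the certificate file names, and the same sequence is needed again for kube-scheduler. One way to avoid copy-and-paste mistakes is to generate the commands from those inputs. The sketch below only prints the commands (a dry run) so they can be reviewed first; `kubeconfig_commands` is an invented helper, and all flags are the ones used above.

```shell
# kubeconfig_commands USER CERT_BASENAME SERVER
# Prints the four kubectl commands that build CERT_BASENAME.kubeconfig.
kubeconfig_commands() {
    user=$1; base=$2; server=$3
    kc="${base}.kubeconfig"
    echo "kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=${server} --kubeconfig=${kc}"
    echo "kubectl config set-credentials ${user} --client-certificate=${base}.pem --client-key=${base}-key.pem --embed-certs=true --kubeconfig=${kc}"
    echo "kubectl config set-context ${user} --cluster=kubernetes --user=${user} --kubeconfig=${kc}"
    echo "kubectl config use-context ${user} --kubeconfig=${kc}"
}

kubeconfig_commands system:kube-controller-manager kube-controller-manager https://192.168.26.91:6443
```

After checking the printed commands, they can be executed from /opt/kubernetes/cert by piping the output to `sh`.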

Create the startup script

    [root@vm91 kubernetes]# vi /opt/kubernetes/kube-controller-manager.sh
    #!/bin/sh
    /opt/kubernetes/bin/kube-controller-manager \
      --bind-address=127.0.0.1 \
      --cluster-cidr=172.26.0.0/16 \
      --leader-elect=true \
      --log-dir=/data/logs/kubernetes/kube-controller-manager \
      --kubeconfig=/opt/kubernetes/cert/kube-controller-manager.kubeconfig \
      --cluster-name=kubernetes \
      --cluster-signing-cert-file=/opt/kubernetes/cert/ca.pem \
      --cluster-signing-key-file=/opt/kubernetes/cert/ca-key.pem \
      --service-account-private-key-file=/opt/kubernetes/cert/ca-key.pem \
      --service-cluster-ip-range=10.26.0.0/16 \
      --root-ca-file=/opt/kubernetes/cert/ca.pem \
      --tls-cert-file=/opt/kubernetes/cert/kube-controller-manager.pem \
      --tls-private-key-file=/opt/kubernetes/cert/kube-controller-manager-key.pem \
      --controllers=*,bootstrapsigner,tokencleaner \
      --use-service-account-credentials=true \
      --alsologtostderr=true \
      --logtostderr=false \
      --experimental-cluster-signing-duration=87600h0m0s \
      --v=2
    [root@vm91 kubernetes]# mkdir -p /data/logs/kubernetes/kube-controller-manager
    [root@vm91 kubernetes]# chmod +x /opt/kubernetes/kube-controller-manager.sh

Create the supervisor configuration

    [root@vm91 kubernetes]# vi /etc/supervisord.d/kube-controller-manager.ini
    [program:kube-controller-manager-26-91]
    command=/opt/kubernetes/kube-controller-manager.sh ; the program (relative uses PATH, can take args)
    numprocs=1 ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
    autostart=true ; start at supervisord start (default: true)
    autorestart=true ; restart at unexpected quit (default: true)
    startsecs=30 ; number of secs prog must stay running (def. 1)
    startretries=3 ; max # of serial start failures (default 3)
    exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT ; signal used to kill process (default TERM)
    stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
    user=root ; setuid to this UNIX account to run the program
    redirect_stderr=true ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Start the service and check it

    [root@vm91 kubernetes]# supervisorctl update
    kube-controller-manager-26-91: added process group
    [root@vm91 kubernetes]# supervisorctl status
    etcd-server-26-91 RUNNING pid 1334, uptime 1:17:04
    kube-apiserver-26-91 RUNNING pid 14003, uptime 0:11:57
    kube-controller-manager-26-91 RUNNING pid 18045, uptime 0:00:31

Deploy kube-scheduler

签发证书(在vm200上操作)

创建csr请求文件

[root@vm200 ~]# cd /opt/certs/

[root@vm200 certs]# vim kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.26.10",

"192.168.26.81",

"192.168.26.91",

"192.168.26.92",

"192.168.26.93",

"192.168.26.94",

"192.168.26.95"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

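Note: the hosts list contains the IPs of all kube-scheduler nodes. Both CN and O are set to system:kube-scheduler; the built-in Kubernetes ClusterRoleBinding system:kube-scheduler binds the user system:kube-scheduler to the ClusterRole of the same name, which grants the permissions kube-scheduler needs to work.

Generate the certificate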

[root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer kube-scheduler-csr.json | cfssl-json -bare kube-scheduler

[root@vm200 certs]# ls kube-scheduler*.pem -l

-rw------- 1 root root 1679 2月 16 08:49 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1509 2月 16 08:49 kube-scheduler.pem
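The built-in role binding only takes effect if the issued certificate really carries the expected CN, so it can be worth checking the subject before the files are copied to the nodes. A minimal sketch, assuming `openssl` is installed on vm200; the helper name `cert_subject` is made up for illustration and is not part of the original procedure:

```shell
# cert_subject FILE
# Hypothetical helper: print the subject (CN, O, ...) and the expiry date
# of a PEM certificate, using the standard `openssl x509` subcommand.
cert_subject() {
    openssl x509 -in "$1" -noout -subject -enddate
}
```

For example, `cert_subject /opt/certs/kube-scheduler.pem` should print a subject line that contains system:kube-scheduler, followed by a `notAfter=` line. The exact layout of the subject line differs between OpenSSL versions.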

- Create the kubeconfig for kube-scheduler

Copy the certificates

[root@vm91 ~]# cd /opt/kubernetes/cert/

[root@vm91 cert]# scp 192.168.26.200:/opt/certs/kube-scheduler*.pem .

Set the cluster parameters

[root@vm91 cert]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.26.91:6443 --kubeconfig=kube-scheduler.kubeconfig

Set the client credentials

[root@vm91 cert]# kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

Set the context parameters

[root@vm91 cert]# kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Set the default context

[root@vm91 cert]# kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
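The same four `kubectl config` steps recur for every component in this guide, so they can be wrapped in one function. The sketch below is only an illustration: the function name `make_kubeconfig` and its argument order are assumptions, the cluster name `kubernetes` and the server address are taken from the commands above, and the context is named after the user as in the kube-scheduler example.

```shell
# make_kubeconfig NAME CERT KEY OUT [SERVER] [CA]
# Hypothetical wrapper around the four kubectl config steps.
# The body runs in a subshell so its variables do not leak into the caller.
make_kubeconfig() (
    name=$1; cert=$2; key=$3; out=$4
    server=${5:-https://192.168.26.91:6443}
    ca=${6:-ca.pem}

    # 1. cluster, 2. credentials, 3. context, 4. default context
    kubectl config set-cluster kubernetes \
        --certificate-authority="$ca" --embed-certs=true \
        --server="$server" --kubeconfig="$out" &&
    kubectl config set-credentials "$name" \
        --client-certificate="$cert" --client-key="$key" \
        --embed-certs=true --kubeconfig="$out" &&
    kubectl config set-context "$name" \
        --cluster=kubernetes --user="$name" --kubeconfig="$out" &&
    kubectl config use-context "$name" --kubeconfig="$out"
)
```

Run from /opt/kubernetes/cert/, `make_kubeconfig system:kube-scheduler kube-scheduler.pem kube-scheduler-key.pem kube-scheduler.kubeconfig` would issue the same commands as the manual steps.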

Create the start script

[root@vm91 kubernetes]# vi /opt/kubernetes/kube-scheduler.sh
#!/bin/sh
/opt/kubernetes/bin/kube-scheduler \
  --bind-address=127.0.0.1 \
  --leader-elect=true \
  --log-dir=/data/logs/kubernetes/kube-scheduler \
  --kubeconfig=/opt/kubernetes/cert/kube-scheduler.kubeconfig \
  --tls-cert-file=/opt/kubernetes/cert/kube-scheduler.pem \
  --tls-private-key-file=/opt/kubernetes/cert/kube-scheduler-key.pem \
  --v=2
[root@vm91 kubernetes]# mkdir -p /data/logs/kubernetes/kube-scheduler
[root@vm91 kubernetes]# chmod +x /opt/kubernetes/kube-scheduler.sh

Create the supervisor configuration

[root@vm91 kubernetes]# vi /etc/supervisord.d/kube-scheduler.ini
[program:kube-scheduler-26-91]
command=/opt/kubernetes/kube-scheduler.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

Start the service and check

[root@vm91 kubernetes]# supervisorctl update
kube-scheduler-26-91: added process group
[root@vm91 kubernetes]# supervisorctl status
etcd-server-26-91 RUNNING pid 1334, uptime 1:25:13
kube-apiserver-26-91 RUNNING pid 14003, uptime 0:20:06
kube-controller-manager-26-91 RUNNING pid 18045, uptime 0:08:40
kube-scheduler-26-91 RUNNING pid 20900, uptime 0:00:32

Deploy kubectl

Issue the certificate (on vm200)

[root@vm200 ~]# cd /opt/certs/
[root@vm200 certs]# vim admin-csr.json
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}

Note: this admin certificate is used later to generate the administrator's kubeconfig file. RBAC is now the generally recommended way to control roles and permissions in Kubernetes; Kubernetes takes the certificate's CN field as the User and the O field as the Group. "O" must be exactly "system:masters", otherwise the later kubectl create clusterrolebinding command fails.

Generate the admin certificate and private key:

[root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer admin-csr.json | cfssl-json -bare admin

[root@vm200 certs]# ls admin*

admin.csr admin-csr.json admin-key.pem admin.pem

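Copy the certificate and private key; note that the private key file mode should be 600

[root@vm91 ~]# cd /opt/kubernetes/cert/
[root@vm91 cert]# scp 192.168.26.200:/opt/certs/admin*.pem .

Create a symlink for kubectl

[root@vm91 kubernetes]# ln -s /opt/kubernetes/bin/kubectl /usr/bin/kubectl

Create the kubeconfig file

The kubeconfig is kubectl's configuration file. It holds everything needed to reach the apiserver: the apiserver address, the CA certificate, and the client's own certificate.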

[root@vm91 ~]# cd /opt/kubernetes/cert/

Set the cluster parameters

[root@vm91 cert]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.26.91:6443 --kubeconfig=kube.config

Set the client credentials

[root@vm91 cert]# kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

Set the context parameters

[root@vm91 cert]# kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

Set the default context

[root@vm91 cert]# kubectl config use-context kubernetes --kubeconfig=kube.config

Copy the configuration file

[root@vm91 cert]# mkdir ~/.kube

[root@vm91 cert]# cp kube.config ~/.kube/config

Grant the user of the kubernetes certificate access to the kubelet API

[root@vm91 cert]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

- Configure kubectl command completion

Add the line source <(kubectl completion bash) to ~/.bashrc

[root@vm91 cert]# vi ~/.bashrc

# .bashrc

source <(kubectl completion bash)

...

[root@vm91 cert]# source ~/.bashrc

If bash-completion is not installed

[root@vm91 kubernetes]# yum install -y bash-completion
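If this setup is scripted rather than typed into vi, the completion line should be appended only once, however often the script runs. A minimal sketch; `ensure_line` is a hypothetical helper name, not something the original steps define:

```shell
# ensure_line FILE LINE
# Append LINE to FILE unless an identical whole line is already present.
ensure_line() {
    touch "$1"
    # -x: match the whole line, -F: fixed string, -q: no output
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}
```

Usage: `ensure_line ~/.bashrc 'source <(kubectl completion bash)'`, followed by `source ~/.bashrc` in the current shell.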

Check the status of the control-plane node

[root@vm91 cert]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@vm91 cert]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.26.91:6443
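For a scripted check, the `kubectl get cs` table can be filtered down to the components that are not healthy. This is a rough sketch that assumes the column layout shown above (NAME first, STATUS second); the function name `unhealthy_components` is made up for illustration. As the warning says, ComponentStatus is deprecated from v1.19 on, so this only suits clusters of roughly this vintage.

```shell
# unhealthy_components
# Read `kubectl get cs` output on stdin and print the NAME of every
# component whose STATUS column is not "Healthy".
# The header row and any "Warning:" line are skipped.
unhealthy_components() {
    awk '$1 != "NAME" && $1 != "Warning:" && NF >= 2 && $2 != "Healthy" { print $1 }'
}
```

Usage: `kubectl get cs 2>/dev/null | unhealthy_components`; empty output means every listed component reported Healthy.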

9. Deploy the k8s-node on vm92

Unless stated otherwise, all steps are run on vm92

Create the directories

[root@vm92 ~]# mkdir /opt/kubernetes/{bin,cert,conf} -p

Copy kubelet and kube-proxy

[root@vm92 ~]# cd /opt/kubernetes/bin/
[root@vm92 bin]# scp 192.168.26.91:/opt/kubernetes/bin/kubelet .
[root@vm92 bin]# scp 192.168.26.91:/opt/kubernetes/bin/kube-proxy .

Deploy kubelet

Issue the kubelet certificate (on vm200)

Create the JSON file for the certificate signing request (CSR)

[root@vm200 ~]# cd /opt/certs/

[root@vm200 certs]# vi kubelet-csr.json

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"192.168.26.10",

"192.168.26.81",

"192.168.26.91",

"192.168.26.92",

"192.168.26.93",

"192.168.26.94",

"192.168.26.95"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "sw",

"OU": "cloud"

}

]

}

Generate the kubelet certificate and private key

[root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

...

[root@vm200 certs]# ls kubelet*

kubelet.csr kubelet-csr.json kubelet-key.pem kubelet.pem

Copy the certificate and private key; note that the private key file mode should be 600

[root@vm92 ~]# cd /opt/kubernetes/cert/
[root@vm92 cert]# scp 192.168.26.200:/opt/certs/kubelet*.pem .
[root@vm92 cert]# scp 192.168.26.200:/opt/certs/ca*.pem .
[root@vm92 cert]# chmod 600 /opt/kubernetes/cert/kubelet-key.pem
[root@vm92 cert]# ls kubelet*
kubelet-key.pem kubelet.pem

Create the kubelet.kubeconfig configuration (on vm91)

(1) set-cluster: define the cluster to connect to; several k8s clusters can be defined

[root@vm91 cert]# cd /opt/kubernetes/conf

[root@vm91 conf]# kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/cert/ca.pem \
  --embed-certs=true \
  --server=https://192.168.26.91:6443 \
  --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

(2) set-credentials: create the user account, that is, the client private key and certificate used to log in; several sets of credentials can be defined

[root@vm91 conf]# kubectl config set-credentials k8s-node \
  --client-certificate=/opt/kubernetes/cert/client.pem \
  --client-key=/opt/kubernetes/cert/client-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

(3) set-context: define the context, that is, which account goes with which cluster

[root@vm91 conf]# kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

(4) use-context: select the context currently in use

[root@vm91 conf]# kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

- Authorize the k8s-node user (on vm91)

Bind the k8s-node user to the cluster role system:node so that k8s-node has the permissions of a worker node.

[root@vm91 conf]# vim k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

Apply the resource manifest and check

[root@vm91 conf]# kubectl apply -f k8s-node.yaml

clusterrolebinding.rbac.authorization.k8s.io/k8s-node created

[root@vm91 conf]# kubectl get clusterrolebinding k8s-node

NAME ROLE AGE

k8s-node ClusterRole/system:node 17s

[root@vm91 conf]# kubectl get clusterrolebinding k8s-node -o yaml

...
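The same binding can also be expressed imperatively, in the style of the `kubectl create clusterrolebinding` command used earlier for the kubelet API. The sketch below only renders the object, using the `--dry-run=client -o yaml` flags that appear elsewhere in this guide; the wrapper name `render_node_binding` is hypothetical.

```shell
# render_node_binding
# Print a ClusterRoleBinding that ties the user k8s-node to the cluster
# role system:node, without creating it in the cluster.
# Drop "--dry-run=client -o yaml" to create the binding directly.
render_node_binding() {
    kubectl create clusterrolebinding k8s-node \
        --clusterrole=system:node \
        --user=k8s-node \
        --dry-run=client -o yaml
}
```

Comparing its output with k8s-node.yaml is a quick way to confirm that both describe the same role and subject.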

Prepare the pause base image

[root@vm92 kubernetes]# docker pull kubernetes/pause

Create the kubelet start script

[root@vm92 kubernetes]# vi /opt/kubernetes/kubelet.sh
#!/bin/sh
/opt/kubernetes/bin/kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 10.26.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file /opt/kubernetes/cert/ca.pem \
  --tls-cert-file /opt/kubernetes/cert/kubelet.pem \
  --tls-private-key-file /opt/kubernetes/cert/kubelet-key.pem \
  --hostname-override 192.168.26.92 \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig /opt/kubernetes/conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image kubernetes/pause:latest \
  --root-dir /data/kubelet

Copy kubelet.kubeconfig to vm92:/opt/kubernetes/conf/

[root@vm92 conf]# scp 192.168.26.91:/opt/kubernetes/conf/kubelet.kubeconfig .

Check the configuration, set permissions, and create the directories

[root@vm92 kubernetes]# ls -l /opt/kubernetes/conf/kubelet.kubeconfig

-rw------- 1 root root 6203 2月 15 16:57 /opt/kubernetes/conf/kubelet.kubeconfig

[root@vm92 kubernetes]# chmod +x /opt/kubernetes/kubelet.sh

[root@vm92 kubernetes]# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

Create the supervisor configuration

[root@vm92 kubernetes]# vi /etc/supervisord.d/kube-kubelet.ini
[program:kube-kubelet-26-92]
command=/opt/kubernetes/kubelet.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

Start the service and check

[root@vm92 kubernetes]# supervisorctl update
kube-kubelet-26-92: added process group
[root@vm92 kubernetes]# supervisorctl status
kube-kubelet-26-92 RUNNING pid 1623, uptime 0:00:38

List the nodes on vm91

[root@vm91 conf]# kubectl get nodes
NAME            STATUS   ROLES    AGE   VERSION
192.168.26.92   Ready    <none>   61s   v1.20.2
[root@vm91 conf]# kubectl get nodes -o wide
NAME            STATUS   ROLES    AGE    VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE         KERNEL-VERSION                 CONTAINER-RUNTIME
192.168.26.92   Ready    <none>   106s   v1.20.2   192.168.26.92   <none>        CentOS Linux 8   4.18.0-240.10.1.el8_3.x86_64   docker://20.10.3

Label the node

[root@vm91 conf]# kubectl label nodes 192.168.26.92 node-role.kubernetes.io/node=
node/192.168.26.92 labeled
[root@vm91 conf]# kubectl get nodes
NAME            STATUS   ROLES   AGE     VERSION
192.168.26.92   Ready    node    4m23s   v1.20.2
[root@vm91 conf]# kubectl label nodes 192.168.26.92 node-role.kubernetes.io/worker=
node/192.168.26.92 labeled
[root@vm91 conf]# kubectl get nodes
NAME            STATUS   ROLES         AGE     VERSION
192.168.26.92   Ready    node,worker   5m42s   v1.20.2

Deploy kube-proxy

Issue the kube-proxy certificate (on vm200)

Create the JSON file for the certificate signing request (CSR)

[root@vm200 ~]# cd /opt/certs

[root@vm200 certs]# vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "sw",

"OU": "cloud"

}

]

}

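Note: the CN here is the user name that Kubernetes sees. The built-in ClusterRoleBinding system:node-proxier binds the user system:kube-proxy to the ClusterRole system:node-proxier, which is why the CN must be exactly system:kube-proxy.

Generate the kube-proxy certificate and private key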

[root@vm200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client

[root@vm200 certs]# ls kube-proxy* -l

-rw-r--r-- 1 root root 1009 2月 15 17:24 kube-proxy-client.csr

-rw------- 1 root root 1675 2月 15 17:24 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1379 2月 15 17:24 kube-proxy-client.pem

-rw-r--r-- 1 root root 269 2月 15 17:22 kube-proxy-csr.json

Copy the certificate and private key; note that the private key file mode should be 600

[root@vm92 ~]# cd /opt/kubernetes/cert/
[root@vm92 cert]# scp 192.168.26.200:/opt/certs/kube-proxy*.pem .
[root@vm92 cert]# ls kube-proxy* -l
-rw------- 1 root root 1675 2月 15 17:28 kube-proxy-client-key.pem
-rw-r--r-- 1 root root 1379 2月 15 17:28 kube-proxy-client.pem

Create the kube-proxy.kubeconfig configuration (on vm91)

[root@vm91 ~]# cd /opt/kubernetes/cert/
[root@vm91 cert]# scp 192.168.26.200:/opt/certs/kube-proxy*.pem .

(1) set-cluster

[root@vm91 cert]# cd /opt/kubernetes/conf/

[root@vm91 conf]# kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/cert/ca.pem \
  --embed-certs=true \
  --server=https://192.168.26.91:6443 \
  --kubeconfig=kube-proxy.kubeconfig

(2) set-credentials

[root@vm91 conf]# kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/cert/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/cert/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

(3) set-context

[root@vm91 conf]# kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

(4) use-context

[root@vm91 conf]# kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

(5) Copy kube-proxy.kubeconfig to the conf directory on vm92

[root@vm91 conf]# scp kube-proxy.kubeconfig 192.168.26.92:/opt/kubernetes/conf/

Create the kube-proxy start script

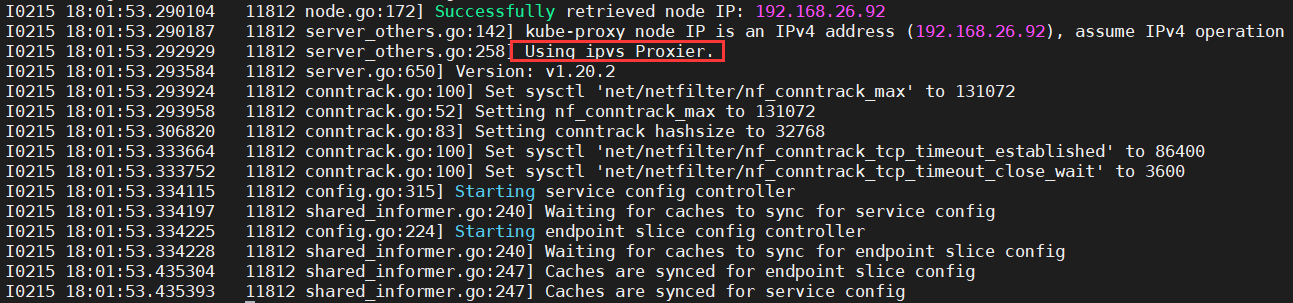

[root@vm92 ~]# cd /opt/kubernetes/
[root@vm92 kubernetes]# vi /opt/kubernetes/kube-proxy.sh
#!/bin/sh
/opt/kubernetes/bin/kube-proxy \
  --cluster-cidr 172.26.0.0/16 \
  --hostname-override 192.168.26.92 \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig /opt/kubernetes/conf/kube-proxy.kubeconfig

Note: kube-proxy has three proxy modes: userspace, iptables, and ipvs, of which ipvs performs best. ipvs is selected here; if no mode is set, iptables is used.

Check the configuration, set permissions, and create the log directory

[root@vm92 kubernetes]# ls -l /opt/kubernetes/conf/|grep kube-proxy
-rw------- 1 root root 6223 2月 15 17:44 kube-proxy.kubeconfig
[root@vm92 kubernetes]# chmod +x /opt/kubernetes/kube-proxy.sh
[root@vm92 kubernetes]# mkdir -p /data/logs/kubernetes/kube-proxy

Load the ipvs modules

[root@vm92 kubernetes]# lsmod |grep ip_vs
[root@vm92 kubernetes]# vi /root/ipvs.sh
#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done
[root@vm92 kubernetes]# sh /root/ipvs.sh
[root@vm92 kubernetes]# lsmod |grep ip_vs
ip_vs_wrr 16384 0
ip_vs_wlc 16384 0
ip_vs_sh 16384 0
ip_vs_sed 16384 0
ip_vs_rr 16384 0
ip_vs_pe_sip 16384 0
nf_conntrack_sip 32768 1 ip_vs_pe_sip
ip_vs_ovf 16384 0
ip_vs_nq 16384 0
ip_vs_lc 16384 0
ip_vs_lblcr 16384 0
ip_vs_lblc 16384 0
ip_vs_ftp 16384 0
ip_vs_fo 16384 0
ip_vs_dh 16384 0
ip_vs 172032 28 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_ovf,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_pe_sip,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp
nf_nat 45056 3 ipt_MASQUERADE,nft_chain_nat,ip_vs_ftp
nf_conntrack 172032 6 xt_conntrack,nf_nat,ipt_MASQUERADE,nf_conntrack_sip,nf_conntrack_netlink,ip_vs
nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs
libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs

Create the supervisor configuration

[root@vm92 kubernetes]# vi /etc/supervisord.d/kube-proxy.ini
[program:kube-proxy-26-92]
command=/opt/kubernetes/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

Start the service and check

[root@vm92 kubernetes]# vi /etc/supervisord.d/kube-proxy.ini
[root@vm92 kubernetes]# supervisorctl update
kube-proxy-26-92: added process group
[root@vm92 kubernetes]# supervisorctl status
kube-kubelet-26-92 RUNNING pid 1623, uptime 0:53:52
kube-proxy-26-92 STARTING
[root@vm92 kubernetes]# supervisorctl status
kube-kubelet-26-92 RUNNING pid 1623, uptime 0:54:29
kube-proxy-26-92 RUNNING pid 11811, uptime 0:00:46
[root@vm92 kubernetes]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.26.0.1:443 nq
  -> 192.168.26.91:6443 Masq 1 0 0
[root@vm92 kubernetes]# cat /data/logs/kubernetes/kube-proxy/proxy.stdout.log
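In the transcript above kube-proxy stays in STARTING until it has been up for startsecs=30 seconds, so `supervisorctl status` had to be run twice. A small polling helper can do the waiting instead. This is a sketch only: the name `wait_running`, the 2-second interval, and the 60-second default timeout are arbitrary choices, not part of the original setup.

```shell
# wait_running PROGRAM [TIMEOUT_SECONDS]
# Poll `supervisorctl status PROGRAM` every 2 seconds until the second
# column reads RUNNING. Returns 0 on success, 1 if the timeout
# (default 60 seconds) expires first.
wait_running() {
    prog=$1
    timeout=${2:-60}
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        state=$(supervisorctl status "$prog" | awk '{ print $2 }')
        [ "$state" = "RUNNING" ] && return 0
        sleep 2
        waited=$((waited + 2))
    done
    return 1
}
```

Usage: `supervisorctl update && wait_running kube-proxy-26-92 && ipvsadm -Ln`.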

List the services on vm91

[root@vm91 conf]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.26.0.1    <none>        443/TCP   8h

Verification test

Unless stated otherwise, all steps are run on vm91

Check whether the node is schedulable

[root@vm91 kubernetes]# kubectl describe nodes 192.168.26.92
Name: 192.168.26.92
Roles: node,worker
Labels: beta.kubernetes.io/arch=amd64
        beta.kubernetes.io/os=linux
        kubernetes.io/arch=amd64
        kubernetes.io/hostname=192.168.26.92
        kubernetes.io/os=linux
        node-role.kubernetes.io/node=
        node-role.kubernetes.io/worker=
Annotations: volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp: Mon, 15 Feb 2021 17:08:13 +0800
Taints: node.kubernetes.io/not-ready:NoSchedule
Unschedulable: false
Lease:
  HolderIdentity: 192.168.26.92
  AcquireTime: <unset>
  RenewTime: Tue, 16 Feb 2021 09:36:33 +0800
Conditions:
  Type  Status  LastHeartbeatTime  LastTransitionTime  Reason  Message
  ----  ------  -----------------  ------------------  ------  -------
  MemoryPressure  False  Tue, 16 Feb 2021 09:33:23 +0800  Mon, 15 Feb 2021 17:08:12 +0800  KubeletHasSufficientMemory  kubelet has sufficient memory available
  DiskPressure  False  Tue, 16 Feb 2021 09:33:23 +0800  Mon, 15 Feb 2021 17:08:12 +0800  KubeletHasNoDiskPressure  kubelet has no disk pressure
  PIDPressure  False  Tue, 16 Feb 2021 09:33:23 +0800  Mon, 15 Feb 2021 17:08:12 +0800  KubeletHasSufficientPID  kubelet has sufficient PID available
  Ready  True  Tue, 16 Feb 2021 09:33:23 +0800  Tue, 16 Feb 2021 09:28:21 +0800  KubeletReady  kubelet is posting ready status
Addresses:
  InternalIP: 192.168.26.92
  Hostname: 192.168.26.92
Capacity:
  cpu: 2
  ephemeral-storage: 100613124Ki
  hugepages-1Gi: 0
  hugepages-2Mi: 0
  memory: 3995584Ki
  pods: 110
Allocatable:
  cpu: 2
  ephemeral-storage: 92725054925
  hugepages-1Gi: 0
  hugepages-2Mi: 0
  memory: 3893184Ki
  pods: 110
System Info:
  Machine ID: 2f3cbfff855846afa2c1bb476b48eaa5
  System UUID: 16cb4d56-fbc5-b989-ec6b-8a72965a2350
  Boot ID: 2caa418b-7d7f-46eb-a589-ea9bdddf6bd9
  Kernel Version: 4.18.0-240.10.1.el8_3.x86_64
  OS Image: CentOS Linux 8
  Operating System: linux
  Architecture: amd64
  Container Runtime Version: docker://20.10.3
  Kubelet Version: v1.20.2
  Kube-Proxy Version: v1.20.2
Non-terminated Pods: (0 in total)
  Namespace  Name  CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
  ---------  ----  ------------  ----------  ---------------  -------------  ---
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource  Requests  Limits
  --------  --------  ------
  cpu  0 (0%)  0 (0%)
  memory  0 (0%)  0 (0%)
  ephemeral-storage  0 (0%)  0 (0%)
  hugepages-1Gi  0 (0%)  0 (0%)
  hugepages-2Mi  0 (0%)  0 (0%)
Events:
  Type  Reason  Age  From  Message
  ----  ------  ----  ----  -------
  Normal  Starting  19m  kubelet  Starting kubelet.
  Normal  NodeHasSufficientMemory  19m  kubelet  Node 192.168.26.92 status is now: NodeHasSufficientMemory
  Normal  NodeHasNoDiskPressure  19m  kubelet  Node 192.168.26.92 status is now: NodeHasNoDiskPressure
  Normal  NodeHasSufficientPID  19m  kubelet  Node 192.168.26.92 status is now: NodeHasSufficientPID
  Normal  NodeAllocatableEnforced  19m  kubelet  Updated Node Allocatable limit across pods
  Normal  Starting  18m  kube-proxy  Starting kube-proxy.
  Normal  NodeAllocatableEnforced  8m29s  kubelet  Updated Node Allocatable limit across pods
  Normal  NodeHasNoDiskPressure  8m27s (x3 over 8m30s)  kubelet  Node 192.168.26.92 status is now: NodeHasNoDiskPressure
  Normal  NodeHasSufficientPID  8m27s (x3 over 8m30s)  kubelet  Node 192.168.26.92 status is now: NodeHasSufficientPID
  Normal  NodeHasSufficientMemory  8m27s (x3 over 8m30s)  kubelet  Node 192.168.26.92 status is now: NodeHasSufficientMemory
  Warning  Rebooted  8m27s  kubelet  Node 192.168.26.92 has been rebooted, boot id: 2caa418b-7d7f-46eb-a589-ea9bdddf6bd9
  Normal  NodeNotReady  8m27s  kubelet  Node 192.168.26.92 status is now: NodeNotReady
  Normal  Starting  8m26s  kube-proxy  Starting kube-proxy.
  Normal  NodeReady  8m17s  kubelet  Node 192.168.26.92 status is now: NodeReady

Taints: node.kubernetes.io/not-ready:NoSchedule means the node is not schedulable; the taint has to be removed so that pods can be scheduled onto the node.

[root@vm91 kubernetes]# kubectl taint node 192.168.26.92 node.kubernetes.io/not-ready:NoSchedule-
node/192.168.26.92 untainted

Pull the image (on vm92)

[root@vm92 kubernetes]# docker pull nginx:1.7.9

Create a pod and a service for testing (on vm91)

[root@vm91 ~]# mkdir /home/test
[root@vm91 ~]# cd /home/test
[root@vm91 test]# kubectl run pod-ng --image=nginx:1.7.9 --dry-run=client -o yaml > pod-ng.yaml
[root@vm91 test]# cat pod-ng.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-ng
  name: pod-ng
spec:
  containers:
  - image: nginx:1.7.9
    imagePullPolicy: IfNotPresent
    name: pod-ng
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

The image pull policy line, imagePullPolicy: IfNotPresent, was added to the generated file by hand.

[root@vm91 test]# kubectl apply -f pod-ng.yaml
pod/pod-ng created
[root@vm91 test]# kubectl get pod
NAME     READY   STATUS    RESTARTS   AGE
pod-ng   1/1     Running   0          5s
[root@vm91 test]# kubectl get pod -o wide
NAME     READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
pod-ng   1/1     Running   0          2m29s   172.26.92.2   192.168.26.92   <none>           <none>
[root@vm91 test]# kubectl expose pod pod-ng --name=svc-ng --port=80
service/svc-ng exposed
[root@vm91 test]# kubectl get svc svc-ng
NAME     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
svc-ng   ClusterIP   10.26.115.27   <none>        80/TCP    3s

Access the pod (container) from vm92:

[root@vm92 ~]# curl -I 172.26.92.2
HTTP/1.1 200 OK
Server: nginx/1.7.9
Date: Tue, 16 Feb 2021 02:28:32 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 23 Dec 2014 16:25:09 GMT
Connection: keep-alive
ETag: "54999765-264"
Accept-Ranges: bytes

Because no k8s network plugin has been deployed yet, this pod cannot be reached from other nodes or from outside the cluster. The network plugin and other add-ons will be deployed later.

At this point a simple k8s cluster has been deployed successfully.

210216, Guangzhou

10. Deploy vm91 as a k8s-node as well

Deploy kubelet

Copy the certificate and private key; note that the private key file mode should be 600

[root@vm91 ~]# cd /opt/kubernetes/cert/
[root@vm91 cert]# scp 192.168.26.200:/opt/certs/kubelet*.pem .
[root@vm91 cert]# chmod 600 /opt/kubernetes/cert/kubelet-key.pem
[root@vm91 cert]# ls kubelet*
kubelet-key.pem kubelet.pem

Prepare the pause base image

[root@vm91 kubernetes]# docker pull kubernetes/pause
...
[root@vm91 cert]# docker images | grep pause
kubernetes/pause latest f9d5de079539 6 years ago 240kB

Create the kubelet start script

[root@vm91 kubernetes]# vi /opt/kubernetes/kubelet.sh
#!/bin/sh
/opt/kubernetes/bin/kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 10.26.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file /opt/kubernetes/cert/ca.pem \
  --tls-cert-file /opt/kubernetes/cert/kubelet.pem \
  --tls-private-key-file /opt/kubernetes/cert/kubelet-key.pem \
  --hostname-override 192.168.26.91 \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig /opt/kubernetes/conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image kubernetes/pause:latest \
  --root-dir /data/kubelet

Check the configuration, set permissions, and create the log directory

[root@vm91 kubernetes]# ls -l /opt/kubernetes/conf/kubelet.kubeconfig

-rw------- 1 root root 6203 2月 15 16:36 /opt/kubernetes/conf/kubelet.kubeconfig

[root@vm91 kubernetes]# chmod +x /opt/kubernetes/kubelet.sh

[root@vm91 kubernetes]# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

Create the supervisor configuration

[root@vm91 kubernetes]# vi /etc/supervisord.d/kube-kubelet.ini
[program:kube-kubelet-26-91]
command=/opt/kubernetes/kubelet.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

Start the service and check

[root@vm91 kubernetes]# supervisorctl update
kube-kubelet-26-91: added process group
[root@vm91 kubernetes]# supervisorctl status
etcd-server-26-91 RUNNING pid 998, uptime 0:21:35
kube-apiserver-26-91 RUNNING pid 999, uptime 0:21:35
kube-controller-manager-26-91 RUNNING pid 1000, uptime 0:21:35
kube-kubelet-26-91 RUNNING pid 1424, uptime 0:00:31
kube-scheduler-26-91 RUNNING pid 1001, uptime 0:21:35

After a short wait, the state changes from STARTING to RUNNING.

List the cluster nodes

[root@vm91 kubernetes]# kubectl get node
NAME            STATUS   ROLES         AGE     VERSION
192.168.26.91   Ready    <none>        2m26s   v1.20.2
192.168.26.92   Ready    node,worker   6d18h   v1.20.2
[root@vm91 kubernetes]# kubectl label nodes 192.168.26.91 node-role.kubernetes.io/master=
node/192.168.26.91 labeled
[root@vm91 kubernetes]# kubectl label nodes 192.168.26.91 node-role.kubernetes.io/worker=
node/192.168.26.91 labeled
[root@vm91 kubernetes]# kubectl get node
NAME            STATUS   ROLES           AGE     VERSION
192.168.26.91   Ready    master,worker   5m27s   v1.20.2
192.168.26.92   Ready    node,worker     6d18h   v1.20.2

Deploy kube-proxy

Copy the certificate and private key; note that the private key file mode should be 600

[root@vm91 ~]# cd /opt/kubernetes/cert/
[root@vm91 cert]# scp 192.168.26.200:/opt/certs/kube-proxy*.pem .
[root@vm91 cert]# ls kube-proxy* -l
-rw------- 1 root root 1675 2月 15 17:34 kube-proxy-client-key.pem
-rw-r--r-- 1 root root 1379 2月 15 17:34 kube-proxy-client.pem

Create the kube-proxy start script

[root@vm91 ~]# cd /opt/kubernetes/
[root@vm91 kubernetes]# vi /opt/kubernetes/kube-proxy.sh
#!/bin/sh
/opt/kubernetes/bin/kube-proxy \
  --cluster-cidr 172.26.0.0/16 \
  --hostname-override 192.168.26.91 \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig /opt/kubernetes/conf/kube-proxy.kubeconfig

Check the configuration, set permissions, and create the log directory

[root@vm91 kubernetes]# ls -l /opt/kubernetes/conf/|grep kube-proxy

-rw------- 1 root root 6223 2月 15 17:42 kube-proxy.kubeconfig

[root@vm91 kubernetes]# chmod +x /opt/kubernetes/kube-proxy.sh

[root@vm91 kubernetes]# mkdir -p /data/logs/kubernetes/kube-proxy

Load the ipvs modules

[root@vm91 kubernetes]# lsmod |grep ip_vs
[root@vm91 kubernetes]# vi /root/ipvs.sh
#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done
[root@vm91 kubernetes]# sh /root/ipvs.sh
[root@vm91 kubernetes]# lsmod |grep ip_vs
ip_vs_wrr 16384 0
ip_vs_wlc 16384 0
ip_vs_sh 16384 0
ip_vs_sed 16384 0
ip_vs_rr 16384 0
ip_vs_pe_sip 16384 0
nf_conntrack_sip 32768 1 ip_vs_pe_sip
ip_vs_ovf 16384 0
ip_vs_nq 16384 0
ip_vs_lc 16384 0
ip_vs_lblcr 16384 0
ip_vs_lblc 16384 0
ip_vs_ftp 16384 0
ip_vs_fo 16384 0
ip_vs_dh 16384 0
ip_vs 172032 28 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_ovf,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_pe_sip,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp
nf_nat 45056 3 ipt_MASQUERADE,nft_chain_nat,ip_vs_ftp
nf_conntrack 172032 6 xt_conntrack,nf_nat,ipt_MASQUERADE,nf_conntrack_sip,nf_conntrack_netlink,ip_vs
nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs
libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs

Create the supervisor configuration

[root@vm91 kubernetes]# vi /etc/supervisord.d/kube-proxy.ini
[program:kube-proxy-26-91]
command=/opt/kubernetes/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

Start the service and check

[root@vm91 kubernetes]# supervisorctl update kube-proxy-26-91: added process group [root@vm91 kubernetes]# supervisorctl status etcd-server-26-91 RUNNING pid 998, uptime 0:39:53 kube-apiserver-26-91 RUNNING pid 999, uptime 0:39:53 kube-controller-manager-26-91 RUNNING pid 1000, uptime 0:39:53 kube-kubelet-26-91 RUNNING pid 1424, uptime 0:18:49 kube-proxy-26-91 RUNNING pid 5071, uptime 0:00:33 kube-scheduler-26-91 RUNNING pid 1001, uptime 0:39:53 [root@vm91 kubernetes]# ipvsadm -Ln IP Virtual Server version 1.2.1 (size=4096) Prot LocalAddress:Port Scheduler Flags -> RemoteAddress:Port Forward Weight ActiveConn InActConn TCP 10.26.0.1:443 nq -> 192.168.26.91:6443 Masq 1 0 0 TCP 10.26.115.27:80 nq注:查看并删除污点

    Taints: node.kubernetes.io/not-ready:NoSchedule
    [root@vm91 kubernetes]# kubectl taint node 192.168.26.91 node.kubernetes.io/not-ready:NoSchedule-
    node/192.168.26.91 untainted

Verification tests

Create a Deployment: nginx-dep.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx179
            image: nginx:1.7.9
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80

    [root@vm91 test]# kubectl apply -f nginx-dep.yaml
    deployment.apps/nginx-deployment created
    [root@vm91 test]# kubectl get deployments.apps
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   2/2     2            2           7s
    [root@vm91 test]# kubectl get pod -o wide
    NAME                                READY   STATUS    RESTARTS   AGE   IP            NODE            NOMINATED NODE   READINESS GATES
    nginx-deployment-58c5bfcf99-gmlvt   1/1     Running   0          20m   172.26.91.2   192.168.26.91   <none>           <none>
    nginx-deployment-58c5bfcf99-sqkz6   1/1     Running   0          20m   172.26.92.2   192.168.26.92   <none>           <none>

Two pods were created, one scheduled on vm91 and the other on vm92.

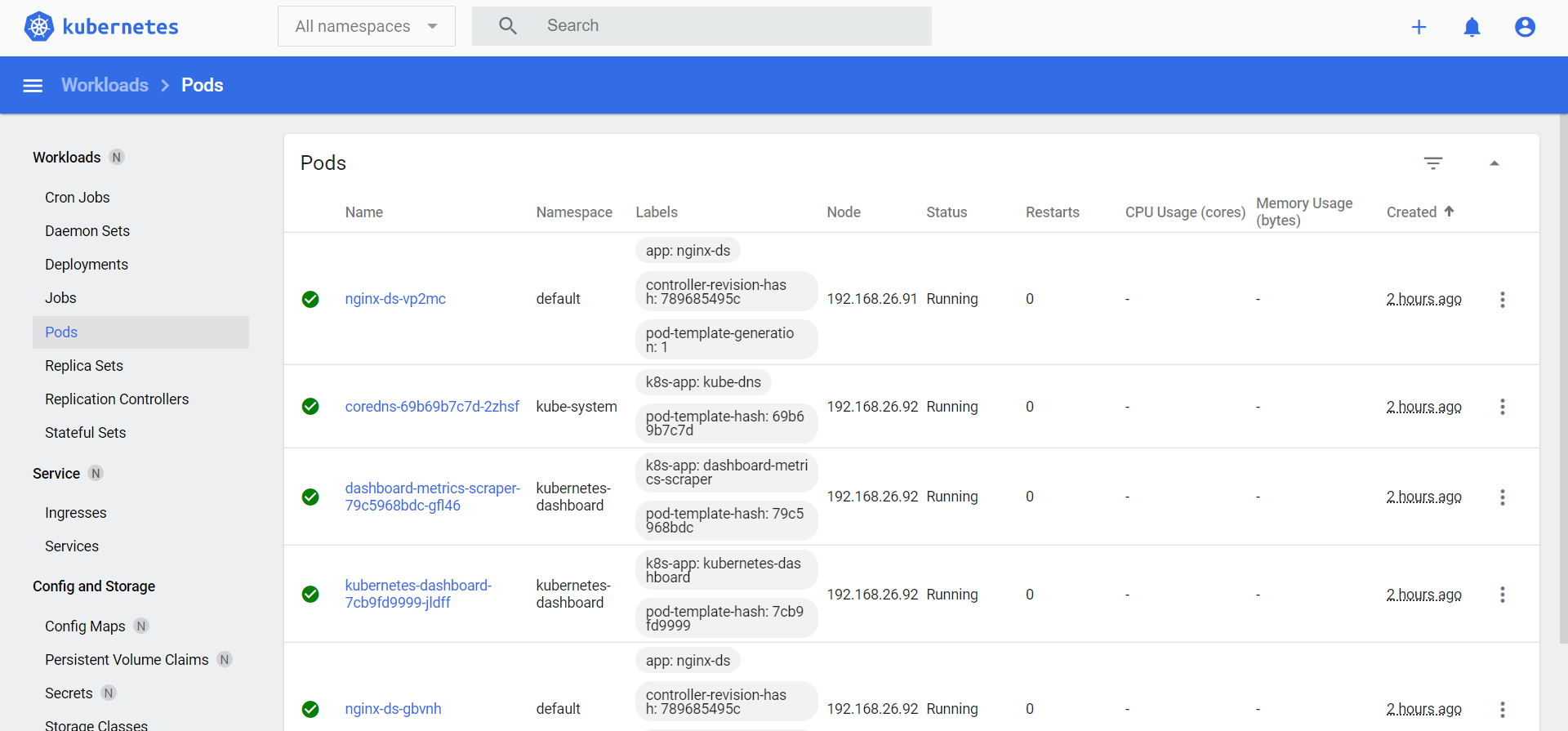
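
To confirm the spread without reading the table by eye, the NODE column can be tallied. The following is only a minimal sketch: `count_pods_per_node` is a hypothetical helper name, and it assumes the `kubectl get pod -o wide` column layout shown above, where NODE is the 7th whitespace-separated field. The sample data is copied from that output so the sketch runs without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: tally pods per node from `kubectl get pod -o wide` output on stdin.
# Assumes NODE is the 7th whitespace-separated field, as in the output above.
count_pods_per_node() {
    awk 'NR > 1 { count[$7]++ } END { for (n in count) print n, count[n] }' | sort
}

# Sample rows copied from the output above.
sample='NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-58c5bfcf99-gmlvt 1/1 Running 0 20m 172.26.91.2 192.168.26.91 <none> <none>
nginx-deployment-58c5bfcf99-sqkz6 1/1 Running 0 20m 172.26.92.2 192.168.26.92 <none> <none>'

printf '%s\n' "$sample" | count_pods_per_node
```

On a live cluster the same function could be fed directly: `kubectl get pod -o wide | count_pods_per_node`.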

Create a DaemonSet and a Service

[root@vm91 test]# vi nginx-ds.yaml #设置`type: NodePort`,会随机生成一个port```yaml apiVersion: v1 kind: Service metadata: name: nginx-svc labels: app: nginx-ds spec: type: NodePort selector: app: nginx-ds ports:

- name: http port: 80 targetPort: 80

apiVersion: apps/v1 kind: DaemonSet metadata: name: nginx-ds labels: addonmanager.kubernetes.io/mode: Reconcile spec: selector: matchLabels: app: nginx-ds template: metadata: labels: app: nginx-ds spec: containers:

- name: my-nginx

image: nginx:1.7.9

imagePullPolicy: IfNotPresent

ports:

- containerPort: 80

```shell

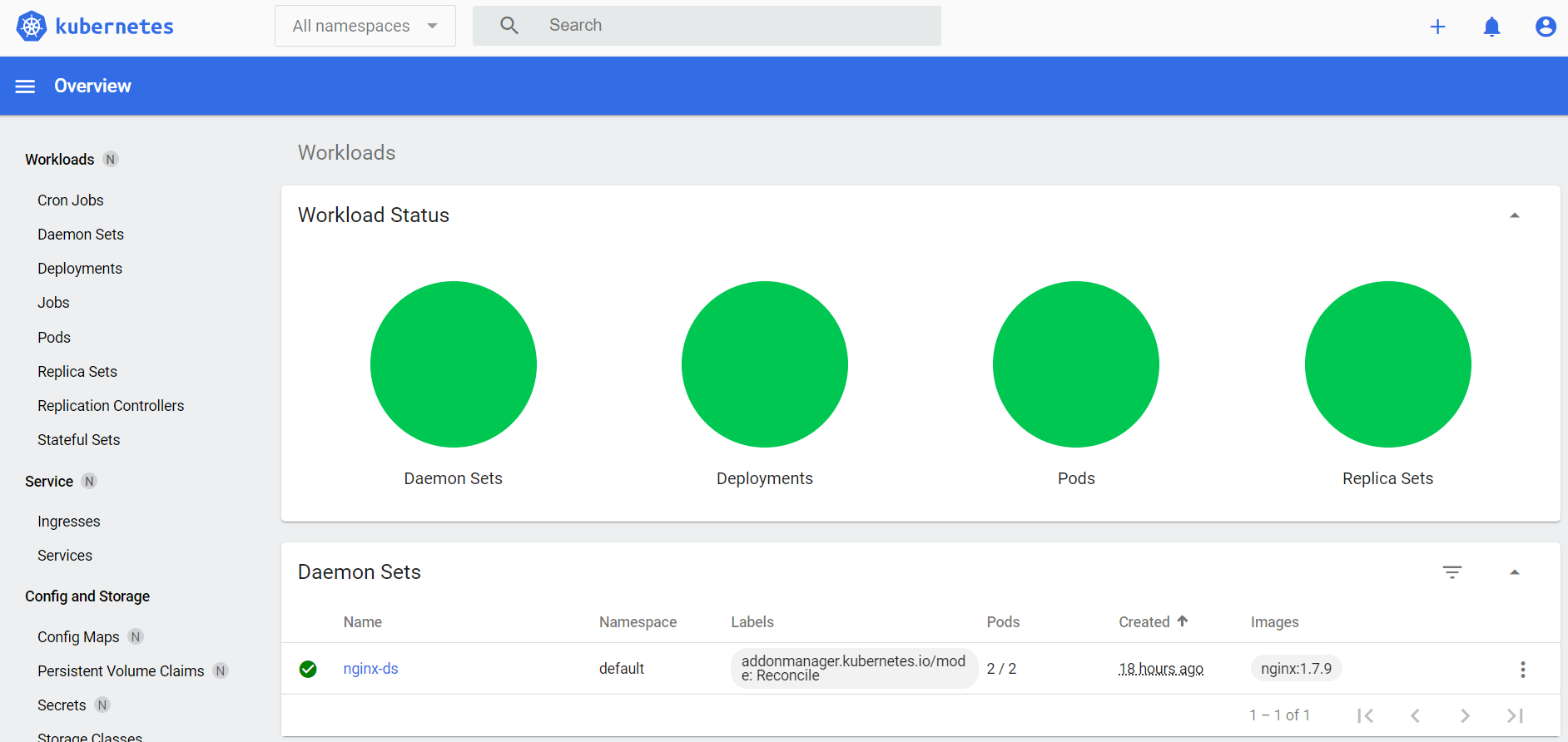

[root@vm91 test]# kubectl apply -f nginx-ds.yaml

service/nginx-svc created

daemonset.apps/nginx-ds created

[root@vm91 test]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-8msqs 1/1 Running 0 10s 172.26.92.2 192.168.26.92 <none> <none>

nginx-ds-q9jvl 1/1 Running 0 10s 172.26.91.2 192.168.26.91 <none> <none>

[root@vm91 test]# kubectl get svc -o wide | grep nginx-svc

nginx-svc NodePort 10.26.16.252 <none> 80:3027/TCP 78s app=nginx-ds

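At this point, test connectivity between nodes and pods that live on different nodes. Both `ping` and `curl -I` fail in the following cases (in this context "pod" and "container" refer to the same thing):

- from a node to a pod on another node

- from a pod to another node

- from a pod to a pod on another node

A CNI network plugin is therefore needed to connect pods and nodes across the cluster.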
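
The first of these checks (node to a pod on another node) can be listed mechanically. The sketch below is a dry run under stated assumptions: `print_cross_node_checks` is a hypothetical helper, the `nodeIP=podIP` pairs are taken from the `kubectl get pod -o wide` output above, and the probes are only printed, not executed. The pod-originated checks would additionally need `kubectl exec` and are not covered here.

```shell
#!/bin/sh
# Hypothetical helper: for every ordered pair of distinct nodes, print the probes
# that test reachability from the source node to the pod on the other node.
# Arguments: "nodeIP=podIP" pairs. Commands are printed only (dry run).
print_cross_node_checks() {
    for src in "$@"; do
        for dst in "$@"; do
            if [ "$src" != "$dst" ]; then
                src_node=${src%%=*}   # part before "=": the node IP
                dst_pod=${dst#*=}     # part after "=": the remote pod IP
                echo "on $src_node: ping -c 2 $dst_pod"
                echo "on $src_node: curl -I http://$dst_pod"
            fi
        done
    done
}

# Node and pod IPs from the output above.
print_cross_node_checks 192.168.26.91=172.26.91.2 192.168.26.92=172.26.92.2
```

Running each printed command on the named node before and after the CNI plugin is installed gives a simple before/after comparison.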

[root@vm91 test]# vi nginx-svc.yaml  # create a Service with a fixed port: `nodePort: 26133`

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc26133
      labels:
        app: nginx-ds
    spec:
      type: NodePort
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 26133

    [root@vm91 test]# kubectl apply -f nginx-svc.yaml
    service/nginx-svc26133 created
    [root@vm91 test]# kubectl get svc | grep 26133
    nginx-svc26133   NodePort   10.26.26.78   <none>   80:26133/TCP   39s

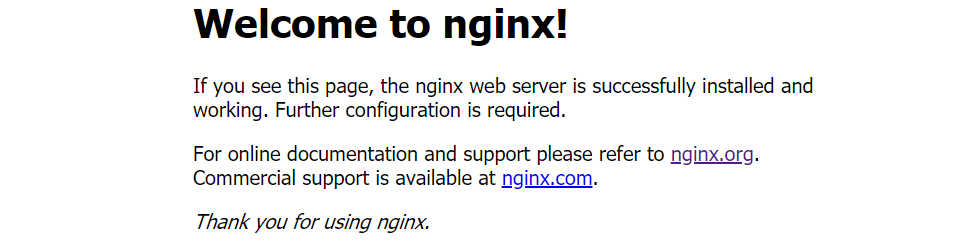

Open [http://192.168.26.91:26133/](http://192.168.26.91:26133/) or [http://192.168.26.92:26133/](http://192.168.26.92:26133/) in a browser.

This shows that the service is exposed through nodeIP:nodePort.
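
As a small illustration of the nodeIP:nodePort pattern, the sketch below extracts the node port from a PORT(S) value such as `80:26133/TCP` and prints one URL per node. `node_port_of` is a hypothetical helper name; the node IPs and the PORT(S) value are the ones shown above.

```shell
#!/bin/sh
# Hypothetical helper: pull the node port out of a PORT(S) value such as "80:26133/TCP".
node_port_of() {
    ports=$1
    ports=${ports#*:}      # drop the service port and the colon, leaving "26133/TCP"
    echo "${ports%%/*}"    # drop the protocol suffix, leaving "26133"
}

# PORT(S) value from the `kubectl get svc` output above; node IPs from the node list.
port=$(node_port_of "80:26133/TCP")
for node in 192.168.26.91 192.168.26.92; do
    echo "http://$node:$port/"
done
```

Both node ports seen in this section (3027 and 26133) lie outside Kubernetes' default NodePort range of 30000-32767, which suggests that this cluster's kube-apiserver was started with a wider `--service-node-port-range`. That is an inference from the output, not something stated in this section.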

[root@vm91 test]# vi nginx-svc2.yaml  # without `type: NodePort`

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc2
      labels:
        app: nginx-ds
    spec:
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80

    [root@vm91 test]# kubectl apply -f nginx-svc2.yaml
    service/nginx-svc2 created
    [root@vm91 test]# kubectl get svc |grep nginx-svc2
    nginx-svc2   ClusterIP   10.26.35.138   <none>   80/TCP   51s

nginx-svc2 is a ClusterIP service: it is reachable only from inside the cluster (for example from pods) and does not expose the service externally.

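11. Core add-on: the Flannel CNI network plugin

Unless stated otherwise, all steps are performed on both vm91 and vm92.

- Download the software

Use version v0.13.0 and download it to vm200:/opt/soft/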

[root@vm200 ~]# cd /opt/soft/

[root@vm200 soft]# wget https://github.com/flannel-io/flannel/releases/download/v0.13.0/flannel-v0.13.0-linux-amd64.tar.gz

...

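Copy the archive from vm200:/opt/soft/, extract it, and create a symlink:

    ]# cd /opt/src
    ]# tar -zxvf flannel-v0.13.0-linux-amd64.tar.gz
    ]# mv flanneld flannel-v0.13.0
    ]# ln -s /opt/src/flannel-v0.13.0 /opt/kubernetes/flannel

Create the configuration and the startup script:

    ]# vi /opt/kubernetes/conf/subnet.env
    FLANNEL_NETWORK=172.26.0.0/16
    FLANNEL_SUBNET=172.26.91.1/24
    FLANNEL_MTU=1500
    FLANNEL_IPMASQ=false

Note: the configuration differs slightly on each host in the flannel cluster; adjust it when deploying other nodes.

On vm92, change: FLANNEL_SUBNET=172.26.92.1/24

Configure etcd with the host-gw backend. This only needs to be done on one etcd machine.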

]# ETCDCTL_API=2 /opt/etcd/bin/etcdctl set /coreos.com/network/config '{"Network": "172.26.0.0/16", "Backend": {"Type": "host-gw"}}'

...

]# ETCDCTL_API=2 /opt/etcd/bin/etcdctl get /coreos.com/network/config  # check
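
The `Network` value written to etcd and the per-node `FLANNEL_SUBNET` values in `subnet.env` have to agree: each node's /24 should sit inside the cluster-wide /16. A minimal sketch of that check follows. `ip_to_int` and `in_network` are hypothetical helper names, and the addresses are the ones used in this section; it uses plain shell arithmetic and does not talk to etcd.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1              # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if address $1 lies inside the CIDR network $2.
in_network() {
    addr=$(ip_to_int "$1")
    base=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( base & mask )) ]
}

# Network from the etcd config above; subnets from the two subnet.env files.
network=172.26.0.0/16
for subnet in 172.26.91.1/24 172.26.92.1/24; do
    if in_network "${subnet%/*}" "$network"; then
        echo "$subnet is inside $network"
    else
        echo "$subnet is OUTSIDE $network"
    fi
done
```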

Create the startup script

    ]# vi /opt/kubernetes/flannel-startup.sh
    #!/bin/sh
    /opt/kubernetes/flannel \
      --public-ip=192.168.26.91 \
      --etcd-endpoints=https://192.168.26.91:2379 \
      --etcd-keyfile=/opt/kubernetes/cert/client-key.pem \
      --etcd-certfile=/opt/kubernetes/cert/client.pem \
      --etcd-cafile=/opt/kubernetes/cert/ca.pem \
      --iface=ens32 \
      --subnet-file=/opt/kubernetes/conf/subnet.env \
      --healthz-port=2401

Note: the startup script differs slightly on each host in the flannel cluster; adjust it when deploying other nodes. `--public-ip` is the local host's IP and `--iface` is the host's external network interface. On vm92, change: --public-ip=192.168.26.92
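
Because only `--public-ip` (and possibly `--iface`) changes between hosts, the script could be rendered from a template rather than edited by hand. The sketch below is one way to do that, not part of the original procedure: `render_flannel_startup` is a hypothetical helper, and every flag value other than the two parameters is copied from the script above.

```shell
#!/bin/sh
# Hypothetical helper: print the flannel startup script for the node whose IP is $1.
# $2 is the network interface; it defaults to ens32 as in the script above.
render_flannel_startup() {
    node_ip=$1
    iface=${2:-ens32}
    # "\\" inside an unquoted here-document yields a single literal backslash.
    cat <<EOF
#!/bin/sh
/opt/kubernetes/flannel \\
  --public-ip=${node_ip} \\
  --etcd-endpoints=https://192.168.26.91:2379 \\
  --etcd-keyfile=/opt/kubernetes/cert/client-key.pem \\
  --etcd-certfile=/opt/kubernetes/cert/client.pem \\
  --etcd-cafile=/opt/kubernetes/cert/ca.pem \\
  --iface=${iface} \\
  --subnet-file=/opt/kubernetes/conf/subnet.env \\
  --healthz-port=2401
EOF
}

# Print the variant for vm92.
render_flannel_startup 192.168.26.92
```

On vm92 the output could then be redirected to `/opt/kubernetes/flannel-startup.sh` before the `chmod +x` step.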

Check the configuration, set permissions, and create the log directory

]# chmod +x /opt/kubernetes/flannel-startup.sh

]# mkdir -p /data/logs/flanneld

Create the supervisor configuration

    ]# vi /etc/supervisord.d/flannel.ini
    [program:flanneld-26-91]
    command=/opt/kubernetes/flannel-startup.sh  ; the program (relative uses PATH, can take args)
    numprocs=1  ; number of processes copies to start (def 1)
    directory=/opt/kubernetes  ; directory to cwd to before exec (def no cwd)
    autostart=true  ; start at supervisord start (default: true)
    autorestart=true  ; restart at unexpected quit (default: true)
    startsecs=30  ; number of secs prog must stay running (def. 1)
    startretries=3  ; max # of serial start failures (default 3)
    exitcodes=0,2  ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT  ; signal used to kill process (default TERM)
    stopwaitsecs=10  ; max num secs to wait b4 SIGKILL (default 10)
    user=root  ; setuid to this UNIX account to run the program
    redirect_stderr=true  ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/flanneld/flanneld.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB  ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=5  ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB  ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false  ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

On vm92, change: [program:flanneld-26-92]

Start the service and check (the first status output is from vm91, the second from vm92):

    ]# supervisorctl update
    ]# supervisorctl status | grep flannel
    flanneld-26-91   RUNNING   pid 22052, uptime 0:05:01
    ]# supervisorctl status | grep flannel
    flanneld-26-92   RUNNING   pid 21122, uptime 0:00:42

View the routes

vm91:

    [root@vm91 kubernetes]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.26.2    0.0.0.0         UG    100    0        0 ens32
    172.26.91.0     0.0.0.0         255.255.255.0   U     0      0        0 docker0
    172.26.92.0     192.168.26.92   255.255.255.0   UG    0      0        0 ens32
    192.168.26.0    0.0.0.0         255.255.255.0   U     100    0        0 ens32

Note the route that was added: 172.26.92.0 192.168.26.92 255.255.255.0 UG 0 0 0 ens32

vm92:

    [root@vm92 kubernetes]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.26.2    0.0.0.0         UG    100    0        0 ens32
    172.26.91.0     192.168.26.91   255.255.255.0   UG    0      0        0 ens32
    172.26.92.0     0.0.0.0         255.255.255.0   U     0      0        0 docker0
    192.168.26.0    0.0.0.0         255.255.255.0   U     100    0        0 ens32

Note the route that was added: 172.26.91.0 192.168.26.91 255.255.255.0 UG 0 0 0 ens32

As the two tables show, with the host-gw backend flanneld essentially just adds a route on each node to every other node's pod subnet.
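
To make that concrete, the sketch below prints the equivalent `ip route add` commands that host-gw amounts to on one node. It is an illustration only: `print_host_gw_routes` is a hypothetical helper, the commands are printed rather than executed, and the addresses come from the routing tables above. Since the next hop is the peer node's own IP, this backend relies on the nodes being directly reachable on the same layer-2 network.

```shell
#!/bin/sh
# Hypothetical helper: print the route a node needs for each *other* node under host-gw.
# Arguments: local node IP, outgoing interface, then "nodeIP=podCIDR" pairs.
print_host_gw_routes() {
    self=$1
    dev=$2
    shift 2
    for pair in "$@"; do
        peer=${pair%%=*}   # peer node IP, used as the gateway
        cidr=${pair#*=}    # that peer's pod subnet
        if [ "$peer" != "$self" ]; then
            echo "ip route add $cidr via $peer dev $dev"
        fi
    done
}

# Dry run for vm91.
print_host_gw_routes 192.168.26.91 ens32 \
    192.168.26.91=172.26.91.0/24 192.168.26.92=172.26.92.0/24
```

The printed line corresponds to the `172.26.92.0 192.168.26.92` entry in vm91's routing table.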

12. Core add-on: CoreDNS service discovery

Get the resource manifest from https://github.com/kubernetes/kubernetes/blob/v1.20.2/cluster/addons/dns/coredns/coredns.yaml.base and copy it from the web page into a file:

coredns.yaml

    # __MACHINE_GENERATED_WARNING__
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: Reconcile
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: EnsureExists
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      Corefile: |
        .:53 {
            errors
            health {
                lameduck 5s
            }
            ready
            kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa {
                pods insecure
                fallthrough in-addr.arpa ip6.arpa
                ttl 30
            }
            prometheus :9153
            forward . /etc/resolv.conf {
                max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          securityContext:
            seccompProfile:
              type: RuntimeDefault
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          containers:
          - name: coredns
            image: k8s.gcr.io/coredns:1.7.0
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: __DNS__MEMORY__LIMIT__
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
          dnsPolicy: Default
          volumes:
          - name: config-volume
            configMap:
              name: coredns
              items:
              - key: Corefile
                path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: __DNS__SERVER__
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
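
The manifest contains three placeholders: `__DNS__DOMAIN__`, `__DNS__MEMORY__LIMIT__`, and `__DNS__SERVER__`. As an alternative to editing them by hand, they could be filled in one pass with `sed`. The sketch below is only an illustration: `fill_coredns_placeholders` is a hypothetical helper, and the three values passed to it (`cluster.local`, `170Mi`, `10.26.0.2`) are assumed examples, not values taken from this document. The DNS service IP in particular must match what the kubelets in the cluster are configured to use.

```shell
#!/bin/sh
# Hypothetical helper: fill the three placeholders of coredns.yaml.base read from stdin.
# Arguments: cluster domain, memory limit, DNS service IP.
fill_coredns_placeholders() {
    sed -e "s/__DNS__DOMAIN__/$1/g" \
        -e "s/__DNS__MEMORY__LIMIT__/$2/g" \
        -e "s/__DNS__SERVER__/$3/g"
}

# Assumed example values; replace them with the ones chosen for your cluster.
printf '%s\n' \
    'kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa {' \
    'memory: __DNS__MEMORY__LIMIT__' \
    'clusterIP: __DNS__SERVER__' |
    fill_coredns_placeholders cluster.local 170Mi 10.26.0.2
```

Applied to the whole file, the same function would be used as `fill_coredns_placeholders <domain> <limit> <ip> < coredns.yaml.base > coredns.yaml`.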

Modification 1:

kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa {

pods insecure