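- I. Prerequisites

- II. Deploy the Etcd Cluster

- III. Install Docker

- IV. Deploy the Master Node

- V. Deploy the Worker Nodes

- VI. Deploy Dashboard and CoreDNS

- VII. High-Availability Architecture (Scaling Out to Multiple Masters)

- Switching kube-proxy to IPVS mode

- A detailed record of an offline binary installation of Kubernetes (CentOS 7.8 + Kubernetes 1.18.4), suitable for beginners.

- This article is my own lab record; some of the material comes from the internet.

2020/6/26, Dragon Boat Festival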

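I. Prerequisites

1.1 Two ways to deploy a production Kubernetes cluster

There are currently two main ways to deploy a Kubernetes cluster in production:

- kubeadm

kubeadm is a Kubernetes deployment tool that provides kubeadm init and kubeadm join for bringing up a cluster quickly.

Official documentation: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

- Binary packages

Download the release binaries from GitHub and deploy each component by hand to assemble the cluster.

kubeadm lowers the barrier to entry, but it hides many details, which makes problems hard to troubleshoot. If you want more control, deploying from binary packages is recommended: manual deployment is more work, but you learn how the components operate along the way, which also helps with later maintenance.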

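1.2 Requirements

Before you start, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running CentOS 7.x x86_64

- Hardware: at least 2 GB of RAM, 2 CPUs, and 30 GB of disk

- Internet access for pulling images; if a server cannot reach the internet, download the images in advance and import them on each node

- Swap disabled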
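The requirements above can be checked before installation. The following is a minimal sketch, not part of the original procedure: the function name check_node and the 1800000 kB threshold (a 2 GB machine reports slightly less than 2 GB as MemTotal) are assumptions made for illustration.

```shell
# Hypothetical preflight helper; names and thresholds are illustrative.
# check_node MEM_KB CPUS SWAP_KB
# Prints one line per failed requirement, or "OK" when all pass.
check_node() {
  local mem_kb=$1 cpus=$2 swap_kb=$3 rc=0
  if [ "$mem_kb" -lt 1800000 ]; then echo "FAIL: less than about 2 GB of RAM"; rc=1; fi
  if [ "$cpus" -lt 2 ]; then echo "FAIL: fewer than 2 CPUs"; rc=1; fi
  if [ "$swap_kb" -ne 0 ]; then echo "FAIL: swap is enabled"; rc=1; fi
  if [ "$rc" -eq 0 ]; then echo "OK"; fi
  return "$rc"
}

# Example with fixed numbers: 2 GB RAM, 2 CPUs, no swap.
check_node 2048000 2 0
```

On a real node the three arguments could be read from the system, for example MemTotal and SwapTotal from /proc/meminfo and the CPU count from nproc.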

1.3 Environment

Software:

| Software | Version |
|---|---|
| Operating system | CentOS7.8_x64 (mini) |
| Docker | 19-ce |
| Kubernetes | 1.18 |

服务器整体规划:

| 角色 | IP | 组件 |

|---|---|---|

| k8s-master1 | 192.168.26.71 | kube-apiserver,kube-controller-manager,kube-scheduler,etcd |

| k8s-master2 | 192.168.26.74 | kube-apiserver,kube-controller-manager,kube-scheduler |

| k8s-node1 | 192.168.26.72 | kubelet,kube-proxy,docker etcd |

| k8s-node2 | 192.168.26.73 | kubelet,kube-proxy,docker,etcd |

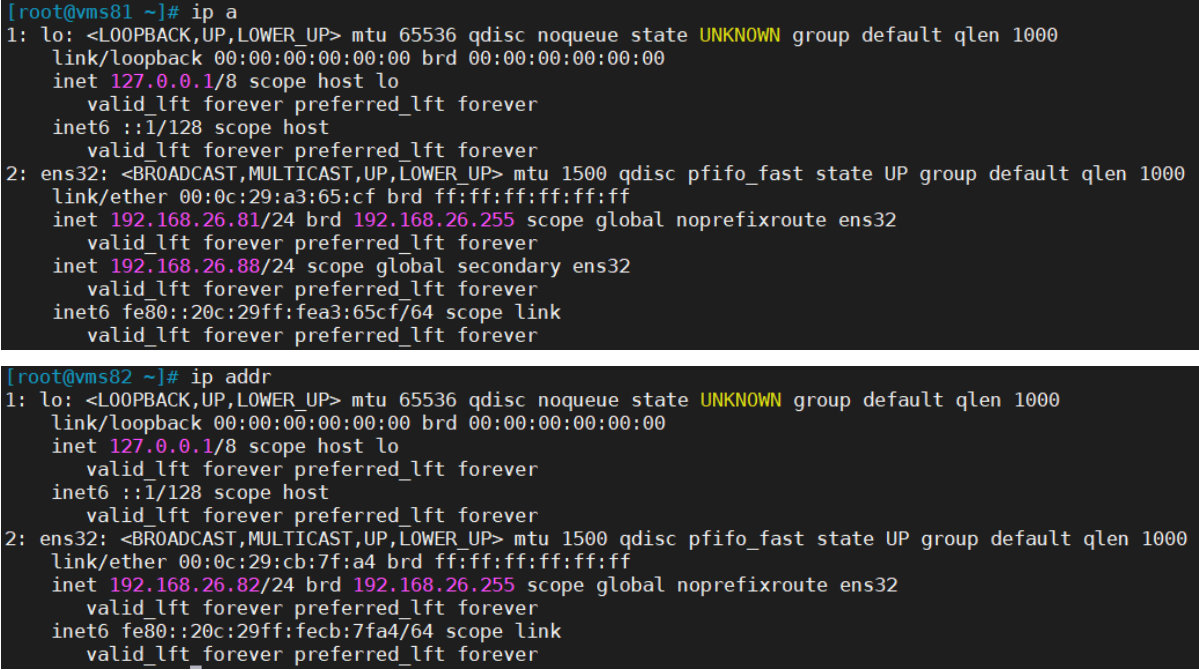

| Load Balancer(Master) | 192.168.26.81 ,192.168.26.88 (VIP) | Nginx L4 |

| Load Balancer(Backup) | 192.168.26. 82 | Nginx L4 |

须知:考虑到有些朋友电脑配置较低,这么多虚拟机跑不动,所以这一套高可用集群分两部分实施,先部署一套单Master架构(192.168.26.71/72/73),再扩容为多Master架构(上述规划),顺便熟悉下Master扩容流程。

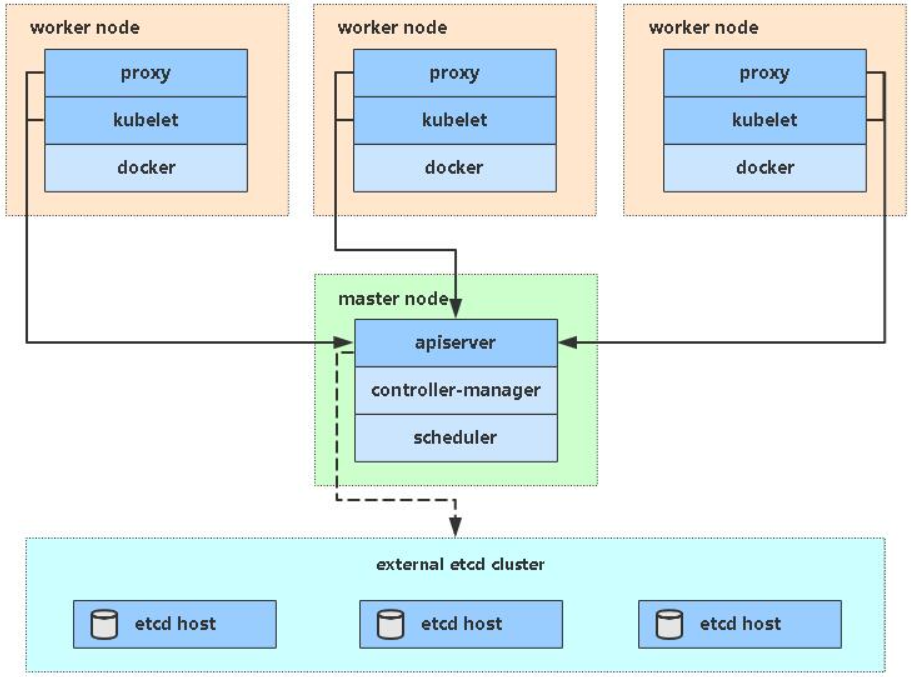

单Master架构图:

单Master服务器规划:(master也可兼做为work节点)

| 角色 | IP | 组件 |

|---|---|---|

| k8s-master | 192.168.26.71 | kube-apiserver,kube-controller-manager,kube-scheduler,etcd |

| k8s-node1 | 192.168.26.72 | kubelet,kube-proxy,docker etcd |

| k8s-node2 | 192.168.26.73 | kubelet,kube-proxy,docker,etcd |

1.4 Initial configuration

If ifconfig is not installed:

    yum install net-tools.x86_64 -y

Keep /etc/hosts in sync on all nodes (for convenient access):

    192.168.26.71 vms71.k8s.com vms71
    192.168.26.72 vms72.k8s.com vms72
    192.168.26.73 vms73.k8s.com vms73

Add host entries on the master and the workers (optional if nothing in the cluster relies on this name resolution):

    cat >> /etc/hosts << EOF
    192.168.26.71 k8s-master
    192.168.26.72 k8s-node1
    192.168.26.73 k8s-node2
    EOF

Pass bridged IPv4 traffic to the iptables chains

Run the following on all nodes (master and workers):

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF

Apply the settings:

    sysctl -p /etc/sysctl.d/k8s.conf

If the following errors appear:

    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

load the br_netfilter kernel module and then apply the settings again:

    modprobe br_netfilter
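The write, check, and apply sequence for the kernel parameters can be wrapped in two small functions. This is a sketch only; the function names write_k8s_sysctl and apply_k8s_sysctl are made up for illustration, and apply_k8s_sysctl needs root on a real node.

```shell
# write_k8s_sysctl [FILE]: writes the three kernel parameters from this section.
write_k8s_sysctl() {
  local file=${1:-/etc/sysctl.d/k8s.conf}
  cat > "$file" << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# apply_k8s_sysctl [FILE]: loads br_netfilter first when the bridge keys are
# missing, which avoids the "cannot stat" error, then applies the file.
apply_k8s_sysctl() {
  local file=${1:-/etc/sysctl.d/k8s.conf}
  [ -e /proc/sys/net/bridge/bridge-nf-call-iptables ] || modprobe br_netfilter
  sysctl -p "$file"
}

# Demo: write to a scratch file instead of /etc and show the result.
demo_file=$(mktemp)
write_k8s_sysctl "$demo_file"
cat "$demo_file"
```

On a node, calling write_k8s_sysctl and then apply_k8s_sysctl without arguments would act on /etc/sysctl.d/k8s.conf.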

Synchronize the time on all nodes:

    yum install ntpdate -y
    ntpdate time.windows.com

1.5 Prepare the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.

Run this on any one server; the Master node is used here.

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
    mv cfssl_linux-amd64 /usr/local/bin/cfssl
    mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
    mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

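II. Deploy the Etcd Cluster

2.1 Planning

Etcd is a distributed key-value store, and Kubernetes uses it to store its data, so an Etcd database has to be prepared first. To avoid a single point of failure, Etcd should be deployed as a cluster. Three machines are used here, which tolerates the failure of one machine; you can also use five machines, which tolerates the failure of two.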
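The fault-tolerance figures follow from majority quorum: a cluster of N members needs N/2 + 1 (integer division) members to agree, so it can lose the rest. A small sketch of that arithmetic, with function names chosen only for illustration:

```shell
# etcd_quorum N: number of members that must agree (a majority).
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# etcd_fault_tolerance N: members that may fail while a majority remains.
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 3 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

For 3 members this gives a quorum of 2 and one tolerated failure; for 5 members, a quorum of 3 and two tolerated failures. An even member count adds no tolerance over the next lower odd count, which is why odd sizes are the usual choice.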

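| Node name | IP |
|---|---|
| etcd-1 | 192.168.26.71 |
| etcd-2 | 192.168.26.72 |
| etcd-3 | 192.168.26.73 |

Note: to save machines, Etcd shares hosts with the Kubernetes nodes here. It can also be deployed outside the Kubernetes cluster, as long as the apiserver can reach it.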

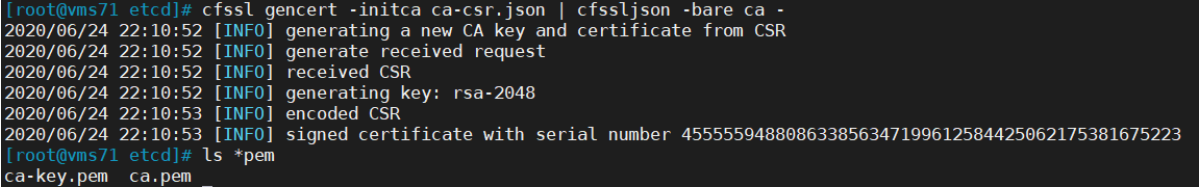

2.2 Generate the Etcd certificates

1. Create a self-signed certificate authority (CA)

Create the working directories:

    mkdir -p ~/TLS/{etcd,k8s}
    cd ~/TLS/etcd

Write the CA configuration and the CA signing request:

    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "etcd CA",
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "Beijing", "ST": "Beijing"}
      ]
    }
    EOF

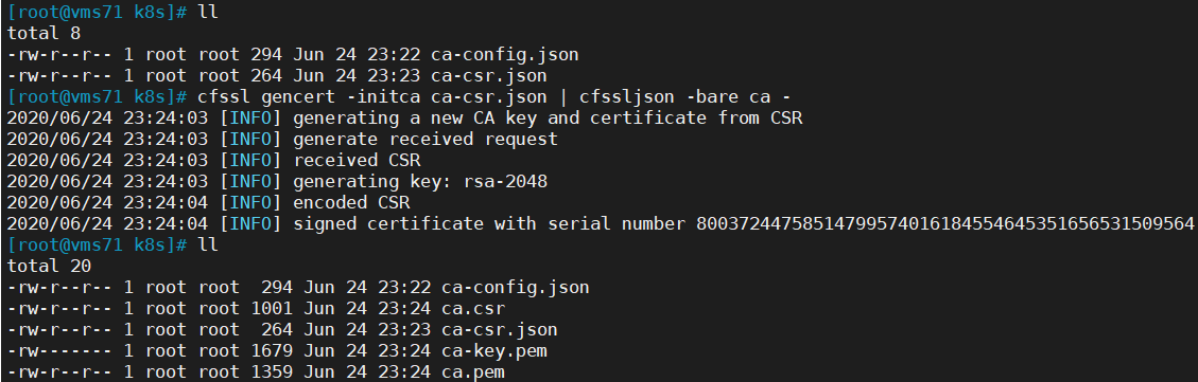

Generate the CA certificate:

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    ls *pem
    ca-key.pem  ca.pem

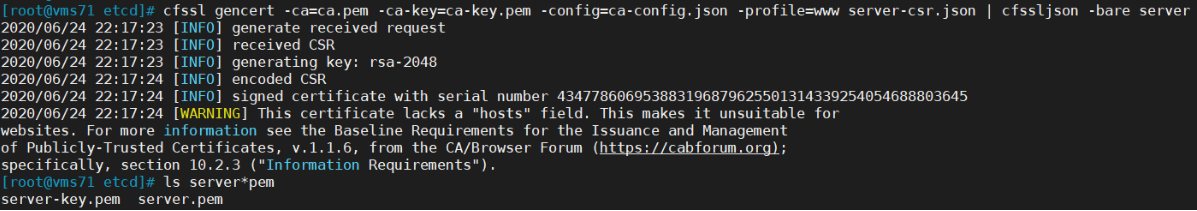

2. Issue the Etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cat > server-csr.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "192.168.26.71",
        "192.168.26.72",
        "192.168.26.73"
      ],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing"}
      ]
    }
    EOF

Note: the hosts field above must list the cluster-internal IP of every etcd node, without exception. To make later scale-out easier, you can add a few spare IPs in advance.

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
    ls server*pem
    server-key.pem  server.pem

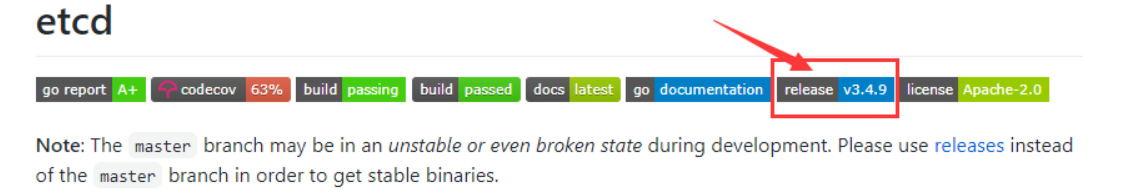

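2.3 Download the binaries from GitHub

Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

2.4 Deploy the Etcd cluster

The following steps run on node 1. To keep things simple, all files generated on node 1 are copied to nodes 2 and 3 afterwards.

1. Create the working directory and unpack the binary package

    mkdir /opt/etcd/{bin,cfg,ssl} -p
    tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
    mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/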

2. Create the etcd configuration file

    cat > /opt/etcd/cfg/etcd.conf << EOF
    #[Member]
    ETCD_NAME="etcd-1"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.26.71:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.26.71:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.26.71:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.26.71:2379"
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.26.71:2380,etcd-2=https://192.168.26.72:2380,etcd-3=https://192.168.26.73:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

- ETCD_NAME: node name, unique within the cluster

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication

- ETCD_LISTEN_CLIENT_URLS: listen address for client access

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the other members

- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

- ETCD_INITIAL_CLUSTER: addresses of all cluster members

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster

3. Manage etcd with systemd

    cat > /usr/lib/systemd/system/etcd.service << EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    [Service]
    Type=notify
    EnvironmentFile=/opt/etcd/cfg/etcd.conf
    ExecStart=/opt/etcd/bin/etcd \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --peer-cert-file=/opt/etcd/ssl/server.pem \
    --peer-key-file=/opt/etcd/ssl/server-key.pem \
    --trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --logger=zap
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF

4. Copy the certificates generated earlier

Copy the certificates generated earlier to the paths used in the configuration:

    cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

5. Start etcd and enable it at boot

    systemctl daemon-reload
    systemctl start etcd
    systemctl enable etcd

The start command hangs or fails at this point because the node is waiting for the other members to join.

6. Copy all files generated on node 1 to nodes 2 and 3

    scp -r /opt/etcd/ root@192.168.26.72:/opt/
    scp /usr/lib/systemd/system/etcd.service root@192.168.26.72:/usr/lib/systemd/system/
    scp -r /opt/etcd/ root@192.168.26.73:/opt/
    scp /usr/lib/systemd/system/etcd.service root@192.168.26.73:/usr/lib/systemd/system/

Then, on nodes 2 and 3, change the node name and the server IP in etcd.conf:

    vi /opt/etcd/cfg/etcd.conf
    #[Member]
    ETCD_NAME="etcd-1" # change this: etcd-2 on node 2, etcd-3 on node 3
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.26.71:2380" # change to this server's IP
    ETCD_LISTEN_CLIENT_URLS="https://192.168.26.71:2379" # change to this server's IP
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.26.71:2380" # change to this server's IP
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.26.71:2379" # change to this server's IP
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.26.71:2380,etcd-2=https://192.168.26.72:2380,etcd-3=https://192.168.26.73:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

Finally, start etcd and enable it at boot on both nodes, as above.
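As an alternative to editing the copied file by hand, the per-node etcd.conf can be rendered from the node name and IP. This is a sketch under the assumption that the member list stays fixed to the three nodes planned in 2.1; the function name render_etcd_conf is hypothetical.

```shell
# render_etcd_conf NAME IP: prints an etcd.conf for one member.
# Only the name and the four per-node URLs vary; the cluster list is fixed.
render_etcd_conf() {
  local name=$1 ip=$2
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.26.71:2380,etcd-2=https://192.168.26.72:2380,etcd-3=https://192.168.26.73:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the file for node 2.
render_etcd_conf etcd-2 192.168.26.72
```

On node 2 the output would be redirected to /opt/etcd/cfg/etcd.conf; the same call with etcd-3 and 192.168.26.73 covers node 3.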

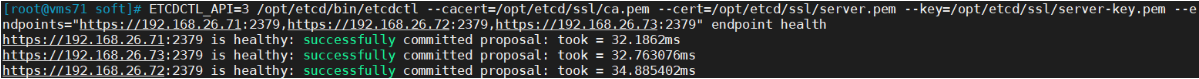

7. Check the cluster status

    ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.26.71:2379,https://192.168.26.72:2379,https://192.168.26.73:2379" endpoint health
    https://192.168.26.71:2379 is healthy: successfully committed proposal: took = 8.154404ms
    https://192.168.26.73:2379 is healthy: successfully committed proposal: took = 9.044117ms
    https://192.168.26.72:2379 is healthy: successfully committed proposal: took = 10.000825ms

Output like the above means the cluster was deployed successfully. If something is wrong, check the logs first: /var/log/messages or journalctl -u etcd

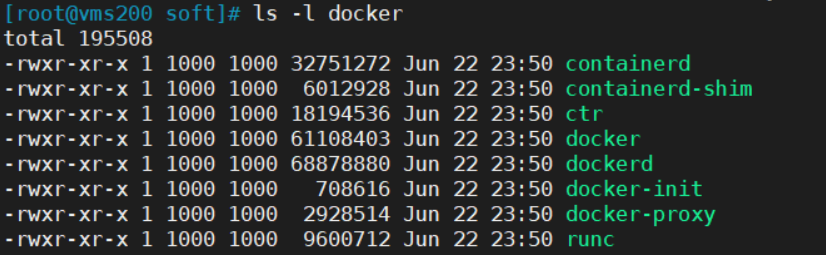

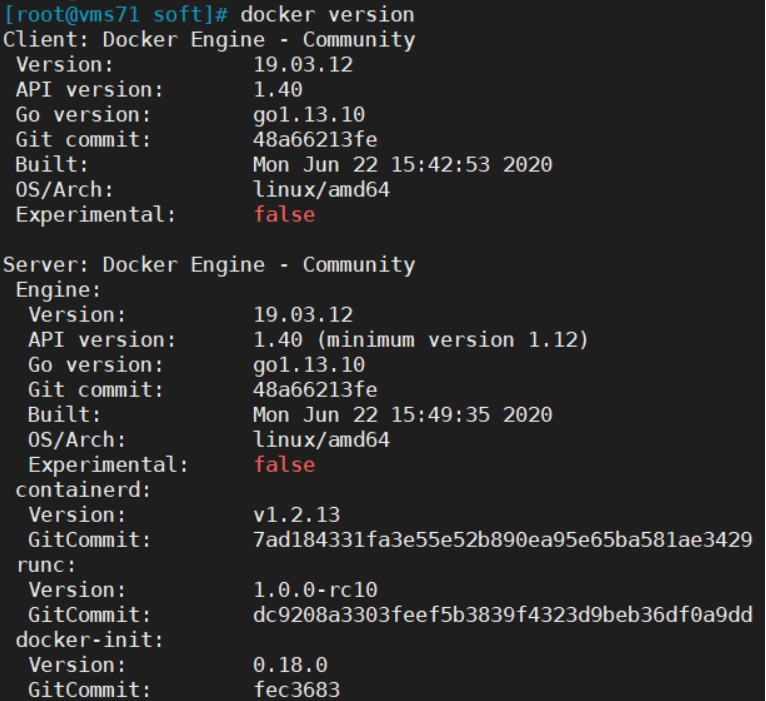

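III. Install Docker

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.12.tgz

Run the following on all nodes. The binary installation is used here; installing with yum works just as well.

3.1 Unpack the binary package

    tar zxvf docker-19.03.12.tgz
    mv docker/* /usr/bin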

3.2 Manage docker with systemd

    cat > /usr/lib/systemd/system/docker.service << EOF
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target
    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s
    [Install]
    WantedBy=multi-user.target
    EOF

Note: \$MAINPID is escaped so that the shell writes it literally into the unit file instead of expanding it to an empty string.

3.3 Create the configuration file

    mkdir /etc/docker
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"]
    }
    EOF

- registry-mirrors: configures the Alibaba Cloud registry mirror. The address used here is my personal accelerator endpoint; register for your own, which is free.

3.4 Start docker and enable it at boot

    systemctl daemon-reload
    systemctl start docker
    systemctl enable docker

IV. Deploy the Master Node

4.1 Generate the kube-apiserver certificates

1. Create a self-signed certificate authority (CA)

    cd ~/TLS/k8s
    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "kubernetes",
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System"}
      ]
    }
    EOF

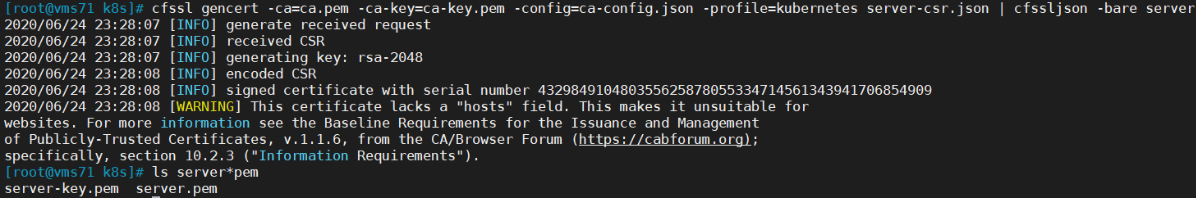

Generate the CA certificate:

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    ls *pem
    ca-key.pem  ca.pem

2. Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cd ~/TLS/k8s
    cat > server-csr.json << EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.26.71",
        "192.168.26.72",
        "192.168.26.73",
        "192.168.26.74",
        "192.168.26.81",
        "192.168.26.82",
        "192.168.26.88",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}
      ]
    }
    EOF

Note: the hosts field above must list every Master, load balancer, and VIP address, without exception. To make later scale-out easier, you can add a few spare IPs in advance.

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
    ls server*pem
    server-key.pem  server.pem

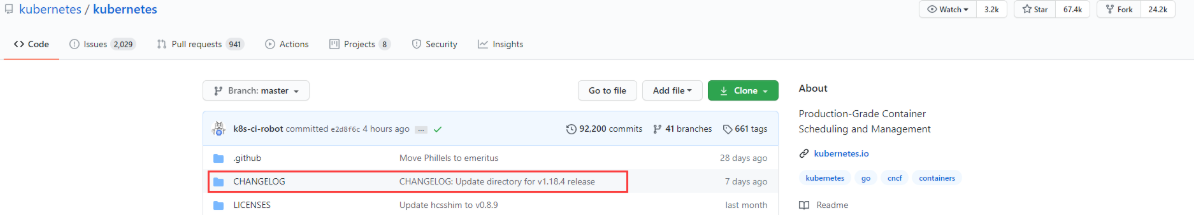

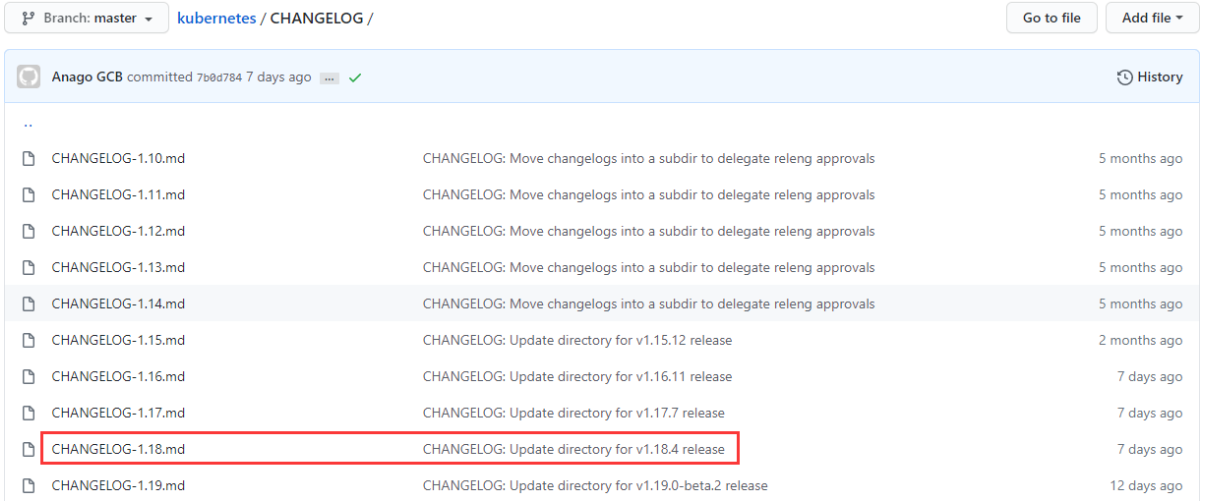

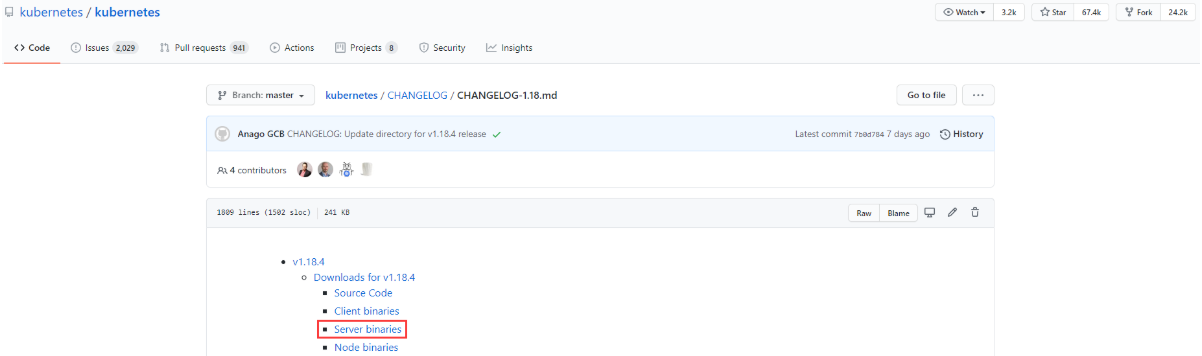

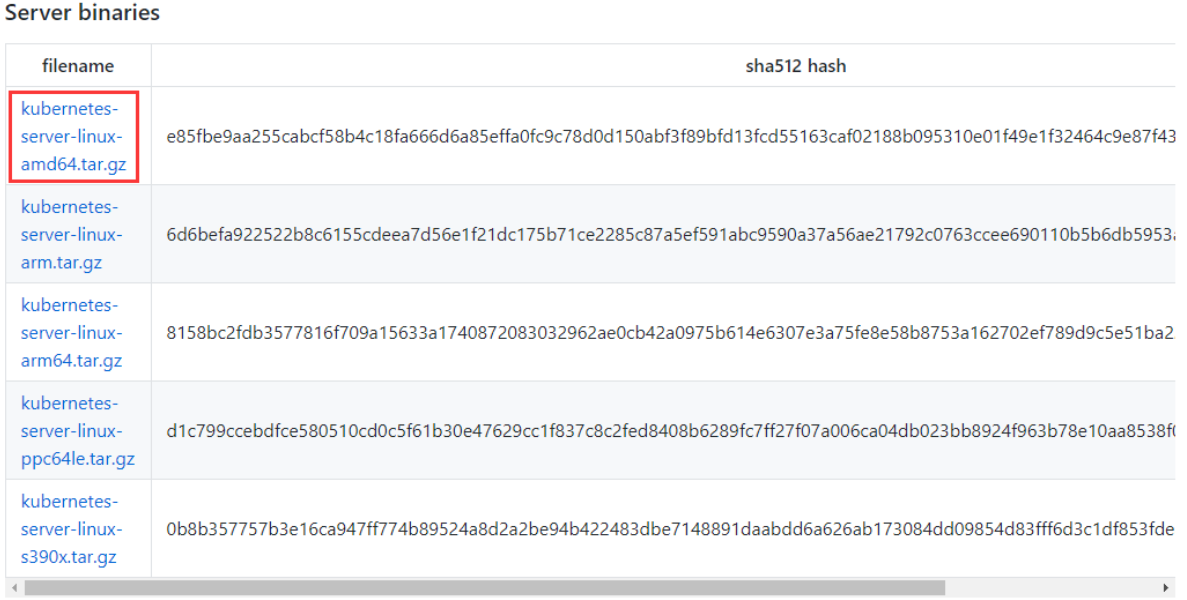

4.2 Download the binaries from GitHub

Download URL: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183

Note: the page lists many packages. Downloading the server package is enough, because it contains the binaries for both the Master and the Worker Nodes.

Click through to the release:

Click to download, or right-click to copy the download link:

4.3 Unpack the binary package

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
    tar zxvf kubernetes-server-linux-amd64.tar.gz
    cd kubernetes/server/bin
    ls kube-apiserver kube-scheduler kube-controller-manager
    cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
    cp kubectl /usr/bin/

4.4 Deploy kube-apiserver

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
    KUBE_APISERVER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --etcd-servers=https://192.168.26.71:2379,https://192.168.26.72:2379,https://192.168.26.73:2379 \\
    --bind-address=192.168.26.71 \\
    --secure-port=6443 \\
    --advertise-address=192.168.26.71 \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --enable-bootstrap-token-auth=true \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=6000-32767 \\
    --kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
    --kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
    --audit-log-maxage=30 \\
    --audit-log-maxbackup=3 \\
    --audit-log-maxsize=100 \\
    --audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
    EOF

Note: in the double backslash \\ above, the first backslash escapes the second. The here-document (EOF) therefore writes a literal line-continuation backslash into the file instead of consuming it and joining the lines.
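The effect of the double backslash can be seen in a scratch file. The sketch below uses made-up option names (DEMO_OPTS, JOINED) and writes the same two-line value once with \\ and once with a single \ inside an unquoted here-document.

```shell
tmp=$(mktemp)

# Unquoted delimiter: the shell processes backslashes inside the here-document.
cat > "$tmp" << EOF
DEMO_OPTS="--first=1 \\
--second=2"
JOINED="--first=1 \
--second=2"
EOF

# Keep the file content in a variable, clean up, then print it.
result=$(cat "$tmp")
rm -f "$tmp"
printf '%s\n' "$result"
```

The printed file has three lines: DEMO_OPTS keeps a trailing backslash and its line break, while JOINED collapses into the single line --first=1 --second=2. Both forms still yield the same option string once the file is read with line continuations honored, so the choice mainly affects how readable the generated file is.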

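- --logtostderr: whether to log to stderr; false here, so logs are written to files in --log-dir

- --v: log verbosity level

- --log-dir: log directory

- --etcd-servers: etcd cluster endpoints

- --bind-address: listen address

- --secure-port: HTTPS port

- --advertise-address: address advertised to the rest of the cluster

- --allow-privileged: allow privileged containers

- --service-cluster-ip-range: virtual IP range for Services

- --enable-admission-plugins: admission control plugins

- --authorization-mode: authorization modes; enables RBAC and Node authorization

- --enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

- --token-auth-file: bootstrap token file

- --service-node-port-range: port range allocated to NodePort Services

- --kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

- --tls-xxx-file: apiserver HTTPS certificate

- --etcd-xxxfile: certificates for connecting to the Etcd cluster

- --audit-log-xxx: audit log settings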

2. Copy the certificates generated earlier

Copy the certificates generated earlier to the paths used in the configuration:

    ls ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem
    cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

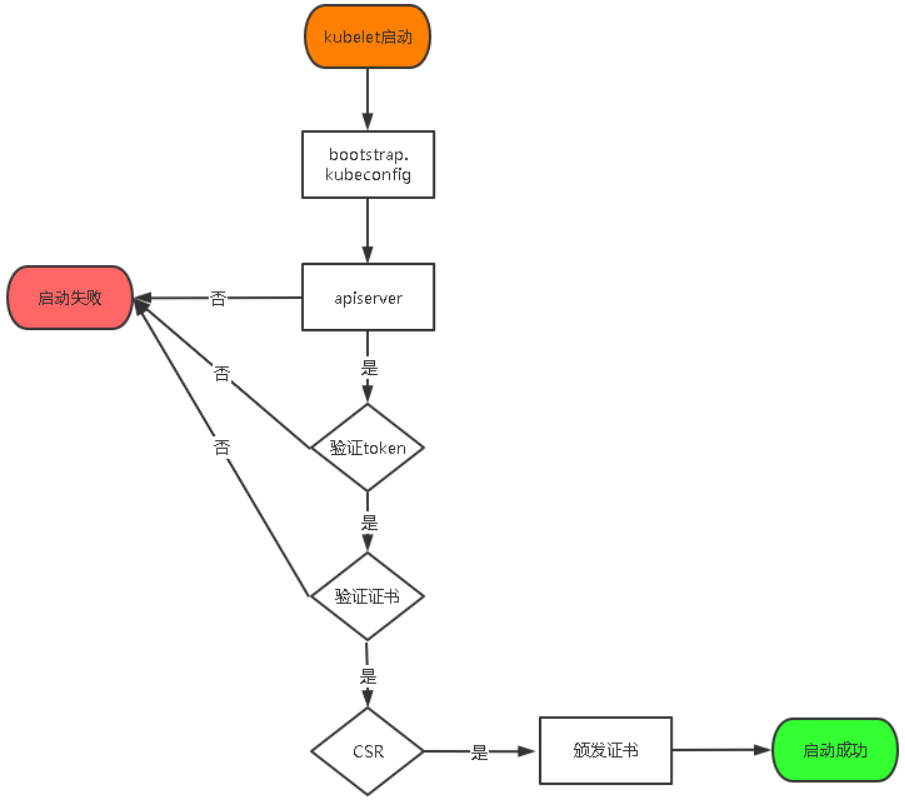

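3. Enable the TLS Bootstrapping mechanism

TLS Bootstrapping: once the Master apiserver has TLS authentication enabled, the kubelet and kube-proxy on each Node must present a valid certificate signed by the CA to communicate with kube-apiserver. With many Nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated. To simplify the process, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically. This approach is strongly recommended on Nodes. It is currently used mainly for the kubelet; for kube-proxy we still issue a single certificate ourselves.

TLS bootstrapping workflow:

Create the token file referenced in the configuration above:

    cat > /opt/kubernetes/cfg/token.csv << EOF
    7edfdd626b76c341a002477b8a3d3fe2,kubelet-bootstrap,10001,"system:node-bootstrapper"
    EOF

Format: token, user name, UID, user group

You can also generate your own token and substitute it:

    head -c 16 /dev/urandom | od -An -t x | tr -d ' '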
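Token generation and the token.csv line format can be combined into one step. This is a sketch only; the function names new_bootstrap_token and token_csv_line are illustrative, while the user name, UID, and group are the ones used in this article.

```shell
# new_bootstrap_token: 16 random bytes printed as 32 hex characters.
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# token_csv_line TOKEN: one line in the format token,user,UID,"group".
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

TOKEN=$(new_bootstrap_token)
token_csv_line "$TOKEN"
```

The printed line would replace the content of /opt/kubernetes/cfg/token.csv. The same token value then has to be used when bootstrap.kubeconfig is generated in section 5.2, and kube-apiserver reads the token file at startup, so it needs a restart after the file changes.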

4. Manage the apiserver with systemd

    cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl enable kube-apiserver

6. Authorize the kubelet-bootstrap user to request certificates

    kubectl create clusterrolebinding kubelet-bootstrap \
    --clusterrole=system:node-bootstrapper \
    --user=kubelet-bootstrap

4.5 Deploy kube-controller-manager

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect=true \\
    --master=127.0.0.1:8080 \\
    --bind-address=127.0.0.1 \\
    --allocate-node-cidrs=true \\
    --cluster-cidr=10.244.0.0/16 \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --experimental-cluster-signing-duration=87600h0m0s"
    EOF

- --master: connect to the apiserver through the local insecure port 8080.

- --leader-elect: elect a leader automatically when several instances of this component run (HA)

- --cluster-signing-cert-file/--cluster-signing-key-file: the CA that automatically issues kubelet certificates; must be the same CA the apiserver uses

2. Manage controller-manager with systemd

    cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

3. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-controller-manager
    systemctl enable kube-controller-manager

4.6 Deploy kube-scheduler

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
    KUBE_SCHEDULER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --leader-elect \
    --master=127.0.0.1:8080 \
    --bind-address=127.0.0.1"
    EOF

- --master: connect to the apiserver through the local insecure port 8080.

- --leader-elect: elect a leader automatically when several instances of this component run (HA)

2. Manage the scheduler with systemd

    cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

3. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-scheduler
    systemctl enable kube-scheduler

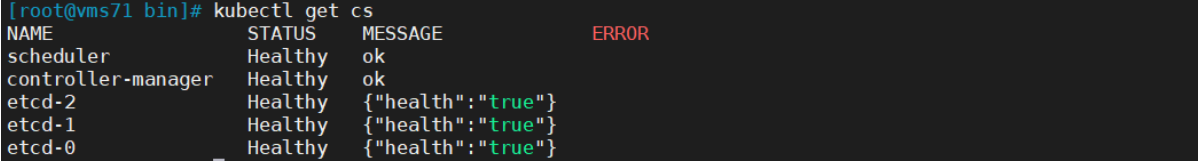

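4. Check the cluster status

All components have now been started. Use kubectl to check the status of the cluster components:

    kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-2               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}

Output like the above means the Master node components are running normally.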

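V. Deploy the Worker Nodes

The following steps are still performed on the Master node, which therefore also serves as a Worker Node.

5.1 Create the working directory and copy the binaries

Create the working directory on every worker node:

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the package unpacked on the master node:

    cd kubernetes/server/bin
    cp kubelet kube-proxy /opt/kubernetes/bin   # local copy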

5.2 Deploy kubelet

1. Create the configuration file

Pull the pause-amd64:3.0 image first:

    docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

Then write the configuration file:

    cat > /opt/kubernetes/cfg/kubelet.conf << EOF
    KUBELET_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --hostname-override=k8s-master \\
    --network-plugin=cni \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet-config.yml \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
    EOF

- --hostname-override: display name, unique within the cluster

- --network-plugin: enable CNI

- --kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to the apiserver

- --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

- --config: configuration parameter file

- --cert-dir: directory where the kubelet certificates are generated

- --pod-infra-container-image: image of the container that manages the Pod network

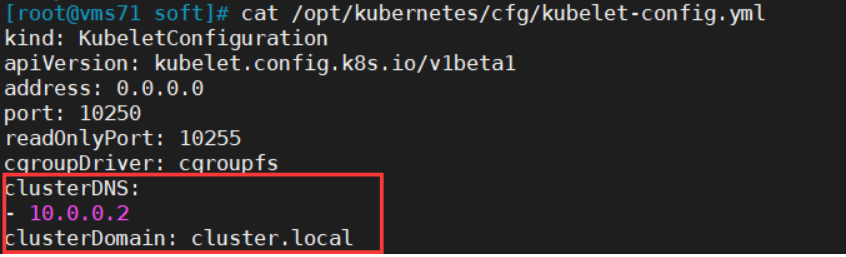

2. Create the configuration parameter file

    cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local
    failSwapOn: false
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110
    EOF

3. Generate the bootstrap.kubeconfig file

    KUBE_APISERVER="https://192.168.26.71:6443" # apiserver IP:PORT
    TOKEN="7edfdd626b76c341a002477b8a3d3fe2" # must match token.csv
    # Generate the kubelet bootstrap kubeconfig file
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config set-credentials "kubelet-bootstrap" \
      --token=${TOKEN} \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config set-context default \
      --cluster=kubernetes \
      --user="kubelet-bootstrap" \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

These commands can be pasted directly into the shell.

Copy the file to the configuration directory:

    ls -lt
    cp bootstrap.kubeconfig /opt/kubernetes/cfg

4. Manage kubelet with systemd

    cat > /usr/lib/systemd/system/kubelet.service << EOF
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet

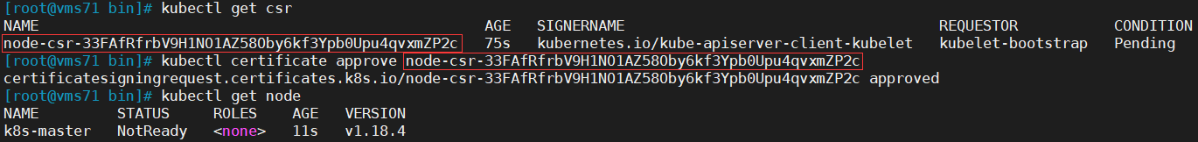

5.3 Approve the kubelet certificate request and join the cluster

    # List the kubelet certificate requests
    [root@vms71 bin]# kubectl get csr
    NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
    node-csr-33FAfRfrbV9H1NO1AZ58Oby6kf3Ypb0Upu4qvxmZP2c   75s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    # Approve the request
    [root@vms71 bin]# kubectl certificate approve node-csr-33FAfRfrbV9H1NO1AZ58Oby6kf3Ypb0Upu4qvxmZP2c
    certificatesigningrequest.certificates.k8s.io/node-csr-33FAfRfrbV9H1NO1AZ58Oby6kf3Ypb0Upu4qvxmZP2c approved
    # List the nodes
    [root@vms71 bin]# kubectl get node
    NAME         STATUS     ROLES    AGE   VERSION
    k8s-master   NotReady   <none>   11s   v1.18.4

Note: the node stays NotReady because the network plugin has not been deployed yet.

5.4 Deploy kube-proxy

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
    KUBE_PROXY_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --config=/opt/kubernetes/cfg/kube-proxy-config.yml"
    EOF

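2. Create the configuration parameter file

    cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: k8s-master
    clusterCIDR: 10.244.0.0/16
    EOF

Note: clusterCIDR is the Pod network range, so it is set to the same value as --cluster-cidr in kube-controller-manager (10.244.0.0/16), not to the Service range 10.0.0.0/24.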

3. Generate the kube-proxy.kubeconfig file

Generate the kube-proxy certificate:

    # Switch to the working directory
    cd ~/TLS/k8s
    # Create the certificate signing request file
    cat > kube-proxy-csr.json << EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}
      ]
    }
    EOF
    # Generate the certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
    ls kube-proxy*pem
    kube-proxy-key.pem  kube-proxy.pem

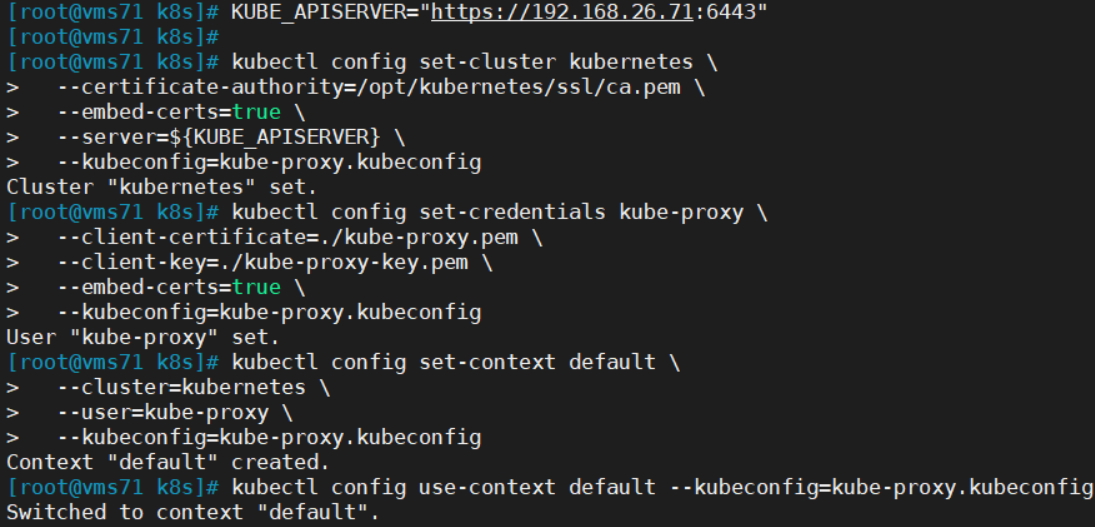

Generate the kubeconfig file:

    KUBE_APISERVER="https://192.168.26.71:6443"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-credentials kube-proxy \
      --client-certificate=./kube-proxy.pem \
      --client-key=./kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

These commands can be pasted directly into the shell.

Copy the file to the path named in the configuration:

    cp kube-proxy.kubeconfig /opt/kubernetes/cfg/

4. Manage kube-proxy with systemd

    cat > /usr/lib/systemd/system/kube-proxy.service << EOF
    [Unit]
    Description=Kubernetes Proxy
    After=network.target
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-proxy
    systemctl enable kube-proxy

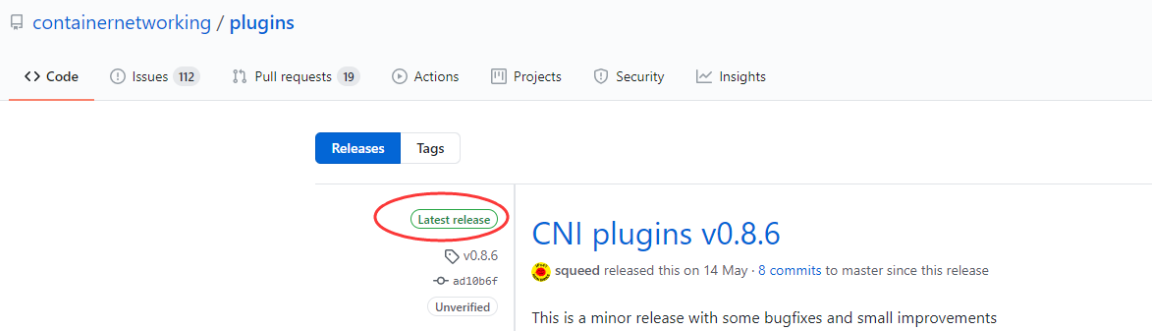

5.5 Deploy the CNI network

Prepare the CNI plugin binaries first.

Unpack the package into the default working directory:

    mkdir -p /opt/cni/bin
    tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

Deploy the CNI network:

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

The default image registry is not reachable, so the sed command switches the image to a Docker Hub repository.

If kube-flannel.yml cannot be downloaded from the address above, find a copy of the file elsewhere. Then list the images it references and pull them in advance:

    [root@vms71 soft]# grep image kube-flannel.yml
    image: quay.io/coreos/flannel:v0.12.0-amd64
    image: quay.io/coreos/flannel:v0.12.0-arm64
    image: quay.io/coreos/flannel:v0.12.0-arm
    image: quay.io/coreos/flannel:v0.12.0-ppc64le

Apply the manifest and check the result:

    kubectl apply -f kube-flannel.yml
    kubectl get pods -n kube-system
    NAME                          READY   STATUS    RESTARTS   AGE
    kube-flannel-ds-amd64-qsp5g   1/1     Running   0          11m
    kubectl get node
    NAME         STATUS   ROLES    AGE   VERSION
    k8s-master   Ready    <none>   8h    v1.18.4

With the network plugin deployed, the Node becomes Ready.

5.6 Authorize the apiserver to access the kubelet

    cat > apiserver-to-kubelet-rbac.yaml << EOF
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:kube-apiserver-to-kubelet
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes/proxy
          - nodes/stats
          - nodes/log
          - nodes/spec
          - nodes/metrics
          - pods/log
        verbs:
          - "*"
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:kube-apiserver
      namespace: ""
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:kube-apiserver-to-kubelet
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: kubernetes
    EOF
    kubectl apply -f apiserver-to-kubelet-rbac.yaml

5.7 Add more Worker Nodes

Unless stated otherwise, the steps in this section are performed on the new worker node.

1. Copy the deployed Node files to the new node

On the Master node, copy the Worker Node files to the new nodes 192.168.26.72/73:

    scp -r /opt/kubernetes root@192.168.26.72:/opt/
    scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.26.72:/usr/lib/systemd/system
    scp -r /opt/cni/ root@192.168.26.72:/opt/
    scp /opt/kubernetes/ssl/ca.pem root@192.168.26.72:/opt/kubernetes/ssl

2. Delete the kubelet certificate and kubeconfig file

    rm /opt/kubernetes/cfg/kubelet.kubeconfig
    rm -f /opt/kubernetes/ssl/kubelet*

Note: these files are generated automatically once the certificate request is approved and are different on every Node, so they must be deleted and regenerated.

3. Change the host name

    vi /opt/kubernetes/cfg/kubelet.conf
    --hostname-override=k8s-node1
    vi /opt/kubernetes/cfg/kube-proxy-config.yml
    hostnameOverride: k8s-node1
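The two host name edits can also be scripted with sed instead of vi. The sketch below is an illustration, not the author's procedure: the function name set_node_name is hypothetical, and the demo runs against scratch copies rather than /opt/kubernetes/cfg.

```shell
# set_node_name CFG_DIR NAME: rewrites the node name in kubelet.conf and
# kube-proxy-config.yml inside CFG_DIR.
set_node_name() {
  local cfg_dir=$1 name=$2 f
  f="${cfg_dir}/kubelet.conf"
  sed "s#--hostname-override=[^ ]*#--hostname-override=${name}#" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  f="${cfg_dir}/kube-proxy-config.yml"
  sed "s#^hostnameOverride:.*#hostnameOverride: ${name}#" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
}

# Demo on scratch files shaped like the real configuration.
demo=$(mktemp -d)
printf '%s\n' 'KUBELET_OPTS="--v=2 \' '--hostname-override=k8s-master \' '--network-plugin=cni"' > "$demo/kubelet.conf"
printf '%s\n' 'bindAddress: 0.0.0.0' 'hostnameOverride: k8s-master' > "$demo/kube-proxy-config.yml"
set_node_name "$demo" k8s-node1
cat "$demo/kubelet.conf" "$demo/kube-proxy-config.yml"
```

On a new node the call would be set_node_name /opt/kubernetes/cfg k8s-node1 (or k8s-node2), run before the services are started.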

4. Start the services and enable them at boot

    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet
    systemctl start kube-proxy
    systemctl enable kube-proxy

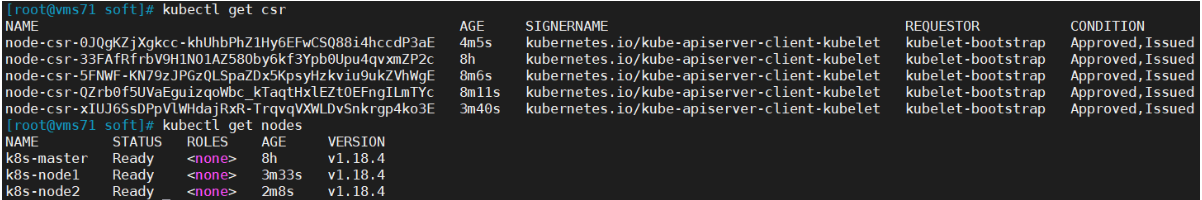

5. On the Master, approve the kubelet certificate requests of the new Nodes

    [root@vms71 soft]# kubectl get csr
    NAME                                                   AGE     SIGNERNAME                                    REQUESTOR           CONDITION
    node-csr-0JQgKZjXgkcc-khUhbPhZ1Hy6EFwCSQ88i4hccdP3aE   97s     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    node-csr-33FAfRfrbV9H1NO1AZ58Oby6kf3Ypb0Upu4qvxmZP2c   8h      kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    node-csr-5FNWF-KN79zJPGzQLSpaZDx5KpsyHzkviu9ukZVhWgE   5m38s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    node-csr-QZrb0f5UVaEguizqoWbc_kTaqtHxlEZtOEFngILmTYc   5m43s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    node-csr-xIUJ6SsDPpVlWHdajRxR-TrqvqVXWLDvSnkrgp4ko3E   72s     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    [root@vms71 soft]# kubectl certificate approve node-csr-0JQgKZjXgkcc-khUhbPhZ1Hy6EFwCSQ88i4hccdP3aE
    certificatesigningrequest.certificates.k8s.io/node-csr-0JQgKZjXgkcc-khUhbPhZ1Hy6EFwCSQ88i4hccdP3aE approved
    [root@vms71 soft]# kubectl certificate approve node-csr-5FNWF-KN79zJPGzQLSpaZDx5KpsyHzkviu9ukZVhWgE
    certificatesigningrequest.certificates.k8s.io/node-csr-5FNWF-KN79zJPGzQLSpaZDx5KpsyHzkviu9ukZVhWgE approved
    [root@vms71 soft]# kubectl certificate approve node-csr-QZrb0f5UVaEguizqoWbc_kTaqtHxlEZtOEFngILmTYc
    certificatesigningrequest.certificates.k8s.io/node-csr-QZrb0f5UVaEguizqoWbc_kTaqtHxlEZtOEFngILmTYc approved
    [root@vms71 soft]# kubectl certificate approve node-csr-xIUJ6SsDPpVlWHdajRxR-TrqvqVXWLDvSnkrgp4ko3E
    certificatesigningrequest.certificates.k8s.io/node-csr-xIUJ6SsDPpVlWHdajRxR-TrqvqVXWLDvSnkrgp4ko3E approved
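When several requests are pending, the names can be filtered out of the kubectl get csr listing instead of being copied one by one. A minimal sketch, assuming the column layout shown above (CONDITION as the last column); the function name pending_csr_names is made up, and the sample names are shortened placeholders.

```shell
# pending_csr_names: reads a "kubectl get csr" listing on stdin and prints
# the names of the requests whose last column is exactly "Pending".
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demo with sample input shaped like the listing above.
printf '%s\n' \
  'NAME AGE SIGNERNAME REQUESTOR CONDITION' \
  'node-csr-aaa 97s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending' \
  'node-csr-bbb 8h kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued' \
  | pending_csr_names
```

On the Master this could be combined as kubectl get csr | pending_csr_names | xargs -n 1 kubectl certificate approve. Approving every pending request without inspection is only reasonable in a lab like this one, where all requests are known to come from the nodes just added.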

6. Check the Node status

    kubectl get node
    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    <none>   8h      v1.18.4
    k8s-node1    Ready    <none>   3m33s   v1.18.4
    k8s-node2    Ready    <none>   2m8s    v1.18.4

Repeat the same steps for Node2 (192.168.26.73). Remember to change the host name!

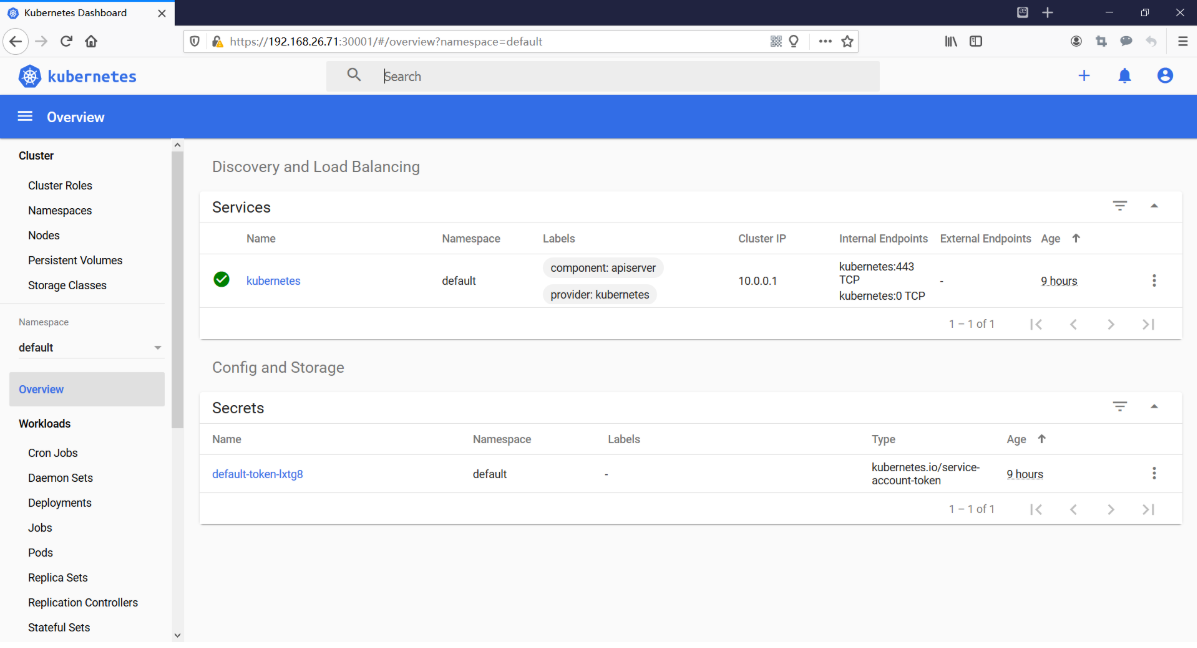

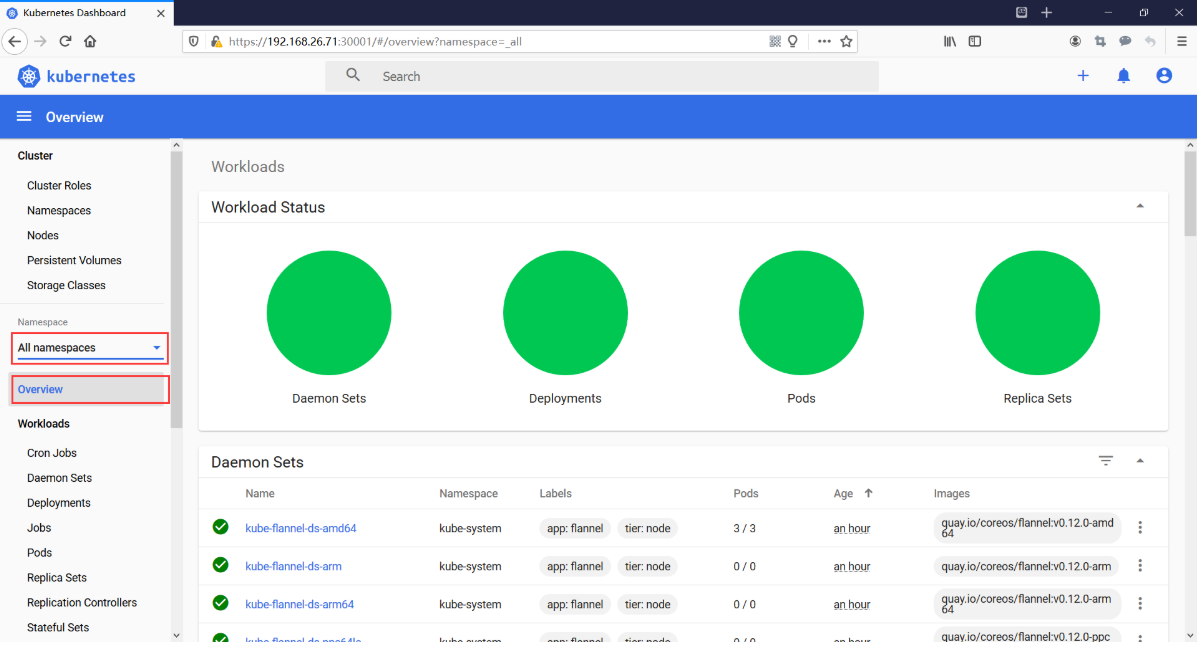

VI. Deploy Dashboard and CoreDNS

6.1 Deploy the Dashboard

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

If recommended.yaml cannot be downloaded, find a copy of kubernetes-dashboard.yaml elsewhere and adjust it as described below.

Pull the required images:

    [root@vms71 soft]# grep image kubernetes-dashboard.yaml
    image: kubernetesui/dashboard:v2.0.0-beta8
    image: kubernetesui/metrics-scraper:v1.0.1

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      type: NodePort
      selector:
        k8s-app: kubernetes-dashboard

    [root@vms71 soft]# kubectl apply -f kubernetes-dashboard.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created
    [root@vms71 soft]# kubectl get pods,svc -n kubernetes-dashboard
    NAME                                             READY   STATUS    RESTARTS   AGE
    pod/dashboard-metrics-scraper-694557449d-szgz6   1/1     Running   0          2m59s
    pod/kubernetes-dashboard-7548ffc8b7-v6bf5        1/1     Running   0          2m59s
    NAME                                TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    service/dashboard-metrics-scraper   ClusterIP   10.0.0.6     <none>        8000/TCP        2m59s
    service/kubernetes-dashboard        NodePort    10.0.0.2     <none>        443:30001/TCP   2m59s

Access URL: https://NodeIP:30001

Create a service account and bind it to the built-in cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

[root@vms71 soft]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')Name: dashboard-admin-token-d5ffvNamespace: kube-systemLabels: <none>Annotations: kubernetes.io/service-account.name: dashboard-adminkubernetes.io/service-account.uid: cc5ad18b-5dae-43be-8db0-594a53668ad6Type: kubernetes.io/service-account-tokenData====ca.crt: 1359 bytesnamespace: 11 bytestoken: eyJhbGciOiJSUzI1NiIsImtpZCI6IjQyVldoSXVBSjF3b05xYm91NEhzYUwzdFdrTlNQcnNDeWM2d3QyeUdVRHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZDVmZnYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiY2M1YWQxOGItNWRhZS00M2JlLThkYjAtNTk0YTUzNjY4YWQ2Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.CtjxzQRZ95_ViRAwK3sslwktWMwiFyb5wYCmS0qOuMU4Owt0rPqldPQXibjEIM11LZHRMYPVZhy2Lv3m0_CW8kNvwBwf3RWvyeILbRIN32h2RnI91YuzHYbW8Ibtz_vGdcFIpwT_Rcyf-fyGzW7vAtOp7OLvNe_fI4Ml-OQS0VOR0PzLQCveR3JIe4eSpbQ2nLm89Oh9j1enLGWJihPdpvmua9AxFb7blXq98akoNCa9KdQjfqKH8QBXEV6nVaauuhTN2fhD2C-7dgqlYb8_1Ctn7xLc4OWCjLrTdeWJ5rYSGzyuK2bOmFwurKtAGUwNb4at4eCLcgYvmRdwjBCCIA

Log in to the Dashboard with the token from the output: https://192.168.26.71:30001

Click Sign in

Then browse Namespace, Overview, and Workloads

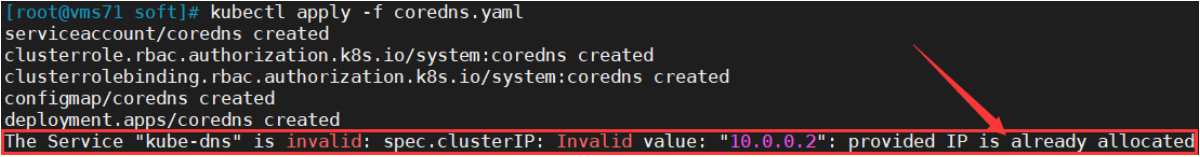

6.2 Deploy CoreDNS

CoreDNS resolves Service names inside the cluster. The DNS service watches the Kubernetes API and creates a DNS record for every Service.

Source: https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.base

(Copy the content and then modify it.)

Pull the required image:

    [root@vms71 soft]# grep image coredns.yaml
    image: k8s.gcr.io/coredns:1.6.7

If the image cannot be pulled directly, change it to the following image and pull that instead:

    docker pull registry.aliyuncs.com/google_containers/coredns:1.6.7

Edit coredns.yaml and replace three placeholder variables:

    __PILLAR__DNS__DOMAIN__          ->  cluster.local
    __PILLAR__DNS__MEMORY__LIMIT__   ->  70Mi
    __PILLAR__DNS__SERVER__          ->  10.0.0.2

The DNS server address must match clusterDNS in /opt/kubernetes/cfg/kubelet-config.yml.
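The three substitutions can be applied with sed. This is a sketch; the function name fill_coredns_placeholders is made up, and the demo feeds it three sample lines rather than the real template.

```shell
# fill_coredns_placeholders: reads the template on stdin and writes the
# result to stdout, with the three values used in this article.
fill_coredns_placeholders() {
  sed -e 's/__PILLAR__DNS__DOMAIN__/cluster.local/g' \
      -e 's/__PILLAR__DNS__MEMORY__LIMIT__/70Mi/g' \
      -e 's/__PILLAR__DNS__SERVER__/10.0.0.2/g'
}

# Demo on sample lines that contain the placeholders.
printf '%s\n' \
  'kubernetes __PILLAR__DNS__DOMAIN__ in-addr.arpa ip6.arpa {' \
  'memory: __PILLAR__DNS__MEMORY__LIMIT__' \
  'clusterIP: __PILLAR__DNS__SERVER__' \
  | fill_coredns_placeholders
```

With the downloaded template, the call would be fill_coredns_placeholders < coredns.yaml.base > coredns.yaml.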

kubectl apply -f coredns.yamlserviceaccount/coredns createdclusterrole.rbac.authorization.k8s.io/system:coredns createdclusterrolebinding.rbac.authorization.k8s.io/system:coredns createdconfigmap/coredns createddeployment.apps/coredns createdservice/kube-dns created

如果出现以下错误信息:

处理办法:找出谁占用了IP10.0.0.2

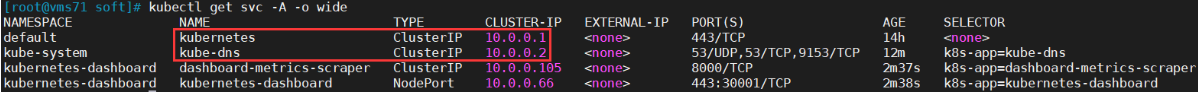

[root@vms71 soft]# kubectl get svc -A -o wide

先删除:

[root@vms71 soft]# kubectl delete -f kubernetes-dashboard.yaml

[root@vms71 soft]# kubectl delete -f coredns.yaml

再按以下顺序创建:

[root@vms71 soft]# kubectl apply -f coredns.yaml

[root@vms71 soft]# kubectl apply -f kubernetes-dashboard.yaml

kubectl get pods -n kube-systemNAME READY STATUS RESTARTS AGEcoredns-765cd546fc-vnjk9 1/1 Running 0 84skube-flannel-ds-amd64-96vrz 1/1 Running 0 139mkube-flannel-ds-amd64-qsp5g 1/1 Running 0 170mkube-flannel-ds-amd64-slmtp 1/1 Running 0 138m

DNS解析测试:

kubectl run -it --rm dns-test --image=busybox:1.28.4 shIf you don't see a command prompt, try pressing enter./ # nslookup kubernetesServer: 10.0.0.2Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.localName: kubernetesAddress 1: 10.0.0.1 kubernetes.default.svc.cluster.local

解析没问题。

至此,单master的k8s集群完美创建!

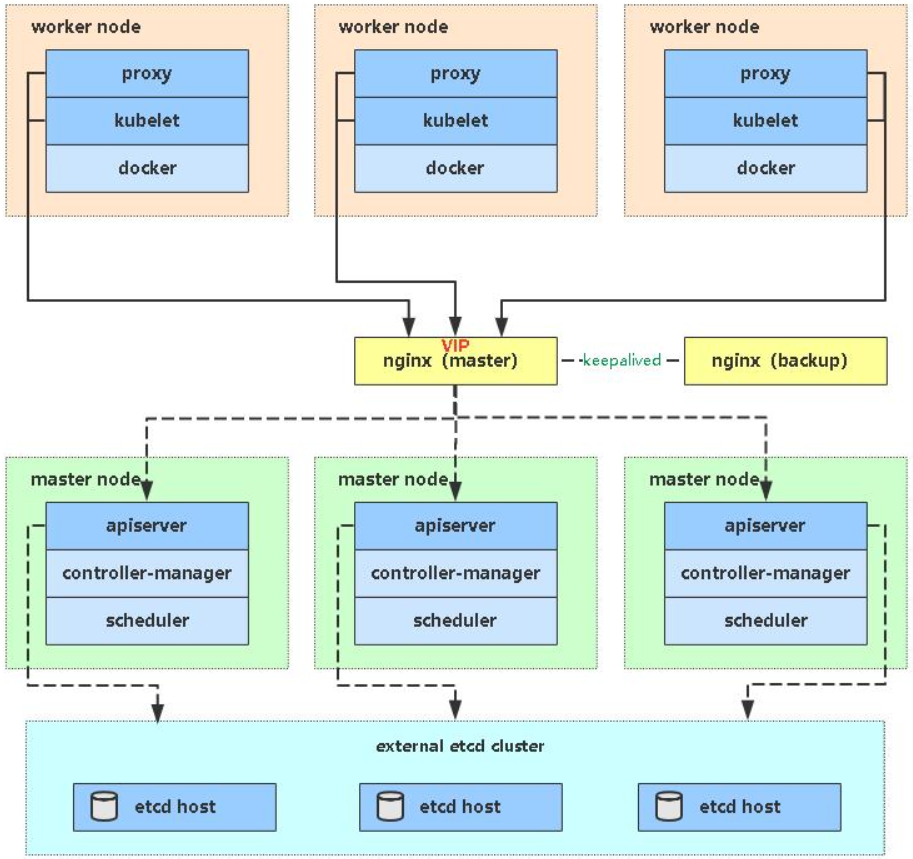

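VII. High-Availability Architecture (Scaling Out to Multiple Masters)

As a container cluster system, Kubernetes gives Pods self-healing through health checks and restart policies, spreads Pods across nodes through its scheduling algorithm, keeps the desired number of replicas, and starts replacement Pods on other Nodes when a Node fails. Together these provide high availability at the application layer.

For the Kubernetes cluster itself, high availability has two further aspects: the Etcd database and the Kubernetes Master components. Etcd is already highly available because we built it as a three-node cluster, so this section explains and implements high availability for the Master node.

The Master node acts as the control center and keeps the whole cluster healthy by communicating continuously with the kubelet and kube-proxy on the worker nodes. If the Master node fails, no cluster management is possible through kubectl or the API.

The Master node runs three main services: kube-apiserver, kube-controller-manager, and kube-scheduler. kube-controller-manager and kube-scheduler already achieve high availability on their own through leader election, so Master high availability is mainly about kube-apiserver. That component serves an HTTP API, so making it highly available is much like doing so for a web server: put a load balancer in front of it, and scale it out horizontally as needed.

Multi-Master architecture diagram: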

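7.1 Install Docker (192.168.26.74)

Same as the earlier Docker installation; not repeated here.

7.2 Deploy the Master2 Node (192.168.26.74)

Master2 is set up exactly like the already deployed Master1, so we only need to copy all Kubernetes files over from Master1, change the server IP and hostname, and start the services.

1. Create the etcd certificate directory

Create the etcd certificate directory on Master2 (run on Master2):

mkdir -p /opt/etcd/ssl

2. Copy files (run on Master1)

Copy all Kubernetes files and the etcd certificates from Master1 to Master2:

    scp -r /opt/kubernetes root@192.168.26.74:/opt
    scp -r /opt/cni/ root@192.168.26.74:/opt
    scp -r /opt/etcd/ssl root@192.168.26.74:/opt/etcd
    scp /usr/lib/systemd/system/kube* root@192.168.26.74:/usr/lib/systemd/system
    scp /usr/bin/kubectl root@192.168.26.74:/usr/bin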

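3. Delete certificate files

Delete the kubelet certificates and kubeconfig file (run on Master2). They were issued to Master1's kubelet; Master2 gets its own once its certificate request is approved:

    rm -f /opt/kubernetes/ssl/kubelet*
    rm -f /opt/kubernetes/cfg/kubelet.kubeconfig

4. Update the IP and hostname in the configuration files

Change the apiserver, kubelet and kube-proxy configuration files to the local IP and hostname (run on Master2):

    vi /opt/kubernetes/cfg/kube-apiserver.conf
    ...
    --bind-address=192.168.26.74 \
    --advertise-address=192.168.26.74 \
    ...

    vi /opt/kubernetes/cfg/kubelet.conf
    --hostname-override=k8s-master2

    vi /opt/kubernetes/cfg/kube-proxy-config.yml
    hostnameOverride: k8s-master2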
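If you prefer not to edit kube-apiserver.conf by hand, the two address flags can be rewritten with sed. This is a sketch only: set_apiserver_ip is a made-up helper name, and it assumes the flags are written as --bind-address=IP and --advertise-address=IP as shown above.

```shell
# Rewrite --bind-address and --advertise-address in kube-apiserver.conf text
# read from stdin so that both point at the IP given as $1.
# Other flags (for example --etcd-servers) are left untouched.
# set_apiserver_ip is a hypothetical helper name.
set_apiserver_ip() {
    sed -E "s/(--(bind|advertise)-address=)[0-9.]+/\1$1/g"
}

# Example usage on Master2 (writes a new file so the result can be reviewed first):
#   set_apiserver_ip 192.168.26.74 < /opt/kubernetes/cfg/kube-apiserver.conf > /tmp/kube-apiserver.conf
#   diff /opt/kubernetes/cfg/kube-apiserver.conf /tmp/kube-apiserver.conf
```

The hostname overrides in kubelet.conf and kube-proxy-config.yml still need to be changed as shown above.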

5. Start the services and enable them at boot

(Run on Master2)

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl start kube-controller-manager
    systemctl start kube-scheduler
    systemctl start kubelet
    systemctl start kube-proxy
    systemctl enable kube-apiserver
    systemctl enable kube-controller-manager
    systemctl enable kube-scheduler
    systemctl enable kubelet
    systemctl enable kube-proxy

6. Check the cluster status

(Run on Master2)

    kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-2               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}

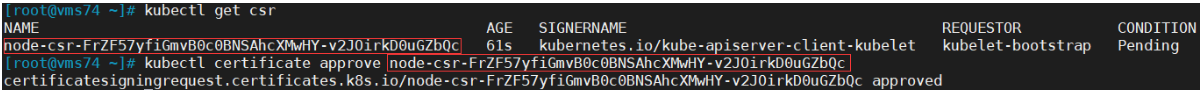

7. Approve the kubelet certificate request

(Run on Master2)

    kubectl get csr
    NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
    node-csr-FrZF57yfiGmvB0c0BNSAhcXMwHY-v2JOirkD0uGZbQc   61s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

    kubectl certificate approve node-csr-FrZF57yfiGmvB0c0BNSAhcXMwHY-v2JOirkD0uGZbQc

    kubectl get node
    NAME          STATUS   ROLES    AGE    VERSION
    k8s-master    Ready    <none>   31h    v1.18.4
    k8s-master2   Ready    <none>   111s   v1.18.4
    k8s-node1     Ready    <none>   23h    v1.18.4
    k8s-node2     Ready    <none>   23h    v1.18.4
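Copying the long CSR name by hand is error-prone. The sketch below picks pending requests out of the kubectl get csr listing; pending_csrs is a made-up helper name, and it assumes CONDITION is the last column, as in the output above.

```shell
# Print the names of CSRs whose CONDITION column (the last field of
# `kubectl get csr` output, read from stdin) is exactly "Pending".
# pending_csrs is a hypothetical helper name.
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example usage on a master node; review the list before approving anything:
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```

Approving every pending request blindly is only reasonable in a lab like this one, where you know which node just joined.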

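7.3 Deploy the Nginx Load Balancer

kube-apiserver high-availability architecture diagram:

- Nginx is a mainstream web server and reverse proxy; here its layer-4 proxying is used to load-balance the apiservers.

- Keepalived is a mainstream high-availability tool that implements active/standby failover by binding a VIP. In the topology above, Keepalived decides whether to fail over (move the VIP) based on whether Nginx is running. For example, when Nginx on the primary node goes down, the VIP is automatically bound on the backup node, so the VIP stays reachable and Nginx is highly available.

1. Install the packages (primary and backup)

192.168.26.81, 192.168.26.82

    yum install epel-release -y
    yum install nginx keepalived -y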

2. Nginx configuration file (identical on primary and backup)

192.168.26.81, 192.168.26.82

    cat > /etc/nginx/nginx.conf << "EOF"
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 1024;
    }

    # Layer-4 load balancing for the apiserver on the two masters
    stream {

        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

        access_log /var/log/nginx/k8s-access.log main;

        upstream k8s-apiserver {
            server 192.168.26.71:6443; # Master1 APISERVER IP:PORT
            server 192.168.26.74:6443; # Master2 APISERVER IP:PORT
        }

        server {
            listen 6443;
            proxy_pass k8s-apiserver;
        }
    }

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        server {
            listen 80 default_server;
            server_name _;

            location / {
            }
        }
    }
    EOF

3. keepalived configuration file (Nginx Master)

192.168.26.81, configured with the VIP 192.168.26.88

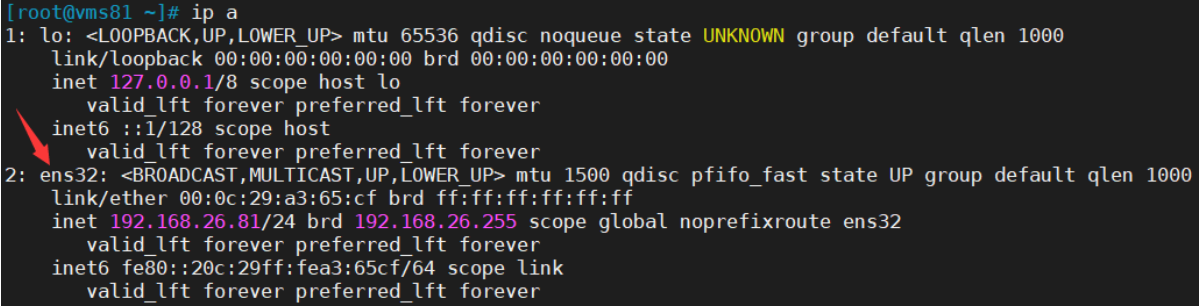

    cat > /etc/keepalived/keepalived.conf << EOF
    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_MASTER
        script_user root
        enable_script_security
    }

    vrrp_script check_nginx {
        script "/etc/keepalived/check_nginx.sh"
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens32
        virtual_router_id 51 # VRRP router ID, unique to each instance
        priority 100 # priority; set 90 on the backup server
        advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        # virtual IP
        virtual_ipaddress {
            192.168.26.88/24
        }
        track_script {
            check_nginx
        }
    }
    EOF

- vrrp_script: the script that checks whether nginx is running (keepalived uses its result to decide whether to fail over)

- virtual_ipaddress: the virtual IP (VIP)

- state MASTER in the configuration above marks this node as the primary

- interface ens32 in the configuration above must match the host's network interface name

- The nginx health-check script referenced in the configuration above:

    cat > /etc/keepalived/check_nginx.sh << "EOF"
    #!/bin/bash
    count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

    if [ "$count" -eq 0 ];then
        exit 1
    else
        exit 0
    fi
    EOF
    chmod +x /etc/keepalived/check_nginx.sh

4. keepalived configuration file (Nginx Backup)

192.168.26.82

    cat > /etc/keepalived/keepalived.conf << EOF
    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_BACKUP
        script_user root
        enable_script_security
    }

    vrrp_script check_nginx {
        script "/etc/keepalived/check_nginx.sh"
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens32
        virtual_router_id 51 # VRRP router ID, unique to each instance
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.26.88/24
        }
        track_script {
            check_nginx
        }
    }
    EOF

- state BACKUP in the configuration above marks this node as the backup

- interface ens32 in the configuration above must match the host's network interface name

- The nginx health-check script referenced in the configuration above:

    cat > /etc/keepalived/check_nginx.sh << "EOF"
    #!/bin/bash
    count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

    if [ "$count" -eq 0 ];then
        exit 1
    else
        exit 0
    fi
    EOF
    chmod +x /etc/keepalived/check_nginx.sh

Note: keepalived decides whether to fail over from the script's exit status (0 means healthy, non-zero means unhealthy).
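The two keepalived.conf files above differ only in router_id, state and priority. As a rough sketch, both can be generated from one template so they cannot drift apart. render_keepalived is a made-up helper name, and this version leaves out the notification_email settings, which are assumed not to be needed in a lab setup.

```shell
# Print a keepalived.conf for the given role on stdout.
#   $1 role: "master" or "backup"   $2 NIC name   $3 VIP in CIDR form
# The master gets priority 100 and the backup 90, as in this article.
# render_keepalived is a hypothetical helper name.
render_keepalived() {
    case "$1" in
        master) state=MASTER; priority=100 ;;
        backup) state=BACKUP; priority=90 ;;
        *) echo "role must be master or backup" >&2; return 1 ;;
    esac
    cat <<EOF
global_defs {
    router_id NGINX_${state}
    script_user root
    enable_script_security
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface $2
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $3
    }
    track_script {
        check_nginx
    }
}
EOF
}

# Example usage:
#   render_keepalived master ens32 192.168.26.88/24 > /etc/keepalived/keepalived.conf   # on 192.168.26.81
#   render_keepalived backup ens32 192.168.26.88/24 > /etc/keepalived/keepalived.conf   # on 192.168.26.82
```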

5. Start the services and enable them at boot

    systemctl daemon-reload
    systemctl start nginx
    systemctl start keepalived
    systemctl enable nginx
    systemctl enable keepalived

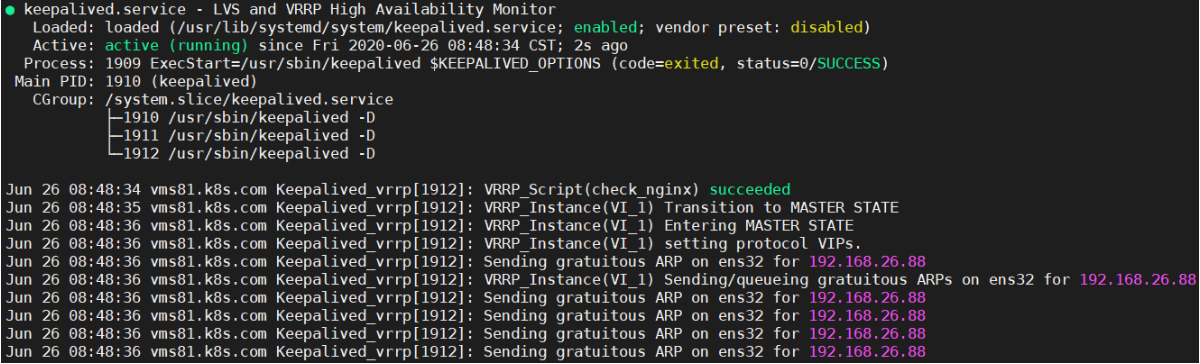

6. Check keepalived status

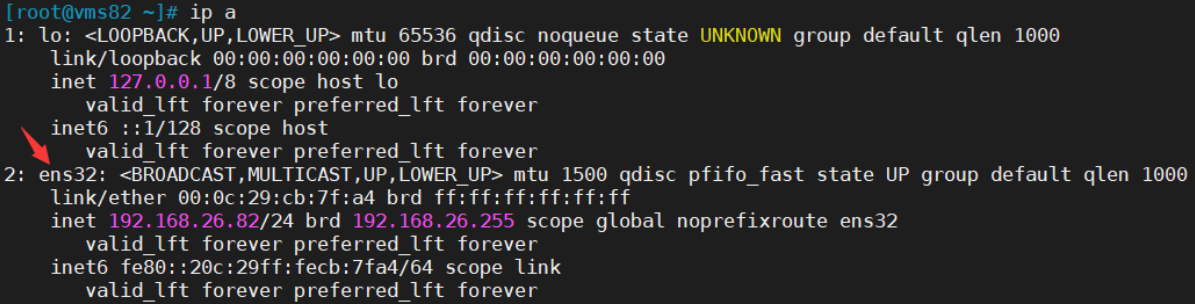

192.168.26.81 [root@vms81 ~]# systemctl status keepalived

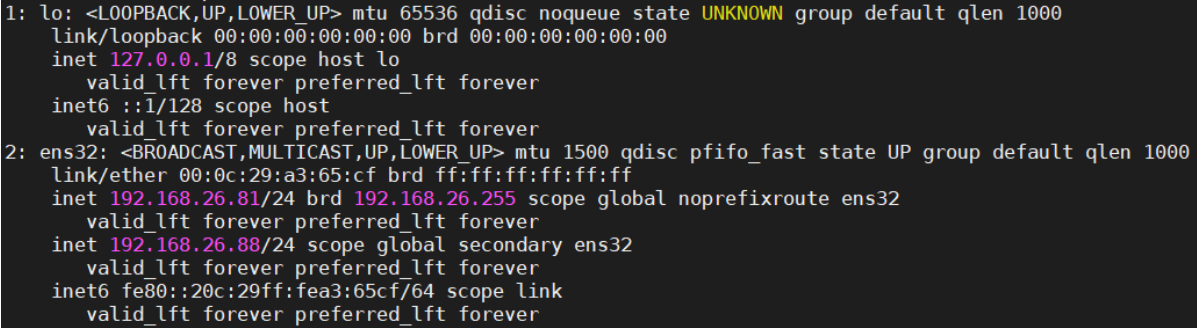

ip a

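The output shows that the virtual IP 192.168.26.88 is bound to the ens32 interface, so keepalived is working correctly.

7. Nginx + Keepalived failover test

Stop Nginx on the primary node and check whether the VIP moves to the backup server.

On the Nginx Master, run pkill nginx: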

[root@vms81 ~]# pkill nginx

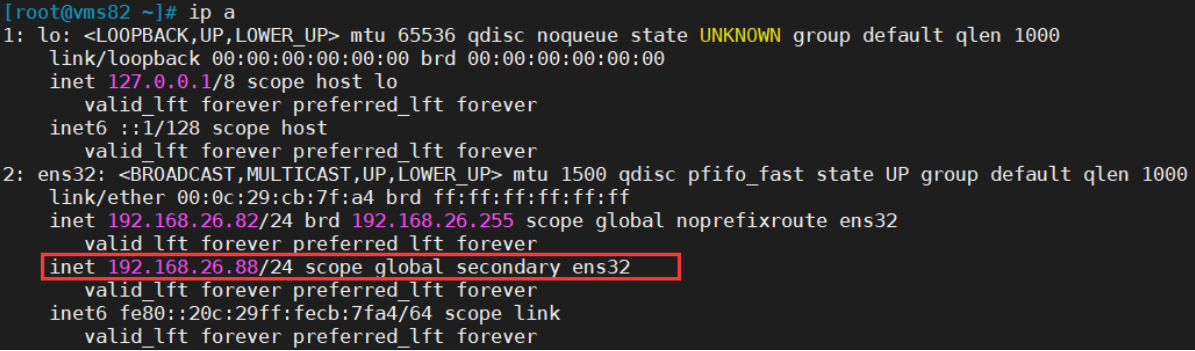

On the Nginx Backup, ip a shows that the VIP is now bound there:

[root@vms82 ~]# ip a

Start nginx again on the Nginx Master and the VIP switches back to the Master.

8. Test access through the load balancer

From any node in the cluster, query the Kubernetes version with curl through the VIP:

    [root@vms71 soft]# curl -k https://192.168.26.88:6443/version
    {
      "major": "1",
      "minor": "18",
      "gitVersion": "v1.18.4",
      "gitCommit": "c96aede7b5205121079932896c4ad89bb93260af",
      "gitTreeState": "clean",
      "buildDate": "2020-06-17T11:33:59Z",
      "goVersion": "go1.13.9",
      "compiler": "gc",
      "platform": "linux/amd64"
    }
    [root@vms74 ~]# curl -k https://192.168.26.88:6443/version
    {
      "major": "1",
      "minor": "18",
      "gitVersion": "v1.18.4",
      "gitCommit": "c96aede7b5205121079932896c4ad89bb93260af",
      "gitTreeState": "clean",
      "buildDate": "2020-06-17T11:33:59Z",
      "goVersion": "go1.13.9",
      "compiler": "gc",
      "platform": "linux/amd64"
    }

The version information is returned correctly, so the load balancer is working. The request path is: curl -> VIP (nginx) -> apiserver

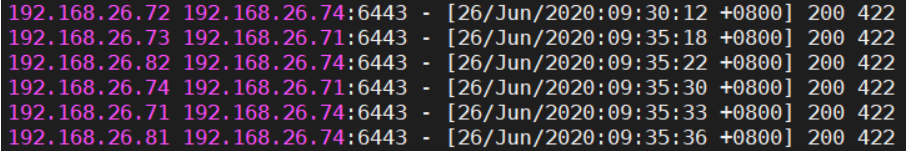

The Nginx log also shows which apiserver each request was forwarded to:

    [root@vms81 ~]# tail /var/log/nginx/k8s-access.log -f
    192.168.26.71 192.168.26.71:6443 - [26/Jun/2020:09:12:50 +0800] 200 422
    192.168.26.74 192.168.26.74:6443 - [26/Jun/2020:09:13:12 +0800] 200 422
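To see at a glance how connections are spread across the two apiservers, the log can be summarized with awk. This sketch assumes the stream log_format defined earlier, where the second field is $upstream_addr; count_by_upstream is a made-up helper name.

```shell
# Count proxied connections per upstream from the stream access log on stdin.
# With the log_format used in this article, field 2 is $upstream_addr.
# count_by_upstream is a hypothetical helper name.
count_by_upstream() {
    awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' | sort
}

# Example usage on the load balancer:
#   count_by_upstream < /var/log/nginx/k8s-access.log
```

With the default round-robin balancing, the two counts should stay close to each other as more requests arrive.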

[root@vms72 ~]# curl -k https://192.168.26.88:6443/version

[root@vms73 ~]# curl -k https://192.168.26.88:6443/version

[root@vms82 ~]# curl -k https://192.168.26.88:6443/version

[root@vms74 ~]# curl -k https://192.168.26.88:6443/version

[root@vms71 ~]# curl -k https://192.168.26.88:6443/version

[root@vms81 ~]# curl -k https://192.168.26.88:6443/version

[root@vms81 ~]# tail /var/log/nginx/k8s-access.log -f

We are not done yet: the most important step comes next.

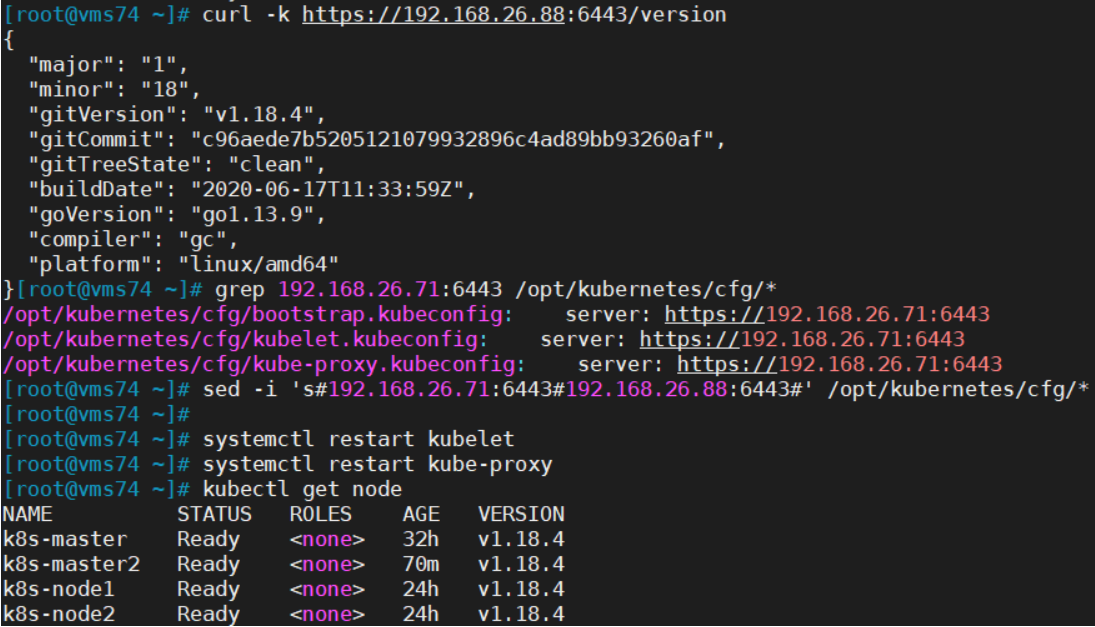

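7.4 Point All Worker Nodes at the LB VIP

Although we have added Master2 and the load balancer, the cluster was scaled out from a single-master setup, so every Node component still connects to Master1. Unless they are switched to the VIP so that traffic goes through the load balancer, Master1 remains a single point of failure.

The next step is therefore to change the Node component configuration files from 192.168.26.71 to 192.168.26.88 (the VIP) on these machines:

| Role | IP |
|---|---|
| k8s-master1 | 192.168.26.71 |
| k8s-master2 | 192.168.26.74 |
| k8s-node1 | 192.168.26.72 |
| k8s-node2 | 192.168.26.73 |

These are the nodes listed by kubectl get node.

Run the following on every node above:

    grep 192.168.26.71:6443 /opt/kubernetes/cfg/*
    sed -i 's#192.168.26.71:6443#192.168.26.88:6443#' /opt/kubernetes/cfg/*
    grep 192.168.26.88:6443 /opt/kubernetes/cfg/*
    systemctl restart kubelet
    systemctl restart kube-proxy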
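A slightly more defensive variant of the sed step is sketched below. It escapes the dots in the old address so they match literally, replaces every occurrence on a line, and keeps a .bak copy of each file it changes. repoint_apiserver is a made-up helper name, not something the original steps rely on.

```shell
# Replace the old apiserver endpoint $1 with the new endpoint $2 in the files
# given as the remaining arguments, keeping a .bak copy of each changed file.
# Dots in $1 are escaped so they match literally rather than any character.
# repoint_apiserver is a hypothetical helper name.
repoint_apiserver() {
    old=$(printf '%s' "$1" | sed 's/\./\\./g')
    new=$2
    shift 2
    for f in "$@"; do
        if grep -q "$old" "$f"; then
            sed -i.bak "s#${old}#${new}#g" "$f"
        fi
    done
}

# Example usage on each node, followed by the same restarts as above:
#   repoint_apiserver 192.168.26.71:6443 192.168.26.88:6443 /opt/kubernetes/cfg/*
#   systemctl restart kubelet
#   systemctl restart kube-proxy
```

The .bak files end up next to the originals in /opt/kubernetes/cfg, so remove them once the nodes are back in the Ready state.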

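Check the node status:

    kubectl get node
    NAME          STATUS   ROLES    AGE   VERSION
    k8s-master    Ready    <none>   32h   v1.18.4
    k8s-master2   Ready    <none>   68m   v1.18.4
    k8s-node1     Ready    <none>   24h   v1.18.4
    k8s-node2     Ready    <none>   24h   v1.18.4

A complete highly available Kubernetes cluster is now deployed!

PS: Public clouds generally do not support keepalived. There you can use the provider's own load balancer product instead (an internal one is enough, and it is usually free). The architecture is the same as above: load-balance directly across the kube-apiserver instances on the masters.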

- END -

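Switching kube-proxy to ipvs mode

kube-proxy supports two modes, iptables and ipvs. ipvs mode was introduced in Kubernetes v1.8, reached beta in v1.9 and became generally available in v1.11. iptables mode was added in v1.1 and has been kube-proxy's default mode since v1.2. Both ipvs and iptables are built on netfilter, so how do the two modes differ?

- ipvs offers better scalability and performance for large clusters.

- ipvs supports more sophisticated load-balancing algorithms than iptables (least load, least connections, weighted, and so on).

- ipvs supports server health checks, connection retries and similar features.

- ipset sets can be modified dynamically, even while iptables rules are using them.

ipvs depends on iptables

ipvs cannot do packet filtering, SNAT or masquerading, so in some scenarios (such as the NodePort implementation) it still has to be combined with iptables. ipvs uses ipset to store the source or destination addresses of traffic that must be dropped or masqueraded, which keeps the number of iptables rules constant. Suppose you need to block tens of thousands of IPs from reaching a server: with iptables alone you add rules one by one and end up with a huge rule set, whereas with ipset you only add the addresses (or networks) to a set, and a handful of iptables rules that reference the set achieves the same goal.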
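The blocking scenario above can be sketched as follows. This is an illustration only and is not something kube-proxy needs you to do: the set name blacklist and the file banned.txt are made up, and the helper ipset_restore_script merely prints input for ipset restore.

```shell
# Turn a list of IPs or CIDRs on stdin (one per line; blank lines and lines
# starting with "#" are ignored) into input for `ipset restore`.
# $1 is the set name. ipset_restore_script is a hypothetical helper name.
ipset_restore_script() {
    printf 'create %s hash:net\n' "$1"
    awk -v set="$1" 'NF && $1 !~ /^#/ { print "add", set, $1 }'
}

# Example usage as root ("blacklist" and banned.txt are made-up names):
#   ipset_restore_script blacklist < banned.txt | ipset restore
#   iptables -I INPUT -m set --match-set blacklist src -j DROP
```

However many addresses the set holds, iptables still contains just the one rule that references it.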

Install ipset and ipvsadm (all nodes)

    yum -y install ipset ipvsadm
    yum install ipvsadm ipset sysstat conntrack libseccomp -y   # use this if the command above fails

Add the modules to load (all nodes)

    cat >/etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF

Set permissions, run the script, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && sh /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Check again that the modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack_ipv4
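Reading the grep output by eye makes it easy to overlook one module. The sketch below reports only the modules that are missing; missing_modules is a made-up helper name, and it reads ordinary lsmod output on stdin.

```shell
# Read `lsmod` output on stdin and print every module named in the arguments
# that is NOT loaded. Prints nothing when all of them are present.
# missing_modules is a hypothetical helper name.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
    done
}

# Example usage on each node:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```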

Reference 1 (if the steps above do not work):

    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack_ipv4"
    for kernel_module in \${ipvs_modules}; do
        /usr/sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1
        if [ \$? -eq 0 ]; then
            /usr/sbin/modprobe \${kernel_module}
        fi
    done
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

Edit the kube-proxy configuration file kube-proxy-config.yml and set mode to ipvs

(All nodes)

Add this setting:

mode: "ipvs"

    vi /opt/kubernetes/cfg/kube-proxy-config.yml
    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: k8s-master2
    clusterCIDR: 10.0.0.0/24
    mode: "ipvs"
    ipvs:
      scheduler: "rr"

Reference configuration file:

    hostnameOverride: k8s-m1
    iptables:
      masqueradeAll: true
      masqueradeBit: 14
      minSyncPeriod: 0s
      syncPeriod: 30s
    ipvs:
      excludeCIDRs: null
      minSyncPeriod: 0s
      scheduler: ""
      syncPeriod: 30s
    kind: KubeProxyConfiguration
    metricsBindAddress: 192.168.0.200:10249
    mode: "ipvs"
    nodePortAddresses: null
    oomScoreAdj: -999
    portRange: ""
    resourceContainer: /kube-proxy
    udpIdleTimeout: 250ms

Restart the service and verify:

    systemctl daemon-reload
    systemctl restart kube-proxy
    systemctl status kube-proxy
    ipvsadm -L -n

When a ClusterIP Service is created, the IPVS proxier does three things:

- Makes sure a dummy interface exists on the node (kube-ipvs0 by default)

- Binds the Service IP address to the dummy interface

- Creates an IPVS virtual server for each Service IP address

    [root@vms74 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   2d20h
    [root@vms74 ~]# kubectl describe svc kubernetes
    Name:              kubernetes
    Namespace:         default
    Labels:            component=apiserver
                       provider=kubernetes
    Annotations:       <none>
    Selector:          <none>
    Type:              ClusterIP
    IP:                10.0.0.1
    Port:              https  443/TCP
    TargetPort:        6443/TCP
    Endpoints:         192.168.26.71:6443,192.168.26.74:6443
    Session Affinity:  None
    Events:            <none>

    [root@vms74 ~]# ip -4 a
    ......
    8: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
        inet 10.0.0.227/32 brd 10.0.0.227 scope global kube-ipvs0
           valid_lft forever preferred_lft forever
        inet 10.0.0.37/32 brd 10.0.0.37 scope global kube-ipvs0
           valid_lft forever preferred_lft forever
        inet 10.0.0.1/32 brd 10.0.0.1 scope global kube-ipvs0
           valid_lft forever preferred_lft forever
        inet 10.0.0.2/32 brd 10.0.0.2 scope global kube-ipvs0
           valid_lft forever preferred_lft forever

    [root@vms74 ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.0.0.1:443 rr
      -> 192.168.26.71:6443           Masq    1      0          0
      -> 192.168.26.74:6443           Masq    1      0          0
    TCP  10.0.0.2:53 rr
      -> 10.244.3.3:53                Masq    1      0          0
    TCP  10.0.0.2:9153 rr
      -> 10.244.3.3:9153              Masq    1      0          0
    TCP  10.0.0.37:443 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    TCP  10.0.0.227:8000 rr
      -> 10.244.0.16:8000             Masq    1      0          0
    TCP  127.0.0.1:30001 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    TCP  172.17.0.1:30001 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    TCP  192.168.26.74:30001 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    TCP  10.244.3.0:30001 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    TCP  10.244.3.1:30001 rr
      -> 10.244.0.15:8443             Masq    1      0          0
    UDP  10.0.0.2:53 rr
      -> 10.244.3.3:53                Masq    1      0          0
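On a cluster with many Services the ipvsadm listing becomes hard to scan. The sketch below condenses it to one line per virtual server with the number of real servers behind it. ipvs_summary is a made-up helper name, and it assumes the ipvsadm -ln layout shown above.

```shell
# Summarize `ipvsadm -ln` output read from stdin: for each virtual server,
# print protocol, address:port, scheduler and the number of real servers.
# ipvs_summary is a hypothetical helper name.
ipvs_summary() {
    awk '
        $1 == "TCP" || $1 == "UDP" {
            if (vs != "") print vs, n      # flush the previous virtual server
            vs = $1 " " $2 " " $3; n = 0
            next
        }
        # "->" lines after a virtual server are its real servers
        $1 == "->" && vs != "" && $2 != "RemoteAddress:Port" { n++ }
        END { if (vs != "") print vs, n }
    '
}

# Example usage on a node running kube-proxy in ipvs mode:
#   ipvsadm -ln | ipvs_summary
```

A virtual server reported with 0 real servers usually means the Service currently has no ready endpoints.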

Deleting a Kubernetes Service triggers deletion of the corresponding IPVS virtual server, its IPVS real servers, and the IP address bound to the dummy interface.

Reference: system parameters to set in /etc/sysctl.d/k8s.conf on every machine

    cat <<EOF > /etc/sysctl.d/k8s.conf
    # https://github.com/moby/moby/issues/31208
    # ipvsadm -l --timeout
    # Works around long-lived connection timeouts in ipvs mode; any value below 900 is fine
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_intvl = 30
    net.ipv4.tcp_keepalive_probes = 10
    net.ipv6.conf.all.disable_ipv6 = 1
    net.ipv6.conf.default.disable_ipv6 = 1
    net.ipv6.conf.lo.disable_ipv6 = 1
    net.ipv4.neigh.default.gc_stale_time = 120
    net.ipv4.conf.all.rp_filter = 0
    net.ipv4.conf.default.rp_filter = 0
    net.ipv4.conf.default.arp_announce = 2
    net.ipv4.conf.lo.arp_announce = 2
    net.ipv4.conf.all.arp_announce = 2
    net.ipv4.ip_forward = 1
    net.ipv4.tcp_max_tw_buckets = 5000
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 1024
    net.ipv4.tcp_synack_retries = 2
    # Pass bridged traffic to ip6tables, iptables and arptables for processing
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-arptables = 1
    net.netfilter.nf_conntrack_max = 2310720
    fs.inotify.max_user_watches=89100
    fs.may_detach_mounts = 1
    fs.file-max = 52706963
    fs.nr_open = 52706963
    vm.swappiness = 0
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    EOF