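- Core add-on: CNI network plugin Flannel

- Core add-on: CoreDNS service discovery

- Core add-on: Ingress (service exposure) controller Traefik

- Core add-on: Dashboard (the general-purpose web UI for Kubernetes clusters)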

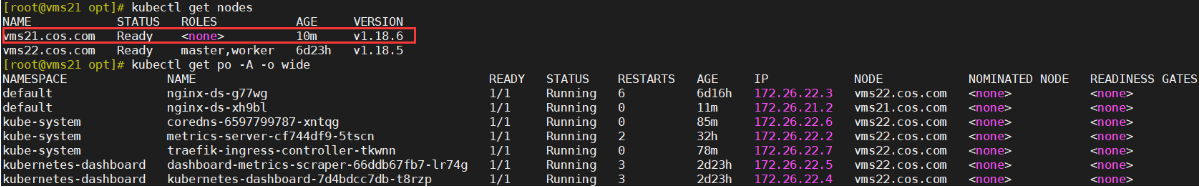

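- Smooth upgrade to Kubernetes v1.18.6

k8s-centos8u2 cluster deployment 03, core add-ons: CNI Flannel, CoreDNS, Ingress Traefik, Dashboard

After every shutdown and restart of this lab environment, check the following:

- keepalived is running (`systemctl status keepalived`) and the VIP is up (`ip addr` should list 192.168.26.10).

- Harbor started correctly: run `docker-compose ps` in its startup directory.

- `supervisorctl status`: check the state of each supervised process.

- Docker and the k8s cluster are healthy.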
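The VIP check from the list above can be scripted. The following is a minimal sketch, not part of the original procedure: `vip_present` is a hypothetical helper that reads `ip addr` style text on stdin, and the sample text is illustrative only.

```shell
#!/bin/sh
# Sketch: decide from `ip addr` text whether the keepalived VIP is bound.
# vip_present is a hypothetical helper, not part of keepalived.
vip_present() {
    # $1 = VIP to look for; the `ip addr` output is read from stdin
    grep -Eq "inet $1/[0-9]+ "
}

# Sample text shaped like `ip -o -4 addr show` output (illustrative values)
sample='2: ens160    inet 192.168.26.21/24 brd 192.168.26.255 scope global ens160
2: ens160    inet 192.168.26.10/32 scope global ens160'

if printf '%s\n' "$sample" | vip_present 192.168.26.10; then
    echo "VIP 192.168.26.10 is bound"
else
    echo "VIP 192.168.26.10 is missing"
fi
```

On a live host the sample would be replaced by `ip -o -4 addr show | vip_present 192.168.26.10`.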

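Core add-on: CNI network plugin Flannel

1 CNI network plugins

- Kubernetes defines a network model but leaves the actual implementation of pod-to-pod communication to a CNI network plugin.

- Commonly used CNI network plugins include Flannel, Calico, Canal and Contiv. Flannel and Calico reportedly account for close to 80% of deployments, with Flannel slightly ahead; Calico, however, can enforce Kubernetes network policies.

- This deployment uses Flannel (v0.12.0) as the network plugin. Hosts involved: vms21.cos.com and vms22.cos.com.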

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| vms21.cos.com | flannel | 192.168.26.21 |
| vms22.cos.com | flannel | 192.168.26.22 |

Note: this guide uses vms21.cos.com as the example; the other compute node is deployed the same way.

2 Download Flannel, unpack it and create a symlink

GitHub releases: https://github.com/coreos/flannel/releases

Hosts involved: vms21, vms22

[root@vms21 ~]# cd /opt/src/

[root@vms21 src]# wget https://github.com/coreos/flannel/releases/download/v0.12.0/flannel-v0.12.0-linux-amd64.tar.gz

...

[root@vms21 src]# mkdir -p /opt/release/flannel-v0.12.0 # the flannel tarball has no top-level directory

[root@vms21 src]# tar -xf flannel-v0.12.0-linux-amd64.tar.gz -C /opt/release/flannel-v0.12.0

[root@vms21 src]# mkdir /opt/apps

[root@vms21 src]# ln -s /opt/release/flannel-v0.12.0 /opt/apps/flannel

[root@vms21 src]# ll /opt/apps/flannel

lrwxrwxrwx 1 root root 28 Jul 19 08:27 /opt/apps/flannel -> /opt/release/flannel-v0.12.0

On vms22.cos.com (and any other node):

[root@vms22 ~]# mkdir /opt/src

[root@vms22 ~]# cd /opt/src/

[root@vms22 src]# scp vms21:/opt/src/flannel-v0.12.0-linux-amd64.tar.gz .

[root@vms22 src]# mkdir -p /opt/release/flannel-v0.12.0

[root@vms22 src]# tar -xf flannel-v0.12.0-linux-amd64.tar.gz -C /opt/release/flannel-v0.12.0

[root@vms22 src]# mkdir /opt/apps

[root@vms22 src]# ln -s /opt/release/flannel-v0.12.0 /opt/apps/flannel

[root@vms22 src]# ll /opt/apps/flannel

lrwxrwxrwx 1 root root 28 Jul 19 10:50 /opt/apps/flannel -> /opt/release/flannel-v0.12.0

3 Copy the certificates

flannel accesses etcd as a client, so it needs the client certificates.

[root@vms21 src]# mkdir /opt/apps/flannel/certs

[root@vms21 src]# cd /opt/apps/flannel/certs/

[root@vms21 certs]# cp /opt/kubernetes/server/bin/cert/ca.pem .

[root@vms21 certs]# cp /opt/kubernetes/server/bin/cert/client.pem .

[root@vms21 certs]# cp /opt/kubernetes/server/bin/cert/client-key.pem .

[root@vms21 certs]# ll

total 12

-rw-r--r-- 1 root root 1338 Jul 19 08:34 ca.pem

-rw------- 1 root root 1675 Jul 19 08:34 client-key.pem

-rw-r--r-- 1 root root 1363 Jul 19 08:34 client.pem

Alternatively, reuse the certificates under /opt/kubernetes/server/bin/cert directly and point the certificate paths in the later configuration there.

Do the same on vms22.cos.com and any other node.

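4 Create the configuration and startup script

Hosts involved: vms21, vms22

[root@vms21 certs]# vi /opt/apps/flannel/subnet.env # create the subnet file; FLANNEL_SUBNET must be changed on vms22 (26-22)

FLANNEL_NETWORK=172.26.0.0/16
FLANNEL_SUBNET=172.26.21.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false

Note: the configuration differs slightly between flannel hosts, so adjust it when deploying the other nodes.

On vms22.cos.com, change: FLANNEL_SUBNET=172.26.22.1/24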
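Because only `FLANNEL_SUBNET` changes from host to host, the file can be generated instead of edited by hand. This is a sketch under one assumption taken from the values above: node 192.168.26.N owns pod subnet 172.26.N.1/24. `render_subnet_env` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print subnet.env for a node, assuming the convention used here:
# node 192.168.26.N owns pod subnet 172.26.N.1/24.
# render_subnet_env is a hypothetical helper name.
render_subnet_env() {
    node_ip=$1
    octet=${node_ip##*.}          # last octet of the node IP, e.g. 22
    cat <<EOF
FLANNEL_NETWORK=172.26.0.0/16
FLANNEL_SUBNET=172.26.${octet}.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
}

render_subnet_env 192.168.26.22
```

Redirecting the output to `/opt/apps/flannel/subnet.env` on the matching node would reproduce the file shown above.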

Write the network configuration to etcd, selecting the host-gw backend. This only needs to be done on one etcd member.

[root@vms21 certs]# ETCDCTL_API=2 /opt/etcd/etcdctl set /coreos.com/network/config '{"Network": "172.26.0.0/16", "Backend": {"Type": "host-gw"}}'

Check on vms22:

[root@vms22 ~]# ETCDCTL_API=2 /opt/etcd/etcdctl get /coreos.com/network/config

/coreos.com/network/config

{"Network": "172.26.0.0/16", "Backend": {"Type": "host-gw"}}

[root@vms21 flannel]# vi /opt/apps/flannel/flannel-startup.sh # create the startup script

On vms21.cos.com:

#!/bin/sh
./flanneld \
  --public-ip=192.168.26.21 \
  --etcd-endpoints=https://192.168.26.12:2379,https://192.168.26.21:2379,https://192.168.26.22:2379 \
  --etcd-keyfile=./certs/client-key.pem \
  --etcd-certfile=./certs/client.pem \
  --etcd-cafile=./certs/ca.pem \
  --iface=ens160 \
  --subnet-file=./subnet.env \
  --healthz-port=2401

Note: the startup script differs slightly per host, so adjust it when deploying the other nodes. `--public-ip` is the host's own IP and `--iface` is the host's external network interface.

On vms22.cos.com, change: --public-ip=192.168.26.22
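The per-host `--public-ip` value can be read off the interface named in `--iface` rather than typed in. The sketch below assumes the one-line format printed by `ip -o -4 addr show`; `iface_ipv4` is a hypothetical helper and the sample text is illustrative.

```shell
#!/bin/sh
# Sketch: extract the IPv4 address of one interface from `ip -o -4 addr show` text.
# iface_ipv4 is a hypothetical helper; field positions assume iproute2's one-line format.
iface_ipv4() {
    # $1 = interface name; the text is read from stdin
    awk -v dev="$1" '$2 == dev && $3 == "inet" { sub(/\/.*/, "", $4); print $4; exit }'
}

sample='1: lo    inet 127.0.0.1/8 scope host lo\       valid_lft forever preferred_lft forever
2: ens160    inet 192.168.26.21/24 brd 192.168.26.255 scope global noprefixroute ens160\       valid_lft forever preferred_lft forever'

public_ip=$(printf '%s\n' "$sample" | iface_ipv4 ens160)
echo "--public-ip=${public_ip} --iface=ens160"
```

Only the first address of the interface is returned, so on a host that also carries the keepalived VIP the result should be checked before use.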

Check the configuration, set permissions and create the log directory

[root@vms21 flannel]# chmod +x /opt/apps/flannel/flannel-startup.sh

[root@vms21 flannel]# mkdir -p /data/logs/flanneld

5 Create the supervisor configuration

On vms21.cos.com:

[root@vms21 flannel]# vi /etc/supervisord.d/flannel.ini

[program:flanneld-26-21]
command=/opt/apps/flannel/flannel-startup.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/apps/flannel ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=5 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
killasgroup=true
stopasgroup=true

On vms22.cos.com, change: [program:flanneld-26-22]

6 Start the service and check it

On vms21.cos.com, after a successful deployment:

[root@vms21 flannel]# supervisorctl update

flanneld-26-21: added process group

[root@vms21 flannel]# supervisorctl status

etcd-server-26-21 RUNNING pid 1040, uptime 16:07:40

flanneld-26-21 RUNNING pid 202210, uptime 0:00:34

kube-apiserver-26-21 RUNNING pid 5472, uptime 12:12:21

kube-controller-manager-26-21 RUNNING pid 5695, uptime 12:11:16

kube-kubelet-26-21 RUNNING pid 14382, uptime 11:41:11

kube-proxy-26-21 RUNNING pid 31874, uptime 10:11:37

kube-scheduler-26-21 RUNNING pid 5864, uptime 12:10:30

[root@vms21 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens160

172.26.21.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

172.26.22.0 192.168.26.22 255.255.255.0 UG 0 0 0 ens160

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens160

Note the added route: 172.26.22.0 192.168.26.22 255.255.255.0 UG 0 0 0 ens160

On vms22.cos.com, after a successful deployment:

[root@vms22 certs]# supervisorctl update

flanneld-26-22: added process group

[root@vms22 certs]# supervisorctl status

etcd-server-26-22 RUNNING pid 1038, uptime 16:05:36

flanneld-26-22 RUNNING pid 153030, uptime 0:01:49

kube-apiserver-26-22 RUNNING pid 1715, uptime 12:32:14

kube-controller-manager-26-22 RUNNING pid 1729, uptime 12:31:23

kube-kubelet-26-22 RUNNING pid 1833, uptime 11:44:38

kube-proxy-26-22 RUNNING pid 97223, uptime 3:21:36

kube-scheduler-26-22 RUNNING pid 1739, uptime 12:29:28

[root@vms22 etcd]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens160

172.26.21.0 192.168.26.21 255.255.255.0 UG 0 0 0 ens160

172.26.22.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens160

Note the added route: 172.26.21.0 192.168.26.21 255.255.255.0 UG 0 0 0 ens160

As this shows, with the host-gw backend flanneld essentially just installs routes: each node gets a route to every other node's pod subnet via that node's host IP.
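To make the routing behaviour concrete, the routes one would expect on a node can be listed from the node inventory. This is only a sketch: it assumes this guide's layout (node 192.168.26.N owns 172.26.N.0/24, interface ens160), `expected_routes` is a hypothetical helper, and it prints text without touching the routing table.

```shell
#!/bin/sh
# Sketch: list the routes a host-gw backend is expected to install on one node.
# Assumes this guide's layout: node 192.168.26.N owns pod subnet 172.26.N.0/24.
# expected_routes is a hypothetical helper; it only prints, it changes nothing.
expected_routes() {
    self=$1; shift
    for peer in "$@"; do
        # the local pod subnet is reached through docker0, so skip it
        if [ "$peer" != "$self" ]; then
            echo "172.26.${peer##*.}.0/24 via ${peer} dev ens160"
        fi
    done
}

# Routes expected on vms21 for a two-node cluster
expected_routes 192.168.26.21 192.168.26.21 192.168.26.22
```

Comparing this list with `ip route` or `route -n` on each node is a quick way to spot a node whose flanneld has not registered its subnet.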

Check that the log is clean. If etcd does not have the v2 API enabled, errors like the following appear:

[root@vms22 certs]# tail /data/logs/flanneld/flanneld.stdout.log

timed out

E0719 12:25:50.935471 175193 main.go:386] Couldn't fetch network config: client: response is invalid json. The endpoint is probably not valid etcd cluster endpoint.

timed out

E0719 12:25:51.938562 175193 main.go:386] Couldn't fetch network config: client: response is invalid json. The endpoint is probably not valid etcd cluster endpoint.

The cause is that flannel v0.12 reads its configuration through the etcd v2 API, which etcd v3.4.9 no longer enables by default. There are two ways to fix it:

Option 1: downgrade etcd to 3.3.x.

Option 2: change the etcd startup configuration to enable the v2 API.

This guide uses option 2.
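The symptom can be detected automatically from the flanneld log. In this sketch `etcd_v2_symptom` is a hypothetical helper that matches the error line quoted above; the suggested `--enable-v2=true` flag is an assumption about etcd 3.4 and should be confirmed against the etcd documentation for the installed version.

```shell
#!/bin/sh
# Sketch: detect the etcd v2 symptom in flanneld log text read from stdin.
# etcd_v2_symptom is a hypothetical helper; the pattern is the error line quoted above.
etcd_v2_symptom() {
    grep -q "Couldn't fetch network config: client: response is invalid json"
}

sample="E0719 12:25:50.935471 175193 main.go:386] Couldn't fetch network config: client: response is invalid json. The endpoint is probably not valid etcd cluster endpoint."

if printf '%s\n' "$sample" | etcd_v2_symptom; then
    # Assumption: etcd 3.4 exposes the v2 API when started with --enable-v2=true
    echo "v2 API appears disabled; consider adding --enable-v2=true to the etcd startup script"
fi
```

On a node, the sample would be replaced by `tail -n 50 /data/logs/flanneld/flanneld.stdout.log | etcd_v2_symptom`.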

7 Verify cross-node access

Use the pods and svc created earlier.

[root@vms21 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-j24hm 1/1 Running 1 4h27m 172.26.22.2 vms22.cos.com <none> <none>

nginx-ds-zk2bg 1/1 Running 1 4h27m 172.26.21.2 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.168.0.1 <none> 443/TCP 5h3m <none>

nginx-svc NodePort 10.168.250.78 <none> 80:26604/TCP 4h30m app=nginx-ds

Node -> pod container and svc

On vms21:

[root@vms21 ~]# ping 172.26.22.2

PING 172.26.22.2 (172.26.22.2) 56(84) bytes of data.

64 bytes from 172.26.22.2: icmp_seq=1 ttl=63 time=0.703 ms

64 bytes from 172.26.22.2: icmp_seq=2 ttl=63 time=1.16 ms

64 bytes from 172.26.22.2: icmp_seq=3 ttl=63 time=1.10 ms

...

--- 172.26.22.2 ping statistics ---

8 packets transmitted, 8 received, 0% packet loss, time 65ms

rtt min/avg/max/mdev = 0.616/1.034/2.406/0.551 ms

[root@vms21 ~]# curl -I 172.26.22.2

HTTP/1.1 200 OK

Server: nginx/1.7.9

Date: Mon, 20 Jul 2020 13:27:53 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 23 Dec 2014 16:25:09 GMT

Connection: keep-alive

ETag: "54999765-264"

Accept-Ranges: bytes

[root@vms21 ~]# ping 10.168.250.78

PING 10.168.250.78 (10.168.250.78) 56(84) bytes of data.

64 bytes from 10.168.250.78: icmp_seq=1 ttl=64 time=0.053 ms

64 bytes from 10.168.250.78: icmp_seq=2 ttl=64 time=0.051 ms

64 bytes from 10.168.250.78: icmp_seq=3 ttl=64 time=0.054 ms

^C

--- 10.168.250.78 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 39ms

rtt min/avg/max/mdev = 0.051/0.052/0.054/0.008 ms

[root@vms21 ~]# ping nginx-svc # the svc name cannot be resolved yet because CoreDNS is not installed

ping: nginx-svc: Name or service not known

On vms22:

[root@vms22 etcd]# ping 172.26.21.2

PING 172.26.21.2 (172.26.21.2) 56(84) bytes of data.

64 bytes from 172.26.21.2: icmp_seq=1 ttl=63 time=0.769 ms

64 bytes from 172.26.21.2: icmp_seq=2 ttl=63 time=1.62 ms

64 bytes from 172.26.21.2: icmp_seq=3 ttl=63 time=0.455 ms

^C

--- 172.26.21.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 6ms

rtt min/avg/max/mdev = 0.455/0.947/1.617/0.490 ms

[root@vms22 etcd]# curl -I 172.26.21.2

HTTP/1.1 200 OK

Server: nginx/1.7.9

Date: Mon, 20 Jul 2020 13:39:24 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 23 Dec 2014 16:25:09 GMT

Connection: keep-alive

ETag: "54999765-264"

Accept-Ranges: bytes

[root@vms22 etcd]# ping 10.168.250.78

PING 10.168.250.78 (10.168.250.78) 56(84) bytes of data.

64 bytes from 10.168.250.78: icmp_seq=1 ttl=64 time=0.050 ms

64 bytes from 10.168.250.78: icmp_seq=2 ttl=64 time=0.032 ms

64 bytes from 10.168.250.78: icmp_seq=3 ttl=64 time=0.084 ms

^C

--- 10.168.250.78 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 34ms

rtt min/avg/max/mdev = 0.032/0.055/0.084/0.022 ms

[root@vms22 etcd]# ping nginx-svc # the svc name cannot be resolved yet because CoreDNS is not installed

ping: nginx-svc: Name or service not known

进入pod容器进行ping、curl -I其它pod容器的IP和所在节点IP,都可以。

在vms21:

[root@vms21 ~]# kubectl exec -it nginx-ds-zk2bg -- bash #进入本节点的podroot@nginx-ds-zk2bg:/# ping 172.26.22.2PING 172.26.22.2 (172.26.22.2): 48 data bytes56 bytes from 172.26.22.2: icmp_seq=0 ttl=62 time=0.817 ms56 bytes from 172.26.22.2: icmp_seq=1 ttl=62 time=0.662 ms56 bytes from 172.26.22.2: icmp_seq=2 ttl=62 time=0.811 ms^C--- 172.26.22.2 ping statistics ---3 packets transmitted, 3 packets received, 0% packet lossround-trip min/avg/max/stddev = 0.662/0.763/0.817/0.072 msroot@nginx-ds-zk2bg:/# ping 192.168.26.22PING 192.168.26.22 (192.168.26.22): 48 data bytes56 bytes from 192.168.26.22: icmp_seq=0 ttl=63 time=1.874 ms56 bytes from 192.168.26.22: icmp_seq=1 ttl=63 time=0.623 ms56 bytes from 192.168.26.22: icmp_seq=2 ttl=63 time=0.815 ms^C--- 192.168.26.22 ping statistics ---3 packets transmitted, 3 packets received, 0% packet lossround-trip min/avg/max/stddev = 0.623/1.104/1.874/0.550 ms

在vms22:

[root@vms22 etcd]# kubectl exec -it nginx-ds-j24hm -- bash #进入本节点的podroot@nginx-ds-j24hm:/# ping 172.26.21.2PING 172.26.21.2 (172.26.21.2): 48 data bytes56 bytes from 172.26.21.2: icmp_seq=0 ttl=62 time=0.882 ms56 bytes from 172.26.21.2: icmp_seq=1 ttl=62 time=0.846 ms^C--- 172.26.21.2 ping statistics ---2 packets transmitted, 2 packets received, 0% packet lossround-trip min/avg/max/stddev = 0.846/0.864/0.882/0.000 msroot@nginx-ds-j24hm:/# ping 192.168.26.21PING 192.168.26.21 (192.168.26.21): 48 data bytes56 bytes from 192.168.26.21: icmp_seq=0 ttl=63 time=0.934 ms56 bytes from 192.168.26.21: icmp_seq=1 ttl=63 time=0.794 ms^C--- 192.168.26.21 ping statistics ---2 packets transmitted, 2 packets received, 0% packet lossround-trip min/avg/max/stddev = 0.794/0.864/0.934/0.070 ms

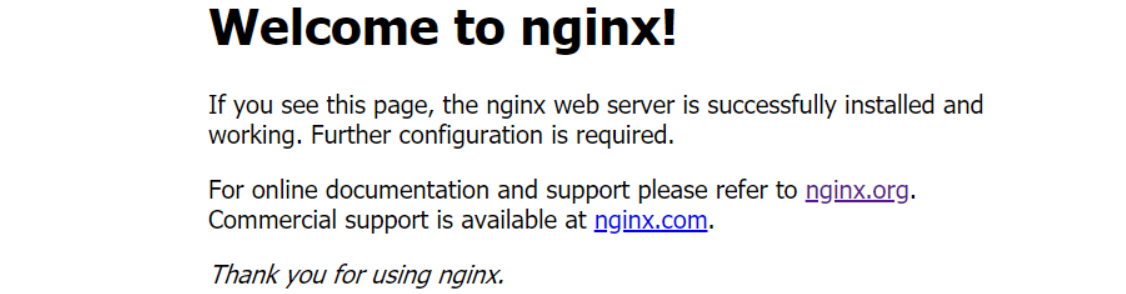

在浏览器输入:http://192.168.26.21:26604/ 或 http://192.168.26.22:26604/

进一步实验:可以进入容器,修改主页,观察流量导入的哪个节点的pod。

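8 Preserve the pod source IP between pods

Enter the pod on vms21

[root@vms21 ~]# kubectl exec -it nginx-ds-zk2bg -- bash

root@nginx-ds-zk2bg:/# cat /etc/issue # curl is missing, so check the distribution before trying to install it

Debian GNU/Linux 7 \n \l

# Installing it there is awkward, so exit the pod and upgrade the nginx image instead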

[root@vms21 ~]# kubectl get ds -o wide

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

nginx-ds 2 2 2 2 2 <none> 6h35m my-nginx harbor.op.com/public/nginx:v1.7.9 app=nginx-ds

[root@vms21 ~]# kubectl set image ds nginx-ds my-nginx=harbor.op.com/public/nginx:v2007.20

daemonset.apps/nginx-ds image updated

[root@vms21 ~]# kubectl get ds -o wide

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

nginx-ds 2 2 1 0 1 <none> 6h52m my-nginx harbor.op.com/public/nginx:v2007.20 app=nginx-ds

[root@vms21 ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-g77wg 1/1 Running 0 98s 172.26.22.2 vms22.cos.com <none> <none>

nginx-ds-zggfw 1/1 Running 0 71s 172.26.21.2 vms21.cos.com <none> <none>

- From the pod on vms21, run `curl -I 172.26.22.2`

[root@vms21 ~]# kubectl exec -it nginx-ds-zggfw -- bash

root@nginx-ds-zggfw:/# curl

curl: try 'curl --help' or 'curl --manual' for more information

root@nginx-ds-zggfw:/# curl -I 172.26.22.2

HTTP/1.1 200 OK

Server: nginx/1.19.1

Date: Mon, 20 Jul 2020 15:52:15 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 07 Jul 2020 15:52:25 GMT

Connection: keep-alive

ETag: "5f049a39-264"

Accept-Ranges: bytes

root@nginx-ds-zggfw:/#

- Check the log of the pod on vms22 (172.26.22.2)

[root@vms22 etcd]# kubectl logs -f nginx-ds-g77wg

192.168.26.21 - - [20/Jul/2020:15:52:15 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

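The request from the pod on vms21 reaches the pod on vms22 with source IP 192.168.26.21 (the vms21 host) instead of the pod's own IP: the address has been translated (SNAT). Between pods this translation is unnecessary and hides the real client, so it should be removed.

Perform the following on every node to refine the SNAT rules.

[root@vms21 ~]# iptables-save |grep POSTROUTING|grep docker # the rule that causes the problem; it must be deleted

-A POSTROUTING -s 172.26.21.0/24 ! -o docker0 -j MASQUERADE

Install iptables-services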

[root@vms21 ~]# rpm -q iptables-services

package iptables-services is not installed

[root@vms21 ~]# yum install -y iptables-services

...

Installed:

iptables-services-1.8.4-10.el8.x86_64

Complete!

[root@vms21 ~]# systemctl start iptables.service ; systemctl enable iptables.service

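Add the iptables rule on each compute node

Note: the rule differs slightly per host, so adjust it when running on the other compute nodes.

- Refine the SNAT rule so that pod-to-pod traffic between compute nodes is no longer masqueraded

vms21:

- Rules to delete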

[root@vms21 ~]# iptables-save |grep POSTROUTING|grep docker

-A POSTROUTING -s 172.26.21.0/24 ! -o docker0 -j MASQUERADE

[root@vms21 ~]# iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

- Delete the rules

[root@vms21 ~]# iptables -t nat -D POSTROUTING -s 172.26.21.0/24 ! -o docker0 -j MASQUERADE

[root@vms21 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@vms21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

- Add the new rule and save

[root@vms21 ~]# iptables -t nat -I POSTROUTING -s 172.26.21.0/24 ! -d 172.26.0.0/16 ! -o docker0 -j MASQUERADE

[root@vms21 ~]# iptables-save |grep POSTROUTING|grep docker

-A POSTROUTING -s 172.26.21.0/24 ! -d 172.26.0.0/16 ! -o docker0 -j MASQUERADE

[root@vms21 ~]# iptables-save > /etc/sysconfig/iptables

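On host 192.168.26.21, SNAT is now applied only to packets whose source is in the Docker subnet 172.26.21.0/24, whose destination is outside 172.26.0.0/16, and which do not leave through the docker0 bridge.

vms22:

- Rules to delete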

[root@vms22 ~]# iptables-save |grep POSTROUTING|grep docker

-A POSTROUTING -s 172.26.22.0/24 ! -o docker0 -j MASQUERADE

[root@vms22 ~]# iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

- Delete the rules

[root@vms22 ~]# iptables -t nat -D POSTROUTING -s 172.26.22.0/24 ! -o docker0 -j MASQUERADE

[root@vms22 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@vms22 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

- Add the new rule and save

[root@vms22 ~]# iptables -t nat -I POSTROUTING -s 172.26.22.0/24 ! -d 172.26.0.0/16 ! -o docker0 -j MASQUERADE

[root@vms22 ~]# iptables-save |grep POSTROUTING|grep docker

-A POSTROUTING -s 172.26.22.0/24 ! -d 172.26.0.0/16 ! -o docker0 -j MASQUERADE

[root@vms22 ~]# iptables-save > /etc/sysconfig/iptables

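On host 192.168.26.22, SNAT is now applied only to packets whose source is in the Docker subnet 172.26.22.0/24, whose destination is outside 172.26.0.0/16, and which do not leave through the docker0 bridge.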
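Since the rule differs between hosts only in the pod subnet, the two NAT commands can be generated per node. A minimal sketch, assuming the 172.26.N.0/24-per-node layout used here; `snat_commands` is a hypothetical helper that only prints the commands.

```shell
#!/bin/sh
# Sketch: print (not execute) the SNAT commands for one node, assuming the
# 172.26.N.0/24-per-node layout used in this guide. snat_commands is hypothetical.
snat_commands() {
    octet=$1                       # e.g. 22 for vms22
    pod_net="172.26.${octet}.0/24"
    # remove the default Docker rule that masquerades all outbound pod traffic
    echo "iptables -t nat -D POSTROUTING -s ${pod_net} ! -o docker0 -j MASQUERADE"
    # re-add it with an exclusion for the cluster-wide pod network
    echo "iptables -t nat -I POSTROUTING -s ${pod_net} ! -d 172.26.0.0/16 ! -o docker0 -j MASQUERADE"
}

snat_commands 22
```

Printing first and executing later (for example by piping the reviewed output to `sh`) keeps a typo in the subnet from silently changing NAT behaviour.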

Now curl the pod on vms22 from the pod on vms21 again (testing in the reverse direction gives the same result):

[root@vms21 ~]# kubectl exec -it nginx-ds-zggfw -- bash

root@nginx-ds-zggfw:/# curl -I 172.26.22.2

[root@vms22 etcd]# kubectl logs -f nginx-ds-g77wg

...

172.26.21.2 - - [20/Jul/2020:16:46:51 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.64.0" "-"

[root@vms22 etcd]# curl -I 172.26.21.2 # curl from the host

[root@vms21 ~]# kubectl logs -f nginx-ds-zggfw # the log shows the host IP

...

192.168.26.22 - - [20/Jul/2020:17:11:39 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.61.1" "-"
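When scanning longer logs, it helps to label each client address as a pod address or a host address. The sketch below assumes pods live in 172.26.0.0/16 as configured in this guide; `classify_client` is a hypothetical helper and the two log lines are shortened copies of the entries above.

```shell
#!/bin/sh
# Sketch: classify the client address of an nginx access-log line.
# Assumes pods live in 172.26.0.0/16 as configured above; classify_client is hypothetical.
classify_client() {
    client=${1%% *}                # first space-separated field of the log line
    case "$client" in
        172.26.*) echo "pod $client" ;;
        *)        echo "host-or-snat $client" ;;
    esac
}

classify_client '172.26.21.2 - - [20/Jul/2020:16:46:51 +0000] "HEAD / HTTP/1.1" 200 0'
classify_client '192.168.26.22 - - [20/Jul/2020:17:11:39 +0000] "HEAD / HTTP/1.1" 200 0'
```

A pod-to-pod request that still shows up as `host-or-snat` suggests the old MASQUERADE rule is back, for example after Docker restarted.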

Addendum: fixing and saving the iptables rules when they are found in an unexpected state

[root@vms21 supervisord.d]# iptables-save | grep -i postrouting

:POSTROUTING ACCEPT [0:0]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.26.21.0/24 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.26.21.3/32 -d 172.26.21.3/32 -p tcp -m tcp --dport 443 -j MASQUERADE

-A POSTROUTING -s 172.26.21.3/32 -d 172.26.21.3/32 -p tcp -m tcp --dport 80 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

:POSTROUTING ACCEPT [0:0]

[root@vms21 supervisord.d]# iptables -t nat -D POSTROUTING -s 172.26.21.0/24 ! -o docker0 -j MASQUERADE

[root@vms21 supervisord.d]# service iptables save

iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]

[root@vms21 supervisord.d]# iptables-save | grep -i postrouting

:POSTROUTING ACCEPT [0:0]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.26.21.3/32 -d 172.26.21.3/32 -p tcp -m tcp --dport 443 -j MASQUERADE

-A POSTROUTING -s 172.26.21.3/32 -d 172.26.21.3/32 -p tcp -m tcp --dport 80 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

:POSTROUTING ACCEPT [0:0]

[root@vms22 supervisord.d]# iptables-save | grep -i postrouting

:POSTROUTING ACCEPT [0:0]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -s 172.26.22.0/24 ! -o docker0 -j MASQUERADE

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.26.22.2/32 -d 172.26.22.2/32 -p tcp -m tcp --dport 443 -j MASQUERADE

-A POSTROUTING -s 172.26.22.2/32 -d 172.26.22.2/32 -p tcp -m tcp --dport 80 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

:POSTROUTING ACCEPT [0:0]

[root@vms22 supervisord.d]# iptables -t nat -D POSTROUTING -s 172.26.22.0/24 ! -o docker0 -j MASQUERADE

[root@vms22 supervisord.d]# service iptables save

iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]

[root@vms22 supervisord.d]# iptables-save | grep -i postrouting

:POSTROUTING ACCEPT [0:0]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.26.22.2/32 -d 172.26.22.2/32 -p tcp -m tcp --dport 443 -j MASQUERADE

-A POSTROUTING -s 172.26.22.2/32 -d 172.26.22.2/32 -p tcp -m tcp --dport 80 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

:POSTROUTING ACCEPT [0:0]

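Core add-on: CoreDNS service discovery

CoreDNS provides DNS resolution from Service names to cluster IPs. It is delivered to the k8s cluster as a container and managed by k8s itself, which reduces manual operations. GitHub: https://github.com/coredns/coredns (the binary package is not used here)

1 CoreDNS resource manifest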

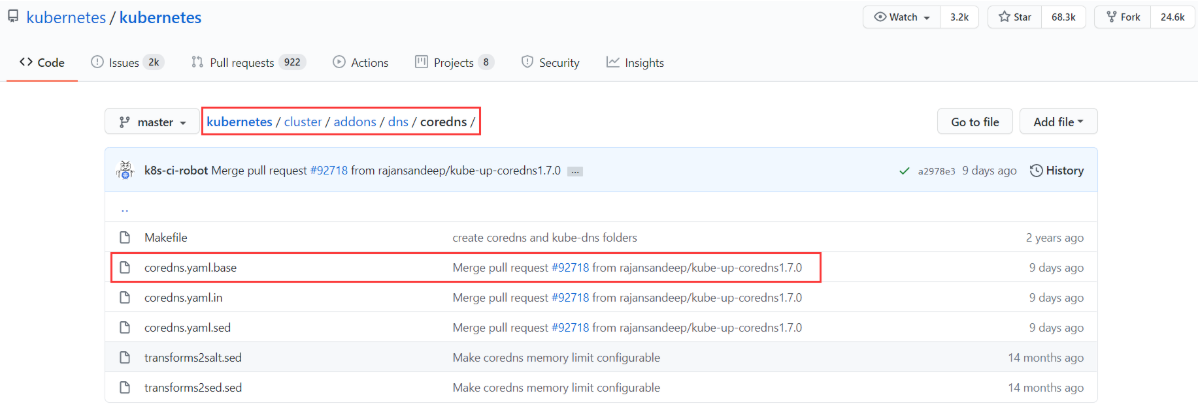

GitHub source: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

On the ops host vms200.cos.com:

Copy the contents of coredns.yaml.base (when downloading with wget, use the raw URL).

[root@vms200 coredns]# pwd

/data/k8s-yaml/coredns

[root@vms200 coredns]# vi /data/k8s-yaml/coredns/coredns-1.7.0.yaml # paste the contents of `coredns.yaml.base`

Edit coredns-1.7.0.yaml so that it reads:

# __MACHINE_GENERATED_WARNING__

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local 10.168.0.0/16
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: coredns
        #image: k8s.gcr.io/coredns:1.7.0
        image: harbor.op.com/public/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 150Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

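- Open http://k8s-yaml.op.com/coredns/ in a browser to confirm that the manifest file was created correctly.

- The file can also be split by resource type into four YAML files: rbac.yaml, configmap.yaml, deployment.yaml and service.yaml.

- The main changes against the base file are the IP addresses and the memory limit.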

[root@vms200 coredns]# diff coredns-1.7.0.yaml coredns-1.7.0.yaml.base

70c70,74

< kubernetes cluster.local 10.168.0.0/16

---

> kubernetes __PILLAR__DNS__DOMAIN__ in-addr.arpa ip6.arpa {

> pods insecure

> fallthrough in-addr.arpa ip6.arpa

> ttl 30

> }

130,131c134

< #image: k8s.gcr.io/coredns:1.7.0

< image: harbor.op.com/public/coredns:1.7.0

---

> image: k8s.gcr.io/coredns:1.7.0

135c138

< memory: 150Mi

---

> memory: __PILLAR__DNS__MEMORY__LIMIT__

201c204

< clusterIP: 10.168.0.2

---

> clusterIP: __PILLAR__DNS__SERVER__

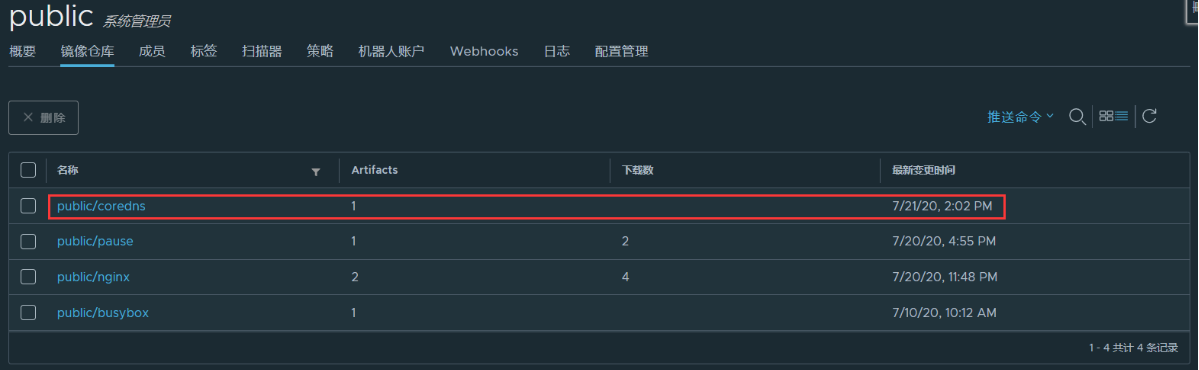
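The placeholder substitutions visible in the diff can also be applied with `sed` instead of a manual edit. This is a sketch only: `render_coredns` is a hypothetical helper, the values are the ones used in this guide, and unlike the hand-edited file it keeps the multi-line `kubernetes` block of the base file and merely fills in the domain.

```shell
#!/bin/sh
# Sketch: fill the __PILLAR__ placeholders of coredns.yaml.base with sed.
# render_coredns is a hypothetical helper; values are the ones used in this guide.
render_coredns() {
    sed -e 's/__PILLAR__DNS__DOMAIN__/cluster.local/g' \
        -e 's/__PILLAR__DNS__MEMORY__LIMIT__/150Mi/g' \
        -e 's/__PILLAR__DNS__SERVER__/10.168.0.2/g' \
        -e 's#k8s.gcr.io/coredns:1.7.0#harbor.op.com/public/coredns:1.7.0#g'
}

# Three lines taken from the base file, used here as sample input
printf '%s\n' \
    'memory: __PILLAR__DNS__MEMORY__LIMIT__' \
    'clusterIP: __PILLAR__DNS__SERVER__' \
    'image: k8s.gcr.io/coredns:1.7.0' | render_coredns
```

With the real file this would be run as `render_coredns < coredns-1.7.0.yaml.base > coredns-1.7.0.yaml`, followed by a `diff` against the base to review the result.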

Pull the image in advance

[root@vms200 coredns]# grep image coredns-1.7.0.yaml

image: k8s.gcr.io/coredns:1.7.0

imagePullPolicy: IfNotPresent

[root@vms200 coredns]# docker pull k8s.gcr.io/coredns:1.7.0 # hard to pull: k8s.gcr.io may be unreachable without a proxy

Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

[root@vms200 coredns]# docker pull coredns/coredns:1.7.0

...

[root@vms200 coredns]# docker images | grep coredns

coredns/coredns 1.7.0 bfe3a36ebd25 4 weeks ago 45.2MB

[root@vms200 coredns]# docker tag coredns/coredns:1.7.0 harbor.op.com/public/coredns:1.7.0

[root@vms200 coredns]# docker push harbor.op.com/public/coredns:1.7.0

The push refers to repository [harbor.op.com/public/coredns]

96d17b0b58a7: Pushed

225df95e717c: Pushed

1.7.0: digest: sha256:242d440e3192ffbcecd40e9536891f4d9be46a650363f3a004497c2070f96f5a size: 739

In coredns-1.7.0.yaml, set image: harbor.op.com/public/coredns:1.7.0
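The pull, tag and push sequence above repeats for every image mirrored into Harbor, so it can be wrapped in a small generator. A sketch, with `mirror_commands` as a hypothetical helper that only prints the docker commands:

```shell
#!/bin/sh
# Sketch: print the docker commands that mirror an image into a private registry.
# mirror_commands is a hypothetical helper and only echoes; pipe to sh to execute.
mirror_commands() {
    src=$1                         # e.g. coredns/coredns:1.7.0
    registry=$2                    # e.g. harbor.op.com/public
    dst="${registry}/${src##*/}"   # keep only name:tag of the source image
    echo "docker pull ${src}"
    echo "docker tag ${src} ${dst}"
    echo "docker push ${dst}"
}

mirror_commands coredns/coredns:1.7.0 harbor.op.com/public
```

The same call pattern would apply to the Traefik image later in this guide, given its source repository and tag.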

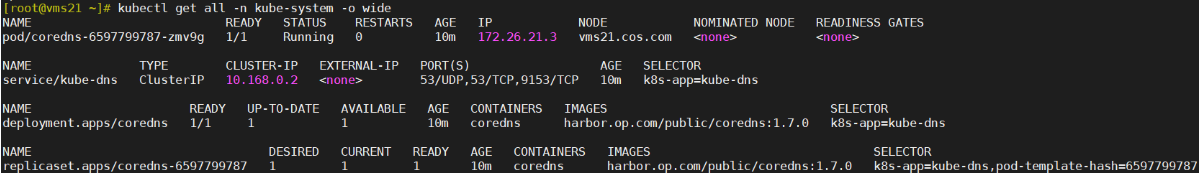

2 Apply the manifest on any compute node

On vms21.cos.com:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/coredns/coredns-1.7.0.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

[root@vms21 ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6597799787-zmv9g 1/1 Running 0 2m42s 172.26.21.3 vms21.cos.com <none> <none>

[root@vms21 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 3m30s

[root@vms21 ~]# kubectl get all -n kube-system -o wide

3 Check and test DNS

On vms21.cos.com:

Check that DNS resolves nginx-svc

[root@vms21 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.168.0.1 <none> 443/TCP 22h

nginx-svc NodePort 10.168.250.78 <none> 80:26604/TCP 21h

[root@vms21 ~]# dig -t A nginx-svc.default.svc.cluster.local @10.168.0.2 +short # the in-cluster name resolves

10.168.250.78

[root@vms21 ~]# dig -t A vms21.cos.com @10.168.0.2 +short

192.168.26.21

[root@vms21 ~]# dig -t A www.baidu.com @10.168.0.2 +short

www.a.shifen.com.

14.215.177.39

When testing DNS from outside a pod (for example from a node, as above), the FQDN (Fully Qualified Domain Name) must be used.
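The FQDN of a Service follows the pattern `<name>.<namespace>.svc.<cluster-domain>`, as in the `dig` query above. A small sketch that builds it; `svc_fqdn` is a hypothetical helper and `cluster.local` is the domain configured in this guide.

```shell
#!/bin/sh
# Sketch: build the FQDN of a Service as <name>.<namespace>.svc.<cluster-domain>.
# svc_fqdn is a hypothetical helper; cluster.local is the domain used in this guide.
svc_fqdn() {
    name=$1
    namespace=${2:-default}        # namespace defaults to "default"
    domain=${3:-cluster.local}     # cluster domain defaults to cluster.local
    echo "${name}.${namespace}.svc.${domain}"
}

svc_fqdn nginx-svc
svc_fqdn kube-dns kube-system
```

The result can be passed straight to `dig`, for example `dig -t A "$(svc_fqdn nginx-svc)" @10.168.0.2 +short`.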

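Inside a pod container the short name nginx-svc resolves and can be pinged, because the pod's resolver configuration carries the cluster search domains: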

[root@vms21 ~]# kubectl run pod1 --image=harbor.op.com/public/busybox:v2007.10 -- sh -c "sleep 10000"

pod/pod1 created

[root@vms21 ~]# kubectl exec -it pod1 -- sh

/ # ping nginx-svc

PING nginx-svc (10.168.250.78): 56 data bytes

64 bytes from 10.168.250.78: seq=0 ttl=64 time=0.196 ms

64 bytes from 10.168.250.78: seq=1 ttl=64 time=0.060 ms

64 bytes from 10.168.250.78: seq=2 ttl=64 time=0.090 ms

^C

--- nginx-svc ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.060/0.115/0.196 ms

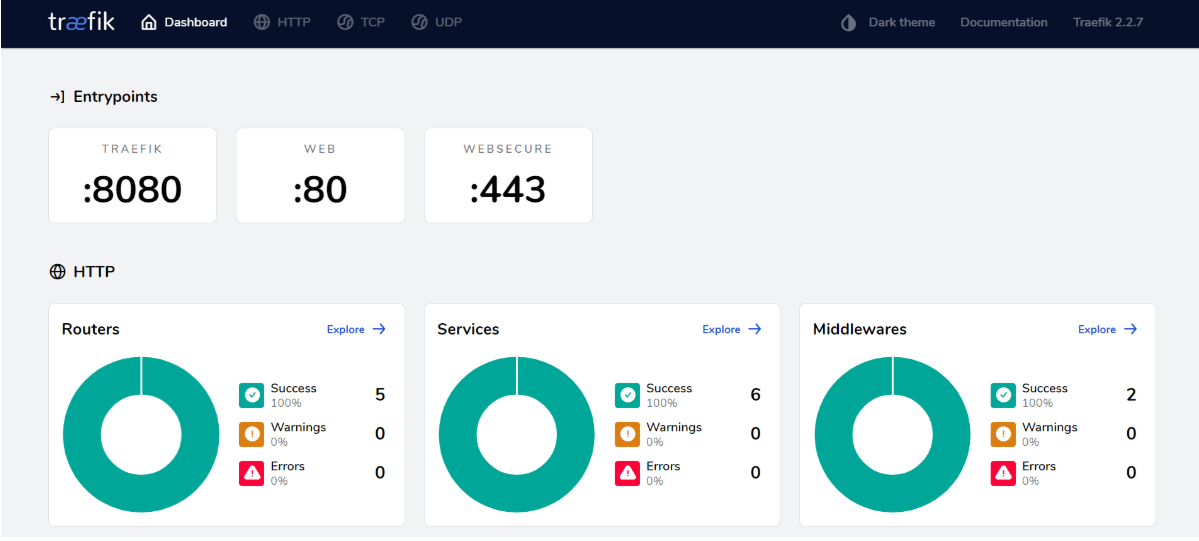

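Core add-on: Ingress (service exposure) controller Traefik

Ingress-Controller

- A Service groups a set of pods behind a single entry point (a cluster IP and a service name) and hides changes in pod IPs.

- An Ingress is a layer-7 traffic forwarding policy: it sends traffic that matches a given domain or location to a specific Service. An Ingress is only a set of rules; Kubernetes does not ship a proxy that carries them out.

- An ingress controller is the proxy server that actually implements the Ingress rules. Common choices are nginx, Traefik and HAProxy. This guide uses Traefik and treats it as the first choice for k8s clusters: it reportedly outperforms HAProxy and applies configuration changes without reloading the service.

- GitHub: https://github.com/containous/traefik

- Reference 1: http://www.mydlq.club/article/72/ https://github.com/my-dlq/blog-example/tree/master/kubernetes

- Reference 2: https://cloud.tencent.com/developer/article/1636080 https://zhuanlan.zhihu.com/p/91905771

This document records deploying and configuring Traefik v2.2.7 on Kubernetes.

- After Traefik is deployed, routing rules are still needed so that external clients can reach applications inside Kubernetes. Traefik supports two ways to create them: Traefik's own Kubernetes CRD resources, or standard Kubernetes Ingress resources.

- Note: Traefik is deployed in the kube-system namespace here. To deploy it elsewhere, change the namespace parameter in the manifests below.

1 Create the CRD resources

Since v2.0, Traefik uses CRDs (Custom Resource Definitions) for routing configuration, so the CRD resources must be created first.

On the ops host vms200.cos.com:

[root@vms200 ~]# cd /data/k8s-yaml/traefik

[root@vms200 traefik]# vi /data/k8s-yaml/traefik/traefik-crd.yaml # create the traefik-crd.yaml file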

## IngressRoute
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutes.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRoute
    plural: ingressroutes
    singular: ingressroute
---
## IngressRouteTCP
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutetcps.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRouteTCP
    plural: ingressroutetcps
    singular: ingressroutetcp
---
## Middleware
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: middlewares.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: Middleware
    plural: middlewares
    singular: middleware
---
## TLSOption
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tlsoptions.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TLSOption
    plural: tlsoptions
    singular: tlsoption
---
## TraefikService
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: traefikservices.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TraefikService
    plural: traefikservices
    singular: traefikservice
---
## TLSStore
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tlsstores.traefik.containo.us
spec:
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TLSStore
    plural: tlsstores
    singular: tlsstore
  scope: Namespaced
---
## IngressRouteUDP
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressrouteudps.traefik.containo.us
spec:
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRouteUDP
    plural: ingressrouteudps
    singular: ingressrouteudp
  scope: Namespaced

On vms21.cos.com: create the Traefik CRD resources

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-crd.yaml

customresourcedefinition.apiextensions.k8s.io/ingressroutes.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/ingressroutetcps.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/middlewares.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/tlsoptions.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/traefikservices.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/tlsstores.traefik.containo.us created

customresourcedefinition.apiextensions.k8s.io/ingressrouteudps.traefik.containo.us created
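
Before moving on, it can be useful to confirm that every CRD named in the manifest really exists in the cluster. The following is a minimal sketch and not part of the original procedure: crd_names is a hypothetical helper that prints the metadata.name of each CustomResourceDefinition in a local copy of traefik-crd.yaml. It assumes a properly indented manifest in which kind: appears before metadata: and name: is nested under metadata:.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original article): print metadata.name
# of every CustomResourceDefinition in a multi-document YAML manifest.
crd_names() {
  awk '
    /^---/                                   { is_crd = 0; in_meta = 0 }  # new YAML document
    /^kind:[ \t]*CustomResourceDefinition/   { is_crd = 1 }
    /^metadata:/                             { in_meta = 1; next }
    /^[^ \t#]/                               { in_meta = 0 }              # any other top-level key
    is_crd && in_meta && /^[ \t]+name:/      { print $2 }
  ' "$1"
}

# Example, on a host with kubectl access (assumed path from this article):
#   crd_names /data/k8s-yaml/traefik/traefik-crd.yaml | xargs kubectl get crd
```

If any name is missing, kubectl get crd reports it as not found, which points at a manifest that was only partly applied.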

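2 Create the RBAC permissions

Kubernetes introduced role-based access control (RBAC) in version 1.6 for fine-grained control over Kubernetes resources and APIs. Traefik needs certain permissions, so a ServiceAccount for Traefik is created in advance and bound to a ClusterRole that grants them.

On the operations host vms200.cos.com:

[root@vms200 traefik]# vi /data/k8s-yaml/traefik/traefik-rbac.yaml   # create traefik-rbac.yaml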

## ServiceAccount
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-system
  name: traefik-ingress-controller
---
## ClusterRole
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups: [""]
    resources: ["services","endpoints","secrets"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses/status"]
    verbs: ["update"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["middlewares","ingressroutes","ingressroutetcps","tlsoptions","ingressrouteudps","traefikservices","tlsstores"]
    verbs: ["get","list","watch"]
---
## ClusterRoleBinding
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: kube-system

On vms21.cos.com: create the Traefik RBAC resources

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-rbac.yaml -n kube-system

serviceaccount/traefik-ingress-controller created

clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created

clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created

- -n: the namespace to deploy into

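3 Create the Traefik configuration file

Traefik has many options, and defining them all on the command line is inconvenient. The usual approach is to put them in a configuration file, store that file in a ConfigMap, and mount it into the Traefik container.

On the operations host vms200.cos.com:

- In the configuration below, the kubernetesCRD and kubernetesIngress providers are both enabled, so Traefik supports both the CRD and the Ingress style of routing.

[root@vms200 traefik]# vi /data/k8s-yaml/traefik/traefik-config.yaml   # create traefik-config.yaml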

kind: ConfigMap
apiVersion: v1
metadata:
  name: traefik-config
data:
  traefik.yaml: |-
    ping: ""                      ## enable the ping endpoint
    serversTransport:
      insecureSkipVerify: true    ## do not verify the TLS certificates of backend services
    api:
      insecure: true              ## allow access to the API over plain HTTP
      dashboard: true             ## enable the dashboard
      debug: false                ## debug mode (disabled here)
    metrics:
      prometheus: ""              ## expose Prometheus metrics with the default settings
    entryPoints:
      web:
        address: ":80"            ## entry point named web on port 80
      websecure:
        address: ":443"           ## entry point named websecure on port 443
    providers:
      kubernetesCRD: ""           ## enable routing rules defined through Kubernetes CRDs
      kubernetesIngress: ""       ## enable routing rules defined through Kubernetes Ingress
    log:
      filePath: ""                ## log file path; empty means log to the console
      level: error                ## log level
      format: json                ## log format
    accessLog:
      filePath: ""                ## access log file path; empty means log to the console
      format: json                ## access log format
      bufferingSize: 0            ## number of access log lines to buffer
      filters:
        #statusCodes: ["200"]     ## keep only access logs within the given status code ranges
        retryAttempts: true       ## keep access logs for requests that were retried
        minDuration: 20           ## keep access logs for requests that took longer than this duration
      fields:                     ## which access log fields to keep (keep / drop)
        defaultMode: keep         ## keep access log fields by default
        names:                    ## per-field overrides
          ClientUsername: drop
        headers:                  ## which header fields to keep
          defaultMode: keep       ## keep header fields by default
          names:                  ## per-header overrides
            User-Agent: redact
            Authorization: drop
            Content-Type: keep
    #tracing:                     ## tracing; supports zipkin, datadog, jaeger, instana, haystack, etc.
    #  serviceName:               ## service name shown in the tracing backend
    #  zipkin:                    ## zipkin settings
    #    sameSpan: true           ## use Zipkin SameSpan RPC-style tracing
    #    id128Bit: true           ## use 128-bit Zipkin trace IDs
    #    sampleRate: 0.1          ## trace sampling rate (a value between 0.0 and 1.0)
    #    httpEndpoint: http://localhost:9411/api/v2/spans   ## Zipkin server endpoint

On vms21.cos.com: create the Traefik ConfigMap resource

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-config.yaml -n kube-system

configmap/traefik-config created

- -n: the namespace in which Traefik will run

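4 Label the nodes

Traefik is deployed as a Kubernetes DaemonSet with a nodeSelector, so the target nodes must be labeled in advance; the pods are then scheduled only onto nodes that carry the label.

On vms21.cos.com: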

[root@vms21 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms21.cos.com Ready master,worker 2d23h v1.18.5

vms22.cos.com Ready master,worker 2d23h v1.18.5

[root@vms21 ~]# kubectl label nodes vms21.cos.com IngressProxy=true

node/vms21.cos.com labeled

[root@vms21 ~]# kubectl label nodes vms22.cos.com IngressProxy=true

node/vms22.cos.com labeled

[root@vms21 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

vms21.cos.com Ready master,worker 2d23h v1.18.5 IngressProxy=true,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms21.cos.com,kubernetes.io/os=linux,node-role.kubernetes.io/master=,node-role.kubernetes.io/worker=

vms22.cos.com Ready master,worker 2d23h v1.18.5 IngressProxy=true,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms22.cos.com,kubernetes.io/os=linux,node-role.kubernetes.io/master=,node-role.kubernetes.io/worker=

[root@vms21 ~]# kubectl label nodes vms22.cos.com IngressProxy-

[root@vms21 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

vms21.cos.com Ready master,worker 2d23h v1.18.5 IngressProxy=true,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms21.cos.com,kubernetes.io/os=linux,node-role.kubernetes.io/master=,node-role.kubernetes.io/worker=

vms22.cos.com Ready master,worker 2d23h v1.18.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms22.cos.com,kubernetes.io/os=linux,node-role.kubernetes.io/master=,node-role.kubernetes.io/worker=

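To remove a label, run kubectl label nodes vms22.cos.com IngressProxy- as shown above. After that command only vms21.cos.com carries the label, so the DaemonSet in the next step schedules a single Traefik pod.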
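
On a live cluster the simplest check is kubectl get nodes -l IngressProxy=true. When only a captured --show-labels listing is available (as in the output above), a small filter does the same job. The sketch below is illustrative only; nodes_with_label is a hypothetical helper name and it assumes LABELS is the last column of the listing.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original article): read the output of
# `kubectl get nodes --show-labels` on stdin and print the names of the
# nodes whose LABELS column contains the exact label given as $1.
nodes_with_label() {
  awk -v want="$1" '
    NR == 1 { next }                      # skip the header row
    {
      n = split($NF, labels, ",")         # LABELS is the last column
      for (i = 1; i <= n; i++)
        if (labels[i] == want) { print $1; break }
    }
  '
}

# Example:
#   kubectl get nodes --show-labels | nodes_with_label IngressProxy=true
```

Every node it prints is a node on which the Traefik DaemonSet is allowed to run.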

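5 Deploy Traefik on Kubernetes

Traefik is deployed as a DaemonSet, which makes it easy to extend across multiple servers, and uses hostPort to bind ports 81 (HTTP) and 443 (HTTPS) on each server so that traffic can enter Kubernetes through the physical host.

On the operations host vms200.cos.com:

[root@vms200 traefik]# vi /data/k8s-yaml/traefik/traefik-deploy.yaml   # create the Traefik deployment manifest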

apiVersion: v1
kind: Service
metadata:
  name: traefik
spec:
  ports:
    - name: web
      port: 80
    - name: websecure
      port: 443
    - name: admin
      port: 8080
  selector:
    app: traefik
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: traefik-ingress-controller
  labels:
    app: traefik
spec:
  selector:
    matchLabels:
      app: traefik
  template:
    metadata:
      name: traefik
      labels:
        app: traefik
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 1
      containers:
        - image: harbor.op.com/public/traefik:v2.2.7
          name: traefik-ingress-lb
          ports:
            - name: web
              containerPort: 80
              hostPort: 81              ## bind container port 80 to port 81 on the host
            - name: websecure
              containerPort: 443
              hostPort: 443             ## bind container port 443 to port 443 on the host
            - name: admin
              containerPort: 8080       ## Traefik Dashboard port
          resources:
            limits:
              cpu: 2000m
              memory: 1024Mi
            requests:
              cpu: 1000m
              memory: 1024Mi
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
          args:
            - --configfile=/config/traefik.yaml
          volumeMounts:
            - mountPath: "/config"
              name: "config"
          readinessProbe:
            httpGet:
              path: /ping
              port: 8080
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          livenessProbe:
            httpGet:
              path: /ping
              port: 8080
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
      volumes:
        - name: config
          configMap:
            name: traefik-config
      tolerations:                      ## tolerate all taints, so tainted nodes can still run Traefik
        - operator: "Exists"
      nodeSelector:                     ## run only on nodes that carry this label
        IngressProxy: "true"

- The image is changed to: image: harbor.op.com/public/traefik:v2.2.7

- Pull the image in advance and push it to the private registry (on the operations host vms200.cos.com):

[root@vms200 harbor]# docker pull traefik:v2.2.7

...

[root@vms200 harbor]# docker images |grep traefik

traefik v2.2.7 de086c281ea7 2 days ago 78.4MB

[root@vms200 harbor]# docker tag traefik:v2.2.7 harbor.op.com/public/traefik:v2.2.7

[root@vms200 harbor]# docker push harbor.op.com/public/traefik:v2.2.7

...

On vms21.cos.com: deploy Traefik on Kubernetes

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-deploy.yaml -n kube-system

service/traefik created

daemonset.apps/traefik-ingress-controller created

[root@vms21 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6597799787-zmv9g 1/1 Running 2 2d1h

traefik-ingress-controller-fmfn5 1/1 Running 0 30s

[root@vms21 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 2d1h

traefik ClusterIP 10.168.183.81 <none> 80/TCP,443/TCP,8080/TCP 5s

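Traefik v2.2.7 is now deployed.

6 Configure routing rules

Method 1: configure Traefik routing rules with CRDs

For details on creating routing rules with CRDs, see the Kubernetes IngressRoute page of the Traefik documentation.

Configure an HTTP routing rule (using the Traefik Dashboard as the example)

On the operations host vms200.cos.com: [directory: /data/k8s-yaml/traefik/]

Create the Traefik Dashboard routing rule file traefik-dashboard-route.yaml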

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: traefik-dashboard-route
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`traefik.op.com`)
      kind: Rule
      services:
        - name: traefik
          port: 8080

On vms21.cos.com: create the Traefik Dashboard routing rule object

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-dashboard-route.yaml -n kube-system

ingressroute.traefik.containo.us/traefik-dashboard-route created

[root@vms21 ~]# kubectl get ingressroute -n kube-system

NAME AGE

traefik-dashboard-route 10m

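DNS does not resolve traefik.op.com yet, so for now the name can be resolved through the hosts file (C:\Windows\System32\drivers\etc\hosts).

- Append the line 192.168.26.21 traefik.op.com to the end of the hosts file and save.

- Open (or restart) the browser and go to http://traefik.op.com:81 to open the Traefik Dashboard (the web entry point is published on host port 81 in the DaemonSet above).

Configure an HTTPS routing rule (using the Kubernetes Dashboard as the example; do this step after the dashboard is deployed)

The Kubernetes Dashboard is served over HTTPS, so its routing rule is bound to the HTTPS entry point and needs a certificate.

Create the private certificate files dashboard-tls.key and dashboard-tls.crt

- Create a self-signed certificate (on vms200.cos.com):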

[root@vms200 certs]# openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout dashboard-tls.key -out dashboard-tls.crt -subj "/CN=dashboard.op.com"

Generating a RSA private key

...............................................................................................................+++++

............................................................................+++++

writing new private key to 'dashboard-tls.key'

-----

[root@vms200 certs]# ll |grep dashboard-tls.

-rw-r--r-- 1 root root 1131 Jul 24 23:06 dashboard-tls.crt

-rw------- 1 root root 1708 Jul 24 23:06 dashboard-tls.key

If the certificate above does not work, create one signed by the cluster CA instead:

[root@vms200 certs]# (umask 077; openssl genrsa -out dashboard.op.com.key 2048)

Generating RSA private key, 2048 bit long modulus (2 primes)

...................................+++++

..............................................................+++++

e is 65537 (0x010001)

[root@vms200 certs]# openssl req -new -key dashboard.op.com.key -out dashboard.op.com.csr -subj "/CN=dashboard.op.com/C=CN/ST=beijing/L=beijing/O=op/OU=ops"

[root@vms200 certs]# openssl x509 -req -in dashboard.op.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.op.com.crt -days 3650

Signature ok

subject=CN = dashboard.op.com, C = CN, ST = beijing, L = beijing, O = op, OU = ops

Getting CA Private Key

[root@vms200 certs]# ls dashboard.op.com.* -l

-rw-r--r-- 1 root root 1204 Jul 25 07:22 dashboard.op.com.crt

-rw-r--r-- 1 root root 1005 Jul 25 07:22 dashboard.op.com.csr

-rw------- 1 root root 1675 Jul 25 07:21 dashboard.op.com.key

[root@vms200 certs]# cfssl-certinfo -cert dashboard.op.com.crt   # show the certificate details

- Store the certificate in a Kubernetes Secret (on vms21.cos.com, in /opt/kubernetes/server/bin/cert):

[root@vms21 ~]# cd /opt/kubernetes/server/bin/cert/

[root@vms21 cert]# scp vms200:/opt/certs/dashboard-tls.crt .   # use dashboard.op.com.crt instead if you created the CA-signed certificate

[root@vms21 cert]# scp vms200:/opt/certs/dashboard-tls.key .   # use dashboard.op.com.key instead if you created the CA-signed certificate

[root@vms21 cert]# ll |grep dashboard-tls.

-rw-r--r-- 1 root root 1131 Jul 24 23:14 dashboard-tls.crt

-rw------- 1 root root 1708 Jul 24 23:15 dashboard-tls.key

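Traefik looks up the certificate and key in the Secret under the key names tls.crt and tls.key, so the files are mapped to those names when the Secret is created:

[root@vms21 cert]# kubectl create secret generic dashboard-op-route-tls --from-file=tls.crt=dashboard-tls.crt --from-file=tls.key=dashboard-tls.key -n kubernetes-dashboard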

secret/dashboard-op-route-tls created

Create the Kubernetes Dashboard CRD routing rule file kubernetes-dashboard-route.yaml (on vms200.cos.com):

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: kubernetes-dashboard-route
spec:
  entryPoints:
    - websecure
  tls:
    secretName: dashboard-op-route-tls
  routes:
    - match: Host(`dashboard.op.com`)
      kind: Rule
      services:
        - name: kubernetes-dashboard
          port: 443

Create the Kubernetes Dashboard routing rule object (on vms21.cos.com):

[root@vms21 cert]# kubectl apply -f http://k8s-yaml.op.com/traefik/kubernetes-dashboard-route.yaml -n kubernetes-dashboard

ingressroute.traefik.containo.us/kubernetes-dashboard-route created

[root@vms21 cert]# kubectl get ingressroute -n kubernetes-dashboard

NAME AGE

kubernetes-dashboard-route 95s

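The domain dashboard.op.com already resolves through DNS to the nginx reverse proxy, so a browser would not reach this HTTPS route directly. As before, override the name in the hosts file (C:\Windows\System32\drivers\etc\hosts).

- Append the line 192.168.26.21 dashboard.op.com to the end of the hosts file and save.

- Before logging in, delete any other routing rule that exists for the dashboard inside the cluster, for example: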

[root@vms21 cert]# kubectl delete ingress kubernetes-dashboard-ingress -n kubernetes-dashboard

ingress.extensions "kubernetes-dashboard-ingress" deleted

- 打开(或重启)浏览器输入地址:http://dashboard.op.com 打开 Kubernetes Dashboard。

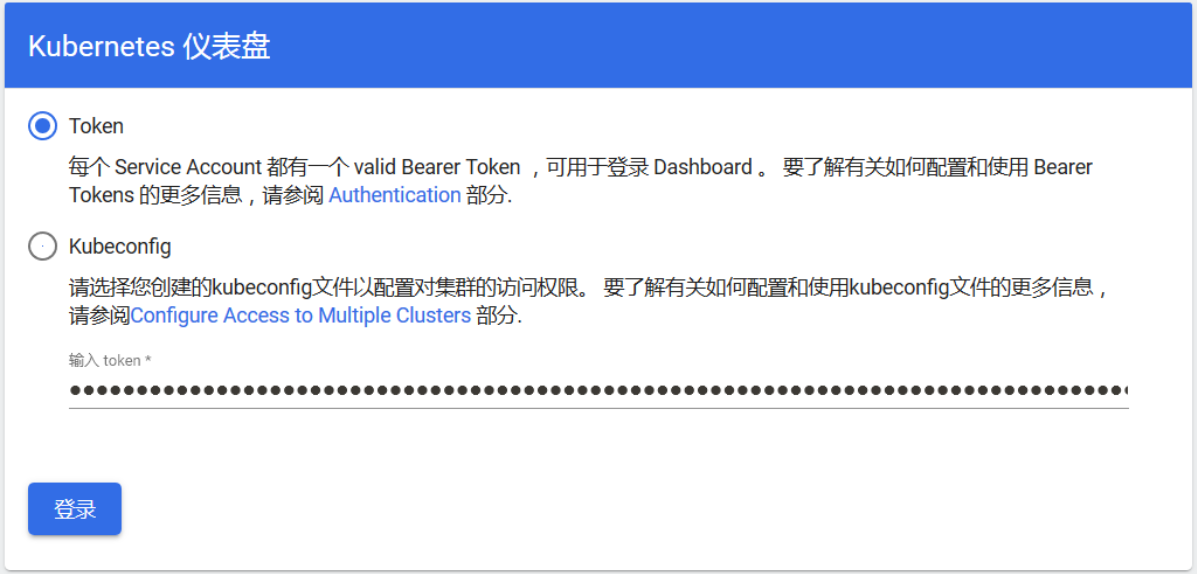

输入token进行登录:

方式二:使用 Ingress 方式配置 Traefik 路由规则

使用 Ingress 方式创建路由规则可言参考 Traefik 文档 Kubernetes Ingress

配置 HTTP 路由规则 (Traefik Dashboard 为例)

运维主机vms200.cos.com上:[目录:/data/k8s-yaml/traefik/]

创建 Traefik Ingress 路由规则 traefik-dashboard-ingress.yaml 文件

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-dashboard-ingress
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
    traefik.ingress.kubernetes.io/router.entrypoints: web
spec:
  rules:
    - host: traefik.op.com
      http:
        paths:
          - path: /
            backend:
              serviceName: traefik
              servicePort: 8080

On vms21.cos.com: create the Traefik Dashboard Ingress routing rule object

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/traefik/traefik-dashboard-ingress.yaml -n kube-system

ingress.extensions/traefik-dashboard-ingress created

[root@vms21 ~]# kubectl get ingress -n kube-system

NAME CLASS HOSTS ADDRESS PORTS AGE

traefik-dashboard-ingress <none> traefik.op.com 80 16s

To verify access through the Ingress alone, delete the IngressRoute created earlier:

[root@vms21 ~]# kubectl delete -f http://k8s-yaml.op.com/traefik/traefik-dashboard-route.yaml -n kube-system

ingressroute.traefik.containo.us "traefik-dashboard-route" deleted

[root@vms21 ~]# kubectl get ingressroute -n kube-system

No resources found in kube-system namespace.

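Configure an HTTPS routing rule (using the Kubernetes Dashboard as the example; do this step after the dashboard is deployed)

As with the CRD method above, a certificate is needed first; the routing rule is then created as an Ingress.

Create the private certificate files (same as above; this example uses the CA-signed dashboard.op.com.key and dashboard.op.com.crt)

- Store the certificate in a Kubernetes Secret (on vms21.cos.com, in /opt/kubernetes/server/bin/cert):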

[root@vms21 ~]# cd /opt/kubernetes/server/bin/cert/

[root@vms21 cert]# scp vms200:/opt/certs/dashboard.op.com.crt .

[root@vms21 cert]# scp vms200:/opt/certs/dashboard.op.com.key .

[root@vms21 cert]# ll |grep dashboard.op.com

-rw-r--r-- 1 root root 1204 Jul 25 07:24 dashboard.op.com.crt

-rw------- 1 root root 1675 Jul 25 07:25 dashboard.op.com.key

[root@vms21 cert]# kubectl create secret generic dashboard-op-tls --from-file=tls.crt=dashboard.op.com.crt --from-file=tls.key=dashboard.op.com.key -n kubernetes-dashboard

secret/dashboard-op-tls created

Create the Kubernetes Dashboard Ingress routing rule file kubernetes-dashboard-ingress.yaml (on vms200.cos.com):

[root@vms200 traefik]# vi /data/k8s-yaml/traefik/kubernetes-dashboard-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard-ingress
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/ingress.class: traefik
    traefik.ingress.kubernetes.io/router.tls: "true"
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
spec:
  tls:
    - secretName: dashboard-op-tls
  rules:
    - host: dashboard.op.com
      http:
        paths:
          - path: /
            backend:
              serviceName: kubernetes-dashboard
              servicePort: 443

Create the Kubernetes Dashboard Ingress routing rule object (on vms21.cos.com):

[root@vms21 cert]# kubectl apply -f http://k8s-yaml.op.com/traefik/kubernetes-dashboard-ingress.yaml -n kubernetes-dashboard

ingress.extensions/kubernetes-dashboard-ingress created

[root@vms21 cert]# kubectl get ingress -n kubernetes-dashboard

NAME CLASS HOSTS ADDRESS PORTS AGE

kubernetes-dashboard-ingress <none> dashboard.op.com 80, 443 76m

Before logging in, delete any other routing rule that exists for the dashboard inside the cluster, for example:

[root@vms21 cert]# kubectl delete ingressroute kubernetes-dashboard-route -n kubernetes-dashboard

ingressroute.traefik.containo.us "kubernetes-dashboard-route" deleted

7 Set up the reverse proxy on the front-end nginx

On both vms11 and vms12, configure a reverse proxy that forwards all requests for the wildcard domain (*.op.com) to Traefik.

cat >/etc/nginx/conf.d/op.com.conf <<'EOF'
upstream default_backend_traefik {
    server 192.168.26.21:81 max_fails=3 fail_timeout=10s;
    server 192.168.26.22:81 max_fails=3 fail_timeout=10s;
}
server {
    server_name *.op.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
EOF

# test the configuration, then reload nginx
nginx -t
nginx -s reload

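8 Add the DNS record in bind9

On vms11:

Add a record for the traefik service to the DNS zone. Note that it points at the VIP.

[root@vms11 ~]# vi /var/named/op.com.zone   # append at the end

........

traefik A 192.168.26.10

Remember to increment the zone serial number.

Restart the named service:

[root@vms11 ~]# systemctl restart named

# verify the record with dig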

[root@vms11 ~]# dig -t A traefik.op.com +short

192.168.26.10
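
Incrementing the serial by hand is easy to forget. The sketch below is an illustration only, not part of the original procedure: bump_serial is a hypothetical helper that prints a copy of a zone file with the serial increased by one. It assumes the serial is the first all-digit field on a line tagged with a "; serial" comment, as in this article's zone file.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original article): print the zone file
# given as $1 with the SOA serial increased by one. Only the first line
# carrying a "; serial" comment is changed; spacing is preserved.
bump_serial() {
  awk '
    /;[ \t]*serial/ && !bumped {
      for (i = 1; i <= NF; i++)
        if ($i ~ /^[0-9]+$/) {
          sub($i, sprintf("%d", $i + 1))   # rewrite the number inside $0
          bumped = 1
          break
        }
    }
    { print }
  ' "$1"
}

# Example (review the result before replacing the live file):
#   bump_serial /var/named/op.com.zone > /tmp/op.com.zone.new
#   named-checkzone op.com /tmp/op.com.zone.new
```

Writing to a temporary file first keeps the live zone untouched until the new version has been checked.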

9 Test and verify

From outside the cluster, open http://traefik.op.com. If the web page is displayed normally, the service has been exposed successfully.

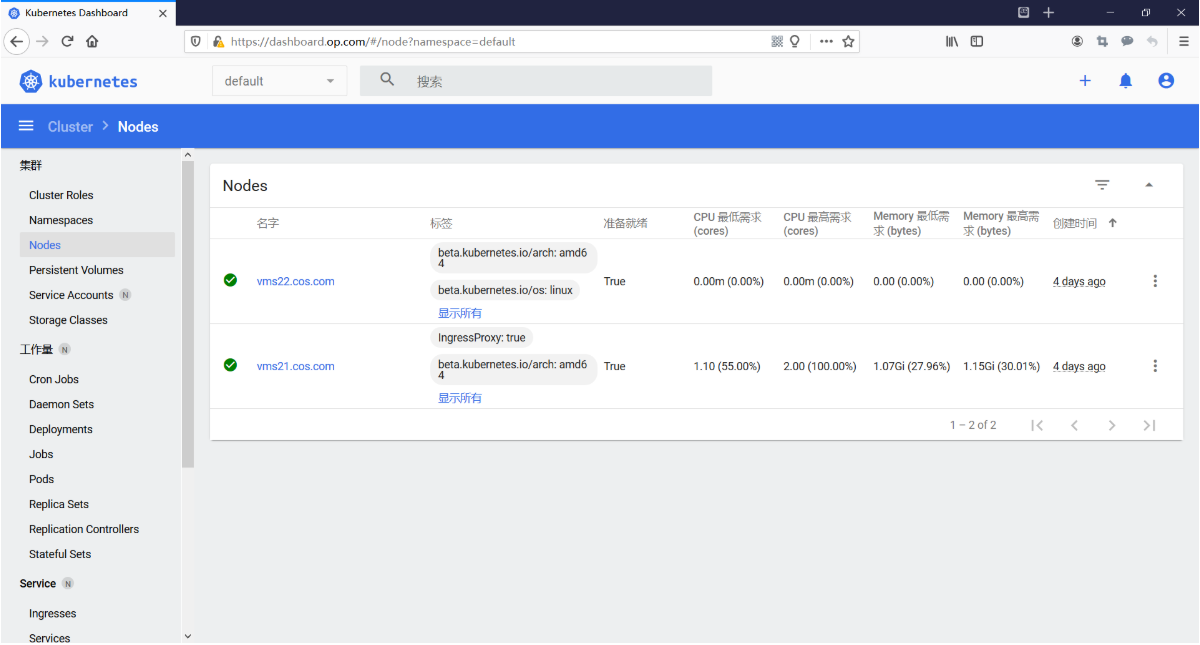

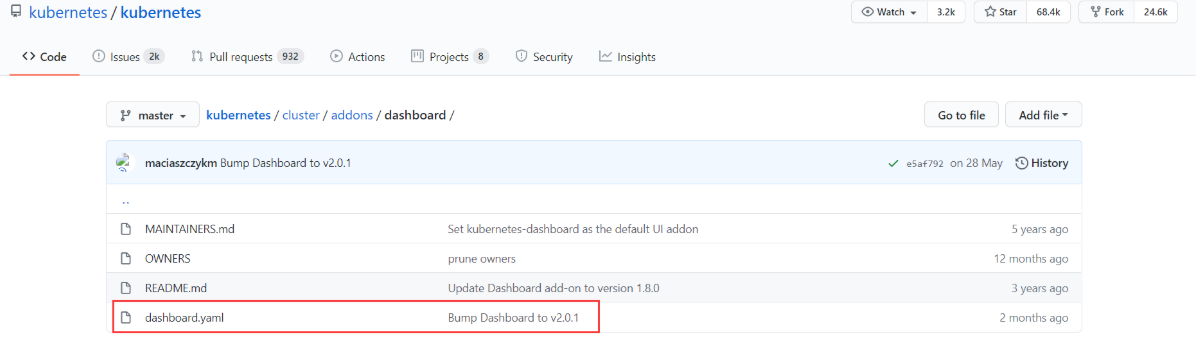

核心插件-dashboard(Kubernetes 集群的通用Web UI)

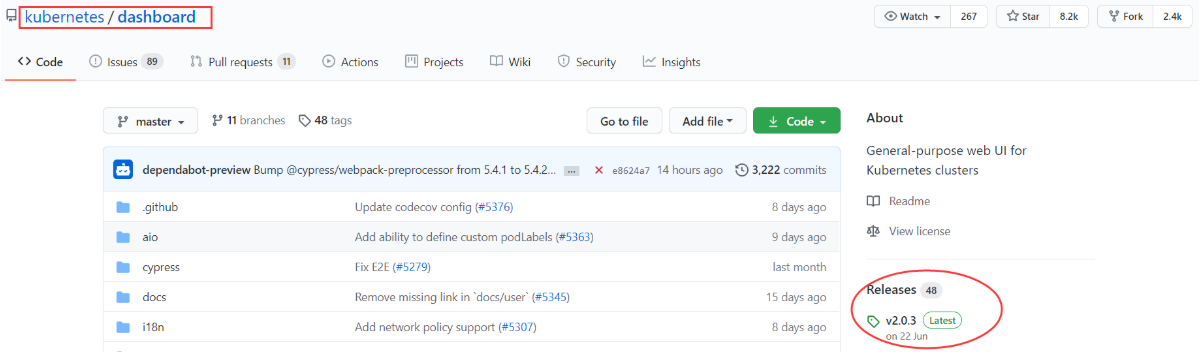

dashboard是k8s的可视化管理平台。https://github.com/kubernetes/dashboard

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

1 下载v2.0.3的资源清单文件

运维主机vms200.cos.com上:[目录:/data/k8s-yaml/dashboard]

[root@vms200 dashboard]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

--2020-07-24 14:44:16-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7552 (7.4K) [text/plain]

Saving to: ‘recommended.yaml’

recommended.yaml 100%[==================================================================>] 7.38K 6.40KB/s in 1.2s

2020-07-24 14:44:26 (6.40 KB/s) - ‘recommended.yaml’ saved [7552/7552]

[root@vms200 dashboard]# ll

total 8

-rw-r--r-- 1 root root 7552 Jul 24 14:44 recommended.yaml

2 Download the images needed for the installation

On the operations host vms200.cos.com:

- Get the image names from the yaml file and pull the images

[root@vms200 dashboard]# grep image recommended.yaml

image: kubernetesui/dashboard:v2.0.3

imagePullPolicy: Always

image: kubernetesui/metrics-scraper:v1.0.4

[root@vms200 dashboard]# docker pull kubernetesui/dashboard:v2.0.3

v2.0.3: Pulling from kubernetesui/dashboard

d5ba0740de2a: Pull complete

Digest: sha256:45ef224759bc50c84445f233fffae4aa3bdaec705cb5ee4bfe36d183b270b45d

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.3

docker.io/kubernetesui/dashboard:v2.0.3

[root@vms200 dashboard]# docker pull kubernetesui/metrics-scraper:v1.0.4

v1.0.4: Pulling from kubernetesui/metrics-scraper

07008dc53a3e: Pull complete

1f8ea7f93b39: Pull complete

04d0e0aeff30: Pull complete

Digest: sha256:555981a24f184420f3be0c79d4efb6c948a85cfce84034f85a563f4151a81cbf

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.4

docker.io/kubernetesui/metrics-scraper:v1.0.4

- Tag the images and push them to the private registry http://harbor.op.com/

[root@vms200 dashboard]# docker tag kubernetesui/dashboard:v2.0.3 harbor.op.com/public/dashboard:v2.0.3

[root@vms200 dashboard]# docker tag kubernetesui/metrics-scraper:v1.0.4 harbor.op.com/public/metrics-scraper:v1.0.4

[root@vms200 dashboard]# docker push harbor.op.com/public/dashboard:v2.0.3

The push refers to repository [harbor.op.com/public/dashboard]

3019294f9e33: Pushed

v2.0.3: digest: sha256:635b2efff46ac709b77ee699579e171aabc37a86a3e8c2c78153b763edcadcf4 size: 529

[root@vms200 dashboard]# docker push harbor.op.com/public/metrics-scraper:v1.0.4

The push refers to repository [harbor.op.com/public/metrics-scraper]

52b345e4c8e0: Pushed

14f2e8fb1e35: Pushed

57757cd7bb95: Pushed

v1.0.4: digest: sha256:d78f995c07124874c2a2e9b404cffa6bc6233668d63d6c6210574971f3d5914b size: 946

- Edit recommended.yaml

Change imagePullPolicy: Always to imagePullPolicy: IfNotPresent, and change the image references so that both images are pulled from the private registry:

...

image: harbor.op.com/public/dashboard:v2.0.3

imagePullPolicy: IfNotPresent

...

image: harbor.op.com/public/metrics-scraper:v1.0.4

imagePullPolicy: IfNotPresent

...
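
The same edit can be scripted instead of made by hand in vi. The following is a hedged sketch rather than the author's method: rewrite_images is a hypothetical filter that repoints kubernetesui/ images at harbor.op.com/public/ and switches imagePullPolicy from Always to IfNotPresent. It does not add a pull policy where the upstream file has none, so the imagePullPolicy line for metrics-scraper would still have to be added manually.

```shell
#!/usr/bin/env bash
# Hypothetical filter (not from the original article): read a manifest on
# stdin and write it to stdout with
#   - image: kubernetesui/<name>  ->  image: harbor.op.com/public/<name>
#   - imagePullPolicy: Always     ->  imagePullPolicy: IfNotPresent
# Indentation is preserved; commented-out lines are left untouched.
rewrite_images() {
  sed -E \
    -e 's#^([[:space:]]*image:[[:space:]]*)kubernetesui/#\1harbor.op.com/public/#' \
    -e 's#^([[:space:]]*imagePullPolicy:[[:space:]]*)Always[[:space:]]*$#\1IfNotPresent#'
}

# Example:
#   rewrite_images < recommended.yaml > recommended.private.yaml
#   grep image recommended.private.yaml
```

Running grep image on the result, as done earlier for the original file, is a quick way to confirm that both images now point at the private registry.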

[root@vms200 dashboard]# vi recommended.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          #image: kubernetesui/dashboard:v2.0.3
          image: harbor.op.com/public/dashboard:v2.0.3
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            - --token-ttl=43200
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          #image: kubernetesui/metrics-scraper:v1.0.4
          image: harbor.op.com/public/metrics-scraper:v1.0.4
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@vms200 dashboard]# vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  rules:
    - host: dashboard.op.com
      http:
        paths:
          - backend:
              serviceName: kubernetes-dashboard
              servicePort: 443

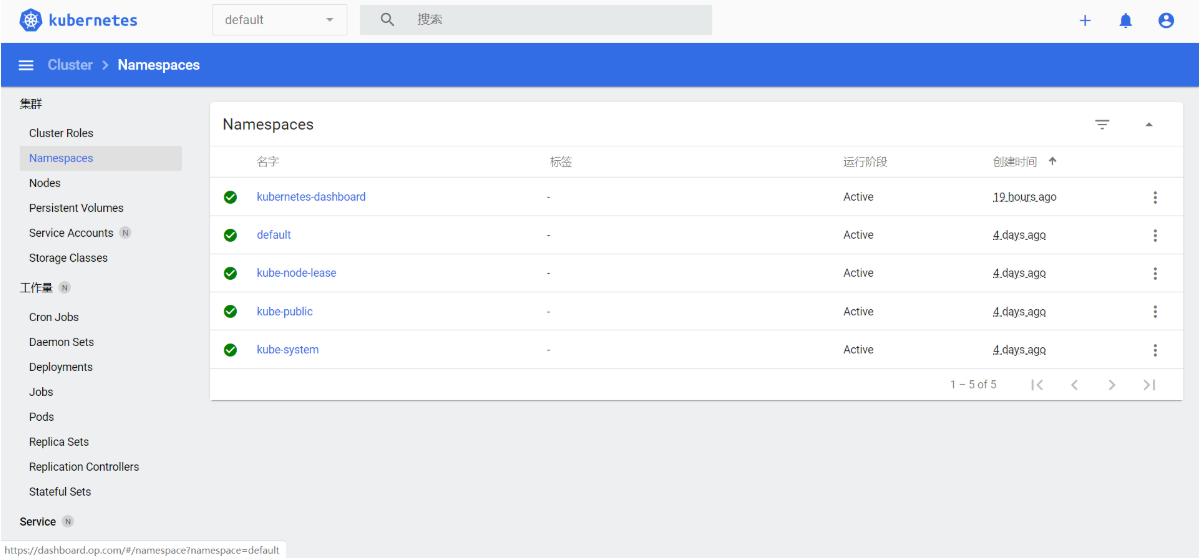

3 Apply the manifests on any compute node

On vms21.cos.com:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dashboard/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

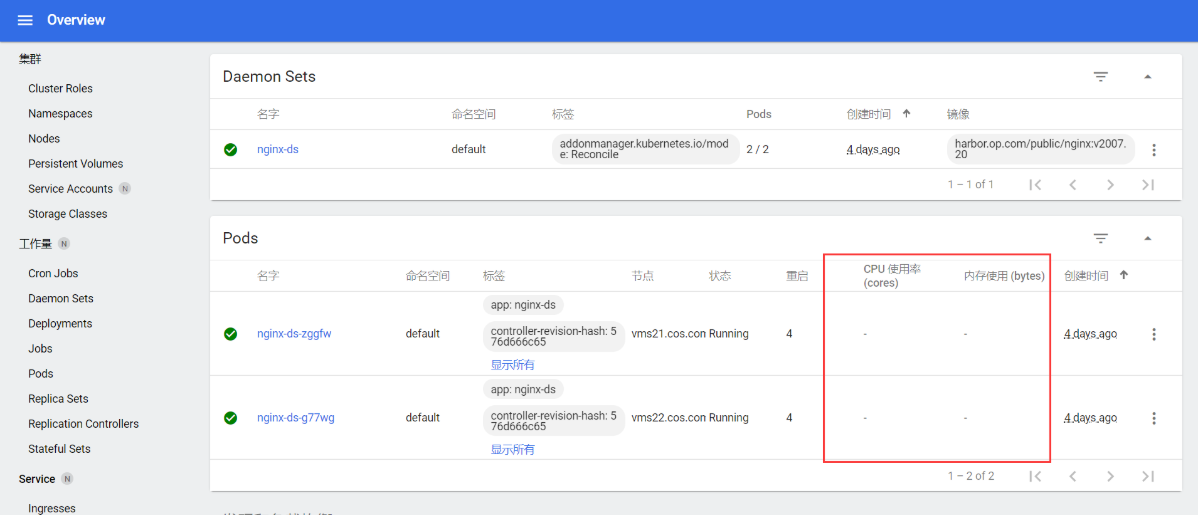

[root@vms21 ~]# kubectl get po -o wide -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dashboard-metrics-scraper-66ddb67fb7-lr74g 1/1 Running 0 2m25s 172.26.22.4 vms22.cos.com <none> <none>

kubernetes-dashboard-7d4bdcc7db-t8rzp 1/1 Running 0 2m26s 172.26.22.3 vms22.cos.com <none> <none>

[root@vms21 ~]# kubectl get svc -o wide -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

dashboard-metrics-scraper ClusterIP 10.168.143.75 <none> 8000/TCP 3m36s k8s-app=dashboard-metrics-scraper

kubernetes-dashboard ClusterIP 10.168.13.115 <none> 443/TCP 3m37s k8s-app=kubernetes-dashboard

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dashboard/ingress.yaml

ingress.extensions/kubernetes-dashboard created

[root@vms21 ~]# kubectl get ingress -n kubernetes-dashboard

NAME CLASS HOSTS ADDRESS PORTS AGE

kubernetes-dashboard <none> dashboard.op.com 80 8s

4 Creating a Service Account

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

On vms200.cos.com: [directory: /data/k8s-yaml/dashboard]

[root@vms200 dashboard]# vi dashboard-adminuser.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

[root@vms200 dashboard]# vi dashboard-adminuserbinging.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

On vms21:

[root@vms21 cert]# kubectl apply -f http://k8s-yaml.op.com/dashboard/dashboard-adminuser.yaml

[root@vms21 cert]# kubectl apply -f http://k8s-yaml.op.com/dashboard/dashboard-adminuserbinging.yaml

[root@vms21 cert]# kubectl get serviceaccounts -n kubernetes-dashboard

NAME SECRETS AGE

admin-user 1 64m

default 1 17h

kubernetes-dashboard 1 17h

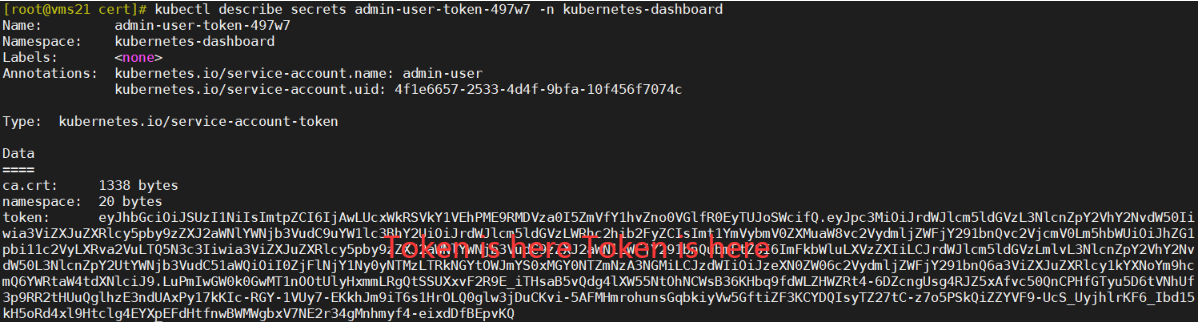

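5 Get the token

On vms21:

The list contains several service-account token secrets, and each account carries different permissions. Find the one you need (here the secret whose name starts with admin-user-token, which belongs to the admin-user account bound to cluster-admin above), then use describe to show its details, including the token.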

[root@vms21 cert]# kubectl get secrets -n kubernetes-dashboard

NAME TYPE DATA AGE

admin-user-token-497w7 kubernetes.io/service-account-token 3 69m

dashboard-op-tls Opaque 2 133m

default-token-2bd4w kubernetes.io/service-account-token 3 17h

kubernetes-dashboard-certs Opaque 0 17h

kubernetes-dashboard-csrf Opaque 1 17h

kubernetes-dashboard-key-holder Opaque 2 17h

kubernetes-dashboard-token-zpj6z kubernetes.io/service-account-token 3 17h

[root@vms21 cert]# kubectl describe secrets admin-user-token-497w7 -n kubernetes-dashboard

| Token (for this deployment only) |

|---|

| eyJhbGciOiJSUzI1NiIsImtpZCI6IjAwLUcxWkRSVkY1VEhPME9RMDVza0I5ZmVfY1hvZno0VGlfR0EyTUJoSWcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTQ5N3c3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI0ZjFlNjY1Ny0yNTMzLTRkNGYtOWJmYS0xMGY0NTZmNzA3NGMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.LuPmIwGW0k0GwMT1nOOtUlyHxmmLRgQtSSUXxvF2R9E_iTHsaB5vQdg4lXW55NtOhNCWsB36KHbq9fdWLZHWZRt4-6DZcngUsg4RJZ5xAfvc50QnCPHfGTyu5D6tVNhUf3p9RR2tHUuQglhzE3ndUAxPy17kKIc-RGY-1VUy7-EKkhJm9iT6s1HrOLQ0glw3jDuCKvi-5AFMHmrohunsGqbkiyVw5GftiZF3KCYDQIsyTZ27tC-z7o5PSkQiZZYVF9-UcS_UyjhlrKF6_Ibd15kH5oRd4xl9Htclg4EYXpEFdHtfnwBWMWgbxV7NE2r34gMnhmyf4-eixdDfBEpvKQ |
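The token printed by kubectl describe is a JWT, so its claims can be inspected locally before it is pasted into the login page. The sketch below is one way to do that in shell. jwt_payload is a hypothetical helper name (it is not part of kubectl), and the sketch assumes the GNU coreutils base64 found on CentOS.

```shell
# Decode the payload (second dot-separated segment) of a JWT.
# JWT segments are base64url-encoded without padding, so map the
# alphabet back to standard base64 and restore the '=' padding first.
jwt_payload() {
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Pad to a multiple of 4 characters.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}
```

Run against the admin-user token, jwt_payload "$TOKEN" should print a JSON object whose sub claim names system:serviceaccount:kubernetes-dashboard:admin-user. The token itself can also be fetched without describe, for example with kubectl -n kubernetes-dashboard get secret admin-user-token-497w7 -o jsonpath='{.data.token}' | base64 -d (the secret name suffix differs per cluster).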

6 Add the DNS record in bind9

On vms11:

[root@vms11 ~]# vi /var/named/op.com.zone

$ORIGIN op.com.
$TTL 600 ; 10 minutes
@               IN SOA  dns.op.com. dnsadmin.op.com. (
                20200704   ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.op.com.
$TTL 60 ; 1 minute
dns             A    192.168.26.11
harbor          A    192.168.26.200
k8s-yaml        A    192.168.26.200
traefik         A    192.168.26.10
dashboard       A    192.168.26.10

- Append one line at the end: dashboard A 192.168.26.10

- Remember to increment the serial number.
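The serial bump is easy to forget when editing by hand. As a minimal sketch, assuming GNU sed (as on CentOS) and a serial line tagged with a "; serial" comment like the one in op.com.zone, a hypothetical helper named bump_serial could increment it in place:

```shell
# Increment the SOA serial of a BIND zone file in place.
# Assumes the serial sits on its own line followed by a "; serial" comment.
bump_serial() {
  zone_file=$1
  # Extract the current serial from the first matching line.
  old=$(sed -n 's/^[[:space:]]*\([0-9][0-9]*\)[[:space:]]*;[[:space:]]*serial.*/\1/p' "$zone_file" | head -n 1)
  [ -n "$old" ] || { echo "no serial line found in $zone_file" >&2; return 1; }
  new=$((old + 1))
  # Rewrite only the serial line, keeping its indentation and comment.
  sed -i "s/^\([[:space:]]*\)$old\([[:space:]]*;[[:space:]]*serial\)/\1$new\2/" "$zone_file"
}
```

Calling bump_serial /var/named/op.com.zone before restarting named would turn 20200704 into 20200705. A date-based scheme (YYYYMMDDnn) is a common alternative; this sketch simply adds one.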

Restart named:

[root@vms11 ~]# systemctl restart named

[root@vms11 ~]# dig -t A dashboard.op.com +short

192.168.26.10

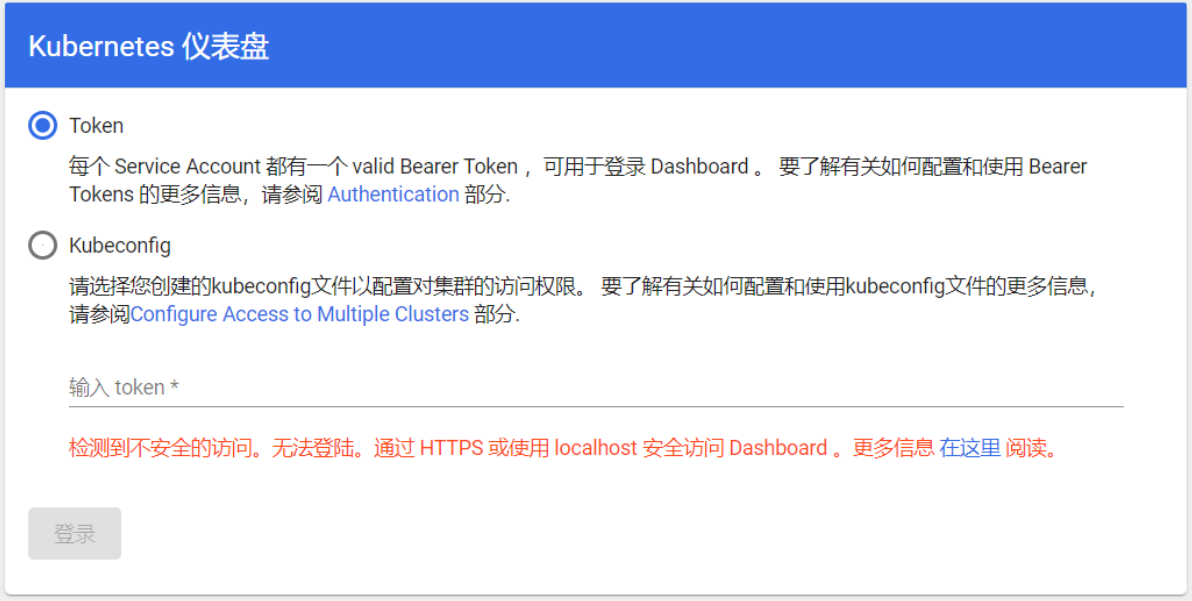

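7 Verify in a browser

Open http://dashboard.op.com in a browser on your local machine. If the web UI appears, the deployment succeeded.

Without a signed certificate, logging in with the token still fails, because the Dashboard only accepts logins over HTTPS. A certificate therefore has to be issued.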

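8 Issue the certificate

On the ops host vms200.cos.com:

Generate the certificate

Create the JSON configuration file for the certificate signing request (CSR)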

[root@vms200 ~]# cd /opt/certs/

[root@vms200 certs]# cat > /opt/certs/dashboard-csr.json <<EOF
{
    "CN": "*.op.com",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "op",
            "OU": "ops"
        }
    ]
}
EOF

Generate the dashboard certificate and private key

[root@vms200 certs]# cfssl gencert -ca=ca.pem \
    -ca-key=ca-key.pem \
    -config=ca-config.json \
    -profile=server \
    dashboard-csr.json | cfssl-json -bare dashboard

Check the generated certificate and private key

[root@vms200 certs]# ll |grep dash

-rw-r--r-- 1 root root 993 Jul 24 22:53 dashboard.csr

-rw-r--r-- 1 root root 280 Jul 24 22:52 dashboard-csr.json

-rw------- 1 root root 1675 Jul 24 22:53 dashboard-key.pem

-rw-r--r-- 1 root root 1363 Jul 24 22:53 dashboard.pem
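Listing the files only shows that they exist. Before copying them to the proxies it can help to confirm what was issued and that the key belongs to the certificate. The following is a sketch using the openssl CLI, which is assumed to be installed on vms200; cert_summary and cert_matches_key are hypothetical helper names.

```shell
# Print the subject and validity window of a PEM certificate.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -dates
}

# Succeed only if the certificate ($1) and private key ($2)
# carry the same public key.
cert_matches_key() {
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
  key_pub=$(openssl pkey -in "$2" -pubout) || return 1
  [ -n "$cert_pub" ] && [ "$cert_pub" = "$key_pub" ]
}
```

For example, cert_summary dashboard.pem should show a subject containing CN = *.op.com, and cert_matches_key dashboard.pem dashboard-key.pem && echo ok confirms the pair belongs together.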

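Update the nginx configuration and deploy the certificate to serve HTTPS

Perform the same steps on both front-end proxies, vms11 and vms12.

Copy the certificate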

mkdir /etc/nginx/certs

scp vms200:/opt/certs/dashboard.pem /etc/nginx/certs

scp vms200:/opt/certs/dashboard-key.pem /etc/nginx/certs

ll /etc/nginx/certs

total 8

-rw------- 1 root root 1675 Jul 25 10:45 dashboard-key.pem

-rw-r--r-- 1 root root 1363 Jul 25 10:45 dashboard.pem

Create the nginx configuration

cat >/etc/nginx/conf.d/dashboard.op.com.conf <<'EOF'
server {
    listen       80;
    server_name  dashboard.op.com;
    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.op.com;

    ssl_certificate "certs/dashboard.pem";
    ssl_certificate_key "certs/dashboard-key.pem";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
EOF

Test the configuration and reload nginx

nginx -t

nginx -s reload

Log in to the dashboard again

After refreshing the page, log in again with the token obtained earlier. This time the login succeeds.

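9 About dashboard authorization

A question to consider: when access to the dashboard is controlled through RBAC, how can permissions be made fine-grained? For example, developers should be able to view resources but not change them, each project team should see only its own resources, and testers should see only test-related resources.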

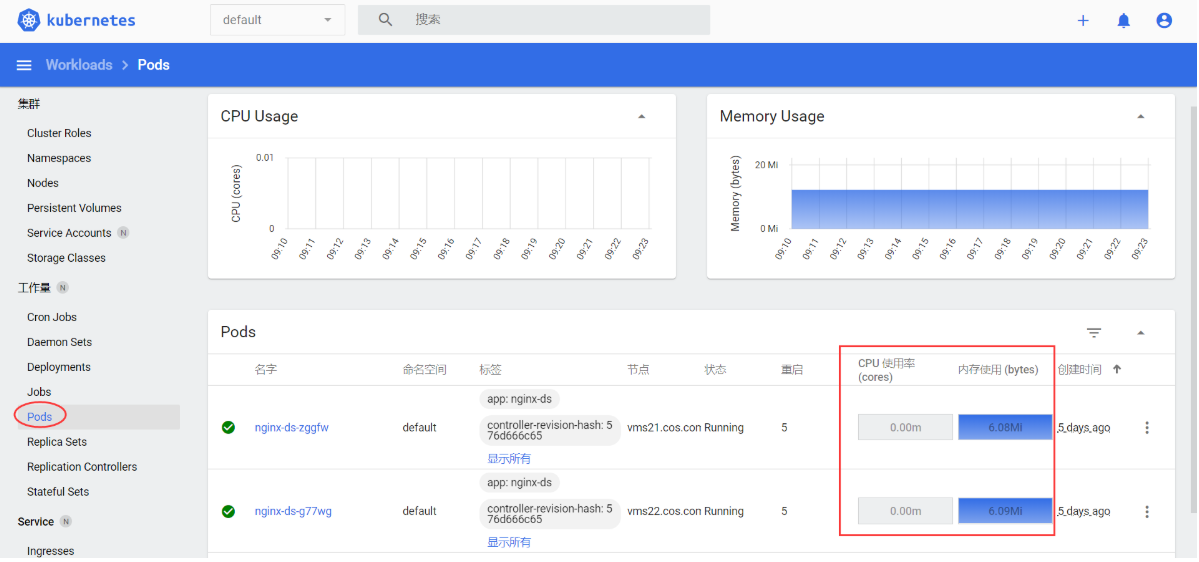
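One possible answer to the question above is to give each group its own ServiceAccount and bind it to the built-in read-only ClusterRole view with a namespaced RoleBinding, so the account can read resources in that namespace only. The sketch below writes such a manifest in the same heredoc style used elsewhere in this guide. The namespace dev, the account dev-viewer, and the file name are hypothetical and chosen only for illustration.

```shell
# Write a manifest for a read-only Dashboard login scoped to one namespace.
# "dev" and "dev-viewer" are hypothetical names, not part of the original setup.
cat > dashboard-dev-viewer.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dev-viewer
  namespace: dev
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-viewer-view
  namespace: dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view        # built-in read-only ClusterRole
subjects:
- kind: ServiceAccount
  name: dev-viewer
  namespace: dev
EOF
```

After the namespace exists and the file is applied with kubectl apply -f, the token of dev-viewer (taken from its secret in the dev namespace) can be used to log in. Because a RoleBinding is namespaced, the same ClusterRole can be reused for a test team by repeating the pattern in another namespace. Note that view does not grant access to Secrets.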

10 Deploy metrics-server

GitHub: https://github.com/kubernetes-sigs/metrics-server

This deployment uses metrics-server:v0.3.7 (the latest release at the time of writing), deployed as a Kubernetes Deployment.

On the ops host vms200.cos.com (directory: /data/k8s-yaml/dashboard):

- Download the components.yaml file

[root@vms200 dashboard]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

- Pull the image and push it to the private registry

[root@vms200 dashboard]# grep image components.yaml

# mount in tmp so we can safely use from-scratch images and/or read-only containers

image: k8s.gcr.io/metrics-server/metrics-server:v0.3.7

imagePullPolicy: IfNotPresent

[root@vms200 dashboard]# docker pull juestnow/metrics-server:v0.3.7 # k8s.gcr.io is hard to reach from this environment

[root@vms200 dashboard]# docker tag juestnow/metrics-server:v0.3.7 harbor.op.com/public/metrics-server:v0.3.7

[root@vms200 dashboard]# docker push harbor.op.com/public/metrics-server:v0.3.7

- In components.yaml, change the Deployment's image and pull policy, and add args:

...
      containers:
      - name: metrics-server
        #image: k8s.gcr.io/metrics-server/metrics-server:v0.3.7
        image: harbor.op.com/public/metrics-server:v0.3.7
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-insecure-tls
          - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
          - --metric-resolution=30s
...
      hostNetwork: true
...

On vms21.cos.com, deploy to k8s:

[root@vms21 cert]# kubectl apply -f http://k8s-yaml.op.com/dashboard/components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@vms21 cert]# kubectl get pod -n kube-system | grep metrics-server

metrics-server-68ccbddf95-kss26 1/1 Running 0 87s

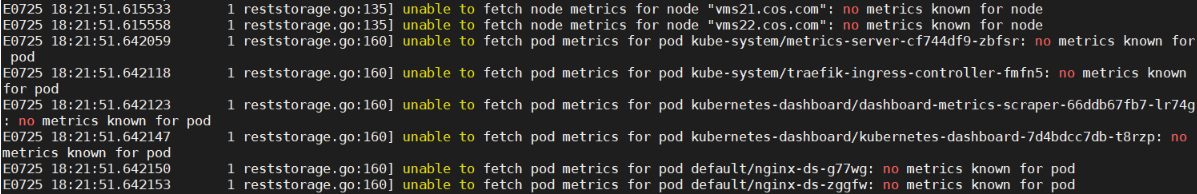

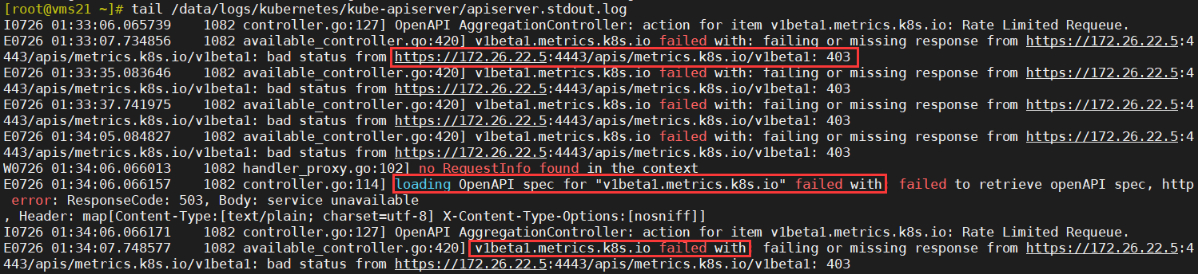

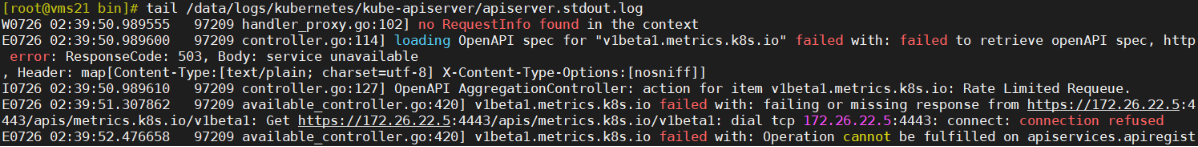

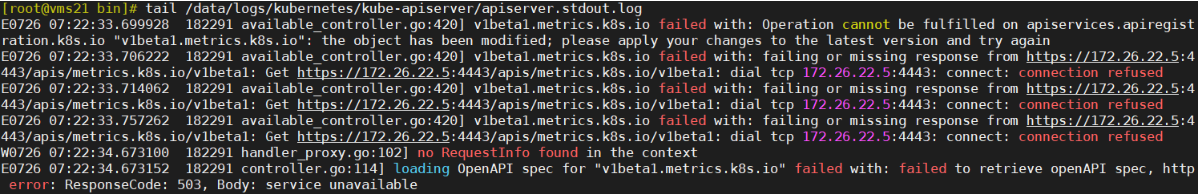

Note: a running pod does not mean the deployment is fully successful. It still needs to be verified and debugged.
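A quick way to verify is to ask the API server whether the aggregated metrics API is actually available, and only then query metrics. The sketch below wraps two standard kubectl commands. The function names metrics_api_available and verify_metrics_server are hypothetical helpers, and the APIService name is the one created by components.yaml above.

```shell
# Print the status of the "Available" condition (True/False) of the
# metrics APIService registered by components.yaml.
metrics_api_available() {
  kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}'
}

# Check the APIService first, then fetch node metrics.
# Stops with a non-zero status at the first failure.
verify_metrics_server() {
  status=$(metrics_api_available) || return 1
  if [ "$status" != "True" ]; then
    echo "metrics API not available (status: ${status:-unknown})" >&2
    return 1
  fi
  kubectl top node
}
```

If verify_metrics_server fails, kubectl describe apiservice v1beta1.metrics.k8s.io and the metrics-server pod logs are the usual places to look for the reason.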

Issue the certificate

On the ops host vms200.cos.com:

Create the JSON configuration file for the certificate signing request (CSR)

[root@vms200 ~]# cd /opt/certs

[root@vms200 certs]# vi metrics-server-csr.json

{
    "CN": "system:metrics-server",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "op",
            "OU": "ops"
        }
    ]
}

Generate the metrics-server certificate and private key

[root@vms200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer metrics-server-csr.json | cfssl-json -bare metrics-server

Check the generated certificate and private key

[root@vms200 certs]# ll metr*