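Offline deployment of a k8s-v1.20.2 cluster (Part 1)

Overview

Because of network restrictions, none of the servers used here can reach the public internet, so the cluster has to be deployed offline. The machines are listed below: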

| ip | hostname |
|---|---|
| 192.168.26.71 | master01 |
| 192.168.26.72 | master02 |
| 192.168.26.73 | node01 |
| 192.168.26.74 | node02 |
| 192.168.26.75 | node03 |

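None of these machines can reach the public internet, and yum can only reach the base repository, so the packages that k8s needs have to be obtained some other way. A machine with internet access is therefore required to download them. Ideally it is a freshly installed system on which nothing has been done yet, because yum is used to download the required packages. The internet-connected machine must run the same kernel version as the machines above; all of them use CentOS 7.9 here.

Run the following on vm10:

    [root@vm10 ~]# uname -r
    3.10.0-1160.el7.x86_64
    [root@vm10 ~]# cat /etc/redhat-release
    CentOS Linux release 7.9.2009 (Core)
    ## Make yum keep the rpm packages it downloads
    [root@vm10 ~]# vi /etc/yum.conf
    ## change to: keepcache=1
    ~]# cat << EOF >> /etc/hosts
    192.168.26.71 master01
    192.168.26.72 master02
    192.168.26.73 node01
    192.168.26.74 node02
    192.168.26.75 node03
    EOF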

Downloading the packages needed for the cluster

On vm10, install docker-ce, keepalived and the k8s components kubelet, kubectl and kubeadm. The steps are:

    # Install docker
    ~]# yum install -y yum-utils device-mapper-persistent-data lvm2 bash-completion
    ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    ~]# yum install docker-ce docker-ce-cli containerd.io -y
    # Install the k8s packages
    ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

To install a specific version, run `yum list kubelet --showduplicates` to find the available versions.

    ~]# yum install -y kubelet kubeadm kubectl    ## installs the latest version, v1.20.2 at the time

Installing the tools, the yum repository and docker

    [root@vm10 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2 bash-completion keepalived
    ...
    [root@vm10 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    ...
    [root@vm10 ~]# yum install docker-ce docker-ce-cli containerd.io -y
    ...

Installing the k8s packages

    [root@vm10 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    [root@vm10 ~]# yum install -y kubelet kubeadm kubectl

To install a specific version, run `yum list kubelet --showduplicates` to find the available versions. Here the latest version, v1.20.2, is installed.
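As a minimal sketch of pinning a version instead of taking the latest, the helper below builds the `name-version` arguments that yum accepts for the three packages. The function name `pinned_pkgs` is made up for illustration, and the sketch assumes all three packages are published with the same version string. It only prints the commands rather than running them.

```shell
#!/bin/sh
# pinned_pkgs is a hypothetical helper: it prints the three Kubernetes
# packages in yum's "name-version" form for the version given as $1.
pinned_pkgs() {
    echo "kubelet-$1 kubeadm-$1 kubectl-$1"
}

# Print the commands instead of executing them, so this is safe to run anywhere.
echo "yum list kubelet --showduplicates"
echo "yum install -y $(pinned_pkgs 1.20.2)"
```

Pinning matters for an offline install because every node later installs exactly the rpm files cached on this machine.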

Starting the k8s cluster requires docker images, so the images have to be downloaded on the local machine and then transferred to the servers being deployed.

Configuring and starting docker

    [root@vm10 ~]# mkdir -p /etc/docker /data/docker
    [root@vm10 ~]# cat <<EOF > /etc/docker/daemon.json
    {
      "graph": "/data/docker",
      "storage-driver": "overlay2",
      "registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],
      "bip": "172.26.10.1/24",
      "exec-opts": ["native.cgroupdriver=systemd"],
      "live-restore": true
    }
    EOF

    [root@vm10 ~]# systemctl start docker ; systemctl enable docker
    [root@vm10 ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:33:18:da brd ff:ff:ff:ff:ff:ff
        inet 192.168.26.10/24 brd 192.168.26.255 scope global noprefixroute ens32
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe33:18da/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:22:21:ef:8b brd ff:ff:ff:ff:ff:ff
        inet 172.26.10.1/24 brd 172.26.10.255 scope global docker0
           valid_lft forever preferred_lft forever

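Pulling the images

The images are pulled from the Aliyun mirror, which can be done with a script. For practice, the script is afterwards broken down into individual pulls.

    ~]# vim images.sh

    #!/bin/bash
    url=registry.cn-hangzhou.aliyuncs.com/google_containers
    version=v1.20.2
    # List the images kubeadm needs and strip the "k8s.gcr.io/" prefix
    images=($(kubeadm config images list --kubernetes-version=$version | awk -F '/' '{print $2}'))
    for imagename in "${images[@]}"; do
        docker pull $url/$imagename
        docker tag $url/$imagename k8s.gcr.io/$imagename
        docker rmi -f $url/$imagename
    done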

After the script finishes, check the images:

    ~]# docker images
    REPOSITORY                           TAG        IMAGE ID       CREATED         SIZE
    k8s.gcr.io/kube-proxy                v1.20.2    43154ddb57a8   2 weeks ago     118MB
    k8s.gcr.io/kube-apiserver            v1.20.2    a8c2fdb8bf76   2 weeks ago     122MB
    k8s.gcr.io/kube-controller-manager   v1.20.2    a27166429d98   2 weeks ago     116MB
    k8s.gcr.io/kube-scheduler            v1.20.2    ed2c44fbdd78   2 weeks ago     46.4MB
    k8s.gcr.io/etcd                      3.4.13-0   0369cf4303ff   5 months ago    253MB
    k8s.gcr.io/coredns                   1.7.0      bfe3a36ebd25   7 months ago    45.2MB
    k8s.gcr.io/pause                     3.2        80d28bedfe5d   11 months ago   683kB
    ## Start a single-node k8s on test-node
    ~]# kubeadm init
    ## The manifest that matches the flannel image also has to be downloaded
    ## The flannel manifests are on GitHub: https://github.com/coreos/flannel/tree/master/Documentation
    ~]# kubectl apply -f kube-flannel.yml

Manifest for the flannel:v0.13.1-rc1 image (open it in a browser and copy the contents):

https://github.com/coreos/flannel/blob/v0.13.1-rc1/Documentation/kube-flannel.yml

Listing the images used by the k8s installation

    [root@vm10 ~]# kubeadm config images list --kubernetes-version=v1.20.2
    k8s.gcr.io/kube-apiserver:v1.20.2
    k8s.gcr.io/kube-controller-manager:v1.20.2
    k8s.gcr.io/kube-scheduler:v1.20.2
    k8s.gcr.io/kube-proxy:v1.20.2
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0

Pulling each image from Aliyun and retagging it with the name the installer expects

    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.2
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.2 k8s.gcr.io/kube-apiserver:v1.20.2
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.2
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.2
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.2 k8s.gcr.io/kube-controller-manager:v1.20.2
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.2
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.2
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.2 k8s.gcr.io/kube-scheduler:v1.20.2
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.2
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.2
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.2 k8s.gcr.io/kube-proxy:v1.20.2
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.2
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
    ...
    [root@vm10 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
    ...
    [root@vm10 ~]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
    [root@vm10 ~]# docker rmi -f registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
    ...
    [root@vm10 ~]# docker pull quay.io/coreos/flannel:v0.13.1-rc1
    ...
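The pull, tag and remove sequence repeated above follows one rule: keep the image name and tag, swap the registry prefix. A rough sketch of that rule is shown below. The helper names `mirror_name` and `fetch_image` are hypothetical, and the script prints the docker commands unless `DRY_RUN=0` is set, so it can be read and tried without docker installed.

```shell
#!/bin/sh
# Mirror registry used in this article.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# mirror_name IMAGE: map "k8s.gcr.io/<name>:<tag>" to the same image on
# the mirror by keeping only the part after the last "/".
mirror_name() {
    echo "$MIRROR/${1##*/}"
}

# fetch_image IMAGE: pull from the mirror, retag to the original name and
# drop the mirror tag. Commands are only printed unless DRY_RUN=0.
fetch_image() {
    target=$1
    src=$(mirror_name "$target")
    for cmd in "docker pull $src" "docker tag $src $target" "docker rmi -f $src"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "$cmd"
        else
            $cmd || return 1
        fi
    done
}

fetch_image k8s.gcr.io/kube-proxy:v1.20.2
```

The flannel image is not covered by this mapping, since it is pulled directly from quay.io under its own name.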

Checking the images

    [root@vm10 ~]# docker images
    REPOSITORY                           TAG           IMAGE ID       CREATED         SIZE
    k8s.gcr.io/kube-proxy                v1.20.2       43154ddb57a8   3 weeks ago     118MB
    k8s.gcr.io/kube-apiserver            v1.20.2       a8c2fdb8bf76   3 weeks ago     122MB
    k8s.gcr.io/kube-controller-manager   v1.20.2       a27166429d98   3 weeks ago     116MB
    k8s.gcr.io/kube-scheduler            v1.20.2       ed2c44fbdd78   3 weeks ago     46.4MB
    quay.io/coreos/flannel               v0.13.1-rc1   f03a23d55e57   2 months ago    64.6MB
    k8s.gcr.io/etcd                      3.4.13-0      0369cf4303ff   5 months ago    253MB
    k8s.gcr.io/coredns                   1.7.0         bfe3a36ebd25   7 months ago    45.2MB
    k8s.gcr.io/pause                     3.2           80d28bedfe5d   11 months ago   683kB

Packaging

Pack the downloaded yum packages and the docker images, then transfer them to the servers.

    # Pack the yum packages
    ~]# cd /var/cache/
    ~]# tar zcvf yum.tar.gz yum
    # Export the docker images (always save by tag; if an image is saved by ID, its tag information is empty after loading)
    ~]# docker save -o kube-proxy.tar k8s.gcr.io/kube-proxy:v1.20.2
    ~]# docker save -o kube-apiserver.tar k8s.gcr.io/kube-apiserver:v1.20.2
    ~]# docker save -o kube-controller-manager.tar k8s.gcr.io/kube-controller-manager:v1.20.2
    ~]# docker save -o kube-scheduler.tar k8s.gcr.io/kube-scheduler:v1.20.2
    ~]# docker save -o etcd.tar k8s.gcr.io/etcd:3.4.13-0
    ~]# docker save -o coredns.tar k8s.gcr.io/coredns:1.7.0
    ~]# docker save -o pause.tar k8s.gcr.io/pause:3.2
    ~]# docker save -o flannel.tar quay.io/coreos/flannel:v0.13.1-rc1
    # Pack the image archives
    ~]# tar zcvf images.tar.gz images

Packing the yum packages

    [root@vm10 ~]# cd /var/cache/
    [root@vm10 cache]# tar zcvf yum.tar.gz yum
    ...
    [root@vm10 cache]# mkdir /opt/tar
    [root@vm10 cache]# mv yum.tar.gz /opt/tar/.

Exporting the docker images

    [root@vm10 cache]# cd /opt/tar/
    [root@vm10 tar]# mkdir images
    [root@vm10 tar]# cd images/
    [root@vm10 images]# docker save -o kube-proxy.tar k8s.gcr.io/kube-proxy:v1.20.2
    [root@vm10 images]# docker save -o kube-apiserver.tar k8s.gcr.io/kube-apiserver:v1.20.2
    [root@vm10 images]# docker save -o kube-controller-manager.tar k8s.gcr.io/kube-controller-manager:v1.20.2
    [root@vm10 images]# docker save -o kube-scheduler.tar k8s.gcr.io/kube-scheduler:v1.20.2
    [root@vm10 images]# docker save -o etcd.tar k8s.gcr.io/etcd:3.4.13-0
    [root@vm10 images]# docker save -o coredns.tar k8s.gcr.io/coredns:1.7.0
    [root@vm10 images]# docker save -o pause.tar k8s.gcr.io/pause:3.2
    [root@vm10 images]# docker save -o flannel.tar quay.io/coreos/flannel:v0.13.1-rc1
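The eight `docker save` commands all derive the archive name from the image name, so they can be generated rather than typed. The sketch below assumes one `repository:tag` reference per input line. `tar_name` and `save_cmds` are hypothetical helper names, and the script only prints the commands.

```shell
#!/bin/sh
# tar_name IMAGE: derive the archive name used in this article,
# e.g. k8s.gcr.io/kube-proxy:v1.20.2 -> kube-proxy.tar
tar_name() {
    base=${1##*/}            # strip registry and path -> kube-proxy:v1.20.2
    echo "${base%%:*}.tar"   # strip the tag           -> kube-proxy.tar
}

# save_cmds: read image references on stdin and print one
# "docker save" command per image, always saving by tag.
save_cmds() {
    while read -r image; do
        [ -n "$image" ] || continue
        echo "docker save -o $(tar_name "$image") $image"
    done
}

printf '%s\n' k8s.gcr.io/pause:3.2 quay.io/coreos/flannel:v0.13.1-rc1 | save_cmds
```

On a machine with docker, the input could come from `docker images --format '{{.Repository}}:{{.Tag}}'` and the printed commands could be piped to `sh`.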

Packing the image archives:

    [root@vm10 ~]# cd /opt/tar
    [root@vm10 tar]# tar zcvf images.tar.gz images
    ...

Copy yum.tar.gz and images.tar.gz to the servers:

    [root@vm10 tar]# scp /opt/tar/yum.tar.gz master01:/usr/local/src/.
    [root@vm10 tar]# scp /opt/tar/images.tar.gz master01:/usr/local/src/.
    [root@vm10 tar]# scp /opt/tar/yum.tar.gz master02:/usr/local/src/.
    [root@vm10 tar]# scp /opt/tar/images.tar.gz master02:/usr/local/src/.
    ...
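Since both archives go to the same directory on all five machines, the copy step can be written as a loop. The following sketch only prints the scp commands. The host and file lists are taken from this article, and `copy_cmds` is a hypothetical helper name.

```shell
#!/bin/sh
# Hosts and archives as used in this article.
HOSTS="master01 master02 node01 node02 node03"
FILES="/opt/tar/yum.tar.gz /opt/tar/images.tar.gz"

# copy_cmds: print one scp command per host and archive.
copy_cmds() {
    for host in $HOSTS; do
        for file in $FILES; do
            echo "scp $file $host:/usr/local/src/"
        done
    done
}

copy_cmds
```

Piping the output to `sh` would run the copies. This relies on the host names resolving through the /etc/hosts entries added earlier.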

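Offline deployment of a k8s-v1.20.2 cluster (Part 2)

The previous article uploaded the required docker images and rpm packages to the target servers. Machine information: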

| ip | hostname |
|---|---|
| 192.168.26.71 | master01 |
| 192.168.26.72 | master02 |
| 192.168.26.73 | node01 |
| 192.168.26.74 | node02 |
| 192.168.26.75 | node03 |
| 192.168.26.11 | vip |

Installing and configuring the k8s cluster

Initializing all servers

1. Setting the hostname

Set each machine's hostname as planned earlier, taking master01 as an example:

    [root@vm71 ~]# hostnamectl set-hostname master01

Also add the entries for the other machines to /etc/hosts:

    ~]# cat << EOF >> /etc/hosts
    192.168.26.71 master01
    192.168.26.72 master02
    192.168.26.73 node01
    192.168.26.74 node02
    192.168.26.75 node03
    EOF

Verify that the MAC address and product UUID are unique on every node:

    ~]# cat /sys/class/net/ens32/address
    ~]# cat /sys/class/dmi/id/product_uuid
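Comparing the values by eye gets error-prone with more than a few nodes. One possible approach is to collect the values into a list and let `sort | uniq -d` report anything that appears twice. The MAC addresses in the example are made up, and `find_duplicates` is a hypothetical helper name.

```shell
#!/bin/sh
# find_duplicates: print every line that occurs more than once on stdin.
# Empty output means all collected values are unique.
find_duplicates() {
    sort | uniq -d
}

# Example with made-up MAC addresses; on real nodes the values would come
# from /sys/class/net/ens32/address on each host.
printf '%s\n' 00:0c:29:aa:bb:01 00:0c:29:aa:bb:02 00:0c:29:aa:bb:01 | find_duplicates
```

The same function works unchanged for the product UUIDs.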

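2. Disabling swap, SELinux and NetworkManager, and loading the required kernel module

    ~]# cat << 'SCRIPT' > init.sh
    #!/bin/bash
    ### Initialization script ###
    # Stop firewalld
    systemctl stop firewalld && systemctl disable firewalld
    # Disable swap now and on reboot (kubelet does not start with swap enabled by default)
    swapoff -a
    sed -i '/ swap / s/^[^#]/#&/' /etc/fstab
    # Disable SELinux (edit the real file; /etc/sysconfig/selinux is only a symlink to it)
    setenforce 0
    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
    # Stop NetworkManager
    systemctl stop NetworkManager && systemctl disable NetworkManager
    # Set the kernel parameters
    modprobe br_netfilter
    cat << EOF >> /etc/sysctl.conf
    net.ipv4.ip_forward = 1
    net.ipv4.ip_nonlocal_bind = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF
    # Apply the new parameters
    sysctl -p
    echo "----init finish-----"
    SCRIPT

The outer here-document uses a quoted delimiter different from the inner `EOF`, so the inner block is written into init.sh unchanged. Every machine needs to run this script.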
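After the initialization script has run, it may be worth confirming that swap is really off, since this step names it as a requirement. The check below is a small sketch that reads a file in `/proc/swaps` format. `swap_active` is a hypothetical helper name, and the file argument exists only so the function can be tried against sample data.

```shell
#!/bin/sh
# swap_active [FILE]: succeed if FILE (in /proc/swaps format) lists any
# swap device. The first line is a header, so any further line means
# swap is still in use. FILE defaults to /proc/swaps.
swap_active() {
    awk 'NR > 1 { found = 1 } END { exit !found }' "${1:-/proc/swaps}"
}

if [ -r /proc/swaps ] && swap_active; then
    echo "swap is still enabled"
else
    echo "no active swap found"
fi
```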

Installing the yum packages

    ~]# cd /usr/local/src
    src]# tar -zxvf yum.tar.gz
    src]# cd yum/x86_64/7/
    drwxr-xr-x 4 base
    drwxr-xr-x 4 docker-ce-stable
    drwxr-xr-x 4 extras
    drwxr-xr-x 4 kubernetes
    -rw-r--r-- 1 timedhosts
    -rw-r--r-- 1 timedhosts.txt
    drwxr-xr-x 4 updates

Install all rpm files under base, extras, docker-ce-stable and kubernetes:

    base]# rpm -ivh packages/*.rpm --force --nodeps
    extras]# rpm -ivh packages/*.rpm --force --nodeps
    docker-ce-stable]# rpm -ivh packages/*.rpm --force --nodeps
    kubernetes]# rpm -ivh packages/*.rpm --force --nodeps

Either `rpm -ivh <package>` or `rpm -Uvh <package>` can be used for the installation:

    src]# rpm -Uvh yum/x86_64/7/base/packages/*.rpm --nodeps --force
    src]# rpm -Uvh yum/x86_64/7/extras/packages/*.rpm --nodeps --force
    src]# rpm -Uvh yum/x86_64/7/docker-ce-stable/packages/*.rpm --nodeps --force
    src]# rpm -Uvh yum/x86_64/7/kubernetes/packages/*.rpm --nodeps --force

Configuring and starting docker

Once all packages are installed, configure docker:

    ~]# mkdir -p /etc/docker /data/docker
    ~]# cat <<EOF >/etc/docker/daemon.json
    {
      "graph": "/data/docker",
      "storage-driver": "overlay2",
      "registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],
      "bip": "172.26.71.1/24",
      "exec-opts": ["native.cgroupdriver=systemd"],
      "live-restore": true
    }
    EOF

The bip follows the host address; for example, 192.168.26.71 maps to 172.26.71.1. Then start docker:

~]# systemctl start docker ; systemctl enable docker
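The bip convention used in this article (192.168.26.71 maps to 172.26.71.1, and vm10 at 192.168.26.10 used 172.26.10.1) can be computed per host instead of edited by hand. This is only a sketch of that convention. `bip_for_host` is a hypothetical helper name, and the fixed `172.` prefix and `/24` mask are simply what the article uses.

```shell
#!/bin/sh
# bip_for_host IP: build the docker bridge address from the last two
# octets of the host IP, e.g. 192.168.26.71 -> 172.26.71.1/24
bip_for_host() {
    rest=${1#*.}         # 168.26.71
    rest=${rest#*.}      # 26.71
    third=${rest%%.*}    # 26
    fourth=${rest#*.}    # 71
    echo "172.$third.$fourth.1/24"
}

bip_for_host 192.168.26.71
```

Giving every host its own /24 keeps the docker0 subnets from overlapping across nodes.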

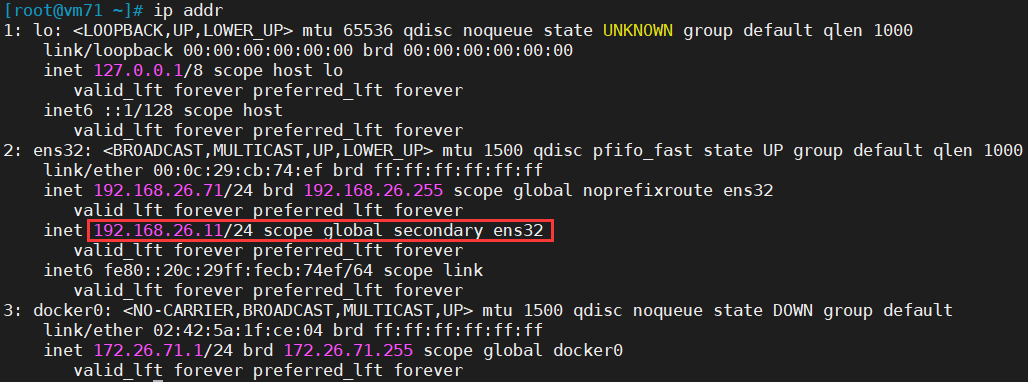

Check the docker0 address:

    ~]# ip a

Check the container IP:

    ~]# docker pull busybox
    ~]# docker run -it --rm busybox
    / # ip a
    ...
    11: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:1a:49:02 brd ff:ff:ff:ff:ff:ff
        inet 172.26.71.2/24 brd 172.26.73.255 scope global eth0
           valid_lft forever preferred_lft forever
    / # exit

Configuring keepalived

Configuring master01

    [root@vm71 ~]# vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id master01
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens32
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.26.11/24
        }
    }

Configuring master02

    [root@vm72 src]# vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id master02
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens32
        # must match virtual_router_id on master01 so both nodes form one VRRP group
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.26.11/24
        }
    }

Once configured, start keepalived on master01 and master02:

systemctl enable keepalived && systemctl start keepalived

Handling a startup error

`systemctl status keepalived` shows the following error: …keepalived[1928]: /usr/sbin/keepalived: error while loading shared libraries: libnetsnmpmibs.so.31: …

Fix: locate libnetsnmpmibs.so.31 on the machine where the packages were originally installed and copy it over:

    ~]# find / -name libnetsnmpmibs.so.31
    /usr/lib64/libnetsnmpmibs.so.31
    ~]# ls /usr/lib64/libnetsnmp*
    /usr/lib64/libnetsnmpagent.so.31 /usr/lib64/libnetsnmpmibs.so.31 /usr/lib64/libnetsnmp.so.31
    ~]# scp /usr/lib64/libnetsnmp* master01:/usr/lib64/.

This copies three files: libnetsnmpagent.so.31, libnetsnmpmibs.so.31 and libnetsnmp.so.31.

- Verification

Check the keepalived process and the IP addresses on the masters:

    ~]# ps -ef|grep keepalive
    ...
    ~]# ip addr
    ...

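keepalived high-availability test

Log in via 192.168.26.11 and check the host's IP addresses. Then stop keepalived on that host with `systemctl stop keepalived` and log in via 192.168.26.11 again: the VIP has moved to the other master.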
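Instead of logging in through the VIP to see where it landed, the address list of each master can be checked directly. The sketch below looks for the VIP in `ip -o -4 addr show` output. `has_vip` and `vip_holder` are hypothetical helper names, and `vip_holder` assumes ssh access from the current machine to both masters, so it is defined but not called.

```shell
#!/bin/sh
# has_vip ADDR: read `ip -o -4 addr show` output on stdin and succeed if
# ADDR is configured. The trailing "/" keeps 192.168.26.11 from matching
# 192.168.26.110.
has_vip() {
    grep -qF "inet $1/"
}

# vip_holder: print the master that currently holds the VIP.
vip_holder() {
    for host in master01 master02; do
        if ssh "$host" ip -o -4 addr show | has_vip 192.168.26.11; then
            echo "$host"
        fi
    done
}
```

Running `vip_holder` before and after stopping keepalived on the active master should print a different host name each time. If it prints both names at once, the two instances are not talking to each other.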

Importing the images

    ]# tar -zxvf images.tar.gz
    images/
    images/kube-proxy.tar
    images/kube-apiserver.tar
    images/kube-controller-manager.tar
    images/kube-scheduler.tar
    images/etcd.tar
    images/coredns.tar
    images/pause.tar
    images/flannel.tar
    ]# cd images
    ]# docker load < coredns.tar
    ]# docker load < etcd.tar
    ]# docker load < flannel.tar
    ]# docker load < kube-apiserver.tar
    ]# docker load < kube-controller-manager.tar
    ]# docker load < kube-proxy.tar
    ]# docker load < kube-scheduler.tar
    ]# docker load < pause.tar

After the import completes, check the result:

    ]# docker images
    REPOSITORY                           TAG           IMAGE ID       CREATED         SIZE
    k8s.gcr.io/kube-proxy                v1.20.2       43154ddb57a8   3 weeks ago     118MB
    k8s.gcr.io/kube-apiserver            v1.20.2       a8c2fdb8bf76   3 weeks ago     122MB
    k8s.gcr.io/kube-controller-manager   v1.20.2       a27166429d98   3 weeks ago     116MB
    k8s.gcr.io/kube-scheduler            v1.20.2       ed2c44fbdd78   3 weeks ago     46.4MB
    quay.io/coreos/flannel               v0.13.1-rc1   f03a23d55e57   2 months ago    64.6MB
    k8s.gcr.io/etcd                      3.4.13-0      0369cf4303ff   5 months ago    253MB
    k8s.gcr.io/coredns                   1.7.0         bfe3a36ebd25   7 months ago    45.2MB
    k8s.gcr.io/pause                     3.2           80d28bedfe5d   11 months ago   683kB

Initializing the K8S control-plane node

master01: initialize using the kubeadm-config.yaml file

    [root@master01 ~]# mkdir /opt/kubernetes
    [root@master01 ~]# cd /opt/kubernetes
    [root@master01 kubernetes]# vi kubeadm-config.yaml

    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.20.2
    apiServer:
      certSANs:    # list the hostname, IP and VIP of every kube-apiserver node
      - master01
      - master02
      - master03
      - node01
      - node02
      - node03
      - 192.168.26.71
      - 192.168.26.72
      - 192.168.26.73
      - 192.168.26.74
      - 192.168.26.75
      - 192.168.26.76
      - 192.168.26.77
      - 192.168.26.78
      - 192.168.26.11
    controlPlaneEndpoint: "192.168.26.11:6443"
    networking:
      podSubnet: "10.26.0.0/16"

Pay particular attention to the networking section: it must follow the network plan, otherwise all sorts of problems appear.

Running the initialization

    [root@master01 kubernetes]# kubeadm init --config=kubeadm-config.yaml

    ...
    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of control-plane nodes by copying certificate authorities
    and service account keys on each node and then running the following as root:

      kubeadm join 192.168.26.11:6443 --token twhj2u.0mqgy41h6dk12j34 \
        --discovery-token-ca-cert-hash sha256:a2f32400830bbfe678c98ed082c4bf9d6429f4e3f4e6bd9731a667bf64bfccdb \
        --control-plane

    Then you can join any number of worker nodes by running the following on each as root:

      kubeadm join 192.168.26.11:6443 --token twhj2u.0mqgy41h6dk12j34 \
        --discovery-token-ca-cert-hash sha256:a2f32400830bbfe678c98ed082c4bf9d6429f4e3f4e6bd9731a667bf64bfccdb

If initialization fails, run the following, then delete the files and directories it points out:

kubeadm reset

Loading the environment variables

    [root@master01 kubernetes]# mkdir -p $HOME/.kube
    [root@master01 kubernetes]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@master01 kubernetes]# chown $(id -u):$(id -g) $HOME/.kube/config
    [root@master01 kubernetes]# echo "source <(kubectl completion bash)" >> ~/.bash_profile
    [root@master01 kubernetes]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
    [root@master01 kubernetes]# source ~/.bash_profile

Adding the flannel network

Use the kube-flannel.yml file downloaded earlier (its image pull policy and IP need to be modified first):

[root@master01 kubernetes]# kubectl apply -f kube-flannel.yml
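The article says the manifest needs its IP changed before it is applied, without showing the edit. Assuming this refers to the `Network` value in the `net-conf.json` section (10.244.0.0/16 in the upstream manifest), which has to match the `podSubnet` of 10.26.0.0/16 chosen above, the change could be scripted roughly as follows. `set_flannel_network` is a hypothetical helper name.

```shell
#!/bin/sh
# set_flannel_network FILE CIDR: print FILE with the "Network" value of
# flannel's net-conf.json replaced by CIDR. "#" is used as the sed
# delimiter because the CIDR itself contains "/".
set_flannel_network() {
    sed "s#\"Network\": \"[^\"]*\"#\"Network\": \"$2\"#" "$1"
}

# Typical use (not executed here):
#   set_flannel_network kube-flannel.yml 10.26.0.0/16 > kube-flannel-edited.yml
#   grep -n '"Network"' kube-flannel-edited.yml
```

Writing to a new file keeps the downloaded manifest intact for comparison; the edited copy would then be the one passed to `kubectl apply -f`.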

Checking the node and the pods

    [root@master01 kubernetes]# kubectl get node
    NAME       STATUS   ROLES                  AGE     VERSION
    master01   Ready    control-plane,master   2m16s   v1.20.2
    [root@master01 kubernetes]# kubectl get po -n kube-system
    NAME                               READY   STATUS    RESTARTS   AGE
    coredns-74ff55c5b-br454            0/1     Running   0          109s
    coredns-74ff55c5b-gjmr4            1/1     Running   0          109s
    etcd-master01                      1/1     Running   0          117s
    kube-apiserver-master01            1/1     Running   0          117s
    kube-controller-manager-master01   1/1     Running   0          117s
    kube-flannel-ds-nwv5s              1/1     Running   0          14s
    kube-proxy-hfhs9                   1/1     Running   0          109s
    kube-scheduler-master01            1/1     Running   0          117s

Joining the remaining nodes

The most critical step is distributing the certificates: the certificate files on master01 are copied to master02 and master03 (if the latter is used as a master).

    vi cert-main-master.sh

    USER=root # customizable
    CONTROL_PLANE_IPS="192.168.26.72 192.168.26.73"
    for host in ${CONTROL_PLANE_IPS}; do
        scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:
        scp /etc/kubernetes/pki/ca.key "${USER}"@$host:
        scp /etc/kubernetes/pki/sa.key "${USER}"@$host:
        scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:
        scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:
        scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:
        scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:etcd-ca.crt
        # Skip the next line if you are using external etcd
        scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:etcd-ca.key
    done

Run the following script on master02 and master03:

    vi cert-other-master.sh

    USER=root # customizable
    mkdir -p /etc/kubernetes/pki/etcd
    mv /${USER}/ca.crt /etc/kubernetes/pki/
    mv /${USER}/ca.key /etc/kubernetes/pki/
    mv /${USER}/sa.pub /etc/kubernetes/pki/
    mv /${USER}/sa.key /etc/kubernetes/pki/
    mv /${USER}/front-proxy-ca.crt /etc/kubernetes/pki/
    mv /${USER}/front-proxy-ca.key /etc/kubernetes/pki/
    mv /${USER}/etcd-ca.crt /etc/kubernetes/pki/etcd/ca.crt
    # Skip the next line if you are using external etcd
    mv /${USER}/etcd-ca.key /etc/kubernetes/pki/etcd/ca.key

This lab uses only master01 and master02 as masters, so the following is done on master02 instead:

    [root@master02 ~]# mkdir -p /etc/kubernetes/pki/etcd
    [root@master02 ~]# cd /etc/kubernetes/pki/
    [root@master02 pki]# scp master01:/etc/kubernetes/pki/ca.* .
    [root@master02 pki]# scp master01:/etc/kubernetes/pki/sa.* .
    [root@master02 pki]# scp master01:/etc/kubernetes/pki/front-proxy-ca.* .
    [root@master02 pki]# scp master01:/etc/kubernetes/pki/etcd/ca.* ./etcd/.
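Both variants above copy the same eight files. A compact way to keep that list in one place is sketched below. `shared_certs` and `pull_cmds` are hypothetical helper names, and the script only prints the scp commands that would be run on the joining master. kubeadm also offers `--upload-certs` on `kubeadm init` as an alternative to copying by hand; the sketch stays with the manual approach used in this article.

```shell
#!/bin/sh
PKI=/etc/kubernetes/pki

# shared_certs: print the certificate and key files that every
# control-plane node needs a copy of (stacked etcd assumed).
shared_certs() {
    for f in ca.crt ca.key sa.key sa.pub \
             front-proxy-ca.crt front-proxy-ca.key \
             etcd/ca.crt etcd/ca.key; do
        echo "$PKI/$f"
    done
}

# pull_cmds SOURCE_HOST: print the scp commands to run on the joining
# master, copying each file to the same path locally.
pull_cmds() {
    shared_certs | while read -r path; do
        echo "scp $1:$path $path"
    done
}

pull_cmds master01
```

The target directory `/etc/kubernetes/pki/etcd` has to exist before the copies are run, as in the `mkdir -p` step above.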

Joining master02 and master03 to the cluster

Note that docker, kubelet, kubectl and the other yum packages must be installed on master02 and master03, and the images imported, before the following is run:

    ]# kubeadm join 192.168.26.11:6443 --token twhj2u.0mqgy41h6dk12j34 \
        --discovery-token-ca-cert-hash sha256:a2f32400830bbfe678c98ed082c4bf9d6429f4e3f4e6bd9731a667bf64bfccdb \
        --control-plane

    ...
    This node has joined the cluster and a new control plane instance was created:

    * Certificate signing request was sent to apiserver and approval was received.
    * The Kubelet was informed of the new secure connection details.
    * Control plane (master) label and taint were applied to the new node.
    * The Kubernetes control plane instances scaled up.
    * A new etcd member was added to the local/stacked etcd cluster.

    To start administering your cluster from this node, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Run 'kubectl get nodes' to see this node join the cluster.

Also set up the environment variables:

    ]# scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/
    ]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
    ]# source ~/.bash_profile

The following commands can be used to verify the result:

    [root@master02 pki]# kubectl get nodes
    NAME       STATUS   ROLES                  AGE     VERSION
    master01   Ready    control-plane,master   11m     v1.20.2
    master02   Ready    control-plane,master   4m53s   v1.20.2
    [root@master02 pki]# kubectl get pod -n kube-system -o wide
    NAME                               READY   STATUS    RESTARTS   AGE     IP              NODE       NOMINATED NODE   READINESS GATES
    coredns-74ff55c5b-br454            1/1     Running   0          14m     10.26.0.2       master01   <none>           <none>
    coredns-74ff55c5b-gjmr4            1/1     Running   0          14m     10.26.0.3       master01   <none>           <none>
    etcd-master01                      1/1     Running   1          15m     192.168.26.71   master01   <none>           <none>
    etcd-master02                      1/1     Running   0          5m15s   192.168.26.72   master02   <none>           <none>
    kube-apiserver-master01            1/1     Running   3          15m     192.168.26.71   master01   <none>           <none>
    kube-apiserver-master02            1/1     Running   4          8m49s   192.168.26.72   master02   <none>           <none>
    kube-controller-manager-master01   1/1     Running   1          15m     192.168.26.71   master01   <none>           <none>
    kube-controller-manager-master02   1/1     Running   0          8m49s   192.168.26.72   master02   <none>           <none>
    kube-flannel-ds-9lr7q              1/1     Running   6          8m50s   192.168.26.72   master02   <none>           <none>
    kube-flannel-ds-nwv5s              1/1     Running   0          13m     192.168.26.71   master01   <none>           <none>
    kube-proxy-hfhs9                   1/1     Running   0          14m     192.168.26.71   master01   <none>           <none>
    kube-proxy-p9p2g                   1/1     Running   0          8m50s   192.168.26.72   master02   <none>           <none>
    kube-scheduler-master01            1/1     Running   1          15m     192.168.26.71   master01   <none>           <none>
    kube-scheduler-master02            1/1     Running   0          8m49s   192.168.26.72   master02   <none>           <none>

Joining the worker nodes

This is done on node01, node02 and node03 (only node01 is added here). These nodes likewise need the node initialization, the docker, kubelet, kubeadm and other packages installed, and the docker images imported.

    ]# rpm -Uvh yum/x86_64/7/base/packages/*.rpm --nodeps --force
    ...
    ]# rpm -Uvh yum/x86_64/7/extras/packages/*.rpm --nodeps --force
    ...
    ]# rpm -Uvh yum/x86_64/7/docker-ce-stable/packages/*.rpm --nodeps --force
    ...
    ]# rpm -Uvh yum/x86_64/7/kubernetes/packages/*.rpm --nodeps --force
    ...

Run:

    ]# kubeadm join 192.168.26.11:6443 --token twhj2u.0mqgy41h6dk12j34 \
        --discovery-token-ca-cert-hash sha256:a2f32400830bbfe678c98ed082c4bf9d6429f4e3f4e6bd9731a667bf64bfccdb

    ...
    This node has joined the cluster:
    * Certificate signing request was sent to apiserver and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
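The join command is usually pasted from the `kubeadm init` output, and a truncated token or hash is an easy mistake. The two checks below are a small sketch that validates the format of both values before they are used. `valid_token` and `valid_ca_hash` are hypothetical helper names. Bootstrap tokens expire after 24 hours by default, so a well-formed token may still be rejected later.

```shell
#!/bin/sh
# valid_token TOKEN: bootstrap tokens have the form "<6 chars>.<16 chars>",
# using lowercase letters and digits only.
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_hash HASH: the CA hash is "sha256:" followed by 64 lowercase
# hex digits.
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[a-f0-9]{64}$'
}

# If the token has expired, a fresh join command can be printed on a
# control-plane node with:
#   kubeadm token create --print-join-command
```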

Once everything has run without problems, verify on a master node that the join succeeded.

    [root@master02 ~]# kubectl get node -o wide
    NAME       STATUS   ROLES                  AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION           CONTAINER-RUNTIME
    master01   Ready    control-plane,master   4h43m   v1.20.2   192.168.26.71   <none>        CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://20.10.3
    master02   Ready    control-plane,master   4h37m   v1.20.2   192.168.26.72   <none>        CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://20.10.3
    node01     Ready    <none>                 10m     v1.20.2   192.168.26.73   <none>        CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://20.10.3

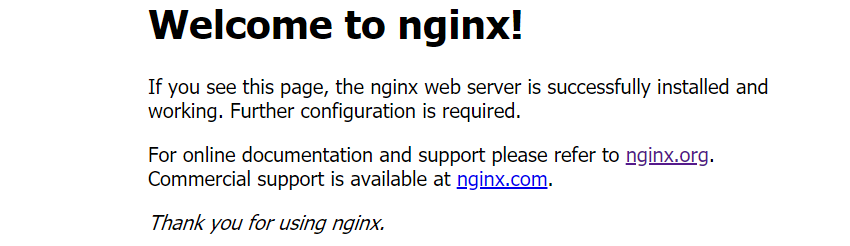

Verification: deploying nginx

Create the deployment, the pods and the service:

    [root@master02 ~]# kubectl create deployment nginx-dep --image=nginx --replicas=2
    deployment.apps/nginx-dep created
    [root@master02 ~]# kubectl expose deployment nginx-dep --port=80 --target-port=80 --type=NodePort
    service/nginx-dep exposed
    [root@master02 ~]# kubectl get svc | grep nginx
    nginx-dep   NodePort   10.98.67.230   <none>   80:30517/TCP   13s
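The node port is assigned at random, so it has to be read from the `PORT(S)` column before the service can be tested from outside the cluster. A minimal sketch of that extraction follows. `node_port` is a hypothetical helper name, and the curl command is only printed.

```shell
#!/bin/sh
# node_port PORTS: extract the node port from the PORT(S) column of
# `kubectl get svc`, e.g. "80:30517/TCP" -> 30517
node_port() {
    port=${1#*:}          # drop the service port -> 30517/TCP
    echo "${port%%/*}"    # drop the protocol     -> 30517
}

# Print a curl command against node01's address from this article.
echo "curl -I http://192.168.26.73:$(node_port 80:30517/TCP)/"
```

With the default settings of a NodePort service, the same port should answer on the other node addresses as well.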

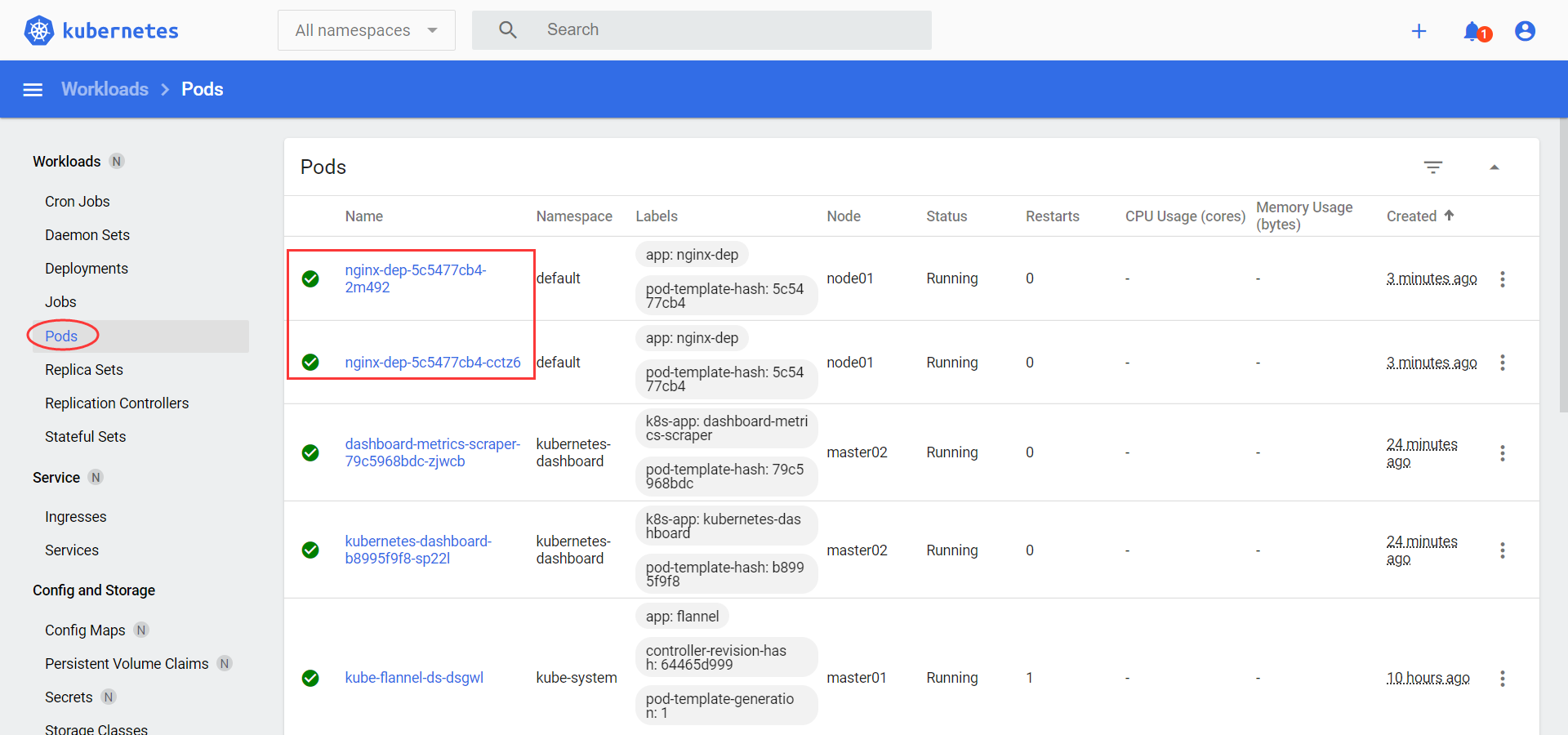

- Check the pods

    [root@master02 ~]# kubectl get po -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP          NODE     NOMINATED NODE   READINESS GATES
    nginx-dep-5c5477cb4-85d6x   1/1     Running   0          11m   10.26.2.2   node01   <none>           <none>
    nginx-dep-5c5477cb4-vm2g9   1/1     Running   0          11m   10.26.2.3   node01   <none>           <none>

At this point, k8s-v1.20.2 has been deployed successfully!

Offline deployment of a k8s-v1.20.2 cluster (Part 3)

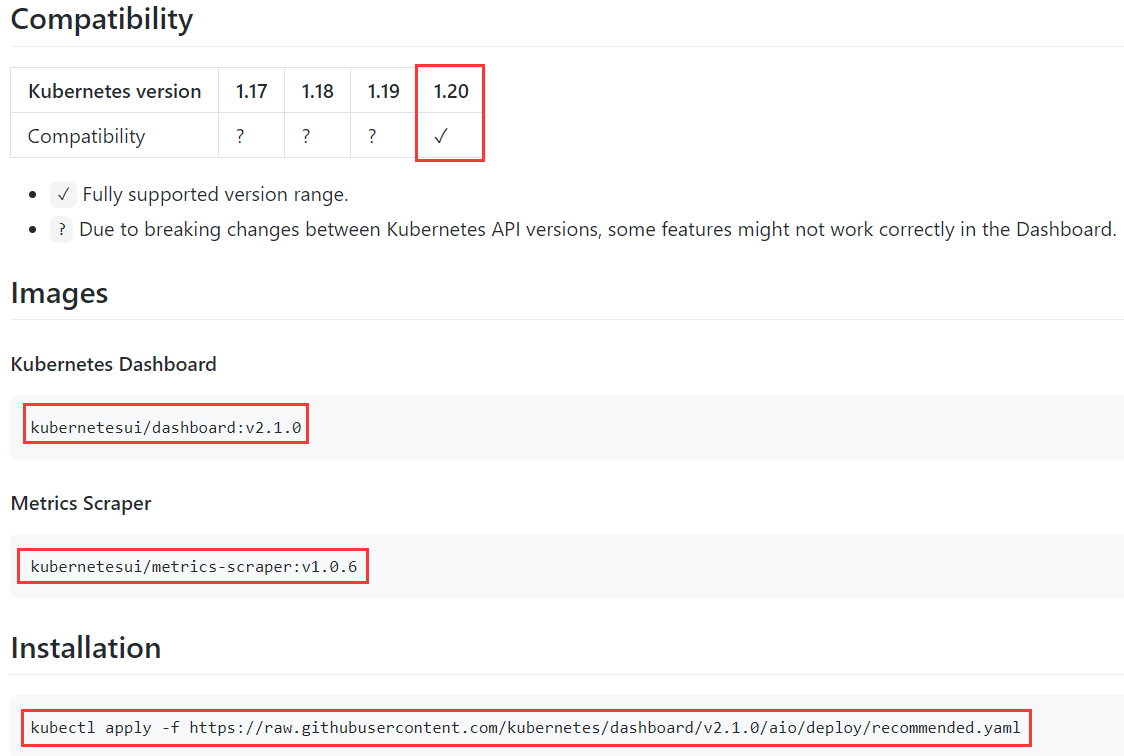

Deploying Kubernetes Dashboard

Downloading the images and the YAML file

GitHub download page: https://github.com/kubernetes/dashboard/releases

Selected version: https://github.com/kubernetes/dashboard/releases/tag/v2.1.0

    ~]# docker pull kubernetesui/dashboard:v2.1.0
    ...
    ~]# docker pull kubernetesui/metrics-scraper:v1.0.6
    ...
    ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.1.0/aio/deploy/recommended.yaml

The recommended.yaml file is hard to download this way; it can be copied from the browser instead:

https://github.com/kubernetes/dashboard/blob/v2.1.0/aio/deploy/recommended.yaml

```yaml apiVersion: v1 kind: Namespace metadata: name: kubernetes-dashboard[root@master01 kubernetes]# vi dashboard.yaml

apiVersion: v1 kind: ServiceAccount metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard

namespace: kubernetes-dashboard

kind: Service apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: ports:

- port: 443targetPort: 8443nodePort: 30123

type: NodePort selector:

k8s-app: kubernetes-dashboard

apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-certs namespace: kubernetes-dashboard

type: Opaque

apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-csrf namespace: kubernetes-dashboard type: Opaque data:

csrf: “”

apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-key-holder namespace: kubernetes-dashboard

type: Opaque

kind: ConfigMap apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard rules:

Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [“”]

resources: [“secrets”]

resourceNames: [“kubernetes-dashboard-key-holder”, “kubernetes-dashboard-certs”, “kubernetes-dashboard-csrf”]

verbs: [“get”, “update”, “delete”]

Allow Dashboard to get and update ‘kubernetes-dashboard-settings’ config map.

- apiGroups: [“”]

resources: [“configmaps”]

resourceNames: [“kubernetes-dashboard-settings”]

verbs: [“get”, “update”]

Allow Dashboard to get metrics.

- apiGroups: [“”] resources: [“services”] resourceNames: [“heapster”, “dashboard-metrics-scraper”] verbs: [“proxy”]

- apiGroups: [“”] resources: [“services/proxy”] resourceNames: [“heapster”, “http:heapster:”, “https:heapster:”, “dashboard-metrics-scraper”, “http:dashboard-metrics-scraper”] verbs: [“get”]

kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard rules:

Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: [“metrics.k8s.io”] resources: [“pods”, “nodes”] verbs: [“get”, “list”, “watch”]

apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: kubernetes-dashboard subjects:

- kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: kubernetes-dashboard subjects:

- kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard

kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: kubernetes-dashboard template: metadata: labels: k8s-app: kubernetes-dashboard spec: containers:

- name: kubernetes-dashboardimage: kubernetesui/dashboard:v2.1.0imagePullPolicy: IfNotPresentports:- containerPort: 8443protocol: TCPargs:- --auto-generate-certificates- --namespace=kubernetes-dashboard# Uncomment the following line to manually specify Kubernetes API server Host# If not specified, Dashboard will attempt to auto discover the API server and connect# to it. Uncomment only if the default does not work.# - --apiserver-host=http://my-address:portvolumeMounts:- name: kubernetes-dashboard-certsmountPath: /certs# Create on-disk volume to store exec logs- mountPath: /tmpname: tmp-volumelivenessProbe:httpGet:scheme: HTTPSpath: /port: 8443initialDelaySeconds: 30timeoutSeconds: 30securityContext:allowPrivilegeEscalation: falsereadOnlyRootFilesystem: truerunAsUser: 1001runAsGroup: 2001volumes:- name: kubernetes-dashboard-certssecret:secretName: kubernetes-dashboard-certs- name: tmp-volumeemptyDir: {}serviceAccountName: kubernetes-dashboardnodeSelector:"kubernetes.io/os": linux# Comment the following tolerations if Dashboard must not be deployed on mastertolerations:- key: node-role.kubernetes.io/mastereffect: NoSchedule

kind: Service apiVersion: v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: ports:

- port: 8000targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: dashboard-metrics-scraper template: metadata: labels: k8s-app: dashboard-metrics-scraper annotations: seccomp.security.alpha.kubernetes.io/pod: ‘runtime/default’ spec: containers:

- name: dashboard-metrics-scraperimage: kubernetesui/metrics-scraper:v1.0.6imagePullPolicy: IfNotPresentports:- containerPort: 8000protocol: TCPlivenessProbe:httpGet:scheme: HTTPpath: /port: 8000initialDelaySeconds: 30timeoutSeconds: 30volumeMounts:- mountPath: /tmpname: tmp-volumesecurityContext:allowPrivilegeEscalation: falsereadOnlyRootFilesystem: truerunAsUser: 1001runAsGroup: 2001serviceAccountName: kubernetes-dashboardnodeSelector:"kubernetes.io/os": linux# Comment the following tolerations if Dashboard must not be deployed on mastertolerations:- key: node-role.kubernetes.io/mastereffect: NoSchedulevolumes:- name: tmp-volumeemptyDir: {}

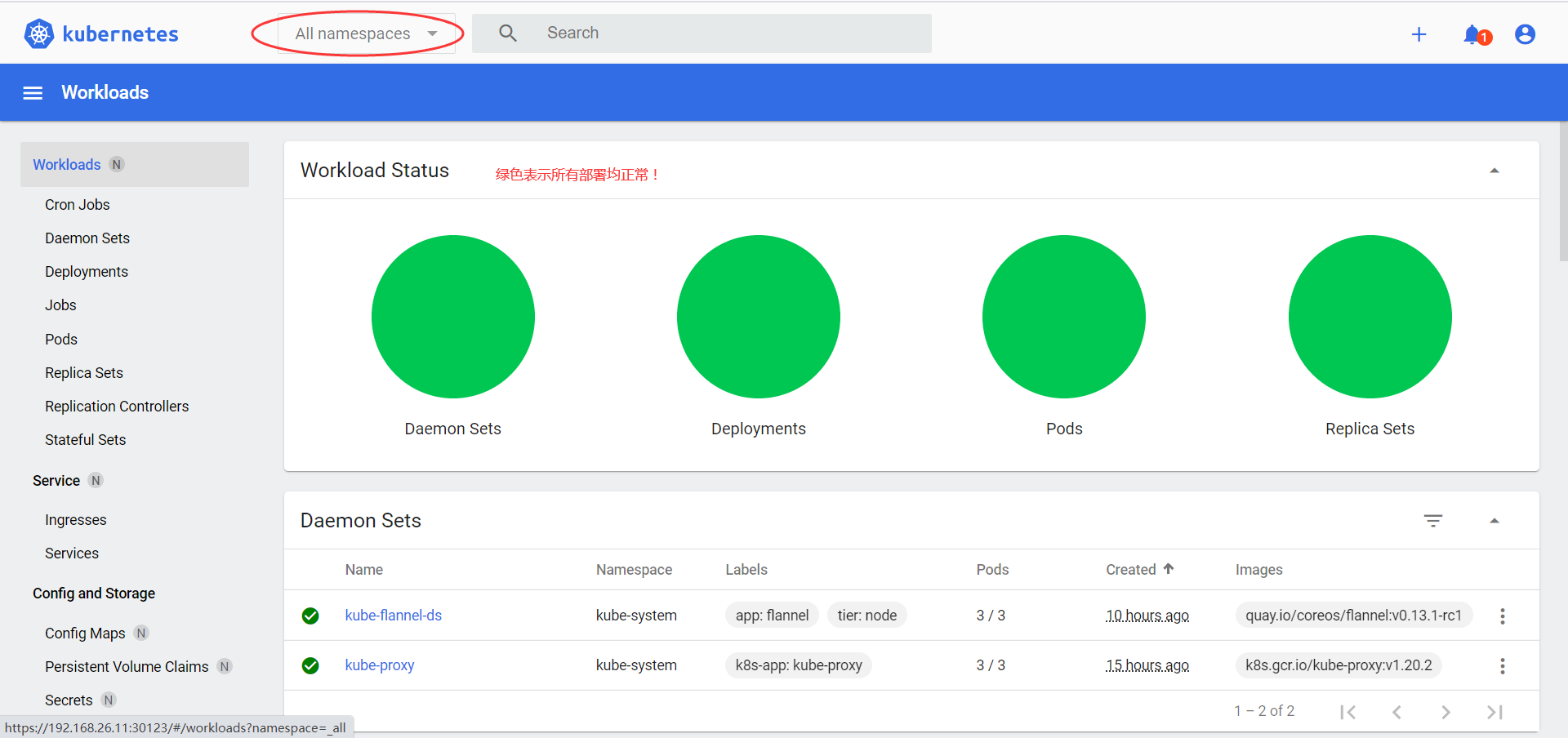

- **默认Dashboard只能集群内部访问,修改`Service`为`NodePort`类型,`nodePort: 30123`,暴露到外部:**<br />- 镜像策略为:imagePullPolicy: IfNotPresent<br /><a name="idDdD"></a>### 应用配置文件```shellshell[root@master01 kubernetes]# kubectl apply -f dashboard.yaml...[root@master01 kubernetes]# kubectl get pods,svc -n kubernetes-dashboardNAME READY STATUS RESTARTS AGEpod/dashboard-metrics-scraper-79c5968bdc-zjwcb 1/1 Running 0 83spod/kubernetes-dashboard-b8995f9f8-sp22l 1/1 Running 0 83sNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGEservice/dashboard-metrics-scraper ClusterIP 10.105.36.135 <none> 8000/TCP 83sservice/kubernetes-dashboard NodePort 10.107.175.193 <none> 443:30123/TCP 84s

Access URL: https://NodeIP:30123, that is, https://192.168.26.11:30123

Logging in

- Create a service account and bind it to the default cluster-admin cluster role

The token in the kubernetes-dashboard namespace has too few permissions; the administrator role used here can view every resource object in the cluster.

[root@master01 kubernetes]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@master01 kubernetes]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

- Obtain the login token

[root@master01 kubernetes]# kubectl -n kube-system get secret | grep dashboard

dashboard-admin-token-g6tg7 kubernetes.io/service-account-token 3 26s

[root@master01 kubernetes]# kubectl describe secrets -n kube-system dashboard-admin-token-g6tg7

Name: dashboard-admin-token-g6tg7

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: f6e73447-5f15-46a4-a578-12e815a998f5

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImFjVm9aWHZ5eFc2M2VTbFdZUXdpZXFhWm5UQXZZY05zZFVGdm8xcFhqSzgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZzZ0ZzciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjZlNzM0NDctNWYxNS00NmE0LWE1NzgtMTJlODE1YTk5OGY1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.F5ayMbfCA5H0-W9FU211WpE74w0kzPfH0Sj-sPCK-EGeSSnZ6KqtO2HD7H01f5rv45IIIyguj1_0Z6b3qVtDsR9EwlJVEKxBvaV7aoU-MopuUL4W-ZoXqndgGHLsZp3G9tdcw-MdTsIBXa5Kx7BVMmNTMQasSO4RkKHA03GASxbhcS5NbMYIsBe9ZAJ1X4wE-SMix31c0l2Wf6fIWJOb4KFBxUWzYH0gSZrUhDsKkKSF4GNEXi99AccopbOYFuY_xZ0BEqmanhQKU2NqtttaVm01XC9B1WuGsDUyq_CBzB9sewERpaL06MDGsd6FUPv8_Fa8yKMXEIRjUqBrgQZ-uA

- View the nginx deployed earlier

At this point, Kubernetes Dashboard has been deployed successfully.

Guangzhou, 2021/2/9