- Managing application configuration with ConfigMap

- Stopping the dubbo microservice cluster


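- Delivering Apollo to the Kubernetes cluster

- Hands-on: connecting dubbo microservices to the Apollo configuration center

- Hands-on: maintaining multiple dubbo microservice environments

- A day in an internet company's tech department: the release process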

k8s-centos8u2 cluster: integrating the Apollo configuration center

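Managing application configuration with ConfigMap

ConfigMap is a standard Kubernetes resource designed specifically for centrally managing application configuration.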

Splitting the environment

| Hostname | Role | IP |
|---|---|---|
| vms11.cos.com | zk1.op.com (Test environment) | 192.168.26.11 |
| vms12.cos.com | zk2.op.com (Prod environment) | 192.168.26.12 |

Stopping the dubbo microservice cluster

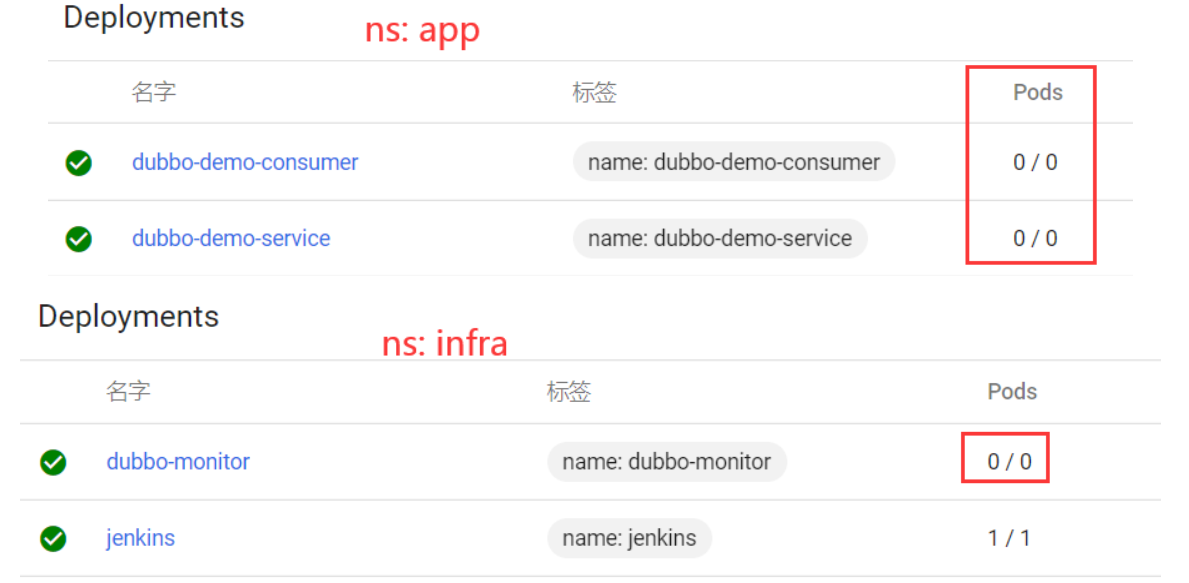

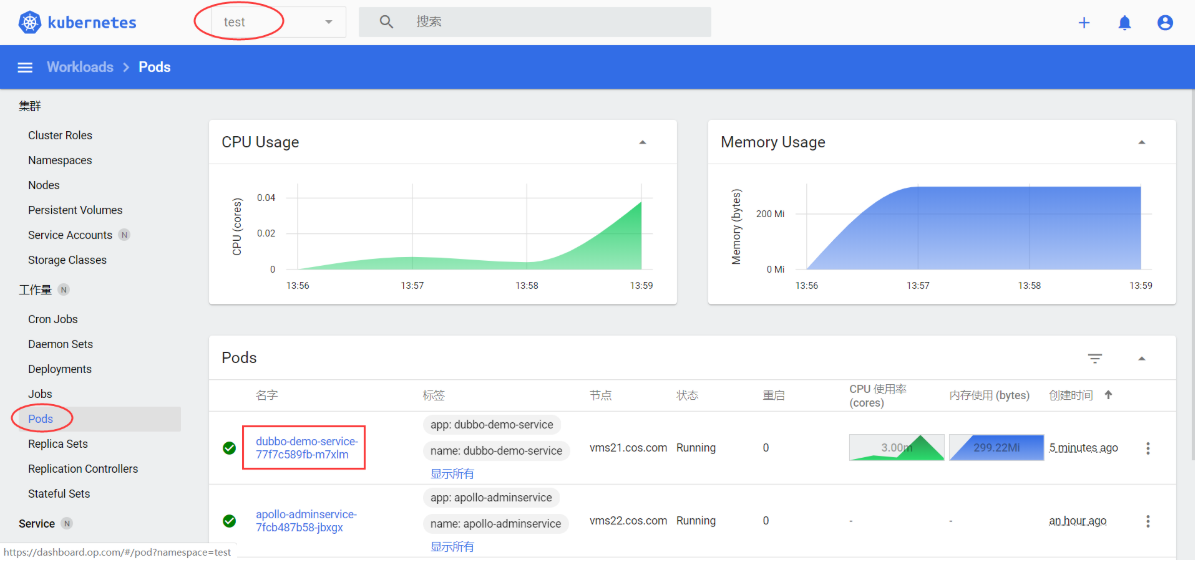

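In the dashboard, stop the Provider, Consumer, and Monitor (to save resources).

Reconfiguring ZooKeeper

On vms11: stop ZooKeeper, delete everything under the data and logs directories, and remove the server entries from zoo.cfg.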

[root@vms11 ~]# cd /opt/zookeeper/bin
[root@vms11 bin]# ./zkServer.sh stop
[root@vms11 bin]# ./zkServer.sh status
[root@vms11 bin]# ps aux|grep zoo
[root@vms11 bin]# rm -rf /data/zookeeper/data/*
[root@vms11 bin]# rm -rf /data/zookeeper/logs/*
[root@vms11 bin]# vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181

On vms12: stop ZooKeeper, delete everything under the data and logs directories, and remove the server entries from zoo.cfg.

[root@vms12 ~]# cd /opt/zookeeper/bin
[root@vms12 bin]# ./zkServer.sh stop
[root@vms12 bin]# ./zkServer.sh status
[root@vms12 bin]# ps aux|grep zoo
[root@vms12 bin]# rm -rf /data/zookeeper/data/*
[root@vms12 bin]# rm -rf /data/zookeeper/logs/*
[root@vms12 bin]# vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181
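The zoo.cfg cleanup on both hosts can also be scripted. A minimal sketch, assuming the removed cluster entries use the usual `server.N=host:port:port` form (the original lines are not shown in this article, so the entries in the demo are hypothetical). It deletes those lines, keeps a `.bak` copy, and leaves the standalone settings above untouched:

```sh
#!/usr/bin/env bash
# Delete ensemble entries ("server.N=...") from zoo.cfg so ZooKeeper starts in standalone mode.
# Assumption: cluster members are declared as "server.<id>=host:port:port" lines.
strip_zk_servers() {
  local cfg="$1"
  # -E enables extended regexes; -i.bak edits in place and keeps the original as <cfg>.bak
  sed -E -i.bak '/^[[:space:]]*server\.[0-9]+[[:space:]]*=/d' "$cfg"
}

# Demo on a throwaway file; the server.N entries below are hypothetical
demo_cfg=$(mktemp)
cat > "$demo_cfg" <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181
server.1=zk1.op.com:2888:3888
server.2=zk2.op.com:2888:3888
EOF
strip_zk_servers "$demo_cfg"
cat "$demo_cfg"
```

On a host it would be run as `strip_zk_servers /opt/zookeeper/conf/zoo.cfg` after `zkServer.sh stop`.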

Restart ZooKeeper:

[root@vms11 bin]# ./zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@vms11 bin]# ./zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: standalone
[root@vms12 bin]# ./zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@vms12 bin]# ./zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: standalone
[root@vms21 ~]# /opt/zookeeper/bin/zkServer.sh stop
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Stopping zookeeper ... /opt/zookeeper/bin/zkServer.sh: line 213: kill: (6101) - No such process
STOPPED
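To confirm the mode from a script instead of reading it by eye, the `Mode:` line of `zkServer.sh status` can be extracted. A small sketch (the function name `zk_mode` is hypothetical), fed here with the status text shown above:

```sh
#!/usr/bin/env bash
# Print the ZooKeeper mode (standalone, leader or follower) from `zkServer.sh status` output on stdin.
zk_mode() {
  # Print only the text after "Mode: " on matching lines
  sed -n 's/^Mode: *//p'
}

status_output='ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: standalone'

mode=$(printf '%s\n' "$status_output" | zk_mode)
echo "mode=$mode"
```

On a host, `/opt/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode` should print `standalone` after this change; merging stderr is only a precaution, since the script writes some of its messages there.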

Prepare the resource manifests (dubbo-monitor)

On the ops host vms200:

[root@vms200 ~]# cd /data/k8s-yaml/dubbo-monitor
[root@vms200 dubbo-monitor]# vi configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: dubbo-monitor-cm
  namespace: infra
data:
  dubbo.properties: |
    dubbo.container=log4j,spring,registry,jetty
    dubbo.application.name=simple-monitor
    dubbo.application.owner=op.config
    dubbo.registry.address=zookeeper://zk1.op.com:2181
    dubbo.protocol.port=20880
    dubbo.jetty.port=8080
    dubbo.jetty.directory=/dubbo-monitor-simple/monitor
    dubbo.charts.directory=/dubbo-monitor-simple/charts
    dubbo.statistics.directory=/dubbo-monitor-simple/statistics
    dubbo.log4j.file=/dubbo-monitor-simple/logs/dubbo-monitor.log
    dubbo.log4j.level=WARN

[root@vms200 dubbo-monitor]# vi deployment-cm.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.op.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: configmap-volume
          mountPath: /dubbo-monitor-simple/conf
      volumes:
      - name: configmap-volume
        configMap:
          name: dubbo-monitor-cm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

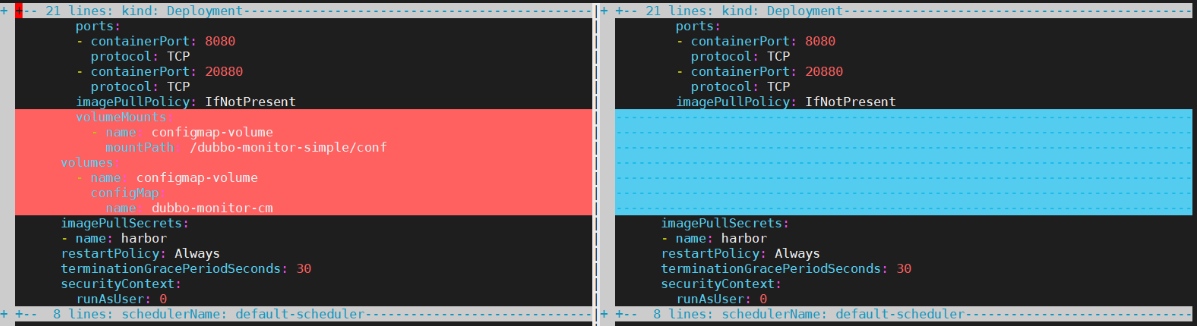

[root@vms200 dubbo-monitor]# vimdiff deployment-cm.yaml deployment.yaml

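The content in the red blocks is what was added to the original deployment.yaml:

- Declare a volume named configmap-volume.
- Specify that this volume uses the ConfigMap named dubbo-monitor-cm.
- Mount the volume in containers, using the same name as the declared volume.
- Mount it at the target directory (/dubbo-monitor-simple/conf) via mountPath. This hides the files originally present in that directory inside the container, and replaces the following step of the startup script /dubbo-monitor-simple/bin/start.sh:

```sh
#!/bin/bash

sed -e "s/{ZOOKEEPER_ADDRESS}/$ZOOKEEPER_ADDRESS/g" /dubbo-monitor-simple/conf/dubbo_origin.properties > /dubbo-monitor-simple/conf/dubbo.properties
…
```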
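For comparison, the step that the ConfigMap mount replaces fills a `{ZOOKEEPER_ADDRESS}` placeholder from an environment variable. A self-contained sketch of that substitution, run in a temporary directory instead of `/dubbo-monitor-simple/conf`. The template lines are a shortened, hypothetical stand-in for `dubbo_origin.properties`, and `|` replaces the original `/` as the sed delimiter so a value containing slashes cannot break the expression:

```sh
#!/usr/bin/env bash
# Render dubbo.properties from a template by replacing {ZOOKEEPER_ADDRESS},
# mirroring the sed line in start.sh.
render_dubbo_properties() {
  local template="$1" output="$2"
  sed -e "s|{ZOOKEEPER_ADDRESS}|${ZOOKEEPER_ADDRESS}|g" "$template" > "$output"
}

workdir=$(mktemp -d)
# Hypothetical, shortened template
cat > "$workdir/dubbo_origin.properties" <<'EOF'
dubbo.application.name=simple-monitor
dubbo.registry.address=zookeeper://{ZOOKEEPER_ADDRESS}
EOF

# The variable assignment applies only for the duration of this function call
ZOOKEEPER_ADDRESS=zk1.op.com:2181 render_dubbo_properties \
  "$workdir/dubbo_origin.properties" "$workdir/dubbo.properties"
cat "$workdir/dubbo.properties"
```

With the ConfigMap mounted instead, `dubbo.properties` comes directly from `dubbo-monitor-cm`, so changing the registry needs neither a new image nor a different environment variable.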

Apply the resource manifests

- On any k8s compute node (vms21 or vms22), run:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-monitor/configmap.yaml
configmap/dubbo-monitor-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-monitor/deployment-cm.yaml
deployment.apps/dubbo-monitor configured

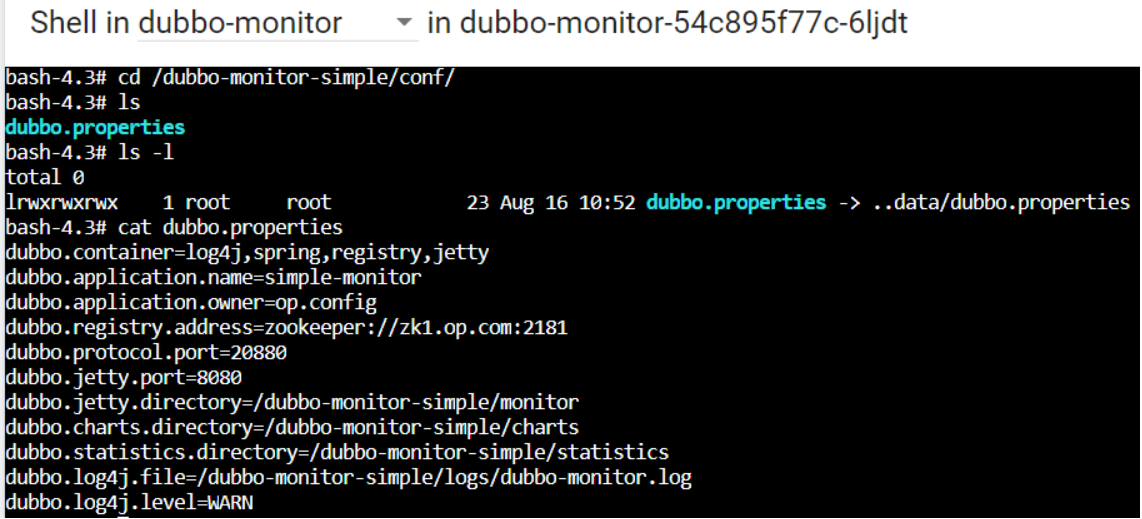

- Open a shell in the pod from the dashboard and check:

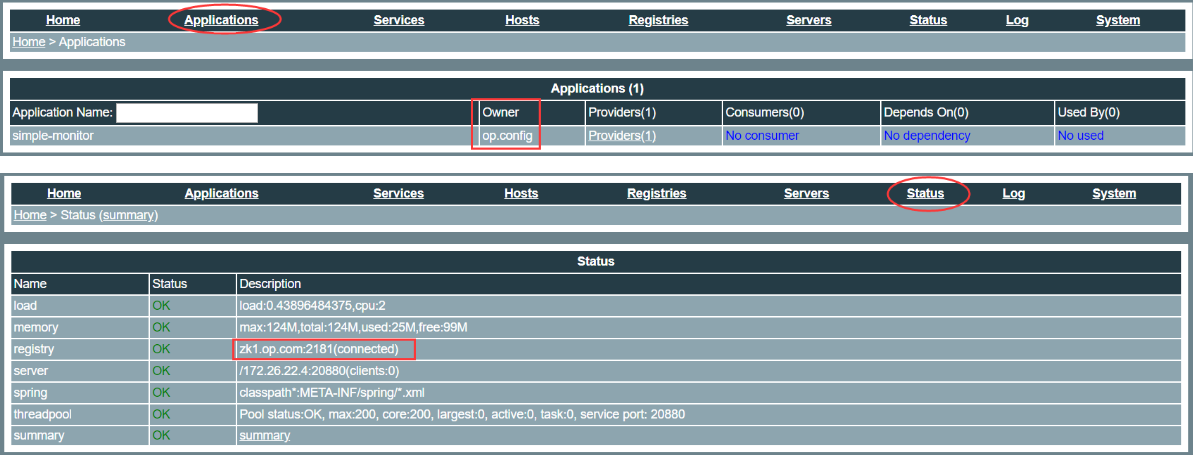

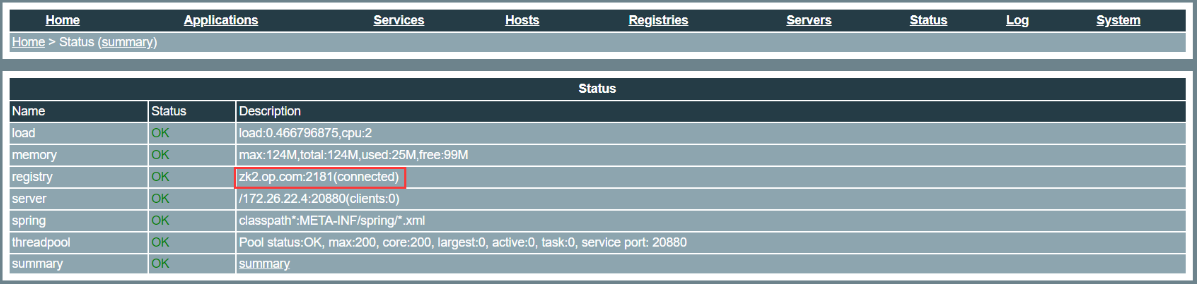

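- Verify the ConfigMap configuration:

In the K8S dashboard, change the dubbo-monitor ConfigMap to point to a different ZooKeeper (zk2.op.com:2181), restart the pod (scale the deployment to 0 and back to 1, or delete the pod), then refresh or open http://dubbo-monitor.op.com in the browser to see the effect.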

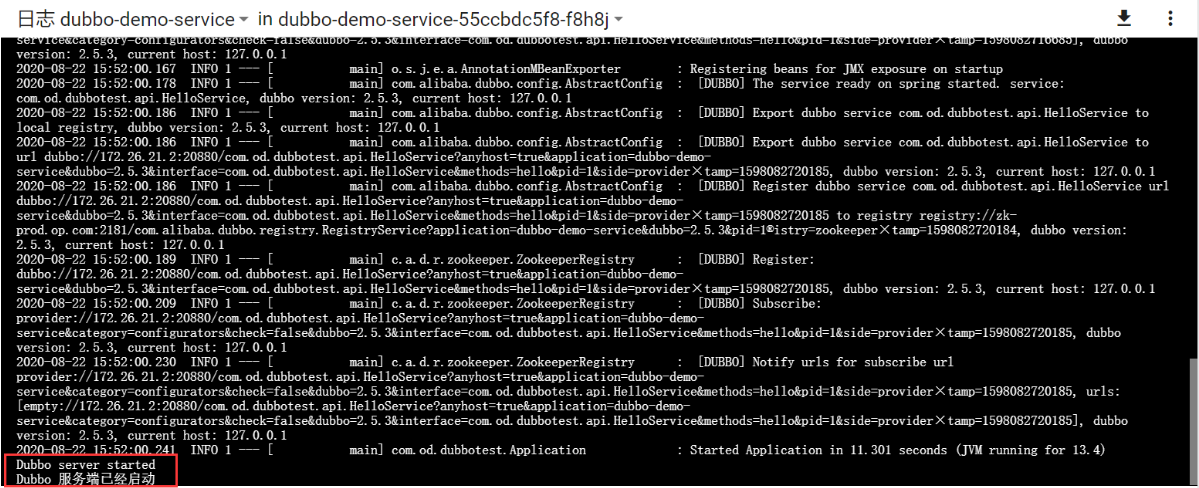

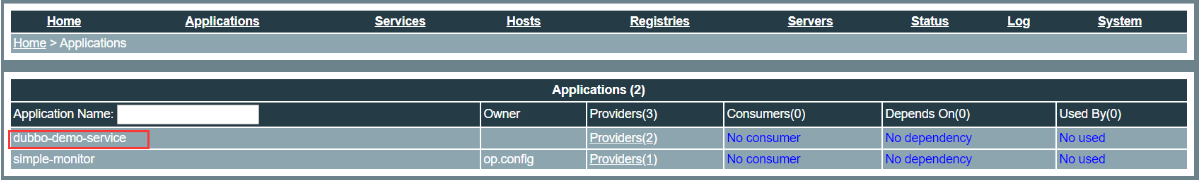

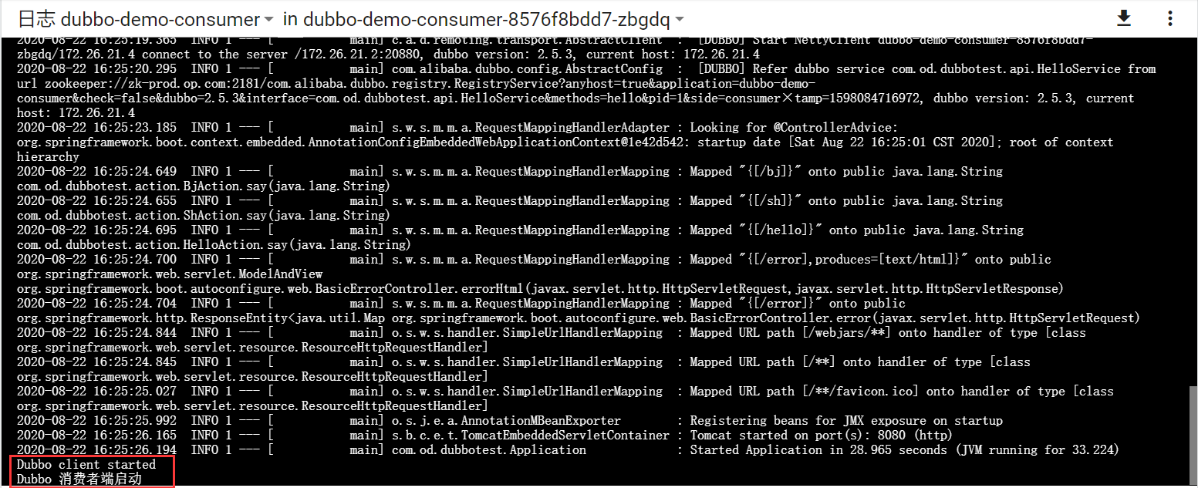

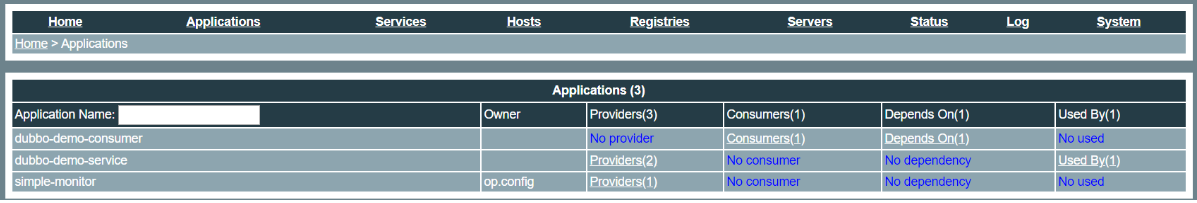
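The verification above edits the ConfigMap in the dashboard. The change itself is a single-key replacement in `dubbo.properties`, sketched below on a local file. The function name `set_property` is hypothetical, and it assumes the key already exists on its own line. In the cluster the equivalent is editing the ConfigMap (for example with `kubectl -n infra edit configmap dubbo-monitor-cm`) and then recreating the pod:

```sh
#!/usr/bin/env bash
# Replace the value of an existing key in a .properties file (key=value format).
# Assumption: the key exists and starts at the beginning of its line.
set_property() {
  local file="$1" key="$2" value="$3" key_re value_esc
  # Escape dots in the key so they match literally in the regex
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  # Escape characters that are special in a sed replacement using | as delimiter: & | \
  value_esc=$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')
  sed -i.bak "s|^${key_re}=.*|${key}=${value_esc}|" "$file"
}

props=$(mktemp)
printf '%s\n' \
  'dubbo.application.name=simple-monitor' \
  'dubbo.registry.address=zookeeper://zk1.op.com:2181' > "$props"

# Point the monitor at the Prod ZooKeeper, as in the dashboard test
set_property "$props" dubbo.registry.address zookeeper://zk2.op.com:2181
cat "$props"
```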

Re-release: update the dubbo projects' configuration files

Modify the project source code

- dubbo-demo-service

dubbo-server/src/main/java/config.properties:

dubbo.registry=zookeeper://zk1.op.com:2181
dubbo.port=28080

- dubbo-demo-web

dubbo-client/src/main/java/config.properties:

dubbo.registry=zookeeper://zk1.op.com:2181

Run CI with Jenkins

Modify and apply the resource manifests

In the k8s dashboard, change the image version used by the deployment and submit the change.

Delivering Apollo to the Kubernetes cluster

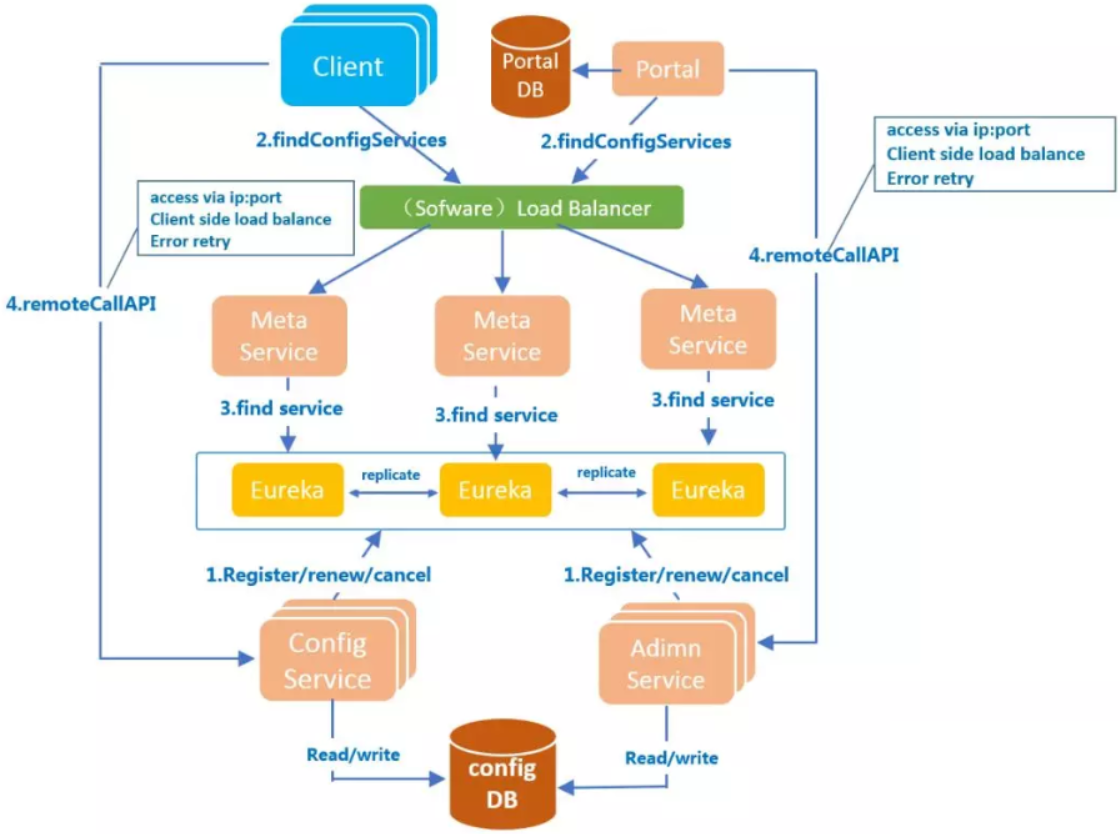

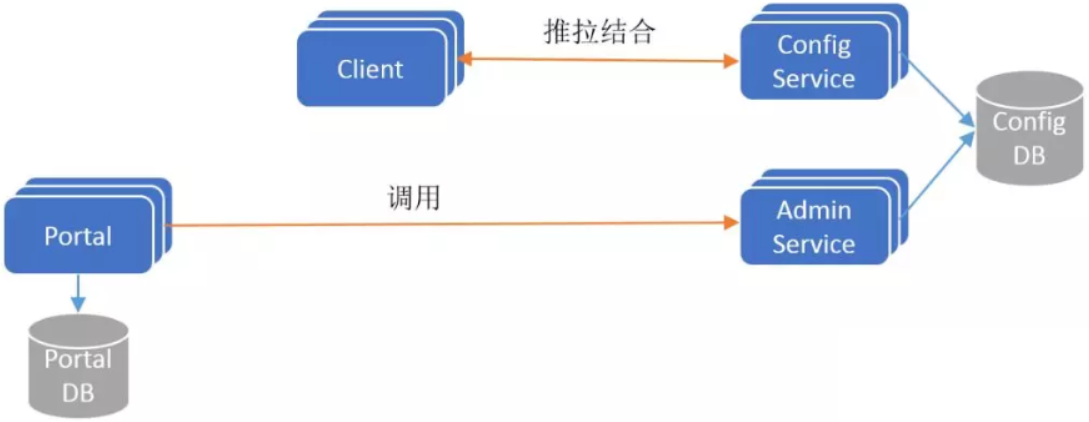

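Introduction to Apollo

Apollo is a distributed configuration center developed by Ctrip's framework department. It centrally manages an application's configuration across environments and clusters, pushes configuration changes to clients in real time, and provides standardized permission and workflow governance, which makes it well suited to configuration management for microservices.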

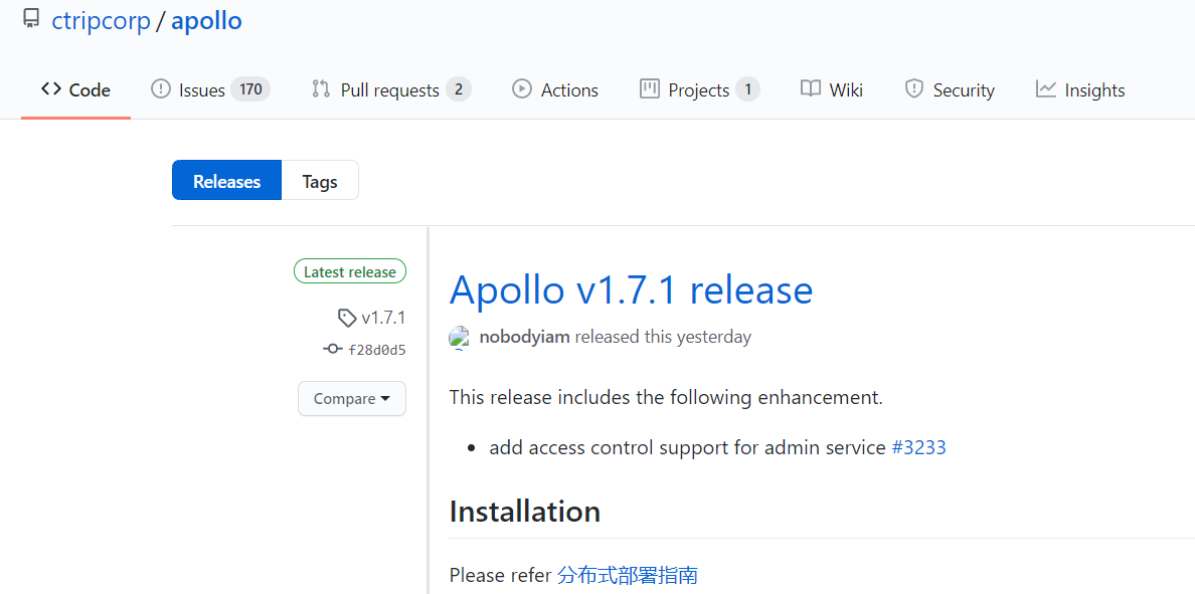

Official GitHub links

Apollo official repository: https://github.com/ctripcorp/apollo

Downloads: https://github.com/ctripcorp/apollo/releases

Installation guide (distributed deployment guide): https://github.com/ctripcorp/apollo/wiki/分布式部署指南

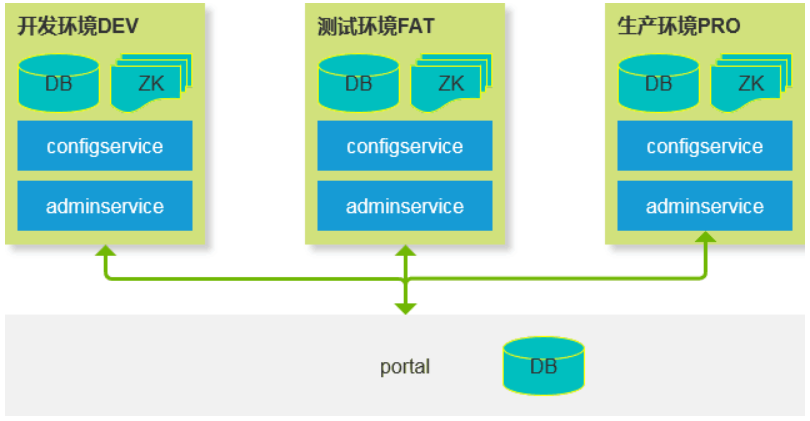

Architecture

Simplified model

Delivering apollo-configservice

Prepare the packages

On the ops host vms200:

Download the official release packages: https://github.com/ctripcorp/apollo/releases

Version used: https://github.com/ctripcorp/apollo/releases/tag/v1.7.1

[root@vms200 ~]# cd /opt/src
[root@vms200 src]# wget https://github.com/ctripcorp/apollo/releases/download/v1.7.1/apollo-adminservice-1.7.1-github.zip
...
[root@vms200 src]# wget https://github.com/ctripcorp/apollo/releases/download/v1.7.1/apollo-configservice-1.7.1-github.zip
...
[root@vms200 src]# wget https://github.com/ctripcorp/apollo/releases/download/v1.7.1/apollo-portal-1.7.1-github.zip
...
[root@vms200 src]# ls -l apo*
-rw-r--r-- 1 root root 54498643 Aug 16 21:10 apollo-adminservice-1.7.1-github.zip
-rw-r--r-- 1 root root 57809310 Aug 16 21:10 apollo-configservice-1.7.1-github.zip
-rw-r--r-- 1 root root 41719847 Aug 16 21:11 apollo-portal-1.7.1-github.zip
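The three downloads share one URL pattern, so the list can be generated instead of typed. A sketch that only prints the URLs (the function name `apollo_release_url` is hypothetical):

```sh
#!/usr/bin/env bash
# Print the GitHub release URL of an Apollo component package.
apollo_release_url() {
  local component="$1" version="$2"
  printf 'https://github.com/ctripcorp/apollo/releases/download/v%s/apollo-%s-%s-github.zip\n' \
    "$version" "$component" "$version"
}

for component in adminservice configservice portal; do
  apollo_release_url "$component" 1.7.1
done
```

From /opt/src, piping the loop's output into `wget -i -` (which reads URLs from standard input) would download all three packages.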

Install the MySQL database and run the database scripts

- Option 1: on host vms11 (vms11 is the database host)
- Option 2: on host vms200 (as a container)

Option 1: install MariaDB with yum

MariaDB website: https://mariadb.org/

On host vms11:

- Update the yum repository: /etc/yum.repos.d/MariaDB.repo

[mariadb]
name = MariaDB
baseurl = https://mirrors.ustc.edu.cn/mariadb/yum/10.1/centos7-amd64/
gpgkey=https://mirrors.ustc.edu.cn/mariadb/yum/RPM-GPG-KEY-MariaDB
gpgcheck=1

- Import the GPG key

# rpm --import https://mirrors.ustc.edu.cn/mariadb/yum/RPM-GPG-KEY-MariaDB
# yum clean all && rm -rf /var/cache/yum/*
# yum makecache && yum update -y

- List available versions and install

# yum list mariadb --showduplicates          # database client
# yum list mariadb-server --showduplicates   # database server
# yum install mariadb-server -y

- Update the database version

# yum update MariaDB-server -y

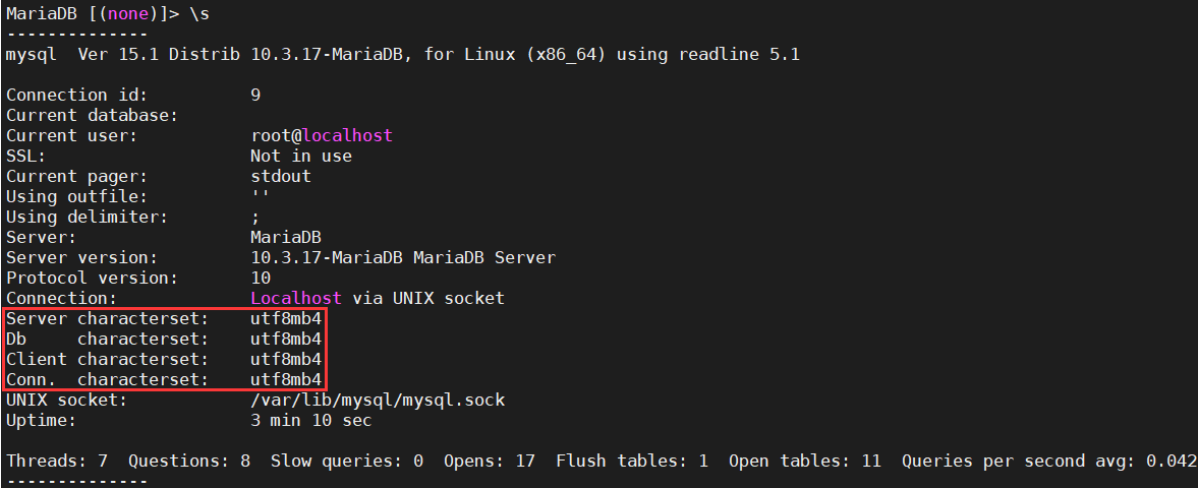

- Basic configuration: set the character encoding

# vi /etc/my.cnf.d/mariadb-server.cnf   # add the following under the [mysqld] section

character_set_server = utf8mb4
collation_server = utf8mb4_general_ci
init_connect = "SET NAMES 'utf8mb4'"

# vi /etc/my.cnf.d/mysql-clients.cnf   # add the following under the [mysql] section

default-character-set = utf8mb4

- Start:

# systemctl start mariadb
# systemctl enable mariadb

- Set the root password:

[root@vms11 mysql]# mysqladmin -uroot password
New password:
Confirm new password:

- Check:

[root@vms11 mysql]# mysql -uroot -p
Enter password:
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 9
Server version: 10.3.17-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> \s

Check that the character sets are all utf8.

[root@vms11 mysql]# ps aux |grep mysqld
mysql 190971 0.1 4.4 1751308 89444 ? Ssl 10:06 0:01 /usr/libexec/mysqld --basedir=/usr
root 194408 0.0 0.0 221900 1060 pts/0 S+ 10:28 0:00 grep --color=auto mysqld
[root@vms11 mysql]# ps aux |grep mariadb
root 194486 0.0 0.0 221900 1072 pts/0 S+ 10:28 0:00 grep --color=auto mariadb
[root@vms11 mysql]# ps aux |grep MariaDB
root 194513 0.0 0.0 221900 1088 pts/0 S+ 10:29 0:00 grep --color=auto MariaDB
[root@vms11 mysql]# netstat -luntp |grep 3306
tcp6 0 0 :::3306 :::* LISTEN 190971/mysqld

Under the hood it is simply a MySQL server (the running process is mysqld).

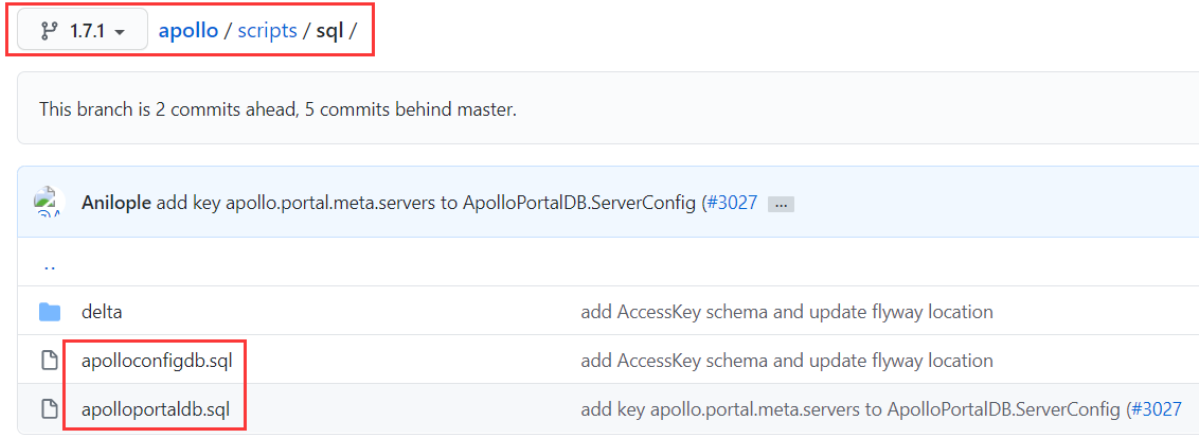

- Fetch apolloconfigdb.sql and apolloportaldb.sql (use the Raw view)

# mkdir /data/mysql
# cd /data/mysql
# wget https://raw.githubusercontent.com/ctripcorp/apollo/1.7.1/scripts/sql/apolloconfigdb.sql
# wget https://raw.githubusercontent.com/ctripcorp/apollo/1.7.1/scripts/sql/apolloportaldb.sql

- Create the ApolloConfigDB database

[root@vms11 mysql]# mysql -uroot -p < apolloconfigdb.sql
[root@vms11 mysql]# mysql -uroot -p
...
MariaDB [(none)]> show databases;
+--------------------+
| Database           |
+--------------------+
| ApolloConfigDB     |
| information_schema |
| mysql              |
| performance_schema |
+--------------------+
MariaDB [(none)]> use ApolloConfigDB;
...
MariaDB [ApolloConfigDB]> show tables;
+--------------------------+
| Tables_in_ApolloConfigDB |
+--------------------------+
| AccessKey                |
| App                      |
| AppNamespace             |
| Audit                    |
| Cluster                  |
| Commit                   |
| GrayReleaseRule          |
| Instance                 |
| InstanceConfig           |
| Item                     |
| Namespace                |
| NamespaceLock            |
| Release                  |
| ReleaseHistory           |
| ReleaseMessage           |
| ServerConfig             |
+--------------------------+

Alternatively, create it like this:

# mysql -uroot -p
mysql> create database ApolloConfigDB;
mysql> source ./apolloconfigdb.sql

Option 2: container

- Pull the image and push it to the Harbor registry (on vms200):

[root@vms200 ~]# docker pull hub.c.163.com/library/mysql
[root@vms200 ~]# docker images |grep mysql
hub.c.163.com/library/mysql latest 9e64176cd8a2 3 years ago 407MB
[root@vms200 ~]# docker run -dit --name=db --restart=always -e MYSQL_ROOT_PASSWORD=123456 hub.c.163.com/library/mysql
[root@vms200 ~]# docker exec -it db bash
root@c725c7302aa9:/# mysql -uroot -p123456
mysql> SHOW VARIABLES WHERE Variable_name = 'version';
+---------------+--------+
| Variable_name | Value  |
+---------------+--------+
| version       | 5.7.18 |
+---------------+--------+
mysql> quit
Bye
root@c725c7302aa9:/# exit
exit
[root@vms200 ~]# docker rm -f db
db
[root@vms200 ~]# docker tag hub.c.163.com/library/mysql:latest harbor.op.com/public/mysql:v5.7.18
[root@vms200 ~]# docker push harbor.op.com/public/mysql:v5.7.18

- Run the MySQL container on vms200

Create the directory the container will mount:

[root@vms200 ~]# mkdir -p /data/mysql/db

Start the container:

docker run -dit --name=eopdb -p 3306:3306 -v /data/mysql/db:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 --restart=always harbor.op.com/public/mysql:v5.7.18

Install the MySQL client:

[root@vms200 src]# yum install mariadb -y

Grant database privileges:

[root@vms11 mysql]# mysql -uroot -p
> grant INSERT,DELETE,UPDATE,SELECT on ApolloConfigDB.* to "apolloconfig"@"192.168.26.%" identified by "123456";
> select user,host from mysql.user;
+--------------+---------------+
| user         | host          |
+--------------+---------------+
| root         | 127.0.0.1     |
| apolloconfig | 192.168.26.%  |

Modify the initial data

MariaDB [(none)]> use ApolloConfigDB;
...
MariaDB [ApolloConfigDB]> show tables;
...
MariaDB [ApolloConfigDB]> select * from ServerConfig \G
*************************** 1. row ***************************
Id: 1
Key: eureka.service.url
Cluster: default
Value: http://localhost:8080/eureka/
Comment: Eureka服务Url,多个service以英文逗号分隔
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-18 14:25:12
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-18 14:25:12
...

Change the value of the eureka.service.url key:

MariaDB [ApolloConfigDB]> update ApolloConfigDB.ServerConfig set ServerConfig.Value="http://config.op.com/eureka" where ServerConfig.Key="eureka.service.url";
Query OK, 1 row affected (0.003 sec)
Rows matched: 1 Changed: 1 Warnings: 0

MariaDB [ApolloConfigDB]> select * from ServerConfig \G
*************************** 1. row ***************************
Id: 1
Key: eureka.service.url
Cluster: default
Value: http://config.op.com/eureka
Comment: Eureka服务Url,多个service以英文逗号分隔
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-18 14:25:12
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-18 14:49:10

Resolve the domain name

On the DNS host vms11:

# vi /var/named/op.com.zone

config A 192.168.26.10

Remember to increment the serial number.

# systemctl restart named
# dig -t A config.op.com @192.168.26.11 +short
192.168.26.10
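Incrementing the serial by hand is easy to forget. A sketch that bumps it automatically, assuming the serial is the first number on a line carrying a `; serial` comment, a common BIND layout. The zone file in this article is not shown, so the demo zone below is hypothetical; adjust the pattern to match yours:

```sh
#!/usr/bin/env bash
# Increment the SOA serial of a BIND zone file in place.
# Assumption: the serial is the first number on the first line containing "; serial".
bump_zone_serial() {
  local zone="$1" tmp
  tmp=$(mktemp)
  awk '
    /;[ \t]*serial/ && !done {
      match($0, /[0-9]+/)                  # locate the serial on this line
      old = substr($0, RSTART, RLENGTH)
      $0 = substr($0, 1, RSTART - 1) (old + 1) substr($0, RSTART + RLENGTH)
      done = 1
    }
    { print }
  ' "$zone" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back instead of mv so the zone file keeps its owner and permissions
  cat "$tmp" > "$zone"
  rm -f "$tmp"
}

zone=$(mktemp)
cat > "$zone" <<'EOF'
$TTL 600
@   IN SOA dns.op.com. dnsadmin.op.com. (
                2020081801 ; serial
                10800      ; refresh
                900        ; retry
                604800     ; expire
                86400      ; minimum
                )
config  A 192.168.26.10
EOF
bump_zone_serial "$zone"
grep serial "$zone"
```

Before restarting named, `named-checkzone op.com /var/named/op.com.zone` can validate the edited file.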

Test on vms21:

[root@vms21 ~]# dig -t A config.op.com @10.168.0.2 +short
192.168.26.10

Build the Docker image

On the ops host vms200:

[root@vms200 src]# mkdir /data/dockerfile/apollo-configservice
[root@vms200 src]# unzip -o apollo-configservice-1.7.1-github.zip -d /data/dockerfile/apollo-configservice
Archive: apollo-configservice-1.7.1-github.zip
inflating: /data/dockerfile/apollo-configservice/scripts/shutdown.sh
inflating: /data/dockerfile/apollo-configservice/scripts/startup.sh
inflating: /data/dockerfile/apollo-configservice/config/app.properties
inflating: /data/dockerfile/apollo-configservice/apollo-configservice-1.7.1.jar
inflating: /data/dockerfile/apollo-configservice/apollo-configservice.conf
inflating: /data/dockerfile/apollo-configservice/config/application-github.properties
inflating: /data/dockerfile/apollo-configservice/apollo-configservice-1.7.1-sources.jar

Configure the database connection string

View the configuration (on vms200):

[root@vms200 src]# cd /data/dockerfile/apollo-configservice
[root@vms200 apollo-configservice]# cat config/application-github.properties
# DataSource
spring.datasource.url = jdbc:mysql://fill-in-the-correct-server:3306/ApolloConfigDB?characterEncoding=utf8
spring.datasource.username = FillInCorrectUser
spring.datasource.password = FillInCorrectPassword

Edit the database configuration (on vms200, in /data/dockerfile/apollo-configservice):

[root@vms200 apollo-configservice]# vi config/application-github.properties

# DataSource
spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigDB?characterEncoding=utf8
spring.datasource.username = apolloconfig
spring.datasource.password = 123456
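The shipped properties file uses placeholder values (`fill-in-the-correct-server`, `FillInCorrectUser`, `FillInCorrectPassword`), and a forgotten one only shows up as a failed startup. A quick pre-build check, sketched with a hypothetical function name and the placeholder strings shown above:

```sh
#!/usr/bin/env bash
# Return non-zero (and list the offending lines) if the default DataSource placeholders
# from application-github.properties are still present in a file.
check_datasource_filled() {
  local file="$1"
  if grep -nE 'fill-in-the-correct-server|FillInCorrectUser|FillInCorrectPassword' "$file"; then
    echo "placeholders still present in $file" >&2
    return 1
  fi
  return 0
}

good=$(mktemp); bad=$(mktemp)
printf '%s\n' \
  'spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigDB?characterEncoding=utf8' \
  'spring.datasource.username = apolloconfig' > "$good"
printf '%s\n' \
  'spring.datasource.url = jdbc:mysql://fill-in-the-correct-server:3306/ApolloConfigDB?characterEncoding=utf8' \
  'spring.datasource.username = FillInCorrectUser' > "$bad"

check_datasource_filled "$good" && echo "good: ok"
check_datasource_filled "$bad" || echo "bad: needs editing"
```

Running it against `config/application-github.properties` (or the ConfigMap's data) before `docker build` catches the mistake early.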

Configure DNS resolution for mysql (on vms11):

[root@vms11 ~]# vi /var/named/op.com.zone

Append a line at the end:

mysql A 192.168.26.11

Remember to increment the serial.

Restart and check:

[root@vms11 ~]# systemctl restart named
[root@vms11 ~]# dig -t A mysql.op.com @192.168.26.11 +short
192.168.26.11

Update the startup script

On vms200, in /data/dockerfile/apollo-configservice:

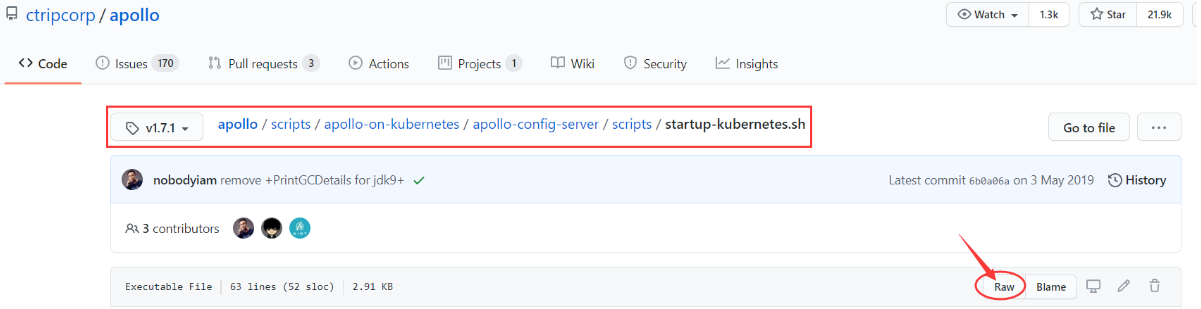

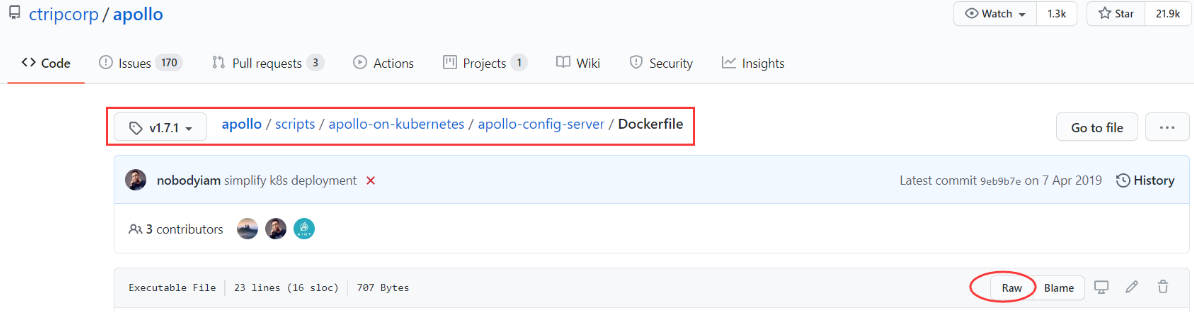

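Because we deploy on k8s, download the Kubernetes startup script for the matching version from GitHub.

Since the service is stopped by stopping its pod, scripts/shutdown.sh is not needed and can be deleted.

- Delete all the existing scripts, then download the startup script for the matching version:

[root@vms200 apollo-configservice]# cd scripts/
[root@vms200 scripts]# rm -rf *.sh
[root@vms200 scripts]# wget https://raw.githubusercontent.com/ctripcorp/apollo/v1.7.1/scripts/apollo-on-kubernetes/apollo-config-server/scripts/startup-kubernetes.sh
[root@vms200 scripts]# vi startup-kubernetes.sh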

- Add one line:

APOLLO_CONFIG_SERVICE_NAME=$(hostname -i)

- Adjust the JVM options:

export JAVA_OPTS="-Xms128m -Xmx128m -Xss256k -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=384m -XX:NewSize=256m -XX:MaxNewSize=256m -XX:SurvivorRatio=8"

- The complete modified script, /data/dockerfile/apollo-configservice/scripts/startup-kubernetes.sh:

#!/bin/bash
SERVICE_NAME=apollo-configservice
## Adjust log dir if necessary
LOG_DIR=/opt/logs/apollo-config-server
## Adjust server port if necessary
SERVER_PORT=8080
APOLLO_CONFIG_SERVICE_NAME=$(hostname -i)
SERVER_URL="http://${APOLLO_CONFIG_SERVICE_NAME}:${SERVER_PORT}"

## Adjust memory settings if necessary
#export JAVA_OPTS="-Xms6144m -Xmx6144m -Xss256k -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=384m -XX:NewSize=4096m -XX:MaxNewSize=4096m -XX:SurvivorRatio=8"
export JAVA_OPTS="-Xms128m -Xmx128m -Xss256k -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=384m -XX:NewSize=256m -XX:MaxNewSize=256m -XX:SurvivorRatio=8"

## Only uncomment the following when you are using server jvm
#export JAVA_OPTS="$JAVA_OPTS -server -XX:-ReduceInitialCardMarks"

########### The following is the same for configservice, adminservice, portal ###########
export JAVA_OPTS="$JAVA_OPTS -XX:ParallelGCThreads=4 -XX:MaxTenuringThreshold=9 -XX:+DisableExplicitGC -XX:+ScavengeBeforeFullGC -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+ExplicitGCInvokesConcurrent -XX:+HeapDumpOnOutOfMemoryError -XX:-OmitStackTraceInFastThrow -Duser.timezone=Asia/Shanghai -Dclient.encoding.override=UTF-8 -Dfile.encoding=UTF-8 -Djava.security.egd=file:/dev/./urandom"
export JAVA_OPTS="$JAVA_OPTS -Dserver.port=$SERVER_PORT -Dlogging.file=$LOG_DIR/$SERVICE_NAME.log -XX:HeapDumpPath=$LOG_DIR/HeapDumpOnOutOfMemoryError/"

# Find Java
if [[ -n "$JAVA_HOME" ]] && [[ -x "$JAVA_HOME/bin/java" ]]; then
    javaexe="$JAVA_HOME/bin/java"
elif type -p java > /dev/null 2>&1; then
    javaexe=$(type -p java)
elif [[ -x "/usr/bin/java" ]]; then
    javaexe="/usr/bin/java"
else
    echo "Unable to find Java"
    exit 1
fi

if [[ "$javaexe" ]]; then
    version=$("$javaexe" -version 2>&1 | awk -F '"' '/version/ {print $2}')
    version=$(echo "$version" | awk -F. '{printf("%03d%03d",$1,$2);}')
    # now version is of format 009003 (9.3.x)
    if [ $version -ge 011000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 010000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 009000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    else
        JAVA_OPTS="$JAVA_OPTS -XX:+UseParNewGC"
        JAVA_OPTS="$JAVA_OPTS -Xloggc:$LOG_DIR/gc.log -XX:+PrintGCDetails"
        JAVA_OPTS="$JAVA_OPTS -XX:+UseConcMarkSweepGC -XX:+UseCMSCompactAtFullCollection -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=60 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:CMSFullGCsBeforeCompaction=9 -XX:+CMSClassUnloadingEnabled -XX:+PrintGCDateStamps -XX:+PrintGCApplicationConcurrentTime -XX:+PrintHeapAtGC -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=5M"
    fi
fi

printf "$(date) ==== Starting ==== \n"

cd `dirname $0`/..
chmod 755 $SERVICE_NAME".jar"
./$SERVICE_NAME".jar" start

rc=$?;

if [[ $rc != 0 ]];
then
    echo "$(date) Failed to start $SERVICE_NAME.jar, return code: $rc"
    exit $rc;
fi

tail -f /dev/null

- Add execute permission:

[root@vms200 scripts]# chmod +x startup-kubernetes.sh
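The Java check in the startup script turns the `java -version` string into a six-digit code so that a plain integer comparison can pick the GC flags. A sketch of just that step (the function names are hypothetical), fed with version strings rather than a real JVM. A Java 8 runtime, as presumably shipped in the `jre8:8u112` base image, reports a version like `1.8.0_112`, which maps to `001008` and therefore takes the final ParNew/CMS branch. The script's separate 11/10/9 branches add identical flags, so one `>= 009000` test is equivalent here:

```sh
#!/usr/bin/env bash
# Reproduce the version encoding used by startup-kubernetes.sh:
# first and second dot-separated fields, each zero-padded to three digits.
java_version_code() {
  echo "$1" | awk -F. '{printf("%03d%03d", $1, $2);}'
}

# Mirror the script's branch choice: Java 9+ gets -Xlog:gc*, older JVMs get ParNew/CMS flags
gc_branch() {
  local version
  version=$(java_version_code "$1")
  # [ ... -ge ... ] compares decimal integers, so the leading zeros are harmless
  if [ "$version" -ge 009000 ]; then
    echo "unified-logging"
  else
    echo "parnew-cms"
  fi
}

for v in 1.8.0_112 11.0.8; do
  echo "$v -> $(java_version_code "$v") ($(gc_branch "$v"))"
done
```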

Write the Dockerfile

Download it from GitHub or copy it:

On vms200, in /data/dockerfile/apollo-configservice:

[root@vms200 apollo-configservice]# vi Dockerfile

# Dockerfile for apollo-config-server
# Build with:
# docker build -t apollo-config-server:v1.0.0 .
# docker build . -t harbor.op.com/infra/apollo-configservice:v1.7.1
FROM harbor.op.com/base/jre8:8u112

ENV VERSION 1.7.1

RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo "Asia/Shanghai" > /etc/timezone

ADD apollo-configservice-${VERSION}.jar /apollo-configservice/apollo-configservice.jar
ADD config/ /apollo-configservice/config
ADD scripts/ /apollo-configservice/scripts

CMD ["/apollo-configservice/scripts/startup-kubernetes.sh"]

Build and push the image

On vms200, in /data/dockerfile/apollo-configservice:

[root@vms200 apollo-configservice]# docker build . -t harbor.op.com/infra/apollo-configservice:v1.7.1
Sending build context to Docker daemon 64.73MB
Step 1/7 : FROM harbor.op.com/base/jre8:8u112
---> 9fa5bdd784cb
Step 2/7 : ENV VERSION 1.7.1
---> Running in 1d9215c8d2db
Removing intermediate container 1d9215c8d2db
---> 86bc848b0b16
Step 3/7 : RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone
---> Running in e218dc5bcf97
Removing intermediate container e218dc5bcf97
---> 7c0aed62f2ce
Step 4/7 : ADD apollo-configservice-${VERSION}.jar /apollo-configservice/apollo-configservice.jar
---> a3e77ab2da91
Step 5/7 : ADD config/ /apollo-configservice/config
---> 8872877ed0e9
Step 6/7 : ADD scripts/ /apollo-configservice/scripts
---> 101e5921034d
Step 7/7 : CMD ["/apollo-configservice/scripts/startup-kubernetes.sh"]
---> Running in 236843be2fa6
Removing intermediate container 236843be2fa6
---> 84f9d933410d
Successfully built 84f9d933410d
Successfully tagged harbor.op.com/infra/apollo-configservice:v1.7.1
[root@vms200 apollo-configservice]# docker push harbor.op.com/infra/apollo-configservice:v1.7.1
...

Prepare the resource manifests

Manifests on GitHub: https://github.com/ctripcorp/apollo/tree/v1.7.1/scripts/apollo-on-kubernetes/kubernetes

On the ops host vms200:

[root@vms200 ~]# cd /data/k8s-yaml/
[root@vms200 k8s-yaml]# mkdir apollo-configservice
[root@vms200 k8s-yaml]# cd apollo-configservice
[root@vms200 apollo-configservice]#

- deployment.yaml

[root@vms200 apollo-configservice]# vi deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: apollo-configservice
  namespace: infra
  labels:
    name: apollo-configservice
spec:
  replicas: 1
  selector:
    matchLabels:
      name: apollo-configservice
  template:
    metadata:
      labels:
        app: apollo-configservice
        name: apollo-configservice
    spec:
      volumes:
      - name: configmap-volume
        configMap:
          name: apollo-configservice-cm
      containers:
      - name: apollo-configservice
        image: harbor.op.com/infra/apollo-configservice:v1.7.1
        ports:
        - containerPort: 8080
          protocol: TCP
        volumeMounts:
        - name: configmap-volume
          mountPath: /apollo-configservice/config
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

- svc.yaml

[root@vms200 apollo-configservice]# vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: apollo-configservice
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: apollo-configservice

- ingress.yaml

[root@vms200 apollo-configservice]# vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: apollo-configservice
  namespace: infra
spec:
  rules:
  - host: config.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: apollo-configservice
          servicePort: 8080

- configmap.yaml

[root@vms200 apollo-configservice]# vi configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-configservice-cm
  namespace: infra
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config.op.com/eureka
  app.properties: |
    appId=100003171

The ConfigMap overrides the configuration files in the image's config directory, so the configuration can be changed easily instead of being baked into the image.
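Instead of pasting file contents into configmap.yaml by hand, the manifest can be generated from the files in `config/`. kubectl can do this itself with `kubectl create configmap apollo-configservice-cm -n infra --from-file=config/ --dry-run=client -o yaml` (the `--dry-run=client` form needs kubectl 1.18 or later). For illustration, a plain-bash sketch producing the same shape of manifest, one literal block per file; the function name is hypothetical, and it assumes text files whose names are valid ConfigMap keys:

```sh
#!/usr/bin/env bash
# Print a ConfigMap manifest with one data key per regular file in a directory.
configmap_from_dir() {
  local name="$1" namespace="$2" dir="$3" f
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\n  namespace: %s\ndata:\n' \
    "$name" "$namespace"
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    printf '  %s: |\n' "$(basename "$f")"
    # Indent each line by four spaces so it stays inside the YAML literal block;
    # awk also terminates a last line that lacks a trailing newline
    awk '{ print "    " $0 }' "$f"
  done
}

cfgdir=$(mktemp -d)
printf '%s\n' '# DataSource' \
  'spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigDB?characterEncoding=utf8' \
  > "$cfgdir/application-github.properties"
printf '%s\n' 'appId=100003171' > "$cfgdir/app.properties"

configmap_from_dir apollo-configservice-cm infra "$cfgdir"
```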

Apply the resource manifests

On any k8s compute node (vms21 or vms22), run:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-configservice/configmap.yaml
configmap/apollo-configservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-configservice/deployment.yaml
deployment.apps/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-configservice/svc.yaml
service/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-configservice/ingress.yaml
ingress.extensions/apollo-configservice created

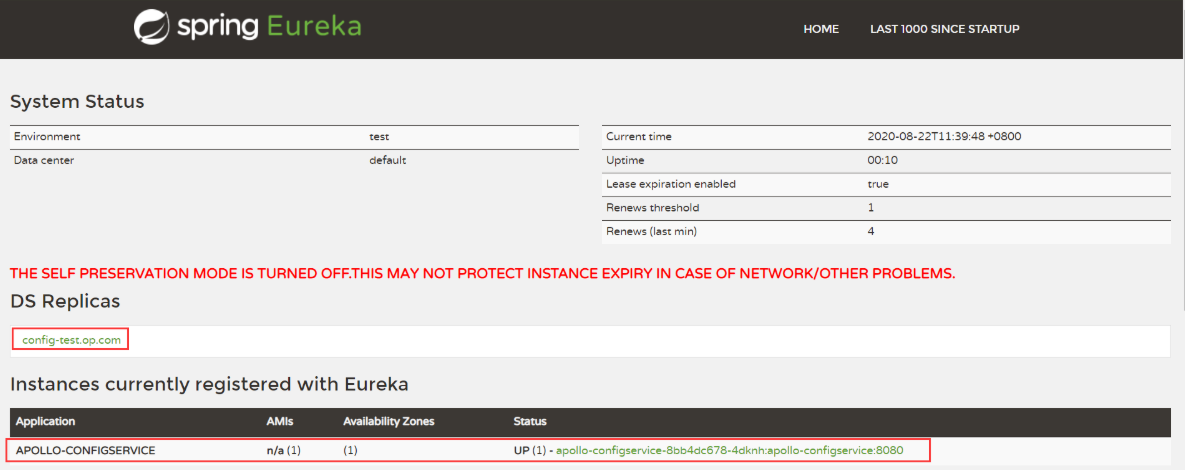

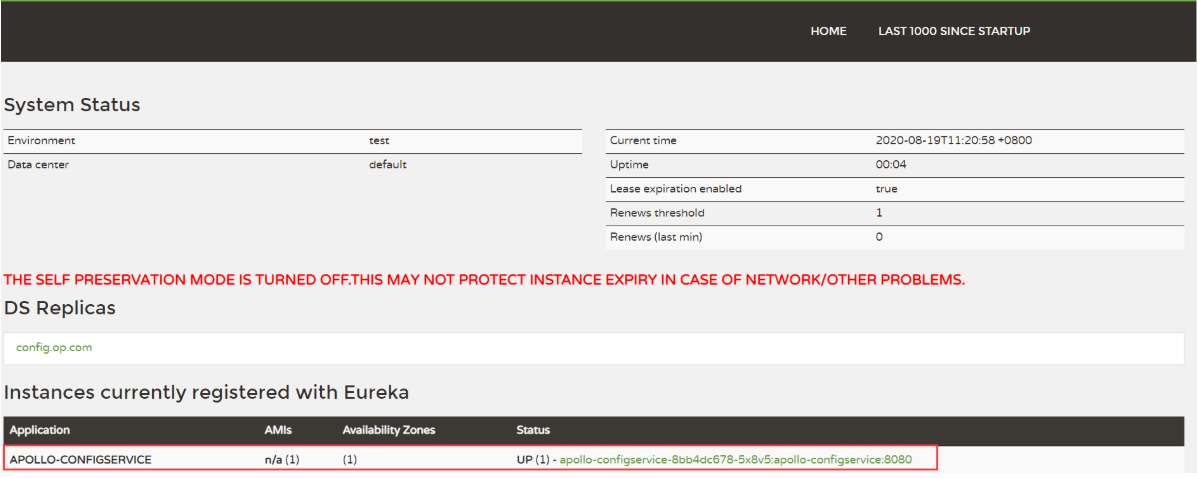

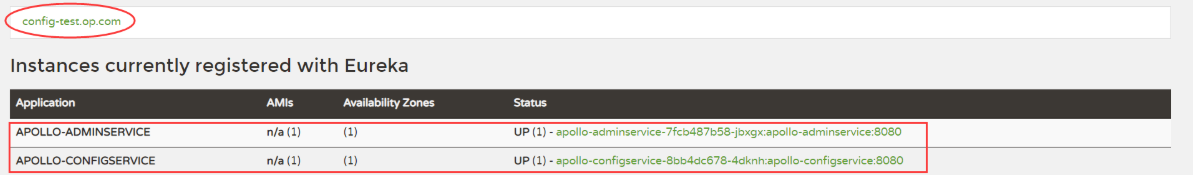

Open http://config.op.com in the browser.

Hover over the UP (1) row: the link address 172.26.22.7:8080/info appears at the bottom left of the browser (or right-click to copy it):

[root@vms21 ~]# curl 172.26.22.7:8080/info
{"git":{"commit":{"time":{"seconds":1597583113,"nanos":0},"id":"057489d"},"branch":"1.7.1"}}
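The `/info` endpoint returns a small JSON document, so the running build can be checked from a script. A sketch using only `sed`, so no JSON tool is assumed on the node; it relies on the compact single-line JSON shown above and is not a general JSON parser (with `jq` installed, `jq -r .git.commit.id` is the more robust choice). The function name is hypothetical:

```sh
#!/usr/bin/env bash
# Extract git.commit.id from the JSON returned by Apollo's /info endpoint (stdin).
info_commit_id() {
  sed -n 's/.*"id":"\([^"]*\)".*/\1/p'
}

sample='{"git":{"commit":{"time":{"seconds":1597583113,"nanos":0},"id":"057489d"},"branch":"1.7.1"}}'
printf '%s\n' "$sample" | info_commit_id
```

On a node: `curl -s 172.26.22.7:8080/info | info_commit_id`.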

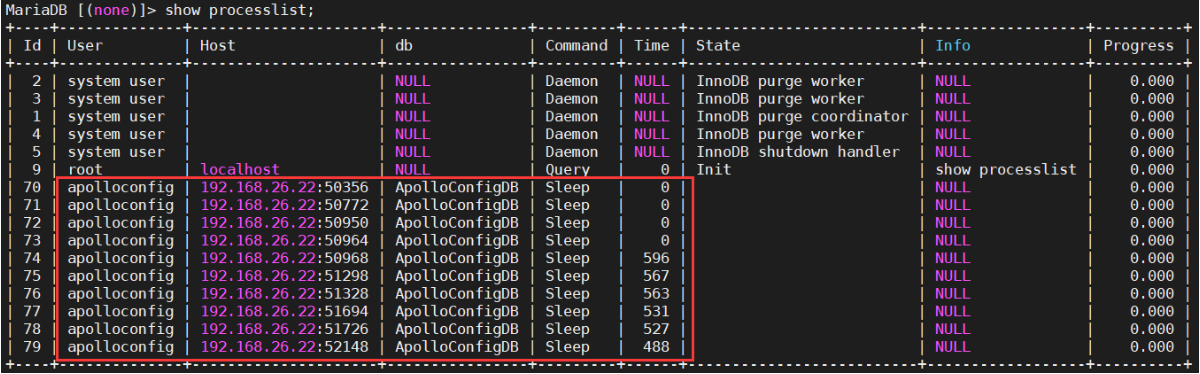

Check in the database with MariaDB [(none)]> show processlist;. The connections come from the node IPs, not the pod IPs, because traffic leaving the cluster is SNATed.

Check the grants: they must cover the addresses shown in the Host column above, otherwise the services cannot connect to the database.

MariaDB [(none)]> show grants for 'apolloconfig'@'192.168.26.%';
+------------------------------------------------------------------------------------------------------------------------+
| Grants for apolloconfig@192.168.26.% |
+------------------------------------------------------------------------------------------------------------------------+
| GRANT USAGE ON *.* TO 'apolloconfig'@'192.168.26.%' IDENTIFIED BY PASSWORD '*6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9' |
| GRANT SELECT, INSERT, UPDATE, DELETE ON `ApolloConfigDB`.* TO 'apolloconfig'@'192.168.26.%' |
+------------------------------------------------------------------------------------------------------------------------+
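MySQL/MariaDB compare the client address against the host part of an account, where `%` matches any run of characters and `_` exactly one. A sketch of that rule in bash, handy for checking that a node IP is covered by `'apolloconfig'@'192.168.26.%'` while a pod IP such as 172.26.22.7 is not. The node IP 192.168.26.21 is an assumed example, and the function is a simplification: the server also handles hostnames and netmask notation and picks the most specific matching account:

```sh
#!/usr/bin/env bash
# Check whether a client IP matches a MySQL/MariaDB account host pattern,
# where '%' matches any sequence of characters and '_' exactly one character.
# Simplified: ignores hostname lookups, netmask forms and account precedence.
host_matches() {
  local pattern="$1" ip="$2" glob
  # Translate the SQL wildcards into shell glob wildcards
  glob=$(printf '%s' "$pattern" | sed 's/%/*/g; s/_/?/g')
  # Unquoted right-hand side: [[ ]] treats it as a glob pattern
  [[ $ip == $glob ]]
}

for ip in 192.168.26.21 172.26.22.7; do
  if host_matches '192.168.26.%' "$ip"; then
    echo "$ip: covered by 'apolloconfig'@'192.168.26.%'"
  else
    echo "$ip: not covered"
  fi
done
```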

Delivering apollo-adminservice

Prepare the packages

On the ops host vms200, in /opt/src:

[root@vms200 src]# mkdir /data/dockerfile/apollo-adminservice
[root@vms200 src]# unzip -o apollo-adminservice-1.7.1-github.zip -d /data/dockerfile/apollo-adminservice
[root@vms200 src]# cd /data/dockerfile/apollo-adminservice
[root@vms200 apollo-adminservice]# ll
total 59680
-rwxr-xr-x 1 root root 61072345 Aug 16 21:09 apollo-adminservice-1.7.1.jar
-rwxr-xr-x 1 root root 29227 Aug 16 21:09 apollo-adminservice-1.7.1-sources.jar
-rw-r--r-- 1 root root 57 Feb 24 2019 apollo-adminservice.conf
drwxr-xr-x 2 root root 65 Feb 24 2019 config
drwxr-xr-x 2 root root 43 Aug 19 14:53 scripts

Build the Docker image

On the ops host vms200:

- Configure the database connection string

This is set in the ConfigMap, so nothing needs to change here.

- Update the startup script, in /data/dockerfile/apollo-adminservice/scripts:

[root@vms200 scripts]# rm -rf *.sh
[root@vms200 scripts]# wget https://raw.githubusercontent.com/ctripcorp/apollo/v1.7.1/scripts/apollo-on-kubernetes/apollo-admin-server/scripts/startup-kubernetes.sh
[root@vms200 scripts]# vi startup-kubernetes.sh

#!/bin/bash
SERVICE_NAME=apollo-adminservice
## Adjust log dir if necessary
LOG_DIR=/opt/logs/apollo-admin-server
## Adjust server port if necessary
SERVER_PORT=8080
APOLLO_ADMIN_SERVICE_NAME=$(hostname -i)
# SERVER_URL="http://localhost:${SERVER_PORT}"
SERVER_URL="http://${APOLLO_ADMIN_SERVICE_NAME}:${SERVER_PORT}"

## Adjust memory settings if necessary
#export JAVA_OPTS="-Xms2560m -Xmx2560m -Xss256k -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=384m -XX:NewSize=1536m -XX:MaxNewSize=1536m -XX:SurvivorRatio=8"

## Only uncomment the following when you are using server jvm
#export JAVA_OPTS="$JAVA_OPTS -server -XX:-ReduceInitialCardMarks"

########### The following is the same for configservice, adminservice, portal ###########
export JAVA_OPTS="$JAVA_OPTS -XX:ParallelGCThreads=4 -XX:MaxTenuringThreshold=9 -XX:+DisableExplicitGC -XX:+ScavengeBeforeFullGC -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+ExplicitGCInvokesConcurrent -XX:+HeapDumpOnOutOfMemoryError -XX:-OmitStackTraceInFastThrow -Duser.timezone=Asia/Shanghai -Dclient.encoding.override=UTF-8 -Dfile.encoding=UTF-8 -Djava.security.egd=file:/dev/./urandom"
export JAVA_OPTS="$JAVA_OPTS -Dserver.port=$SERVER_PORT -Dlogging.file=$LOG_DIR/$SERVICE_NAME.log -XX:HeapDumpPath=$LOG_DIR/HeapDumpOnOutOfMemoryError/"

# Find Java
if [[ -n "$JAVA_HOME" ]] && [[ -x "$JAVA_HOME/bin/java" ]]; then
    javaexe="$JAVA_HOME/bin/java"
elif type -p java > /dev/null 2>&1; then
    javaexe=$(type -p java)
elif [[ -x "/usr/bin/java" ]]; then
    javaexe="/usr/bin/java"
else
    echo "Unable to find Java"
    exit 1
fi

if [[ "$javaexe" ]]; then
    version=$("$javaexe" -version 2>&1 | awk -F '"' '/version/ {print $2}')
    version=$(echo "$version" | awk -F. '{printf("%03d%03d",$1,$2);}')
    # now version is of format 009003 (9.3.x)
    if [ $version -ge 011000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 010000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 009000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    else
        JAVA_OPTS="$JAVA_OPTS -XX:+UseParNewGC"
        JAVA_OPTS="$JAVA_OPTS -Xloggc:$LOG_DIR/gc.log -XX:+PrintGCDetails"
        JAVA_OPTS="$JAVA_OPTS -XX:+UseConcMarkSweepGC -XX:+UseCMSCompactAtFullCollection -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=60 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:CMSFullGCsBeforeCompaction=9 -XX:+CMSClassUnloadingEnabled -XX:+PrintGCDateStamps -XX:+PrintGCApplicationConcurrentTime -XX:+PrintHeapAtGC -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=5M"
    fi
fi

printf "$(date) ==== Starting ==== \n"

cd `dirname $0`/..
chmod 755 $SERVICE_NAME".jar"
./$SERVICE_NAME".jar" start

rc=$?;

if [[ $rc != 0 ]];
then
    echo "$(date) Failed to start $SERVICE_NAME.jar, return code: $rc"
    exit $rc;
fi

tail -f /dev/null

Changed lines:

SERVER_PORT=8080
APOLLO_ADMIN_SERVICE_NAME=$(hostname -i)

Set the permission:

[root@vms200 scripts]# chmod +x startup-kubernetes.sh

- Write the Dockerfile, /data/dockerfile/apollo-adminservice/Dockerfile:

FROM harbor.op.com/base/jre8:8u112

ENV VERSION 1.7.1

RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo "Asia/Shanghai" > /etc/timezone

ADD apollo-adminservice-${VERSION}.jar /apollo-adminservice/apollo-adminservice.jar
ADD config/ /apollo-adminservice/config
ADD scripts/ /apollo-adminservice/scripts

CMD ["/apollo-adminservice/scripts/startup-kubernetes.sh"]

- Build and push the image, in /data/dockerfile/apollo-adminservice:

[root@vms200 apollo-adminservice]# docker build . -t harbor.op.com/infra/apollo-adminservice:v1.7.1
Sending build context to Docker daemon 61.08MB
Step 1/7 : FROM harbor.op.com/base/jre8:8u112
---> 9fa5bdd784cb
Step 2/7 : ENV VERSION 1.7.1
---> Using cache
---> 86bc848b0b16
Step 3/7 : RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone
---> Using cache
---> 7c0aed62f2ce
Step 4/7 : ADD apollo-adminservice-${VERSION}.jar /apollo-adminservice/apollo-adminservice.jar
---> 8465b91a827c
Step 5/7 : ADD config/ /apollo-adminservice/config
---> dca3a4f2c3aa
Step 6/7 : ADD scripts/ /apollo-adminservice/scripts
---> a7aac3a49afd
Step 7/7 : CMD ["/apollo-adminservice/scripts/startup-kubernetes.sh"]
---> Running in 5169389a0bba
Removing intermediate container 5169389a0bba
---> fa5cf359cbb9
Successfully built fa5cf359cbb9
Successfully tagged harbor.op.com/infra/apollo-adminservice:v1.7.1
[root@vms200 apollo-adminservice]# docker push harbor.op.com/infra/apollo-adminservice:v1.7.1
...

Tip: when typing docker push, pressing ESC followed by . inserts the last argument of the previous command.

Prepare the resource manifests

On the ops host vms200, in /data/k8s-yaml:

[root@vms200 k8s-yaml]# mkdir apollo-adminservice
[root@vms200 k8s-yaml]# cd apollo-adminservice

vi deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: apollo-adminservice
  namespace: infra
  labels:
    name: apollo-adminservice
spec:
  replicas: 1
  selector:
    matchLabels:
      name: apollo-adminservice
  template:
    metadata:
      labels:
        app: apollo-adminservice
        name: apollo-adminservice
    spec:
      volumes:
      - name: configmap-volume
        configMap:
          name: apollo-adminservice-cm
      containers:
      - name: apollo-adminservice
        image: harbor.op.com/infra/apollo-adminservice:v1.7.1
        ports:
        - containerPort: 8080
          protocol: TCP
        volumeMounts:
        - name: configmap-volume
          mountPath: /apollo-adminservice/config
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

vi configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-adminservice-cm
  namespace: infra
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config.op.com/eureka
  app.properties: |
    appId=100003172

Apply the resource manifests

On any k8s compute node (vms21 or vms22), run:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-adminservice/configmap.yaml
configmap/apollo-adminservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-adminservice/deployment.yaml
deployment.apps/apollo-adminservice created

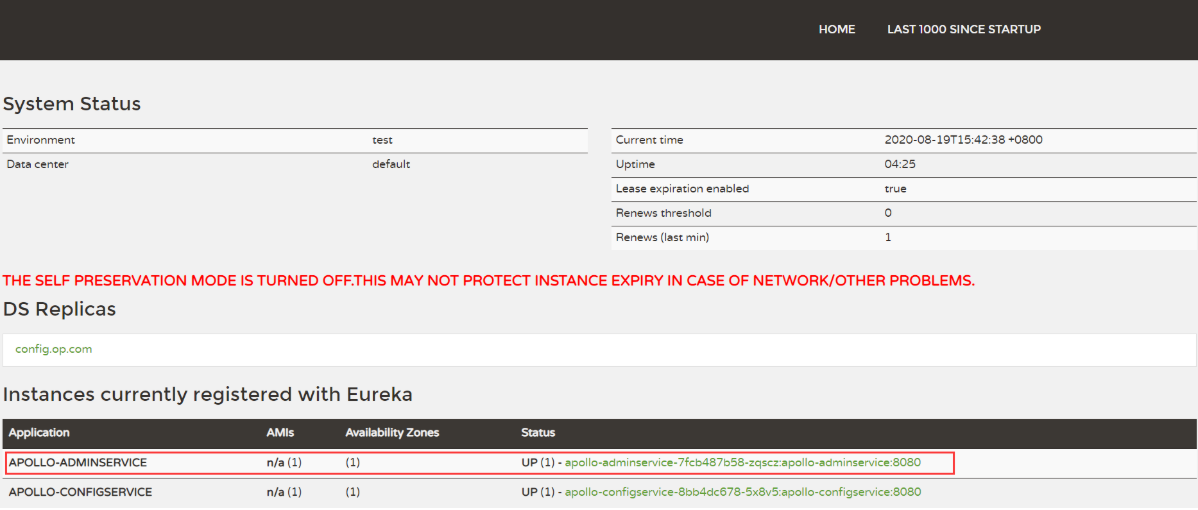

Open or refresh http://config.op.com in the browser.

Hover over the UP (1) row: the link address 172.26.22.8:8080/info appears at the bottom left of the browser (or right-click to copy it):

[root@vms21 ~]# curl http://172.26.22.8:8080/info
{"git":{"commit":{"time":{"seconds":1597583113,"nanos":0},"id":"057489d"},"branch":"1.7.1"}}

Delivering apollo-portal

Prepare the packages

On the ops host vms200, in /opt/src:

[root@vms200 src]# mkdir /data/dockerfile/apollo-portal
[root@vms200 src]# unzip -o apollo-portal-1.7.1-github.zip -d /data/dockerfile/apollo-portal
[root@vms200 src]# cd /data/dockerfile/apollo-portal
[root@vms200 apollo-portal]# ll
total 45240
-rwxr-xr-x 1 root root 45097924 Aug 16 21:09 apollo-portal-1.7.1.jar
-rwxr-xr-x 1 root root 1218394 Aug 16 21:09 apollo-portal-1.7.1-sources.jar
-rw-r--r-- 1 root root 57 Feb 24 2019 apollo-portal.conf
drwxr-xr-x 2 root root 94 Aug 16 21:09 config
drwxr-xr-x 2 root root 43 May 4 13:19 scripts
[root@vms200 apollo-portal]# cd scripts/
[root@vms200 scripts]# rm -rf *.sh
[root@vms200 scripts]# wget https://raw.githubusercontent.com/ctripcorp/apollo/v1.7.1/scripts/apollo-on-kubernetes/apollo-portal-server/scripts/startup-kubernetes.sh
[root@vms200 scripts]# chmod +x startup-kubernetes.sh

Run the database script

On the database host vms11:

- Download the database script:

[root@vms11 mysql]# wget https://raw.githubusercontent.com/ctripcorp/apollo/v1.7.1/scripts/apollo-on-kubernetes/db/portal-db/apolloportaldb.sql

- Run the database script:

[root@vms11 mysql]# mysql -uroot -p
MariaDB [(none)]> source ./apolloportaldb.sql
...
MariaDB [ApolloPortalDB]> show databases;
+--------------------+
| Database           |
+--------------------+
| ApolloConfigDB     |
| ApolloPortalDB     |
| information_schema |
| mysql              |
| performance_schema |
+--------------------+
MariaDB [ApolloPortalDB]> use ApolloPortalDB;
Database changed
MariaDB [ApolloPortalDB]> show tables;
+--------------------------+
| Tables_in_ApolloPortalDB |
+--------------------------+
| App                      |
| AppNamespace             |
| Authorities              |
| Consumer                 |
| ConsumerAudit            |
| ConsumerRole             |
| ConsumerToken            |
| Favorite                 |
| Permission               |
| Role                     |
| RolePermission           |
| ServerConfig             |
| UserRole                 |
| Users                    |
+--------------------------+

MariaDB [ApolloPortalDB]> select * from ServerConfig\G
*************************** 1. row ***************************
Id: 1
Key: apollo.portal.envs
Value: dev, fat, uat, pro
Comment: 可支持的环境列表
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 2. row ***************************
Id: 2
Key: organizations
Value: [{"orgId":"TEST1","orgName":"样例部门1"},{"orgId":"TEST2","orgName":"样例部门2"}]
Comment: 部门列表
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 3. row ***************************
Id: 3
Key: superAdmin
Value: apollo
Comment: Portal超级管理员
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 4. row ***************************
Id: 4
Key: api.readTimeout
Value: 10000
Comment: http接口read timeout
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 5. row ***************************
Id: 5
Key: consumer.token.salt
Value: someSalt
Comment: consumer token salt
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 6. row ***************************
Id: 6
Key: admin.createPrivateNamespace.switch
Value: true
Comment: 是否允许项目管理员创建私有namespace
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 7. row ***************************
Id: 7
Key: configView.memberOnly.envs
Value: pro
Comment: 只对项目成员显示配置信息的环境列表,多个env以英文逗号分隔
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
*************************** 8. row ***************************
Id: 8
Key: apollo.portal.meta.servers
Value: {}
Comment: 各环境Meta Service列表
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43

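Update the department (organization) list. The orgName values used here, 研发部, 运维部 and 测试部, mean R&D, Operations and QA: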

MariaDB [ApolloPortalDB]> update ApolloPortalDB.ServerConfig set Value='[{"orgId":"op01","orgName":"研发部"},{"orgId":"op02","orgName":"运维部"},{"orgId":"op03","orgName":"测试部"}]' where Id=2;
Query OK, 1 row affected (0.002 sec)
Rows matched: 1  Changed: 1  Warnings: 0

MariaDB [ApolloPortalDB]> select * from ServerConfig where Id=2\G
*************************** 1. row ***************************
Id: 2
Key: organizations
Value: [{"orgId":"op01","orgName":"研发部"},{"orgId":"op02","orgName":"运维部"},{"orgId":"op03","orgName":"测试部"}]
Comment: 部门列表

Grant database privileges

MariaDB [ApolloPortalDB]> grant INSERT,DELETE,UPDATE,SELECT on ApolloPortalDB.* to "apolloportal"@"192.168.26.%" identified by "123456";
Query OK, 0 rows affected (0.045 sec)

MariaDB [ApolloPortalDB]> select user,host from mysql.user;
+--------------+---------------+
| user         | host          |
+--------------+---------------+
| root         | 127.0.0.1     |
| apolloconfig | 192.168.26.%  |
| apolloportal | 192.168.26.%  |
| root         | ::1           |
| root         | localhost     |
| root         | vms11.cos.com |
+--------------+---------------+

Build the Docker image

On the ops host vms200, in /data/dockerfile/apollo-portal:

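- Configure the database connection string

  This is set in the ConfigMap, so nothing needs to change here.

- Configure the Portal's meta service

  This is also set in the ConfigMap, so nothing needs to change here.

- Update the startup script: /data/dockerfile/apollo-portal/scripts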

[root@vms200 apollo-portal]# cd scripts/
[root@vms200 scripts]# rm -rf *.sh
[root@vms200 scripts]# wget https://raw.githubusercontent.com/ctripcorp/apollo/v1.7.1/scripts/apollo-on-kubernetes/apollo-portal-server/scripts/startup-kubernetes.sh
[root@vms200 scripts]# vi startup-kubernetes.sh

#!/bin/bash
SERVICE_NAME=apollo-portal
## Adjust log dir if necessary
LOG_DIR=/opt/logs/apollo-portal-server
## Adjust server port if necessary
SERVER_PORT=8080
APOLLO_PORTAL_SERVICE_NAME=$(hostname -i)

# SERVER_URL="http://localhost:$SERVER_PORT"
SERVER_URL="http://${APOLLO_PORTAL_SERVICE_NAME}:${SERVER_PORT}"

## Adjust memory settings if necessary
#export JAVA_OPTS="-Xms2560m -Xmx2560m -Xss256k -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=384m -XX:NewSize=1536m -XX:MaxNewSize=1536m -XX:SurvivorRatio=8"

## Only uncomment the following when you are using server jvm
#export JAVA_OPTS="$JAVA_OPTS -server -XX:-ReduceInitialCardMarks"

########### The following is the same for configservice, adminservice, portal ###########
export JAVA_OPTS="$JAVA_OPTS -XX:ParallelGCThreads=4 -XX:MaxTenuringThreshold=9 -XX:+DisableExplicitGC -XX:+ScavengeBeforeFullGC -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+ExplicitGCInvokesConcurrent -XX:+HeapDumpOnOutOfMemoryError -XX:-OmitStackTraceInFastThrow -Duser.timezone=Asia/Shanghai -Dclient.encoding.override=UTF-8 -Dfile.encoding=UTF-8 -Djava.security.egd=file:/dev/./urandom"
export JAVA_OPTS="$JAVA_OPTS -Dserver.port=$SERVER_PORT -Dlogging.file=$LOG_DIR/$SERVICE_NAME.log -XX:HeapDumpPath=$LOG_DIR/HeapDumpOnOutOfMemoryError/"

# Find Java
if [[ -n "$JAVA_HOME" ]] && [[ -x "$JAVA_HOME/bin/java" ]]; then
    javaexe="$JAVA_HOME/bin/java"
elif type -p java > /dev/null 2>&1; then
    javaexe=$(type -p java)
elif [[ -x "/usr/bin/java" ]]; then
    javaexe="/usr/bin/java"
else
    echo "Unable to find Java"
    exit 1
fi

if [[ "$javaexe" ]]; then
    version=$("$javaexe" -version 2>&1 | awk -F '"' '/version/ {print $2}')
    version=$(echo "$version" | awk -F. '{printf("%03d%03d",$1,$2);}')
    # now version is of format 009003 (9.3.x)
    if [ $version -ge 011000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 010000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    elif [ $version -ge 009000 ]; then
        JAVA_OPTS="$JAVA_OPTS -Xlog:gc*:$LOG_DIR/gc.log:time,level,tags -Xlog:safepoint -Xlog:gc+heap=trace"
    else
        JAVA_OPTS="$JAVA_OPTS -XX:+UseParNewGC"
        JAVA_OPTS="$JAVA_OPTS -Xloggc:$LOG_DIR/gc.log -XX:+PrintGCDetails"
        JAVA_OPTS="$JAVA_OPTS -XX:+UseConcMarkSweepGC -XX:+UseCMSCompactAtFullCollection -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=60 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:CMSFullGCsBeforeCompaction=9 -XX:+CMSClassUnloadingEnabled -XX:+PrintGCDateStamps -XX:+PrintGCApplicationConcurrentTime -XX:+PrintHeapAtGC -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=5M"
    fi
fi

printf "$(date) ==== Starting ==== \n"

cd `dirname $0`/..
chmod 755 $SERVICE_NAME".jar"
./$SERVICE_NAME".jar" start

rc=$?;
if [[ $rc != 0 ]];
then
    echo "$(date) Failed to start $SERVICE_NAME.jar, return code: $rc"
    exit $rc;
fi

tail -f /dev/null

Changed lines:

SERVER_PORT=8080
APOLLO_PORTAL_SERVICE_NAME=$(hostname -i)
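The version check in the script turns the quoted version string printed by `java -version` into a six-digit code: the major and minor numbers, each zero-padded to three digits. It then uses that code to choose the GC flags. The sketch below reproduces only that parsing step, using the same awk expression, so you can see which branch a given JVM takes. `java_version_code` and `gc_branch` are hypothetical helper names and are not part of the Apollo script. The example assumes the jre8:8u112 base image reports its version as `1.8.0_112`.

```shell
#!/usr/bin/env bash
# Sketch: reproduce the version parsing done by startup-kubernetes.sh.
# java_version_code and gc_branch are hypothetical helpers for illustration.

# "1.8.0_112" -> "001008", "11.0.2" -> "011000" (same awk as the script).
java_version_code() {
  echo "$1" | awk -F. '{printf("%03d%03d",$1,$2);}'
}

# Report which GC-flag branch the script would take for a version code.
# The [ ... -ge ... ] test compares the zero-padded strings as decimal integers.
gc_branch() {
  local version="$1"
  if [ "$version" -ge 011000 ]; then
    echo "unified-logging (Java 11+)"
  elif [ "$version" -ge 010000 ]; then
    echo "unified-logging (Java 10)"
  elif [ "$version" -ge 009000 ]; then
    echo "unified-logging (Java 9)"
  else
    echo "cms-parnew (Java 8 and older)"
  fi
}

code=$(java_version_code "1.8.0_112")
echo "$code -> $(gc_branch "$code")"
```

Under that assumption the code is `001008`. The portal container therefore falls through to the final `else` branch, which uses ParNew/CMS with `-Xloggc`. A Java 9+ image would get the `-Xlog` unified-logging flags instead.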

Make the script executable:

[root@vms200 scripts]# chmod +x startup-kubernetes.sh

- Write the Dockerfile: /data/dockerfile/apollo-portal/Dockerfile

FROM harbor.op.com/base/jre8:8u112

ENV VERSION 1.7.1

RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo "Asia/Shanghai" > /etc/timezone

ADD apollo-portal-${VERSION}.jar /apollo-portal/apollo-portal.jar
ADD config/ /apollo-portal/config
ADD scripts/ /apollo-portal/scripts

CMD ["/apollo-portal/scripts/startup-kubernetes.sh"]

- Build and push the image: /data/dockerfile/apollo-portal

[root@vms200 apollo-portal]# vi /data/dockerfile/apollo-portal/Dockerfile
[root@vms200 apollo-portal]# docker build . -t harbor.op.com/infra/apollo-portal:v1.7.1
Sending build context to Docker daemon 45.11MB
Step 1/7 : FROM harbor.op.com/base/jre8:8u112
---> 9fa5bdd784cb
Step 2/7 : ENV VERSION 1.7.1
---> Using cache
---> 86bc848b0b16
Step 3/7 : RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone
---> Using cache
---> 7c0aed62f2ce
Step 4/7 : ADD apollo-portal-${VERSION}.jar /apollo-portal/apollo-portal.jar
---> 1e48cc895e47
Step 5/7 : ADD config/ /apollo-portal/config
---> aaa30a645f4a
Step 6/7 : ADD scripts/ /apollo-portal/scripts
---> 97531215c1b8
Step 7/7 : CMD ["/apollo-portal/scripts/startup-kubernetes.sh"]
---> Running in 7cc838701f8f
Removing intermediate container 7cc838701f8f
---> d58ed8029507
Successfully built d58ed8029507
Successfully tagged harbor.op.com/infra/apollo-portal:v1.7.1
[root@vms200 apollo-portal]# docker push harbor.op.com/infra/apollo-portal:v1.7.1
The push refers to repository [harbor.op.com/infra/apollo-portal]
...

Add the DNS record

On the DNS host vms11:

# vi /var/named/op.com.zone

portal A 192.168.26.10

Remember to increment (roll forward) the zone's serial number.

# systemctl restart named
# dig -t A portal.op.com @192.168.26.11 +short
192.168.26.10
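Raising the serial by hand is easy to forget. The sketch below bumps it in place. It assumes the zone file keeps the SOA serial on its own line followed by a `; serial` comment, which is the usual layout for BIND zone files. `bump_serial` is a hypothetical helper, not a BIND tool.

```shell
#!/usr/bin/env bash
# Sketch: increment the SOA serial of a BIND zone file before restarting named.
# Assumption: the serial sits alone on a line tagged "; serial", for example
#     2020082201 ; serial
# bump_serial is a hypothetical helper name.
bump_serial() {
  local zone="$1" tmp
  tmp=$(mktemp) || return 1
  # Add 1 to the first field of the first line carrying "; serial",
  # then re-attach the line's original leading whitespace.
  awk '/;[ \t]*serial/ && !done {
         match($0, /^[ \t]*/)
         indent = substr($0, 1, RLENGTH)
         $1 = $1 + 1
         $0 = indent $0
         done = 1
       }
       { print }' "$zone" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the zone file keeps its owner, group and mode.
  cat "$tmp" > "$zone"
  rm -f "$tmp"
}
```

For example, run `bump_serial /var/named/op.com.zone`, check the file with `named-checkzone op.com /var/named/op.com.zone`, and then restart named.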

Prepare the resource manifests

On the ops host vms200, in /data/k8s-yaml:

[root@vms200 k8s-yaml]# mkdir apollo-portal
[root@vms200 k8s-yaml]# cd apollo-portal
[root@vms200 apollo-portal]#

vi deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: apollo-portal
  namespace: infra
  labels:
    name: apollo-portal
spec:
  replicas: 1
  selector:
    matchLabels:
      name: apollo-portal
  template:
    metadata:
      labels:
        app: apollo-portal
        name: apollo-portal
    spec:
      volumes:
      - name: configmap-volume
        configMap:
          name: apollo-portal-cm
      containers:
      - name: apollo-portal
        image: harbor.op.com/infra/apollo-portal:v1.7.1
        ports:
        - containerPort: 8080
          protocol: TCP
        volumeMounts:
        - name: configmap-volume
          mountPath: /apollo-portal/config
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: apollo-portal
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: apollo-portal

vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: apollo-portal
  namespace: infra
spec:
  rules:
  - host: portal.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: apollo-portal
          servicePort: 8080

vi configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-portal-cm
  namespace: infra
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloPortalDB?characterEncoding=utf8
    spring.datasource.username = apolloportal
    spring.datasource.password = 123456
  app.properties: |
    appId=100003173
  apollo-env.properties: |
    dev.meta=http://config.op.com

Apply the resource manifests

Run on any k8s compute node (vms21 or vms22):

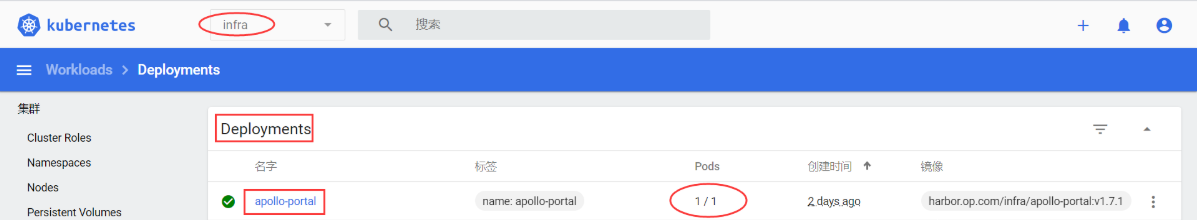

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-portal/configmap.yaml
configmap/apollo-portal-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-portal/deployment.yaml
deployment.apps/apollo-portal created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-portal/svc.yaml
service/apollo-portal created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-portal/ingress.yaml
ingress.extensions/apollo-portal created

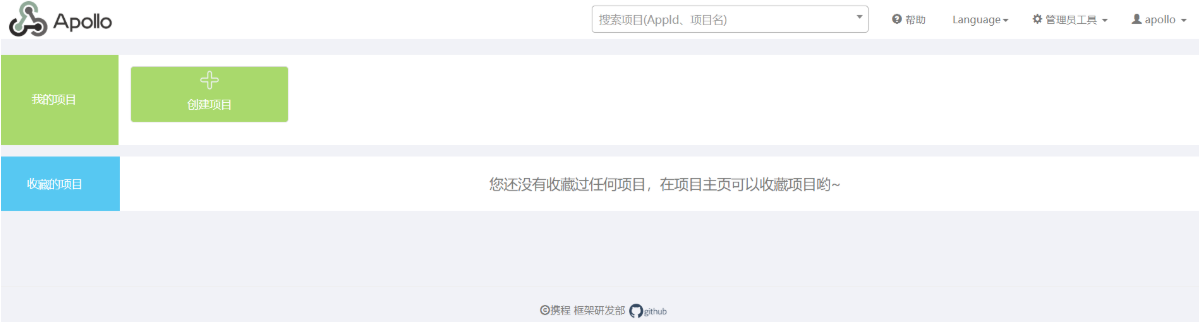

Open http://portal.op.com in a browser:

- Default username: apollo, password: admin

- Log in.

- Choose Admin Tools -> User Management (管理员工具 -> 用户管理), change the user's password, then log out and log back in.

- Choose Admin Tools -> System Info (管理员工具 -> 系统信息) to view the system information:

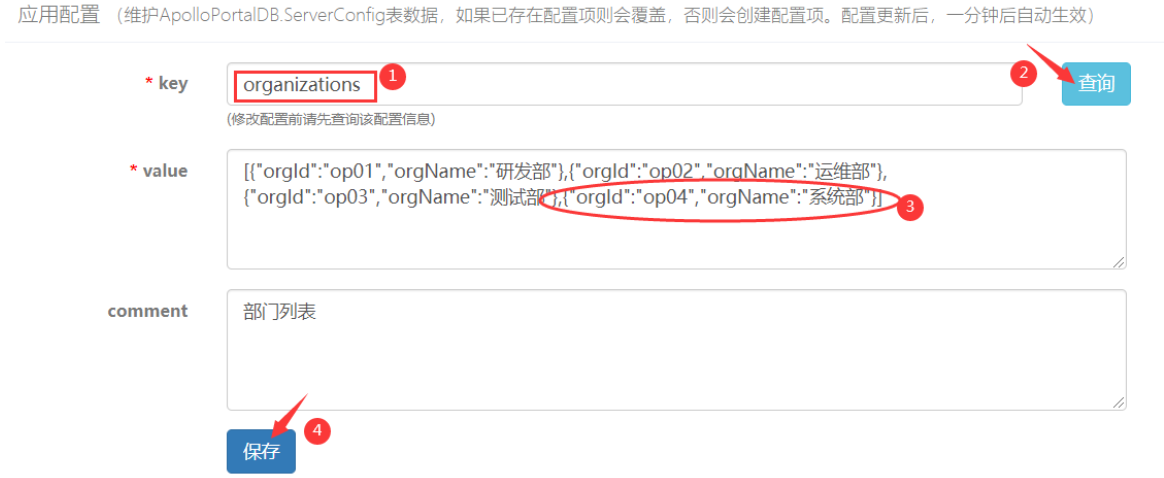

- Choose Admin Tools -> System Parameters (管理员工具 -> 系统参数), enter the key organizations and query it. You can add departments to its value.

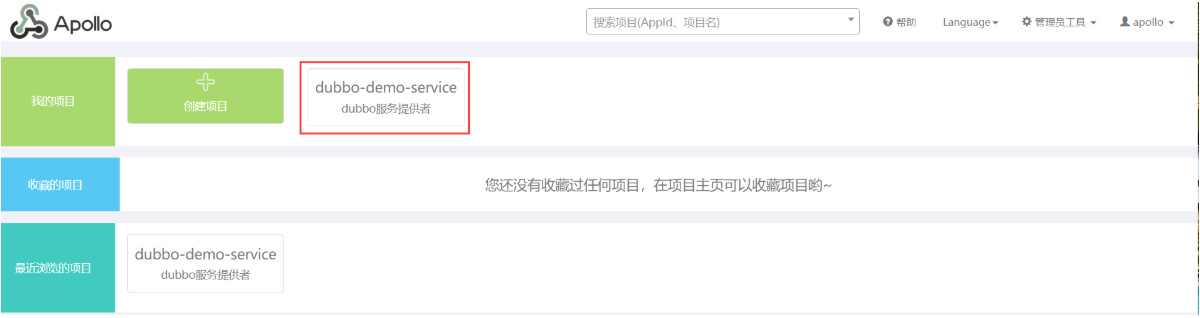

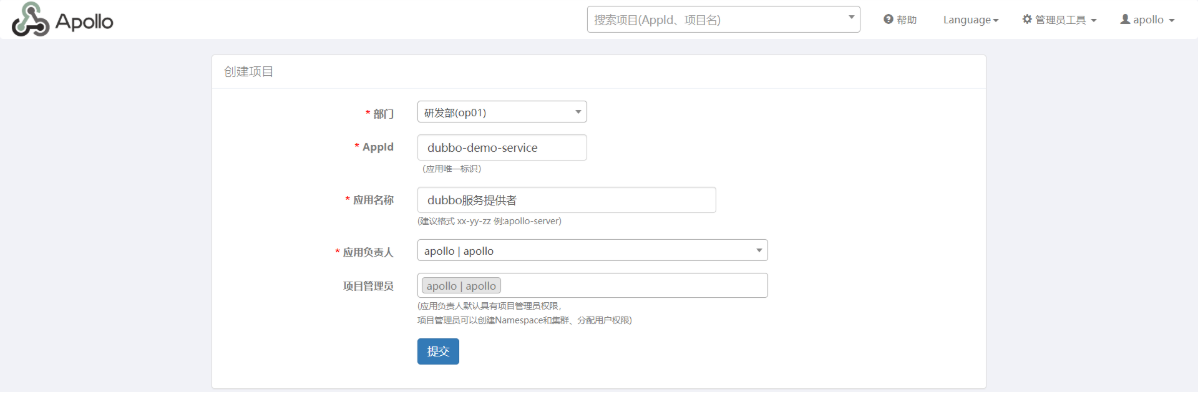

- Click the logo to return to the home page, then create a project:

app.id=dubbo-demo-service, taken from the project file dubbo-demo-service/dubbo-server/src/main/resources/META-INF/app.properties

After you submit, the home page looks like this:

Hands-on: connecting the dubbo microservices to the Apollo config center

Modify the dubbo-demo-service project

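This change lets the service connect to the Apollo config center and pull its configuration from there. The source in the repository has already been modified, so for this lab you can use it as-is without further changes.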

Clone the project with your IDE (git bash is used as the example here):

$ git clone git@gitee.com:stanleywang/dubbo-demo-service.git

Create and switch to the apollo branch

$ git checkout -b apollo

Modify pom.xml

- Add the apollo-client dependency: dubbo-server/pom.xml

<dependency>
  <groupId>com.ctrip.framework.apollo</groupId>
  <artifactId>apollo-client</artifactId>
  <version>1.1.0</version>
</dependency>

- Modify the resource section: dubbo-server/pom.xml

<resource>
  <directory>src/main/resources</directory>
  <includes>
    <include>**/*</include>
  </includes>
  <filtering>false</filtering>
</resource>

Add the resources directory:

/d/workspace/dubbo-demo-service/dubbo-server/src/main

$ mkdir -pv resources/META-INF
mkdir: created directory 'resources'
mkdir: created directory 'resources/META-INF'

Modify config.properties

/d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/config.properties

dubbo.registry=${dubbo.registry}
dubbo.port=${dubbo.port}

Modify spring-config.xml

- Add an attribute to the beans element: /d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/spring-config.xml

xmlns:apollo="http://www.ctrip.com/schema/apollo"

- Add to the xsi:schemaLocation attribute: /d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/spring-config.xml

http://www.ctrip.com/schema/apollo http://www.ctrip.com/schema/apollo.xsd

- Add a configuration element: /d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/spring-config.xml

<apollo:config/>

- Remove (comment out) this configuration element: /d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/spring-config.xml

<!-- <context:property-placeholder location="classpath:config.properties"/> -->

Add app.properties:

/d/workspace/dubbo-demo-service/dubbo-server/src/main/resources/META-INF/app.properties

app.id=dubbo-demo-service

Push to the central Git repository (gitee)

$ git push origin apollo

Configure apollo-portal

Create the project

- Department: 研发部 (R&D)

- AppId: dubbo-demo-service (from the project file dubbo-demo-service/dubbo-server/src/main/resources/META-INF/app.properties)

- App name: dubbo service provider

- App owner: apollo|apollo

- Project admins: apollo|apollo

Open the configuration page

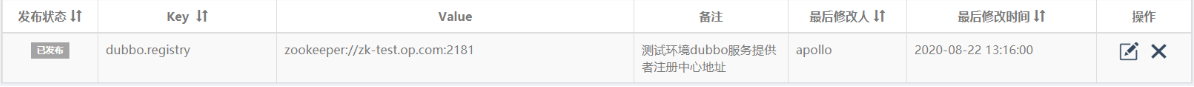

Add the following configuration to the new project:

| Key | Value | Comment | Cluster |
|---|---|---|---|
| dubbo.registry | zookeeper://zk1.op.com:2181 | Registry address of the dubbo service | DEV |
| dubbo.port | 20880 | Listening port of the dubbo service provider | DEV |

Publish the configuration

Run CI with Jenkins

On the dubbo-demo project, click the drop-down button, choose Build with Parameters, and fill in the 10 build parameters:

- Reuse the existing pipeline, but build from the code on the apollo branch:

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-service |
| image_name | app/dubbo-demo-service |
| git_repo | https://gitee.com/cloudlove2007/dubbo-demo-service.git |
| git_ver | apollo |
| add_tag | 200820_1500 |
| mvn_dir | ./ |
| target_dir | ./dubbo-server/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/jre8:8u112 |
| maven | 3.6.3-8u261 |

If the page shows Bad Gateway during the build, the build has not failed. Just refresh the page.
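The image tag used in the deployment manifests (`apollo_200820_1500`) is the `git_ver` and `add_tag` parameters joined by an underscore. The small sketch below derives the full image reference from the build parameters. It assumes the pipeline pushes to `harbor.op.com/<image_name>:<git_ver>_<add_tag>`; that pattern is inferred from the image names in this section, not from the pipeline definition. `image_ref` and the `REGISTRY` override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the image reference from the Jenkins build parameters.
# Assumed tag pattern: <registry>/<image_name>:<git_ver>_<add_tag>
# image_ref and REGISTRY are hypothetical names.
image_ref() {
  local image_name="$1" git_ver="$2" add_tag="$3"
  local registry="${REGISTRY:-harbor.op.com}"
  echo "${registry}/${image_name}:${git_ver}_${add_tag}"
}

image_ref app/dubbo-demo-service apollo 200820_1500
image_ref app/dubbo-demo-consumer apollo 200821_1101
```

The result is the value to put in `image:` when you copy deployment.yaml to deployment-apollo.yaml.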

Deploy the newly built project

Prepare the resource manifests

On the ops host vms200, in /data/k8s-yaml/dubbo-demo-service:

[root@vms200 dubbo-demo-service]# cp deployment.yaml deployment-apollo.yaml
[root@vms200 dubbo-demo-service]# vi deployment-apollo.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.op.com/app/dubbo-demo-service:apollo_200820_1500
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        - name: C_OPTS
          value: -Denv=dev -Dapollo.meta=http://config.op.com
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

Note: the env section now includes this entry:

- name: C_OPTS
  value: -Denv=dev -Dapollo.meta=http://config.op.com
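`-Denv` tells the Apollo client which environment to read, and `-Dapollo.meta` points it at that environment's meta service. The sketch below keeps the environment-to-meta-server mapping in one place. It assumes the three environments used in this document: dev now, plus fat and pro in the multi-environment section later. It also assumes the base image's entrypoint appends `C_OPTS` to the `java` command line. `apollo_c_opts` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: produce the C_OPTS value for an Apollo environment.
# The env -> meta server mapping mirrors this lab's apollo-env.properties;
# apollo_c_opts is a hypothetical helper name.
apollo_c_opts() {
  local env="$1" meta
  case "$env" in
    dev) meta="http://config.op.com" ;;
    fat) meta="http://config-test.op.com" ;;
    pro) meta="http://config-prod.op.com" ;;
    *)   echo "unknown Apollo env: $env" >&2; return 1 ;;
  esac
  echo "-Denv=${env} -Dapollo.meta=${meta}"
}

apollo_c_opts dev
```

For example, `apollo_c_opts fat` prints `-Denv=fat -Dapollo.meta=http://config-test.op.com`, which is the value used later for the test namespace.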

Apply the resource manifests

Run on any k8s compute node (vms21 or vms22):

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-demo-service/deployment-apollo.yaml
deployment.apps/dubbo-demo-service configured

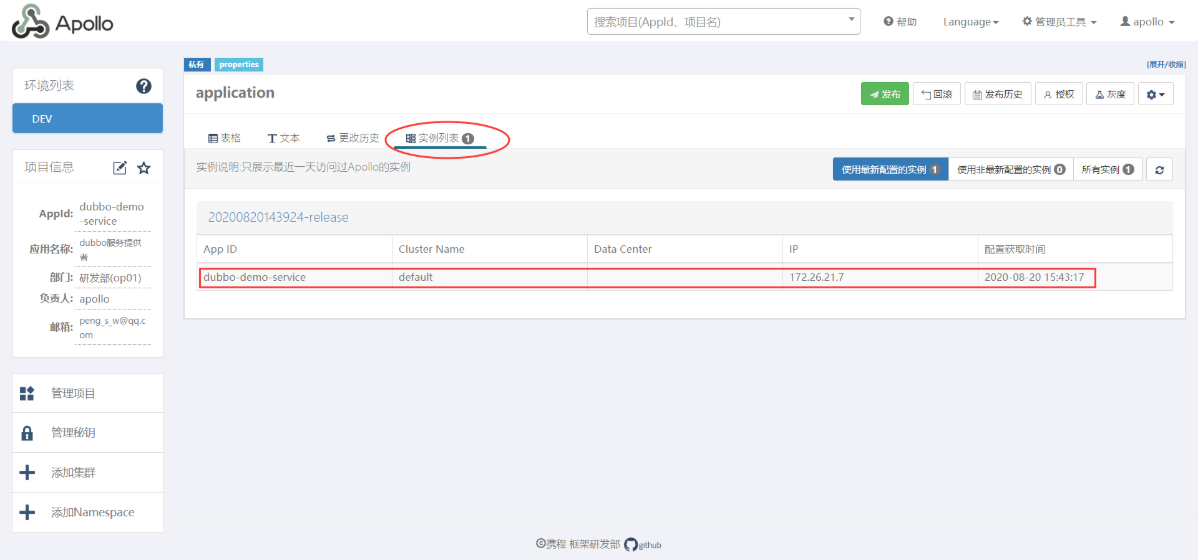

Check how the project is running

- Check the instance list in Apollo

Modify dubbo-demo-web

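This change lets the consumer connect to the Apollo config center and pull its configuration from there. The source in the repository has already been modified, so for this lab you can use it as-is without further changes.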

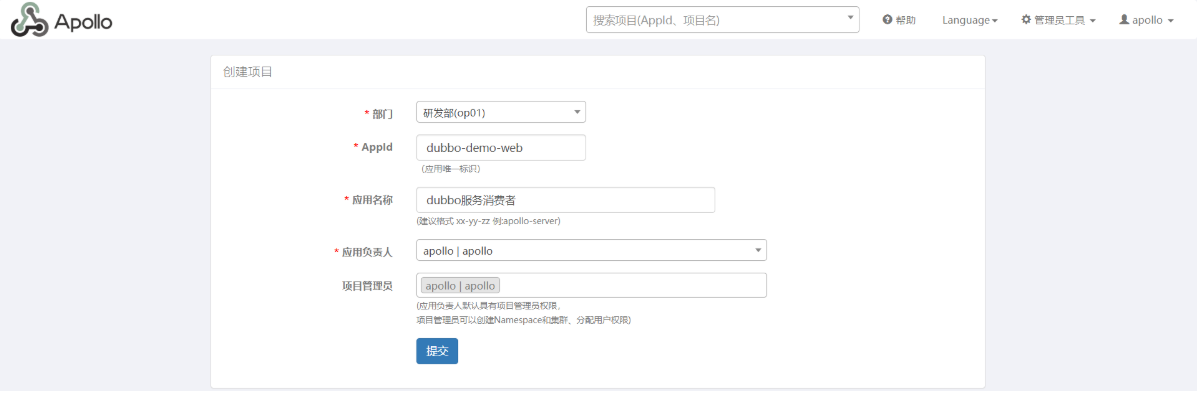

Configure apollo-portal

Create the project

- Department: 研发部 (R&D)

- AppId:dubbo-demo-web

- App name: dubbo service consumer

- App owner: apollo|apollo

- Project admins: apollo|apollo

The AppId comes from the project file dubbo-demo-web/dubbo-client/src/main/resources/META-INF/app.properties

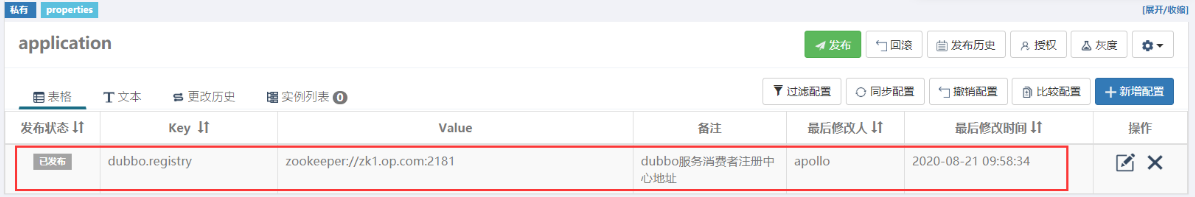

Open the configuration page

Add configuration item 1

- Key:dubbo.registry

- Value:zookeeper://zk1.op.com:2181

- Comment: Registry address for the dubbo service consumer

- Cluster: DEV

Publish the configuration

Run CI with Jenkins

On the dubbo-demo project, click the drop-down button, choose Build with Parameters, and fill in the 10 build parameters:

- Reuse the existing pipeline, but build from the code on the apollo branch:

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-consumer |
| image_name | app/dubbo-demo-consumer |
| git_repo | git@gitee.com:cloudlove2007/dubbo-demo-web.git |
| git_ver | apollo |
| add_tag | 200821_1101 |
| mvn_dir | ./ |
| target_dir | ./dubbo-client/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/jre8:8u112 |
| maven | 3.6.3-8u261 |

Deploy the newly built project

Prepare the resource manifests

On the ops host vms200:

[root@vms200 ~]# cd /data/k8s-yaml/dubbo-consumer/
[root@vms200 dubbo-consumer]# cp deployment.yaml deployment-apollo.yaml
[root@vms200 dubbo-consumer]# vi deployment-apollo.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.op.com/app/dubbo-demo-consumer:apollo_200821_1101
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        - name: C_OPTS
          value: -Denv=dev -Dapollo.meta=http://config.op.com
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

Note: the env section now includes this entry:

- name: C_OPTS
  value: -Denv=dev -Dapollo.meta=http://config.op.com

Apply the resource manifests

Run on any k8s compute node (vms21 or vms22):

[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/dubbo-consumer/deployment-apollo.yaml
deployment.apps/dubbo-demo-consumer configured

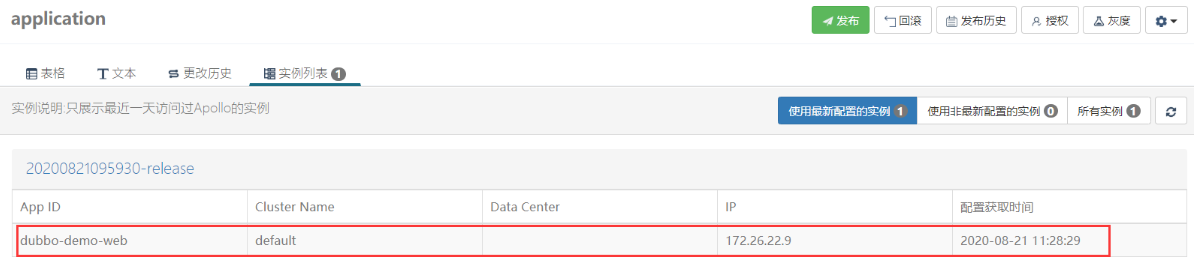

Check how the project is running

If the page shows Bad Gateway, wait a while and then refresh. Resources are tight, so the container may still be starting.

- Check the instance list in Apollo

Maintain project configuration dynamically through the Apollo config center

Taking dubbo-demo-service as an example, no code changes are needed:

- Change the dubbo.port configuration item in http://portal.op.com

- Restart the dubbo-demo-service project

- The new configuration takes effect

Hands-on: maintaining multiple dubbo microservice environments

To split into separate environments, the current lab setup has to be divided as follows:

- The portal service can be shared by all environments, so only one instance is deployed.

- adminservice and configservice must be deployed separately, one set per environment.

- ZooKeeper and the Kubernetes namespace must also be separated per environment.

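In production it is best to deploy the test and production environments to separate k8s clusters and to use separate MySQL instances for their data. That gives complete physical isolation.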

Production practice

- Iterate on new requirements or fix bugs (code -> commit to Git)

- Release to the test environment and test (the application is compiled, packaged and published to the TEST namespace)

- Once the tests pass, go live (the same application image is published directly to the PROD namespace)

System architecture

- Physical architecture

| Hostname | Role | IP |
|:---|:---|:---|
| vms11.cos.com | zk-test (test environment, Test) | 192.168.26.11 |
| vms12.cos.com | zk-prod (production environment, Prod) | 192.168.26.12 |
| vms21.cos.com | Kubernetes compute node | 192.168.26.21 |
| vms22.cos.com | Kubernetes compute node | 192.168.26.22 |
| vms200.cos.com | Ops host, Harbor registry | 192.168.26.200 |

- System architecture inside K8s

| Environment | Namespace | Applications |
|:---|:---|:---|
| Test (TEST) | test | apollo-config, apollo-admin |
| Test (TEST) | test | dubbo-demo-service, dubbo-demo-web |
| Production (PROD) | prod | apollo-config, apollo-admin |
| Production (PROD) | prod | dubbo-demo-service, dubbo-demo-web |
| ops (infra) | infra | jenkins, dubbo-monitor, apollo-portal |

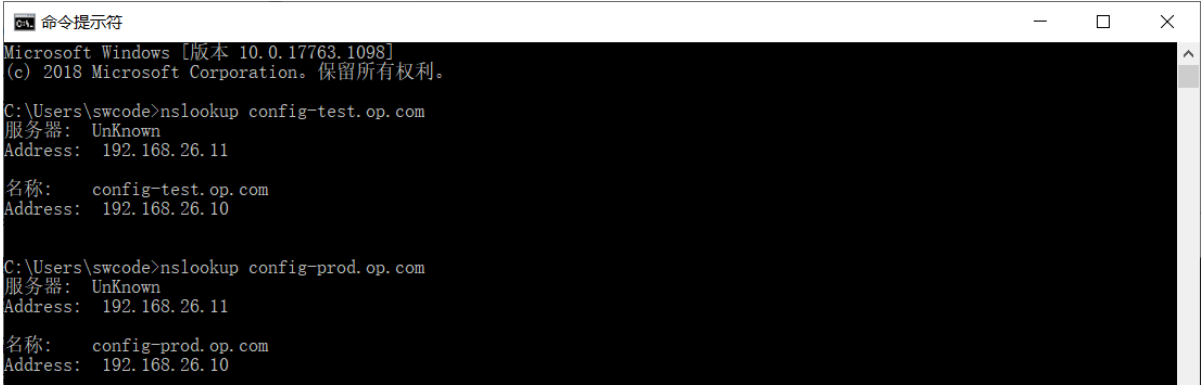

Modify or add DNS records

On the DNS host vms11, append the following to the end of /var/named/op.com.zone:

zk-test      A    192.168.26.11
zk-prod      A    192.168.26.12
config-test  A    192.168.26.10
config-prod  A    192.168.26.10
demo-test    A    192.168.26.10
demo-prod    A    192.168.26.10

Remember to increment (roll forward) the zone's serial number.

[root@vms11 ~]# systemctl restart named
[root@vms11 ~]# dig -t A zk-test.op.com +short
192.168.26.11
[root@vms11 ~]# dig -t A zk-prod.op.com +short
192.168.26.12
[root@vms11 mysql]# dig -t A config-test.op.com +short
192.168.26.10
[root@vms11 mysql]# dig -t A config-prod.op.com +short
192.168.26.10
[root@vms11 mysql]# dig -t A demo-prod.op.com +short
192.168.26.10
[root@vms11 mysql]# dig -t A demo-test.op.com +short
192.168.26.10

You can also check resolution with nslookup: nslookup config-test.op.com
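Checking six names one at a time is tedious. The sketch below reads `name expected-ip` pairs and compares each one with the first line of `dig +short` from the DNS server on vms11 (192.168.26.11 by default). `check_a_records` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: verify A records against expected addresses.
# Input: lines of "name expected-ip" on stdin. Only the first answer is compared.
# check_a_records is a hypothetical helper name.
check_a_records() {
  local server="${1:-192.168.26.11}" name want got rc=0
  while read -r name want; do
    if [ -z "$name" ]; then continue; fi
    got=$(dig -t A "$name" @"$server" +short | head -n 1)
    if [ "$got" = "$want" ]; then
      echo "OK   $name -> $got"
    else
      echo "FAIL $name -> ${got:-<none>} (expected $want)"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on vms11:
#   printf '%s\n' "zk-test.op.com 192.168.26.11" "zk-prod.op.com 192.168.26.12" \
#     "config-test.op.com 192.168.26.10" | check_a_records
```

The function's return code is non-zero if any name is missing or wrong, so it can also gate a script.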

Apollo k8s application configuration

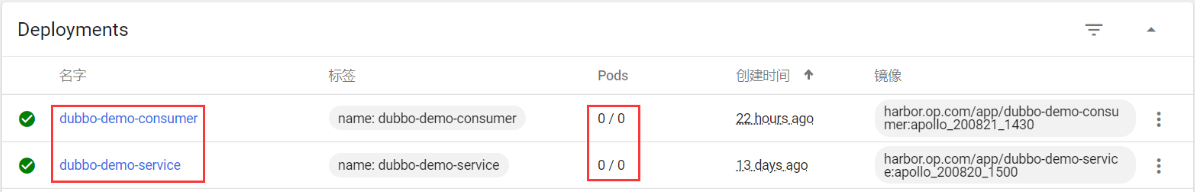

1. Remove the applications in the app namespace

In the dashboard, scale the deployments down to 0 replicas.

2. Create the test and prod namespaces

Run on any k8s compute node (vms21 or vms22):

[root@vms21 ~]# kubectl create ns test
namespace/test created
[root@vms21 ~]# kubectl create secret docker-registry harbor \
--docker-server=harbor.op.com \
--docker-username=admin \
--docker-password=Harbor12543 \
-n test
[root@vms21 ~]# kubectl create ns prod
namespace/prod created
[root@vms21 ~]# kubectl create secret docker-registry harbor \
--docker-server=harbor.op.com \
--docker-username=admin \
--docker-password=Harbor12543 \
-n prod
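Every environment needs the same namespace plus pull-secret pair, so the commands above can be wrapped in a loop. The sketch below assumes the Harbor password comes from a `HARBOR_PASSWORD` shell variable rather than being typed on the command line. Both `HARBOR_PASSWORD` and `create_env_namespaces` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: create one namespace plus the "harbor" pull secret per environment.
# HARBOR_PASSWORD and create_env_namespaces are hypothetical names.
create_env_namespaces() {
  local ns
  if [ -z "${HARBOR_PASSWORD:-}" ]; then
    echo "set HARBOR_PASSWORD first" >&2
    return 1
  fi
  for ns in "$@"; do
    kubectl create ns "$ns" || return 1
    kubectl create secret docker-registry harbor \
      --docker-server=harbor.op.com \
      --docker-username=admin \
      --docker-password="$HARBOR_PASSWORD" \
      -n "$ns" || return 1
  done
}

# Usage on vms21 or vms22:
#   read -rsp 'Harbor password: ' HARBOR_PASSWORD; echo
#   create_env_namespaces test prod
```

Reading the password with `read -s` also keeps it out of the shell history.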

3. Remove the apollo-configservice and apollo-adminservice applications from the infra namespace

In the dashboard, scale the deployments down to 0 replicas.

4. Database: create ApolloConfigTestDB and ApolloConfigProdDB

On vms11, in /data/mysql:

Create ApolloConfigTestDB: edit apolloconfigdb.sql and replace ApolloConfigDB with ApolloConfigTestDB.

[root@vms11 mysql]# mysql -uroot -p < apolloconfigdb.sql
[root@vms11 mysql]# mysql -uroot -p

MariaDB [(none)]> show databases;
MariaDB [(none)]> use ApolloConfigTestDB;
MariaDB [ApolloConfigTestDB]> show tables;
MariaDB [ApolloConfigTestDB]> select * from ServerConfig\G
MariaDB [ApolloConfigTestDB]> update ApolloConfigTestDB.ServerConfig set ServerConfig.Value="http://config-test.op.com/eureka" where ServerConfig.Key="eureka.service.url";

Create ApolloConfigProdDB: edit apolloconfigdb.sql and replace ApolloConfigDB with ApolloConfigProdDB.

[root@vms11 mysql]# mysql -uroot -p < apolloconfigdb.sql
[root@vms11 mysql]# mysql -uroot -p

MariaDB [(none)]> show databases;
MariaDB [(none)]> use ApolloConfigProdDB;
MariaDB [ApolloConfigProdDB]> show tables;
MariaDB [ApolloConfigProdDB]> update ApolloConfigProdDB.ServerConfig set ServerConfig.Value="http://config-prod.op.com/eureka" where ServerConfig.Key="eureka.service.url";
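Instead of editing apolloconfigdb.sql in place twice, you can generate the per-environment copies with sed and leave the original untouched. The minimal sketch below assumes the script refers to the database only by the name `ApolloConfigDB`. The output file names and the `make_env_sql` helper are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write apolloconfigdb-<env>.sql with ApolloConfigDB renamed to
# ApolloConfig<Env>DB, and print the new file name.
# make_env_sql and the output naming are hypothetical.
make_env_sql() {
  local src="$1" env="$2" out   # env: Test or Prod
  out="apolloconfigdb-$(printf '%s' "$env" | tr '[:upper:]' '[:lower:]').sql"
  sed "s/ApolloConfigDB/ApolloConfig${env}DB/g" "$src" > "$out" || return 1
  echo "$out"
}

# Usage on vms11, in /data/mysql:
#   mysql -uroot -p < "$(make_env_sql apolloconfigdb.sql Test)"
#   mysql -uroot -p < "$(make_env_sql apolloconfigdb.sql Prod)"
```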

Grant privileges

MariaDB [(none)]> grant INSERT,DELETE,UPDATE,SELECT on ApolloConfigTestDB.* to "apolloconfig"@"192.168.26.%" identified by "123456";
MariaDB [(none)]> grant INSERT,DELETE,UPDATE,SELECT on ApolloConfigProdDB.* to "apolloconfig"@"192.168.26.%" identified by "123456";

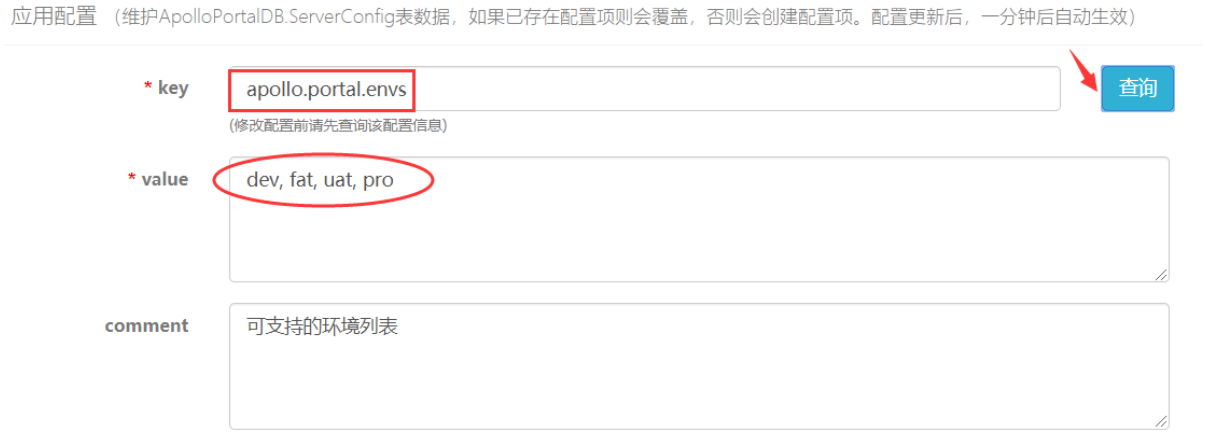

5. Database: update the ApolloPortalDB.ServerConfig table

MariaDB [ApolloPortalDB]> update ApolloPortalDB.ServerConfig set Value='dev, fat, uat, pro' where Id=1;
MariaDB [ApolloPortalDB]> select * from ServerConfig\G
*************************** 1. row ***************************
Id: 1
Key: apollo.portal.envs
Value: dev, fat, uat, pro
Comment: 可支持的环境列表
IsDeleted:
DataChange_CreatedBy: default
DataChange_CreatedTime: 2020-08-19 16:27:43
DataChange_LastModifiedBy:
DataChange_LastTime: 2020-08-19 16:27:43
...

Apollo currently supports the following environments:

- DEV: development environment

- FAT: test environment, equivalent to an alpha environment (functional testing)

- UAT: integration environment, equivalent to a beta environment (regression testing)

- PRO: production environment

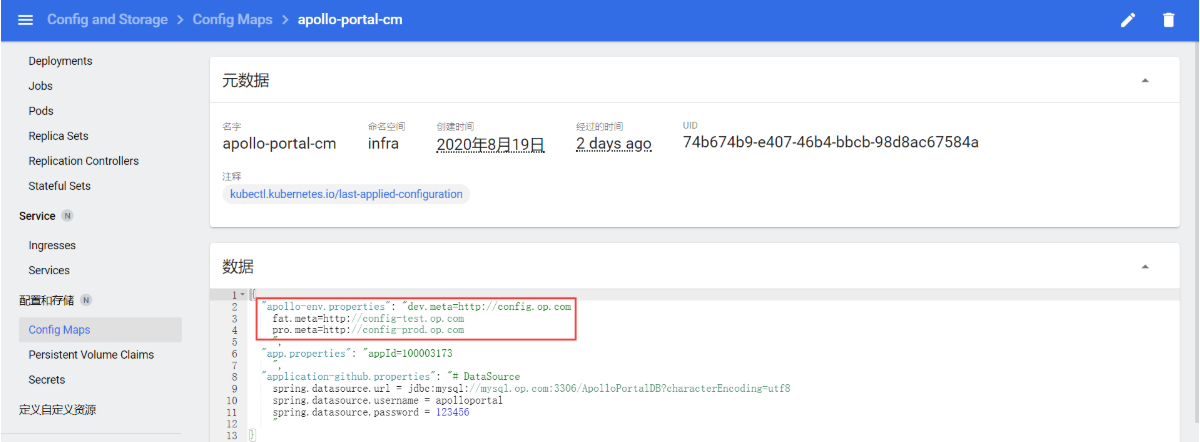

6. Modify and apply the configuration: /data/k8s-yaml/apollo-portal/configmap.yaml

On vms200:

[root@vms200 harbor]# cd /data/k8s-yaml/apollo-portal/
[root@vms200 apollo-portal]# vi configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-portal-cm
  namespace: infra
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloPortalDB?characterEncoding=utf8
    spring.datasource.username = apolloportal
    spring.datasource.password = 123456
  app.properties: |
    appId=100003173
  apollo-env.properties: |
    dev.meta=http://config.op.com
    fat.meta=http://config-test.op.com
    pro.meta=http://config-prod.op.com

Added lines: fat.meta=http://config-test.op.com and pro.meta=http://config-prod.op.com

On vms21 or vms22:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/apollo-portal/configmap.yaml
configmap/apollo-portal-cm configured
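`apollo.portal.envs` (dev, fat, uat, pro) lists one more environment than this ConfigMap has `*.meta` entries for (dev, fat, pro). The sketch below reports the environments that have no meta entry, which helps keep the two lists in step. `missing_meta_envs` is a hypothetical helper. It only greps for `<env>.meta=` lines and allows leading spaces, so it works directly on the ConfigMap YAML.

```shell
#!/usr/bin/env bash
# Sketch: list environments from an apollo.portal.envs value that have no
# "<env>.meta=" line in the given file. missing_meta_envs is hypothetical.
missing_meta_envs() {
  local envs="$1" props="$2" env
  # "dev, fat, uat, pro" -> dev fat uat pro (commas become spaces, then split)
  for env in $(printf '%s' "$envs" | tr ',' ' '); do
    grep -Eq "^[[:space:]]*${env}\.meta=" "$props" || echo "$env"
  done
}

# Usage on vms200:
#   missing_meta_envs "dev, fat, uat, pro" /data/k8s-yaml/apollo-portal/configmap.yaml
```

Against the ConfigMap above this would print `uat`. Either add a `uat.meta` line, or drop uat from `apollo.portal.envs` if you do not plan to run a UAT environment.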

7. k8s resource manifests

apollo-configservice

On vms200, in /data/k8s-yaml:

- Create the directories

[root@vms200 ~]# cd /data/k8s-yaml
[root@vms200 k8s-yaml]# mkdir -pv test/{apollo-adminservice,apollo-configservice,dubbo-demo-service,dubbo-demo-consumer}
[root@vms200 k8s-yaml]# mkdir -pv prod/{apollo-adminservice,apollo-configservice,dubbo-demo-service,dubbo-demo-consumer}

- Test environment

[root@vms200 k8s-yaml]# cd test/apollo-configservice/
[root@vms200 apollo-configservice]# cp -a /data/k8s-yaml/apollo-configservice/*.yaml .
[root@vms200 apollo-configservice]# ll
total 16
-rw-r--r-- 1 root root  426 Aug 19 11:01 configmap.yaml
-rw-r--r-- 1 root root 1218 Aug 19 10:58 deployment.yaml
-rw-r--r-- 1 root root  272 Aug 19 11:12 ingress.yaml
-rw-r--r-- 1 root root  200 Aug 19 11:05 svc.yaml

configmap.yaml: change the namespace and URLs to the test environment

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-configservice-cm
  namespace: test
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigTestDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config-test.op.com/eureka
  app.properties: |
    appId=100003171

deployment.yaml and svc.yaml: change the namespace to the test environment

...
  namespace: test
...

ingress.yaml: change the namespace and host to the test environment (make sure the config-test.op.com DNS record exists)

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: apollo-configservice
  namespace: test
spec:
  rules:
  - host: config-test.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: apollo-configservice
          servicePort: 8080

- Prod environment

[root@vms200 apollo-configservice]# cd /data/k8s-yaml/prod/apollo-configservice/
[root@vms200 apollo-configservice]# cp /data/k8s-yaml/test/apollo-configservice/*.yaml .
[root@vms200 apollo-configservice]# ll
total 16
-rw-r--r-- 1 root root  434 Aug 22 11:19 configmap.yaml
-rw-r--r-- 1 root root 1217 Aug 22 11:19 deployment.yaml
-rw-r--r-- 1 root root  276 Aug 22 11:19 ingress.yaml
-rw-r--r-- 1 root root  200 Aug 22 11:19 svc.yaml

configmap.yaml: change the namespace and URLs to the production environment

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-configservice-cm
  namespace: prod
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigProdDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config-prod.op.com/eureka
  app.properties: |
    appId=100003171

deployment.yaml and svc.yaml: change the namespace to the production environment

...
  namespace: prod
...

ingress.yaml: change the namespace and host to the production environment (make sure the config-prod.op.com DNS record exists)

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: apollo-configservice
  namespace: prod
spec:
  rules:
  - host: config-prod.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: apollo-configservice
          servicePort: 8080
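The prod manifests differ from the test ones only in the namespace, the config database name and the config host, so you can generate them instead of editing each file by hand. The sketch below uses GNU sed and assumes those three strings appear exactly as in the test files above. `to_prod_manifest` is a hypothetical helper that writes to stdout.

```shell
#!/usr/bin/env bash
# Sketch: print a prod version of a test manifest.
# Rewrites "namespace: test", ApolloConfigTestDB and config-test.op.com.
# to_prod_manifest is a hypothetical helper name.
to_prod_manifest() {
  sed -e 's/^\([[:space:]]*namespace:[[:space:]]*\)test[[:space:]]*$/\1prod/' \
      -e 's/ApolloConfigTestDB/ApolloConfigProdDB/g' \
      -e 's/config-test\.op\.com/config-prod.op.com/g' \
      "$1"
}

# Usage on vms200:
#   cd /data/k8s-yaml/prod/apollo-configservice
#   for f in /data/k8s-yaml/test/apollo-configservice/*.yaml; do
#     to_prod_manifest "$f" > "$(basename "$f")"
#   done
```

Review the generated files, for example with `diff`, before applying them.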

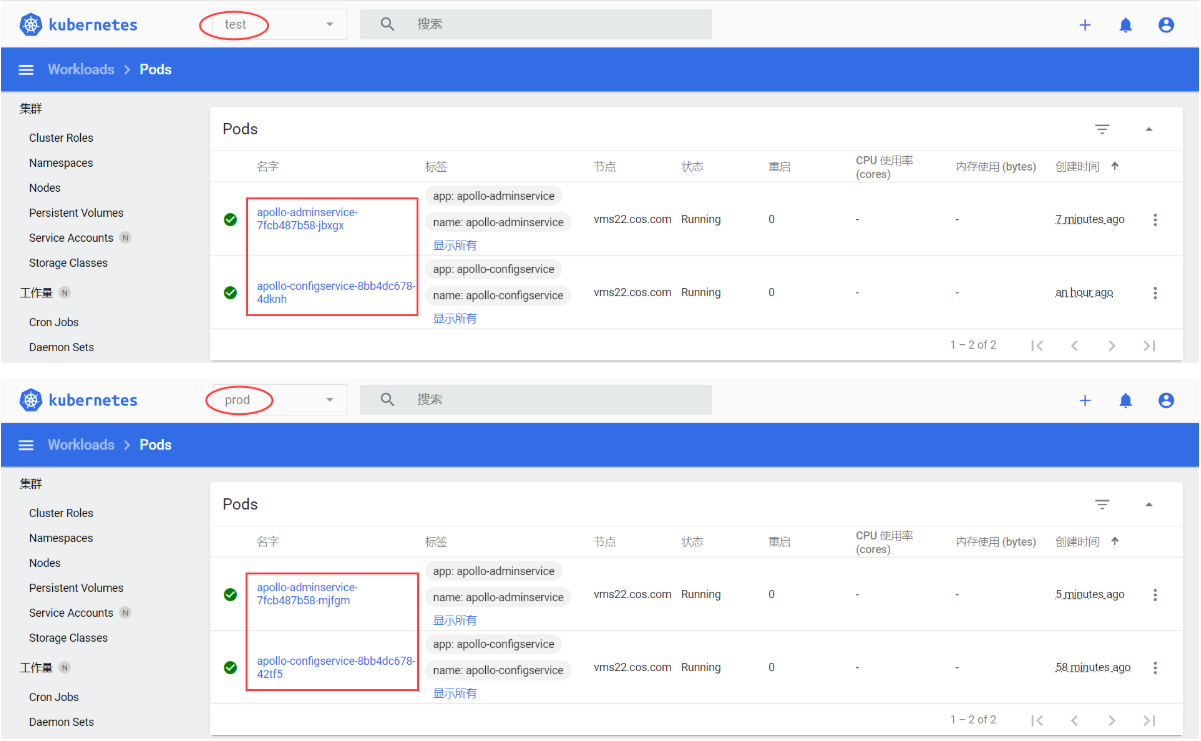

- Apply them in order, deploying to the test and prod namespaces respectively

On vms21 or vms22:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-configservice/configmap.yaml
configmap/apollo-configservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-configservice/deployment.yaml
deployment.apps/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-configservice/svc.yaml
service/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-configservice/ingress.yaml
ingress.extensions/apollo-configservice created
[root@vms21 ~]#
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-configservice/configmap.yaml
configmap/apollo-configservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-configservice/deployment.yaml
deployment.apps/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-configservice/svc.yaml
service/apollo-configservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-configservice/ingress.yaml
ingress.extensions/apollo-configservice created
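The eight commands above follow a fixed pattern, `<env>/<app>/<file>.yaml`, so they can be run in a loop. The sketch below assumes the manifests are served from http://k8s-yaml.op.com as above. `apply_apollo_manifests`, `BASE_URL` and `FILES` are hypothetical names. apollo-adminservice has only configmap.yaml and deployment.yaml, so override `FILES` for it.

```shell
#!/usr/bin/env bash
# Sketch: kubectl apply <base>/<env>/<app>/<file>.yaml for each env and file,
# stopping at the first failure.
# apply_apollo_manifests, BASE_URL and FILES are hypothetical names.
apply_apollo_manifests() {
  local app="$1"; shift
  local base="${BASE_URL:-http://k8s-yaml.op.com}"
  local files="${FILES:-configmap deployment svc ingress}"
  local env f
  for env in "$@"; do
    for f in $files; do   # unquoted on purpose: split the file list on spaces
      kubectl apply -f "${base}/${env}/${app}/${f}.yaml" || return 1
    done
  done
}

# Usage on vms21 or vms22:
#   apply_apollo_manifests apollo-configservice test prod
#   FILES="configmap deployment" apply_apollo_manifests apollo-adminservice test prod
```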

apollo-adminservice

On vms200, in /data/k8s-yaml:

- Test environment

[root@vms200 ~]# cd /data/k8s-yaml/
[root@vms200 k8s-yaml]# cd test/apollo-adminservice
[root@vms200 apollo-adminservice]# cp -a /data/k8s-yaml/apollo-adminservice/*.yaml .
[root@vms200 apollo-adminservice]# ll
total 8
-rw-r--r-- 1 root root  425 Aug 19 15:31 configmap.yaml
-rw-r--r-- 1 root root 1209 Aug 19 15:34 deployment.yaml

configmap.yaml: change the namespace and URLs to the test environment

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-adminservice-cm
  namespace: test
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigTestDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config-test.op.com/eureka
  app.properties: |
    appId=100003172

deployment.yaml: change the namespace to the test environment

...
  namespace: test
...

- Prod environment

[root@vms200 apollo-adminservice]# cd /data/k8s-yaml/prod/apollo-adminservice/
[root@vms200 apollo-adminservice]# ll
total 0
[root@vms200 apollo-adminservice]# cp /data/k8s-yaml/test/apollo-adminservice/*.yaml .
[root@vms200 apollo-adminservice]# ll
total 8
-rw-r--r-- 1 root root  433 Aug 22 12:16 configmap.yaml
-rw-r--r-- 1 root root 1208 Aug 22 12:16 deployment.yaml

configmap.yaml: change the namespace and URLs to the production environment

apiVersion: v1
kind: ConfigMap
metadata:
  name: apollo-adminservice-cm
  namespace: prod
data:
  application-github.properties: |
    # DataSource
    spring.datasource.url = jdbc:mysql://mysql.op.com:3306/ApolloConfigProdDB?characterEncoding=utf8
    spring.datasource.username = apolloconfig
    spring.datasource.password = 123456
    eureka.service.url = http://config-prod.op.com/eureka
  app.properties: |
    appId=100003172

deployment.yaml: change the namespace to the production environment

...
  namespace: prod
...

- Apply them in order, deploying to the test and prod namespaces respectively

On vms21 or vms22:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-adminservice/configmap.yaml
configmap/apollo-adminservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/apollo-adminservice/deployment.yaml
deployment.apps/apollo-adminservice created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-adminservice/configmap.yaml
configmap/apollo-adminservice-cm created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/apollo-adminservice/deployment.yaml

Open or refresh in a browser: http://config-test.op.com/

Open or refresh in a browser: http://config-prod.op.com/

Apollo portal configuration

1. Start apollo-portal

2. Admin Tools > Delete App, Cluster, AppNamespace (管理员工具 > 删除应用、集群、AppNamespace)

- Delete the apps that were configured earlier

3. Admin Tools > System Parameters (管理员工具 > 系统参数)

- Key:apollo.portal.envs

- Value:dev, fat, uat, pro

4. Create the dubbo-demo-service project

Add and publish configuration items in the test and production environments respectively.

Submit:

Add configuration:

Add the following configuration for FAT (the test environment):

| Key | Value | Comment | Cluster |
|---|---|---|---|
| dubbo.registry | zookeeper://zk-test.op.com:2181 | Registry address for the dubbo service provider (test environment) | FAT |
| dubbo.port | 20880 | Listening port of the dubbo service provider (test environment) | FAT |

Publish:

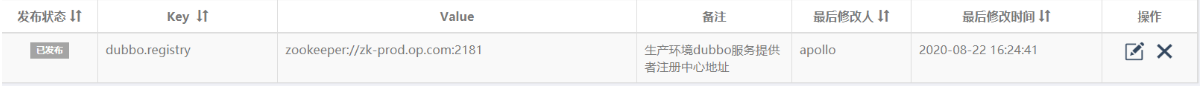

Add the following configuration for PRO (the production environment):

Select PRO in the environment list.

Add configuration:

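| Key | Value | Comment | Cluster |
|---|---|---|---|
| dubbo.registry | zookeeper://zk-prod.op.com:2181 | Registry address for the dubbo service provider (production environment) | PRO |
| dubbo.port | 20880 | Listening port of the dubbo service provider (production environment) | PRO |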

5. Create the dubbo-demo-web project

Add and publish configuration items in the test and production environments respectively.

After submitting, add configuration:

Add the following configuration for FAT (the test environment):

| Key | Value | Comment | Cluster |
|---|---|---|---|
| dubbo.registry | zookeeper://zk-test.op.com:2181 | Registry address for the dubbo service consumer (test environment) | FAT |

Publish:

Add the following configuration for PRO (the production environment):

Select PRO in the environment list, add the configuration, then publish:

| Key | Value | Comment | Cluster |
|---|---|---|---|
| dubbo.registry | zookeeper://zk-prod.op.com:2181 | Registry address for the dubbo service consumer (production environment) | PRO |

6. Return to the home page

Release the dubbo microservices

Test environment

Point the dubbo-monitor configuration at the test ZooKeeper

dubbo-monitor-cm:

dubbo.registry.address=zookeeper://zk-test.op.com:2181

dubbo-demo-service resource manifests and deployment

On vms200, in /data/k8s-yaml:

[root@vms200 ~]# cd /data/k8s-yaml/test/dubbo-demo-service/
[root@vms200 dubbo-demo-service]# ll
total 0
[root@vms200 dubbo-demo-service]# cp -a /data/k8s-yaml/dubbo-demo-service/*.yaml .
[root@vms200 dubbo-demo-service]# ll
total 8
-rw-r--r-- 1 root root 1067 Aug 20 15:39 deployment-apollo.yaml
-rw-r--r-- 1 root root  982 Aug  8 19:35 deployment.yaml
[root@vms200 dubbo-demo-service]# vi deployment-apollo.yaml   # change the following

...
  namespace: test
...
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.op.com
...

On vms21 or vms22:

[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-service/deployment-apollo.yaml
deployment.apps/dubbo-demo-service created

查验:

- 在k8s-dashboard查看pod

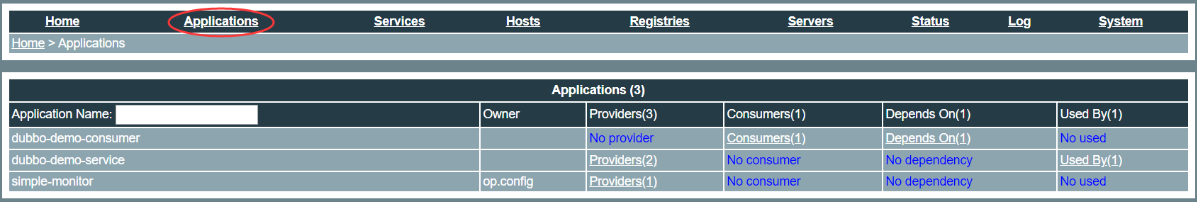

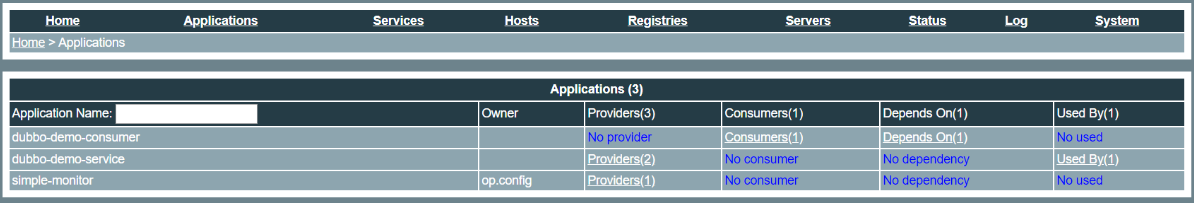

- 在Apollo配置中心(http://portal.op.com/)查看实例列表

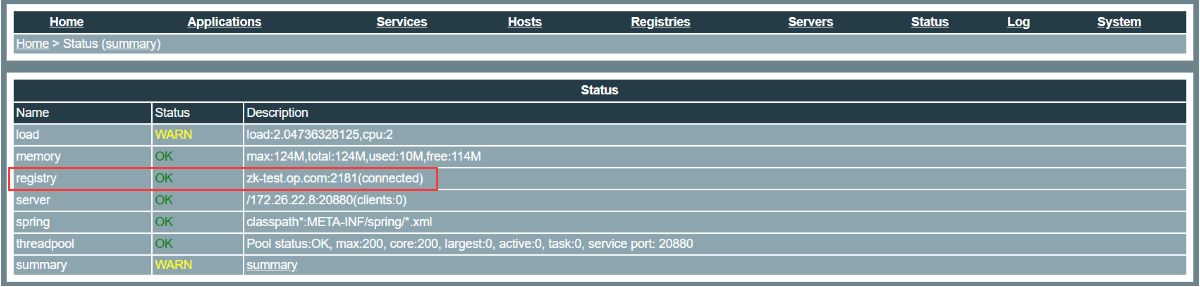

- 在dubbo-monitor查验

登录:http://dubbo-monitor.op.com/ (出现Bad Gateway,是服务还没启动好,或者zk没启动,请等待一会后再刷新)
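Instead of refreshing by hand, a small retry loop can poll until the page stops returning Bad Gateway. `curl -f` makes curl exit non-zero on HTTP error responses such as 502, so it works as the retried command. `retry_until` is a hypothetical helper; the attempt count and delay in the usage line are arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds or the attempts run
# out, sleeping between tries. Returns 0 on the first success, 1 otherwise.
retry_until() {
  local attempts="$1" delay="$2"; shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    # no sleep after the final failed attempt
    [ "$i" -lt "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Usage: up to 30 tries, 10 seconds apart
#   retry_until 30 10 curl -fsS -o /dev/null http://dubbo-monitor.op.com/
```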

dubbo-demo-web resource manifests

On vms200:

```shell
[root@vms200 dubbo-demo-service]# cd /data/k8s-yaml/test/dubbo-demo-consumer
[root@vms200 dubbo-demo-consumer]# cp /data/k8s-yaml/dubbo-consumer/*.yaml .
[root@vms200 dubbo-demo-consumer]# ll
total 16
-rw-r--r-- 1 root root 1128 Aug 22 14:14 deployment-apollo.yaml
-rw-r--r-- 1 root root 1043 Aug 22 14:14 deployment.yaml
-rw-r--r-- 1 root root 270 Aug 22 14:14 ingress.yaml
-rw-r--r-- 1 root root 194 Aug 22 14:14 svc.yaml
[root@vms200 dubbo-demo-consumer]# vi deployment-apollo.yaml   # modify the following
```

```yaml
...
  namespace: test
...
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.op.com
...
```

In svc.yaml, change: namespace: test

In ingress.yaml, change namespace: test and host: demo-test.op.com:

```yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: test
spec:
  rules:
  - host: demo-test.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 80
```

Remember to add a DNS record for demo-test.op.com.

On vms21 or vms22:

```shell
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-consumer/deployment-apollo.yaml
deployment.apps/dubbo-demo-consumer created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-consumer/svc.yaml
service/dubbo-demo-consumer created
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-consumer/ingress.yaml
ingress.extensions/dubbo-demo-consumer created
```
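The three kubectl apply calls differ only in the file name, and the same pattern repeats for prod. A sketch that generates the manifest URLs for the k8s-yaml.op.com layout used here, in the order applied above; `manifest_urls` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the manifest URLs for one app in one
# environment, in apply order (deployment, service, ingress).
manifest_urls() {
  local env="$1" app="$2" f
  for f in deployment-apollo.yaml svc.yaml ingress.yaml; do
    printf 'http://k8s-yaml.op.com/%s/%s/%s\n' "$env" "$app" "$f"
  done
}

# Usage (on vms21 or vms22):
#   for url in $(manifest_urls test dubbo-demo-consumer); do
#     kubectl apply -f "$url"
#   done
```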

查验:

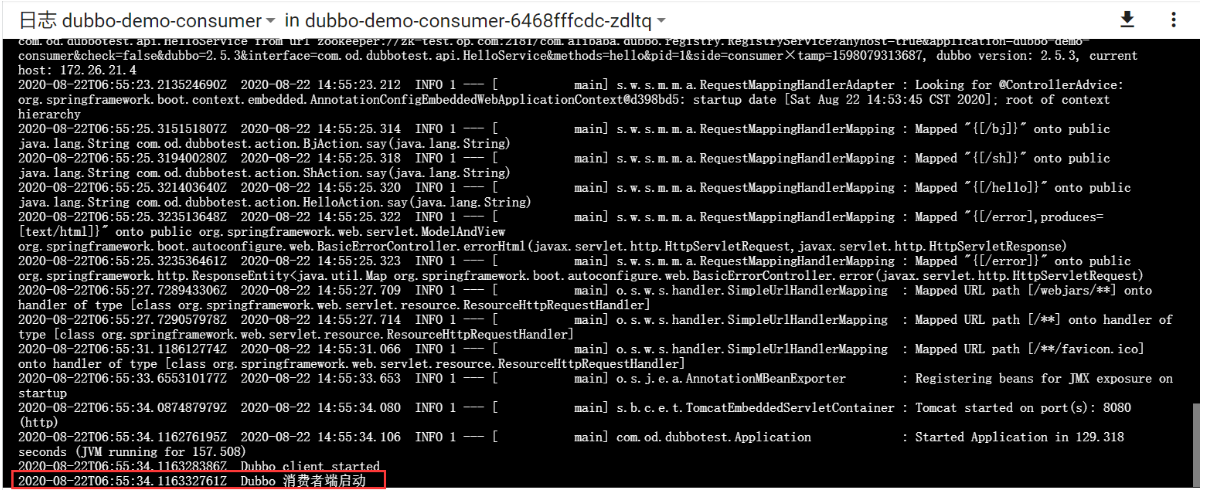

- 在k8s-dashboard查看pod日志

- 在Apollo配置中心(http://portal.op.com/)查看实例列表

- 在dubbo-monitor查验

至此,测试环境完美成功部署!

生产环境

更新dubbo-monitor配置为生产的zk

dubbo-monitor-cm:

dubbo.registry.address=zookeeper://zk-prod.op.com:2181

dubbo-demo-service resource manifest and deployment

On vms200, under /data/k8s-yaml:

```shell
[root@vms200 ~]# cd /data/k8s-yaml/prod/dubbo-demo-service/
[root@vms200 dubbo-demo-service]# ll
total 0
[root@vms200 dubbo-demo-service]# cp -a /data/k8s-yaml/test/dubbo-demo-service/*.yaml .
[root@vms200 dubbo-demo-service]# ll
total 8
-rw-r--r-- 1 root root 1073 Aug 22 13:49 deployment-apollo.yaml
-rw-r--r-- 1 root root 982 Aug 8 19:35 deployment.yaml
[root@vms200 dubbo-demo-service]# vi deployment-apollo.yaml
```

```yaml
...
  namespace: prod
...
        - name: C_OPTS
          value: -Denv=pro -Dapollo.meta=http://config-prod.op.com
...
```

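Because apollo-configservice and dubbo-demo-service run in the same k8s namespace (the test namespace is shown as an example):

```shell
[root@vms21 ~]# kubectl get svc -n test
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
apollo-configservice   ClusterIP   10.168.236.3     <none>        8080/TCP   3h45m
dubbo-demo-consumer    ClusterIP   10.168.125.196   <none>        80/TCP     20m
[root@vms21 ~]# dig -t A apollo-configservice.test @10.168.0.2 +short
[root@vms21 ~]# dig -t A apollo-configservice.test.svc.cluster.local @10.168.0.2 +short
10.168.236.3
```

deployment-apollo.yaml can therefore use the following instead (going through the Service rather than the ingress):

```yaml
...
  namespace: prod
...
        - name: C_OPTS
          value: -Denv=pro -Dapollo.meta=http://apollo-configservice:8080
          #value: -Denv=pro -Dapollo.meta=http://config-prod.op.com
...
```

The short name resolves within the pod's own namespace, prod here. If the Service lives in a different namespace, add the namespace or use the fully qualified name, for example apollo-configservice.test or apollo-configservice.test.svc.cluster.local. (The dig query for apollo-configservice.test above returns nothing because dig on the node does not apply the DNS search list that pods use, which includes `<namespace>.svc.cluster.local`, `svc.cluster.local` and `cluster.local`.)

The Service listens on port 8080, and the ingress reverse-proxies port 80 to it, so the port can be omitted when going through the ingress.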
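The naming rule above can be written down as a tiny helper: use the bare Service name when the pod and the Service share a namespace, otherwise qualify it with the Service's namespace. A sketch under the assumption that pods use the standard Kubernetes DNS search list (as the cluster.local result from dig suggests); `svc_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: choose the in-cluster URL of a Service.
# Same namespace -> short name; different namespace -> <svc>.<svc_ns>.
svc_url() {
  local svc="$1" svc_ns="$2" pod_ns="$3" port="$4"
  if [ "$svc_ns" = "$pod_ns" ]; then
    printf 'http://%s:%s\n' "$svc" "$port"
  else
    printf 'http://%s.%s:%s\n' "$svc" "$svc_ns" "$port"
  fi
}

# Usage: value for -Dapollo.meta in a prod pod
#   svc_url apollo-configservice prod prod 8080
```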

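On vms21 or vms22:

```shell
[root@vms21 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/dubbo-demo-service/deployment-apollo.yaml
deployment.apps/dubbo-demo-service created
```

Verify:

- Check the pod logs in the k8s dashboard

- Verify in dubbo-monitor

Log in at http://dubbo-monitor.op.com/ (a Bad Gateway error means the service has not finished starting or ZooKeeper is not running; wait a moment and refresh).

- Check the instance list in the Apollo config center (http://portal.op.com/)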

dubbo-demo-web resource manifests

On vms200:

```shell
[root@vms200 dubbo-demo-service]# cd /data/k8s-yaml/prod/dubbo-demo-consumer/
[root@vms200 dubbo-demo-consumer]# ll
total 0
[root@vms200 dubbo-demo-consumer]# cp -a /data/k8s-yaml/test/dubbo-demo-consumer/*.yaml .
[root@vms200 dubbo-demo-consumer]# ll
total 16
-rw-r--r-- 1 root root 1134 Aug 22 14:33 deployment-apollo.yaml
-rw-r--r-- 1 root root 1043 Aug 22 14:14 deployment.yaml
-rw-r--r-- 1 root root 270 Aug 22 14:38 ingress.yaml
-rw-r--r-- 1 root root 195 Aug 22 14:35 svc.yaml
[root@vms200 dubbo-demo-consumer]# vi deployment-apollo.yaml
```

```yaml
...
  namespace: prod
...
        - name: C_OPTS
          value: -Denv=pro -Dapollo.meta=http://apollo-configservice:8080
...
```

In svc.yaml, change: namespace: prod

In ingress.yaml, change namespace: prod and host: demo-prod.op.com:

```yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: prod
spec:
  rules:
  - host: demo-prod.op.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 80
```

Remember to add a DNS record for demo-prod.op.com.

On vms21 or vms22:

```shell
[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/dubbo-demo-consumer/deployment-apollo.yaml
deployment.apps/dubbo-demo-consumer created
[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/dubbo-demo-consumer/svc.yaml
service/dubbo-demo-consumer created
[root@vms22 ~]# kubectl apply -f http://k8s-yaml.op.com/prod/dubbo-demo-consumer/ingress.yaml
ingress.extensions/dubbo-demo-consumer created
```

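Verify:

- Check the pod logs in the k8s dashboard

- Check the instance list in the Apollo config center (http://portal.op.com/)

- Verify in dubbo-monitor

The production environment is now deployed successfully.

Without any rebuild, the image from the development environment has been deployed to both the test and production environments.

A day in an internet company's tech department: the release process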

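- Product managers gather requirements, hold requirement reviews, and produce product prototypes

- Developers work day and night on the features, then hand them over for testing

- Testers use Jenkins continuous integration to publish to the test environment

- Verify the features: pass -> ready for release, or reject -> fix the code

- Submit a release request; ops publishes the tested package to the production environment

- Endless bug fixing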

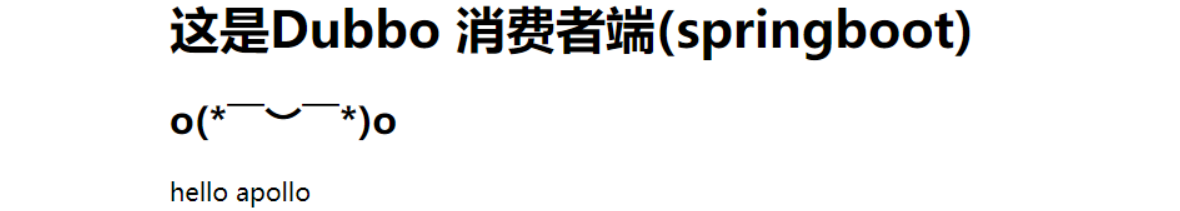

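Verify and simulate a release

1. Verify access to both environments

Visit each of the following URLs and check that the page content is returned:

test: http://demo-test.op.com/hello?name=test

prod: http://demo-prod.op.com/hello?name=prod

2. Simulate a release:

Change the return value of the say method in the dubbo-demo-web project on Gitee to anything you like.

Path: dubbo-client/src/main/java/com/od/dubbotest/action/HelloAction.java

Build a new image with Jenkins.

Parameters: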

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-consumer |
| image_name | app/dubbo-demo-consumer |
| git_repo | git@gitee.com:cloudlove2007/dubbo-demo-web.git |
| git_ver | a799758 |
| add_tag | 200822_1645 |
| mvn_dir | ./ |
| target_dir | ./dubbo-client/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/jre8:8u112 |
| maven | 3.6.3-8u261 |

git_ver: look it up on https://gitee.com/ by opening the project branch and clicking Commits.
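Both values can also be produced on the command line. A sketch assuming add_tag simply follows the yymmdd_HHMM pattern of the example (200822_1645); `make_add_tag` is a hypothetical helper. In a local clone, `git rev-parse --short HEAD` prints an abbreviated commit id of the kind used for git_ver.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an add_tag value in the yymmdd_HHMM form
# used in the table above, based on the current local time.
make_add_tag() {
  date +%y%m%d_%H%M
}

# Usage:
#   make_add_tag                 # e.g. a value shaped like 200822_1645
#   git rev-parse --short HEAD   # abbreviated commit id for git_ver
```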

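Release to the test environment

Once the build succeeds, release this image version in the test environment.

Edit deployment-apollo.yaml for the test environment:

```shell
cd /data/k8s-yaml/test/dubbo-demo-consumer
sed -ri 's#(dubbo-demo-consumer:apollo).*#\1_200822_1645#g' deployment-apollo.yaml
```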
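Because sed -i edits the file in place, it can be worth previewing the substitution first. The sketch runs the same expression without -i and with the p flag, so only the rewritten image line is printed. `preview_tag` is a hypothetical helper; it uses -E instead of -r (GNU sed accepts both).

```shell
#!/usr/bin/env bash
# Hypothetical helper: show what the tag substitution would produce,
# printing only the lines that were rewritten. The file is not modified.
preview_tag() {
  local file="$1" suffix="$2"
  sed -En "s#(dubbo-demo-consumer:apollo).*#\1_${suffix}#p" "$file"
}

# Usage:
#   preview_tag deployment-apollo.yaml 200822_1645
```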

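Apply the modified manifest:

```shell
kubectl apply -f http://k8s-yaml.op.com/test/dubbo-demo-consumer/deployment-apollo.yaml
```

Alternatively, the same release can be done in the dashboard by updating the image version directly and restarting the pod.

Visit http://demo-test.op.com/hello?name=test and check that our change shows up.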

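Release to the production environment

Once the image has passed testing in the test environment, release that same image to production. Do not rebuild it, to avoid introducing errors.

Likewise, edit deployment-apollo.yaml for prod:

```shell
cd /data/k8s-yaml/prod/dubbo-demo-consumer
sed -ri 's#(dubbo-demo-consumer:apollo).*#\1_200822_1645#g' deployment-apollo.yaml
```

Apply the modified manifest:

```shell
kubectl apply -f http://k8s-yaml.op.com/prod/dubbo-demo-consumer/deployment-apollo.yaml
```

Alternatively, the same release can be done in the dashboard by updating the image version directly and restarting the pod.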
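The point of this step is that prod runs exactly the image that was tested. Rather than retyping the tag, one option is to copy the image reference from the test manifest into the prod manifest. A sketch assuming GNU sed (for -i without a backup suffix) and a single `image:` line per manifest; `promote_image` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the image reference from the test manifest into
# the prod manifest, keeping the prod file's indentation.
# Assumes GNU sed and exactly one "image:" line in each file.
promote_image() {
  local src="$1" dst="$2" img
  img=$(sed -nE 's#^[[:space:]]*image:[[:space:]]*##p' "$src" | head -n1)
  [ -n "$img" ] || { echo "no image: line in $src" >&2; return 1; }
  sed -i -E "s#^([[:space:]]*image:[[:space:]]*).*#\1${img}#" "$dst"
}

# Usage:
#   promote_image /data/k8s-yaml/test/dubbo-demo-consumer/deployment-apollo.yaml \
#                 /data/k8s-yaml/prod/dubbo-demo-consumer/deployment-apollo.yaml
```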

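The change is now live in production. This completes a per-environment release workflow backed by the Apollo config center, and it truly achieves build once, deploy to multiple environments.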
