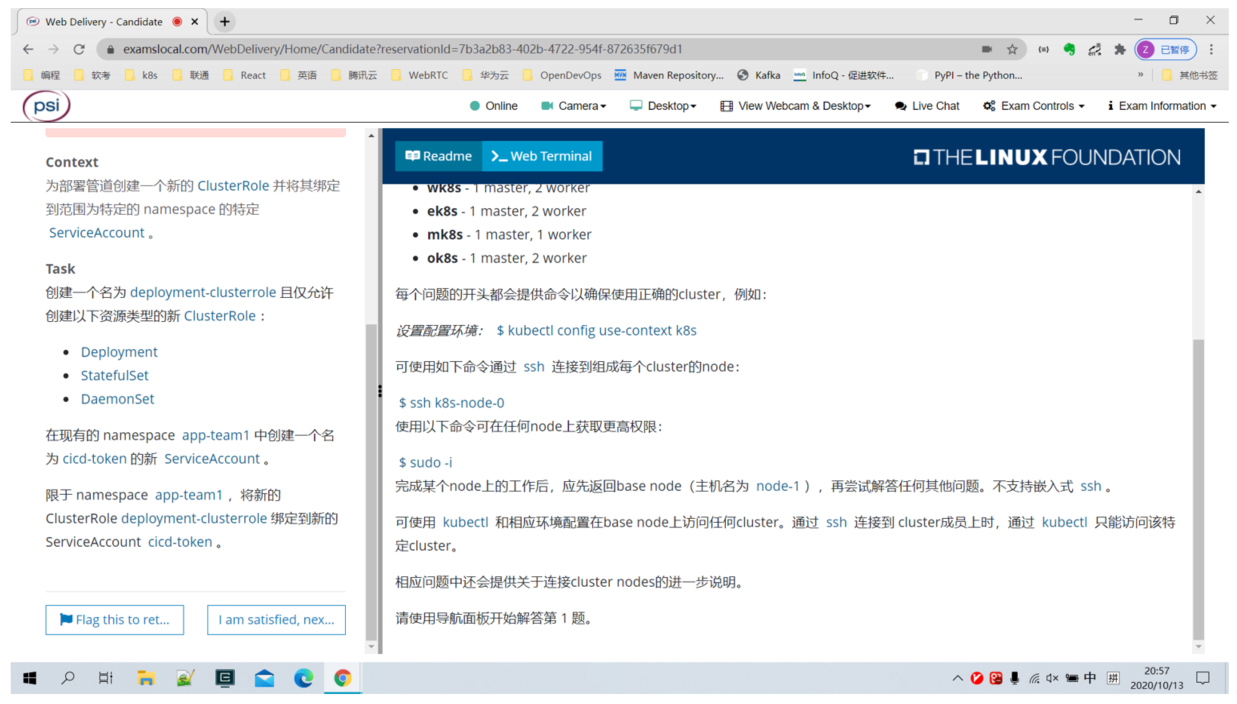

- 部署模拟环境

- 第一题 创建ClusterRole绑定到SA

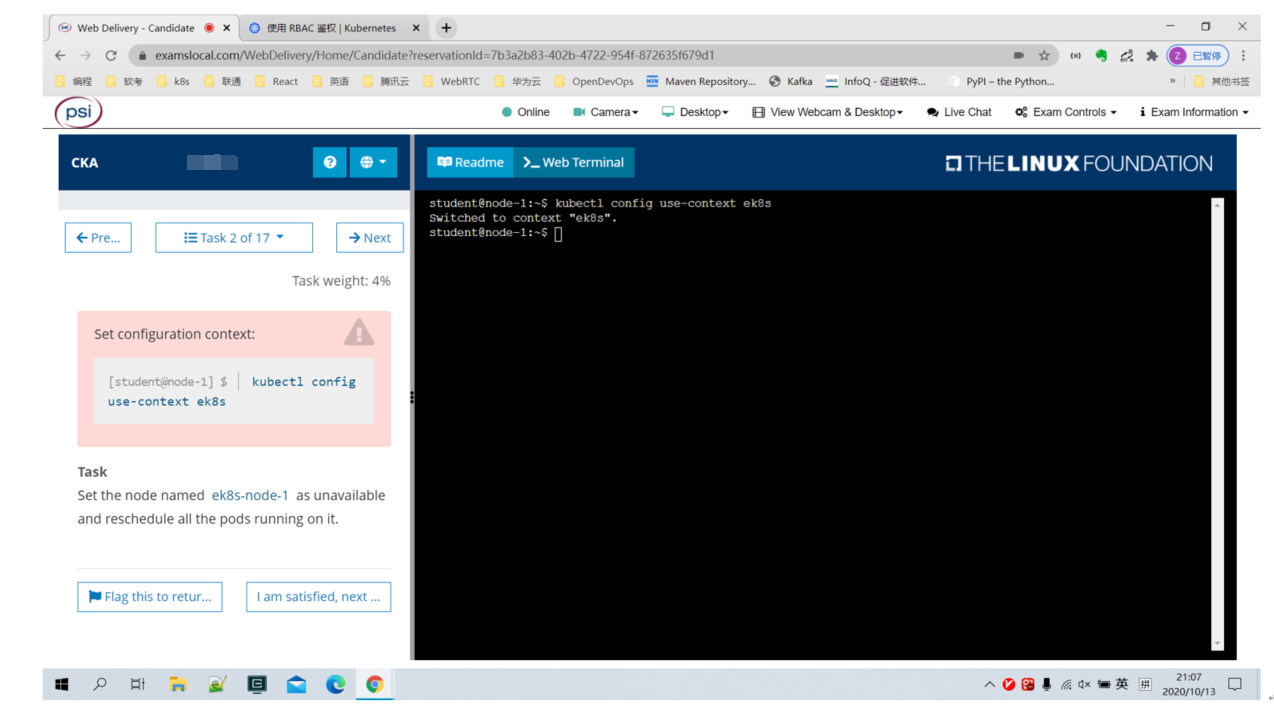

- 第二题 设置Node不可用

- 第三题 集群升级

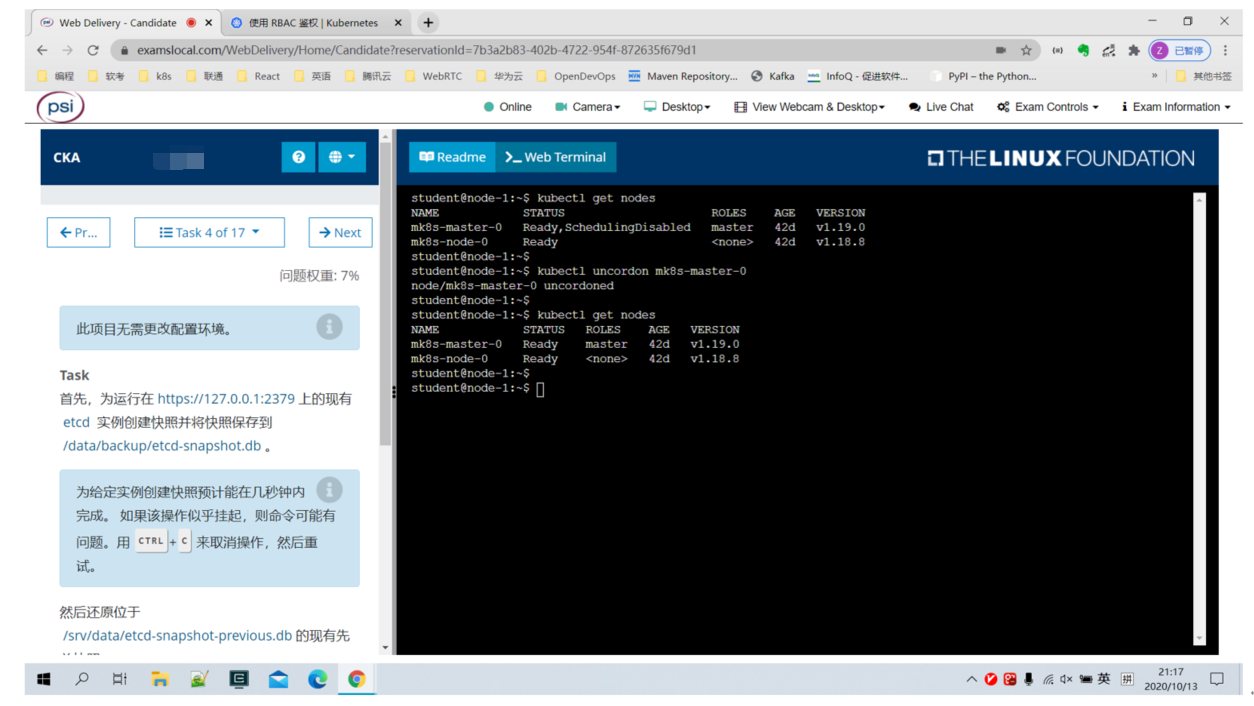

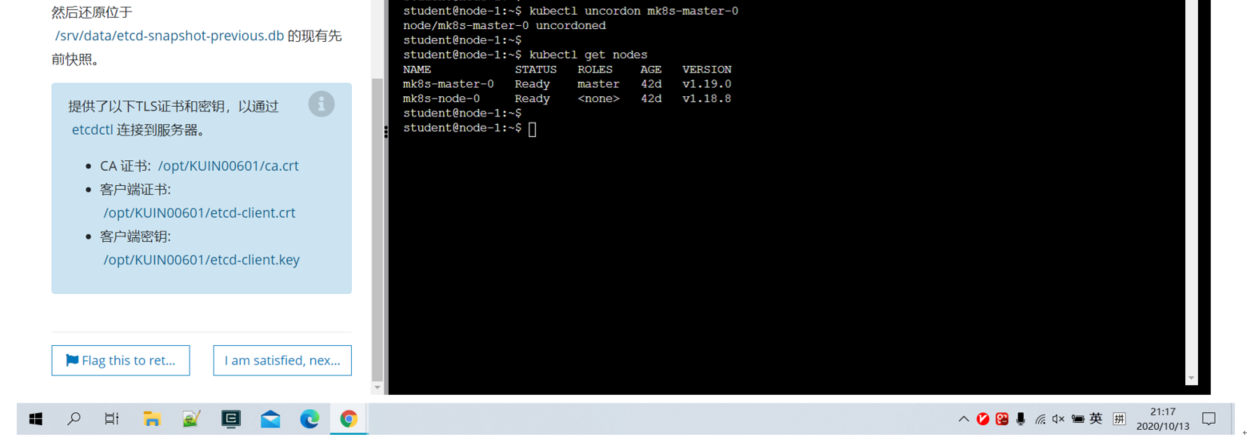

- 第四题 Backup/Restore etcd

- https://127.0.0.1:2379 \">ETCDCTL_API=3 etcdctl —endpoints=https://127.0.0.1:2379 \

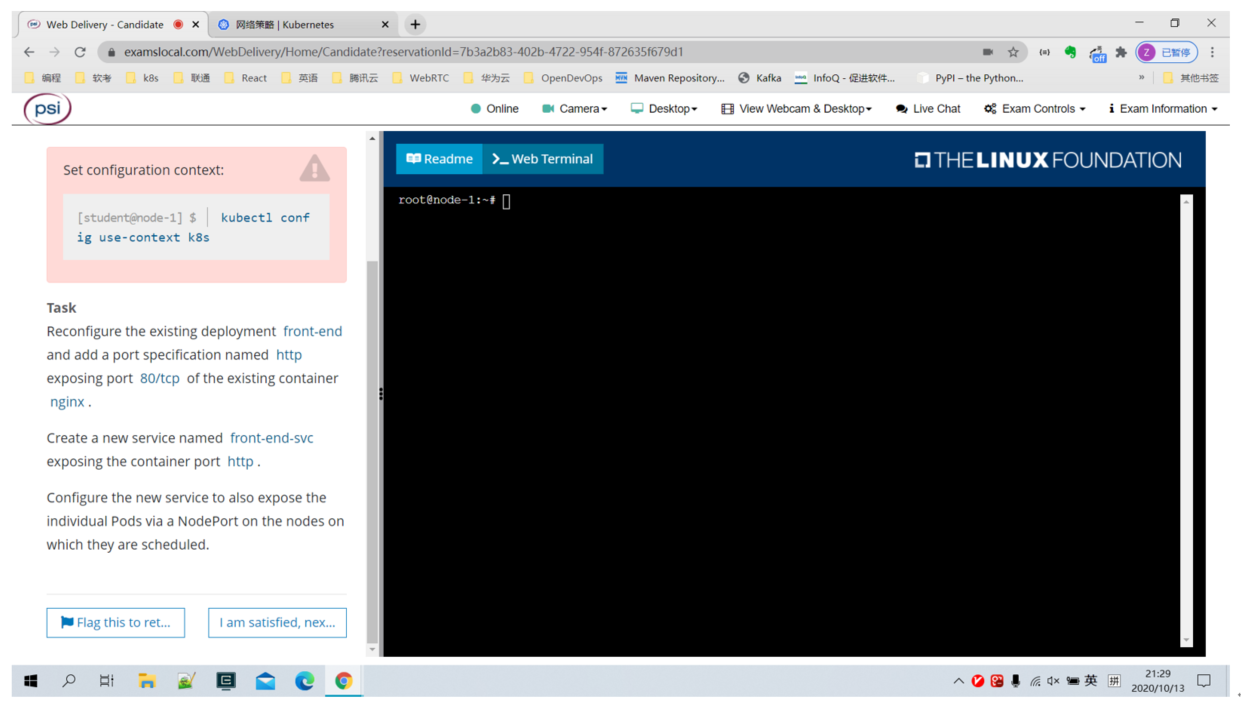

- 第六题 Deployment

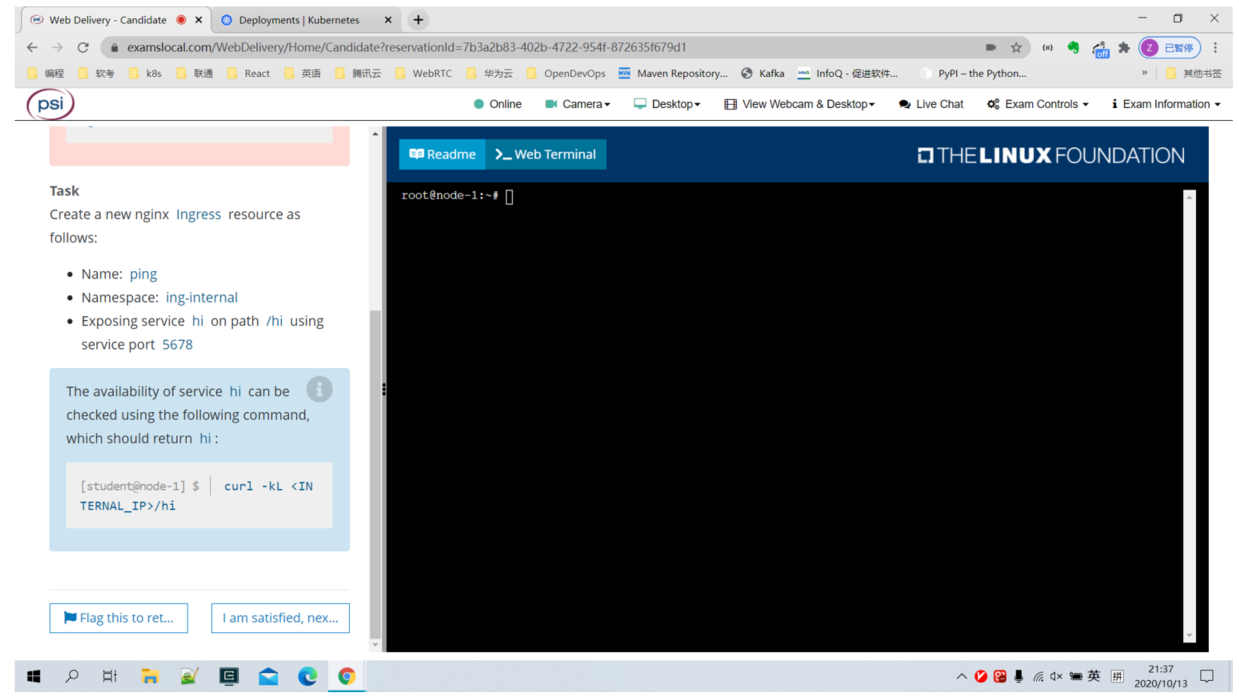

- 第七题 Ingress

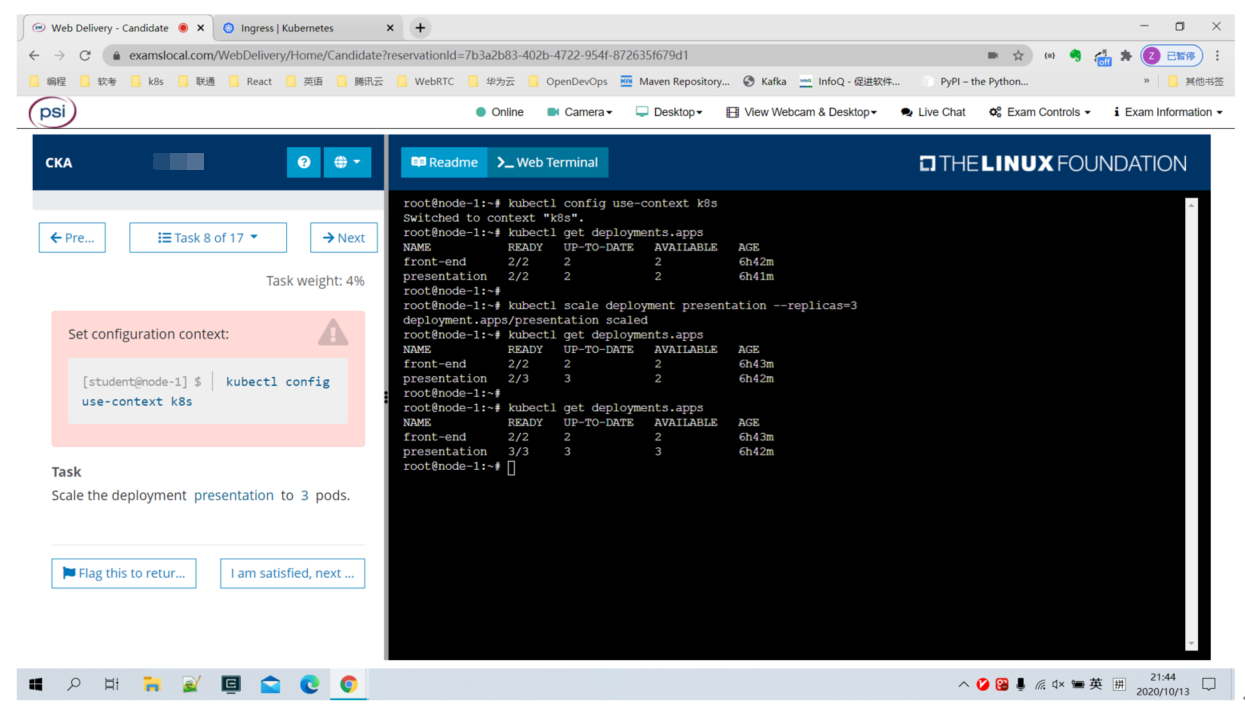

- 第八题 Scale deployment

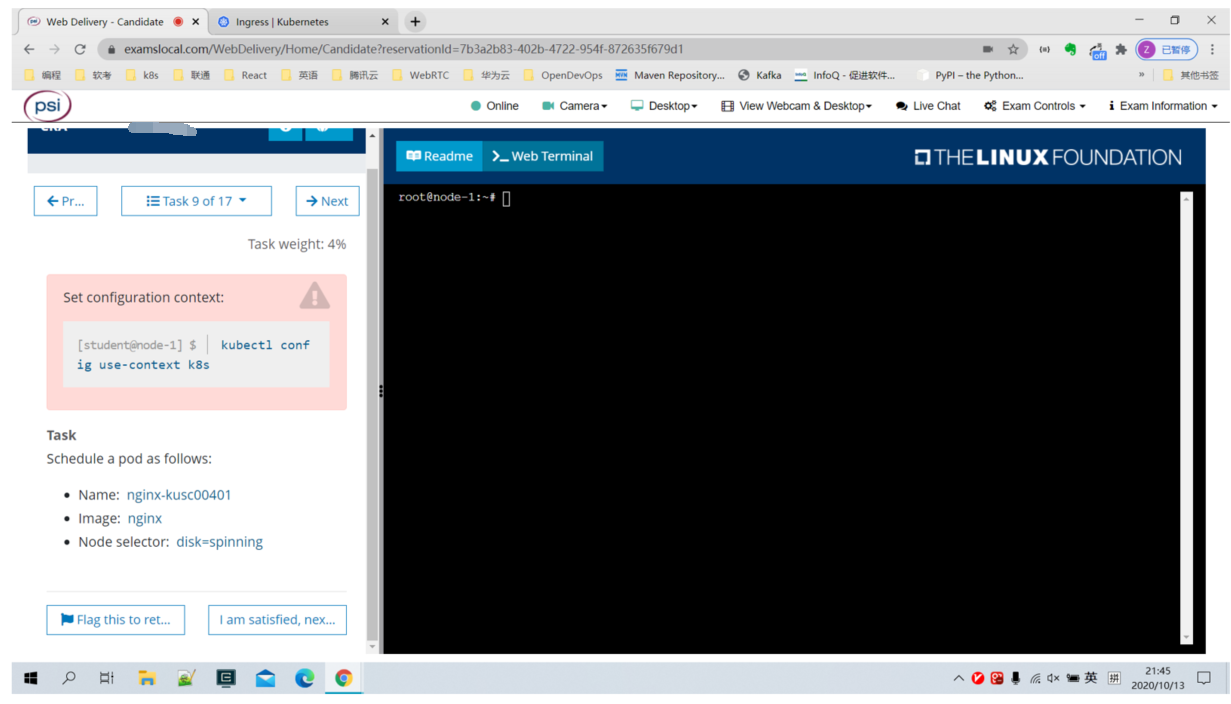

- 第九题 Pod调度

- 第十题 统计污染的节点

- 第十一题 多容器Pod

- 第十二题 创建hostPath PV

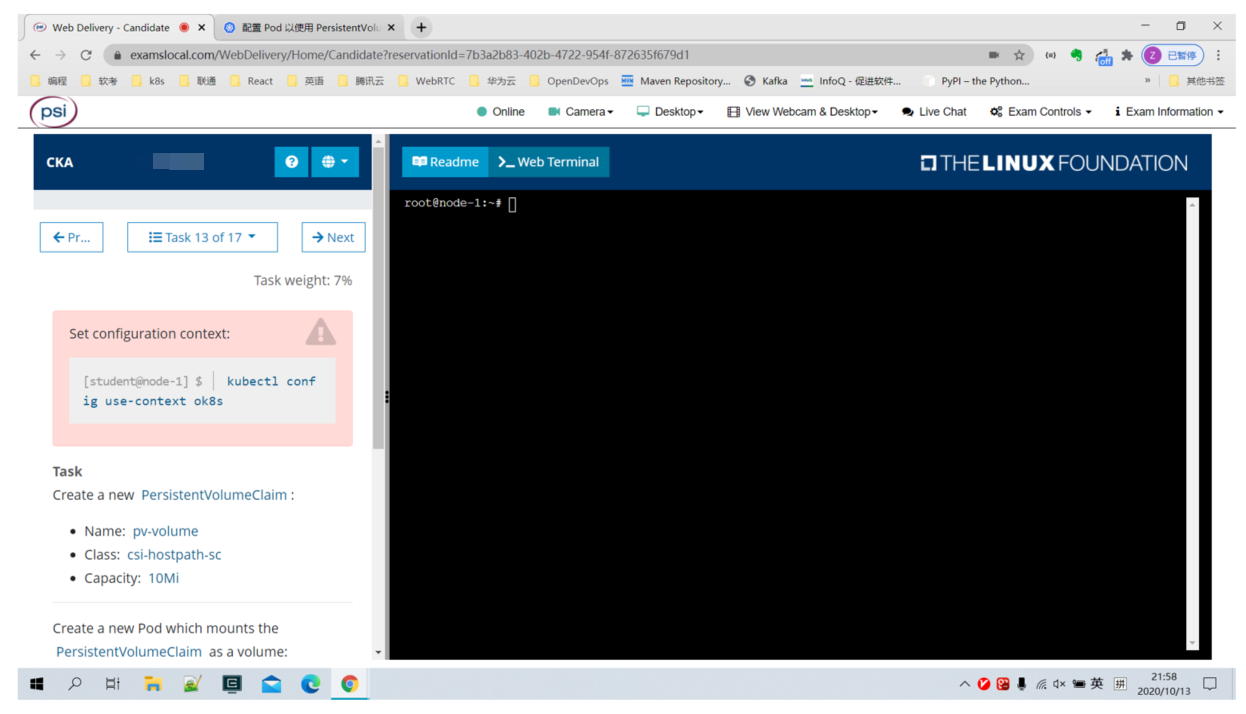

- 第十三题 PV And PVC

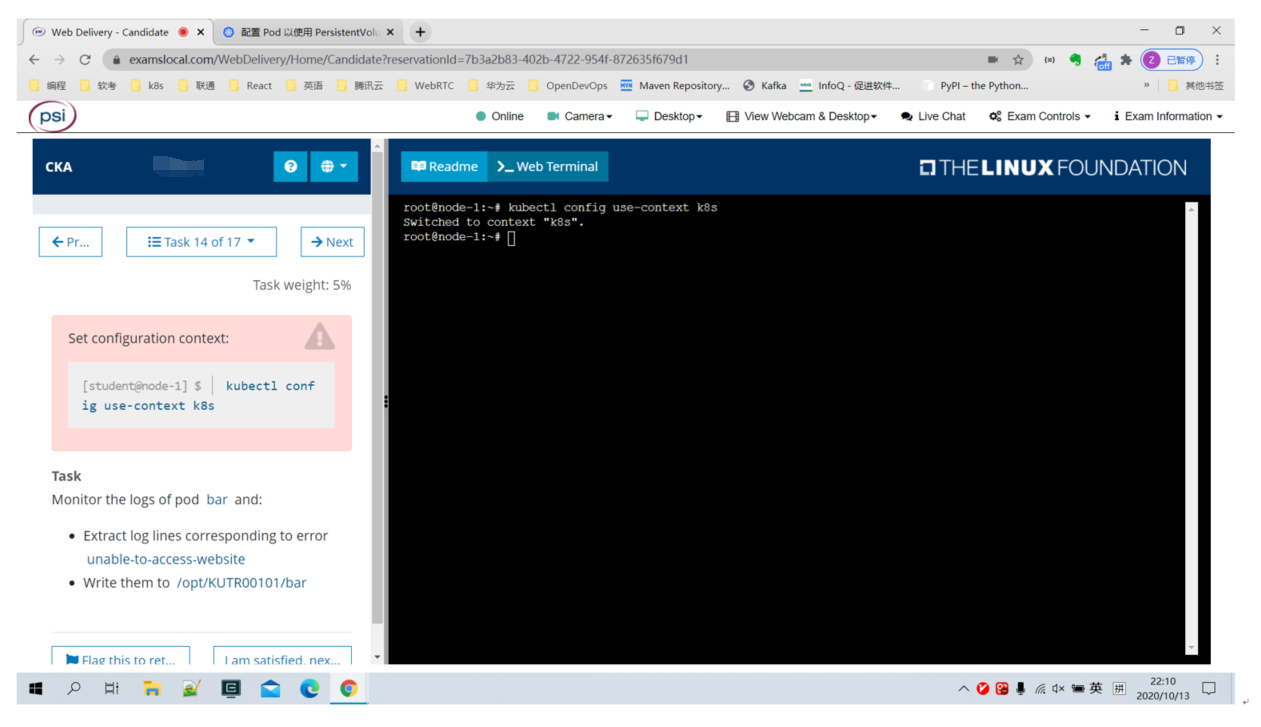

- 第十四题 查找Pod 日志

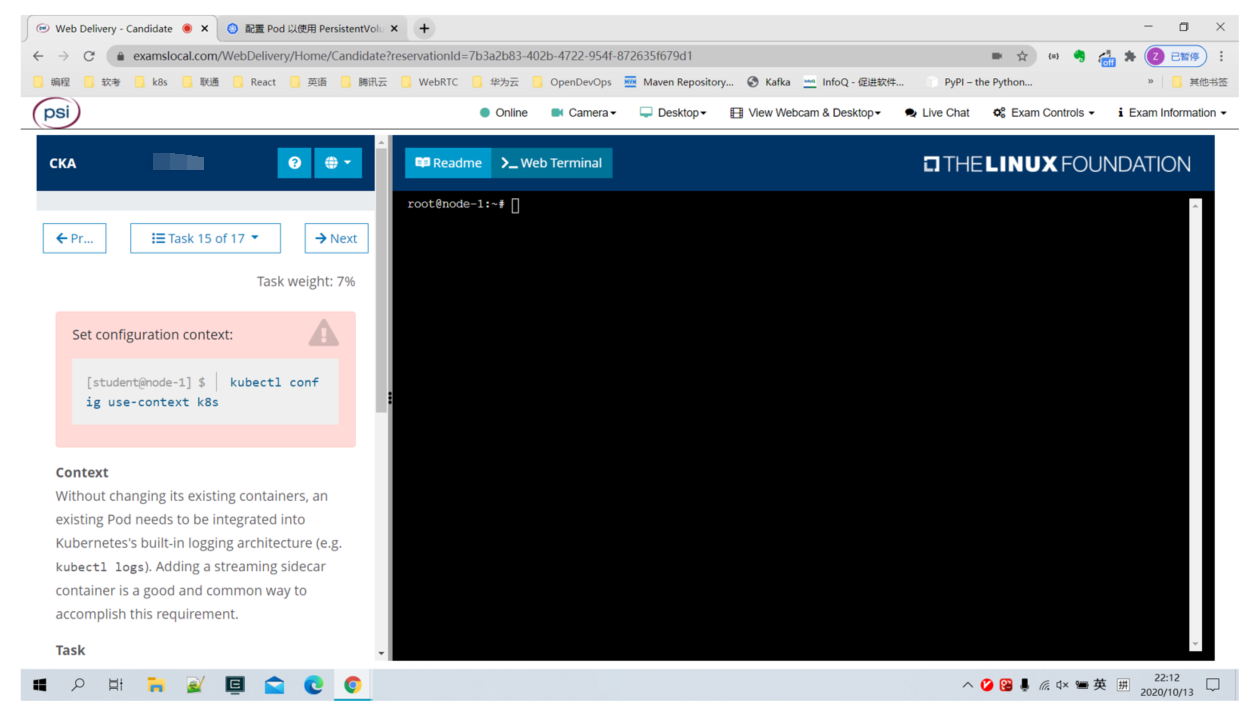

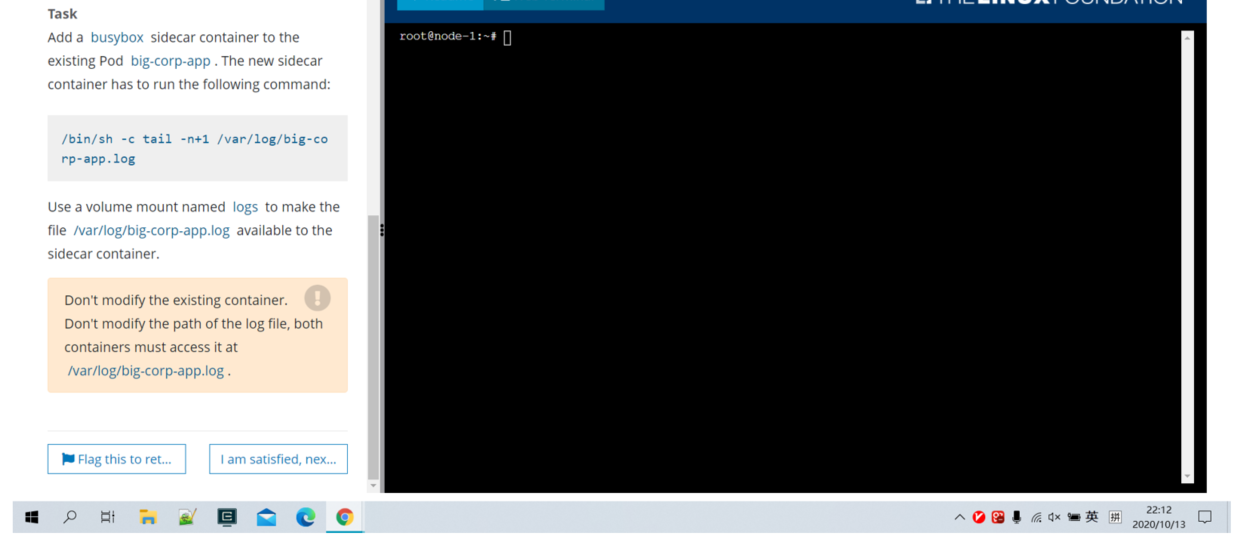

- 第十五题 Sidecar Pod

- 第十六题 使用Label查找高CPU Pod

- 第十七题 Worker节点排错

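# Deploying the Practice Environment

1) Complete the initial cluster configuration by following the steps in Chapter 1, "Environment Deployment".

2) Initialize the CKA exam practice environment:

```bash
[root@clientvm ~]# /resources/cka-exam/init-cka-env.sh
```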

# Question 1: Create a ClusterRole and Bind It to a ServiceAccount

Answer:

```bash
[root@clientvm cka-answer]# kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployments,daemonsets,statefulsets
[root@clientvm cka-answer]# kubectl create serviceaccount cicd-token -n app-team1
[root@clientvm cka-answer]# kubectl create rolebinding mybinging --clusterrole deployment-clusterrole --serviceaccount app-team1:cicd-token -n app-team1
```

Hints:

```bash
## Look up the verb and resource keywords
[root@clientvm ~]# kubectl get clusterrole edit -o yaml | more
## Look up the command syntax
[root@clientvm ~]# kubectl create clusterrole -h | head -10
```

Official documentation:

https://kubernetes.io/zh/docs/reference/access-authn-authz/rbac/

https://kubernetes.io/zh/docs/reference/access-authn-authz/authentication/
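One way to check the binding from the answer above is `kubectl auth can-i` with impersonation. The sketch below assumes the names used in the answer (`app-team1`, `cicd-token`). The helpers `sa_subject` and `check_sa_can` are hypothetical conveniences, not part of kubectl; `sa_subject` only builds the user name that Kubernetes assigns to a service account.

```shell
# Build the user name Kubernetes assigns to a ServiceAccount:
#   system:serviceaccount:<namespace>:<name>
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# check_sa_can <namespace> <serviceaccount> <verb> <resource>
# Asks the API server whether the ServiceAccount may perform the verb
# on the resource inside the namespace (hypothetical helper).
check_sa_can() {
  kubectl auth can-i "$3" "$4" -n "$1" --as="$(sa_subject "$1" "$2")"
}

# Usage (requires cluster access):
#   check_sa_can app-team1 cicd-token create deployments
#   check_sa_can app-team1 cicd-token delete deployments
```

If the RoleBinding is in place, the first call should answer `yes`. The second should answer `no` unless some other binding grants delete.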

# Question 2: Mark a Node Unavailable

Answer:

```bash
[root@clientvm cka-answer]# kubectl cordon worker1.example.com
[root@clientvm cka-answer]# kubectl drain worker1.example.com --ignore-daemonsets
```

Official documentation:

https://kubernetes.io/zh/docs/tasks/administer-cluster/safely-drain-node/

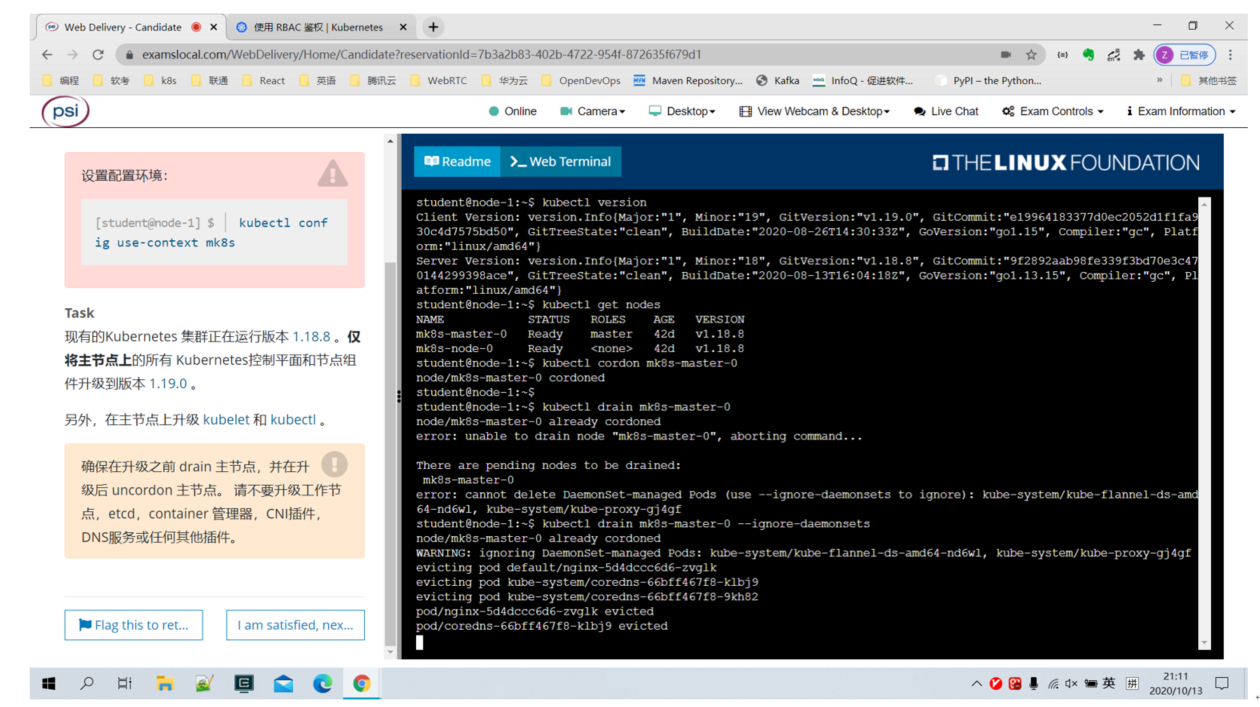

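# Question 3: Cluster Upgrade

Answer:

Reference:

https://kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

The overall flow is as follows.

On the master:

1) Upgrade kubeadm

2) Run `kubeadm upgrade plan`, then `kubeadm upgrade apply xxx` to upgrade the cluster

3) Upgrade kubelet and kubectl

4) Restart the kubelet service

The workers do not need to be upgraded.

Note: the upgrade steps differ between 1.19 and 1.20. The walkthrough below uses a 1.20 upgrade as the example: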

1) Find the versions available for the upgrade

```bash
[root@master ~]# yum list --showduplicates kubeadm
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
Installed Packages
kubeadm.x86_64        1.20.0-0        @local
Available Packages
kubeadm.x86_64        1.19.0-0        local
kubeadm.x86_64        1.19.1-0        local
kubeadm.x86_64        1.20.0-0        local
kubeadm.x86_64        1.20.1-0        local
```

2) Upgrade kubeadm

```bash
[root@master ~]# yum install kubeadm-1.20.1-0 -y
```

3) Run the upgrade plan and review the target versions

```bash
[root@master ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks.
[upgrade] Running cluster health checks
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.20.0
[upgrade/versions] kubeadm version: v1.20.1
[upgrade/versions] Latest stable version: v1.20.4
[upgrade/versions] Latest stable version: v1.20.4
[upgrade/versions] Latest version in the v1.20 series: v1.20.4
[upgrade/versions] Latest version in the v1.20 series: v1.20.4

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
kubelet     3 x v1.20.0   v1.20.4

Upgrade to the latest version in the v1.20 series:

COMPONENT                 CURRENT    AVAILABLE
kube-apiserver            v1.20.0    v1.20.4
kube-controller-manager   v1.20.0    v1.20.4
kube-scheduler            v1.20.0    v1.20.4
kube-proxy                v1.20.0    v1.20.4
CoreDNS                   1.7.0      1.7.0
etcd                      3.4.13-0   3.4.13-0

You can now apply the upgrade by executing the following command:

        kubeadm upgrade apply v1.20.4

Note: Before you can perform this upgrade, you have to update kubeadm to v1.20.4.
```

4) Apply the upgrade with the etcd upgrade disabled

```bash
[root@master ~]# kubeadm upgrade apply v1.20.1 --etcd-upgrade=false
```

5) Evict the Pods from the master and mark it unschedulable

```bash
[root@master ~]# kubectl drain master.example.com --ignore-daemonsets
[root@master ~]# kubectl get node
NAME                  STATUS                     ROLES                  AGE     VERSION
master.example.com    Ready,SchedulingDisabled   control-plane,master   4h25m   v1.20.0
worker1.example.com   Ready                      <none>                 4h25m   v1.20.0
worker2.example.com   Ready                      <none>                 4h25m   v1.20.0
```

6) Upgrade kubelet and kubectl

```bash
[root@master ~]# yum install -y kubelet-1.20.1-0 kubectl-1.20.1-0
```

7) Restart the kubelet service

```bash
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl restart kubelet.service
```

8) Make the master schedulable again

```bash
[root@master ~]# kubectl uncordon master.example.com
```

9) Verify that the master has been upgraded to 1.20.1

```bash
[root@master ~]# kubectl get node
NAME                  STATUS   ROLES                  AGE     VERSION
master.example.com    Ready    control-plane,master   4h31m   v1.20.1
worker1.example.com   Ready    <none>                 4h30m   v1.20.0
worker2.example.com   Ready    <none>                 4h30m   v1.20.0
```
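The master upgrade steps above can be collected into one script for rehearsal. This is only a sketch: the `run` and `upgrade_master` helpers are hypothetical, the `-0` package release suffix is an assumption taken from the `yum list` output above, and the script defaults to a dry run that prints each command instead of executing it.

```shell
# Print the command (DRY_RUN=1, the default here) or execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# upgrade_master <node-name> <target-version, e.g. 1.20.1>
# Follows the order used in this walkthrough: kubeadm first, then the
# control plane, then drain, kubelet/kubectl, restart, uncordon.
upgrade_master() {
  node="$1"
  ver="$2"
  run yum install -y "kubeadm-${ver}-0"
  run kubeadm upgrade plan
  run kubeadm upgrade apply "v${ver}" --etcd-upgrade=false
  run kubectl drain "$node" --ignore-daemonsets
  run yum install -y "kubelet-${ver}-0" "kubectl-${ver}-0"
  run systemctl daemon-reload
  run systemctl restart kubelet.service
  run kubectl uncordon "$node"
}

# Dry run: prints the eight commands without touching the system.
upgrade_master master.example.com 1.20.1
```

Setting `DRY_RUN=0` executes the commands for real. `kubeadm upgrade apply` then still asks for confirmation, so the script is not fully unattended.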

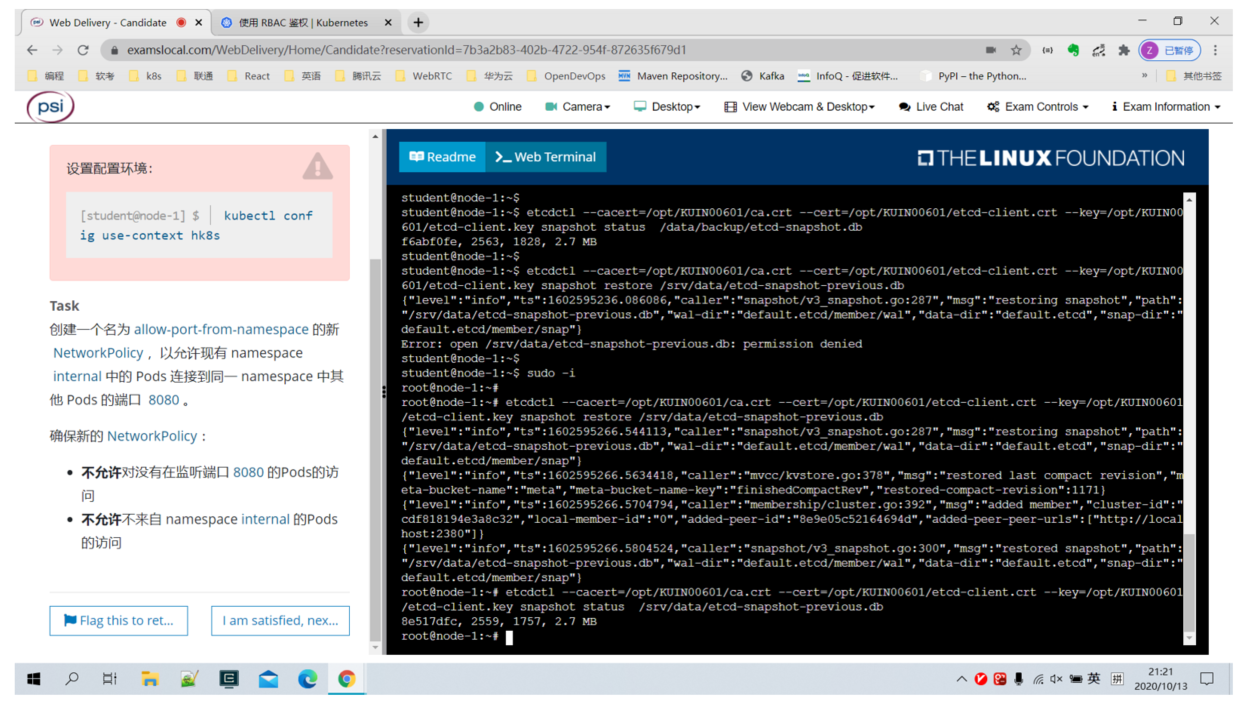

# Question 4: Back Up and Restore etcd

Answer:

When practicing, run this question on the master node.

1) sudo -i

2) Check whether the `etcdctl` command exists on the host. If it does not, copy it out of the etcd Pod. The Pod mounts the host directory `/etc/kubernetes/pki/etcd`, so a file copied there inside the container also appears on the host.

Method 1:

```bash
[root@master ~]# kubectl exec -n kube-system etcd-master.example.com -it -- sh
sh-5.0# cp /usr/local/bin/etcdctl /etc/kubernetes/pki/etcd/etcdctl
sh-5.0# exit
exit
[root@master ~]# ll /etc/kubernetes/pki/etcd/etcdctl
-r-xr-xr-x. 1 root root 17620576 Jan 10 11:20 /etc/kubernetes/pki/etcd/etcdctl
[root@master ~]# mv /etc/kubernetes/pki/etcd/etcdctl /bin/
```

3) Backup

```bash
$ ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
  --cacert=/opt/KUIN00601/ca.crt \
  --cert=/opt/KUIN00601/etcd-client.crt \
  --key=/opt/KUIN00601/etcd-client.key \
  snapshot save /data/backup/etcd-snapshot.db
## Verify
[root@master ~]# ETCDCTL_API=3 etcdctl --write-out=table snapshot status /data/backup/etcd-snapshot.db
+----------+----------+------------+------------+
|   HASH   | REVISION | TOTAL KEYS | TOTAL SIZE |
+----------+----------+------------+------------+
| 23212e4e |    96006 |       1894 |     5.5 MB |
+----------+----------+------------+------------+
```

4) Restore

- Stop API Server:移动etcd.yaml到其他目录

- Stop ETCD Server:移动kube-apiserver.yaml到其他目录

- backup ETCD data: /var/lib/etcd/

- Restore

- start ETCD SERVER and API server: 移回kube-apiserver.yaml和etcd.yaml.old到/etc/kubernetes/manifests ```bash

restore命令如下,其他步骤略

[root@master manifests]# ETCDCTL_API=3 etcdctl snapshot restore \ /srv/data/etcd-snapshot-previous.db —data-dir=”/var/lib/etcd”

验证

ETCDCTL_API=3 etcdctl —endpoints=https://127.0.0.1:2379 \

—cacert=/opt/KUIN00601/ca.crt \ —cert=/opt/KUIN00601/etcd-client.crt \ —key=/opt/KUIN00601/etcd-client.key endpoint health

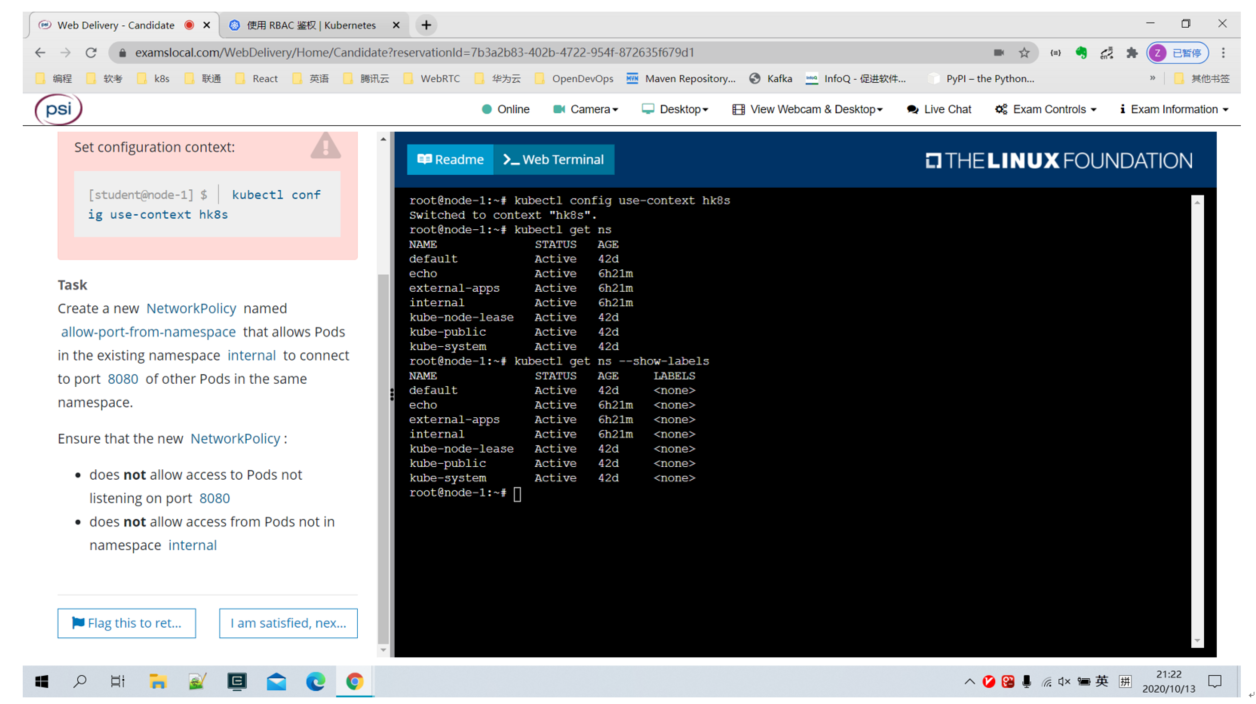

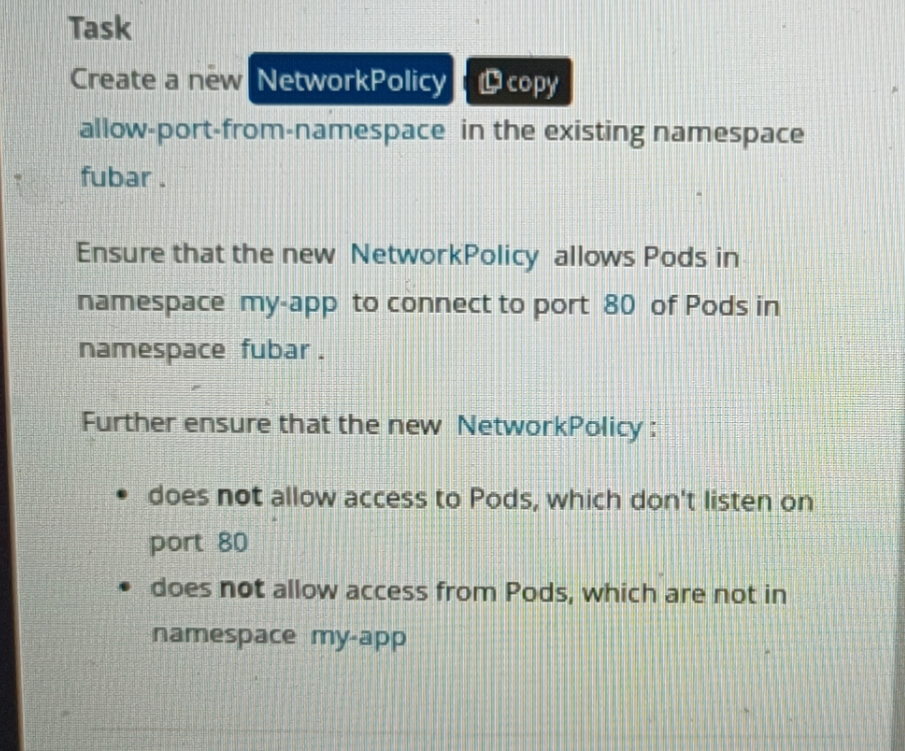

[](https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/#snapshot-using-etcdctl-options)<a name="GuJ2D"></a># 第五题 Network Policy<br />解答:<br />参考:<br />[https://kubernetes.io/zh/docs/concepts/services-networking/network-policies/](https://kubernetes.io/zh/docs/concepts/services-networking/network-policies/)

先查看internal 这个NameSpace的Label,如果没有就手动打一个

然后创建如下yaml,替换namespaceSelector

[root@clientvm cka-answer]# cat 5-networkpolicy.yaml apiVersion: networking.k8s.io/v1 kind: NetworkPolicy metadata: name: allow-port-from-namespace spec: podSelector: {} policyTypes:

- Ingress

- Egress ingress:

- from:

- namespaceSelector:

matchLabels:

ports:app: networkpolicy

- protocol: TCP port: 8080 egress:

- namespaceSelector:

matchLabels:

- to:

- namespaceSelector:

matchLabels:

ports:app: networkpolicy

- protocol: TCP

port: 8080

```[root@clientvm cka-answer]# kubectl apply -f 5-networkpolicy.yaml -n internal

- namespaceSelector:

matchLabels:

变化:

apiVersion: networking.k8s.io/v1kind: NetworkPolicymetadata:name: allow-port-from-namespace1namespace: internalspec:podSelector: {}policyTypes:- Ingressingress:- from:- namespaceSelector:matchLabels:kubernetes.io/metadata.name: my-appports:- protocol: TCPport: 80
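The "check the namespace label, add one if it is missing" step described above could be scripted roughly as follows. This is a sketch under assumptions: `has_label` and `ensure_ns_label` are hypothetical helper names, and the label `app=networkpolicy` is simply the one used in the first manifest above.

```shell
# has_label <comma-separated labels> <key=value>
# Succeeds only when the exact key=value pair is in the list, which is
# the format of the LABELS column printed by `kubectl get --show-labels`.
has_label() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -qxF -- "$2"
}

# ensure_ns_label <namespace> <key=value>
# Reads the namespace's labels (last column of the listing) and adds
# the label only when it is not already present.
ensure_ns_label() {
  labels="$(kubectl get namespace "$1" --no-headers --show-labels | awk '{print $NF}')"
  has_label "$labels" "$2" || kubectl label namespace "$1" "$2"
}

# Usage (requires cluster access):
#   ensure_ns_label internal app=networkpolicy
```

Matching whole `key=value` entries avoids false positives such as `myapp=networkpolicy` when looking for `app=networkpolicy`.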

# Question 6: Deployment

Answer:

1) Add the port

```bash
## Edit the front-end Deployment and add the following "ports" section to the container spec
[root@clientvm cka-answer]# kubectl edit deployments.apps front-end
......
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
```

2) Create the Service

```bash
[root@clientvm cka-exam]# kubectl expose deployment front-end --port=80 --target-port=80 --name front-end-svc --type NodePort
```

Reference YAML:

```bash
[root@clientvm cka-answer]# cat 6-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: front-end
  name: front-end-svc
spec:
  type: NodePort
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: front-end
[root@clientvm cka-answer]# kubectl apply -f 6-service.yaml
```

Hints:

```bash
[root@clientvm cka-exam]# kubectl explain pod.spec.containers.ports
[root@clientvm cka-answer]# kubectl expose deployment front-end --port=80 --target-port=80 -o yaml --dry-run=client
[root@clientvm cka-exam]# kubectl explain service.spec
```

Official documentation:

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#expose

# Question 7: Ingress

Reference:

https://kubernetes.io/docs/concepts/services-networking/ingress/#the-ingress-resource

Answer:

```bash
[root@clientvm cka-answer]# cat 7-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ping
  namespace: ing-internal
  ## The annotation below is not needed in this example. In the exam, whether
  ## it is needed depends on the web content directory served by the Pod.
  # annotations:
  #   nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - pathType: Prefix
        path: "/hi"
        backend:
          service:
            name: hi
            port:
              number: 5678
[root@clientvm cka-answer]# kubectl apply -f 7-ingress.yaml
ingress.networking.k8s.io/ping created
```

Verify:

```bash
[root@clientvm cka-answer]# kubectl get svc -n ingress-nginx
NAME                       TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller   LoadBalancer   10.99.233.39   <pending>     80:30507/TCP,443:31963/TCP   43h
[root@clientvm cka-exam]# curl NodeIP:30507/hi/
hi
```

# Question 8: Scale a Deployment

Answer:

```bash
[root@clientvm cka-exam]# kubectl get deployments.apps
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
front-end      2/2     2            2           43h
presentation   1/1     1            1           43h
[root@clientvm cka-exam]# kubectl scale deployment presentation --replicas=3
deployment.apps/presentation scaled
[root@clientvm cka-exam]# kubectl get deployments.apps
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
front-end      2/2     2            2           43h
presentation   3/3     3            3           43h
```

Official documentation:

https://kubernetes.io/docs/concepts/workloads/controllers/deployment/#scaling-a-deployment

# Question 9: Pod Scheduling

Generate a starting manifest and look up the field names:

```bash
[root@clientvm cka-exam]# kubectl run nginx-kusc00401 --image=nginx --image-pull-policy=IfNotPresent -o yaml --dry-run=client
[root@clientvm cka-answer]# kubectl explain pod.spec
```

Answer:

```bash
[root@clientvm cka-exam]# cat 9-pod-selector.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx-kusc00401
  name: nginx-kusc00401
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: nginx-kusc00401
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
  nodeSelector:
    disk: spinning
[root@clientvm cka-exam]# kubectl apply -f 9-pod-selector.yaml
[root@clientvm cka-exam]# kubectl get pod nginx-kusc00401 -o wide
NAME              READY   STATUS    RESTARTS   AGE    IP               NODE                  NOMINATED NODE   READINESS GATES
nginx-kusc00401   1/1     Running   0          2m9s   10.244.102.184   worker1.example.com   <none>           <none>
```

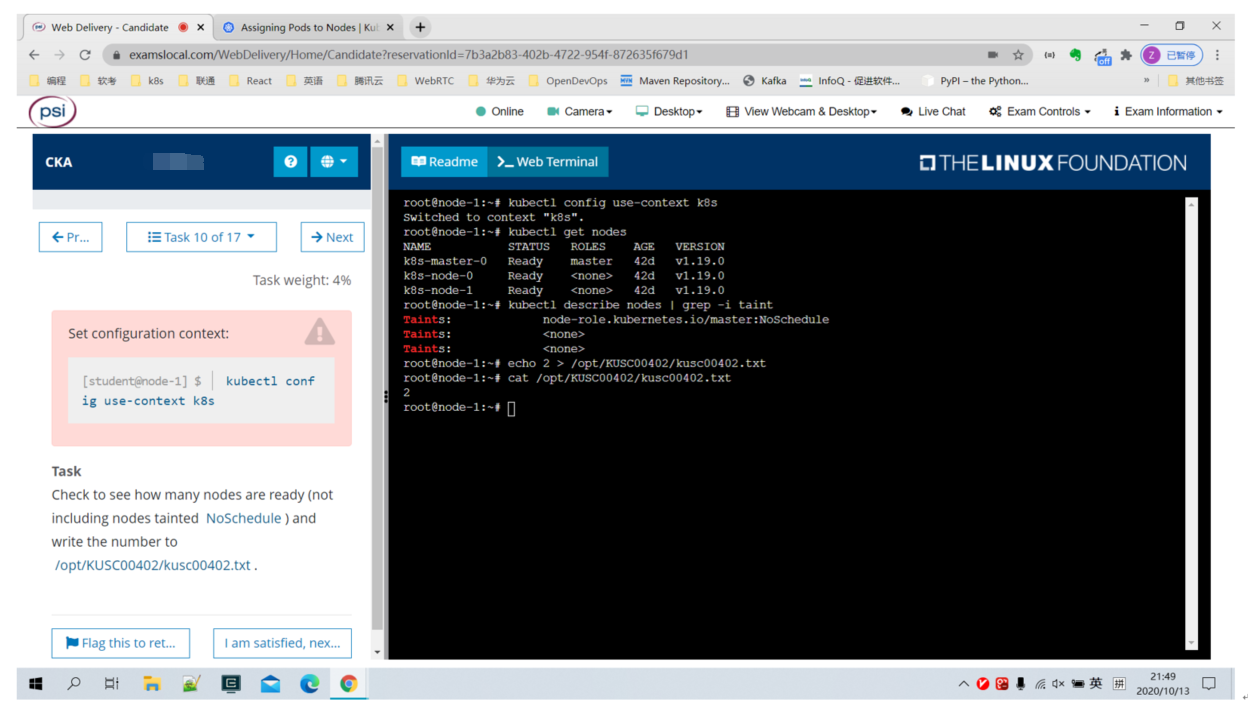

# Question 10: Count Tainted Nodes

Answer:

```bash
[root@clientvm cka-answer]# kubectl get node
NAME                  STATUS   ROLES    AGE   VERSION
master.example.com    Ready    master   12d   v1.19.1
worker1.example.com   Ready    <none>   12d   v1.19.1
worker2.example.com   Ready    <none>   12d   v1.19.1
[root@clientvm cka-answer]# kubectl describe nodes | grep -i taint
Taints:             node-role.kubernetes.io/master:NoSchedule
Taints:             <none>
Taints:             <none>
[root@clientvm cka-answer]# echo 2 >/opt/KUSC00402/kusc00402.txt
```

Of the three Ready nodes, one carries a `NoSchedule` taint and two carry no taint, so the value written to the file is 2.
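The count can also be derived from the `describe` output rather than read by eye. The sketch below assumes the task is to count nodes without a `NoSchedule` taint, which is what the answer of 2 above implies. `count_schedulable_nodes` is a hypothetical helper name.

```shell
# Read `kubectl describe nodes` output on stdin and count the nodes
# whose "Taints:" line does not mention NoSchedule.
# Limitations: only the first taint of a node appears on the "Taints:"
# line itself, and node readiness is not checked here.
count_schedulable_nodes() {
  grep -i '^Taints:' | grep -vc 'NoSchedule'
}

# Usage (requires cluster access):
#   kubectl describe nodes | count_schedulable_nodes > /opt/KUSC00402/kusc00402.txt
```

Because of the limitations noted in the comments, compare the result against `kubectl get node` before writing the answer file.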

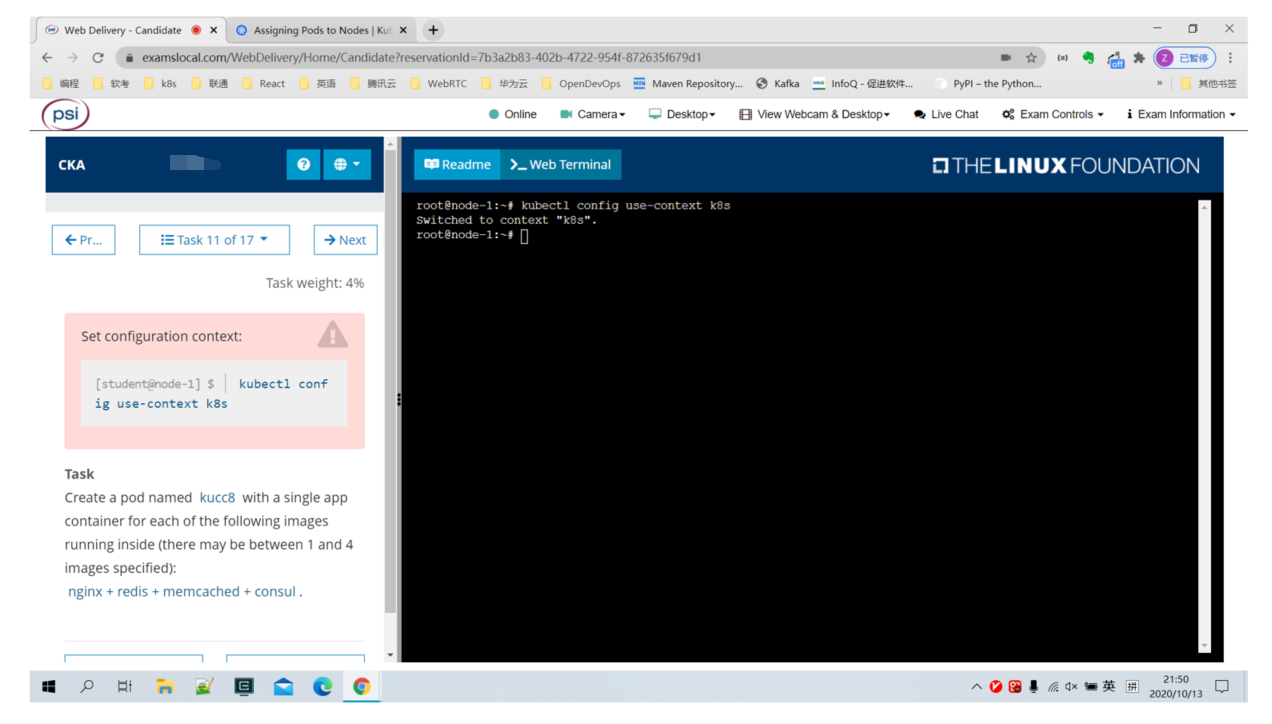

# Question 11: Multi-Container Pod

Answer:

```bash
[root@clientvm cka-answer]# cat 11-multi-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: kucc8
  name: kucc8
spec:
  containers:
  - image: nginx
    name: nginx
    imagePullPolicy: IfNotPresent
  - image: redis
    name: redis
    imagePullPolicy: IfNotPresent
  - image: memcached
    name: memcached
    imagePullPolicy: IfNotPresent
  - image: consul
    name: consul
    imagePullPolicy: IfNotPresent
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
[root@clientvm cka-answer]# kubectl apply -f 11-multi-pod.yaml
pod/kucc8 created
[root@clientvm cka-answer]# kubectl get pod kucc8
NAME    READY   STATUS    RESTARTS   AGE
kucc8   4/4     Running   0          2m8s
```

Hint:

```bash
[root@clientvm cka-answer]# kubectl run kucc8 --image=nginx -o yaml --dry-run=client >11-multi-pod.yaml
```

Official documentation:

https://kubernetes.io/zh/docs/concepts/workloads/pods/init-containers/

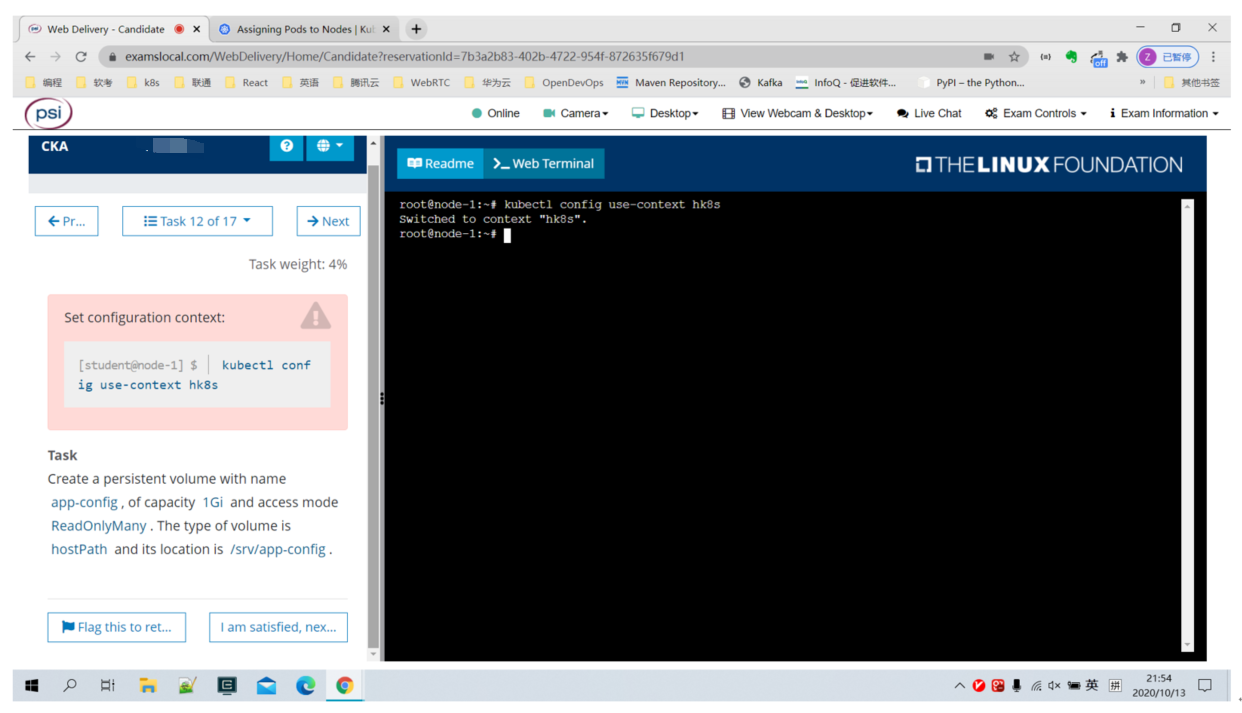

# Question 12: Create a hostPath PV

Answer:

```bash
[root@clientvm cka-answer]# cat 12-hostpath-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-config
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
  - ReadOnlyMany
  hostPath:
    path: "/srv/app-config"
    type: DirectoryOrCreate
[root@clientvm cka-answer]# kubectl apply -f 12-hostpath-pv.yaml
persistentvolume/app-config created
[root@clientvm cka-answer]# kubectl get pv
NAME         CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
app-config   1Gi        ROX            Retain           Available           manual                  22s
```

Reference:

https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-persistent-volume-storage/

# Question 13: PV and PVC

Answer:

Reference:

https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-persistent-volume-storage/

1) Create

```bash
[root@clientvm cka-answer]# cat 13-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-volume
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: csi-hostpath-sc
  resources:
    requests:
      storage: 10Mi
[root@clientvm cka-answer]# cat 13-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-server
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: pv-volume
  containers:
  - name: task-pv-container
    image: nginx
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage
[root@clientvm cka-answer]# kubectl apply -f 13-pvc.yaml
persistentvolumeclaim/pv-volume created
[root@clientvm cka-answer]# kubectl apply -f 13-pod.yaml
pod/web-server created
[root@clientvm cka-answer]# kubectl get pv,pvc
NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS      REASON   AGE
persistentvolume/app-config                                 1Gi        ROX            Retain           Available                       manual                     25m
persistentvolume/pvc-3423e535-6a63-45e5-bde7-e915d4a952a7   10Mi       RWO            Delete           Bound       default/pv-volume   csi-hostpath-sc            82s

NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
persistentvolumeclaim/pv-volume   Bound    pvc-3423e535-6a63-45e5-bde7-e915d4a952a7   10Mi       RWO            csi-hostpath-sc   7m39s
```

2) Edit

```bash
[root@clientvm cka-answer]# kubectl edit pvc pv-volume --record=true
......
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 70Mi
```

Official documentation:

https://kubernetes.io/zh/docs/concepts/storage/dynamic-provisioning/

https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-persistent-volume-storage/#%E5%88%9B%E5%BB%BA-pod

# Question 14: Search Pod Logs

Answer:

```bash
[root@clientvm cka-answer]# kubectl logs bar | grep "unable-to-access-website" >/opt/KUTR00101/bar
```

# Question 15: Sidecar Pod

Answer:

This question has a small catch that depends on the exam environment. The container list of a running Pod cannot be changed in place, so delete the existing Pod (`kubectl delete pod big-corp-app`) before applying the edited manifest.

```bash
[root@clientvm ~]# kubectl get pod big-corp-app -o yaml > 15-sidecar-pod.yaml
[root@clientvm ~]# vim 15-sidecar-pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: big-corp-app
spec:
  containers:
  - args:
    - -c
    - while true; do echo echo $(date -u) 'Hi I am from Sidecar container' >> /var/log/big-corp-app.log; sleep 5;done
    command:
    - /bin/sh
    image: busybox
    imagePullPolicy: IfNotPresent
    name: big-corp-app
    resources: {}
    volumeMounts:
    - mountPath: /var/log
      name: logs
  - name: sidecar
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh"]
    args: ["-c", "tail -n+1 -f /var/log/big-corp-app.log"]
    volumeMounts:
    - mountPath: /var/log
      name: logs
  dnsPolicy: Default
  enableServiceLinks: true
  nodeName: worker2.example.com
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  volumes:
  - emptyDir: {}
    name: logs
```

```bash
[root@clientvm ~]# kubectl apply -f 15-sidecar-pod.yaml
[root@clientvm ~]# kubectl get pod
NAME           READY   STATUS    RESTARTS   AGE
bar            1/1     Running   0          3h56m
big-corp-app   2/2     Running   0          98s
```

Reference:

https://kubernetes.io/zh/docs/concepts/cluster-administration/logging/

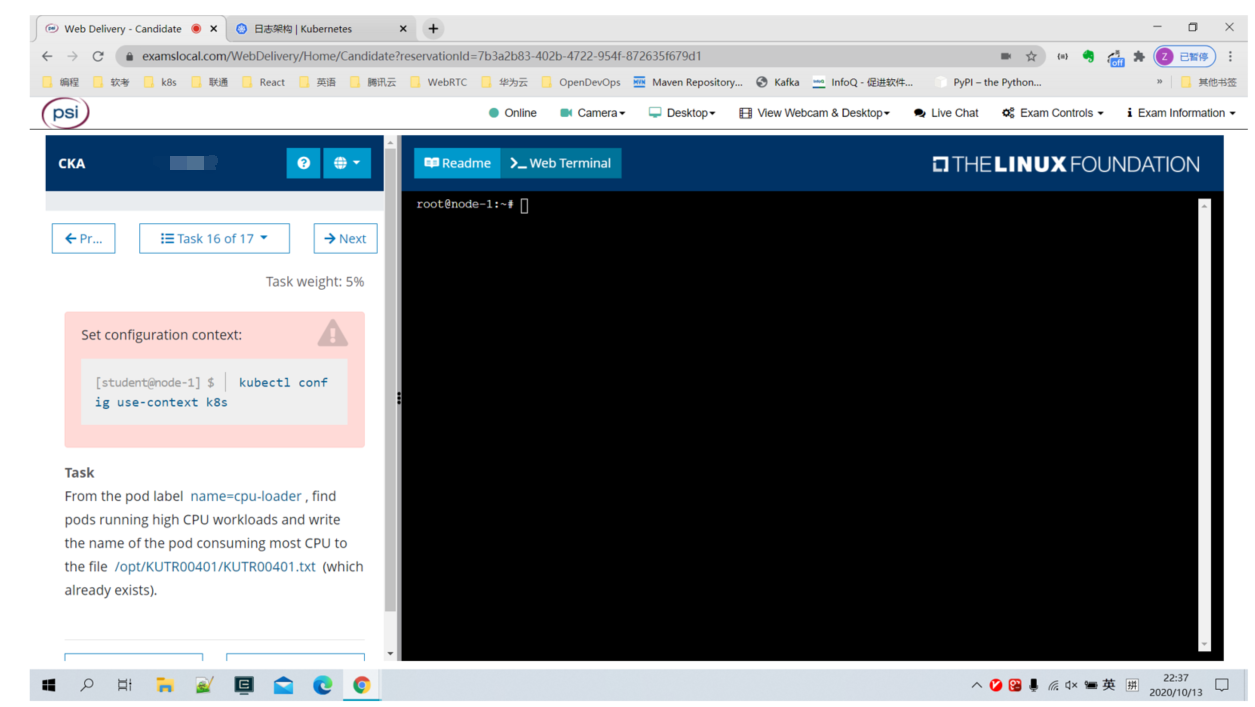

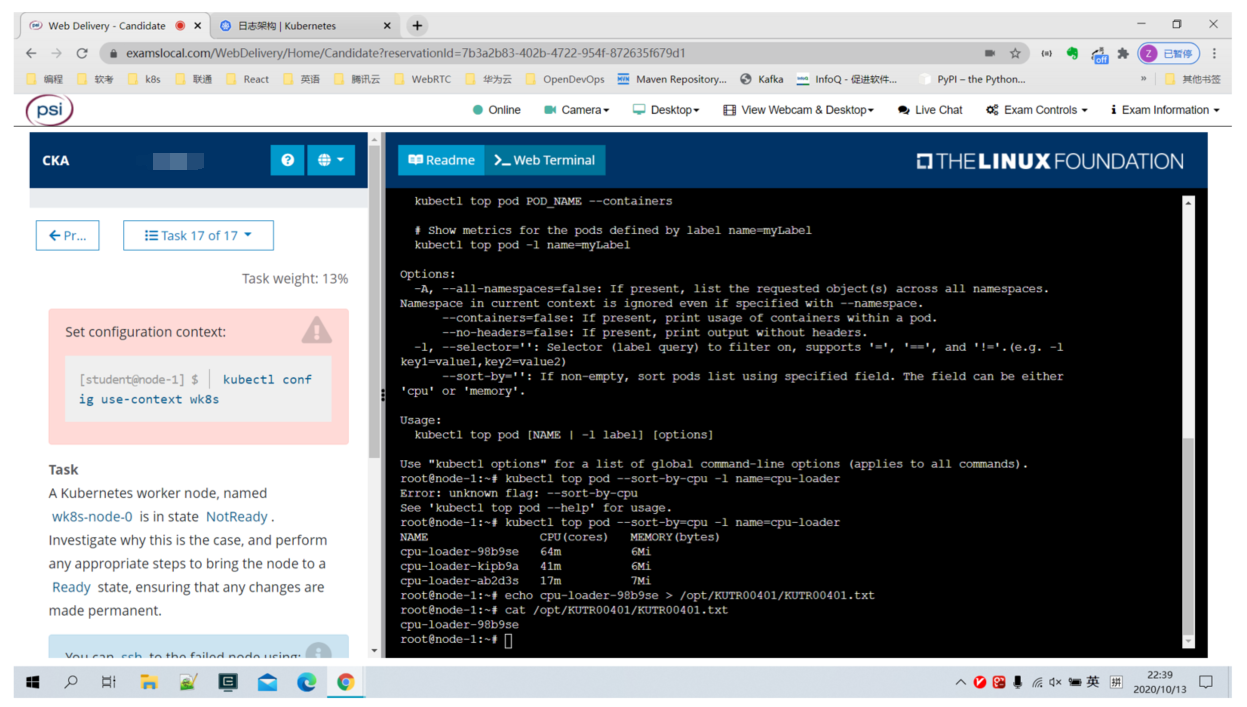

# Question 16: Find the High-CPU Pod by Label

Answer:

```bash
[root@clientvm cka-answer]# kubectl top pod -A -l name=cpu-loader --sort-by cpu
NAMESPACE   NAME         CPU(cores)   MEMORY(bytes)
default     cpu-demo-2   1500m        0Mi
default     cpu-demo-1   801m         0Mi
default     cpu-demo-3   502m         0Mi
[root@clientvm cka-answer]# echo cpu-demo-2 >/opt/KUTR00401/kutr00401.txt
```
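To avoid retyping the Pod name, the top entry can be extracted from the `kubectl top` output. The sketch below assumes the four-column layout shown above (as printed with `-A`) and CPU values reported in millicores. `top_cpu_pod` is a hypothetical helper name.

```shell
# Read `kubectl top pod -A --no-headers` output on stdin
# (columns: NAMESPACE NAME CPU(cores) MEMORY(bytes)) and print the name
# of the pod with the largest CPU value. awk converts "1500m" to 1500
# by taking the leading number, so all rows must use the same unit.
top_cpu_pod() {
  awk 'NR == 1 || $3 + 0 > max { max = $3 + 0; name = $2 } END { print name }'
}

# Usage (requires cluster access and metrics-server):
#   kubectl top pod -A -l name=cpu-loader --no-headers | top_cpu_pod > /opt/KUTR00401/kutr00401.txt
```

Scanning every row means the helper does not rely on the output being sorted.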

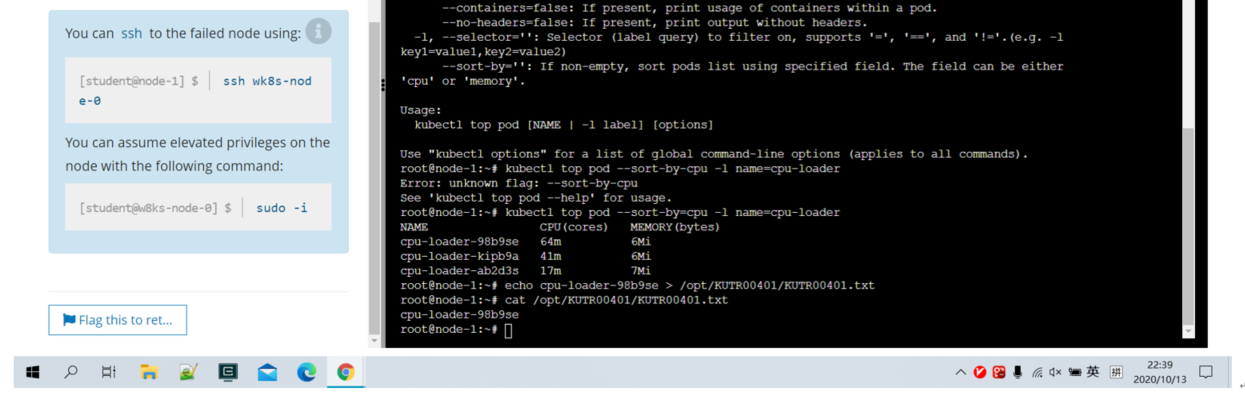

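# Question 17: Troubleshoot a Worker Node

Answer:

The fix depends on the exam environment. Usually the kubelet service is not running, so it is enough to start the service and enable it at boot.

```bash
# ssh wk8s-node-0
# sudo -i
# systemctl status kubelet
# systemctl start kubelet
# systemctl enable kubelet
```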