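Prerequisites

- CentOS base environment configuration

- JDK environment setup

- Passwordless SSH login

- ZooKeeper cluster (needed when running in HA mode)

See [CentOS Base Environment], [JDK && SSH Environment Setup], and [ZooKeeper Cluster Environment].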

Hadoop base environment

Server plan

| IP address | Hostname | Notes |
|---|---|---|
| 172.16.179.150 | master01 | Master node |
| 172.16.179.151 | master02 | Master node (standby) |
| 172.16.179.160 | node01 | Worker node |
| 172.16.179.161 | node02 | Worker node |

Set the IP address and hostname

Run this on each machine, using that machine's own hostname:

hostnamectl set-hostname master01

vim /etc/hosts

172.16.179.150 master01
172.16.179.151 master02
172.16.179.160 node01
172.16.179.161 node02
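Editing /etc/hosts by hand on four machines is easy to get wrong. The following is a minimal sketch, not part of the original steps, that appends the four entries and skips any hostname that is already mapped. The function name `add_cluster_hosts` and its optional file argument are assumptions made for illustration.

```shell
# Append the cluster's host entries to a hosts file, skipping names already present.
# The file defaults to /etc/hosts; pass another path to try it on a scratch copy.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local ip name
  while read -r ip name; do
    # Only append when no existing line already maps this hostname.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
172.16.179.150 master01
172.16.179.151 master02
172.16.179.160 node01
172.16.179.161 node02
EOF
}
```

Run `add_cluster_hosts` as root on each machine. Because existing names are skipped, running it a second time leaves the file unchanged.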

Directory plan

- /opt/bigdata: software installation directory

- /var/bigdata: data storage directory
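`tar -C` expects its target directory to exist, so both directories should be created on every node before extracting anything. A minimal sketch follows; the function name `make_bigdata_dirs` and its optional prefix argument (useful for a trial run outside the real root) are assumptions, not part of the original steps.

```shell
# Create the installation and data directories.
# An optional prefix lets you rehearse the layout under a scratch directory.
make_bigdata_dirs() {
  local prefix="${1:-}"
  mkdir -p "${prefix}/opt/bigdata" "${prefix}/var/bigdata"
}
```

Calling `make_bigdata_dirs` with no argument creates /opt/bigdata and /var/bigdata.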

Download and extract Hadoop

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.10.1/hadoop-2.10.1.tar.gz

tar -zxvf hadoop-2.10.1.tar.gz -C /opt/bigdata

cd /opt/bigdata

ln -s hadoop-2.10.1 hadoop

Configure the Hadoop command environment

vim /etc/profile.d/hadoop_env.sh

#set hadoop environment
export HADOOP_HOME=/opt/bigdata/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Distribute the configuration to the other nodes

scp /etc/profile.d/hadoop_env.sh root@172.16.179.160:/etc/profile.d/

JDK environment configuration

vim hadoop-env.sh

```shell
# JDK environment
export JAVA_HOME=/usr/java/default

# Hadoop user settings (required on 3.x; the services will not start without them)
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export HDFS_ZKFC_USER=root
export HDFS_JOURNALNODE_USER=root

export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

<a name="ssiUd"></a>

# Cluster configuration

<a name="rY7mh"></a>

## vim core-site.xml

```xml
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://master01:9000</value>
</property>
```

vim hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/var/bigdata/hadoop/full/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/var/bigdata/hadoop/full/dfs/data</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node01:50090</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/var/bigdata/hadoop/full/dfs/secondary</value>

</property>

vim slaves
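The slaves file lists one DataNode host per line. As a sketch, assuming the two worker nodes from the server plan (node01 and node02) are the DataNodes, the file can be written non-interactively. The function name `write_worker_list` is hypothetical.

```shell
# Write the DataNode host list that start-dfs.sh reads.
# The target path is an argument, so the caller decides which file to write.
write_worker_list() {
  local target="$1"
  cat > "$target" <<'EOF'
node01
node02
EOF
}
```

A typical call is `write_worker_list "$HADOOP_HOME/etc/hadoop/slaves"`, run on the node where start-dfs.sh will be executed.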

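Starting and verifying the services

- Format the NameNode: hdfs namenode -format

  This creates an empty FsImage and only needs to be done once.

- Start the services: start-dfs.sh

  The first start creates the DataNode and SecondaryNameNode data directories.

- Stop the services: stop-dfs.sh

- Verify the services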

hdfs dfs -mkdir -p /user/root

hdfs dfs -put demo.gz /user/root

http://master01:50070

HA cluster configuration (recommended)

Machine plan

| HOST | NN | JNN | DN | ZKFC | ZK |
|---|---|---|---|---|---|
| master01 | name | JNN | | zkfc | zk |
| master02 | name | | | zkfc | |
| node01 | | JNN | data | | zk |
| node02 | | JNN | data | | zk |

vim core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>master01:2181,node01:2181,node02:2181</value>

</property>

vim hdfs-site.xml

Note: from 3.x onward the NameNode web UI port changes from 50070 to 9870, so master01:50070 becomes master01:9870.

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/var/bigdata/hadoop/ha/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/var/bigdata/hadoop/ha/dfs/data</value>

</property>

<!-- Map the nameservice to its NameNodes (one-to-many) -->

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>master01:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>master02:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>master01:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>master02:50070</value>

</property>

<!-- Where the JournalNodes run and where they store edits -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master01:8485;node01:8485;node02:8485/mycluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/var/bigdata/hadoop/ha/dfs/jndata</value>

</property>

<!-- Client failover proxy class and fencing method; fencing uses passwordless SSH -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_dsa</value>

</property>

<!-- Enable automatic failover, which requires ZKFC to be running -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

vim slaves (workers on 3.x)

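Starting the services

- Configure passwordless SSH; see [JDK && SSH Environment Setup]

- HA depends on ZooKeeper, so set up the ZooKeeper cluster first; see [ZooKeeper Environment Setup]

- Edit core-site.xml and hdfs-site.xml

- Distribute the changed configuration to the other nodes: scp core-site.xml hdfs-site.xml node02:$(pwd)

- Start the JournalNodes one by one: hadoop-daemon.sh start journalnode

  hdfs --daemon start journalnode (3.x)

- Pick one NameNode and format it (once only): hdfs namenode -format

- Start the formatted NameNode so that the other NameNode can sync from it: hadoop-daemon.sh start namenode

  hdfs --daemon start namenode (3.x)

- On the other NameNode, run: hdfs namenode -bootstrapStandby

- Format the ZKFC state in ZooKeeper (once only): hdfs zkfc -formatZK

- Start: start-dfs.sh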
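The order of the first-time start-up matters, and the daemon commands differ between 2.x and 3.x. The sketch below only prints the sequence as `host: command` lines and runs nothing. It assumes the hosts from the machine plan: JournalNodes on master01, node01, and node02; the NameNode to format on master01; the standby NameNode on master02. The function name `ha_bootstrap_plan` and its major-version argument are hypothetical.

```shell
# Print the first-time HA start-up sequence as "host: command" lines (dry run only).
# Argument: Hadoop major version, 2 (default) or 3.
ha_bootstrap_plan() {
  local major="${1:-2}"
  local start_jn start_nn jn
  if [ "$major" -ge 3 ]; then
    start_jn="hdfs --daemon start journalnode"
    start_nn="hdfs --daemon start namenode"
  else
    start_jn="hadoop-daemon.sh start journalnode"
    start_nn="hadoop-daemon.sh start namenode"
  fi
  # JournalNodes must be up before the NameNode is formatted.
  for jn in master01 node01 node02; do
    echo "$jn: $start_jn"
  done
  echo "master01: hdfs namenode -format"
  echo "master01: $start_nn"
  # The standby copies its metadata from the running, formatted NameNode.
  echo "master02: hdfs namenode -bootstrapStandby"
  echo "master01: hdfs zkfc -formatZK"
  echo "master01: start-dfs.sh"
}
```

Running `ha_bootstrap_plan 3` gives a checklist to work through by hand. The format and formatZK steps belong to the first start only and should not be repeated on later restarts.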

Verifying the services

Web UI check:

http://172.16.179.150:9870/ (3.x)

http://172.16.179.150:50070/ (2.x)

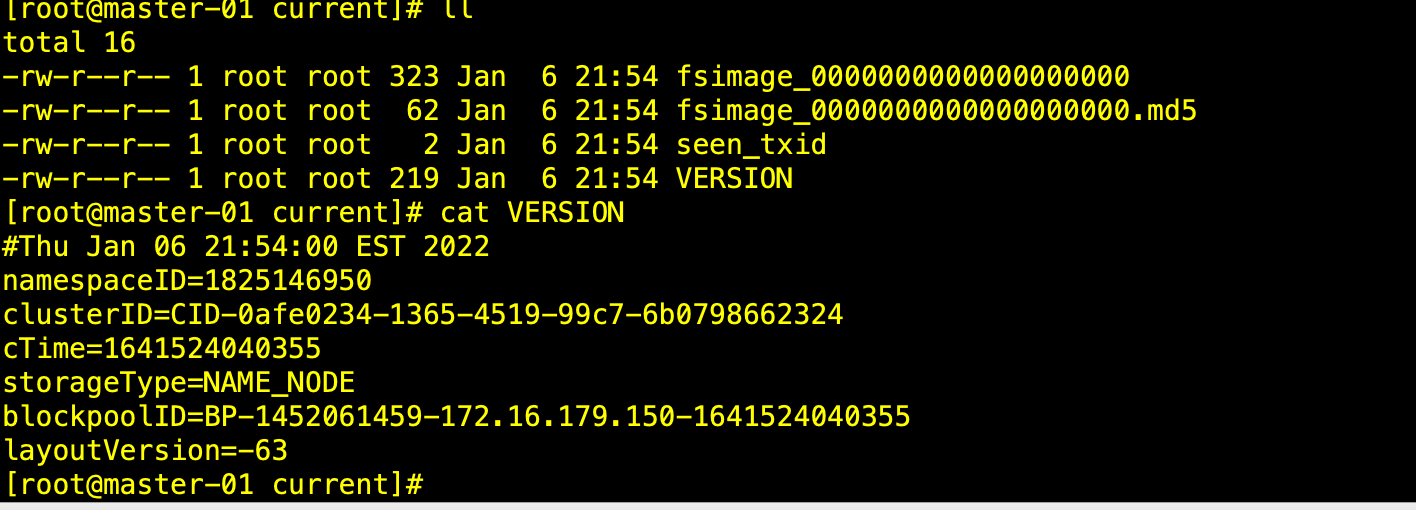

Primary NameNode initialized successfully

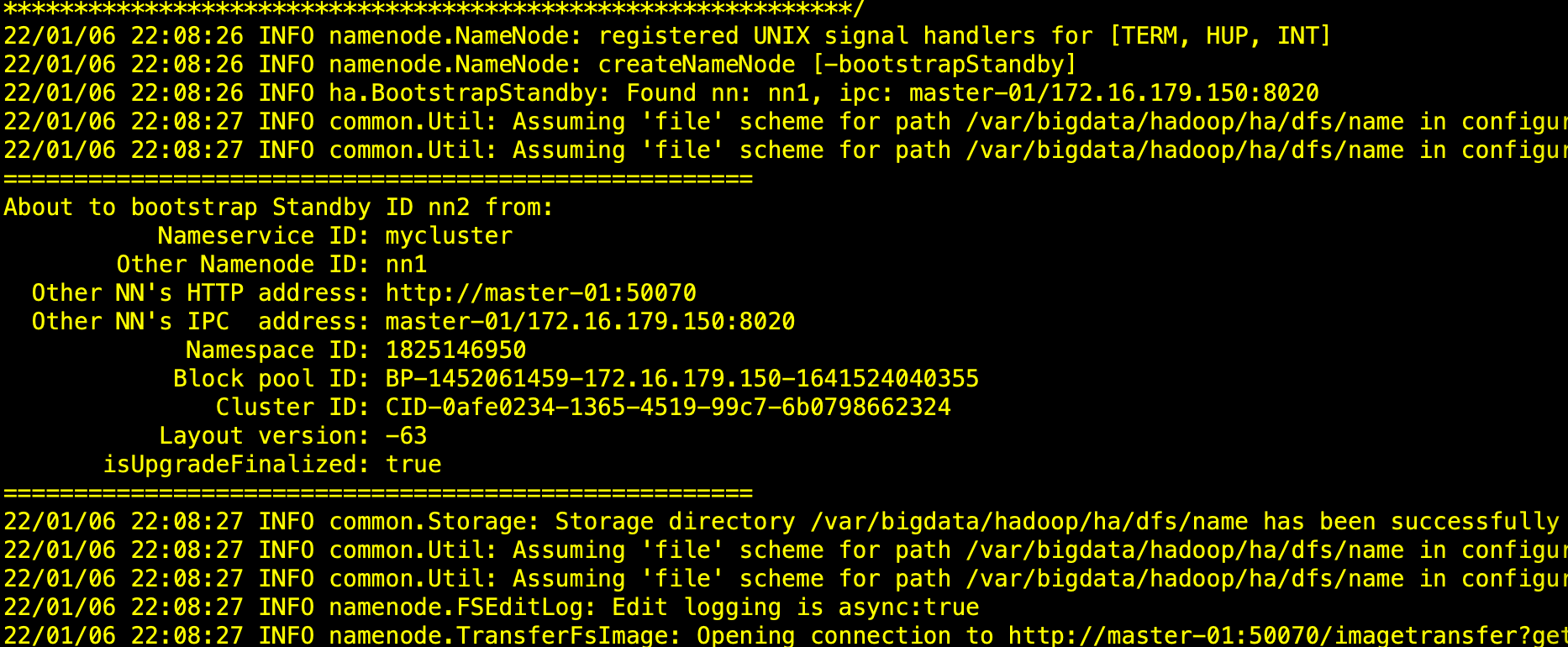

Standby NameNode synchronized successfully

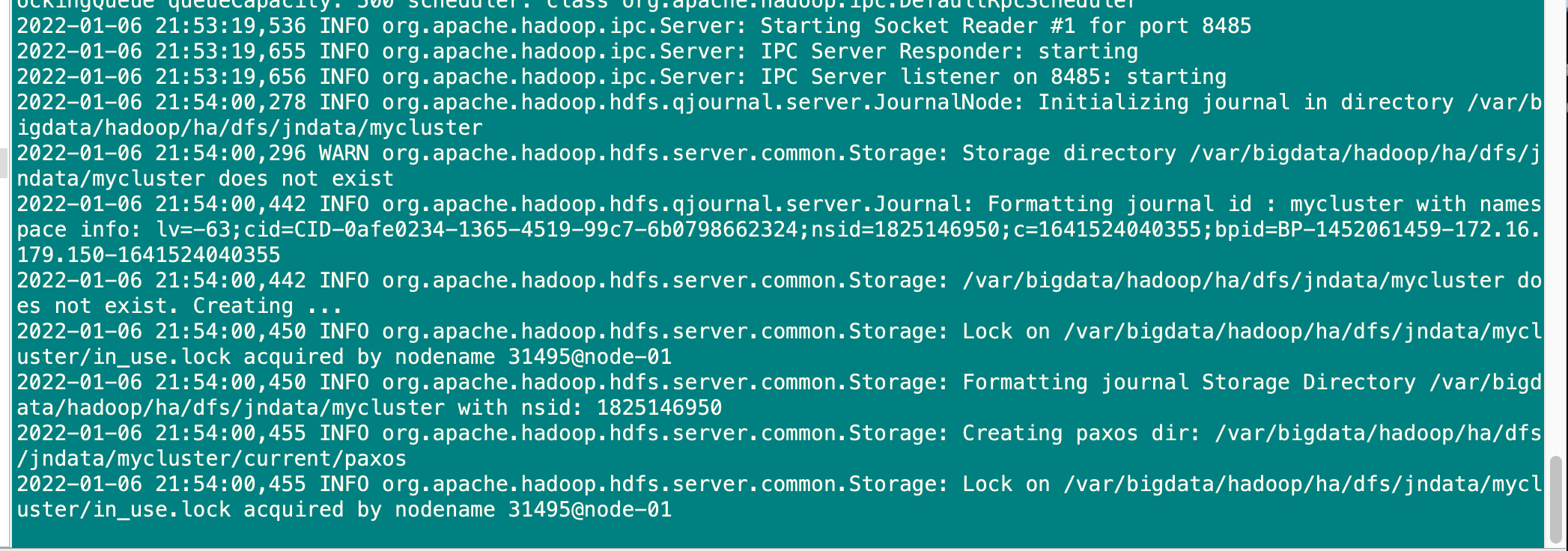

JournalNode initialized successfully

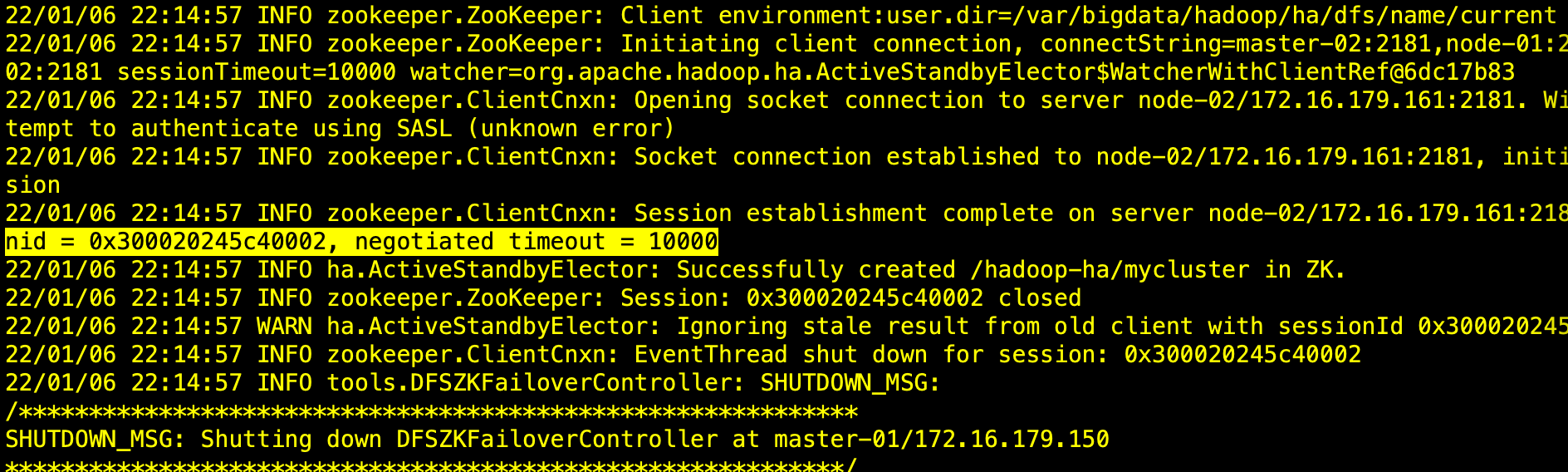

ZKFC initialized successfully

Check the lock: zkCli.sh

ls /hadoop-ha/mycluster

get /hadoop-ha/mycluster/ActiveStandbyElectorLock
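Besides inspecting the lock in ZooKeeper, the NameNode roles can be queried directly with `hdfs haadmin -getServiceState`, which prints `active` or `standby` for a given NameNode ID. The helper below is a small sketch with a hypothetical name, `exactly_one_active`. It takes the reported states as arguments and succeeds only when exactly one of them is `active`.

```shell
# Succeed only when exactly one of the given states is "active".
exactly_one_active() {
  local state active=0
  for state in "$@"; do
    if [ "$state" = "active" ]; then
      active=$((active + 1))
    fi
  done
  [ "$active" -eq 1 ]
}

# On a running cluster (commented out so the block is safe to source anywhere):
# exactly_one_active "$(hdfs haadmin -getServiceState nn1)" \
#                    "$(hdfs haadmin -getServiceState nn2)" \
#   && echo "HA roles look healthy"
```

The IDs nn1 and nn2 are the ones declared in dfs.ha.namenodes.mycluster.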

Troubleshooting

DataNode does not start after running start-dfs.sh

Cause: data left over from an earlier test deployment. Clear it on every DataNode:

rm -rf /tmp/hadoop-root/dfs/data/*

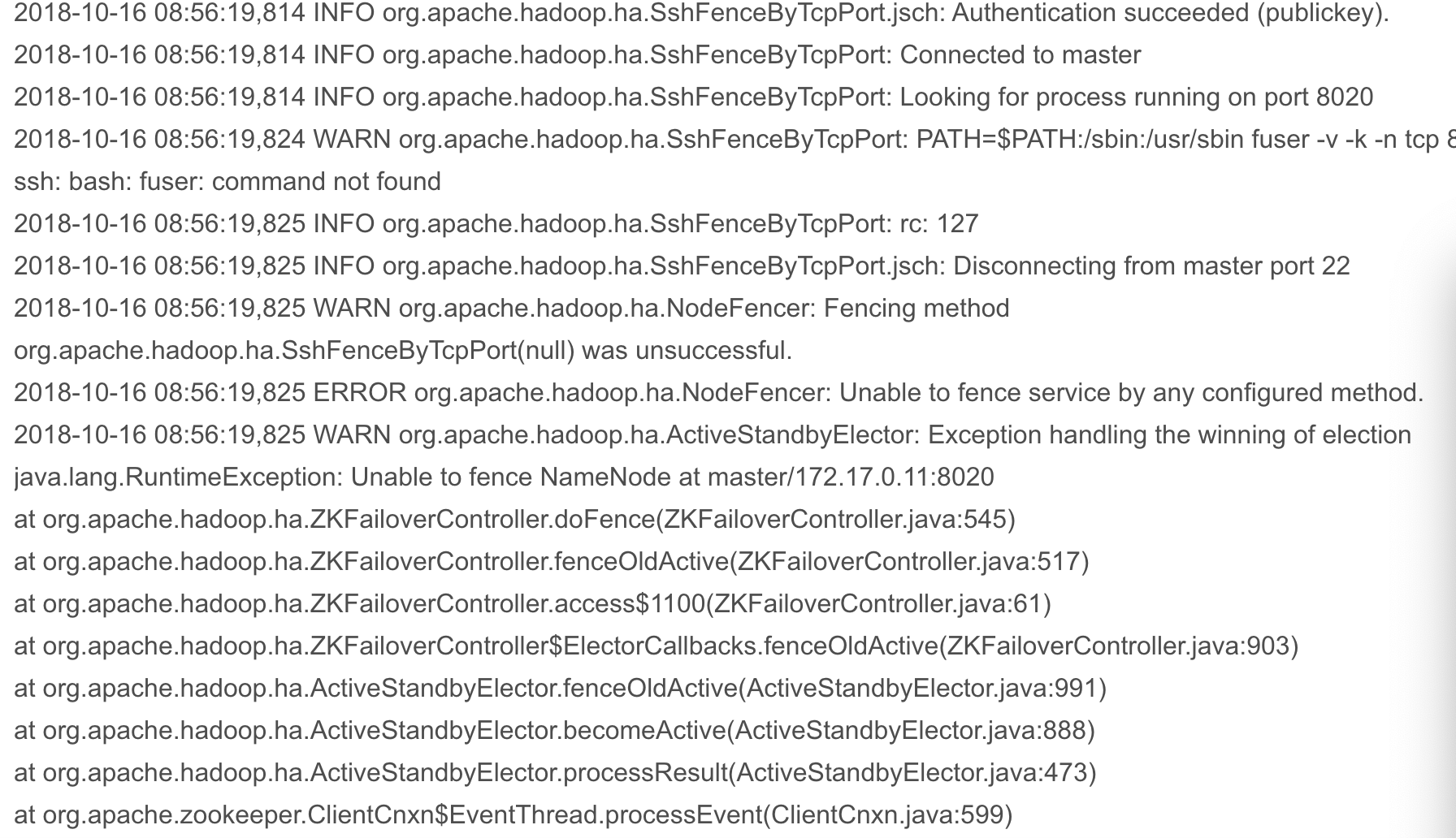
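One common way leftover data blocks a DataNode is a clusterID mismatch: both the NameNode and the DataNode record a `clusterID=` line in `current/VERSION` under their storage directories, and a DataNode carrying an ID from an earlier format will not join the new cluster. Whether that is the exact cause here is an assumption. The sketch below, with hypothetical function names, reads and compares the IDs before anything is deleted.

```shell
# Print the clusterID recorded in a storage directory's current/VERSION file.
cluster_id_of() {
  sed -n 's/^clusterID=//p' "$1/current/VERSION" 2>/dev/null
}

# Succeed when both storage directories carry the same, non-empty clusterID.
same_cluster() {
  local nn_id dn_id
  nn_id="$(cluster_id_of "$1")"
  dn_id="$(cluster_id_of "$2")"
  [ -n "$nn_id" ] && [ "$nn_id" = "$dn_id" ]
}
```

The NameNode and DataNode directories normally live on different hosts, so in practice run `cluster_id_of` on each host (for example `cluster_id_of /tmp/hadoop-root/dfs/data` on a DataNode) and compare the printed values.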

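After kill -9 on the active NameNode, the standby NameNode does not take over

Cause: a missing package. The sshfence method calls fuser, which the psmisc package provides. Install it on both NameNode hosts: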

yum install psmisc

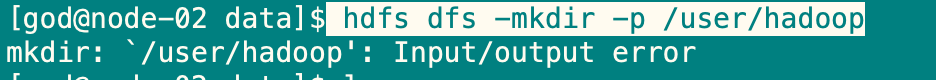

hdfs dfs -mkdir -p /user/hadoop fails

Cause: a configuration error. Check for either of the following:

fs.defaultFS is not set in core-site.xml

dfs.nameservices is not set in hdfs-site.xml

HDFS enters safe mode because too many blocks are missing

Leave safe mode:

hdfs dfsadmin -safemode leave

Run a health check and delete the files whose blocks are corrupted:

hdfs fsck / -delete
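Since `fsck / -delete` removes files permanently, it is worth confirming the safe mode state and listing the affected files first. `hdfs dfsadmin -safemode get` reports `Safe mode is ON` or `Safe mode is OFF`, and `hdfs fsck / -list-corruptfileblocks` lists the corrupt blocks without changing anything. The helper name `safemode_is_on` below is hypothetical, and the block is only a sketch.

```shell
# Succeed when the text printed by `hdfs dfsadmin -safemode get` reports safe mode ON.
safemode_is_on() {
  case "$1" in
    *"Safe mode is ON"*) return 0 ;;
    *) return 1 ;;
  esac
}

# On a running cluster (commented out so the block is safe to source anywhere):
# if safemode_is_on "$(hdfs dfsadmin -safemode get)"; then
#   hdfs fsck / -list-corruptfileblocks   # review what would be lost first
# fi
```

Matching on a substring keeps the check working in HA mode, where the NameNode address is appended to the status line.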

Permission denied: user=dr.who, access=WRITE, inode="/user/god":root:supergroup:drwxr-xr-x

hadoop fs -chmod -R 777 /user

vim core-site.xml

<!-- User that the web UI acts as -->

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

3.x issues

but there is no HDFS_ZKFC_USER defined. Aborting operation.

Fix:

vim hadoop-env.sh

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_ZKFC_USER=root

export HDFS_JOURNALNODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

Appendix 1: *-site.xml parameter reference

core-site.xml parameters

https://hadoop.apache.org/docs/r2.10.1/hadoop-project-dist/hadoop-common/core-default.xml

hdfs-site.xml parameters

https://hadoop.apache.org/docs/r2.10.1/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml