1. DataNode exception logs:

2020-08-04 17:34:33,185 INFO datanode.DataNode (BlockReceiver.java:receiveBlock(1010)) - Exception for BP-1617079829-10.170.30.10-1576821507362:blk_1600600951_863263842
java.io.IOException: Premature EOF from inputStream
    at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:212)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:211)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:528)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:971)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:891)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:173)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:107)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:290)
    at java.lang.Thread.run(Thread.java:748)
2020-08-04 17:34:33,185 INFO datanode.DataNode (BlockReceiver.java:run(1470)) - PacketResponder: BP-1617079829-10.170.30.10-1576821507362:blk_1600600951_863263842, type=LAST_IN_PIPELINE: Thread is interrupted.
2020-08-04 17:34:33,185 INFO datanode.DataNode (BlockReceiver.java:run(1506)) - PacketResponder: BP-1617079829-10.170.30.10-1576821507362:blk_1600600951_863263842, type=LAST_IN_PIPELINE terminating
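The log excerpts in this note were captured with their line breaks stripped, so several entries and stack frames run together. A small helper like the one below can split such text back into one entry per timestamp and list the exception classes each entry mentions. It is a sketch that assumes the Hadoop log layout seen in these excerpts (`date time,millis LEVEL logger (...) - message`); the names `split_entries` and `exception_types` are illustrative, not part of any Hadoop tool.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Start of one entry in the Hadoop log layout seen in these excerpts:
# "2020-08-04 17:34:33,185 INFO datanode.DataNode (Source.java:method(line)) - message"
ENTRY_START = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>TRACE|DEBUG|INFO|WARN|ERROR|FATAL) "
    r"(?P<logger>\S+) "
)

# Fully qualified exception/error class names. Anchoring on a package root
# (java., org., ...) lets this work even when the name is glued to the
# previous token, as in "...863263842java.io.IOException".
EXCEPTION_NAME = re.compile(r"(?:java|javax|org|com|sun)\.(?:\w+\.)*\w*(?:Exception|Error)")


@dataclass
class LogEntry:
    ts: str
    level: str
    logger: str
    body: str  # the message plus any stack trace that followed it


def split_entries(text: str) -> list[LogEntry]:
    """Split log text into one entry per timestamp, even if newlines were lost."""
    starts = list(ENTRY_START.finditer(text))
    entries = []
    for i, m in enumerate(starts):
        end = starts[i + 1].start() if i + 1 < len(starts) else len(text)
        entries.append(LogEntry(m["ts"], m["level"], m["logger"], text[m.end():end].strip()))
    return entries


def exception_types(entry: LogEntry) -> list[str]:
    """Exception class names mentioned in an entry, in order of appearance."""
    return EXCEPTION_NAME.findall(entry.body)
```

Matching on the timestamp rather than on newlines is what makes this work on flattened text; it will not split Hive's `2020-08-05T...` timestamps, which use a different layout.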

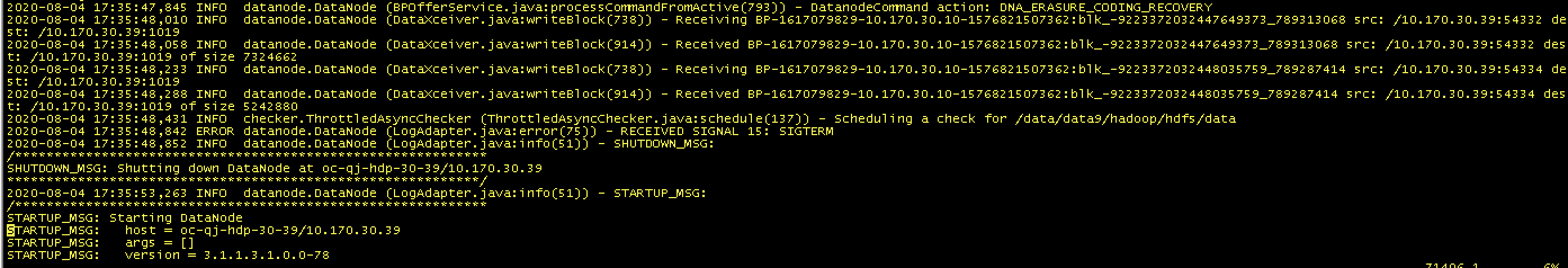

2020-08-04 17:35:48,431 INFO checker.ThrottledAsyncChecker (ThrottledAsyncChecker.java:schedule(137)) - Scheduling a check for /data/data9/hadoop/hdfs/data
2020-08-04 17:35:48,842 ERROR datanode.DataNode (LogAdapter.java:error(75)) - RECEIVED SIGNAL 15: SIGTERM
2020-08-04 17:35:48,852 INFO datanode.DataNode (LogAdapter.java:info(51)) - SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at oc-qj-hdp-30-39/10.170.30.39
************************************************************/
2020-08-04 17:35:53,263 INFO datanode.DataNode (LogAdapter.java:info(51)) - STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting DataNode
STARTUP_MSG: host = oc-qj-hdp-30-39/10.170.30.39
STARTUP_MSG: args = []
STARTUP_MSG: version = 3.1.1.3.1.0.0-78

2020-08-04 17:36:15,924 INFO datanode.DataNode (BPServiceActor.java:register(783)) - Block pool Block pool BP-1617079829-10.170.30.10-1576821507362 (Datanode Uuid 0434690e-cc8c-4d4a-9c20-16dd975821bb) service to oc-qj-hdp-30-10/10.170.30.10:8020 successfully registered with NN
2020-08-04 17:36:15,924 INFO datanode.DataNode (DataNode.java:registerBlockPoolWithSecretManager(1614)) - Block token params received from NN: for block pool BP-1617079829-10.170.30.10-1576821507362 keyUpdateInterval=600 min(s), tokenLifetime=600 min(s)
2020-08-04 17:36:15,925 ERROR datanode.DataNode (DataXceiver.java:run(321)) - oc-qj-hdp-30-39:1019:DataXceiver error processing READ_BLOCK operation src: /10.170.30.129:38566 dst: /10.170.30.39:1019
java.lang.IllegalArgumentException: Block pool BP-1617079829-10.170.30.10-1576821507362 is not found
    at org.apache.hadoop.hdfs.security.token.block.BlockPoolTokenSecretManager.get(BlockPoolTokenSecretManager.java:57)
    at org.apache.hadoop.hdfs.security.token.block.BlockPoolTokenSecretManager.checkAccess(BlockPoolTokenSecretManager.java:138)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.checkAccess(DataXceiver.java:1410)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.checkAccess(DataXceiver.java:1395)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.readBlock(DataXceiver.java:576)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opReadBlock(Receiver.java:152)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:104)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:290)
    at java.lang.Thread.run(Thread.java:748)
2020-08-04 17:36:15,926 INFO block.BlockTokenSecretManager (BlockTokenSecretManager.java:addKeys(210)) - Setting block keys
2020-08-04 17:36:15,926 INFO datanode.DataNode (BPServiceActor.java:offerService(612)) - For namenode oc-qj-hdp-30-10/10.170.30.10:8020 using BLOCKREPORT_INTERVAL of 21600000msec CACHEREPORT_INTERVAL of 10000msec Initial delay: 120000msec; heartBeatInterval=3000

2020-08-04 17:36:16,156 INFO datanode.DataNode (DataXceiver.java:writeBlock(738)) - Receiving BP-1617079829-10.170.30.10-1576821507362:blk_-9223372032443264542_789708514 src: /10.170.30.39:54506 dest: /10.170.30.39:1019
2020-08-04 17:36:16,248 ERROR datanode.DataNode (DataXceiver.java:run(321)) - oc-qj-hdp-30-39:1019:DataXceiver error processing READ_BLOCK operation src: /10.170.30.97:34688 dst: /10.170.30.39:1019
java.io.IOException: Not ready to serve the block pool, BP-1617079829-10.170.30.10-1576821507362.
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.checkAndWaitForBP(DataXceiver.java:1389)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.checkAccess(DataXceiver.java:1405)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.checkAccess(DataXceiver.java:1395)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.readBlock(DataXceiver.java:576)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opReadBlock(Receiver.java:152)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:104)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:290)
    at java.lang.Thread.run(Thread.java:748)
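The restart logs show the DataNode stopping on `RECEIVED SIGNAL 15: SIGTERM`, starting again, and failing READ_BLOCK requests right after it re-registered with the NameNode. The gaps between those milestones can be computed as below. The timestamps are copied by hand from the excerpts above; `MILESTONES` and `gaps` are illustrative names only.

```python
from datetime import datetime

# Milestones copied by hand from the DataNode log excerpts (illustrative list).
MILESTONES = [
    ("SIGTERM received", "2020-08-04 17:35:48,842"),
    ("STARTUP_MSG", "2020-08-04 17:35:53,263"),
    ("registered with NN", "2020-08-04 17:36:15,924"),
    ("first READ_BLOCK error", "2020-08-04 17:36:15,925"),
]


def parse_ts(ts: str) -> datetime:
    """Parse a Hadoop log timestamp (comma before the milliseconds)."""
    return datetime.strptime(ts, "%Y-%m-%d %H:%M:%S,%f")


def gaps(milestones):
    """Return (from_label, to_label, seconds) for each consecutive pair."""
    return [
        (a, b, (parse_ts(tb) - parse_ts(ta)).total_seconds())
        for (a, ta), (b, tb) in zip(milestones, milestones[1:])
    ]


if __name__ == "__main__":
    for a, b, secs in gaps(MILESTONES):
        print(f"{a} -> {b}: {secs:.3f} s")
```

For these logs this gives about 4.4 s from SIGTERM to restart, about 22.7 s from restart to NameNode registration, and 1 ms from registration to the first `Block pool ... is not found` error, which was logged before `Setting block keys` (17:36:15,926).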

2020-08-04 17:40:32,110 INFO datanode.DataNode (BPOfferService.java:processCommandFromActive(793)) - DatanodeCommand action: DNA_ERASURE_CODING_RECOVERY
2020-08-04 17:40:32,111 WARN datanode.DataNode (ErasureCodingWorker.java:processErasureCodingTasks(150)) - Failed to reconstruct striped block blk_-9223372033630275216_654890444
java.lang.IllegalArgumentException: No enough live striped blocks.
    at com.google.common.base.Preconditions.checkArgument(Preconditions.java:88)
    at org.apache.hadoop.hdfs.server.datanode.erasurecode.StripedReader.<init>(StripedReader.java:126)
    at org.apache.hadoop.hdfs.server.datanode.erasurecode.StripedReconstructor.<init>(StripedReconstructor.java:133)
    at org.apache.hadoop.hdfs.server.datanode.erasurecode.StripedBlockReconstructor.<init>(StripedBlockReconstructor.java:41)
    at org.apache.hadoop.hdfs.server.datanode.erasurecode.ErasureCodingWorker.processErasureCodingTasks(ErasureCodingWorker.java:133)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.processCommandFromActive(BPOfferService.java:796)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.processCommandFromActor(BPOfferService.java:680)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.processCommand(BPServiceActor.java:876)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.offerService(BPServiceActor.java:675)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:842)
    at java.lang.Thread.run(Thread.java:748)
2020-08-04 17:40:32,317 INFO datanode.DataNode (DataXceiver.java:writeBlock(738)) - Receiving BP-1617079829-10.170.30.10-1576821507362:blk_-9223372031614932991_863503944 src: /10.170.30.226:49264 dest: /10.170.30.39:1019
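`No enough live striped blocks.` means the reconstruction task for `blk_-9223372033630275216_654890444` found fewer live internal blocks than decoding needs. A simplified model of that check is sketched below. It assumes the RS-6-3-1024k policy (six data units, three parity units, 1 MiB cells); the logs do not say which policy these files use, and the "short groups need fewer sources" rule is our simplification, so compare it with `StripedReader` in your Hadoop version before relying on it.

```python
# ASSUMPTION: RS-6-3-1024k (six data units, three parity units, 1 MiB cells).
# The logs do not say which erasure-coding policy these files use.
DATA_UNITS = 6
PARITY_UNITS = 3
CELL_SIZE = 1024 * 1024  # bytes


def min_required_sources(group_bytes: int) -> int:
    """Internal blocks needed to decode a block group (simplified model).

    A group shorter than DATA_UNITS cells leaves the trailing data units
    empty (all zeros), so correspondingly fewer live internal blocks are needed.
    """
    cells = max(1, (group_bytes + CELL_SIZE - 1) // CELL_SIZE)  # ceil division
    return min(cells, DATA_UNITS)


def can_reconstruct(group_bytes: int, live_indices) -> bool:
    """True if enough *distinct* internal block indices are live.

    Duplicate replicas of the same index do not count twice.
    """
    distinct = {i for i in live_indices if 0 <= i < DATA_UNITS + PARITY_UNITS}
    return len(distinct) >= min_required_sources(group_bytes)
```

Under these assumptions a 1,596,731-byte block group spans only two cells, so two distinct live internal blocks suffice, while a full-size group needs six; counting distinct indices matters because the same index can be reported on more than one DataNode.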

2. NameNode exceptions:

2020-08-11 15:21:13,915 WARN ipc.Server (Server.java:processResponse(1523)) - IPC Server handler 488 on 8020, call Call#410 Retry#0 org.apache.hadoop.hdfs.protocol.ClientProtocol.getFileInfo from 10.174.20.62:56762: output error
2020-08-11 15:21:13,915 INFO ipc.Server (Server.java:run(2695)) - IPC Server handler 488 on 8020 caught an exception
java.nio.channels.ClosedChannelException
    at sun.nio.ch.SocketChannelImpl.ensureWriteOpen(SocketChannelImpl.java:270)
    at sun.nio.ch.SocketChannelImpl.write(SocketChannelImpl.java:461)
    at org.apache.hadoop.ipc.Server.channelWrite(Server.java:3250)
    at org.apache.hadoop.ipc.Server.access$1700(Server.java:137)
    at org.apache.hadoop.ipc.Server$Responder.processResponse(Server.java:1473)
    at org.apache.hadoop.ipc.Server$Responder.doRespond(Server.java:1543)
    at org.apache.hadoop.ipc.Server$Connection.sendResponse(Server.java:2593)
    at org.apache.hadoop.ipc.Server$Connection.access$300(Server.java:1615)
    at org.apache.hadoop.ipc.Server$RpcCall.doResponse(Server.java:940)
    at org.apache.hadoop.ipc.Server$Call.sendResponse(Server.java:774)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:885)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:822)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2682)
2020-08-11 15:21:13,916 INFO hdfs.StateChange (FSNamesystem.java:completeFile(2865)) - DIR* completeFile: /app-logs/yx_gaowj/logs/application_1597070923507_9868/oc-yx-hdp-18-15_45454.tmp is closed by DFSClient_NONMAPREDUCE_1652087027_9667
2020-08-11 15:21:13,916 INFO hdfs.StateChange (FSNamesystem.java:fsync(3278)) - BLOCK* fsync: /spark2-history/application_1597070923507_9894.inprogress for DFSClient_NONMAPREDUCE_-1731935637_1
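A `ClosedChannelException` thrown from `Server$Responder.processResponse` means the NameNode tried to write an RPC response on a connection that was already closed, which usually indicates the client (here 10.174.20.62) disconnected before the reply was sent. To see whether these `output error` warnings cluster on particular clients or RPC methods, a sketch like the following can tally them. The regex is fitted to the single WARN line shown above and is an assumption for other Hadoop versions; `output_errors_by_client` is an illustrative name.

```python
import re
from collections import Counter

# Fitted to the ipc.Server WARN line shown above; other Hadoop versions may differ.
OUTPUT_ERROR = re.compile(
    r"call Call#\d+ Retry#\d+ (?P<method>\S+) from (?P<client>\S+):\d+: output error"
)


def output_errors_by_client(lines) -> Counter:
    """Count RPC responses the NameNode could not send, per (client, method)."""
    counts = Counter()
    for line in lines:
        m = OUTPUT_ERROR.search(line)
        if m:
            method = m["method"].rsplit(".", 1)[-1]  # keep just e.g. "getFileInfo"
            counts[(m["client"], method)] += 1
    return counts
```

Feeding it the NameNode log line by line (for example `output_errors_by_client(open(path))`) gives a quick view of which clients time out most often.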

3. JournalNode logs: no anomalies.

4. ZKFailoverController logs: no anomalies.

5. Hive error:

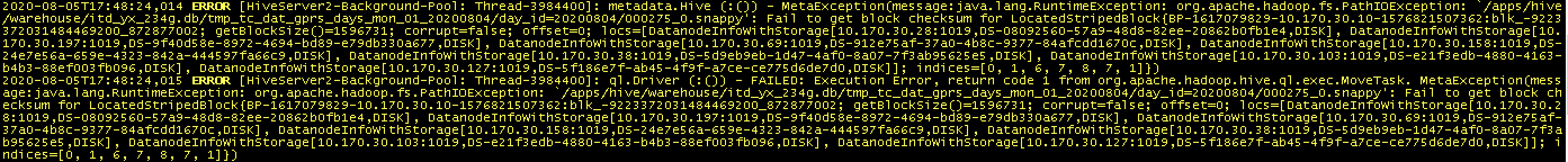

2020-08-05T17:48:24,014 ERROR [HiveServer2-Background-Pool: Thread-3984400]: metadata.Hive (:()) - MetaException(message:java.lang.RuntimeException: org.apache.hadoop.fs.PathIOException: `/apps/hive/warehouse/itd_yx_234g.db/tmp_tc_dat_gprs_days_mon_01_20200804/day_id=20200804/000275_0.snappy': Fail to get block checksum for LocatedStripedBlock{BP-1617079829-10.170.30.10-1576821507362:blk_-9223372031484469200_872877002; getBlockSize()=1596731; corrupt=false; offset=0; locs=[DatanodeInfoWithStorage[10.170.30.28:1019,DS-08092560-57a9-48d8-82ee-20862b0fb1e4,DISK], DatanodeInfoWithStorage[10.170.30.197:1019,DS-9f40d58e-8972-4694-bd89-e79db330a677,DISK], DatanodeInfoWithStorage[10.170.30.69:1019,DS-912e75af-37a0-4b8c-9377-84afcdd1670c,DISK], DatanodeInfoWithStorage[10.170.30.158:1019,DS-24e7e56a-659e-4323-842a-444597fa66c9,DISK], DatanodeInfoWithStorage[10.170.30.38:1019,DS-5d9eb9eb-1d47-4af0-8a07-7f3ab95625e5,DISK], DatanodeInfoWithStorage[10.170.30.103:1019,DS-e21f3edb-4880-4163-b4b3-88ef003fb096,DISK], DatanodeInfoWithStorage[10.170.30.127:1019,DS-5f186e7f-ab45-4f9f-a7ce-ce775d6de7d0,DISK]]; indices=[0, 1, 6, 7, 8, 7, 1]})
2020-08-05T17:48:24,015 ERROR [HiveServer2-Background-Pool: Thread-3984400]: ql.Driver (:()) - FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask. MetaException(message:java.lang.RuntimeException: org.apache.hadoop.fs.PathIOException: `/apps/hive/warehouse/itd_yx_234g.db/tmp_tc_dat_gprs_days_mon_01_20200804/day_id=20200804/000275_0.snappy': Fail to get block checksum for LocatedStripedBlock{BP-1617079829-10.170.30.10-1576821507362:blk_-9223372031484469200_872877002; getBlockSize()=1596731; corrupt=false; offset=0; locs=[DatanodeInfoWithStorage[10.170.30.28:1019,DS-08092560-57a9-48d8-82ee-20862b0fb1e4,DISK], DatanodeInfoWithStorage[10.170.30.197:1019,DS-9f40d58e-8972-4694-bd89-e79db330a677,DISK], DatanodeInfoWithStorage[10.170.30.69:1019,DS-912e75af-37a0-4b8c-9377-84afcdd1670c,DISK], DatanodeInfoWithStorage[10.170.30.158:1019,DS-24e7e56a-659e-4323-842a-444597fa66c9,DISK], DatanodeInfoWithStorage[10.170.30.38:1019,DS-5d9eb9eb-1d47-4af0-8a07-7f3ab95625e5,DISK], DatanodeInfoWithStorage[10.170.30.103:1019,DS-e21f3edb-4880-4163-b4b3-88ef003fb096,DISK], DatanodeInfoWithStorage[10.170.30.127:1019,DS-5f186e7f-ab45-4f9f-a7ce-ce775d6de7d0,DISK]]; indices=[0, 1, 6, 7, 8, 7, 1]})
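The failing `LocatedStripedBlock` lists seven locations, but its `indices` field holds only five distinct internal block indices (0, 1, 6, 7, 8); indices 1 and 7 each appear twice. A small parser can pair each location with its index to show which DataNodes hold the duplicates. It assumes `indices[i]` belongs to `locs[i]`, which is how we read the printed form; `index_map` and `duplicated` are illustrative names.

```python
from __future__ import annotations

import re
from collections import defaultdict


def index_map(located_block: str) -> dict[int, list[str]]:
    """Map internal block index -> DataNode addresses, from a LocatedStripedBlock string.

    Assumes indices[i] belongs to locs[i], which is how we read the printed form.
    """
    hosts = re.findall(r"DatanodeInfoWithStorage\[([^,\]]+),", located_block)
    found = re.search(r"indices=\[([\d,\s]*)\]", located_block)
    if found is None:
        raise ValueError("no indices=[...] field found")
    indices = [int(x) for x in found.group(1).split(",") if x.strip()]
    if len(hosts) != len(indices):
        raise ValueError(f"{len(hosts)} locations but {len(indices)} indices")
    by_index = defaultdict(list)
    for host, idx in zip(hosts, indices):
        by_index[idx].append(host)
    return dict(sorted(by_index.items()))


def duplicated(mapping: dict[int, list[str]]) -> dict[int, list[str]]:
    """Internal block indices reported on more than one DataNode."""
    return {i: hs for i, hs in mapping.items() if len(hs) > 1}
```

Applied to the message above, this reports index 1 on 10.170.30.197 and 10.170.30.127, and index 7 on 10.170.30.158 and 10.170.30.103.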

6. Monitoring deployed on some DataNodes

nohup strace -T -tt -e trace=all -p 55150 > trace.log &

Nodes monitored: 13, 17, 19, 21, 23, 27, 29, 31, 33, 37, 39, 41, 43, 47, 49, 51, 53, 57, 59, 61, 63, 67, 69, 71, 73, 77, 79, 81, 83, 87, 89, 91, 93, 97, 99

Results:

After the DataNode went down, trace.log contained no output.

The system log (/var/log/messages) showed no anomalies either.
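Since the DataNode log shows it was stopped by SIGTERM, the open question is who sent the signal. When a traced thread receives a signal, strace prints its siginfo, and for a signal sent with kill(2) (`si_code=SI_USER`) the `si_pid` and `si_uid` fields identify the sender. Per strace(1), `-p PID` traces only that one thread unless `-f` is also given, in which case all threads of a multi-threaded process such as the DataNode JVM are attached. The parser below assumes a trace captured with something like `strace -f -tt -e trace=signal -p <pid>` (a variant of the command above, not what was run here); the pid/uid values in its comment are made up, and `signal_senders` is an illustrative name.

```python
import re

# Signal-delivery line as strace prints it, e.g. with -f and -tt (values made up):
#   [pid  4321] 17:35:48.840123 --- SIGTERM {si_signo=SIGTERM, si_code=SI_USER, si_pid=1234, si_uid=0} ---
SIGNAL_LINE = re.compile(
    r"--- (?P<sig>SIG[A-Z0-9]+) \{[^}]*?si_code=(?P<code>\w+)"
    r"(?:[^}]*?si_pid=(?P<pid>\d+))?"
    r"(?:[^}]*?si_uid=(?P<uid>\d+))?"
    r"[^}]*\} ---"
)


def signal_senders(trace_lines):
    """Return (signal, si_code, sender_pid, sender_uid) for each signal strace saw.

    sender_pid/uid are None when the siginfo has no si_pid/si_uid (e.g. SIGSEGV).
    """
    found = []
    for line in trace_lines:
        m = SIGNAL_LINE.search(line)
        if m:
            pid = int(m["pid"]) if m["pid"] else None
            uid = int(m["uid"]) if m["uid"] else None
            found.append((m["sig"], m["code"], pid, uid))
    return found
```

A sender PID found this way can then be matched against `ps` output or audit logs to see which process issued the kill.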