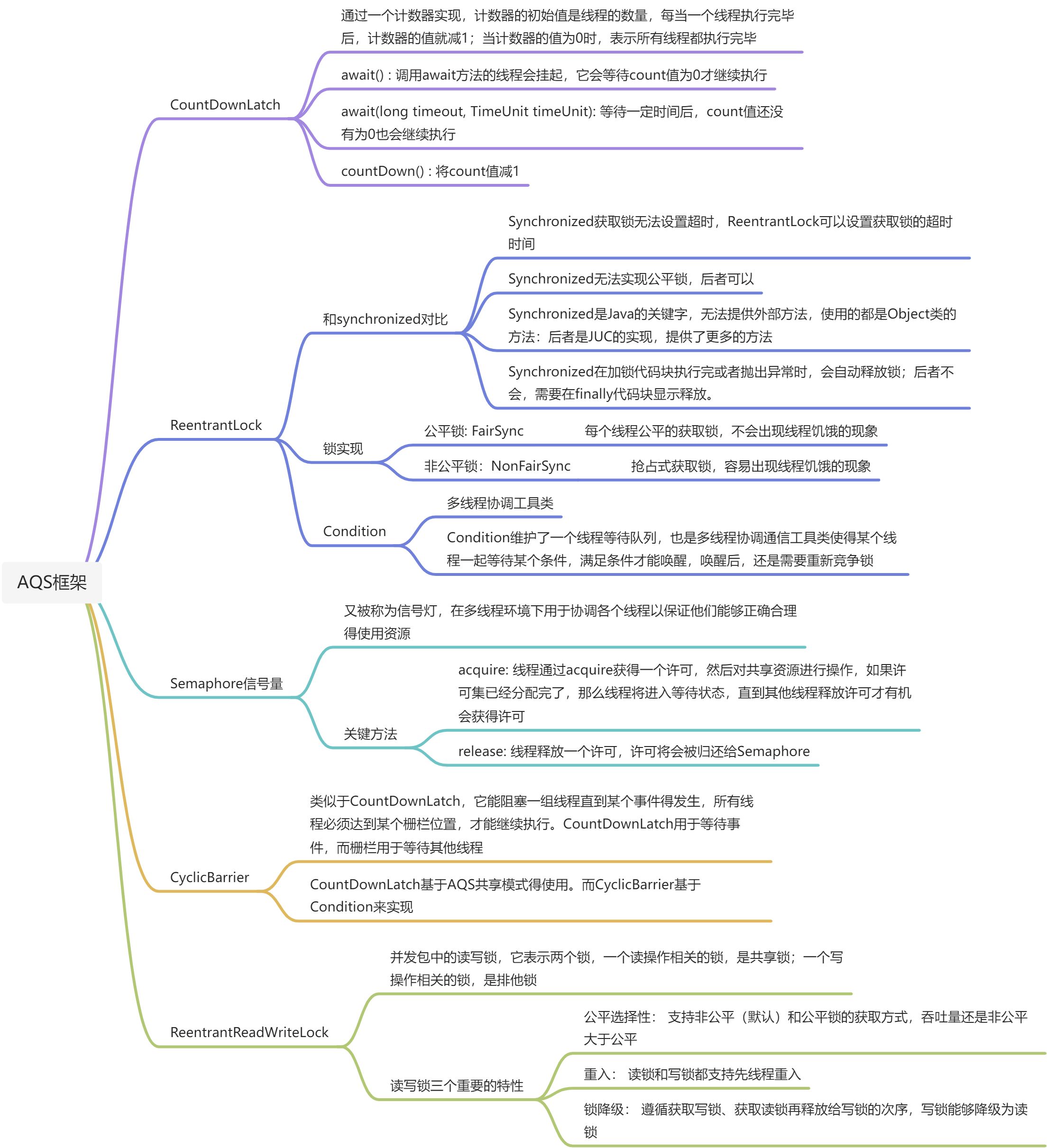

AQS是什么?

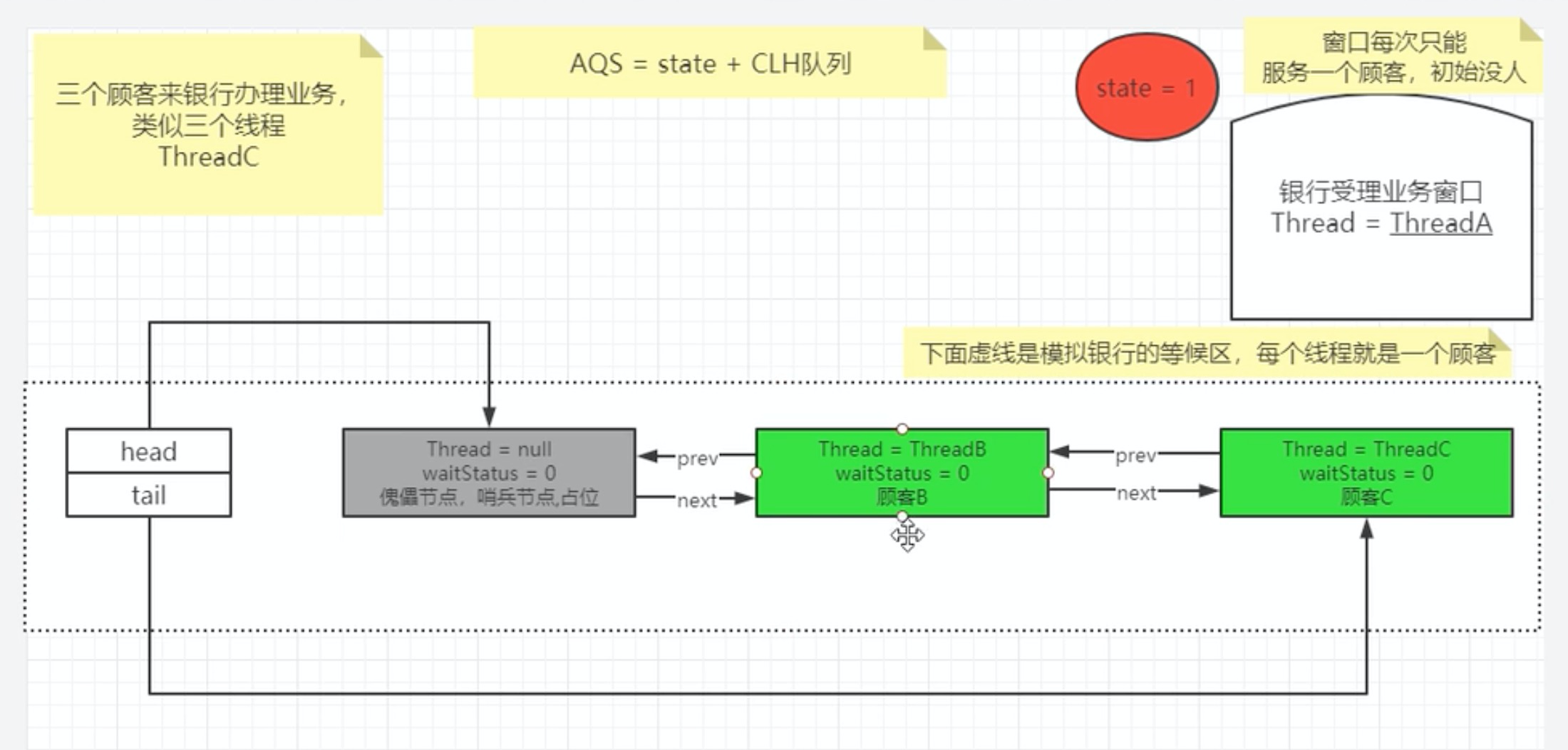

抽象的队列同步器。是用来构建锁或者其他同步器组件的重量级基础框架和整个JUC体系的基石,通过内置的FIFO队列来完成资源获取线程的排队工作,并通过一个int类型的变量表示持有锁的状态。

为什么AQS是JUC基石?

和AQS相关的:

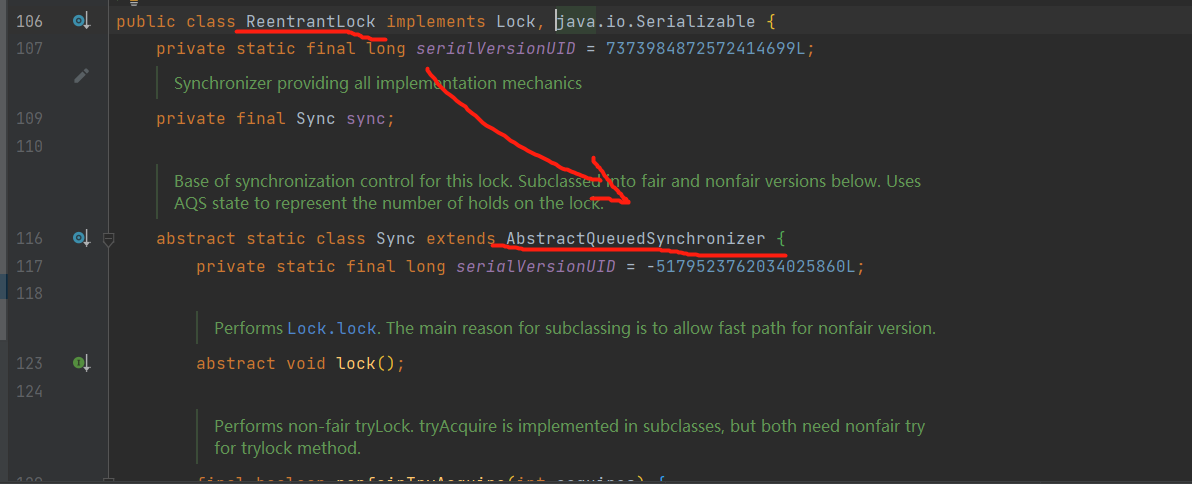

ReentrantLock

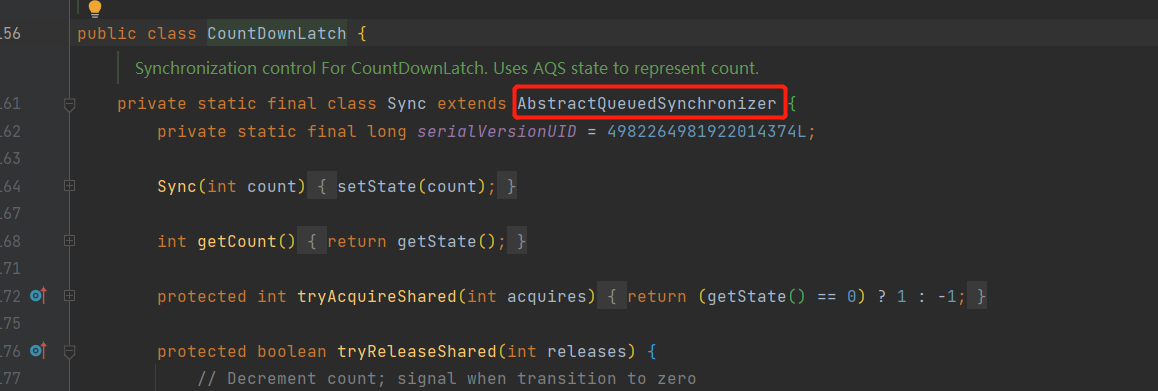

CountDownLatch

ReentrantReadWriteLock

Semaphore

进一步理解锁和同步器的关系

锁: 面向锁的使用者 — 用户层面

定义了程序员和锁交互层面的API,隐藏了实现细节,调用即可

同步器: 面向锁的实现者

提供统一规范并简化了锁的实现,屏蔽了同步状态管理、阻塞线程排队和通知、唤醒机制等。

能干什么?

加锁会导致阻塞

解释说明

抢到资源的线程直接使用处理业务逻辑,抢不到资源的必然涉及一种排队等候机制。抢占资源失败的线程继续去等待,但等待线程仍然保留获取锁的可能且获取锁流程仍在继续。

排队等候机制—队列?什么数据结构呢?

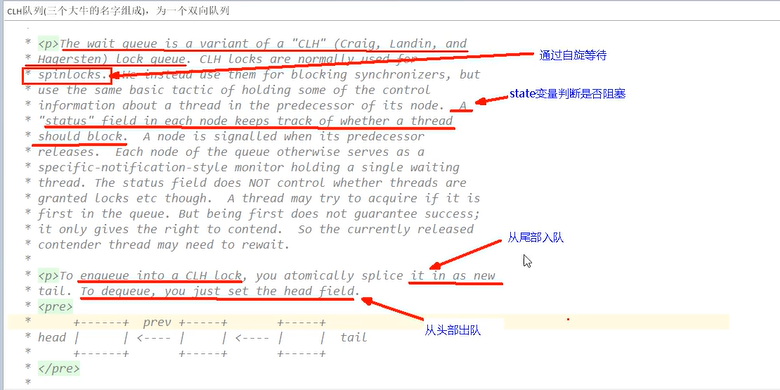

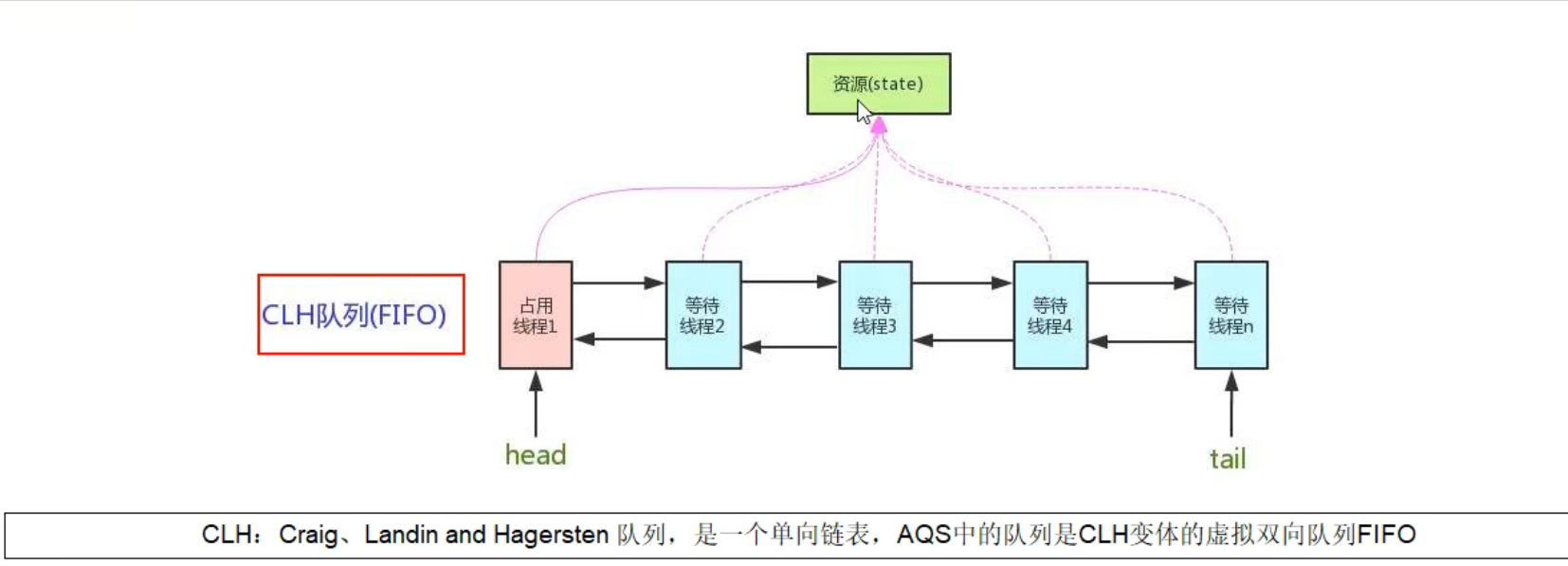

如果共享资源被占用,就需要一定的阻塞等待唤醒机制来保证锁分配,这个机制主要是CLH队列的变体实现的。将暂时获取不到锁的线程加入到队列中,这个队列就是AQS的抽象表现,它将请求共享资源的线程封装成队列的节点(Node),通过CAS、自旋以及LockSupport.park()的方式,维护state变量的状态,使并发达到同步的控制效果。

AQS初步

队列:

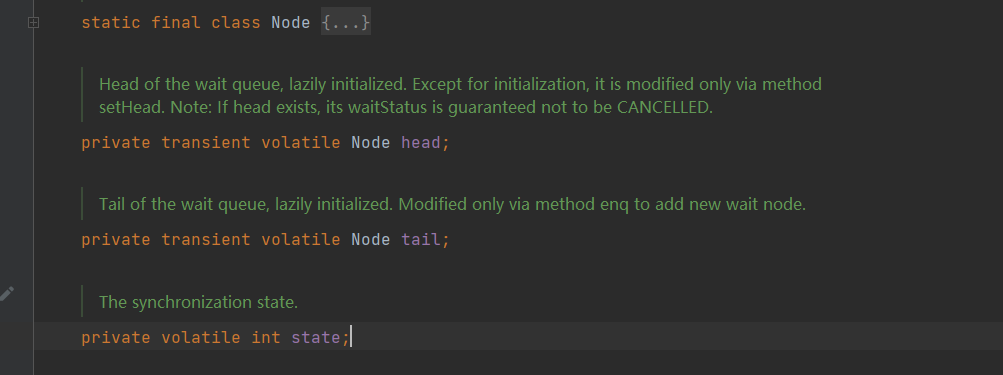

AQS使用一个volatile的int类型的成员变量来表示同步状态,通过内置的FIFO队列来完成资源获取的排队工作将每条要抢占资源的线程封装成一个Node节点来实现锁的分配,通过CAS完成对state值的修改。

内部体系架构:

AQS自身

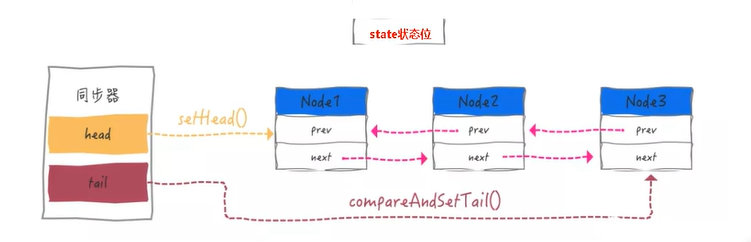

AQS = state + CLH 变种的双端队列

AQS同步队列基本结构

同步状态state变量

CLH队列

Node

头指针 尾指针 前指针 后指针

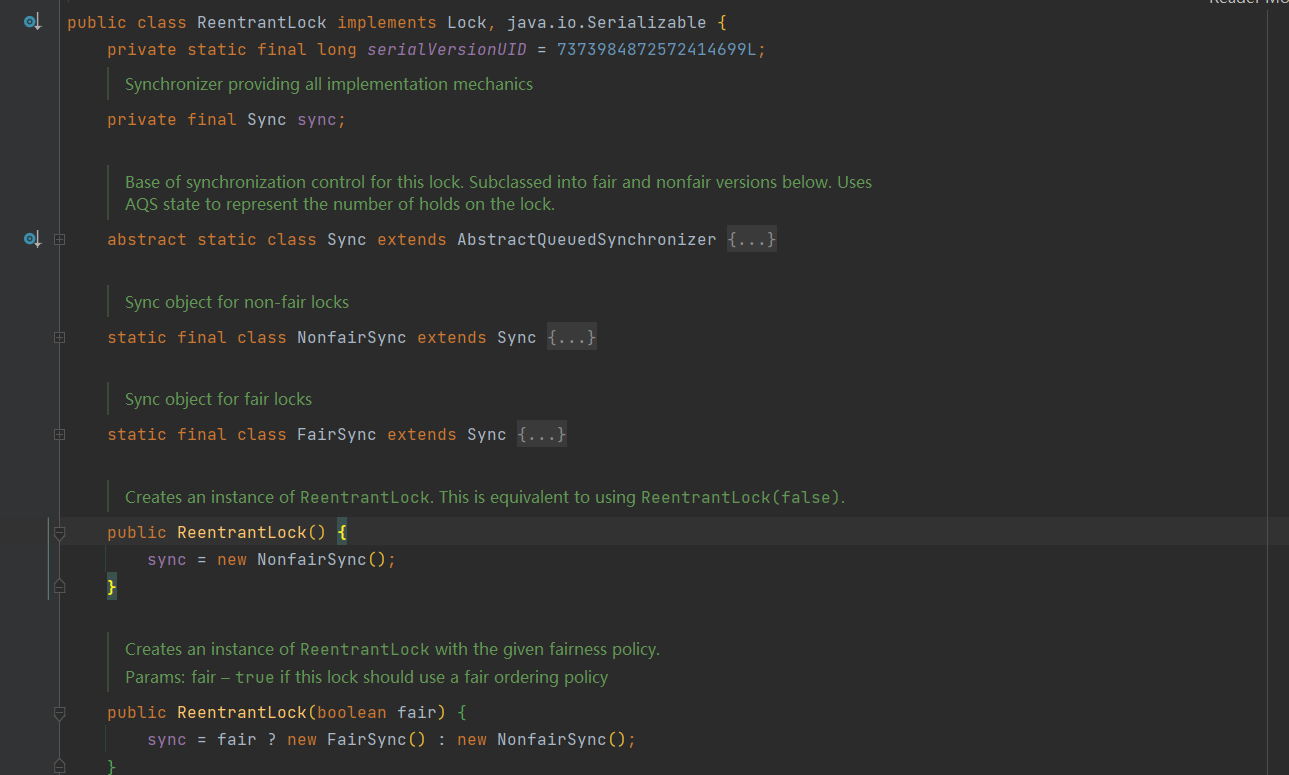

Node等待状态:waitState成员变量

static final class Node {/** Marker to indicate a node is waiting in shared mode */static final Node SHARED = new Node();/** Marker to indicate a node is waiting in exclusive mode */static final Node EXCLUSIVE = null;/** waitStatus value to indicate thread has cancelled */static final int CANCELLED = 1;/** waitStatus value to indicate successor's thread needs unparking */static final int SIGNAL = -1;/** waitStatus value to indicate thread is waiting on condition */static final int CONDITION = -2;/*** waitStatus value to indicate the next acquireShared should* unconditionally propagate*/static final int PROPAGATE = -3;/*** Status field, taking on only the values:* SIGNAL: The successor of this node is (or will soon be)* blocked (via park), so the current node must* unpark its successor when it releases or* cancels. To avoid races, acquire methods must* first indicate they need a signal,* then retry the atomic acquire, and then,* on failure, block.* CANCELLED: This node is cancelled due to timeout or interrupt.* Nodes never leave this state. In particular,* a thread with cancelled node never again blocks.* CONDITION: This node is currently on a condition queue.* It will not be used as a sync queue node* until transferred, at which time the status* will be set to 0. (Use of this value here has* nothing to do with the other uses of the* field, but simplifies mechanics.)* PROPAGATE: A releaseShared should be propagated to other* nodes. This is set (for head node only) in* doReleaseShared to ensure propagation* continues, even if other operations have* since intervened.* 0: None of the above** The values are arranged numerically to simplify use.* Non-negative values mean that a node doesn't need to* signal. So, most code doesn't need to check for particular* values, just for sign.** The field is initialized to 0 for normal sync nodes, and* CONDITION for condition nodes. It is modified using CAS* (or when possible, unconditional volatile writes).*/volatile int waitStatus;/*** Link to predecessor node that current node/thread relies on* for checking waitStatus. Assigned during enqueuing, and nulled* out (for sake of GC) only upon dequeuing. Also, upon* cancellation of a predecessor, we short-circuit while* finding a non-cancelled one, which will always exist* because the head node is never cancelled: A node becomes* head only as a result of successful acquire. A* cancelled thread never succeeds in acquiring, and a thread only* cancels itself, not any other node.*/volatile Node prev;/*** Link to the successor node that the current node/thread* unparks upon release. Assigned during enqueuing, adjusted* when bypassing cancelled predecessors, and nulled out (for* sake of GC) when dequeued. The enq operation does not* assign next field of a predecessor until after attachment,* so seeing a null next field does not necessarily mean that* node is at end of queue. However, if a next field appears* to be null, we can scan prev's from the tail to* double-check. The next field of cancelled nodes is set to* point to the node itself instead of null, to make life* easier for isOnSyncQueue.*/volatile Node next;/*** The thread that enqueued this node. Initialized on* construction and nulled out after use.*/volatile Thread thread;/*** Link to next node waiting on condition, or the special* value SHARED. Because condition queues are accessed only* when holding in exclusive mode, we just need a simple* linked queue to hold nodes while they are waiting on* conditions. They are then transferred to the queue to* re-acquire. And because conditions can only be exclusive,* we save a field by using special value to indicate shared* mode.*/Node nextWaiter;/*** Returns true if node is waiting in shared mode.*/final boolean isShared() {return nextWaiter == SHARED;}/*** Returns previous node, or throws NullPointerException if null.* Use when predecessor cannot be null. The null check could* be elided, but is present to help the VM.** @return the predecessor of this node*/final Node predecessor() throws NullPointerException {Node p = prev;if (p == null)throw new NullPointerException();elsereturn p;}Node() { // Used to establish initial head or SHARED marker}Node(Thread thread, Node mode) { // Used by addWaiterthis.nextWaiter = mode;this.thread = thread;}Node(Thread thread, int waitStatus) { // Used by Conditionthis.waitStatus = waitStatus;this.thread = thread;}}

Node 有共享和独占两种模式。

Node = waitStatus + 前后指针

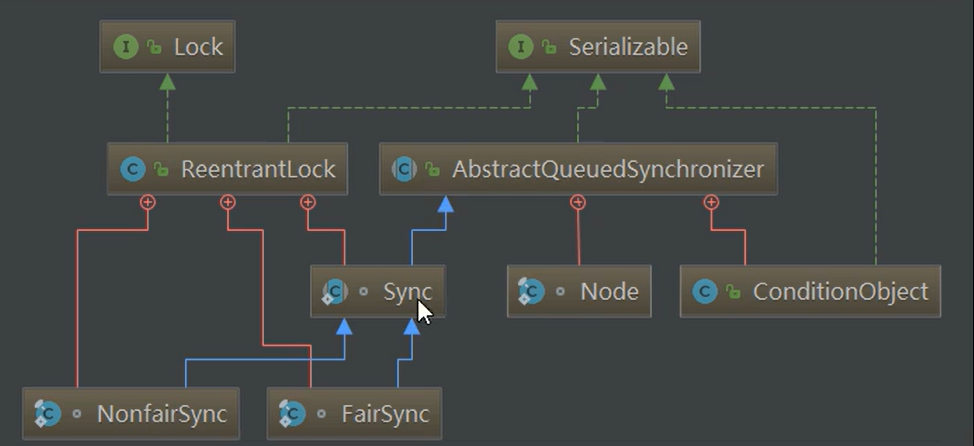

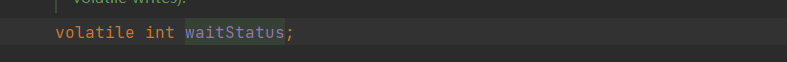

从ReentrantLock解读AQS

Lock接口的实现类,基本都是通过聚合了一个队列同步器的子类完成对线程访问控制的。

Reentrant的原理

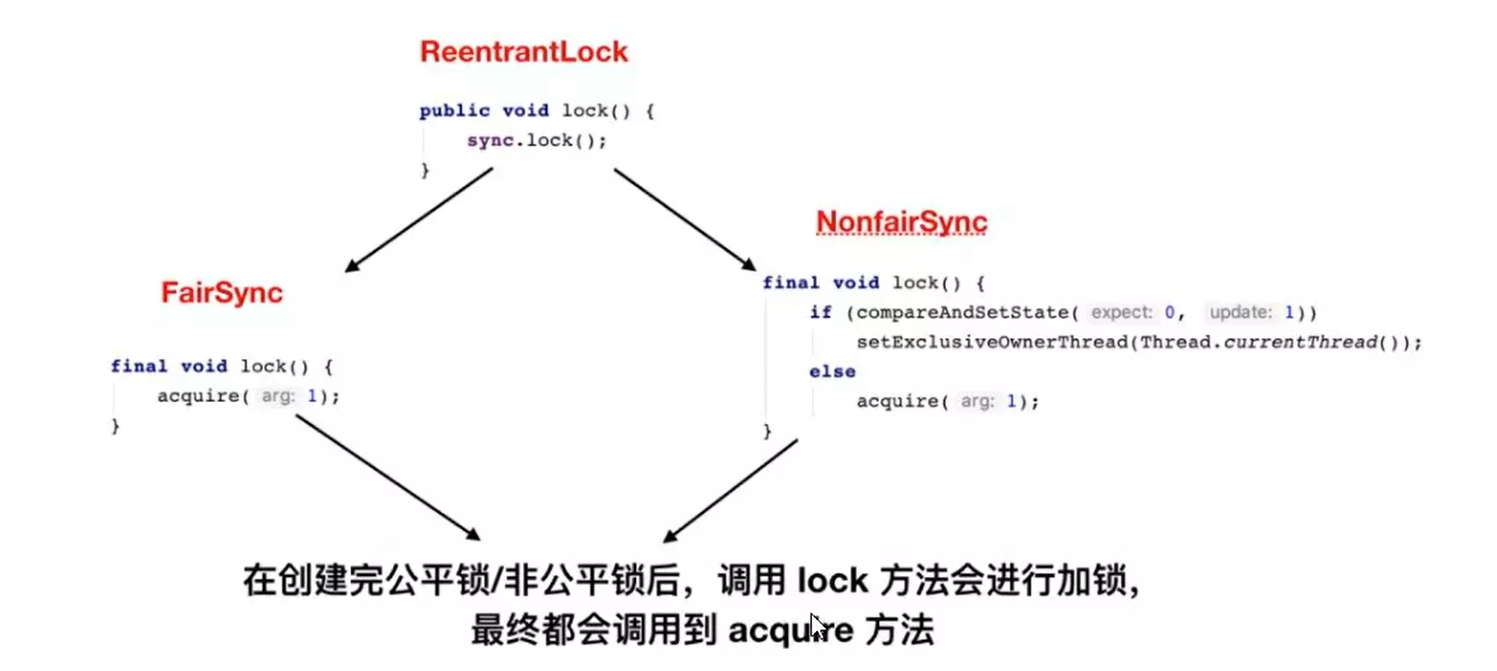

从lock方法看公平和非公平

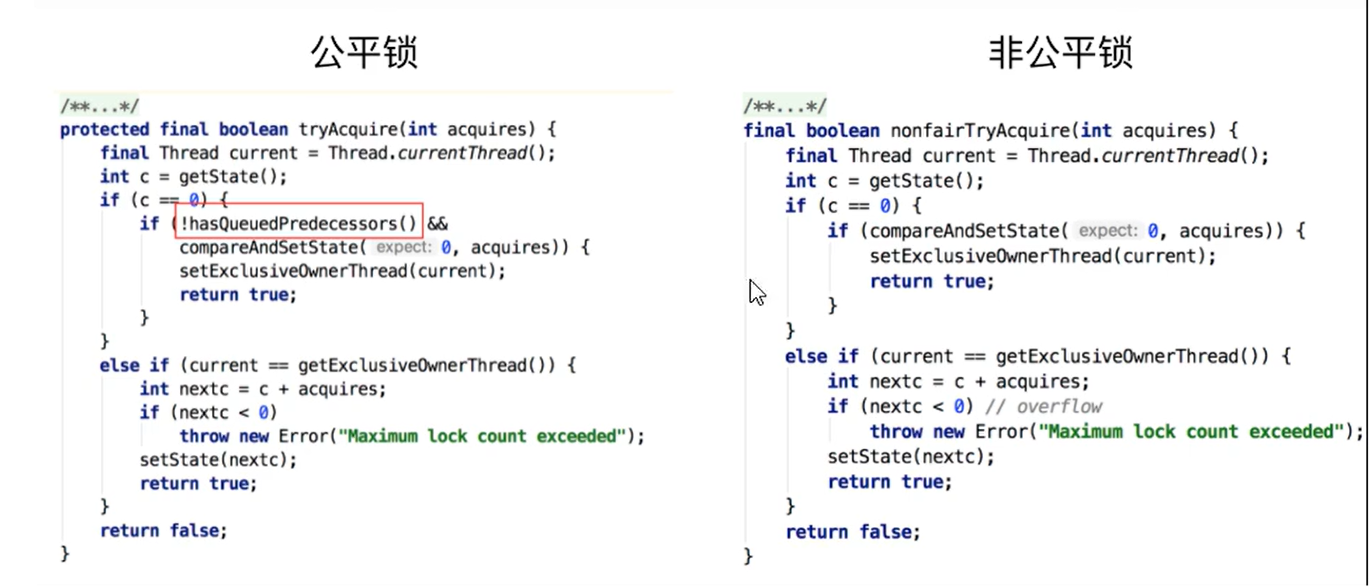

公平锁和非公平锁的lock()方法唯一的区别在于公平锁在获取同步状态的时候多了一个限制条件hasQueuedPredecessors(),这个是公平锁加锁时判断等待队列中是否存在有效节点的方法。

非公平锁

整个体系

static final class NonfairSync extends Sync {private static final long serialVersionUID = 7316153563782823691L;/*** Performs lock. Try immediate barge, backing up to normal* acquire on failure.*/final void lock() {if (compareAndSetState(0, 1))setExclusiveOwnerThread(Thread.currentThread());elseacquire(1);}protected final boolean tryAcquire(int acquires) {return nonfairTryAcquire(acquires);}}

整个ReentrantLock的加锁过程分为三个阶段:

- 尝试加锁

- 加锁失败,线程入队列

- 线程入队列后,进入阻塞状态

双向链表中,第一个节点是一个虚节点(也叫哨兵节点),其实并不存储任何信息,只是占位。真正的第一个有数据的节点,是从第二个节点开始。负责唤醒后面的节点。