I. Product Categories

1.1 Overview

InfiniBand enabled

- H-series

- N-series

InfiniBand OFED 5.1 supported

- H-series: HB, HC, HBv2, HBv3

- N-series: NDv2, NDv4

RDMA capable

- HDR rate: HBv3, HBv2

- EDR rate: HB, HC, NDv2

- FDR rate: H16r, H16mr, and some other RDMA-capable N-series sizes

SR-IOV support

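- Azure HPC VMs currently fall into two categories, depending on whether the VM has SR-IOV-enabled InfiniBand. Almost all newer sizes use SR-IOV, whether they are RDMA-capable or InfiniBand-enabled. The exceptions are H16r, H16mr, and NC24r.

- RDMA is supported only over InfiniBand, not over Ethernet. On non-SR-IOV VMs, RDMA requires the ND (Network Direct) drivers.

- IPoIB is supported only on SR-IOV VMs.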

Terminology

- pNUMA: physical NUMA domain

- vNUMA: virtualized NUMA domain

- pCore: physical CPU core

1.2 VM Sizes

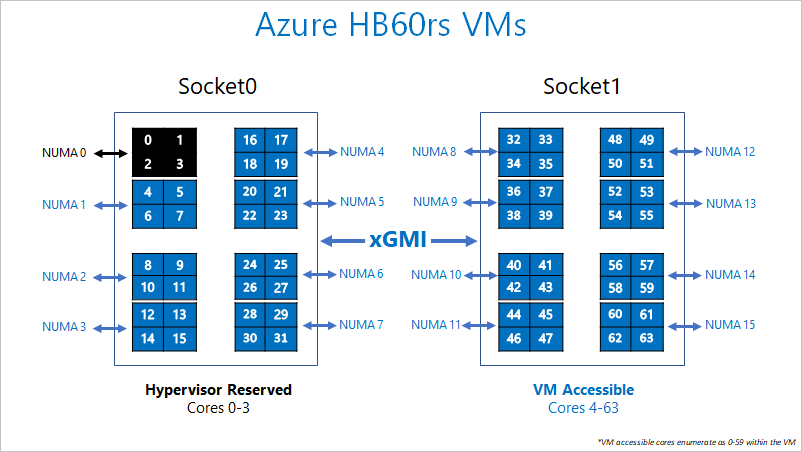

1.2.1 HB-series

Hardware Specification

- Cores: 60 (SMT disabled)

- CPU: AMD EPYC 7551 (2 × 32 cores)

- CPU Frequency (non-AVX): 2.55GHz

- Memory: 4GB/core (240GB total)

- Local Disk: 700 GB SSD

- InfiniBand: 100Gb EDR Mellanox ConnectX-5

- Network: 50Gb Ethernet (40 Gb usable) Azure second Gen SmartNIC

Notes:

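- 16 NUMA nodes in total; each CCX has 4 cores.

- NUMA0 (CPUs 0-3) is reserved for the hypervisor, and the VM uses the remaining 60 cores.

- SMT (hyper-threading) is disabled.

- Each pair of adjacent CCXs shares two DRAM channels (32GB per DRAM).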
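The HB core accounting above can be checked with a small Python sketch. It assumes physical cores are numbered contiguously CCX by CCX, so that CCX 0 is CPUs 0-3. It also assumes the hypervisor takes only that CCX. The constants come from the notes, and the function name `hb_vm_cores` is hypothetical.

```python
# Sketch: HB-series core accounting (assumes contiguous CCX-by-CCX core numbering).
CORES_PER_CCX = 4
NUM_CCX = 16          # 2 sockets x 4 dies x 2 CCXs (EPYC 7551)
RESERVED_CCX = {0}    # NUMA0 (CPUs 0-3) is kept by the hypervisor
GB_PER_CORE = 4


def hb_vm_cores():
    """Return the physical core IDs left for the VM."""
    cores = []
    for ccx in range(NUM_CCX):
        if ccx in RESERVED_CCX:
            continue
        first = ccx * CORES_PER_CCX
        cores.extend(range(first, first + CORES_PER_CCX))
    return cores


if __name__ == "__main__":
    vm = hb_vm_cores()
    print(len(vm), "cores,", len(vm) * GB_PER_CORE, "GB")  # 60 cores, 240 GB
```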

1.2.2 HBv2-series

Hardware Specification

- Cores: 120 (SMT disabled)

- CPU: AMD EPYC 7742 (2 × 64 cores)

- CPU Frequency (non-AVX): 3.1GHz

- Memory: 4GB/core (480GB total)

- Local Disk: 960GB NVMe, 480GB SSD

- InfiniBand: 200Gb HDR Mellanox ConnectX-6

- Network: 50Gb Ethernet (40 Gb usable) Azure second Gen SmartNIC

Notes:

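- 32 NUMA nodes in total; each CCX has 4 cores.

- NUMA0 and NUMA16 (the first CCX on each socket) are reserved for the hypervisor, and the VM uses the remaining 120 cores.

- SMT (hyper-threading) is disabled.

- Every four adjacent CCXs share two DRAM channels.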
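The HBv2 notes combine two facts: the first CCX on each socket is reserved, and every four adjacent CCXs share a pair of DRAM channels. Together they imply that two memory-channel groups expose fewer cores to the VM than the rest. The Python sketch below makes that visible.

It assumes NUMA/CCX indices run in order across both sockets, and that the four-CCX groups are aligned to CCX 0. Both are assumptions, and the function name is hypothetical.

```python
# Sketch: HBv2 VM cores per shared-DRAM-channel group (assumed CCX ordering).
SOCKETS = 2
CCX_PER_SOCKET = 16
CORES_PER_CCX = 4
CCX_PER_DRAM_GROUP = 4   # four adjacent CCXs share two DRAM channels
GB_PER_CORE = 4
# First CCX of each socket (NUMA0 and NUMA16) goes to the hypervisor.
RESERVED_CCX = {s * CCX_PER_SOCKET for s in range(SOCKETS)}


def vm_cores_per_dram_group():
    """Map each DRAM-channel group index to the number of cores the VM gets in it."""
    groups = {}
    for ccx in range(SOCKETS * CCX_PER_SOCKET):
        group = ccx // CCX_PER_DRAM_GROUP
        usable = 0 if ccx in RESERVED_CCX else CORES_PER_CCX
        groups[group] = groups.get(group, 0) + usable
    return groups


if __name__ == "__main__":
    groups = vm_cores_per_dram_group()
    total = sum(groups.values())
    print(total, "cores,", total * GB_PER_CORE, "GB")  # 120 cores, 480 GB
    print(groups)  # groups 0 and 4 hold a reserved CCX, so they get 12 cores instead of 16
```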

1.2.3 HBv3-series

Hardware Specification

- Cores: 120, 96, 64, 32, 16 (SMT disabled)

- CPU: AMD EPYC 7V13 (2 × 64 cores)

- CPU Frequency (non-AVX): 3.1GHz (all cores), 3.675GHz (up to 10 cores)

- Memory: 448GB

- Local Disk: 2*960GB NVMe, 480GB SSD

- InfiniBand: 200Gb HDR Mellanox ConnectX-6

- Network: 50Gb Ethernet (40 Gb usable) Azure second Gen SmartNIC

Notes:

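- NPS2 (2 NUMA nodes per socket, so the 2-socket server has 4 NUMA domains).

- Each NUMA node has 4 directly attached DRAM channels running at up to 3200 MT/s.

- Each server reserves 8 physical cores for the hypervisor: the first two cores of the first CCD in each NUMA domain.

- The 8 physical cores in each CCD share 32 MB of L3 cache.

- The 120-, 96-, 64-, 32-, and 16-core sizes expose 30, 24, 16, 8, and 4 cores, respectively, from each of the 4 NUMA domains. The smaller sizes are implemented only by exposing fewer physical cores to the VM; all other shared resources stay the same.

- The NIC is passed through to the VM via SR-IOV.

- Supports Adaptive Routing and the Dynamic Connected Transport (DCT), in addition to the standard RC and UD transports.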
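The constrained-core sizes above follow a simple rule. The VM core count is split evenly over the 4 NUMA domains, and it can never exceed the 30 cores per domain left after the hypervisor takes 2. A minimal Python sketch of that rule is shown below; the function name `cores_per_numa` is hypothetical.

```python
# Sketch: how HBv3 constrained-core sizes spread cores over NUMA domains.
NUMA_DOMAINS = 4           # NPS2 x 2 sockets
PHYS_CORES_PER_NUMA = 32   # 2 x 64 cores / 4 domains
RESERVED_PER_NUMA = 2      # first two cores of the first CCD, kept by the hypervisor
SIZES = [120, 96, 64, 32, 16]


def cores_per_numa(vm_cores):
    """Return how many cores a VM of the given size sees in each NUMA domain."""
    if vm_cores % NUMA_DOMAINS:
        raise ValueError("core count must split evenly across NUMA domains")
    per_numa = vm_cores // NUMA_DOMAINS
    if per_numa > PHYS_CORES_PER_NUMA - RESERVED_PER_NUMA:
        raise ValueError("size exceeds the cores left after the hypervisor reservation")
    return per_numa


if __name__ == "__main__":
    for size in SIZES:
        print(size, "->", cores_per_numa(size), "per NUMA domain")
```

Because only the exposed core count changes, memory bandwidth and L3 cache per exposed core grow as the size shrinks.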

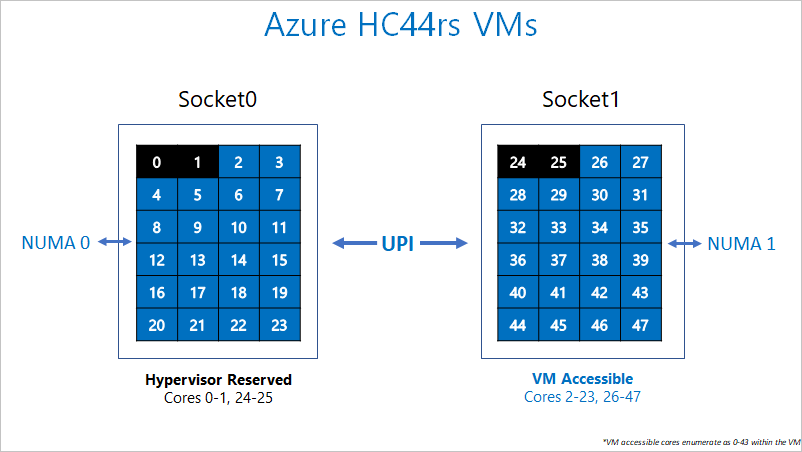

1.2.4 HC-series

Hardware Specification

- Cores: 44 (HT disabled)

- CPU: Intel Xeon Platinum 8168 (2 × 24 cores)

- CPU Frequency (non-AVX): 2.7-3.4GHz (all cores), 3.7GHz (single core)

- Memory: 8GB/core (352GB total)

- Local Disk: 700GB SSD

- InfiniBand: 100Gb EDR Mellanox ConnectX-5

- Network: 50Gb Ethernet (40 Gb usable) Azure second Gen SmartNIC

Notes:

- pCores 0-1 and 24-25 (the first two pCores on each socket) are reserved for the hypervisor.

- Each NUMA domain has 6 DRAM channels.
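The HC reservation above can be sketched the same way, grouped per socket. The sketch assumes pCores 0-23 sit on socket 0 and 24-47 on socket 1, consistent with "the first two pCores on each socket" being 0-1 and 24-25. The function name is hypothetical.

```python
# Sketch: HC-series core accounting (assumes pCores 0-23 on socket 0, 24-47 on socket 1).
CORES_PER_SOCKET = 24
SOCKETS = 2
RESERVED = {0, 1, 24, 25}   # first two pCores on each socket go to the hypervisor
GB_PER_CORE = 8


def hc_vm_cores_by_socket():
    """Group the pCores left for the VM by socket."""
    by_socket = {s: [] for s in range(SOCKETS)}
    for core in range(SOCKETS * CORES_PER_SOCKET):
        if core not in RESERVED:
            by_socket[core // CORES_PER_SOCKET].append(core)
    return by_socket


if __name__ == "__main__":
    layout = hc_vm_cores_by_socket()
    total = sum(len(c) for c in layout.values())
    print({s: len(c) for s, c in layout.items()}, total, total * GB_PER_CORE)
    # {0: 22, 1: 22} 44 352
```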

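1.3 NIC Configuration

RDMA-capable sizes are configured with two NICs: one for Ethernet and one for the RDMA network.

(Configuring the InfiniBand network)

1.4 Known Issues

1.4.1 QP0 access restriction

To prevent the security risks of low-level hardware access, the guest cannot use Queue Pair 0 (QP0). This should only affect management of the ConnectX NIC and diagnostic tools such as ibdiagnet. It should not affect end-user applications.

1.4.2 Duplicate MAC with cloud-init with Ubuntu on H-series and N-series VMs

This is a known issue that should be fixed in newer kernel versions. It can occur after a VM reboot or after creating a VM image. For a workaround, see the link in the references.

II. Use Cases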

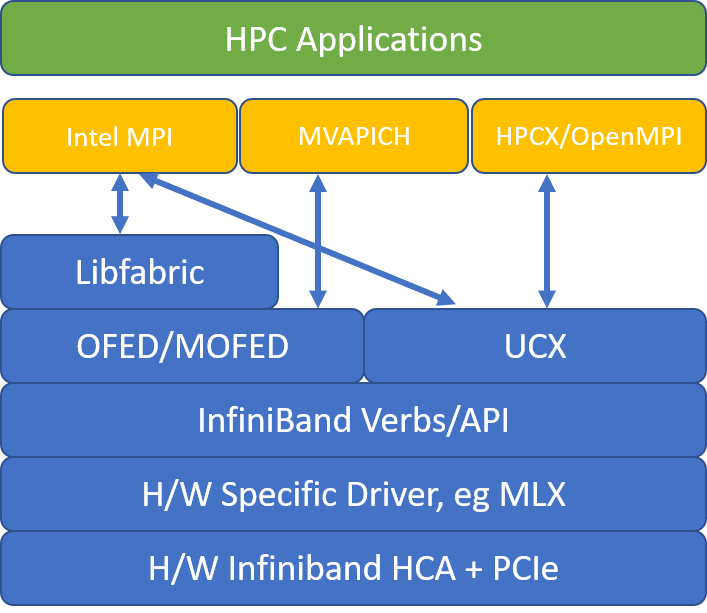

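Improves the scalability and performance of MPI (Message Passing Interface) applications.

Architecture diagram of common MPI libraries:

Some MPI programs need a pkey (partition key) to be specified to achieve tenant isolation, for example: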

pkey

| Command | Output |
|---|---|
| `cat /sys/class/infiniband/mlx5_0/ports/1/pkeys/0` | `0x800b` |
| `cat /sys/class/infiniband/mlx5_0/ports/1/pkeys/1` | `0x7fff` |
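In the InfiniBand architecture a P_Key is a 16-bit value. Its top bit marks full (1) or limited (0) membership, and its low 15 bits identify the partition; 0x7fff is the limited-membership form of the default partition.

A small Python sketch that decodes values such as the two shown above is given below. The function name and the `(partition, full_member)` return shape are illustrative choices, not an existing API.

```python
# Sketch: decode P_Key table entries as read from sysfs.
def decode_pkey(text):
    """Return (partition, full_member) for a P_Key string, or None for an empty slot."""
    value = int(text.strip(), 16)
    if value == 0:
        return None                      # 0x0000: unused table entry
    full_member = bool(value & 0x8000)   # bit 15: 1 = full, 0 = limited membership
    return value & 0x7FFF, full_member


if __name__ == "__main__":
    for raw in ("0x800b", "0x7fff", "0x0000"):
        print(raw, decode_pkey(raw))
```

So 0x800b is a full member of partition 0x000b, while 0x7fff is a limited member of the default partition.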

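III. Billing

Conclusion

The pricing shows a pattern for the HB120-16rs_v3, HB120-32rs_v3, HB120-64rs_v3, HB120-96rs_v3, and HB120rs_v3 sizes. Only one VM is sold per physical host, regardless of how many cores the customer buys. The unused cores are simply not exposed to the VM.

IV. VM Configuration

VM size: HBv3

Image: CentOS_HPC 7.9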

ip a

| # | Interface | Link type | Link address | Link broadcast | IPv4 | IPv6 |
|---|---|---|---|---|---|---|
| 1 | lo | loopback | 00:00:00:00:00:00 | 00:00:00:00:00:00 | 127.0.0.1/8 | ::1/128 |
| 2 | eth0 | ether | 00:22:48:20:34:94 | ff:ff:ff:ff:ff:ff | 10.1.0.4/24 (brd 10.1.0.255) | fe80::222:48ff:fe20:3494/64 |
| 3 | eth1 | ether | 00:15:5d:33:ff:39 | ff:ff:ff:ff:ff:ff | (none) | (none) |
| 4 | ib0 | infiniband | 00:00:09:28:fe:80:00:00:00:00:00:00:00:15:5d:ff:fd:33:ff:39 | 00:ff:ff:ff:ff:12:40:1b:80:52:00:00:00:00:00:00:ff:ff:ff:ff | 172.16.1.48/16 (brd 172.16.255.255) | fe80::215:5dff:fd33:ff39/64 |
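The ib0 addresses above follow the IPoIB link-layer layout of RFC 4391: one reserved octet, a 3-octet queue pair number (QPN), and a 16-octet GID. In the broadcast address the GID is the IPoIB broadcast multicast GID, which embeds the partition key. Here that key is 0x8052, the same value that `pkeys/0` holds on this VM later in this section.

A minimal Python sketch for decoding both addresses follows; the function names are illustrative.

```python
# Sketch: decode IPoIB link-layer addresses (layout per RFC 4391).
def parse_ipoib_hwaddr(addr):
    """Split a 20-octet IPoIB address into (QPN, GID string)."""
    octets = bytes(int(b, 16) for b in addr.split(":"))
    if len(octets) != 20:
        raise ValueError("IPoIB link-layer addresses are 20 octets")
    qpn = int.from_bytes(octets[1:4], "big")   # octet 0 is reserved/flags
    gid = octets[4:]
    gid_str = ":".join(gid[i:i + 2].hex() for i in range(0, 16, 2))
    return qpn, gid_str


def pkey_from_broadcast(brd):
    """The IPoIB broadcast MGID is ff1<scope>:401b:<P_Key>:0:0:0:ffff:ffff."""
    _, gid = parse_ipoib_hwaddr(brd)
    return int(gid.split(":")[2], 16)


if __name__ == "__main__":
    ib0 = "00:00:09:28:fe:80:00:00:00:00:00:00:00:15:5d:ff:fd:33:ff:39"
    brd = "00:ff:ff:ff:ff:12:40:1b:80:52:00:00:00:00:00:00:ff:ff:ff:ff"
    qpn, gid = parse_ipoib_hwaddr(ib0)
    print(hex(qpn), gid)                  # 0x928 fe80:0000:0000:0000:0015:5dff:fd33:ff39
    print(hex(pkey_from_broadcast(brd)))  # 0x8052
```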

lspci

| Address | Class | Device |
|---|---|---|
| 0000:00:00.0 | Host bridge | Intel Corporation 440BX/ZX/DX - 82443BX/ZX/DX Host bridge (AGP disabled) (rev 03) |
| 0000:00:07.0 | ISA bridge | Intel Corporation 82371AB/EB/MB PIIX4 ISA (rev 01) |
| 0000:00:07.1 | IDE interface | Intel Corporation 82371AB/EB/MB PIIX4 IDE (rev 01) |
| 0000:00:07.3 | Bridge | Intel Corporation 82371AB/EB/MB PIIX4 ACPI (rev 02) |
| 0000:00:08.0 | VGA compatible controller | Microsoft Corporation Hyper-V virtual VGA |
| 52af:00:00.0 | Non-Volatile memory controller | Microsoft Corporation Device b111 |
| 6118:00:00.0 | Non-Volatile memory controller | Microsoft Corporation Device b111 |
| f71d:00:02.0 | Infiniband controller | Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] |

ethtool -i ethX

| Field | eth0 | eth1 |
|---|---|---|
| driver | hv_netvsc | hv_netvsc |
| version | (empty) | (empty) |
| firmware-version | N/A | N/A |
| expansion-rom-version | (empty) | (empty) |
| bus-info | (empty) | (empty) |
| supports-statistics | yes | yes |
| supports-test | no | no |
| supports-eeprom-access | no | no |
| supports-register-dump | no | no |
| supports-priv-flags | no | no |

lsmod

Output of `lsmod | grep ib`:

| Module | Size | Used | By |
|---|---|---|---|
| ib_ipoib | 124872 | 0 | |
| ib_cm | 53085 | 2 | rdma_cm,ib_ipoib |
| ib_umad | 27744 | 0 | |
| mlx5_ib | 371350 | 0 | |
| ib_uverbs | 132788 | 2 | mlx5_ib,rdma_ucm |
| ib_core | 357701 | 8 | rdma_cm,ib_cm,iw_cm,mlx5_ib,ib_umad,ib_uverbs,rdma_ucm,ib_ipoib |
| libcrc32c | 12644 | 2 | xfs,nf_conntrack |
| mlx5_core | 1330983 | 1 | mlx5_ib |
| libata | 243094 | 3 | pata_acpi,ata_generic,ata_piix |
| mlx_compat | 55063 | 10 | rdma_cm,ib_cm,iw_cm,mlx5_ib,ib_core,ib_umad,ib_uverbs,mlx5_core,rdma_ucm,ib_ipoib |
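To confirm that a freshly built VM has the same InfiniBand stack loaded as the HPC image above, the `lsmod` output can be checked programmatically. The Python sketch below does this. The `REQUIRED` set is an assumption taken from the modules in the listing above, not an official requirement list, and the function names are hypothetical.

```python
# Sketch: check that the IB kernel modules seen on the HPC image are loaded.
# REQUIRED is an assumption based on the lsmod output above, not an official list.
REQUIRED = {"mlx5_core", "mlx5_ib", "ib_core", "ib_uverbs", "ib_ipoib"}


def parse_lsmod(text):
    """Parse `lsmod` output into {module: (size, refcount, used_by)}."""
    modules = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] == "Module":
            continue  # skip the header line and blank lines
        used_by = fields[3].split(",") if len(fields) > 3 else []
        modules[fields[0]] = (int(fields[1]), int(fields[2]), used_by)
    return modules


def missing_modules(text, required=REQUIRED):
    """Return the required modules that do not appear in the lsmod output."""
    return sorted(set(required) - set(parse_lsmod(text)))


if __name__ == "__main__":
    sample = "\n".join([
        "ib_ipoib 124872 0",
        "mlx5_ib 371350 0",
        "ib_core 357701 8 rdma_cm,ib_cm,iw_cm,mlx5_ib,ib_umad,ib_uverbs,rdma_ucm,ib_ipoib",
        "mlx5_core 1330983 1 mlx5_ib",
    ])
    print(missing_modules(sample))  # ['ib_uverbs']
```

On a live VM the text can come from `subprocess.run(["lsmod"], capture_output=True, text=True).stdout`.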

lscpu

| Field | Value |
|---|---|
| Architecture | x86_64 |
| CPU op-mode(s) | 32-bit, 64-bit |
| Byte Order | Little Endian |
| CPU(s) | 120 |
| On-line CPU(s) list | 0-119 |
| Thread(s) per core | 1 |
| Core(s) per socket | 60 |
| Socket(s) | 2 |
| NUMA node(s) | 4 |
| Vendor ID | AuthenticAMD |
| CPU family | 25 |
| Model | 1 |
| Model name | AMD EPYC 7V13 64-Core Processor |
| Stepping | 0 |
| CPU MHz | 2445.403 |
| BogoMIPS | 4890.80 |
| Hypervisor vendor | Microsoft |
| Virtualization type | full |
| L1d cache | 32K |
| L1i cache | 32K |
| L2 cache | 512K |
| L3 cache | 32768K |
| NUMA node0 CPU(s) | 0-29 |
| NUMA node1 CPU(s) | 30-59 |
| NUMA node2 CPU(s) | 60-89 |
| NUMA node3 CPU(s) | 90-119 |
| Flags | fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm art rep_good nopl extd_apicid aperfmperf eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext invpcid_single retpoline_amd vmmcall fsgsbase bmi1 avx2 smep bmi2 erms invpcid rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 clzero xsaveerptr arat umip vaes vpclmulqdq |
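The `NUMA nodeN CPU(s)` lines above show 30 CPUs per NUMA node on the 120-core HBv3 VM, which is what a script needs in order to pin MPI ranks per NUMA domain. A minimal Python sketch that turns those lines into a node-to-CPU map follows. It uses only the standard `re` module, and the function names are hypothetical.

```python
import re

# Matches lines such as "NUMA node0 CPU(s):     0-29".
NUMA_LINE = re.compile(r"^NUMA node(\d+) CPU\(s\):[ \t]+(\S+)", re.MULTILINE)


def parse_cpu_list(spec):
    """Expand a CPU list like '0-29' or '0-3,8,10-11' into integers."""
    cpus = []
    for part in spec.split(","):
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_map(lscpu_text):
    """Return {node: [cpus]} from the 'NUMA nodeN CPU(s):' lines of lscpu output."""
    return {int(n): parse_cpu_list(spec) for n, spec in NUMA_LINE.findall(lscpu_text)}


if __name__ == "__main__":
    sample = "\n".join([
        "NUMA node0 CPU(s):     0-29",
        "NUMA node1 CPU(s):     30-59",
        "NUMA node2 CPU(s):     60-89",
        "NUMA node3 CPU(s):     90-119",
    ])
    for node, cpus in numa_map(sample).items():
        print(node, len(cpus), cpus[0], cpus[-1])
```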

rpm

| Query | Matching packages |
|---|---|
| `rpm -qa\|grep ofed` | ofed-scripts-5.2-OFED.5.2.2.2.3.x86_64, mlnxofed-docs-5.2-2.2.3.0.noarch |
| `rpm -qa\|grep mpi` | mpi-selector-1.0.3-1.52223.x86_64, mpitests_openmpi-3.2.20-5d20b49.52223.x86_64, intel-mpi-doc-2018-2018.4-274.x86_64, intel-mpi-samples-2018.4-274-2018.4-274.x86_64, intel-mpi-installer-license-2018.4-274-2018.4-274.x86_64, intel-mpi-sdk-2018.4-274-2018.4-274.x86_64, openmpi-4.1.0rc5-1.52223.x86_64, intel-mpi-rt-2018.4-274-2018.4-274.x86_64, intel-mpi-psxe-2018.4-057-2018.4-057.x86_64 |
| `rpm -qa\|grep sharp` | sharp-2.4.5.MLNX20210302.7c3c223-1.52223.x86_64 |
| `rpm -qa\|grep sm` | opensm-libs-5.8.1.MLNX20210120.81574f7-0.1.52223.x86_64, libsmartcols-2.23.2-65.el7_9.1.x86_64, opensm-5.8.1.MLNX20210120.81574f7-0.1.52223.x86_64, opensm-static-5.8.1.MLNX20210120.81574f7-0.1.52223.x86_64, smartmontools-7.0-2.el7.x86_64, psmisc-22.20-17.el7.x86_64, opensm-devel-5.8.1.MLNX20210120.81574f7-0.1.52223.x86_64 |

pkey

Contents of `/sys/class/infiniband/mlx5_ib0/ports/1`: cap_mask, cm_rx_duplicates, cm_rx_msgs, cm_tx_msgs, cm_tx_retries, counters, gid_attrs, gids, has_smi, hw_counters, lid, lid_mask_count, link_layer, phys_state, pkeys, rate, sm_lid, sm_sl, state.

The `pkeys/` subdirectory contains 128 entries, named 0 through 127. Sample values:

| File | `cat` output |
|---|---|
| pkeys/120 | 0x0000 |
| pkeys/119 | 0x0000 |
| pkeys/0 | 0x8052 |
| pkeys/1 | 0x7fff |
| pkeys/2 | 0x0000 |
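Most of the 128 P_Key table slots above hold 0x0000, so it is easier to scan the whole table than to `cat` entries one by one. A Python sketch that returns only the non-zero entries is shown below.

It uses `pathlib` and reads the same sysfs layout as the listing. The default device name `mlx5_ib0` and port `1` are copied from that listing and may differ on other VMs. The function name is hypothetical.

```python
from pathlib import Path

# Device and port copied from the listing above; they may differ on other VMs.
DEFAULT_PORT = "/sys/class/infiniband/mlx5_ib0/ports/1"


def list_pkeys(port_dir=DEFAULT_PORT):
    """Return {table index: P_Key} for every non-zero entry in <port_dir>/pkeys."""
    entries = {}
    for entry in (Path(port_dir) / "pkeys").iterdir():
        value = int(entry.read_text().strip(), 16)
        if value:                      # 0x0000 marks an unused slot
            entries[int(entry.name)] = value
    return dict(sorted(entries.items()))


if __name__ == "__main__":
    if (Path(DEFAULT_PORT) / "pkeys").is_dir():
        for index, pkey in list_pkeys().items():
            print(index, hex(pkey))
    else:
        print("no such port on this machine:", DEFAULT_PORT)
```

On the HBv3 VM above, the result would include at least index 0 (0x8052) and index 1 (0x7fff).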

Image: CentOS 7.9 (non-HPC)

ip a

| # | Interface | Link type | Link address | Link broadcast | IPv4 | IPv6 |
|---|---|---|---|---|---|---|
| 1 | lo | loopback | 00:00:00:00:00:00 | 00:00:00:00:00:00 | 127.0.0.1/8 | ::1/128 |
| 2 | eth0 | ether | 00:0d:3a:11:81:b1 | ff:ff:ff:ff:ff:ff | 10.0.0.4/24 (brd 10.0.0.255) | fe80::20d:3aff:fe11:81b1/64 |
| 3 | eth1 | ether | 00:15:5d:34:00:0a | ff:ff:ff:ff:ff:ff | (none) | (none) |
| 4 | eth2 | ether | 00:0d:3a:11:81:b1 | ff:ff:ff:ff:ff:ff | (none) | (none) |

lspci

| Address | Class | Device |
|---|---|---|
| 0000:00:00.0 | Host bridge | Intel Corporation 440BX/ZX/DX - 82443BX/ZX/DX Host bridge (AGP disabled) (rev 03) |
| 0000:00:07.0 | ISA bridge | Intel Corporation 82371AB/EB/MB PIIX4 ISA (rev 01) |
| 0000:00:07.1 | IDE interface | Intel Corporation 82371AB/EB/MB PIIX4 IDE (rev 01) |
| 0000:00:07.3 | Bridge | Intel Corporation 82371AB/EB/MB PIIX4 ACPI (rev 02) |
| 0000:00:08.0 | VGA compatible controller | Microsoft Corporation Hyper-V virtual VGA |
| 037e:00:02.0 | Ethernet controller | Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] (rev 80) |
| 7af8:00:00.0 | Non-Volatile memory controller | Microsoft Corporation Device b111 |
| 93b8:00:02.0 | Infiniband controller | Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] |
| a929:00:00.0 | Non-Volatile memory controller | Microsoft Corporation Device b111 |

ethtool -i ethX

| Field | eth0 | eth1 | eth2 |
|---|---|---|---|
| driver | hv_netvsc | hv_netvsc | mlx5_core |
| version | (empty) | (empty) | 5.0-0 |
| firmware-version | N/A | N/A | 14.25.8368 (MSF0010110035) |
| expansion-rom-version | (empty) | (empty) | (empty) |
| bus-info | (empty) | (empty) | 037e:00:02.0 |
| supports-statistics | yes | yes | yes |
| supports-test | no | no | yes |
| supports-eeprom-access | no | no | no |
| supports-register-dump | no | no | no |
| supports-priv-flags | no | no | yes |
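The `driver` field is what distinguishes the interfaces above. `hv_netvsc` is the Hyper-V synthetic NIC driver, and `mlx5_core` drives Mellanox ConnectX-4 and later devices. The `bus-info` of eth2 (037e:00:02.0) ties it to the ConnectX-4 Lx VF in the lspci output.

A minimal Python sketch that parses `ethtool -i` text and labels the NIC follows. The label strings and function names are illustrative.

```python
# Sketch: parse `ethtool -i <iface>` output and label the NIC by its driver.
DRIVER_KIND = {
    "hv_netvsc": "Hyper-V synthetic NIC",
    "mlx5_core": "Mellanox VF (ConnectX-4 and later)",
}


def parse_ethtool_info(text):
    """Turn 'key: value' lines into a dict; empty values stay empty strings."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")   # split on the first colon only
        if sep:
            info[key.strip()] = value.strip()
    return info


def nic_kind(text):
    """Label the NIC described by `ethtool -i` text, or 'other' for unknown drivers."""
    return DRIVER_KIND.get(parse_ethtool_info(text).get("driver", ""), "other")


if __name__ == "__main__":
    eth2 = "\n".join([
        "driver: mlx5_core",
        "version: 5.0-0",
        "firmware-version: 14.25.8368 (MSF0010110035)",
        "bus-info: 037e:00:02.0",
    ])
    info = parse_ethtool_info(eth2)
    print(nic_kind(eth2), info["bus-info"])  # Mellanox VF (ConnectX-4 and later) 037e:00:02.0
```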

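- With the non-HPC image, the VM sees one ConnectX-4 Lx VF and one ConnectX-6 VF, but the network interface for the ConnectX-6 VF fails to load.

- With the HPC image, the VM sees only the ConnectX-6 VF; neither eth0 nor eth1 is a Mellanox NIC.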
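The difference between the two images can be checked quickly by listing the Mellanox virtual functions in `lspci` output. A Python sketch that relies only on the `<address> <class>: <description>` line format seen above is shown below; the function name is hypothetical.

```python
# Sketch: list Mellanox virtual functions from `lspci` output.
def mellanox_vfs(lspci_text):
    """Return (address, device class, description) for each Mellanox VF line."""
    vfs = []
    for line in lspci_text.splitlines():
        if "Mellanox" not in line or "Virtual Function" not in line:
            continue
        address, rest = line.split(" ", 1)
        dev_class, description = rest.split(": ", 1)
        vfs.append((address, dev_class, description))
    return vfs


if __name__ == "__main__":
    non_hpc = "\n".join([
        "037e:00:02.0 Ethernet controller: Mellanox Technologies MT27710 Family "
        "[ConnectX-4 Lx Virtual Function] (rev 80)",
        "7af8:00:00.0 Non-Volatile memory controller: Microsoft Corporation Device b111",
        "93b8:00:02.0 Infiniband controller: Mellanox Technologies MT28908 Family "
        "[ConnectX-6 Virtual Function]",
    ])
    for address, dev_class, _ in mellanox_vfs(non_hpc):
        print(address, dev_class)
```

On the HPC image the same function returns a single Infiniband controller entry, and on the non-HPC image it returns an Ethernet and an Infiniband entry.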

V. References

HPC product documentation

- HPC VM product documentation

- HPC configuration

Virtual Machine overview