- 1. logstash

- 3. Beats

- 2.2 Metricbeat

- 2.3 Packetbeat

- 4. Installing the full ELK stack

1. logstash

1.1 Installing Logstash

Logstash requires Java 8, Java 11, or Java 14; other Java versions are not supported. For configuration and environment requirements, see:

https://www.elastic.co/cn/support/matrix#matrix_jvm

https://www.elastic.co/cn/support/matrix

Mirror in mainland China: https://mirrors.huaweicloud.com/logstash/

- Download

wget https://mirrors.huaweicloud.com/logstash/7.9.3/logstash-7.9.3.tar.gz

- Extract

tar -xvf logstash-7.9.3.tar.gz

- Start

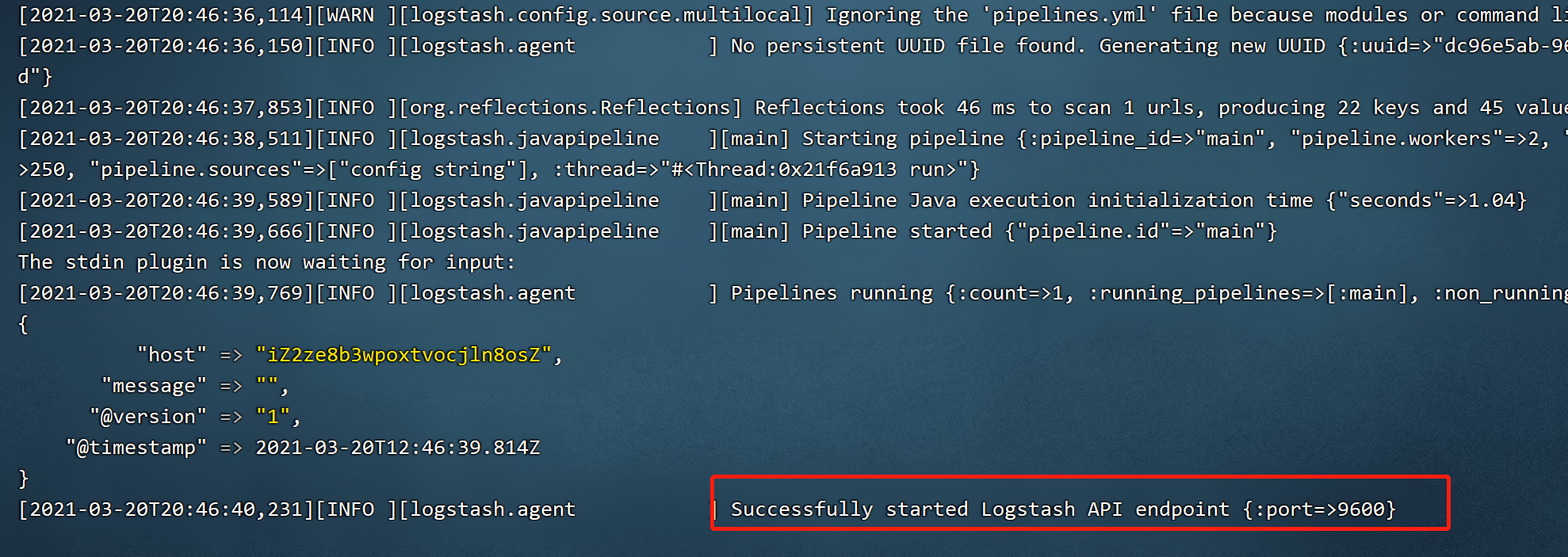

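$ cd logstash-7.9.3/bin/

$ ./logstash -e 'input{ stdin{} } output{ stdout{} }'

`'input{ stdin{} } output{ stdout{} }'` means that the input source is standard input and the output destination is standard output.

Output like that in the screenshot indicates a successful start.

The console output also shows that Logstash's default (API) port is 9600.

- Test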

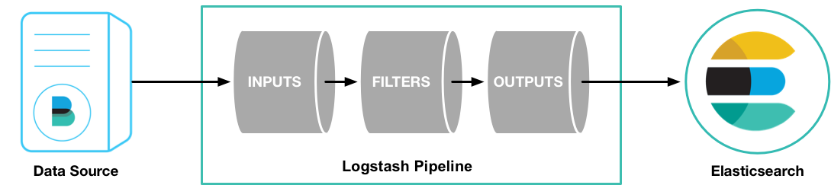

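1.2 Inputs, filters, and outputs

Logstash processes data in three stages: inputs, filters, and outputs, as shown in the figure:

INPUTS:

Inputs fetch data from a data source. Common plugins include:

- beats

- file

- various message queues

- log4j

- redis

See the official documentation: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

FILTERS:

Filters are the data processors of a Logstash pipeline. Each event produced by an input is passed through the filters, which transform it, that is, parse and convert data in various formats. Common filter plugins include:

- grok: parses and structures arbitrary text. It is the foundation of Logstash filtering and is widely used to derive structure from unstructured data. Grok is currently the best way in Logstash to turn unstructured log data into something structured and queryable.

- mutate: performs general transformations on event fields. It can rename, remove, replace, and modify fields.

- date: converts a time field stored as a string into a timestamp.

- drop: drops an event completely, for example debug events.

- clone: makes a copy of an event, possibly adding or removing fields.

- geoip: adds geographical information about an IP address.

OUTPUTS:

Outputs send data to a destination. Common plugins include:

- elasticsearch: the most efficient, convenient, and easily queried store. It is the best choice and the officially recommended one.

- file: writes the output data to disk as files.

- graphite: sends event data to Graphite, a popular open-source tool for storing and graphing metrics. Official documentation: http://graphite.readthedocs.io/en/latest/

- statsd: sends event data to statsd, a service that "listens for statistics, like counters and timers, sent over UDP and sends aggregates to one or more pluggable backend services".

1.3 Logstash configuration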

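Elasticsearch uses dynamic mapping to infer field types when it indexes a document, for example date detection (enabled by default) and numeric detection (disabled by default). When a field needs a specific type, define it explicitly with a mapping when the index is created.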

Logstash's default index settings are template-based. For a Logstash instance acting as an indexer, first create a default mapping file. The example below is named logstash.json and stored at /home/apps/logstash/template/logstash.json. Its `template` pattern must match the `index` set in the Logstash configuration file. Note that it uses legacy mapping syntax (`string`, `not_analyzed`, `_default_`, `_all`) that Elasticsearch 7.x no longer accepts, so treat it as an illustration of the structure:

    {
      "template": "logstash*",
      "settings": {
        "index.number_of_shards": 5,
        "number_of_replicas": 1,
        "index.refresh_interval": "60s"
      },
      "mappings": {
        "_default_": {
          "_all": { "enabled": true },
          "dynamic_templates": [
            {
              "string_fields": {
                "match": "*",
                "match_mapping_type": "string",
                "mapping": {
                  "type": "string",
                  "index": "not_analyzed",
                  "omit_norms": true,
                  "doc_values": true,
                  "fields": {
                    "raw": {
                      "type": "string",
                      "index": "not_analyzed",
                      "ignore_above": 256,
                      "doc_values": true
                    }
                  }
                }
              }
            }
          ],
          "properties": {
            "@version": { "type": "string", "index": "not_analyzed" },
            "geoip": {
              "type": "object",
              "dynamic": true,
              "path": "full",
              "properties": {
                "location": { "type": "geo_point" }
              }
            }
          }
        }
      }
    }

For example, if a field stores IP addresses and you do not want it to be detected as a string, define the type of the `ip` field as `ip` under `_default_`:

$ curl -XPUT 'localhost:9200/test?pretty' -d '{"mappings":{"_default_":{"properties":{"ip":{"type":"ip"}}}}}'

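In the template file, `template` defines the index pattern to match; to target one specific index, write its name directly. The rest of the file defines the mapping and has the same content as the mapping API.

With this file in place, configure the output plugin in Logstash. The `host` and `protocol` options below come from old versions of the elasticsearch output plugin; current versions use `hosts => ["http://localhost:9200"]` instead.

    output {
      elasticsearch {
        # Address of the Elasticsearch server
        host => "localhost"
        # Protocol to use; the default may be "node", depending on the environment
        protocol => "http"
        # Index name pattern
        index => "logstash-%{+YYYY.MM.dd}"
        # Document type; old configurations used index_type, which newer versions dropped in favor of document_type
        document_type => "test"
        # Defaults to true; do not set it to false
        manage_template => true
        # If true, a new template overwrites an existing template with the same name
        template_overwrite => true
        # Name used to look up the mapping template; keep it globally unique
        template_name => "myLogstash"
        # Location of the mapping file
        template => "/home/apps/logstash/template/logstash.json"
      }
    }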
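The relationship between the `index` pattern and the template's `logstash*` pattern can be checked with a few lines of Python. This is only a sketch: `daily_index` is a hypothetical helper that imitates how `%{+YYYY.MM.dd}` expands from the event timestamp in UTC, and `fnmatch` stands in for Elasticsearch's wildcard matching.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def daily_index(prefix: str, ts: datetime) -> str:
    """Imitate '<prefix>-%{+YYYY.MM.dd}' for a timezone-aware timestamp (rendered in UTC)."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


event_time = datetime(2021, 4, 12, 18, 17, 36, tzinfo=timezone.utc)
index = daily_index("logstash", event_time)

print(index)                         # logstash-2021.04.12
print(fnmatch(index, "logstash*"))   # True: the template applies to this index
```

An index whose name does not start with `logstash` would not pick up the template, which is why the two settings have to agree.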

1.4 Syncing database data to Elasticsearch through Logstash

https://www.elastic.co/guide/en/logstash/7.12/plugins-inputs-jdbc.html

3. Beats

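Beats is the collective name for a family of open-source, lightweight log shippers written in Go.

Official documentation: https://www.elastic.co/guide/en/beats/libbeat/current/beats-reference.html

Download (Filebeat 7.11.2): https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.11.2-linux-x86_64.tar.gz

Beats has the following characteristics:

- Open source: the community maintains hundreds of Beats. Community list: https://github.com/elastic/beats/blob/master/libbeat/docs/communitybeats.asciidoc

- Lightweight: small footprint and a single purpose each. Being written in Go gives Beats an inherent performance advantage and no dependency on a Java runtime.

- High performance: very low CPU, memory, and I/O usage.

Positioning of Beats:

In terms of features, Beats is the junior partner: thanks to the Java ecosystem, Logstash is clearly more powerful. However, Logstash's performance as a data collector has long been criticized, and Beats was created to replace Logstash Forwarder.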

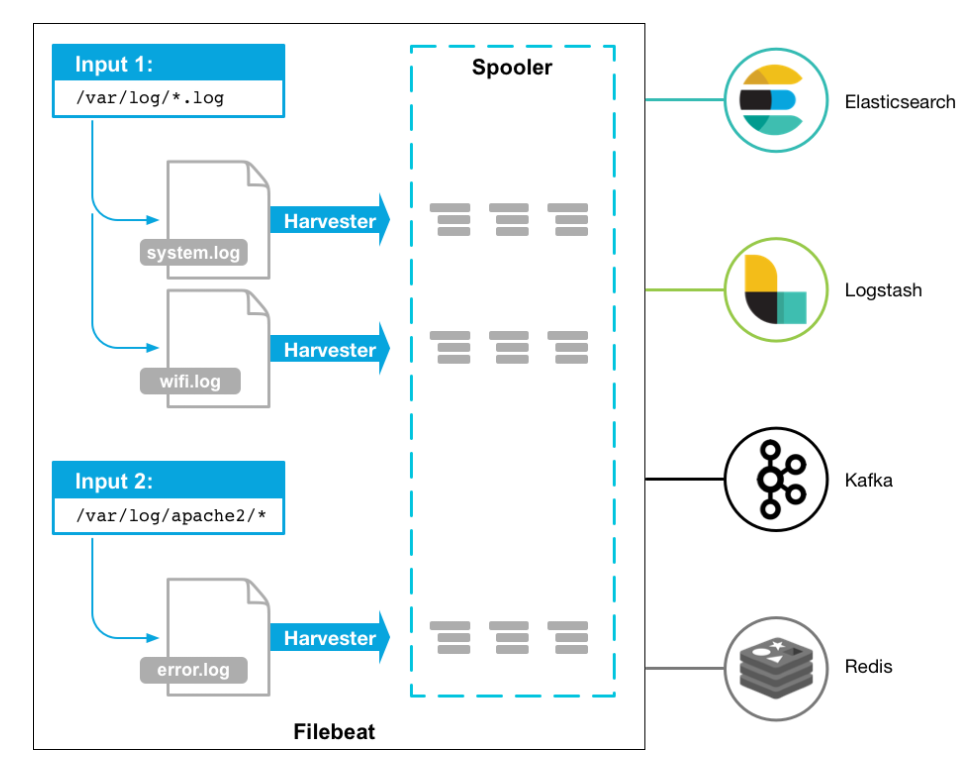

2.1 Filebeat

Filebeat monitors and collects log files; it is mainly used to gather log data.

Filebeat architecture:

Installing and deploying Filebeat

    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.

    - type: stdin

      # Change to true to enable this input configuration.
      enabled: true

      # Paths that should be crawled and fetched. Glob based paths.
      paths:
        - /root/soft/logstash/*.log

    # ================================== Outputs ===================================

    # Configure what output to use when sending the data collected by the beat.

    output.console:
      pretty: true

    # ---------------------------- Elasticsearch Output ----------------------------
    #output.elasticsearch:
      # Array of hosts to connect to.
      #hosts: ["localhost:9200"]

      # Protocol - either `http` (default) or `https`.
      #protocol: "https"

      # Authentication credentials - either API key or username/password.
      #api_key: "id:api_key"
      #username: "elastic"
      #password: "changeme"

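Starting Filebeat: first prepare a log file at /root/soft/logstash/product.log, then run:

```shell
head /root/soft/logstash/product.log | ./filebeat -e -c filebeat.yml
```

`head` is a Linux command that prints the first lines of a file. Here its output is piped into Filebeat's standard input, and `-c` specifies the Filebeat configuration file.

For detailed configuration, see the official documentation: https://www.elastic.co/guide/en/beats/filebeat/current/elasticsearch-output.html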

2.2 Metricbeat

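Metricbeat collects metrics, either from the operating system or from many middleware products. It is mainly used to monitor the performance of systems and software.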

2.3 Packetbeat

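Packetbeat captures network packets and analyzes protocols, monitoring and collecting data about system communication based on protocol and port. It is a real-time network packet analyzer that can be used together with Elasticsearch to provide an application monitoring and performance analysis system.

Supported protocols: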

- ICMP (v4 and v6)

- DHCP (v4)

- DNS

- HTTP

- AMQP 0.9.1

- Cassandra

- MySQL

- PostgreSQL

- Redis

- Thrift-RPC

- MongoDB

- Memcache

- NFS

- TLS

4. Installing the full ELK stack

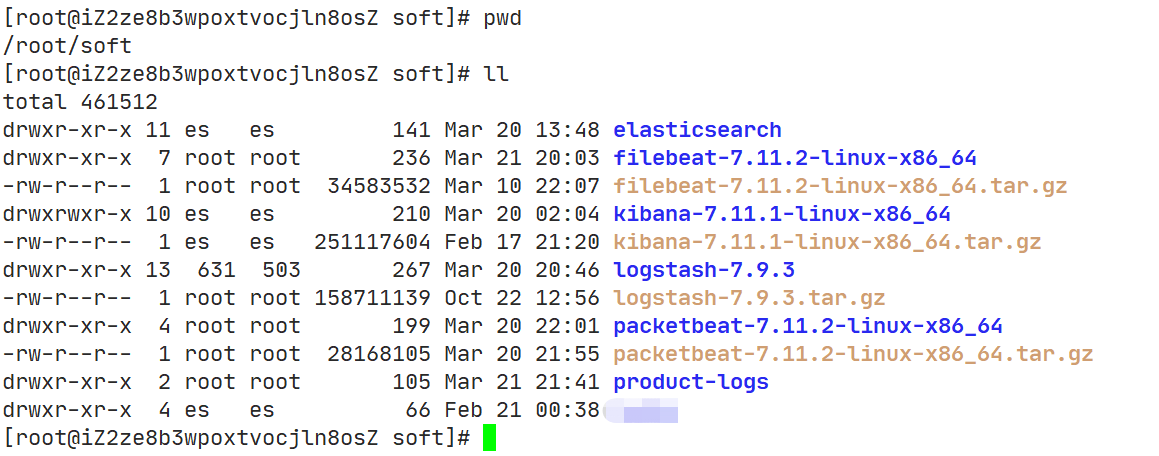

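4.1 Environment

A single machine simulates the whole ELK stack.

Internal IP of the machine: 127.0.0.1

External IP of the machine: 8.140.122.156

All software is installed under /root/soft/

Elasticsearch has 6 nodes in total: 3 master nodes, 2 data nodes, and 1 voting_only node.

Kibana: 1 node

Logstash: 1 node

Filebeat: 1 node

Install paths of the 6 Elasticsearch nodes: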

- /root/soft/elasticsearch/pro-cluster/master01

- /root/soft/elasticsearch/pro-cluster/master02

- /root/soft/elasticsearch/pro-cluster/master03

- /root/soft/elasticsearch/pro-cluster/data01

- /root/soft/elasticsearch/pro-cluster/data02

- /root/soft/elasticsearch/pro-cluster/vote01

Elasticsearch data path:

- /root/soft/elasticsearch/pro-cluster/datas

Elasticsearch log path:

- /root/soft/elasticsearch/pro-cluster

Kibana install path:

- /root/soft/kibana-7.11.1-linux-x86_64

Logstash install path:

- /root/soft/logstash-7.9.3

Filebeat install path:

- /root/soft/filebeat-7.11.2-linux-x86_64

Path where application logs are stored:

Edit the environment file with `vim /etc/profile` and append the following at the end.

    # es master01
    export ES_MASTER_NODE01_HOME=/root/soft/elasticsearch/pro-cluster/master-node-01
    # es master02
    export ES_MASTER_NODE02_HOME=/root/soft/elasticsearch/pro-cluster/master-node-02
    # es master03
    export ES_MASTER_NODE03_HOME=/root/soft/elasticsearch/pro-cluster/master-node-03
    # es data01
    export ES_DATA_NODE01_HOME=/root/soft/elasticsearch/pro-cluster/data-node-01
    # es data02
    export ES_DATA_NODE02_HOME=/root/soft/elasticsearch/pro-cluster/data-node-02
    # es vote01
    export ES_VOTE_NODE01_HOME=/root/soft/elasticsearch/pro-cluster/vote-node-01
    # logstash01
    export LOGSTASH_NODE01_HOME=/root/soft/logstash/logstash-7.9.3-01
    # kibana01
    export KIBANA_NODE01_HOME=/root/soft/kibana/kibana01
    # filebeat
    export FILEBEAT_HOME=/root/soft/filebeat/filebeat-7.11.2-linux-x86_64

Run `source /etc/profile` to make the environment variables take effect.

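4.2 Elasticsearch configuration

Configuration file settings:

- **cluster.name**: name of the whole cluster. Every node uses the same name, and nodes find the cluster through cluster.name.

- **node.name**: name of a node within the cluster, by which other nodes identify it. Defaults to the machine's hostname.

- **path.data**: where data is stored. In production it must not be inside the Elasticsearch installation directory, otherwise an upgrade may wipe the data.

- **path.logs**: where logs are stored. In production it must not be inside the Elasticsearch installation directory either, for the same reason.

- **bootstrap.memory_lock**: whether to lock the process memory so that it cannot be swapped out (swap uses disk as temporary space when the system runs short of memory). Swapping must be avoided in production.

- **network.host**: the IP address this node binds to. Once set, the node is reachable only through that address: with "127.0.0.1" it accepts connections only from the local machine, not through an address such as 192.168.0.1. To make the node reachable from all machines, use "0.0.0.0".

- **http.port**: HTTP service port of this node.

- **transport.port**: transport port of this node, used for communication between cluster nodes, for example during master election.

- **discovery.seed_hosts**: the current master and the master-eligible nodes. The ports are transport ports (transport.port), not HTTP ports. This setting is used for master election: when a master goes down, a new master is elected from this list. If the node must be reachable from the external network, add "[::1]" as well.

- **cluster.initial_master_nodes**: when the cluster is bootstrapped for the first time, the master is elected from the node.name values in this list.

- **discovery.zen.minimum_master_nodes**: split-brain protection, set to **(number of master nodes / 2 + 1)**. Elasticsearch 7.x manages the voting quorum itself and ignores this setting, so it matters only on 6.x and earlier.

- **http.cors.enabled**: whether to enable cross-origin (CORS) support, true or false.

- **http.cors.allow-origin**: which origins may make cross-origin requests; * allows any origin.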
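The quorum rule for `discovery.zen.minimum_master_nodes` is easy to get wrong by one, so here is the arithmetic as a small Python sketch. `master_quorum` is a hypothetical helper name, not an Elasticsearch API.

```python
def master_quorum(master_eligible: int) -> int:
    """Smallest majority of master-eligible nodes: floor(n / 2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


for n in (1, 2, 3, 5):
    print(n, master_quorum(n))
# 1 1
# 2 2
# 3 2
# 5 3
```

With the three master nodes used in this setup the quorum is 2, so the cluster keeps working if one master is lost. With two masters the quorum is also 2, which is why an even number of master-eligible nodes adds no fault tolerance.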

Cluster layout:

| Node name | node.master | node.data | Description |
|---|---|---|---|
| master01 | true | false | Master node |
| master02 | true | false | Master node |
| master03 | true | false | Master node |
| data01 | false | true | Data-only node |
| data02 | false | true | Data-only node |
| vote01 | false | false | Voting-only node, routing node |

master01 configuration:

    cluster.name: pro-cluster
    node.name: master01
    path.data: /root/soft/elasticsearch/pro-cluster/datas/master01
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/master01
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9200
    transport.port: 9300
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: false
    node.max_local_storage_nodes: 5

master02 configuration:

    cluster.name: pro-cluster
    node.name: master02
    path.data: /root/soft/elasticsearch/pro-cluster/datas/master02
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/master02
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9201
    transport.port: 9301
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: false
    node.max_local_storage_nodes: 5

master03 configuration:

    cluster.name: pro-cluster
    node.name: master03
    path.data: /root/soft/elasticsearch/pro-cluster/datas/master03
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/master03
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9202
    transport.port: 9302
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: false
    node.max_local_storage_nodes: 5

data01 configuration:

    cluster.name: pro-cluster
    node.name: data01
    path.data: /root/soft/elasticsearch/pro-cluster/datas/data01
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/data01
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9203
    transport.port: 9303
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.data: true
    node.max_local_storage_nodes: 5

data02 configuration:

    cluster.name: pro-cluster
    node.name: data02
    path.data: /root/soft/elasticsearch/pro-cluster/datas/data02
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/data02
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9204
    transport.port: 9304
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.data: true
    node.max_local_storage_nodes: 5

vote01 configuration (with node.master and node.data both false, this node acts as a coordinating-only node; a true voting-only node in 7.x would need node.master: true together with node.voting_only: true):

    cluster.name: pro-cluster
    node.name: vote01
    path.data: /root/soft/elasticsearch/pro-cluster/datas/vote01
    path.logs: /root/soft/elasticsearch/pro-cluster/logs/vote01
    bootstrap.memory_lock: false
    network.host: 127.0.0.1
    http.port: 9205
    transport.port: 9305
    discovery.seed_hosts: ["127.0.0.1:9300", "127.0.0.1:9301", "127.0.0.1:9302"]
    cluster.initial_master_nodes: ["master01", "master02", "master03"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.data: false
    node.max_local_storage_nodes: 5
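The six files differ only in node name, paths, ports, and roles, so they can be generated rather than copied by hand. The following Python sketch shows one way to do that. `NODES`, `yaml_list`, and `render` are hypothetical names, and the script assumes the master nodes come first so that their transport ports are 9300 to 9302.

```python
# (node name, node.master, node.data), in the order of the cluster table
NODES = [
    ("master01", True, False),
    ("master02", True, False),
    ("master03", True, False),
    ("data01", False, True),
    ("data02", False, True),
    ("vote01", False, False),
]
BASE = "/root/soft/elasticsearch/pro-cluster"
MASTERS = [name for name, master, _ in NODES if master]


def yaml_list(items):
    """Render a flow-style YAML list of quoted strings."""
    return "[" + ", ".join(f'"{item}"' for item in items) + "]"


def render(offset, name, master, data):
    """Build the elasticsearch.yml text for the node at position `offset`."""
    seeds = [f"127.0.0.1:{9300 + i}" for i in range(len(MASTERS))]
    lines = [
        "cluster.name: pro-cluster",
        f"node.name: {name}",
        f"path.data: {BASE}/datas/{name}",
        f"path.logs: {BASE}/logs/{name}",
        "bootstrap.memory_lock: false",
        "network.host: 127.0.0.1",
        f"http.port: {9200 + offset}",        # HTTP ports 9200..9205
        f"transport.port: {9300 + offset}",   # transport ports 9300..9305
        f"discovery.seed_hosts: {yaml_list(seeds)}",
        f"cluster.initial_master_nodes: {yaml_list(MASTERS)}",
        "http.cors.enabled: true",
        'http.cors.allow-origin: "*"',
        f"node.master: {str(master).lower()}",
        f"node.data: {str(data).lower()}",
        "node.max_local_storage_nodes: 5",
    ]
    return "\n".join(lines) + "\n"


for offset, (name, master, data) in enumerate(NODES):
    print(f"# ---- {name} ----")
    print(render(offset, name, master, data))
```

Writing each result to the node's own config/elasticsearch.yml is left out on purpose, because the install paths listed in 4.1 and the environment variables do not use the same directory names.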

4.2 Kibana configuration

    server.host: "0.0.0.0"
    elasticsearch.hosts: ["http://127.0.0.1:9200", "http://127.0.0.1:9201", "http://127.0.0.1:9202"]

Start Kibana:

$ $KIBANA_NODE01_HOME/bin/kibana

Run in the background with nohup:

$ nohup $KIBANA_NODE01_HOME/bin/kibana > /dev/null 2>&1 &

4.3 nginx configuration

Download and install nginx

    # Install httpd (provides htpasswd)
    $ yum -y install httpd
    # Check that htpasswd is installed
    $ which htpasswd
    # Install nginx
    $ yum -y install nginx
    # Create the directory for the password file, then generate the file
    $ mkdir -p /etc/nginx/db
    $ htpasswd -cb /etc/nginx/db/passwd.db {username} {password}
    # Configure nginx
    $ vim /etc/nginx/nginx.conf

The contents of /etc/nginx/nginx.conf:

    # For more information on configuration, see:
    #   * Official English Documentation: http://nginx.org/en/docs/
    #   * Official Russian Documentation: http://nginx.org/ru/docs/

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 1024;
    }

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        # Load modular configuration files from the /etc/nginx/conf.d directory.
        # See http://nginx.org/en/docs/ngx_core_module.html#include
        # for more information.
        include /etc/nginx/conf.d/*.conf;

        server {
            listen 8080 default_server;
            listen [::]:8080 default_server;
            server_name _;
            root /usr/share/nginx/html;

            # Load configuration files for the default server block.
            include /etc/nginx/default.d/*.conf;

            location / {
            }

            error_page 404 /404.html;
            location = /40x.html {
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
            }
        }

        # kibana
        server {
            listen 8081;
            #server_name ***.***.com;

            location / {
                auth_basic "Please log in";
                auth_basic_user_file /etc/nginx/db/passwd.db;
                proxy_pass http://127.0.0.1:5601$request_uri;
                proxy_set_header Host $host:$server_port;
                proxy_set_header X-Real_IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header X-Forwarded-Scheme $scheme;
                proxy_connect_timeout 3;
                proxy_read_timeout 3;
                proxy_send_timeout 3;
                access_log off;
                break;
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root html;
            }
        }
    }

Start nginx:

$ nginx

4.4 Logstash configuration

The file to edit is not $LOGSTASH_NODE01_HOME/config/logstash.yml but $LOGSTASH_NODE01_HOME/config/logstash-sample.conf

    # Sample Logstash configuration for creating a simple
    # Beats -> Logstash -> Elasticsearch pipeline.

    # Input configuration
    input {
      # Port the beats plugin listens on
      beats {
        port => 5044
        tags => ["baobei-product-test"]
      }
      # Listening port for the baobei test environment
      tcp {
        host => "0.0.0.0"
        port => 4560
        mode => "server"
        tags => ["baobei-product-test"]
        codec => json_lines
      }
      # Listening port for the baobei production environment
      tcp {
        host => "0.0.0.0"
        port => 4561
        mode => "server"
        tags => ["baobei-product-pro"]
        codec => json_lines
      }
    }

    # Filter configuration
    # Sample log line: 2021-03-21 21:12:45.767 [appName_IS_UNDEFINED,,,] [pool-1-thread-2] INFO c.y.b.product.productapi.BBService - Download URL of the electronic policy: https://mtest.sinosafe.com.cn/elec/netSaleQueryElecPlyServlet?c_ply_no=H10131P06123920212200525&idCard=420122198403035522
    filter {
      grok {
        match => { "message" => "%{TIMESTAMP_ISO8601:time} \[%{NOTSPACE:appName}\] \[%{NOTSPACE:thread}\] %{LOGLEVEL:level} %{DATA:class} - %{GREEDYDATA:msg}" }
      }
    }

    # Output configuration
    output {
      # Standard output
      stdout { codec => rubydebug }
      # Output for the baobei test environment
      if "baobei-product-test" in [tags] {
        elasticsearch {
          hosts => ["http://127.0.0.1:9200", "http://127.0.0.1:9201", "http://127.0.0.1:9202"]
          index => "baobei-product-test-%{+YYYY.MM.dd}"
          #user => "elastic"
          #password => "changeme"
        }
      }
      # Output for the baobei production environment
      if "baobei-product-pro" in [tags] {
        elasticsearch {
          hosts => ["http://127.0.0.1:9200", "http://127.0.0.1:9201", "http://127.0.0.1:9202"]
          index => "baobei-product-pro-%{+YYYY.MM.dd}"
          #user => "elastic"
          #password => "changeme"
        }
      }
    }
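To see what the grok expression in the filter extracts, it helps to try an equivalent regular expression outside Logstash. The Python sketch below is an approximation, not the real grok patterns: TIMESTAMP_ISO8601 and LOGLEVEL are simplified, the `class` field is captured as `cls`, and the sample message text is invented for the demonstration.

```python
import re

# Rough equivalents: NOTSPACE -> \S+, DATA -> .*?, GREEDYDATA -> .*
LINE = re.compile(
    r"(?P<time>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?) "
    r"\[(?P<appName>\S+)\] "
    r"\[(?P<thread>\S+)\]\s+"
    r"(?P<level>TRACE|DEBUG|INFO|WARN|ERROR|FATAL)\s+"   # %-5level may pad with spaces
    r"(?P<cls>.*?) - "
    r"(?P<msg>.*)"
)

sample = (
    "2021-03-21 21:12:45.767 [appName_IS_UNDEFINED,,,] [pool-1-thread-2] "
    "INFO c.y.b.product.productapi.BBService - download finished"
)

match = LINE.match(sample)
fields = match.groupdict() if match else {}
for key, value in fields.items():
    print(f"{key}: {value}")
```

A line that does not fit the expression yields no match here; in Logstash the corresponding event is kept but tagged `_grokparsefailure`, which is a useful thing to search for when fields are missing in Kibana.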

Start Logstash:

$ $LOGSTASH_NODE01_HOME/bin/logstash -f $LOGSTASH_NODE01_HOME/config/logstash-sample.conf

Run in the background with nohup:

$ nohup $LOGSTASH_NODE01_HOME/bin/logstash -f $LOGSTASH_NODE01_HOME/config/logstash-sample.conf > /dev/null 2>&1 &

Possible error:

[2021-04-12T18:17:36,406][FATAL][logstash.runner ] Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting.

Cause:

A previously started instance left a .lock file in the path.data directory. Deleting that file resolves the error.

Solution:

Find the data path in $LOGSTASH_NODE01_HOME/config/logstash.yml (by default the data directory under the install directory) and check whether it contains a .lock file. If it does, make sure no other Logstash instance is still running and delete the file. .lock is a hidden file, so list it with ls -a.

4.5 Filebeat configuration

    # ============================== Filebeat inputs ===============================
    filebeat.inputs:
    - type: log
      enabled: true
      paths:
      multiline.pattern: '^(\d{4}-\d{2}-\d{2})\s(\d{2}:\d{2}:\d{2})'
      multiline.negate: true
      multiline.match: after

    # ================================== General ===================================
    tags: ["baobei-product-test"]

    # ================================== Outputs ===================================
    # ------------------------------ Logstash Output -------------------------------
    output.logstash:
      hosts: ["localhost:5044"]
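The three multiline settings work together: with `negate: true` and `match: after`, every line that does not start with a timestamp is appended to the preceding line that does. The Python sketch below imitates that behavior to show how a Java stack trace ends up in a single event. `merge_multiline` is a hypothetical helper, and it leaves out Filebeat's limits such as `max_lines` and `timeout`.

```python
import re

# Same expression as multiline.pattern: a line starting with "yyyy-MM-dd HH:mm:ss"
PATTERN = re.compile(r"^(\d{4}-\d{2}-\d{2})\s(\d{2}:\d{2}:\d{2})")


def merge_multiline(lines):
    """Group lines into events: a timestamped line starts an event, others continue it."""
    events = []
    for line in lines:
        if PATTERN.search(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


raw = [
    "2021-03-21 21:12:45.767 [app,,,] [main] ERROR c.y.Demo - request failed",
    "java.lang.IllegalStateException: boom",
    "\tat c.y.Demo.run(Demo.java:42)",
    "2021-03-21 21:12:46.001 [app,,,] [main] INFO  c.y.Demo - recovered",
]

events = merge_multiline(raw)
print(len(events))   # 2
print(events[0])
```

Without the multiline settings each stack-trace line would become its own event and would fail the grok expression in the Logstash filter.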

Start Filebeat:

$ $FILEBEAT_HOME/filebeat -e -c $FILEBEAT_HOME/filebeat.yml

Run in the background with nohup:

$ nohup $FILEBEAT_HOME/filebeat -e -c $FILEBEAT_HOME/filebeat.yml > /dev/null 2>&1 &

4.6 Spring Boot configuration

Add the Maven dependency

    <!-- logstash -->
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>6.6</version>
    </dependency>

logback-spring.xml in the Spring Boot project

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration debug="true">
        <include resource="org/springframework/boot/logging/logback/defaults.xml" />
        <springProperty scope="context" name="appName" source="spring.application.name" />
        <springProperty scope="context" name="appPort" source="server.port" />
        <springProperty scope="context" name="logstash-ip" source="logstash.ip" />
        <springProperty scope="context" name="logstash-port" source="logstash.port" />

        <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>INFO</level>
            </filter>
            <encoder>
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [${appName:-},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-Span-Export:-}] [%thread] %-5level %logger{36} - %msg%n</pattern>
                <charset>utf8</charset>
            </encoder>
        </appender>

        <!-- Roll log files daily -->
        <appender name="logFile" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <!-- Name of the log file -->
            <file>logs/product/product.log</file>
            <append>false</append>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <!-- Location and name of rolled files.
                     %d{yyyy-MM-dd}: roll daily.
                     %i: index used when the file grows beyond maxFileSize. -->
                <fileNamePattern>logs/product/product-%d{yyyy-MM-dd}-%i.log</fileNamePattern>
                <!-- Optional. Maximum number of archived files to keep; older files are deleted.
                     With daily rolling and maxHistory set to 365, only the last 365 days are kept.
                     Directories created for archiving are deleted together with the old files. -->
                <maxHistory>60</maxHistory>
                <!-- When the log file exceeds maxFileSize, roll it using the %i index above.
                     Note that SizeBasedTriggeringPolicy cannot provide size-based rolling here;
                     timeBasedFileNamingAndTriggeringPolicy is required. -->
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>512MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <layout class="ch.qos.logback.classic.PatternLayout">
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [${appName:-},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-Span-Export:-}] [%thread] %-5level %logger{36} - %msg%n</pattern>
            </layout>
        </appender>

        <!-- Logstash appender -->
        <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
            <destination>${logstash-ip}:${logstash-port}</destination>
            <!-- Log output encoding -->
            <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
                <providers>
                    <timestamp>
                        <timeZone>UTC</timeZone>
                    </timestamp>
                    <pattern>
                        <pattern>
                            {
                            "time": "%d{yyyy-MM-dd HH:mm:ss.SSS}",
                            "level": "%level",
                            "appName": "${appName:-},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-Span-Export:-}",
                            "pid": "${PID:-}",
                            "thread": "%thread",
                            "class": "%logger{40}",
                            "msg": "%msg%n"
                            }
                        </pattern>
                    </pattern>
                </providers>
            </encoder>
            <!--<encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>-->
        </appender>

        <root level="INFO">
            <appender-ref ref="LOGSTASH"/>
            <appender-ref ref="logFile"/>
            <appender-ref ref="console"/>
        </root>
    </configuration>

application-dev.properties

    # Logstash settings
    logstash.ip=<logstash address>
    logstash.port=4560

application-pro.properties

    # Logstash settings
    logstash.ip=<logstash address>
    logstash.port=4561
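The two ports map to the two `tcp` inputs in the Logstash configuration, both of which use the `json_lines` codec: each event is one JSON object terminated by a newline. The Python sketch below shows that wire format, which is handy for pushing a test event without starting the Spring Boot application. `encode_event` and `send_event` are hypothetical helpers, and `send_event` is defined but not called because it needs a running Logstash.

```python
import json
import socket


def encode_event(fields: dict) -> bytes:
    """Serialize one event as a single JSON line, UTF-8 encoded."""
    return (json.dumps(fields, ensure_ascii=False) + "\n").encode("utf-8")


def send_event(host: str, port: int, fields: dict) -> None:
    """Open a TCP connection to a Logstash tcp input and send one event."""
    with socket.create_connection((host, port), timeout=3) as conn:
        conn.sendall(encode_event(fields))


payload = encode_event({"level": "INFO", "msg": "hello"})
print(payload)   # b'{"level": "INFO", "msg": "hello"}\n'

# Example call against the test-environment input (requires Logstash to be listening):
# send_event("127.0.0.1", 4560, {"level": "INFO", "msg": "hello"})
```

An event sent to port 4560 should be tagged `baobei-product-test` and land in the test index, while port 4561 corresponds to the production tag.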

Spring Boot exception handling

    import lombok.extern.slf4j.Slf4j;
    import org.springframework.web.bind.annotation.CrossOrigin;
    import org.springframework.web.bind.annotation.ExceptionHandler;
    import org.springframework.web.bind.annotation.ResponseBody;
    import org.springframework.web.bind.annotation.RestControllerAdvice;
    import vo.Result;

    import java.io.PrintWriter;
    import java.io.StringWriter;

    /**
     * Exception handling
     * @author wentang
     */
    @RestControllerAdvice
    @CrossOrigin
    @Slf4j
    public class ExceptionConfig {

        /**
         * Intercepts all runtime exceptions globally
         */
        @ExceptionHandler(RuntimeException.class)
        public Result<?> runtimeException(RuntimeException e) {
            // Write the full stack trace into the log so that it reaches Logstash
            StringWriter stringWriter = new StringWriter();
            PrintWriter printWriter = new PrintWriter(stringWriter);
            e.printStackTrace(printWriter);
            log.error(stringWriter.toString());
            return new Result<>().fail500(e.toString());
        }

        /**
         * Catches and handles system exceptions
         */
        @ExceptionHandler(Exception.class)
        @ResponseBody
        public Result<?> exception(Exception e) {
            StringWriter stringWriter = new StringWriter();
            PrintWriter printWriter = new PrintWriter(stringWriter);
            e.printStackTrace(printWriter);
            log.error(stringWriter.toString());
            // Return JSON
            return new Result<>().fail500(e.toString());
        }
    }