安装Flink

根据官网的示例做一次MySQL->MySQL 的同步

准备sql

注意两个数据库不一样

- 我用的是docker启动的两个数据库

数据库为:mysql8.0 +

源数据

CREATE DATABASE IF NOT EXISTS `flink_source` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci ;CREATE TABLE `flink_test` (`id` bigint NOT NULL AUTO_INCREMENT COMMENT 'id',`t_name` varchar(100) NOT NULL COMMENT '名称',`logo_url` varchar(200) DEFAULT NULL COMMENT '图片路径',PRIMARY KEY (`id`) USING BTREE) ENGINE=InnoDB AUTO_INCREMENT=14 DEFAULT CHARSET=utf8mb3 ROW_FORMAT=DYNAMIC COMMENT='test';

目标数据

一模一样的同步

CREATE DATABASE IF NOT EXISTS `flink_sink` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci ;CREATE TABLE `flink_test` (`id` bigint NOT NULL AUTO_INCREMENT COMMENT 'id',`t_name` varchar(100) NOT NULL COMMENT '名称',`logo_url` varchar(200) DEFAULT NULL COMMENT '图片路径',PRIMARY KEY (`id`) USING BTREE) ENGINE=InnoDB AUTO_INCREMENT=14 DEFAULT CHARSET=utf8mb3 ROW_FORMAT=DYNAMIC COMMENT='test';

不一样的同步

CREATE DATABASE IF NOT EXISTS `flink_sink` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci ;CREATE TABLE `flink_test2` (`name` varchar(100) NOT NULL COMMENT '名称',`logo` varchar(200) DEFAULT NULL COMMENT '图片路径',`UNIQUE KEY name (name`)`) ENGINE=InnoDB AUTO_INCREMENT=14 DEFAULT CHARSET=utf8mb3 ROW_FORMAT=DYNAMIC COMMENT='test';

在 Flink SQL CLI 中使用 Flink DDL 创建表

进入 sql CLI :

**./bin/sql-client.sh**依次输入以下命令后,对表格进行增删改查看效果

- MySQL IP注意更改

SET execution.checkpointing.interval = 3s;CREATE TABLE mysql_binlog (id INT NOT NULL,t_name STRING,logo_url STRING,primary key (id) not enforced) WITH ('connector' = 'mysql-cdc','hostname' = '192.168.0.99','port' = '3306','username' = 'root','password' = 'root','database-name' = 'flink_source','table-name' = 'flink_test');CREATE TABLE test_cdc_sink (id INT,t_name STRING,logo_url STRING,primary key (id) not enforced) WITH ('connector' = 'jdbc','driver' = 'com.mysql.cj.jdbc.Driver','url' = 'jdbc:mysql://192.168.0.51:3316/flink_sink?serverTimezone=Asia/Shanghai&useSSL=false','username' = 'root','password' = 'root','table-name' = 'flink_test');insert into test_cdc_sink select * from mysql_binlog;

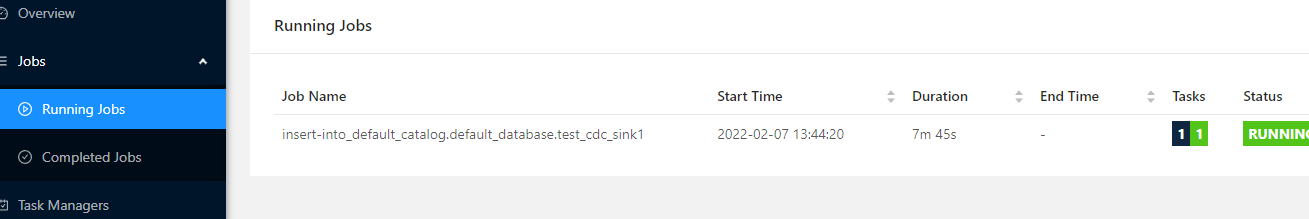

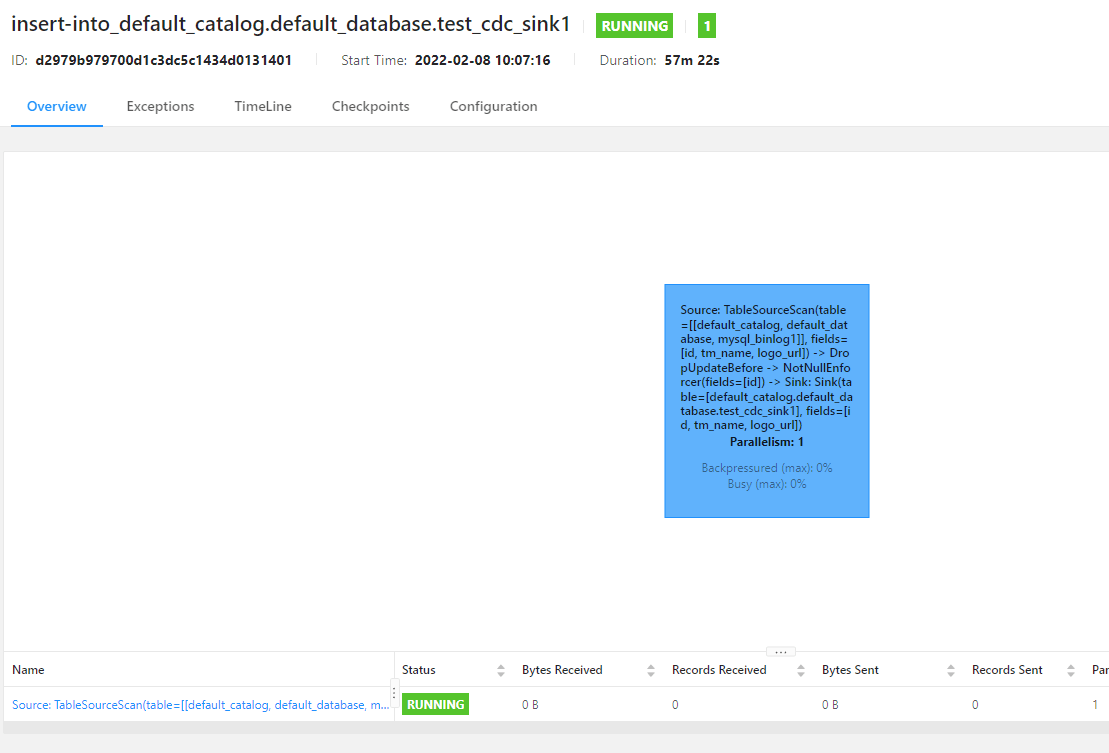

查看Flink任务同时测试数据同步

对源数据进行增删改看看目标数据有咩有进行同步

使用JAVA实现上述功能

搭建 Flink 项目

在Flink的lib中新增jar

也可以不用上传,那包就不轻量了

- flink-connector-jdbc_2.12-1.13.5.jar

- flink-sql-connector-mysql-cdc-2.1.1.jar

- Gson 我就没有上传,打进工作包里直接用了

-

maven详情

```xml <!— Licensed to the Apache Software Foundation (ASF) under one or more contributor license agreements. See the NOTICE file distributed with this work for additional information regarding copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the “License”); you may not use this file except in compliance with the License. You may obtain a copy of the License at

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. —>

<a name="PlRTa"></a>## JAVA代码详情 SQL```javapackage cn.tannn;import org.apache.flink.streaming.api.CheckpointingMode;import org.apache.flink.streaming.api.environment.CheckpointConfig;import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;import org.apache.flink.table.api.EnvironmentSettings;import org.apache.flink.table.api.SqlDialect;import org.apache.flink.table.api.TableResult;import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;public class FlinkCDCWitchSQL {public static void main(String[] args) throws Exception {StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();// Configuration configuration = new Configuration();// configuration.setString(RestOptions.BIND_PORT, "8081-8089");// StreamExecutionEnvironment env = StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(configuration);// env.getCheckpointConfig().setCheckpointStorage(new FileSystemCheckpointStorage("file:///F://project//java//flink-cdc-demo-1//doc"));// 每隔1000 ms进行启动一个检查点【设置checkpoint的周期】env.enableCheckpointing(3000);// 高级选项:// 设置模式为exactly-once (这是默认值)env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);// 确保检查点之间有至少500 ms的间隔【checkpoint最小间隔】env.getCheckpointConfig().setMinPauseBetweenCheckpoints(1500);// 检查点必须在一分钟内完成,或者被丢弃【checkpoint的超时时间】env.getCheckpointConfig().setCheckpointTimeout(60000);// 同一时间只允许进行一个检查点env.getCheckpointConfig().setMaxConcurrentCheckpoints(1);// 表示一旦Flink处理程序被cancel后,会保留Checkpoint数据,以便根据实际需要恢复到指定的Checkpoint【详细解释见备注】//ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION:表示一旦Flink处理程序被cancel后,会保留Checkpoint数据,以便根据实际需要恢复到指定的Checkpoint//ExternalizedCheckpointCleanup.DELETE_ON_CANCELLATION: 表示一旦Flink处理程序被cancel后,会删除Checkpoint数据,只有job执行失败的时候才会保存checkpointenv.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);env.setParallelism(1);EnvironmentSettings Settings = EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, Settings);// 设置 jobNametableEnv.getConfig().getConfiguration().setString("pipeline.name", "table_sql_job");tableEnv.getConfig().setSqlDialect(SqlDialect.DEFAULT);// 数据源表String sourceDDL = "CREATE TABLE mysql_binlog1 ( " +" id INT NOT NULL, " +" t_name STRING, " +" logo_url STRING, " +" primary key (id) not enforced " +") WITH ( " +" 'connector' = 'mysql-cdc', " +" 'hostname' = '192.168.0.99', " +" 'port' = '3306', " +" 'username' = 'root', " +" 'password' = 'root', " +" 'database-name' = 'flink_source', " +" 'table-name' = 'flink_test' " +// " , 'scan.startup.mode' = 'latest-offset' " + // 默认全量加增量") ";// 输出目标表 (一样的字段)String sinkDDL ="CREATE TABLE test_cdc_sink1 ( " +" id INT, " +" t_name STRING, " +" logo_url STRING, " +" primary key (id) not enforced " +") WITH ( " +" 'connector' = 'jdbc', " +" 'driver' = 'com.mysql.cj.jdbc.Driver', " +" 'url' = 'jdbc:mysql://192.168.0.51:3316/flink_sink?serverTimezone=Asia/Shanghai&useSSL=false'," +" 'username' = 'root', " +" 'password' = 'root', " +" 'table-name' = 'flink_test' " +")";// 输出目标表 (不一样的字段 没有主键 更新和删除会出问题,实在没有主键的情况下必须设置唯一索引)String sinkDDL2 ="CREATE TABLE test_cdc_sink2 ( " +" name STRING, " +" logo STRING, " +" primary key (name) not enforced " +") WITH ( " +" 'connector' = 'jdbc', " +" 'driver' = 'com.mysql.cj.jdbc.Driver', " +" 'url' = 'jdbc:mysql://127.0.0.1:3316/test?serverTimezone=Asia/Shanghai&useSSL=false'," +" 'username' = 'root', " +" 'password' = 'root', " +" 'table-name' = 'flink_test2' " +")";// 简单的聚合处理String transformDmlSQL = "insert into test_cdc_sink1 select * from mysql_binlog1";String transformDmlSQL2 = "insert into test_cdc_sink2 select tm_name as name , logo_url as logo from mysql_binlog1";TableResult sourceSQL = tableEnv.executeSql(sourceDDL);TableResult sinkSQL = tableEnv.executeSql(sinkDDL);TableResult transformSQL = tableEnv.executeSql(transformDmlSQL);// tableEnv.executeSql(sinkDDL2);// tableEnv.executeSql(transformDmlSQL2);// 下面的不注释上传到集群会报错// sourceSQL.print();// transformSQL.print();// sinkSQL.print();// env.execute("mysql-sql-mysql-cdc");}}

JAVA代码详情 DataStream API

自定义的存储,数据库已存在的不会同步过去

- 可以把 Read的数据 insert 就行了

FlinkCDC

package cn.tannn;import cn.tannn.sink.MysqlSink;import com.ververica.cdc.connectors.mysql.source.MySqlSource;import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;import org.apache.flink.api.common.eventtime.WatermarkStrategy;import org.apache.flink.streaming.api.datastream.DataStreamSource;import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;/*** @author tn* @version 1* @date 2022-02-06 21:34*/public class FlinkCDC {public static void main(String[] args) throws Exception {StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();env.enableCheckpointing(3000);// 读取mysqlbinlogMySqlSource<String> mySqlSource = MySqlSource.<String>builder().hostname("192.168.0.99").port(3306).databaseList("flink_source") // set captured database.tableList("flink_source.flink_test") // set captured table.username("root").password("root").deserializer(new JsonDebeziumDeserializationSchema()) // converts SourceRecord to JSON String.build();DataStreamSource<String> mySQL_source = env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source");// sink操作mySQL_source.print();mySQL_source.addSink(new MysqlSink());env.execute("mysql-mysql-cdc");}}

MysqlSink

package cn.tannn.sink;import com.google.gson.Gson;import com.google.gson.JsonElement;import com.google.gson.JsonObject;import org.apache.flink.configuration.Configuration;import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;import org.slf4j.Logger;import org.slf4j.LoggerFactory;import java.sql.Connection;import java.sql.DriverManager;import java.sql.PreparedStatement;public class MysqlSink extends RichSinkFunction<String>{protected final Logger logger = LoggerFactory.getLogger(this.getClass());Connection connection;PreparedStatement iStmt,dStmt,uStmt;private Connection getConnection() {Connection conn = null;try {Class.forName("com.mysql.cj.jdbc.Driver");String url = "jdbc:mysql://192.168.0.51:3316/flink_sink?serverTimezone=Asia/Shanghai&useSSL=false";conn = DriverManager.getConnection(url,"root","root");} catch (Exception e) {e.printStackTrace();}return conn;}@Overridepublic void open(Configuration parameters) throws Exception {super.open(parameters);connection = getConnection();String insertSql = "insert into flink_test(`id`, `t_name`, `logo_url`) values (?,?,?)";String deleteSql = "delete from flink_test where id=?";String updateSql = "update flink_test set t_name=?,logo_url=? where id=?";iStmt = connection.prepareStatement(insertSql);dStmt = connection.prepareStatement(deleteSql);uStmt = connection.prepareStatement(updateSql);}// 每条记录插入时调用一次@Overridepublic void invoke(String value, Context context) throws Exception {Gson t = new Gson();JsonObject hs = t.fromJson(value, JsonObject.class);JsonObject source = hs.get("source").getAsJsonObject();String database = source.get("db").getAsString();String table = source.get("table").getAsString();// 操作类型String type = hs.get("op").getAsString();if ("flink".equals(database) && "base_trademark".equals(table)) {if (OperationTypeEnum.INSERT.getAbbr().equals(type)) {logger.info("insert => " + value);JsonObject data = hs.get("after").getAsJsonObject();String id = verifyNull(data.get("id"));String tmName = verifyNull(data.get("t_name"));String logoUrl = verifyNull(data.get("logo_url"));iStmt.setString(1, id);iStmt.setString(2, tmName);iStmt.setString(3, logoUrl);iStmt.executeUpdate();}else if (OperationTypeEnum.DELETE.getAbbr().equals(type)) {logger.info("delete => " + value);JsonObject data = hs.get("before").getAsJsonObject();String id = verifyNull(data.get("id"));dStmt.setString(1, id);dStmt.executeUpdate();}else if (OperationTypeEnum.UPDATE.getAbbr().equals(type)) {logger.info("update => " + value);JsonObject data = hs.get("after").getAsJsonObject();String id = verifyNull(data.get("id"));String tmName = verifyNull(data.get("t_name"));String logoUrl = verifyNull(data.get("logo_url"));uStmt.setString(1, tmName);uStmt.setString(2, logoUrl);uStmt.setString(3, id);int count = uStmt.executeUpdate();if(count<=0){//不存在需要被更新的数据,那就插入iStmt.setString(1, id);iStmt.setString(2, tmName);iStmt.setString(3, logoUrl);iStmt.executeUpdate();}}else {logger.info("read => " + value);}}}@Overridepublic void close() throws Exception {super.close();if(iStmt != null) {iStmt.close();}if(dStmt != null) {dStmt.close();}if(uStmt != null) {uStmt.close();}if(connection != null) {connection.close();}}private static String verifyNull(JsonElement jsonElement) {if(jsonElement.isJsonNull()){return null;}return jsonElement.getAsString();}}

OperationTypeEnum

package cn.tannn.sink;/*** 操作类型** @author tn* @date 2022-02-07 15:44*/@SuppressWarnings("AlibabaEnumConstantsMustHaveComment")public enum OperationTypeEnum {INSERT("c","insert","新增插入(after)"),UPDATE("u","update","更新修改(before and after)"),DELETE("d","delete","删除(before)"),READ("r","select","查询(after)"),;private String abbr;private String type;private String chineseName;OperationTypeEnum(String abbr, String type, String chineseName) {this.abbr = abbr;this.type = type;this.chineseName = chineseName;}public static String getTypeByAddr(String addr){OperationTypeEnum[] values = values();for (OperationTypeEnum va : values ) {if(va.abbr.equals(addr)){return va.type;}}return null;}public static OperationTypeEnum getByAddr(String addr){OperationTypeEnum[] values = values();for (OperationTypeEnum va : values ) {if(va.abbr.equals(addr)){return va;}}return null;}public String getAbbr() {return abbr;}public String getType() {return type;}public String getChineseName() {return chineseName;}}

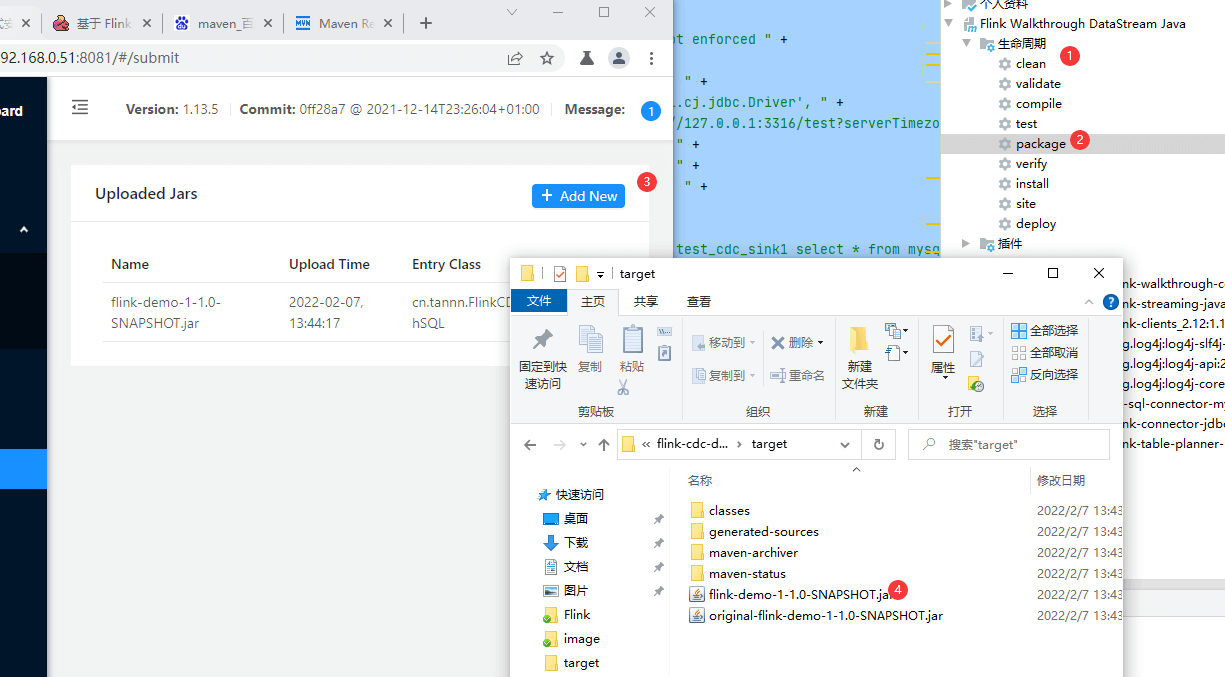

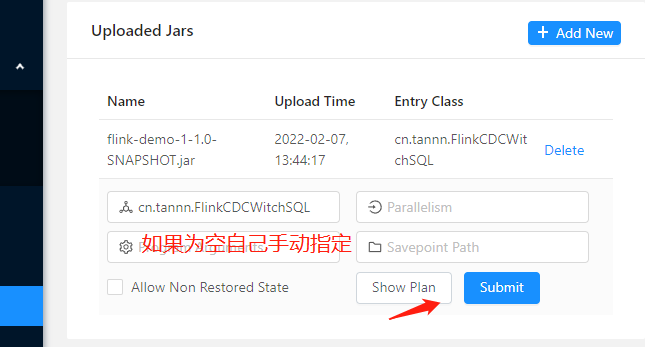

打包上传启动

还可以使用命令行的方式操作 ./bin/flink run -c cn.tannn.FlinkCDCWitchSQL /xx/flink-demo-1-1.0-SNAPSHOT.jar

- JobMain全限定名: cn.tannn.FlinkCDCWitchSQL

- JobJar 路径:/xx/flink-demo-1-1.0-SNAPSHOT.jar