- Error: A JNI error has occurred, please check your installation and try again Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/flink/api/java/typeutils/ResultTypeQueryable

- org/apache/flink/connector/base/source/reader/RecordEmitter

- Flink web UI is unreachable when running locally and shows {"errors":["Not found."]}

- Could not instantiate the executor. Make sure a planner module is on the classpath

- Could not find any factory for identifier 'jdbc' that implements 'org.apache.flink.table.factories

- please declare primary key for sink table when query contains update/delete record.

- unable to open JDBC writer

- Could not acquire the minimum required resources.

- Data is synchronized only once, when the job starts

- PostgreSQL

- Data source rejected establishment of connection, message from server: "Too many connections"

- File upload failed.

- Channel became inactive

Error: A JNI error has occurred, please check your installation and try again Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/flink/api/java/typeutils/ResultTypeQueryable

Error: A JNI error has occurred, please check your installation and try again

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/flink/api/java/typeutils/ResultTypeQueryable

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:756)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)

at java.lang.Class.privateGetMethodRecursive(Class.java:3048)

at java.lang.Class.getMethod0(Class.java:3018)

at java.lang.Class.getMethod(Class.java:1784)

at sun.launcher.LauncherHelper.validateMainClass(LauncherHelper.java:650)

at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:632)

Caused by: java.lang.ClassNotFoundException: org.apache.flink.api.java.typeutils.ResultTypeQueryable

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

… 19 more

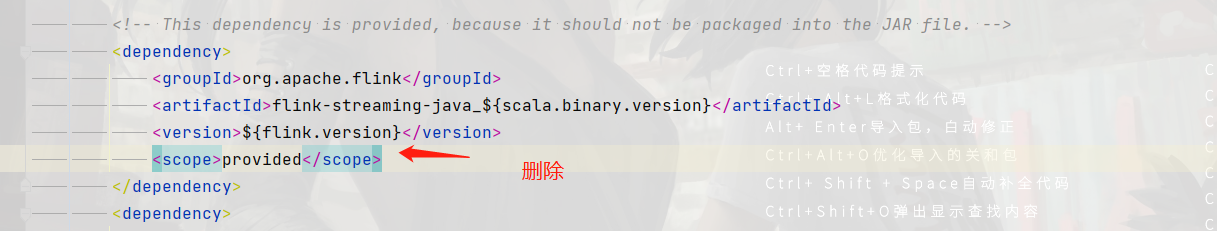

Method 1

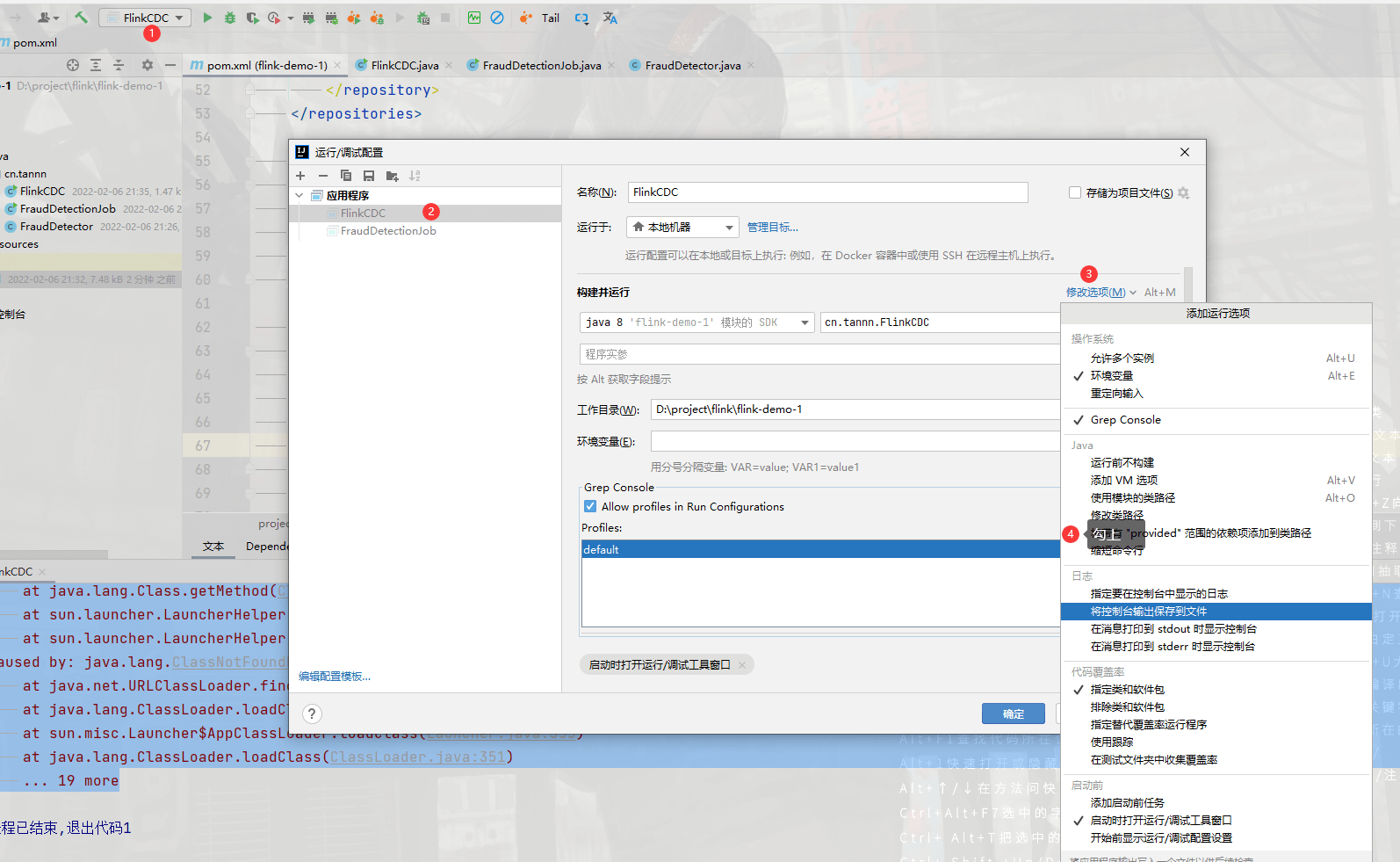

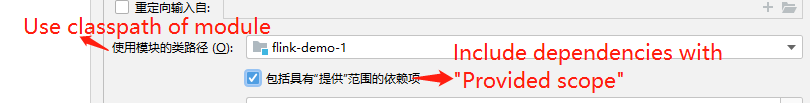

Method 2

Newer IDEA versions

- 3 + (probably available starting from this version)

Older IDEA versions

2020.1.2 does not have this option
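A NoClassDefFoundError like the one above means the class was not visible on the runtime classpath of the JVM that launched the job. As a quick diagnostic, a small stand-alone check along the following lines can confirm whether a given class can be located. This is only a sketch: the class name ClasspathCheck and its method are made up for illustration, and the result is only meaningful when it runs with the same classpath (the same IDEA run configuration) as the failing program.

```java
// Sketch: report whether the classes named in the stack traces are visible at runtime.
// ClasspathCheck and isOnClasspath are hypothetical names used only for this example.
final class ClasspathCheck {

    /** Returns true if the named class can be located by this class's class loader. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError covers NoClassDefFoundError raised for a missing dependency
            return false;
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "org.apache.flink.api.java.typeutils.ResultTypeQueryable",
            "org.apache.flink.connector.base.source.reader.RecordEmitter"
        };
        for (String name : names) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

If a class is reported as missing here but its dependency is declared in the pom.xml, the dependency scope or the run configuration is a likely place to look.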

org/apache/flink/connector/base/source/reader/RecordEmitter

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/flink/connector/base/source/reader/RecordEmitter

at cn.tannn.FlinkCDC.main(FlinkCDC.java:16)

Caused by: java.lang.ClassNotFoundException: org.apache.flink.connector.base.source.reader.RecordEmitter

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

… 1 more

Add the dependencies:

    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-table-api-scala-bridge_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-table-planner_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>

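Flink web UI is unreachable when running locally and shows {"errors":["Not found."]}

Starting from some version (I am not sure which), this dependency is no longer added when a project is created from the template.

Add the dependency:

    <!-- local web UI -->
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-runtime-web_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
      <scope>provided</scope>
    </dependency>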

Could not instantiate the executor. Make sure a planner module is on the classpath

Exception in thread "main" org.apache.flink.table.api.TableException: Could not instantiate the executor. Make sure a planner module is on the classpath

at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.lookupExecutor(StreamTableEnvironmentImpl.scala:534)

at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.create(StreamTableEnvironmentImpl.scala:488)

at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment$.create(StreamTableEnvironment.scala:939)

at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment.create(StreamTableEnvironment.scala)

at cn.tannn.FlinkCDCWitchSQL.main(FlinkCDCWitchSQL.java:37)

Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.delegation.ExecutorFactory' in the classpath.

Reason: No factory supports the additional filters.

The following properties are requested:

class-name=org.apache.flink.table.planner.delegation.BlinkExecutorFactory

streaming-mode=true

The following factories have been considered:

org.apache.flink.table.executor.StreamExecutorFactory

at org.apache.flink.table.factories.ComponentFactoryService.find(ComponentFactoryService.java:76)

at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.lookupExecutor(StreamTableEnvironmentImpl.scala:518)

... 4 more

Process finished with exit code 1

Add the dependency:

    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>

Could not find any factory for identifier 'jdbc' that implements 'org.apache.flink.table.factories

Exception in thread "main" org.apache.flink.table.api.ValidationException: Unable to create a sink for writing table 'default_catalog.default_database.test_cdc_sink'.

Table options are:

'connector'='jdbc'

'driver'='com.mysql.cj.jdbc.Driver'

'password'='root'

'table-name'='base_trademark'

'url'='jdbc:mysql://127.0.0.1:3306/test?serverTimezone=Asia/Shanghai&useSSL=false'

'username'='root'

at org.apache.flink.table.factories.FactoryUtil.createTableSink(FactoryUtil.java:171)

at org.apache.flink.table.planner.delegation.PlannerBase.getTableSink(PlannerBase.scala:373)

at org.apache.flink.table.planner.delegation.PlannerBase.translateToRel(PlannerBase.scala:201)

at org.apache.flink.table.planner.delegation.PlannerBase.$anonfun$translate$1(PlannerBase.scala:162)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:233)

at scala.collection.Iterator.foreach(Iterator.scala:937)

at scala.collection.Iterator.foreach$(Iterator.scala:937)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1425)

at scala.collection.IterableLike.foreach(IterableLike.scala:70)

at scala.collection.IterableLike.foreach$(IterableLike.scala:69)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike.map(TraversableLike.scala:233)

at scala.collection.TraversableLike.map$(TraversableLike.scala:226)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:162)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1518)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:740)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:856)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:730)

at cn.tannn.FlinkCDCWitchSQL.main(FlinkCDCWitchSQL.java:77)

Caused by: org.apache.flink.table.api.ValidationException: Cannot discover a connector using option: 'connector'='jdbc'

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:467)

at org.apache.flink.table.factories.FactoryUtil.getDynamicTableFactory(FactoryUtil.java:441)

at org.apache.flink.table.factories.FactoryUtil.createTableSink(FactoryUtil.java:167)

… 19 more

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'jdbc' that implements 'org.apache.flink.table.factories.DynamicTableFactory' in the classpath.

Available factory identifiers are:

blackhole

datagen

filesystem

mysql-cdc

oracle-cdc

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:319)

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:463)

… 21 more

Add the dependency:

    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-connector-jdbc_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>
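The "Available factory identifiers" list in the error reflects the connector factories registered on the classpath. Assuming these are discovered through Java's standard service-provider files (META-INF/services/org.apache.flink.table.factories.Factory), the following sketch lists every provider class declared in such files, which can help confirm whether the JDBC connector actually ended up on the classpath (for example after building a fat JAR). The class name FactoryListing and its method are hypothetical names for this example.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;

// Sketch: list the provider classes declared for a service interface on the classpath.
final class FactoryListing {

    /** Reads every META-INF/services/<serviceInterface> file and returns the declared classes. */
    static List<String> listProviders(String serviceInterface) {
        List<String> providers = new ArrayList<>();
        ClassLoader loader = FactoryListing.class.getClassLoader();
        try {
            Enumeration<URL> files = loader.getResources("META-INF/services/" + serviceInterface);
            while (files.hasMoreElements()) {
                try (BufferedReader in = new BufferedReader(new InputStreamReader(
                        files.nextElement().openStream(), StandardCharsets.UTF_8))) {
                    String line;
                    while ((line = in.readLine()) != null) {
                        // Service files allow '#' comments; keep only the class name part
                        int hash = line.indexOf('#');
                        String name = (hash >= 0 ? line.substring(0, hash) : line).trim();
                        if (!name.isEmpty()) {
                            providers.add(name);
                        }
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return providers;
    }

    public static void main(String[] args) {
        // Assumption: this is the service file Flink's table factories are registered in
        for (String provider : listProviders("org.apache.flink.table.factories.Factory")) {
            System.out.println(provider);
        }
    }
}
```

If no class from the JDBC connector appears in the output, the dependency above is not reaching the runtime classpath.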

please declare primary key for sink table when query contains update/delete record.

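The sink table definition in the SQL has no primary key. Declare one:

primary key (id) not enforced

- Preferably define a primary key ID on the target table

primary key (ID column) not enforced

- If there really is no primary key ID, define a unique index instead

primary key (unique index column) not enforced

UNIQUE KEY name (name)

- I have not tested this option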
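To show where the clause goes, here is a minimal sketch of a complete sink DDL assembled as a Java string. The table name and connector options are taken from the error output earlier in these notes; the columns id and name, and the class name SinkDdlSketch, are assumptions made for illustration. In a real job the string would be passed to the table environment's executeSql method.

```java
// Sketch: a JDBC sink table DDL that declares a primary key.
// The columns (id, name) are hypothetical; adapt them to the real target table.
final class SinkDdlSketch {

    /** Builds the CREATE TABLE statement for the sink, including the primary key clause. */
    static String sinkDdl() {
        return String.join("\n",
            "CREATE TABLE test_cdc_sink (",
            "  id INT,",
            "  name STRING,",
            // NOT ENFORCED: Flink does not validate the key itself; it is used for upserts
            "  PRIMARY KEY (id) NOT ENFORCED",
            ") WITH (",
            "  'connector' = 'jdbc',",
            "  'driver' = 'com.mysql.cj.jdbc.Driver',",
            "  'url' = 'jdbc:mysql://127.0.0.1:3306/test?serverTimezone=Asia/Shanghai&useSSL=false',",
            "  'table-name' = 'base_trademark',",
            "  'username' = 'root',",
            "  'password' = 'root'",
            ")");
    }

    public static void main(String[] args) {
        // Print the statement so it can be reviewed before being executed
        System.out.println(sinkDdl());
    }
}
```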

unable to open JDBC writer

Add the dependency:

    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>8.0.28</version>
    </dependency>

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

java.io.IOException: unable to open JDBC writer

at org.apache.flink.connector.jdbc.internal.AbstractJdbcOutputFormat.open(AbstractJdbcOutputFormat.java:56)

at org.apache.flink.connector.jdbc.internal.JdbcBatchingOutputFormat.open(JdbcBatchingOutputFormat.java:115)

at org.apache.flink.connector.jdbc.internal.GenericJdbcSinkFunction.open(GenericJdbcSinkFunction.java:49)

at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:34)

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102)

at org.apache.flink.table.runtime.operators.sink.SinkOperator.open(SinkOperator.java:58)

at org.apache.flink.streaming.runtime.tasks.OperatorChain.initializeStateAndOpenOperators(OperatorChain.java:442)

at org.apache.flink.streaming.runtime.tasks.StreamTask.restoreGates(StreamTask.java:585)

at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.call(StreamTaskActionExecutor.java:55)

at org.apache.flink.streaming.runtime.tasks.StreamTask.executeRestore(StreamTask.java:565)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runWithCleanUpOnFail(StreamTask.java:650)

at org.apache.flink.streaming.runtime.tasks.StreamTask.restore(StreamTask.java:540)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:759)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566)

at java.lang.Thread.run(Thread.java:750)

Caused by: com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at com.mysql.cj.jdbc.exceptions.SQLError.createCommunicationsException(SQLError.java:174)

at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:64)

at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:836)

at com.mysql.cj.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:456)

at com.mysql.cj.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:246)

at com.mysql.cj.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:197)

at org.apache.flink.connector.jdbc.internal.connection.SimpleJdbcConnectionProvider.getOrEstablishConnection(SimpleJdbcConnectionProvider.java:121)

at org.apache.flink.connector.jdbc.internal.AbstractJdbcOutputFormat.open(AbstractJdbcOutputFormat.java:54)

… 14 more

Caused by: com.mysql.cj.exceptions.CJCommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:61)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:105)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:151)

at com.mysql.cj.exceptions.ExceptionFactory.createCommunicationsException(ExceptionFactory.java:167)

at com.mysql.cj.protocol.a.NativeSocketConnection.connect(NativeSocketConnection.java:91)

at com.mysql.cj.NativeSession.connect(NativeSession.java:144)

at com.mysql.cj.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:956)

at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:826)

… 19 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at com.mysql.cj.protocol.StandardSocketFactory.connect(StandardSocketFactory.java:155)

at com.mysql.cj.protocol.a.NativeSocketConnection.connect(NativeSocketConnection.java:65)

… 22 more

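Another possible cause is that the database simply cannot be reached, which is what the final "Connection refused" in the trace points to.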
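Before digging into driver versions, it can be worth confirming that the database port accepts TCP connections at all from the machine (or container) where the Flink task runs. The following is a minimal sketch using only the JDK; the class name PortCheck is hypothetical, and the host and port are taken from the JDBC URL shown earlier in these notes.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Sketch: check whether a TCP connection to host:port can be opened within a timeout.
final class PortCheck {

    /** Returns true if a TCP connection could be established, false on any I/O failure. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers "Connection refused", timeouts and unknown hosts
            return false;
        }
    }

    public static void main(String[] args) {
        // Host and port from the JDBC URL jdbc:mysql://127.0.0.1:3306/test
        String host = "127.0.0.1";
        int port = 3306;
        System.out.println(host + ":" + port + " reachable: " + canConnect(host, port, 3000));
    }
}
```

A result of false here means the problem lies in the network path or the database process rather than in the Flink JDBC connector.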

PostgreSQL

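On Windows, the PostgreSQL configuration files are usually located in:

**C:\Program Files\PostgreSQL\14\data**

Remote connection problems

By default PostgreSQL only accepts connections from 127.0.0.1, so remote access does not work.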

Caused by: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "192.168.31.61", user "postgres", database "flink", no encryption (pgjdbc: autodetected server-encoding to be GB2312, if the message is not readable, please check database logs and/or host, port, dbname, user, password, pg_hba.conf)

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:525)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:146)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:197)

at org.postgresql.core.ConnectionFactory.openConnection(ConnectionFactory.java:49)

at org.postgresql.jdbc.PgConnection.<init>(PgConnection.java:217)

at org.postgresql.Driver.makeConnection(Driver.java:458)

at org.postgresql.Driver.connect(Driver.java:260)

at io.debezium.jdbc.JdbcConnection.lambda$patternBasedFactory$1(JdbcConnection.java:231)

at io.debezium.jdbc.JdbcConnection.connection(JdbcConnection.java:872)

at io.debezium.jdbc.JdbcConnection.connection(JdbcConnection.java:867)

at io.debezium.connector.postgresql.TypeRegistry.<init>(TypeRegistry.java:122)

- 处理方法一: 使用127.0.0.1/localhost 代理IP链接

-

打开

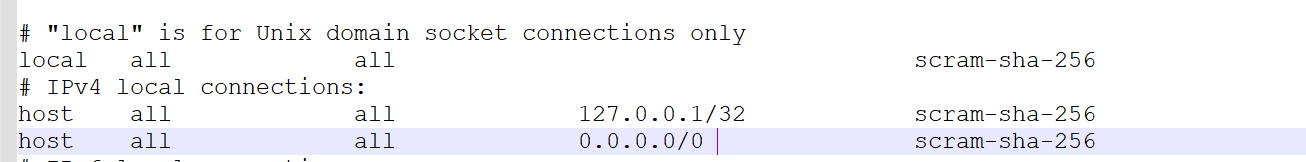

**data/pg_hdb.conf**找到**# IPv4 local connections**# IPv4 local connections:host all all 127.0.0.1/32 scram-sha-256

新增 (我这设置的是 所有主机都能连,根据你自己的环境设置相应主机IP)

host all all 0.0.0.0/0 scram-sha-256

- 重启PostgreSQL

- 去服务里右击重启

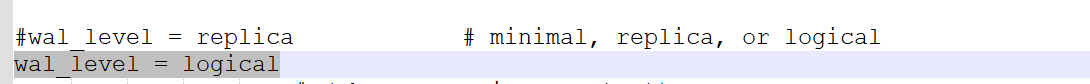

ERROR: logical decoding requires wal_level >= logical

Modify the configuration file as the message suggests:

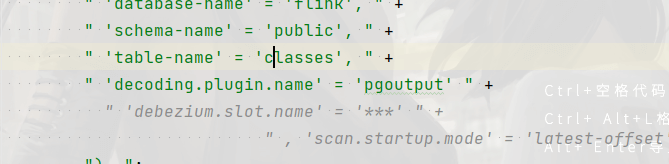

I am not sure why, but the problem went away after I set this parameter as described there:

- 'decoding.plugin.name' = 'pgoutput'

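Data source rejected establishment of connection, message from server: "Too many connections"

The MySQL server refused the new connection because it had already reached its connection limit.

Reference: https://blog.csdn.net/qq_31454017/article/details/71108278

1. Query the maximum number of connections:

    show variables like "max_connections";

2. Change the maximum number of connections temporarily:

    set GLOBAL max_connections=1000;

3. Change the maximum number of connections in the configuration file, under [mysqld], then restart the service:

    max_connections=1000

Inspect and clear the current connections

1. List all connections:

    show full processlist;

2. Kill connections (this query generates the KILL statements to run):

    select concat('KILL ',id,';') from information_schema.processlist where user='root'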

File upload failed.

- Environment: Docker

- Flink 1.13.6

- Dinky

Open up the permissions of the target/flink-web-upload directory.

Cause

- I mapped the target directory to the host machine, and the host directory did not have sufficient permissions, so every upload failed

- chmod -R 777 /home/detabes/flink/target

Volume mappings:

- /home/detabes/flink/target:/opt/flink/target # prevents submitted JARs from being lost when Flink restarts

- /home/detabes/flink/sqlfile:/opt/flink/sqlfile

Caused by: org.apache.flink.runtime.client.JobSubmissionException: Failed to submit JobGraph.

at org.apache.flink.client.program.rest.RestClusterClient.lambda$submitJob$9(RestClusterClient.java:405)

at java.util.concurrent.CompletableFuture.uniExceptionally(CompletableFuture.java:884)

at java.util.concurrent.CompletableFuture$UniExceptionally.tryFire(CompletableFuture.java:866)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1990)

at org.apache.flink.runtime.concurrent.FutureUtils.lambda$retryOperationWithDelay$9(FutureUtils.java:364)

at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)

at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:750)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

at java.util.concurrent.CompletableFuture.postFire(CompletableFuture.java:575)

at java.util.concurrent.CompletableFuture$UniCompose.tryFire(CompletableFuture.java:943)

at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:456)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more

Caused by: org.apache.flink.runtime.rest.util.RestClientException: [File upload failed.]

at org.apache.flink.runtime.rest.RestClient.parseResponse(RestClient.java:486)

at org.apache.flink.runtime.rest.RestClient.lambda$submitRequest$3(RestClient.java:466)

at java.util.concurrent.CompletableFuture.uniCompose(CompletableFuture.java:966)

at java.util.concurrent.CompletableFuture$UniCompose.tryFire(CompletableFuture.java:940)

... 4 more

Channel became inactive

This exception is reported by Dinky.

The MySQL binlog_format is set incorrectly.

Error details

Caused by: org.apache.flink.table.api.ValidationException: The MySQL server is configured with binlog_format MIXED rather than ROW, which is required for this connector to work properly. Change the MySQL configuration to use a binlog_format=ROW and restart the connector.

Change the MySQL binlog_format

    vim /etc/mysql/my.cnf
    # under [mysqld]
    binlog_format=row
    # restart MySQL, using whichever applies:
    docker restart mysql
    service mysqld restart