- Reference video
- Official website (the open-source edition is sufficient)
- Official documentation
- docker
  - mongodb 4.2
  - elasticsearch 7.10.2
  - graylog 4.1
- spring
  - Spring Boot 2.5.4
  - logback-gelf 3.0.0 (de.siegmar)

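docker-compose file

Graylog is reachable at 127.0.0.1:9000.

The versions below were the latest on the official site as of 2021-08-30. Note:

- Set the time zone with `GRAYLOG_ROOT_TIMEZONE: Asia/Shanghai`; the default is UTC.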

```yaml
version: "3.8"

services:
  mongodb:
    image: "mongo:4.2"
    container_name: graylog_demo_mongo
    volumes:
      - "mongodb_data:/data/db"
    restart: "always"

  elasticsearch:
    environment:
      ES_JAVA_OPTS: "-Xms1g -Xmx1g"
      bootstrap.memory_lock: "true"
      discovery.type: "single-node"
      http.host: "0.0.0.0"
      action.auto_create_index: "false"
    image: "docker.elastic.co/elasticsearch/elasticsearch-oss:7.10.2"
    container_name: graylog_demo_elasticsearch
    ulimits:
      memlock:
        hard: -1
        soft: -1
    volumes:
      - "es_data:/usr/share/elasticsearch/data"
    restart: "always"

  graylog:
    image: "graylog/graylog:4.1"
    container_name: graylog_demo_graylog
    depends_on:
      elasticsearch:
        condition: "service_started"
      mongodb:
        condition: "service_started"
    entrypoint: "/usr/bin/tini -- wait-for-it elasticsearch:9200 -- /docker-entrypoint.sh"
    environment:
      GRAYLOG_NODE_ID_FILE: "/usr/share/graylog/data/config/node-id"
      GRAYLOG_PASSWORD_SECRET: somepasswordpepper # CHANGE ME (must be at least 16 characters)!
      GRAYLOG_ROOT_PASSWORD_SHA2: 8c6976e5b5410415bde908bd4dee15dfb167a9c873fc4bb8a81f6f2ab448a918 # Password: admin
      GRAYLOG_HTTP_BIND_ADDRESS: "0.0.0.0:9000"
      GRAYLOG_HTTP_EXTERNAL_URI: "http://localhost:9000/"
      GRAYLOG_ELASTICSEARCH_HOSTS: "http://elasticsearch:9200"
      GRAYLOG_MONGODB_URI: "mongodb://mongodb:27017/graylog"
      GRAYLOG_ROOT_TIMEZONE: Asia/Shanghai
    ports:
      - "5044:5044/tcp" # Beats
      - "5140:5140/udp" # Syslog
      - "5140:5140/tcp" # Syslog
      - "5555:5555/tcp" # RAW TCP
      - "5555:5555/udp" # RAW UDP
      - "9000:9000/tcp" # Server API
      - "12201:12201/tcp" # GELF TCP
      - "12201:12201/udp" # GELF UDP
      #- "10000:10000/tcp" # Custom TCP port
      #- "10000:10000/udp" # Custom UDP port
      - "13301:13301/tcp" # Forwarder data
      - "13302:13302/tcp" # Forwarder config
    volumes:
      - "graylog_data:/usr/share/graylog/data/data"
      - "graylog_journal:/usr/share/graylog/data/journal"
    restart: "always"

volumes:
  mongodb_data:
  es_data:
  graylog_data:
  graylog_journal:
```
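The compose file stores the root password as a SHA-256 hex digest in `GRAYLOG_ROOT_PASSWORD_SHA2`. To use a password other than `admin`, a digest can be produced with plain JDK classes, roughly as sketched below. The class name `GraylogPasswordHash` and its method are made up for this illustration and are not part of Graylog.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: prints the SHA-256 hex digest of a password so it can
// be pasted into GRAYLOG_ROOT_PASSWORD_SHA2.
final class GraylogPasswordHash {

    static String sha256Hex(String password) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(password.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(hash.length * 2);
            for (byte b : hash) {
                // Two lowercase hex characters per byte
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // Every standard JDK ships SHA-256, so this should not happen
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    public static void main(String[] args) {
        // Falls back to the demo password from the compose file
        String password = args.length > 0 ? args[0] : "admin";
        System.out.println(sha256Hex(password));
    }
}
```

Run without arguments, it should print the same digest that the compose file uses for the password `admin`.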

Spring Boot

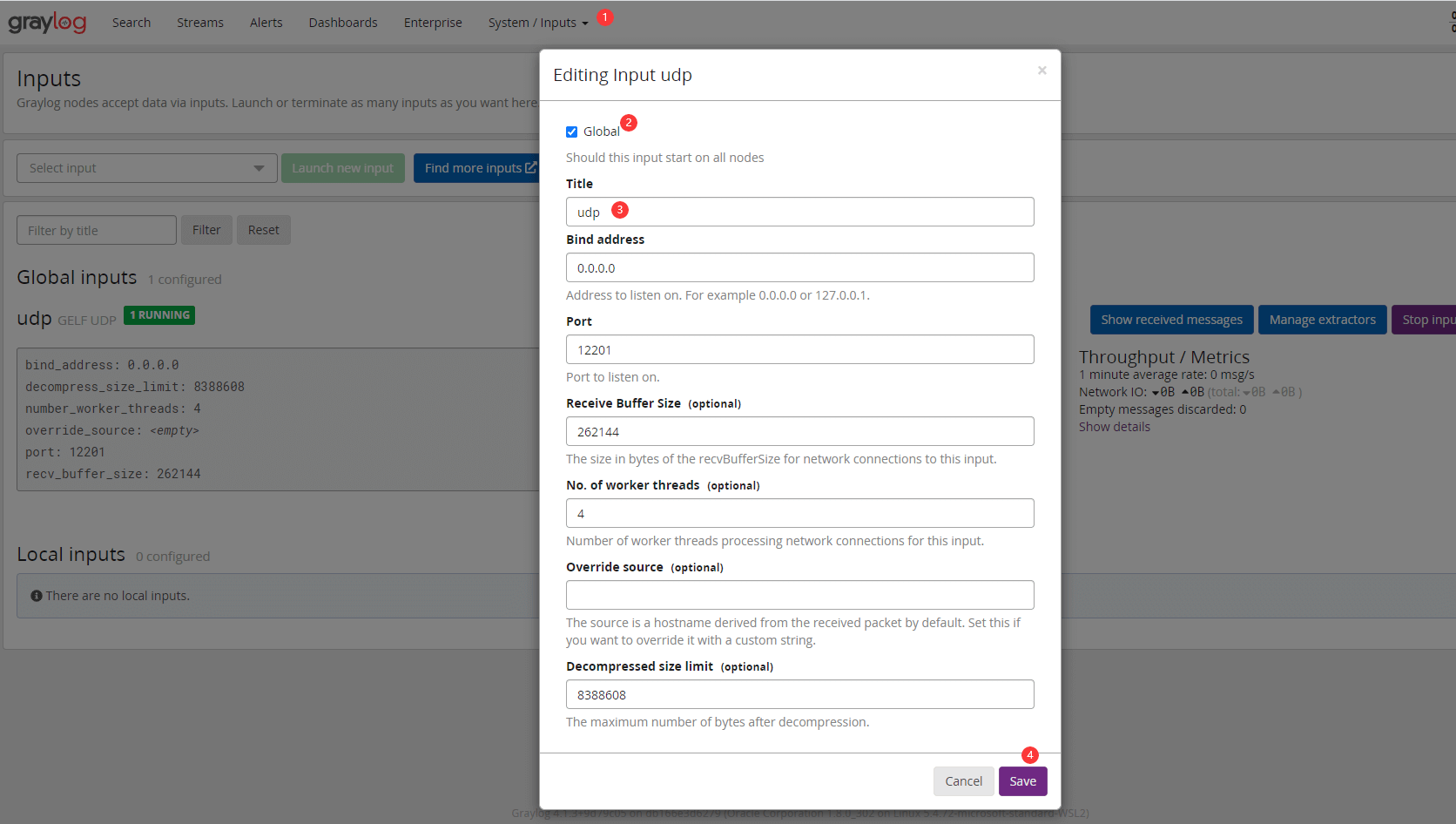

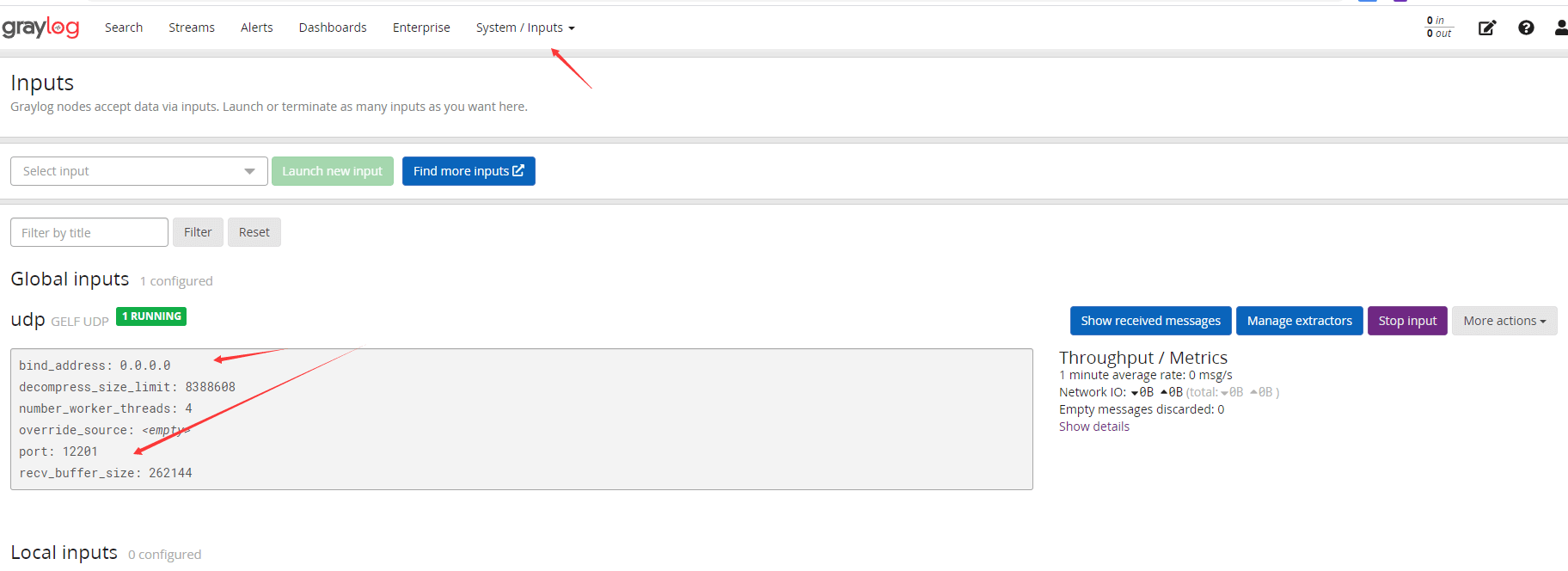

Configure the log transport channel

Dependencies

The logging plugin used here is https://github.com/osiegmar/logback-gelf. Plugins can be looked up at https://marketplace.graylog.org/.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.5.4</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.tan</groupId>
    <artifactId>graylog</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>graylog</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- Logging platform -->
        <!-- https://mvnrepository.com/artifact/de.siegmar/logback-gelf -->
        <dependency>
            <groupId>de.siegmar</groupId>
            <artifactId>logback-gelf</artifactId>
            <version>3.0.0</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <excludes>
                        <exclude>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </exclude>
                    </excludes>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

logback.xml

Official example configuration: https://github.com/osiegmar/logback-gelf/blob/v3.0.0/examples/advanced_udp.xml Note:

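- `includeLevelName`: whether to include the name of the log level (by default only the numeric level is shown).
- `<staticField>app_name:graylog</staticField>`: sets the name of the current application so that its logs are easy to search for (be sure to set this).
- The host and port that the data is sent to:

```xml
<graylogHost>localhost</graylogHost>
<graylogPort>12201</graylogPort>
```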
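Before wiring up logback, it can help to check that the host and port above actually reach a GELF UDP input. The sketch below hand-builds a minimal GELF 1.1 message and sends it as a single uncompressed datagram using only JDK classes. The class `GelfUdpSmokeTest`, its methods, and the simplified JSON escaping are assumptions for illustration; this is not how logback-gelf itself is implemented. The `_app_name` field mirrors the `app_name` static field from the notes, following the GELF convention that additional fields are prefixed with an underscore.

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical smoke test: sends one hand-built GELF message over UDP.
final class GelfUdpSmokeTest {

    // Minimal JSON string escaping; enough for simple test messages only.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    static String buildGelfJson(String host, String shortMessage, int level,
                                String appName, long epochMillis) {
        // GELF timestamps are seconds since the epoch with optional decimals
        String timestamp = String.format(Locale.ROOT, "%d.%03d",
                epochMillis / 1000, epochMillis % 1000);
        return "{\"version\":\"1.1\","
                + "\"host\":\"" + escape(host) + "\","
                + "\"short_message\":\"" + escape(shortMessage) + "\","
                + "\"timestamp\":" + timestamp + ","
                + "\"level\":" + level + ","
                + "\"_app_name\":\"" + escape(appName) + "\"}";
    }

    static void send(String graylogHost, int graylogPort, String json) throws Exception {
        byte[] payload = json.getBytes(StandardCharsets.UTF_8);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(payload, payload.length,
                    InetAddress.getByName(graylogHost), graylogPort));
        }
    }

    public static void main(String[] args) throws Exception {
        // Level 6 is "informational" on the syslog severity scale
        String json = buildGelfJson("localhost", "hello from the smoke test", 6,
                "graylog", System.currentTimeMillis());
        send("localhost", 12201, json);
        System.out.println(json);
    }
}
```

UDP gives no delivery confirmation, so the only way to confirm receipt is to search for the message in the Graylog web interface, for example by `app_name`.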

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- logging.config= classpath:logback-spring.xml -->
<!-- Log levels from lowest to highest are TRACE < DEBUG < INFO < WARN < ERROR; if the level is set to WARN, nothing below WARN is output -->
<!-- scan: when set to true, the configuration file is reloaded if it changes; the default is false -->
<!-- scanPeriod: how often to check the configuration file for changes; the unit defaults to milliseconds if none is given; only takes effect when scan is true; the default interval is 1 minute -->
<!-- debug: when set to true, logback prints its internal status messages so its state can be watched in real time; the default is false -->
<configuration
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:noNamespaceSchemaLocation="http://www.padual.com/java/logback.xsd"
        debug="false" scan="true" scanPeriod="10 second">

    <!-- Log output directory and the project name used inside it -->
    <property name="PROJECT" value="controller" />
    <property name="ROOT" value="logs/${PROJECT}/" />
    <!-- Maximum size of a log file -->
    <property name="FILESIZE" value="50MB" />
    <!-- How long to keep logs, in days -->
    <property name="MAXHISTORY" value="2" />
    <!-- Date format for output -->
    <timestamp key="DATETIME" datePattern="yyyy-MM-dd HH:mm:ss.SSS" />

    <!-- Console output -->
    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder charset="utf-8">
            <!-- Pattern: %-5level is the level, left-aligned and padded to 5 characters; %d is the date; %thread is the thread name; %m is the log message; %n is a newline; %logger{36} is the logger name (the full class name), limited to 36 characters -->
            <pattern>%yellow(%date{yyyy-MM-dd HH:mm:ss}) |%highlight(%-5level) |%green(%logger:%line) |%white(%msg%n)</pattern>
        </encoder>
    </appender>

    <!-- ERROR logs written to file -->
    <appender name="ERROR" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <encoder charset="utf-8">
            <pattern>[%-5level] %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %logger{36} - %m%n</pattern>
        </encoder>
        <!-- Level for this log file: record ERROR events only -->
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${ROOT}%d/error.%i.log</fileNamePattern>
            <maxHistory>${MAXHISTORY}</maxHistory>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>${FILESIZE}</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
        </rollingPolicy>
    </appender>

    <!-- WARN logs written to file -->
    <appender name="WARN" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <encoder charset="utf-8">
            <pattern>[%-5level] %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %logger{36} - %m%n</pattern>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>WARN</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${ROOT}%d/warn.%i.log</fileNamePattern>
            <maxHistory>${MAXHISTORY}</maxHistory>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>${FILESIZE}</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
        </rollingPolicy>
    </appender>

    <!-- INFO logs written to file -->
    <appender name="INFO" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <encoder charset="utf-8">
            <pattern>[%-5level] %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %logger{36} - %m%n</pattern>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>INFO</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${ROOT}%d/info.%i.log</fileNamePattern>
            <maxHistory>${MAXHISTORY}</maxHistory>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>${FILESIZE}</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
        </rollingPolicy>
    </appender>

    <!-- DEBUG logs written to file -->
    <appender name="DEBUG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <encoder charset="utf-8">
            <pattern>[%-5level] %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %logger{36} - %m%n</pattern>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>DEBUG</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${ROOT}%d/debug.%i.log</fileNamePattern>
            <maxHistory>${MAXHISTORY}</maxHistory>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>${FILESIZE}</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
        </rollingPolicy>
    </appender>

    <!-- TRACE logs written to file -->
    <appender name="TRACE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <encoder charset="utf-8">
            <pattern>[%-5level] %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %logger{36} - %m%n</pattern>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>TRACE</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${ROOT}%d/trace.%i.log</fileNamePattern>
            <maxHistory>${MAXHISTORY}</maxHistory>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>${FILESIZE}</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
        </rollingPolicy>
    </appender>

    <!--
    <appender name="GELF" class="de.siegmar.logbackgelf.GelfUdpAppender">
        <graylogHost>localhost</graylogHost>
        <graylogPort>12201</graylogPort>
    </appender>
    -->
    <appender name="GELF" class="de.siegmar.logbackgelf.GelfUdpAppender">
        <graylogHost>localhost</graylogHost>
        <graylogPort>12201</graylogPort>
        <maxChunkSize>508</maxChunkSize>
        <useCompression>true</useCompression>
        <messageIdSupplier class="de.siegmar.logbackgelf.MessageIdSupplier"/>
        <encoder class="de.siegmar.logbackgelf.GelfEncoder">
            <originHost>localhost</originHost>
            <includeRawMessage>false</includeRawMessage>
            <includeMarker>true</includeMarker>
            <includeMdcData>true</includeMdcData>
            <includeCallerData>false</includeCallerData>
            <includeRootCauseData>false</includeRootCauseData>
            <includeLevelName>true</includeLevelName>
            <shortPatternLayout class="ch.qos.logback.classic.PatternLayout">
                <pattern>%d - %m%nopex</pattern>
            </shortPatternLayout>
            <fullPatternLayout class="ch.qos.logback.classic.PatternLayout">
                <pattern>%d - %m%n</pattern>
            </fullPatternLayout>
            <numbersAsString>false</numbersAsString>
            <staticField>app_name:graylog</staticField>
            <staticField>os_arch:${os.arch}</staticField>
            <staticField>os_name:${os.name}</staticField>
            <staticField>os_version:${os.version}</staticField>
        </encoder>
    </appender>

    <!-- SQL-related loggers; delete if not needed. additivity: whether events are passed up to the parent logger; the default is true -->
    <logger name="org.apache.ibatis" level="INFO" additivity="false" />
    <logger name="org.mybatis.spring" level="INFO" additivity="false" />
    <logger name="com.github.miemiedev.mybatis.paginator" level="INFO" additivity="false" />
    <!-- Change the log level for Nacos -->
    <logger name="com.alibaba.nacos.client" level="ERROR" additivity="false" />

    <springProfile name="dev">
        <root level="debug">
            <appender-ref ref="STDOUT"/>
            <appender-ref ref="GELF" />
        </root>
    </springProfile>

    <!-- Non-dev environments -->
    <springProfile name="!dev">
        <!-- Also log to files; the output level is INFO -->
        <root level="INFO">
            <appender-ref ref="GELF" />
            <appender-ref ref="STDOUT" />
            <appender-ref ref="DEBUG" />
            <appender-ref ref="ERROR" />
            <appender-ref ref="WARN" />
            <appender-ref ref="INFO" />
            <appender-ref ref="TRACE" />
        </root>
    </springProfile>
</configuration>
```
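The `<maxChunkSize>508</maxChunkSize>` setting relates to GELF's UDP chunking: a message too large for one datagram is split into chunks, each starting with a 12-byte header (magic bytes `0x1e 0x0f`, an 8-byte message ID, a sequence number and a sequence count), with at most 128 chunks per message. The sketch below shows that layout with plain JDK classes. `GelfChunkingSketch` is a hypothetical name, and it assumes the configured size covers the header as well as the payload; it illustrates the wire format and is not the code logback-gelf runs.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of GELF UDP chunking; not logback-gelf's own code.
final class GelfChunkingSketch {

    static final int HEADER_LENGTH = 12; // 2 magic + 8 message id + 1 index + 1 count
    static final int MAX_CHUNKS = 128;   // limit defined by the GELF format

    static List<byte[]> chunk(byte[] message, long messageId, int maxChunkSize) {
        if (maxChunkSize <= HEADER_LENGTH) {
            throw new IllegalArgumentException("maxChunkSize must exceed the header length");
        }
        List<byte[]> datagrams = new ArrayList<>();
        if (message.length <= maxChunkSize) {
            // Small messages go out as a single datagram without a chunk header
            datagrams.add(message.clone());
            return datagrams;
        }
        // Assumption: maxChunkSize includes the 12-byte header
        int payloadSize = maxChunkSize - HEADER_LENGTH;
        int count = (message.length + payloadSize - 1) / payloadSize;
        if (count > MAX_CHUNKS) {
            throw new IllegalArgumentException("message needs more than " + MAX_CHUNKS + " chunks");
        }
        for (int i = 0; i < count; i++) {
            int offset = i * payloadSize;
            int length = Math.min(payloadSize, message.length - offset);
            ByteBuffer buffer = ByteBuffer.allocate(HEADER_LENGTH + length);
            buffer.put((byte) 0x1e).put((byte) 0x0f); // chunked GELF magic bytes
            buffer.putLong(messageId);                // same id on every chunk
            buffer.put((byte) i).put((byte) count);   // sequence number and count
            buffer.put(message, offset, length);
            datagrams.add(buffer.array());
        }
        return datagrams;
    }

    public static void main(String[] args) {
        List<byte[]> parts = chunk(new byte[1000], 42L, 508);
        System.out.println(parts.size() + " datagrams");
    }
}
```

Under these assumptions each datagram stays at or below 508 bytes, a size commonly chosen so that packets are unlikely to be fragmented at the IP layer.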