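1. Virtualization management platforms

Scenario: 500 KVM hosts running 5,000 KVM virtual machines.

A KVM virtual machine is a qemu process on the host, and its contents are opaque: you have to log in to the VM to see what is inside (a container, by contrast, is transparent from the host).

How do you count the VMs running CentOS 7.6? What is each VM's IP address? How many VMs are 4 vCPU / 8 GB, and how many are 2 vCPU / 4 GB?

- So we need a KVM virtualization management platform: a tool that manages host and VM assets in bulk.

- Virtualization management products:

Small-scale management: oVirt, WebVirtMgr, OpenStack, CloudStack, ZStack, Proxmox VE, ...

Large-scale management: OpenStack, and the dedicated (private-deployment) clouds from Alibaba Cloud, Tencent Cloud, QingCloud, and UCloud.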
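To make the inventory questions above concrete, here is a minimal sketch of how they could be answered once the asset data sits in one place. It assumes a hypothetical CSV export named `inventory.csv` with the columns `name,os,vcpus,ram_gb,ip`; the file format and the function names are illustrative and do not come from any of the products listed.

```shell
# Assumed inventory format (hypothetical): name,os,vcpus,ram_gb,ip

# Count the VMs that run a given OS.
# usage: count_by_os FILE OS
count_by_os() {
    awk -F, -v os="$2" '$2 == os { n++ } END { print n + 0 }' "$1"
}

# Count the VMs with a given vCPU / RAM (GB) combination.
# usage: count_by_spec FILE VCPUS RAM_GB
count_by_spec() {
    awk -F, -v c="$2" -v r="$3" '$3 == c && $4 == r { n++ } END { print n + 0 }' "$1"
}

# Print "name ip" for every VM.
# usage: list_ips FILE
list_ips() {
    awk -F, '{ print $1, $5 }' "$1"
}
```

For example, `count_by_spec inventory.csv 4 8` prints the number of 4 vCPU / 8 GB machines. A management platform answers the same questions from its own database, without anyone logging in to each VM.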

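2. Cloud platforms (public cloud, private cloud)

- KVM management platform + billing system == a simple public cloud

A brief history of public cloud: Amazon started its AWS business in 2006.

Alibaba Cloud wrote its first line of code in 2008 and launched its service in 2011.

OpenStack grew rapidly from 2012. In 2017 many Chinese cloud vendors went out of business: they had been giving service away to win customers while their costs kept rising.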

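3. Introduction to OpenStack

OpenStack started out imitating AWS and later added features of its own. It stays compatible with AWS, which makes migration easy. It is open source, has an active community, and after the early releases settled into one new release every six months.

Releases:

Releases are named alphabetically: A, B, C, D ... K, L, M, N, O, Pike, Q, R, S, T, U, V, W, X, Y, Z.

- Official Chinese documentation for Liberty (L): https://docs.openstack.org/liberty/zh_CN/install-guide-rdo/

- Official Chinese documentation for Mitaka (M): https://docs.openstack.org/mitaka/zh_CN/install-guide-rdo/

- Official Chinese documentation for Newton (N): https://docs.openstack.org/newton/zh_CN/install-guide-rdo/

- Ocata (O), the last release with a Chinese translation and the one used in this walkthrough: https://docs.openstack.org/ocata/zh_CN/install-guide-rdo/

Releases after Train (T) require CentOS 8.

Starting with Pike (P), the official documentation was reorganized heavily and is less suitable for beginners.

Installation notes:

- Because the remote repositories keep changing, installing some older OpenStack releases now fails.

- CentOS 7 is supported up to OpenStack Train (T); later releases can only be installed on CentOS 8.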

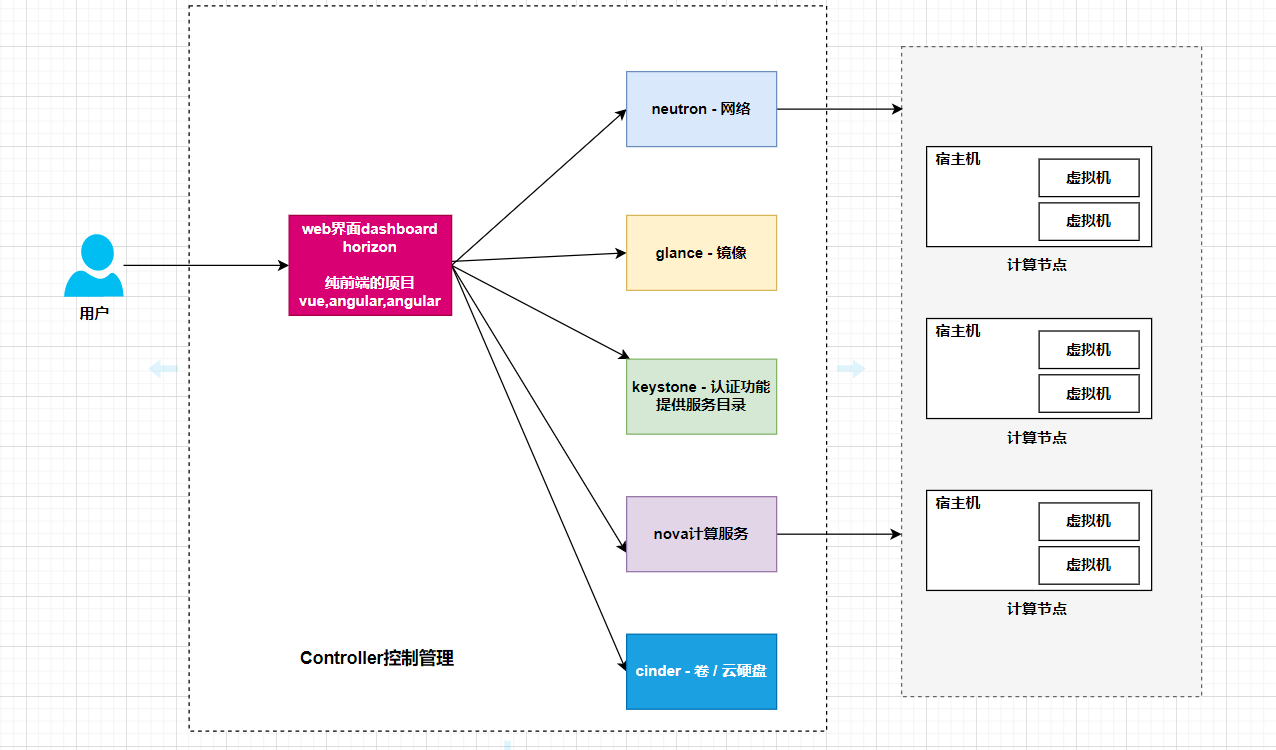

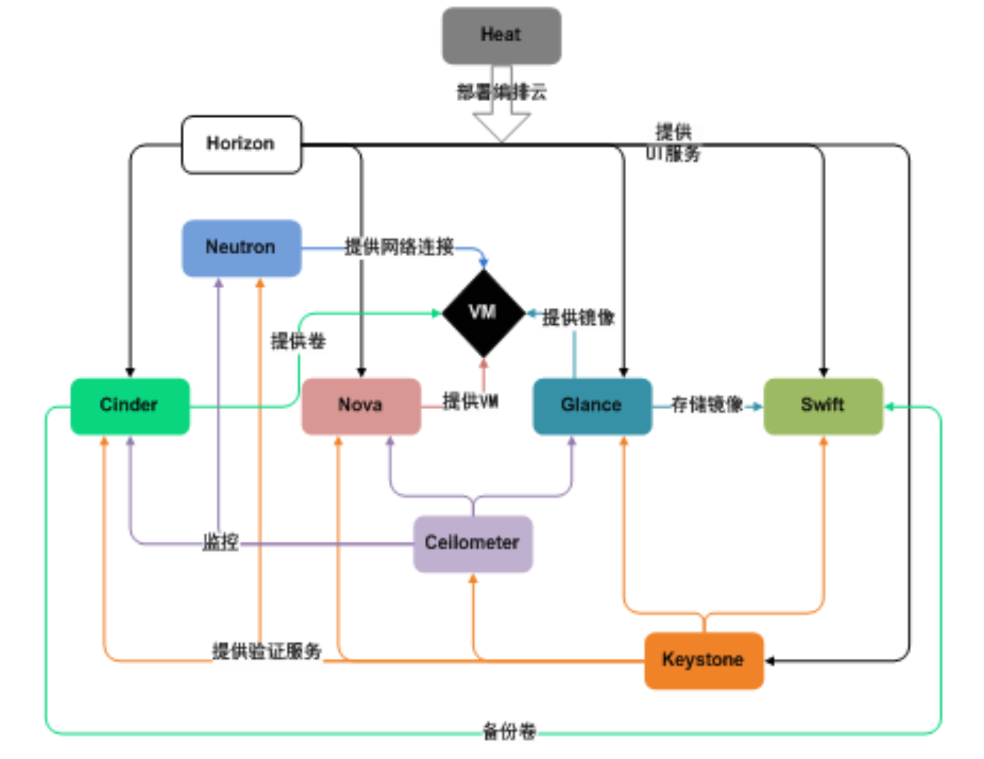

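4. OpenStack architecture

Modeled on AWS, OpenStack is designed as a large-scale cloud platform product. It is split into separate services in order to support managing large clusters.

Keystone is comparable to "log in with QQ" authorization: the other services delegate authentication to it.

- Service components, their functions, and how they relate:

Core services:

- Nova: provides compute resources (CPU and memory)

- Neutron: provides network connectivity

- Glance: provides images

The front end is dynamic: it shows whichever services are installed. The microservice design also exists to support large-scale use, and each service can be maintained independently.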

5. openstack集群环境准备

| 主机名 | IP | 内存规划 | |

|---|---|---|---|

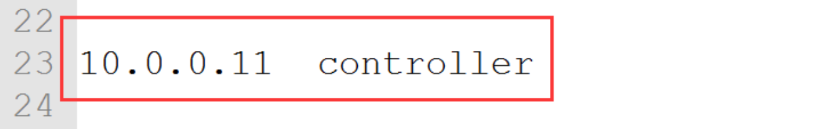

| 控制节点 | controller | 10.0.0.0.11 | 4G |

| 计算节点 | computer1 | 10.0.0.31 | 2G |

| 计算节点 | computer2 | 10.0.0.32 | 2G |

5.1 Configure the package repositories

- Local repository

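```shell
# Prepare the mirror: directories to skip when syncing
[root@gateway html]# cat >rsync_exclude.txt<<'EOF'
centosplus/
contrib/
cr/
fasttrack/
isos/
sclo/
i386/
debug/
drpms/
atomic/
configmanagement/
dotnet/
nfv/
opstools/
paas/
rt/
Source/
EOF

# Sync the repository
/usr/bin/rsync -zaP --exclude-from rsync_exclude.txt rsync://mirror.tuna.tsinghua.edu.cn/centos-vault/7.3.1611 centos

# Configure the local repository:
# install nginx and enable autoindex so that it serves the synced tree as a
# local yum repository, then add a hosts entry for that server on each Linux host
```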

- Tsinghua mirror

```shell
# Use these steps if you did not configure a local repository:
# OpenStack Ocata from the Tsinghua mirror
rm -fr /etc/yum.repos.d/local.repo

# yum repository configuration
cd /etc/yum.repos.d/
[root@test yum.repos.d]# cat >CentOS-Base.repo<<'EOF'
[base]
name=CentOS-$releasever - Base
baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/os/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

[updates]
name=CentOS-$releasever - Updates
baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/updates/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

[extras]
name=CentOS-$releasever - Extras
baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/extras/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
EOF

yum makecache
yum install centos-release-openstack-ocata.noarch -y

# Then change the baseurl in the repo files that package installed:
# CentOS-Ceph-Jewel.repo:
#   baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/storage/x86_64/ceph-jewel/
# CentOS-OpenStack-ocata.repo:
#   baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/cloud/x86_64/openstack-ocata/
# CentOS-QEMU-EV.repo:
#   baseurl=https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/virt/x86_64/kvm-common/
```
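The three baseurl changes above can also be scripted instead of edited by hand. The helper below is a sketch: `set_baseurl` is a hypothetical name, and the section ids passed to it (for example `centos-openstack-ocata`) are assumptions that should be checked against the actual `.repo` files first. It only rewrites a `baseurl=` line that already exists (commented or not) in the named section, and comments out `mirrorlist=` there.

```shell
# Point one section of a yum .repo file at a different baseurl.
# usage: set_baseurl REPO_FILE SECTION URL
set_baseurl() {
    awk -v s="[$2]" -v url="$3" '
        /^\[/                  { in_s = ($0 == s) }         # track the current section
        in_s && /^#?baseurl=/  { print "baseurl=" url; next }
        in_s && /^mirrorlist=/ { print "#" $0; next }       # disable the mirror list
                               { print }
    ' "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Example (section id is an assumption; verify it in the file):
#   set_baseurl /etc/yum.repos.d/CentOS-OpenStack-ocata.repo centos-openstack-ocata \
#       https://mirror.tuna.tsinghua.edu.cn/centos-vault/7.6.1810/cloud/x86_64/openstack-ocata/
```

Other sections in the same file (test or debuginfo repositories, for instance) are left untouched, which is why the helper takes a section name rather than replacing every `baseurl=` line.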

5.2 Install the base services

5.2.1 Install the OpenStack client

# All nodes

yum install python-openstackclient -y

5.2.2 Install the database

# Controller node

# Install

yum install mariadb mariadb-server python2-PyMySQL -y

# Configure

vi /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 10.0.0.11

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8
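If you prefer not to open an editor, the same file can be produced from a small function. This is a sketch: `render_openstack_cnf` is a hypothetical helper name, and the only parameter is the management IP of the controller (10.0.0.11 in these notes).

```shell
# Print the MariaDB settings used in these notes for a given bind address.
# usage: render_openstack_cnf BIND_IP
render_openstack_cnf() {
    cat <<EOF
[mysqld]
bind-address = $1
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
}

# Example (as root on the controller):
#   render_openstack_cnf 10.0.0.11 > /etc/my.cnf.d/openstack.cnf
```

Keeping the address as a parameter makes it harder to forget that `bind-address` has to match the controller's own IP, which is the value the `netstat` check later expects to see on port 3306.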

# Start

systemctl start mariadb

systemctl enable mariadb

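# Secure the installation

mysql_secure_installation

Answers to the prompts, in order:

Enter (the current root password is empty)

n (do not set a root password)

y (remove anonymous users)

y (disallow remote root login)

y (remove the test database)

y (reload the privilege tables)

# Verify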

[root@controller ~]# netstat -lntup|grep 3306

tcp 0 0 10.0.0.11:3306 0.0.0.0:* LISTEN 23304/mysqld

5.2.3 Install the message queue

# Controller node

# Install

yum install rabbitmq-server -y

# Start

systemctl start rabbitmq-server.service

systemctl enable rabbitmq-server.service

# Verify

[root@controller ~]# netstat -lntup|grep 5672

tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 23847/beam

tcp6 0 0 :::5672 :::* LISTEN 23847/beam

# Create a user and grant it permissions

rabbitmqctl add_user openstack RABBIT_PASS

rabbitmqctl set_permissions openstack ".*" ".*" ".*"
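`RABBIT_PASS`, like the other `*_PASS` and `*_DBPASS` strings in these notes, is a placeholder. The sketch below shows one way to generate a replacement value and how the RabbitMQ credentials turn into the `transport_url` that nova.conf and neutron.conf use later. Both function names are hypothetical helpers, not part of RabbitMQ or OpenStack.

```shell
# Print a random 20-character hex string to use in place of a placeholder password.
gen_pass() {
    od -An -N10 -tx1 /dev/urandom | tr -dc '0-9a-f'
    echo
}

# Build the messaging URL from the RabbitMQ user, password, and host.
# usage: transport_url USER PASSWORD HOST
transport_url() {
    printf 'rabbit://%s:%s@%s\n' "$1" "$2" "$3"
}

# Example:
#   pass=$(gen_pass)
#   rabbitmqctl add_user openstack "$pass"
#   transport_url openstack "$pass" controller
```

Whatever value is chosen here has to appear unchanged in every later `transport_url` line, so it is worth recording it once instead of retyping it per service.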

5.2.4 Install the memcached cache

# Controller node

# Install

yum install memcached python-memcached -y

# Configure

vim /etc/sysconfig/memcached

OPTIONS="-l 10.0.0.11"

# Start

systemctl start memcached.service

systemctl enable memcached.service

# Verify

[root@controller ~]# netstat -lntup|grep 11211

tcp 0 0 10.0.0.11:11211 0.0.0.0:* LISTEN 24519/memcached

udp 0 0 10.0.0.11:11211 0.0.0.0:* 24519/memcached

6. Install keystone

Controller node

Create the database (run the following statements in the mysql client)

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'KEYSTONE_DBPASS';
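The same three statements come back for glance, nova, and neutron with only the names changed, so they can be generated. This is a sketch: `service_db_sql` is a hypothetical helper, and it adds `IF NOT EXISTS` (not in the statements above) so that running it twice does not fail.

```shell
# Print the SQL that creates a service database and grants access to its user.
# usage: service_db_sql DATABASE USER PASSWORD
service_db_sql() {
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $1;
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'localhost' IDENTIFIED BY '$3';
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'%' IDENTIFIED BY '$3';
EOF
}

# Example (root has no password after the mysql_secure_installation answers above):
#   service_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root
```

For nova the function would be called three times, once each for `nova_api`, `nova`, and `nova_cell0`, all with the `nova` user.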

Install the keystone packages

yum install openstack-keystone httpd mod_wsgi -y

Edit the configuration file

cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

grep -Ev '^$|^#' /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf

vim /etc/keystone/keystone.conf

[DEFAULT]

[assignment]

[auth]

[cache]

[catalog]

[cors]

[cors.subdomain]

[credential]

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

[domain_config]

[endpoint_filter]

[endpoint_policy]

[eventlet_server]

[federation]

[fernet_tokens]

[healthcheck]

[identity]

[identity_mapping]

[kvs]

[ldap]

[matchmaker_redis]

[memcache]

[oauth1]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

[policy]

[profiler]

[resource]

[revoke]

[role]

[saml]

[security_compliance]

[shadow_users]

[signing]

[token]

provider = fernet

[tokenless_auth]

[trust]
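After the `grep -Ev` step the file contains only section headers, and just two keys are set: `connection` under `[database]` and `provider` under `[token]`. Edits like this can be made without an editor. The function below is a minimal sketch of an INI setter written for these notes; `ini_set` is a hypothetical name, it assumes the section header already exists in the file, and values containing backslashes are not handled. Purpose-built tools such as crudini are more robust.

```shell
# Set KEY = VALUE inside [SECTION] of an INI file, replacing an existing key
# or appending it at the end of the section.
# usage: ini_set FILE SECTION KEY VALUE
ini_set() {
    awk -v s="[$2]" -v k="$3" -v v="$4" '
        /^\[/ {
            # Leaving the target section without having written the key: add it now.
            if (in_s && !done) { print k " = " v; done = 1 }
            in_s = ($0 == s)
            print
            next
        }
        # Inside the target section, replace an existing "key = ..." line.
        in_s && ($0 ~ ("^" k "[ \t]*=")) {
            if (!done) { print k " = " v; done = 1 }
            next
        }
        { print }
        # The target section was the last one in the file.
        END { if (in_s && !done) print k " = " v }
    ' "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Example:
#   ini_set /etc/keystone/keystone.conf database connection \
#       mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
#   ini_set /etc/keystone/keystone.conf token provider fernet
```

The same helper would cover the glance, nova, and neutron files below, since they are prepared with the same backup-and-strip step.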

Sync the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap keystone

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \

--bootstrap-admin-url http://controller:35357/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

Configure httpd

echo "ServerName controller" >>/etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start httpd

systemctl start httpd

systemctl enable httpd

Verify keystone

vim admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

source admin-openrc

Test by requesting a token

openstack token issue

Create the service project

openstack project create --domain default \

--description "Service Project" service

7. Install glance

Controller node

1: Create the database and grant privileges

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';

2: Create the service user in keystone and assign it a role

openstack user create --domain default --password GLANCE_PASS glance

openstack role add --project service --user glance admin

3: Register the API endpoints in keystone

openstack service create --name glance \

--description "OpenStack Image" image

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292
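Each service registers the same URL three times, once per interface, so the step fits in a loop. This is a sketch: `register_endpoints` and the `OPENSTACK` override variable are hypothetical (the variable only exists so the function can be dry-run with `echo`); the `openstack endpoint create` arguments are the ones used above. Run `source admin-openrc` first.

```shell
# Register the public, internal, and admin endpoints of one service.
# usage: register_endpoints SERVICE_TYPE URL
register_endpoints() {
    for iface in public internal admin; do
        # OPENSTACK defaults to the real client; set OPENSTACK=echo for a dry run.
        ${OPENSTACK:-openstack} endpoint create --region RegionOne \
            "$1" "$iface" "$2" || return 1
    done
}

# Examples:
#   register_endpoints image http://controller:9292
#   OPENSTACK=echo register_endpoints compute http://controller:8774/v2.1
```

The loop stops at the first failure, which avoids ending up with a service that has only some of its endpoints registered without noticing.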

4: Install the packages

yum install openstack-glance -y

5: Edit the configuration files

Edit the glance-api configuration file

cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

grep -Ev '^$|^#' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf

vim /etc/glance/glance-api.conf

[DEFAULT]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = GLANCE_PASS

[matchmaker_redis]

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

Edit the glance-registry configuration file

cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.bak

grep -Ev '^$|^#' /etc/glance/glance-registry.conf.bak >/etc/glance/glance-registry.conf

vim /etc/glance/glance-registry.conf

[DEFAULT]

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = GLANCE_PASS

[matchmaker_redis]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

6: Sync the database

su -s /bin/sh -c "glance-manage db_sync" glance

7: Start the services

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl enable openstack-glance-api.service openstack-glance-registry.service

8: Verify

# Upload the image cirros-0.3.4-x86_64-disk.img

openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

8. Install nova

8.1 Controller node

1: Create the databases and grant privileges

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

2: Create the service users in keystone and assign them roles

openstack user create --domain default --password NOVA_PASS nova

openstack role add --project service --user nova admin

openstack user create --domain default --password PLACEMENT_PASS placement

openstack role add --project service --user placement admin

3: Register the API endpoints in keystone

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

4: Install the packages

yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler openstack-nova-placement-api -y

5: Edit the configuration files

Edit the nova configuration file

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

grep -Ev '^$|^#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

my_ip = 10.0.0.11

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[cloudpipe]

[conductor]

[console]

[consoleauth]

[cors]

[cors.subdomain]

[crypto]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_file_url]

[ironic]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[matchmaker_redis]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = PLACEMENT_PASS

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[ssl]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[workarounds]

[wsgi]

[xenserver]

[xvp]

Edit the placement configuration

vim /etc/httpd/conf.d/00-nova-placement-api.conf

# Insert the following before </VirtualHost>

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Start placement (restart httpd)

systemctl restart httpd

6: Sync the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

Verify

nova-manage cell_v2 list_cells

7: Start the services

systemctl start openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

8: Verify

nova service-list

8.2 Compute node

1. Install

yum install openstack-nova-compute -y

2. Configure

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

grep -Ev '^$|^#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

my_ip = 10.0.0.31

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cells]

[cinder]

[cloudpipe]

[conductor]

[console]

[consoleauth]

[cors]

[cors.subdomain]

[crypto]

[database]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_file_url]

[ironic]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[matchmaker_redis]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = PLACEMENT_PASS

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[ssl]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

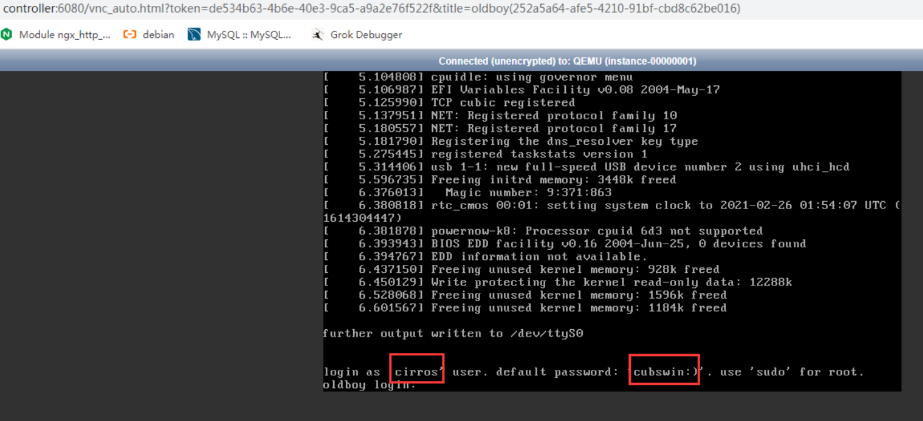

novncproxy_base_url = http://controller:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

3. Start

systemctl start libvirtd openstack-nova-compute.service

systemctl enable libvirtd openstack-nova-compute.service

4. Important step: let the controller discover the new compute node

Controller node

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

9. Install neutron

9.0 Network modes:

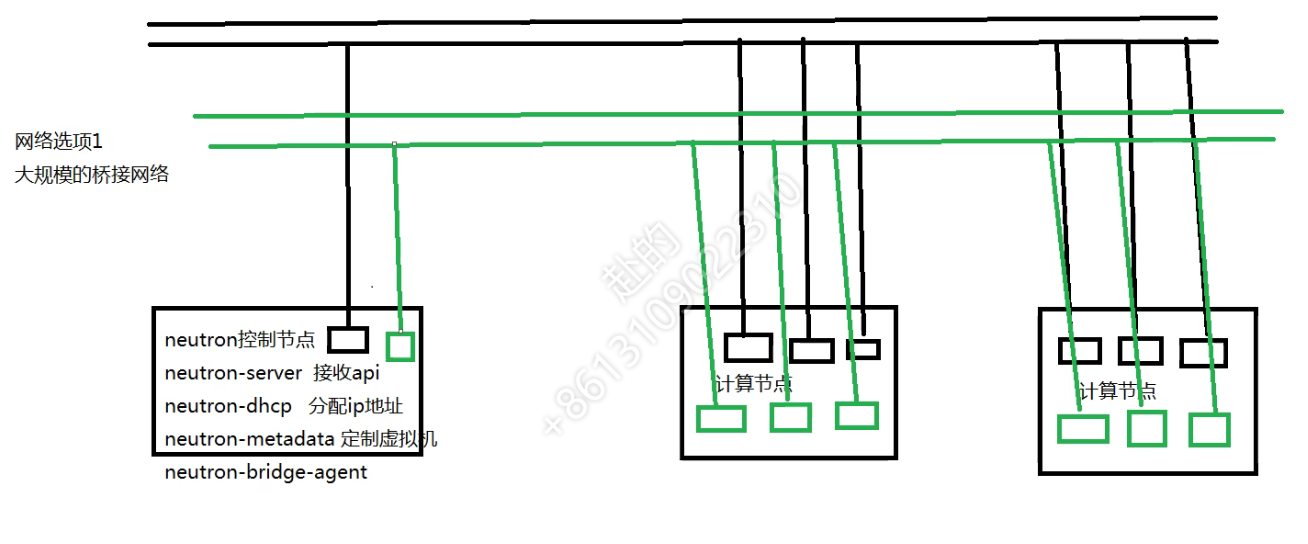

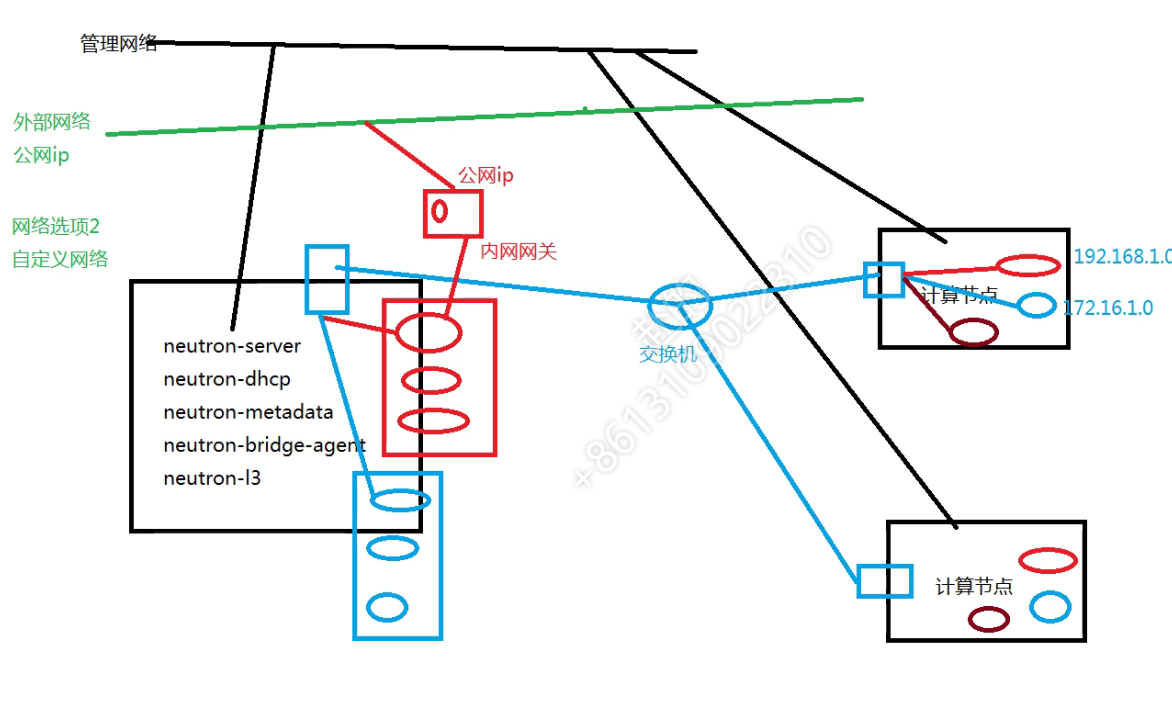

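- Networking option 1 (effectively bridged networking at large scale; drawback: it consumes a lot of IP addresses; suits private clouds)

- Networking option 2 (saves a lot of public IP addresses; bottleneck: traffic from all nodes passes through the neutron-server node; suits public clouds)

Note: user-defined networks are implemented over the VXLAN network, drawn as the blue line segments in the diagram.

9.1 Controller node

1: Create the database and grant privileges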

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

2: Create the service user in keystone and assign it a role

openstack user create --domain default --password NEUTRON_PASS neutron

openstack role add --project service --user neutron admin

3: Register the API endpoints in keystone

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

4: Install the packages

yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables -y

5: Edit the configuration files

Edit the neutron configuration file

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

grep -Ev '^$|^#' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[agent]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[matchmaker_redis]

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[qos]

[quotas]

[ssl]

Edit ml2_conf.ini

cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

grep -Ev '^$|^#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak >/etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

[securitygroup]

enable_ipset = true

Edit the linuxbridge-agent configuration

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak

grep -Ev '^$|^#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth0

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

[vxlan]

enable_vxlan = false

Edit the DHCP agent configuration

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Edit the metadata-agent configuration

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = METADATA_SECRET

Edit nova.conf

# Controller node

vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

6: Sync the database

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

7: Start the services

systemctl restart openstack-nova-api.service

systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

8: Verify

9.2 Compute node

1. Install

yum install openstack-neutron-linuxbridge ebtables ipset -y

2. Configure

Edit neutron.conf

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

grep -Ev '^$|^#' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

[agent]

[cors]

[cors.subdomain]

[database]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[qos]

[quotas]

[ssl]

Edit linuxbridge_agent.ini

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth0

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

[vxlan]

enable_vxlan = false

Edit nova.conf

# Compute node

vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

3. Start

systemctl restart openstack-nova-compute.service

systemctl start neutron-linuxbridge-agent.service

systemctl enable neutron-linuxbridge-agent.service

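10. Install horizon

1. Install

# Compute node

yum install openstack-dashboard -y

2. Configure

For the details, see the official documentation.

Tip: a vim substitution that helps with this edit is `:282,292s#True#False#gc` (on lines 282 to 292, replace True with False, confirming each match).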
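The same range substitution can be done without opening vim. This is a sketch: `flip_true_to_false` is a hypothetical helper, and the file path in the example (`/etc/openstack-dashboard/local_settings`) as well as the line numbers 282 and 292 are assumptions that depend on the installed package version. Print the range first and keep a backup before changing anything.

```shell
# Replace True with False on lines FIRST..LAST of a file (no confirmation prompt).
# usage: flip_true_to_false FILE FIRST_LINE LAST_LINE
flip_true_to_false() {
    sed "${2},${3}s#True#False#g" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Example (path and line numbers are assumptions; check them first):
#   f=/etc/openstack-dashboard/local_settings
#   cp "$f" "$f.bak"
#   sed -n '282,292p' "$f"          # inspect what is about to change
#   flip_true_to_false "$f" 282 292
```

Unlike the `c` flag in vim, nothing is confirmed interactively here, which is the reason for printing the range beforehand.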

3. Start

systemctl start httpd

systemctl enable httpd

11. Launch an instance

1. Create the network

From the command line

openstack network create --share --external --provider-physical-network provider --provider-network-type flat wan

From the web interface

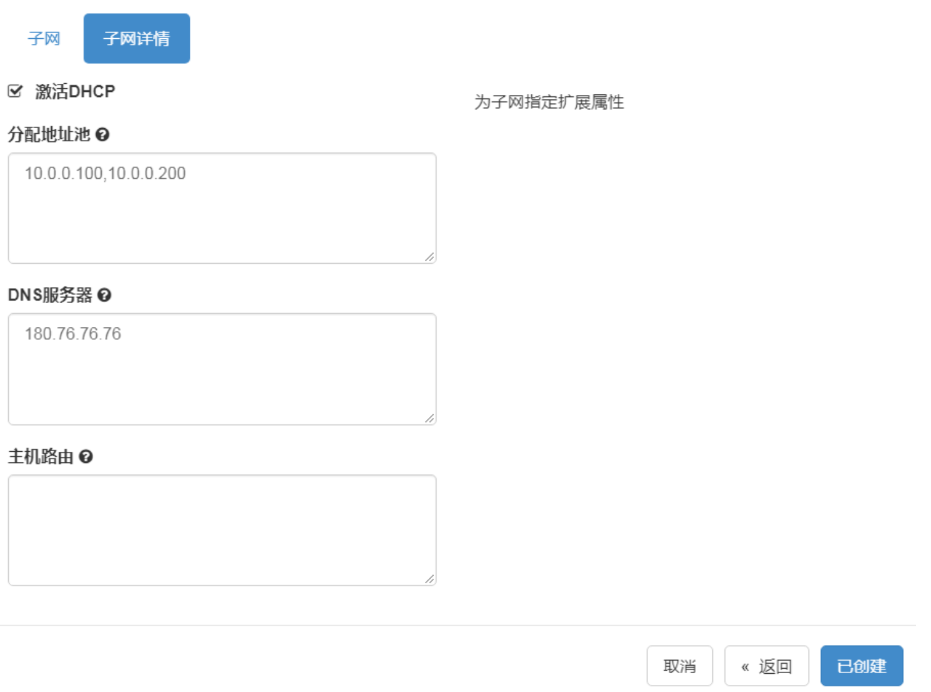

Create the subnet

From the command line

openstack subnet create --network wan --allocation-pool start=10.0.0.100,end=10.0.0.200 --dns-nameserver 180.76.76.76 --gateway 10.0.0.254 --subnet-range 10.0.0.0/24 10.0.0.0

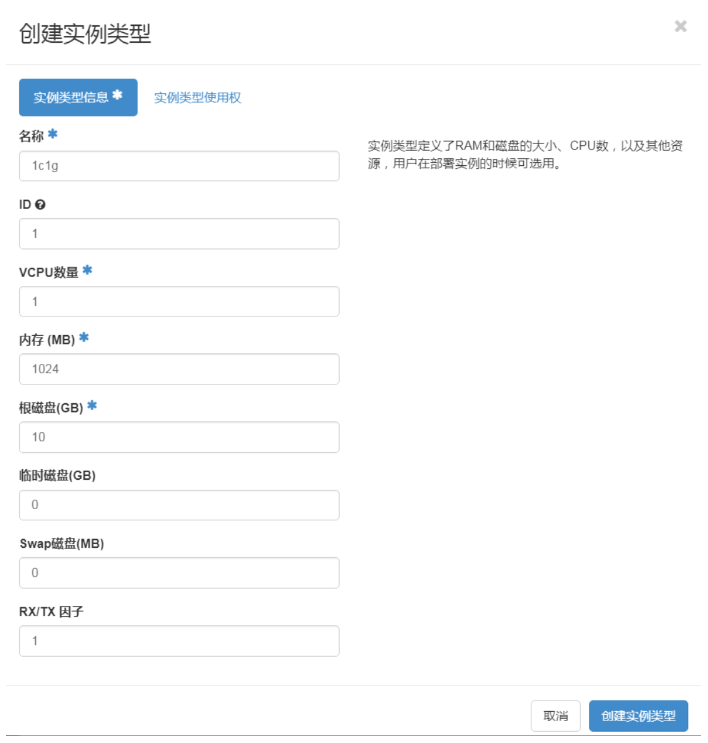

Create a flavor (the hardware profile for the VM)

From the command line

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

From the web interface

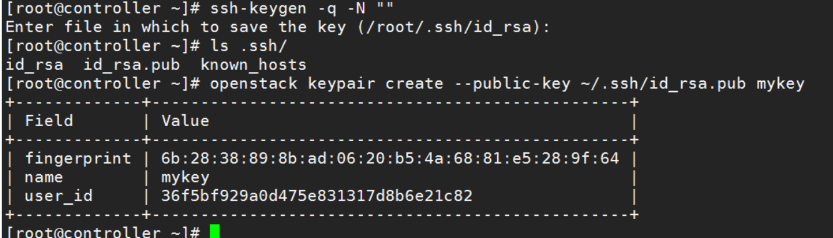

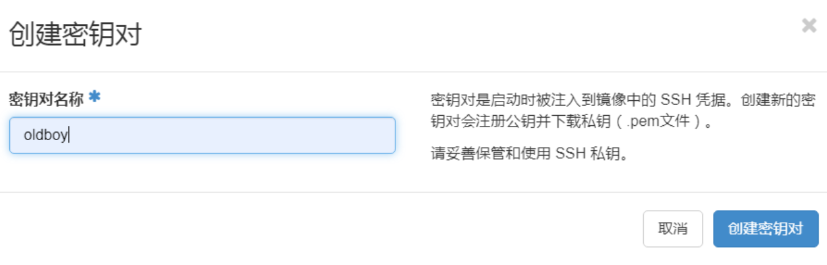

Create a key pair

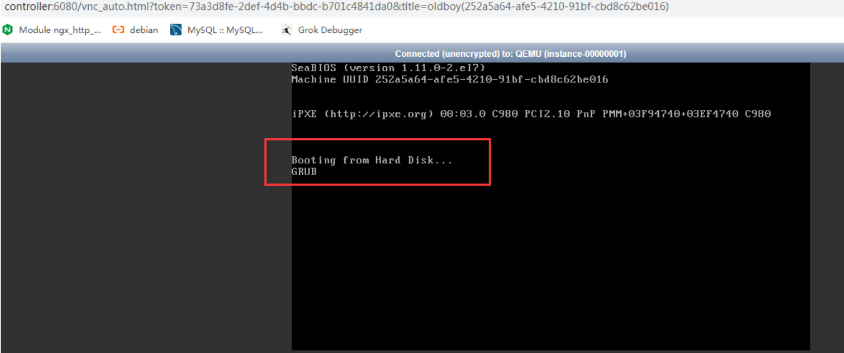

Launch the instance

From the command line

openstack server create --flavor m1.nano --image cirros --nic net-id=b571f3b4-cc7b-4f84-a582-889044fb5c04 --security-group default --key-name mykey oldboy
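The `net-id` value above is the UUID of the `wan` network in the author's environment and will differ on every installation. The sketch below looks the ID up by network name before creating the server. `boot_instance` and the `OPENSTACK` override variable are hypothetical names introduced here (the variable exists only so the function can be exercised without a cloud); the flavor, image, security group, and key name are the ones used in these notes.

```shell
# Look up a network ID by name, then boot a cirros instance on that network.
# usage: boot_instance INSTANCE_NAME NETWORK_NAME
boot_instance() {
    # "-f value -c id" prints only the id field of the network.
    net_id=$(${OPENSTACK:-openstack} network show "$2" -f value -c id) || return 1
    ${OPENSTACK:-openstack} server create --flavor m1.nano --image cirros \
        --nic net-id="$net_id" --security-group default \
        --key-name mykey "$1"
}

# Example (after "source admin-openrc"):
#   boot_instance oldboy wan
```

If the lookup fails, for example because the network name is misspelled, the function returns before `server create` runs.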

12. Troubleshooting

# Compute node

vim /etc/nova/nova.conf

[libvirt]

cpu_mode = none

virt_type = qemu

systemctl restart openstack-nova-compute.service

Then hard-reboot the instance.
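Whether `virt_type = qemu` is needed depends on whether the compute node exposes hardware virtualization, which is often not the case when the compute node is itself a VM. A common check is to look for the `vmx` (Intel) or `svm` (AMD) CPU flags. The sketch below wraps that check; `pick_virt_type` is a hypothetical helper, and the optional file argument exists only so the function can be tried against a sample file instead of `/proc/cpuinfo`.

```shell
# Print "kvm" if the CPU flags show hardware virtualization support, else "qemu".
# usage: pick_virt_type [CPUINFO_FILE]
pick_virt_type() {
    if grep -Eq '(vmx|svm)' "${1:-/proc/cpuinfo}"; then
        echo kvm
    else
        echo qemu
    fi
}

# Example (on the compute node):
#   pick_virt_type
```

If it prints `qemu`, the `[libvirt]` settings shown above apply; if it prints `kvm`, hardware acceleration is available and the fault probably has another cause.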