1. Prepare the Environment

Server plan

| Role | IP | Components |
|---|---|---|
| k8s-master01 | 192.168.58.21 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-node01 | 192.168.58.22 | kubelet, kube-proxy, docker, etcd |
| k8s-node02 | 192.168.58.23 | kubelet, kube-proxy, docker, etcd |

Software environment

| Software | Version |
|---|---|
| Operating system | CentOS 8.2 |
| etcd | 3.5.1 |
| flannel | 0.10.0 |
| Docker | 20.10.12 |
| Kubernetes | 1.18.3 |

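Operating system initialization

- Same as the earlier setup: disable the firewall, SELinux, swap, and so on.

Add the k8s and docker accounts

# On every machine, add a docker account, add the k8s account to the docker group, and configure the dockerd parameters (note: these only exist after Docker is installed):

sudo useradd -m docker
sudo gpasswd -a k8s docker
sudo mkdir -p /opt/docker/
vim /opt/docker/daemon.json # this can also be done later, when deploying Docker

{"registry-mirrors": ["https://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"],"max-concurrent-downloads": 20}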

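Passwordless SSH login to the other servers

1) Generate a key pair.

ssh-keygen # press Enter at every prompt

2) Send your public key to the other servers.

ssh-copy-id root@k8s-master01
ssh-copy-id root@k8s-node01
ssh-copy-id root@k8s-node02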

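2. Deploy the Etcd Cluster

2.1 About Etcd

Etcd is a distributed key-value store that Kubernetes uses for its data, so an Etcd database has to be prepared first. To avoid a single point of failure, deploy Etcd as a cluster. This guide uses 3 machines, which tolerates the failure of 1 machine; a 5-machine cluster tolerates the failure of 2.

Note: to save machines, Etcd shares hosts with the k8s nodes here. It can also be deployed outside the k8s cluster, as long as the apiserver can connect to it.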
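The fault-tolerance figures above follow from majority quorum: a cluster of n members needs floor(n/2)+1 of them available, so it survives floor((n-1)/2) failures. A minimal sketch of that arithmetic follows; the function names `etcd_quorum` and `etcd_fault_tolerance` are illustrative and not part of etcd.

```shell
#!/usr/bin/env bash
# Illustrative helpers (not part of etcd): majority-quorum arithmetic.

# Number of members that must be available for the cluster to accept writes.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of member failures the cluster can survive.
etcd_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

An even member count adds no tolerance over the next lower odd count (4 members still tolerate only 1 failure), which is why 3 or 5 members are the usual choices.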

Download: https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz (the Atguigu (尚硅谷) course material uses v3.4.9: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz)

2.2 Prepare the cfssl Certificate Tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient than openssl. Run it on any one server; the Master node is used here.

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2.3 Generate the Etcd Certificates

1) Create a self-signed certificate authority (CA).

# Create the working directories
mkdir -p ~/certs/{etcd,k8s}
cd ~/certs/etcd

# Self-signed CA: create the signing configuration file
cat > ca-config.json << EOF
{
  "signing": {
    "default": {"expiry": "87600h"},
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "87600h"
      }
    }
  }
}
EOF

# Create the certificate signing request file
cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "BeiJing", "L": "BeiJing"}]
}
EOF

# Generate the certificate
cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# List the generated files
[root@k8s-master01 etcd]# ll
total 20
-rw-r--r-- 1 root root 277 Jan 14 10:08 ca-config.json
-rw-r--r-- 1 root root 956 Jan 14 10:08 ca.csr
-rw-r--r-- 1 root root 167 Jan 14 10:08 ca-csr.json
-rw------- 1 root root 1679 Jan 14 10:08 ca-key.pem
-rw-r--r-- 1 root root 1273 Jan 14 10:08 ca.pem

2) Use the self-signed CA to issue the Etcd HTTPS certificate.

# Create the certificate request file; to make later scaling easier, a few spare IPs can be listed in hosts
cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": ["127.0.0.1", "192.168.58.21", "192.168.58.22", "192.168.58.23"],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing"}]
}
EOF

# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

# List the generated certificate files
[root@k8s-master01 etcd]# ls server*pem
server-key.pem server.pem

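For consistency, service files such as etcd.service should all be placed under /usr/lib/systemd/system/ (some commands below write to /etc/systemd/system/ instead; systemd loads units from both directories).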

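2.4 Deployment

Work on the master node first; when finished, copy the files to the node machines.

1) Create the working directory and unpack the binary package.

mkdir -p /opt/etcd/{bin,cfg,ssl}
tar -zxvf etcd-v3.5.1-linux-amd64.tar.gz
mv etcd-v3.5.1-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

# Configure the environment variable: add the export line below to /etc/profile
vi /etc/profile
export PATH=$PATH:/opt/etcd/bin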

2) Create the etcd configuration file.

- ETCD_NAME: node name, unique within the cluster
- ETCD_DATA_DIR: data directory
- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic
- ETCD_LISTEN_CLIENT_URLS: listen address for client traffic
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
- ETCD_INITIAL_CLUSTER: addresses of all cluster members
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token
- ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing one

cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.58.21:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.58.21:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.58.21:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.58.21:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.58.21:2380,etcd-2=https://192.168.58.22:2380,etcd-3=https://192.168.58.23:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
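The file above is written for etcd-1. The other two members need the same file with only ETCD_NAME and the node's own IP changed. As a rough sketch, a small function can print the per-node variant; `render_etcd_conf` and the `ETCD_CLUSTER` variable are hypothetical names introduced here, and the output goes to stdout so nothing is overwritten by accident.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an etcd.conf for one member of the 3-node cluster.
# Only ETCD_NAME and the member's own IP differ between nodes.

ETCD_CLUSTER="etcd-1=https://192.168.58.21:2380,etcd-2=https://192.168.58.22:2380,etcd-3=https://192.168.58.23:2380"

render_etcd_conf() {
  name=$1
  ip=$2
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the configuration for the second member.
render_etcd_conf etcd-2 192.168.58.22
```

On the target node the output would be redirected to /opt/etcd/cfg/etcd.conf.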

3) Manage etcd with systemd.

cat > /etc/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap \
--enable-v2=true
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

4) Copy the certificates generated earlier to the path used in the configuration.

cp ~/certs/etcd/ca*pem ~/certs/etcd/server*pem /opt/etcd/ssl/

5) Start etcd and enable it at boot. Note: start etcd on all 3 machines at roughly the same time. A member started alone waits for the others to form a quorum, and `systemctl start` may time out with an error.

# Copy the /opt/etcd/ directory and the etcd.service file to the other two machines
scp -r /opt/etcd/ root@192.168.58.22:/opt/
scp -r /opt/etcd/ root@192.168.58.23:/opt/
scp /etc/systemd/system/etcd.service root@192.168.58.22:/etc/systemd/system/etcd.service
scp /etc/systemd/system/etcd.service root@192.168.58.23:/etc/systemd/system/etcd.service

# On each of the other two machines, edit /opt/etcd/cfg/etcd.conf: set ETCD_NAME (etcd-2, etcd-3) and replace the IP in the listen and advertise URLs with that machine's own IP

# Then run on all three machines
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd

# Add symlinks
ln -s /opt/etcd/bin/etcd /usr/local/bin/etcd
ln -s /opt/etcd/bin/etcdctl /usr/local/bin/etcdctl

6) Check the cluster status.

[root@k8s-master01 etcd]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.58.21:2379,https://192.168.58.22:2379,https://192.168.58.23:2379" endpoint health
https://192.168.58.21:2379 is healthy: successfully committed proposal: took = 8.092927ms
https://192.168.58.23:2379 is healthy: successfully committed proposal: took = 8.287973ms
https://192.168.58.22:2379 is healthy: successfully committed proposal: took = 22.099142ms
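For scripted checks, the health output can be counted instead of read by eye. The sketch below assumes the line format shown above; `check_etcd_health` is a hypothetical helper, and depending on the etcdctl version the health lines may be written to stderr, in which case `2>&1` is needed before the pipe.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `etcdctl endpoint health` output on stdin and
# succeed only if the expected number of endpoints report healthy.
check_etcd_health() {
  expected=$1
  healthy=$(grep -c ' is healthy' || true)
  echo "healthy endpoints: ${healthy}/${expected}"
  [ "$healthy" -eq "$expected" ]
}

# Demo with the sample output from this guide; on a real cluster, pipe the
# etcdctl command into check_etcd_health instead.
sample='https://192.168.58.21:2379 is healthy: successfully committed proposal: took = 8.092927ms
https://192.168.58.23:2379 is healthy: successfully committed proposal: took = 8.287973ms
https://192.168.58.22:2379 is healthy: successfully committed proposal: took = 22.099142ms'
printf '%s\n' "$sample" | check_etcd_health 3
```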

3. Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install docker-ce -y
systemctl start docker
systemctl enable docker

Deploy the Flannel Network

Configure the certificate

1) Create the TLS key and certificate.

cat > flanneld-csr.json << EOF
{
  "CN": "flanneld",
  "hosts": [],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System"}]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld
ls flanneld*
cp flanneld* /opt/etcd/ssl/

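2) Write the cluster Pod network configuration into etcd.

# Note: the flanneld certificate generated above is not actually used; the etcd server certificate is used instead
ETCDCTL_API=2 /opt/etcd/bin/etcdctl \
--ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
--endpoints="https://192.168.58.21:2379,https://192.168.58.22:2379,https://192.168.58.23:2379" \
set /coreos.com/network/config '{"Network": "172.18.0.0/16","Backend": {"Type": "vxlan"}}'

# Verify. The key was written through the etcd v2 API, which keeps a separate keyspace from v3, so read it back with ETCDCTL_API=2 and the v2 flag names
ETCDCTL_API=2 /opt/etcd/bin/etcdctl \
--ca-file=/opt/etcd/ssl/ca.pem \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--endpoints="https://192.168.58.21:2379,https://192.168.58.22:2379,https://192.168.58.23:2379" \
get /coreos.com/network/config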

Configure flannel (on all nodes)

1) Download the binary package.

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

tar -zxvf flannel-v0.10.0-linux-amd64.tar.gz

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

mv flanneld mk-docker-opts.sh /opt/kubernetes/bin/

2) Configure Flannel.

# vi /opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.58.21:2379,https://192.168.58.22:2379,https://192.168.58.23:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"

# vi /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Make Docker start with the subnet assigned by flannel: vi /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

3) Start flannel and restart docker.

systemctl daemon-reload

systemctl start flanneld

systemctl enable flanneld

systemctl restart docker

# Check that it took effect: docker0 and flannel.1 must be in the same subnet

ip addr

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:40:a1:15:0b brd ff:ff:ff:ff:ff:ff

inet 172.18.66.1/24 brd 172.18.66.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 22:e8:e0:f9:8b:2c brd ff:ff:ff:ff:ff:ff

inet 172.18.66.0/32 scope global flannel.1

valid_lft forever preferred_lft forever
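The same check can be done numerically: the docker0 address should fall inside the flannel network written to etcd (172.18.0.0/16 in this guide). A minimal sketch using plain shell arithmetic follows; `ip_to_int` and `in_cidr` are hypothetical helper names, and the addresses are the ones from the sample output above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that an IPv4 address lies inside a CIDR block.

# Convert a dotted IPv4 address to a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1   # split the address into its four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 belongs to network $2 (written as a.b.c.d/prefix).
in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

if in_cidr 172.18.66.1 172.18.0.0/16; then
  echo "docker0 is inside the flannel network"
else
  echo "docker0 is outside the flannel network"
fi
```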

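4) Copy the /opt/kubernetes directory and the docker.service and flanneld.service files from the master node to servers 192.168.58.22 and 192.168.58.23.

4. Deploy the Master Node

4.1 Generate the kube-apiserver Certificates

Before deploying Kubernetes, make sure etcd, flannel, and docker are all working properly; if not, fix them first.

1) Work in the /root/certs/k8s/ directory and create a self-signed certificate authority (CA).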

cat > ca-config.json<< EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json<< EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

2) Generate the CA certificate.

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

3) Use the self-signed CA to issue the kube-apiserver HTTPS certificate.

# Create the certificate request file

cat > server-csr.json<< EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.58.21",

"192.168.58.22",

"192.168.58.23",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

4.2 Download Kubernetes

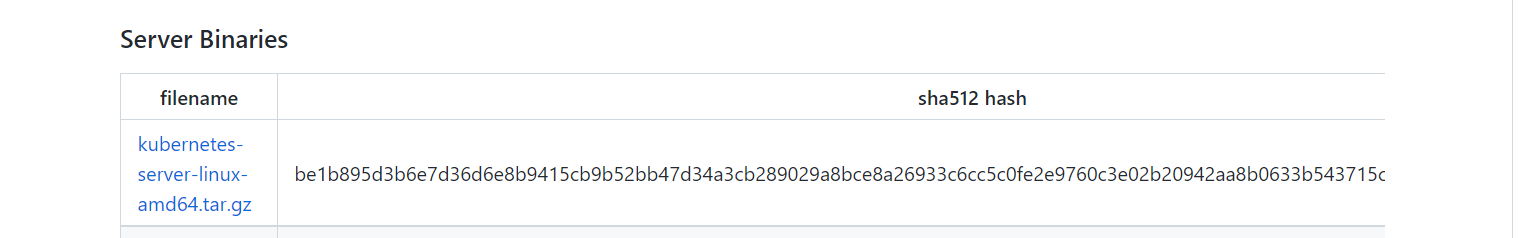

1) Download Kubernetes from GitHub: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md#downloads-for-v1231 (the latest version at the time; kube-apiserver failed to start for me with it, so I switched to 1.18: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183). Downloading the server package is enough, since it contains the binaries for both the Master and the Worker Nodes.

2) Unpack the binary package.

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

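4.3 Deploy kube-apiserver

1) Create the configuration file.

- --logtostderr: log to stderr; set to false here so logs are written to --log-dir
- --v: log level
- --log-dir: log directory
- --etcd-servers: etcd cluster addresses
- --bind-address: listen address
- --secure-port: HTTPS port
- --advertise-address: address advertised to the cluster
- --allow-privileged: allow privileged containers
- --service-cluster-ip-range: virtual IP range for Services
- --enable-admission-plugins: admission control plugins
- --authorization-mode: authorization modes; enables RBAC and Node authorization
- --enable-bootstrap-token-auth: enable the TLS bootstrap mechanism
- --token-auth-file: bootstrap token file
- --service-node-port-range: default port range for NodePort Services
- --kubelet-client-xxx: client certificate the apiserver uses to access kubelet
- --tls-xxx-file: apiserver HTTPS certificate
- --etcd-xxxfile: certificates for connecting to the Etcd cluster
- --audit-log-xxx: audit log settings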

cat > /opt/kubernetes/cfg/kube-apiserver.conf<< EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--etcd-servers=https://192.168.58.21:2379,https://192.168.58.22:2379,https://192.168.58.23:2379 \\

--bind-address=192.168.58.21 \\

--secure-port=6443 \\

--advertise-address=192.168.58.21 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-32767 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

2) Copy the certificates.

cp ~/certs/k8s/ca*pem ~/certs/k8s/server*pem /opt/kubernetes/ssl/

3) Enable the TLS Bootstrapping mechanism:

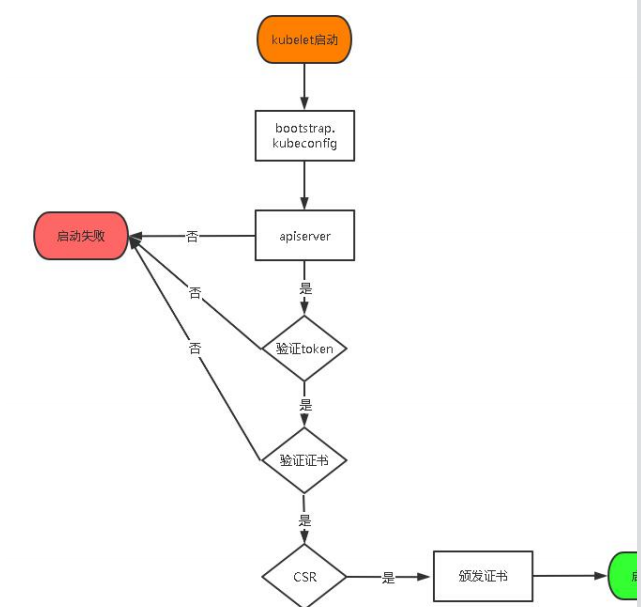

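- TLS Bootstrapping: once the Master apiserver enables TLS authentication, the kubelet and kube-proxy on each Node must use valid certificates issued by the CA to talk to kube-apiserver. With many Nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster harder. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: kubelet requests a certificate from the apiserver as a low-privilege user, and the kubelet certificate is signed dynamically by the apiserver.

- This approach is therefore strongly recommended on Nodes. It is currently used mainly for kubelet; kube-proxy still uses a single certificate that we issue ourselves.

- TLS bootstrapping workflow:

The steps are as follows:

# Create the token file referenced in the configuration file above

# Format: token,user name,UID,user group

# You can also generate your own token and substitute it: head -c 16 /dev/urandom | od -An -t x | tr -d ' '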

cat > /opt/kubernetes/cfg/token.csv << EOF

3681985df76a35088045c18e3e0a5467,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF
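As a sketch of the "generate your own token" option, the snippet below creates a random token and writes a token.csv in the same format. `TOKEN_DIR` is a placeholder introduced for this demo (it defaults to a temporary directory); the guide itself uses /opt/kubernetes/cfg.

```shell
#!/usr/bin/env bash
# Sketch: generate a random bootstrap token and write token.csv.
# TOKEN_DIR is a demo placeholder; the guide uses /opt/kubernetes/cfg.
TOKEN_DIR=${TOKEN_DIR:-$(mktemp -d)}

# 16 random bytes rendered as 32 hexadecimal characters.
TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')

# Format: token,user name,UID,"user group"
cat > "${TOKEN_DIR}/token.csv" << EOF
${TOKEN},kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

echo "token written to ${TOKEN_DIR}/token.csv"
```

Whatever token is used here must also be the TOKEN value placed in bootstrap.kubeconfig when the worker nodes are configured.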

4) Manage apiserver with systemd.

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

5) Start the service and enable it at boot.

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

systemctl status kube-apiserver

6) Authorize the kubelet-bootstrap user to request certificates. At this step I hit the following error: error: failed to create clusterrolebinding: Post "http://localhost:8080/apis/rbac.authorization.k8s.io/v1/clusterrolebindings?fieldManager=kubectl-create": dial tcp 127.0.0.1:8080: connect: connection refused. The message means kubectl could not reach the apiserver on localhost:8080, so check that kube-apiserver is actually running (systemctl status kube-apiserver) before retrying.

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

# If the binding already exists, delete it and then run the create command again

kubectl delete clusterrolebindings kubelet-bootstrap

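4.4 Deploy kube-controller-manager

1) Create the configuration file.

- --master: connect to the apiserver through the local insecure port 8080
- --leader-elect: elect a leader automatically when several instances of the component run (HA)
- --cluster-signing-cert-file / --cluster-signing-key-file: the CA used to issue kubelet certificates automatically; it must be the same CA as the apiserver's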

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect=true \\

--master=127.0.0.1:8080 \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

2) Manage controller-manager with systemd.

cat > /etc/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

3) Start the service and enable it at boot.

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

systemctl status kube-controller-manager

4.5 Deploy kube-scheduler

1) Create the configuration file.

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--leader-elect \

--master=127.0.0.1:8080 \

--bind-address=127.0.0.1"

EOF

2) Manage scheduler with systemd.

cat > /etc/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

3) Start the service and enable it at boot.

systemctl daemon-reload

systemctl start kube-scheduler

systemctl enable kube-scheduler

systemctl status kube-scheduler

4) Check the cluster status. Once all components have started, use kubectl to view the status of the cluster components:

kubectl get cs

5. Deploy the Worker Nodes

5.1 Create the Working Directory and Copy the Binaries

Run on every worker node server:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the server package from the master node, then unpack it:

tar -zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kubectl /usr/bin/

cp kubelet kube-proxy /opt/kubernetes/bin # local copy

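5.2 Deploy kubelet

1) Create the configuration file.

- --hostname-override: display name, unique within the cluster
- --network-plugin: enable CNI
- --kubeconfig: an empty path at first; the file is generated automatically and later used to connect to the apiserver
- --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
- --config: configuration parameter file
- --cert-dir: directory where the kubelet certificates are generated
- --pod-infra-container-image: image of the container that manages the Pod network

# Change --hostname-override by hand on each node (k8s-node01 here)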

cat > /opt/kubernetes/cfg/kubelet.conf << EOF

KUBELET_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--hostname-override=k8s-node01 \\

--network-plugin=cni \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet-config.yml \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

EOF
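Since only --hostname-override differs between nodes, the manual edit can be scripted when the file is copied to another node. The following is a rough sketch on a throwaway file; `set_hostname_override` is a hypothetical helper, and on a real node the path would be /opt/kubernetes/cfg/kubelet.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the --hostname-override value in a kubelet.conf.
# $1 = path to the file, $2 = node name to set.
set_hostname_override() {
  conf=$1
  node=$2
  tmp=$(mktemp)
  sed "s/--hostname-override=[^ ]*/--hostname-override=${node}/" "$conf" > "$tmp"
  cat "$tmp" > "$conf"   # overwrite in place so the file keeps its permissions
  rm -f "$tmp"
}

# Demo on a temporary copy containing a few lines of the file above.
demo=$(mktemp)
cat > "$demo" << 'EOF'
KUBELET_OPTS="--logtostderr=false \
--hostname-override=k8s-node01 \
--network-plugin=cni"
EOF
set_hostname_override "$demo" k8s-node02
grep -- '--hostname-override' "$demo"
```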

2) Create the parameter file.

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

3) Copy the certificate files from the master to the node machines.

scp -r /opt/kubernetes/ssl root@192.168.58.22:/opt/kubernetes

scp -r /opt/kubernetes/ssl root@192.168.58.23:/opt/kubernetes

4) In the directory where the Kubernetes certificates were generated, set the variables used to generate the kubeconfig file:

# Variables for the bootstrap.kubeconfig file

KUBE_APISERVER="https://192.168.58.21:6443" # apiserver IP:PORT

TOKEN="3681985df76a35088045c18e3e0a5467" # must match token.csv

5) Generate the kubelet bootstrap kubeconfig file.

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=bootstrap.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

mv bootstrap.kubeconfig /opt/kubernetes/cfg

6) Manage kubelet with systemd.

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

7) Start the service and enable it at boot.

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

systemctl status kubelet

5.3 Approve the kubelet Certificate Requests and Join the Cluster (on the master)

# View the kubelet certificate requests

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-5rKdgT7bN0WwmMHGtuq2uQYV7ThGce5xa6G3F0ZbNpc 12s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-aUSjUXBVumO4BfPEuLl4qGhiLpgXZQQWTqRsIiiStOc 8s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Approve the requests

kubectl certificate approve node-csr-5rKdgT7bN0WwmMHGtuq2uQYV7ThGce5xa6G3F0ZbNpc

kubectl certificate approve node-csr-aUSjUXBVumO4BfPEuLl4qGhiLpgXZQQWTqRsIiiStOc

# View the nodes

[root@k8s-master01 cfg]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node01 NotReady <none> 4s v1.18.3

k8s-node02 NotReady <none> 3s v1.18.3

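Note: because the network plugin has not been deployed yet, the nodes report NotReady. In my environment the nodes did not show up at this point.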
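With more than a couple of nodes, copying each CSR name by hand gets tedious. A small filter can pick out the pending requests from the `kubectl get csr` output; the sketch below assumes the column layout shown above, `pending_csrs` is a hypothetical helper, and the third sample row is made up to show that approved requests are skipped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose CONDITION (last column) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demo with sample rows in the format shown in this guide.
sample='NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-5rKdgT7bN0WwmMHGtuq2uQYV7ThGce5xa6G3F0ZbNpc 12s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending
node-csr-aUSjUXBVumO4BfPEuLl4qGhiLpgXZQQWTqRsIiiStOc 8s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending
node-csr-example 5m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued'
printf '%s\n' "$sample" | pending_csrs

# On the master, the same filter could feed the approve command:
# kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Approving everything that is pending is only reasonable when you know which nodes are joining; otherwise review the list first.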

5.4 Deploy kube-proxy

1) Create the configuration file.

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

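2) Create the parameter file.

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
# Set to this node's name, matching --hostname-override in kubelet.conf
hostnameOverride: k8s-node01
# Pod network CIDR, matching --cluster-cidr of kube-controller-manager
clusterCIDR: 10.244.0.0/16
EOF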

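3) Generate the kube-proxy.kubeconfig file (generate it on the master and copy it to the nodes).

# Switch to the working directory

cd ~/certs/k8s

# Create the certificate request file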

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

scp -r /root/certs/k8s/kube-proxy*pem root@192.168.58.22:/opt/kubernetes/ssl

scp -r /root/certs/k8s/kube-proxy*pem root@192.168.58.23:/opt/kubernetes/ssl

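4) Generate the kubeconfig file:

# Copy the certificates to the ssl directory

cp ~/certs/k8s/kube-proxy*pem /opt/kubernetes/ssl

# Run the following commands from the /opt/kubernetes/ssl directory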

KUBE_APISERVER="https://192.168.58.21:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

mv kube-proxy.kubeconfig /opt/kubernetes/cfg/

5) Manage kube-proxy with systemd.

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

6) Start the service and enable it at boot.

systemctl daemon-reload

systemctl start kube-proxy

systemctl enable kube-proxy

systemctl status kube-proxy

5.5 Deploy the CNI Network

1) Prepare the CNI binaries: https://github.com/containernetworking/plugins/releases

2) On the node machines:

mkdir /opt/cni/bin -p

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

scp -r /opt/cni root@192.168.58.22:/opt/

scp -r /opt/cni root@192.168.58.23:/opt/

3) On the master node:

kubectl apply -f kube-flannel.yml

kubectl get pods -n kube-system

kubectl get node

5.6 Authorize the apiserver to Access kubelet

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

5.7 Add a New Worker Node

1) Copy the files of an already deployed Node to the new node: on the master node, copy the Worker Node files to the new node 192.168.31.72/73.

scp -r /opt/kubernetes root@192.168.31.72:/opt/

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.31.72:/usr/lib/systemd/system

scp -r /opt/cni/ root@192.168.31.72:/opt/

scp /opt/kubernetes/ssl/ca.pem root@192.168.31.72:/opt/kubernetes/ssl

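2) Delete the kubelet certificates and kubeconfig file.

Note: these files are generated automatically once the certificate request is approved and are different on every Node, so they must be deleted and regenerated.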

rm /opt/kubernetes/cfg/kubelet.kubeconfig

rm -f /opt/kubernetes/ssl/kubelet*

3) Change the host name.

# vi /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8s-node1

# vi /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8s-node1

4) Start the services and enable them at boot.

systemctl daemon-reload

systemctl start kubelet kube-proxy

systemctl enable kubelet kube-proxy

5) On the Master, approve the new Node's kubelet certificate request.

kubectl get csr

kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro

# Check the node status

kubectl get node

6. 运行测试示例

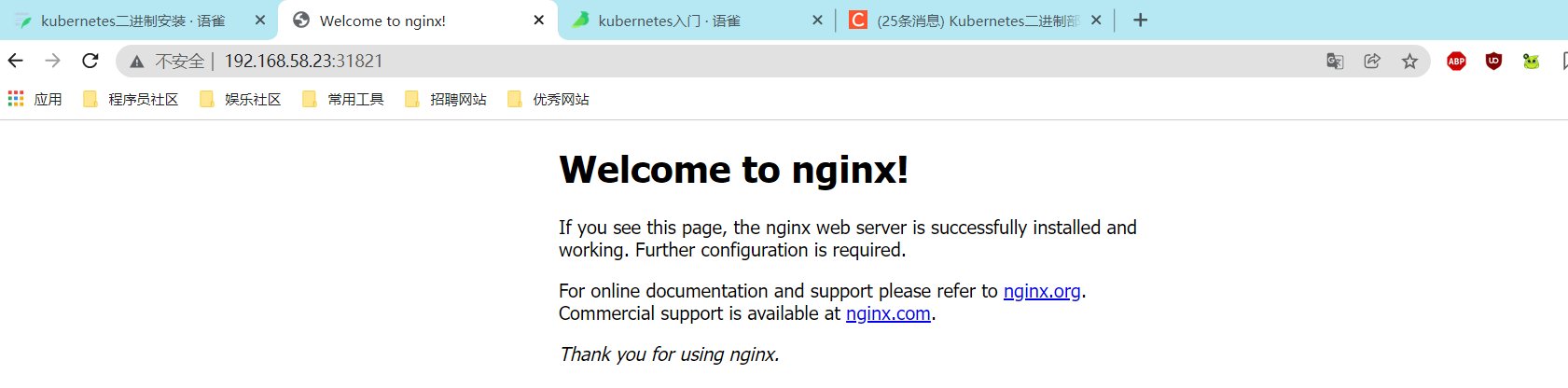

创建一个Nginx Web,测试集群是否正常工作:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

查看Pod,Service:

[root@k8s-master01 yamlconfig]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-f89759699-n8tqk 1/1 Running 0 2m8s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 64m

service/nginx NodePort 10.0.0.54 <none> 80:31821/TCP 2m4s

Open http://192.168.58.22:31821 in a browser to reach nginx. Note that kubelet and kube-proxy are not configured on the master node, so the service cannot be reached through the master node's IP.
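The NodePort (31821 above) is assigned from the configured range and will differ on each cluster, so it helps to read it from the Service listing rather than hard-code it. The sketch below parses the `kubectl get svc` columns shown above; `node_port` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod,svc` output on stdin and print
# the NodePort of the named Service, e.g. 80:31821/TCP -> 31821.
node_port() {
  awk -v svc="$1" '$1 == svc && $2 == "NodePort" { split($5, a, "[:/]"); print a[2] }'
}

# Demo with the Service rows from this guide.
sample='NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 64m
service/nginx NodePort 10.0.0.54 <none> 80:31821/TCP 2m4s'
port=$(printf '%s\n' "$sample" | node_port service/nginx)
echo "nginx is reachable at http://192.168.58.22:${port}"
```

On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same value without text parsing.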

7. References

- Atguigu (尚硅谷) k8s study notes.

- https://blog.csdn.net/qq_40942490/article/details/114022294

- https://blog.csdn.net/yujia_666/article/details/106896723