Background

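Happiness is an ancient and profound topic, one that people have pursued for generations. Thousands of factors affect happiness, and they vary from person to person. Everything from matters of national policy and livelihood down to a roasted sweet potato by the roadside can have an influence. Among these intricate factors, can we find what they have in common and get a glimpse of what happiness is really about?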

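Happiness research also holds an important place in the social sciences. The topic spans philosophy, psychology, sociology, economics and other disciplines, which makes it both complex and interesting. It is also closely tied to everyday life, and everyone has their own standard for measuring happiness. If we could find the common factors that shape happiness, life might hold a bit more joy. If we could identify the policy factors that matter, resources could be allocated better to raise national well-being.

Current social-science research emphasizes interpretable variables and policies that can actually be implemented, and it mainly uses linear and logistic regression. This research has produced a series of conjectures and findings about two groups of factors. The first is socio-economic and demographic factors such as income, health, occupation, social relationships and leisure. The second is macro factors such as government public services, the macroeconomic environment and tax burden.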

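This case study tackles the classic problem of happiness prediction. The aim is to try algorithmic approaches beyond existing social-science research and to combine the strengths of several disciplines. Along the way we hope to uncover potential influencing factors and find more interpretable, understandable correlations.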

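Concretely, this is a baseline for a data-mining competition on happiness prediction. We use 139 dimensions of information to predict happiness. These cover individual variables (gender, age, region, occupation, health, marital status, political affiliation, etc.), family variables (parents, spouse, children, family capital, etc.) and social attitudes (fairness, credit, public services, etc.).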

Our data come from the survey results of the official Chinese General Social Survey (CGSS), so the source is reliable :)

Data Description

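The task is to use these 139 features and roughly 8,000 samples to predict individual happiness. The prediction is one of 1, 2, 3, 4 or 5, where 1 is the lowest and 5 the highest happiness.

Because there are many variables and some of the relationships between them are complex, the data come in a full version and an abridged version. You can start with the abridged version to get familiar with the task, then move to the full version to extract more information. Here I use the full version directly.

The competition also provides two supporting files. The index file lists the questionnaire item behind each variable and the meaning of its values. The survey file contains the original questionnaire, to help with understanding the background of each question.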

Evaluation Metric
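The code in this post scores its cross-validation runs with mean squared error (`mean_squared_error` on the out-of-fold predictions). Assuming the competition scores submissions the same way, the metric for $n$ samples with true labels $y_i$ and predictions $\hat{y}_i$ is:

$$
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2
$$

Lower is better. The labels are integers from 1 to 5, but the models below are regressors, so their predictions are real numbers.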

Project Code

1. Importing Packages

```python
import os
import time
import logging
import warnings
from datetime import datetime

import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt
import lightgbm as lgb
import xgboost as xgb

from sklearn import preprocessing
from sklearn import metrics
from sklearn.metrics import roc_auc_score, roc_curve, mean_squared_error, mean_absolute_error, f1_score
from sklearn.ensemble import RandomForestRegressor as rfr
from sklearn.ensemble import ExtraTreesRegressor as etr
from sklearn.ensemble import GradientBoostingRegressor as gbr
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import BayesianRidge as br
from sklearn.linear_model import Ridge
from sklearn.linear_model import Lasso
from sklearn.linear_model import LinearRegression as lr
from sklearn.linear_model import LogisticRegression
from sklearn.linear_model import ElasticNet as en
from sklearn.linear_model import Perceptron
from sklearn.linear_model import SGDClassifier
from sklearn.kernel_ridge import KernelRidge as kr
from sklearn.model_selection import KFold, StratifiedKFold, GroupKFold, RepeatedKFold
from sklearn.model_selection import train_test_split
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC, LinearSVC
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

warnings.filterwarnings('ignore')  # suppress warnings
```

2. Loading the Data

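```python
# latin-1 is backward-compatible with ASCII
train = pd.read_csv("train.csv", parse_dates=['survey_time'], encoding='latin-1')
test = pd.read_csv("test.csv", parse_dates=['survey_time'], encoding='latin-1')

# Drop the rows whose "happiness" is -8
train = train[train["happiness"] != -8].reset_index(drop=True)
train_data_copy = train.copy()
target_col = "happiness"  # target column
target = train_data_copy[target_col]
del train_data_copy[target_col]  # remove the target column from the features

# In general the test set should be processed separately, otherwise there is a risk of data leakage;
# here train and test are concatenated so that the same preprocessing is applied to both
data = pd.concat([train_data_copy, test], axis=0, ignore_index=True)
train.columns
```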

:::success

Index(['id', 'happiness', 'survey_type', 'province', 'city', 'county',

'survey_time', 'gender', 'birth', 'nationality',

…, 'neighbor_familiarity', 'public_service_1', 'public_service_2',

'public_service_3', 'public_service_4', 'public_service_5',

'public_service_6', 'public_service_7', 'public_service_8',

'public_service_9'],

dtype='object', length=140)

:::

```python
train.happiness.describe()  # basic statistics of the target
```

:::success

count 7988.000000

mean 3.867927

std 0.818717

min 1.000000

25% 4.000000

50% 4.000000

75% 4.000000

max 5.000000

Name: happiness, dtype: float64

:::
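The 25%, 50% and 75% quantiles are all 4, which means most respondents answered 4. A quick way to see the share of each level is `value_counts(normalize=True)`. The sketch below runs it on a small made-up sample rather than the real training data.

```python
import pandas as pd

# A made-up sample of happiness labels (1 = least happy, 5 = happiest);
# with the real data this would be train['happiness'].
happiness = pd.Series([4, 4, 5, 3, 4, 4, 2, 4, 5, 4])

# Share of each level, sorted by level
dist = happiness.value_counts(normalize=True).sort_index()
print(dist)
```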

3. Data Preprocessing

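First, we handle the negative values that appear throughout the data. The only negative values are -1, -2, -3 and -8. Each of them is counted separately per row, in addition to a count of all negatives. The code is as follows: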

```python
# make feature +5
# The CSV contains the negative values -1, -2, -3 and -8. They are treated as
# problematic answers but are not deleted; instead they are counted per row.
def getres1(row):
    return len([x for x in row.values if type(x) == int and x < 0])

def getres2(row):
    return len([x for x in row.values if type(x) == int and x == -8])

def getres3(row):
    return len([x for x in row.values if type(x) == int and x == -1])

def getres4(row):
    return len([x for x in row.values if type(x) == int and x == -2])

def getres5(row):
    return len([x for x in row.values if type(x) == int and x == -3])

# Count the codes in every row
data['neg1'] = data[data.columns].apply(getres1, axis=1)
data.loc[data['neg1'] > 20, 'neg1'] = 20  # smoothing: cap the count at 20
data['neg2'] = data.apply(getres2, axis=1)
data['neg3'] = data.apply(getres3, axis=1)
data['neg4'] = data.apply(getres4, axis=1)
data['neg5'] = data.apply(getres5, axis=1)
```
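The row-wise `apply` above calls a Python function once per row. A vectorized alternative compares the whole numeric block at once. The sketch below uses a tiny made-up frame. Unlike the `type(x) == int` filter above, it counts negative codes in every numeric column, float ones included, so its counts may differ slightly on the real data.

```python
import pandas as pd

# Made-up survey rows containing the negative codes -1, -2, -3 and -8
df = pd.DataFrame({
    'a': [1, -8, 3],
    'b': [-1, -8, 2],
    'c': [-2.0, 5.0, -3.0],
})

num = df.select_dtypes(include='number')                 # numeric columns only (a copy)
df['neg_all'] = (num < 0).sum(axis=1).clip(upper=20)     # all negatives, capped at 20
df['neg_8'] = (num == -8).sum(axis=1)
df['neg_1'] = (num == -1).sum(axis=1)
df['neg_2'] = (num == -2).sum(axis=1)
df['neg_3'] = (num == -3).sum(axis=1)
print(df)
```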

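Next, missing values are filled with fillna(value), where the value is chosen case by case. Most missing entries are treated as 0. The household-registration location hukou_loc is set to 1, its smallest code. Family income is set to 66365, the mean family income. Part of the code: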

```python
# 25 columns have missing values: 4 are dropped later, the other 21 are filled here
# These columns are missing for some respondents; fill them case by case
zero_fill_cols = ['work_status', 'work_yr', 'work_manage', 'work_type',
                  'edu_yr', 'edu_status',
                  's_work_type', 's_work_status', 's_political', 's_hukou',
                  's_income', 's_birth', 's_edu', 's_work_exper',
                  'minor_child', 'marital_now', 'marital_1st',
                  'social_neighbor', 'social_friend']
data[zero_fill_cols] = data[zero_fill_cols].fillna(0)
data['hukou_loc'] = data['hukou_loc'].fillna(1)  # the smallest code is 1
data['family_income'] = data['family_income'].fillna(66365)  # mean after removing problematic values
```
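`fillna` also accepts a dict that maps column names to fill values, which keeps all the per-column defaults in one place. Below is a minimal sketch on made-up data. The column names mirror the ones above, but the values are invented.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'work_yr': [5, np.nan, 10],
    'hukou_loc': [np.nan, 2, 1],
    'family_income': [50000, np.nan, 80000],
})

# One default per column; columns not listed in the dict keep their NaNs
defaults = {'work_yr': 0, 'hukou_loc': 1, 'family_income': 66365}
df = df.fillna(value=defaults)
print(df)
```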

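Some information also has a special format, such as the time-related columns, which are handled in two steps:

First, compute each respondent's actual age. The data only contain the birth year and the survey time, so the age is the survey year minus the birth year.

Second, bin the "continuous" age into age groups; here the ages are divided into 6 intervals. The code is as follows: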

```python
# 144 + 1 = 145
# Special handling for some columns (see happiness_index.xlsx for their meaning)
# errors='coerce' turns dates in an unexpected format into NaT instead of raising
data['survey_time'] = pd.to_datetime(data['survey_time'], format='%Y-%m-%d', errors='coerce')
data['survey_time'] = data['survey_time'].dt.year  # keep only the year, which is enough for the age
data['age'] = data['survey_time'] - data['birth']

# Age groups: 145 + 1 = 146
bins = [0, 17, 26, 34, 50, 63, 100]
data['age_bin'] = pd.cut(data['age'], bins, labels=[0, 1, 2, 3, 4, 5])
```
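`pd.cut` uses right-closed intervals by default. With these bins, an age of exactly 17 therefore falls in the first group, and any age outside (0, 100] becomes NaN. The later `data.fillna(0)` then maps such rows to group 0. Below is a small check on made-up respondents.

```python
import pandas as pd

# Made-up respondents: survey year and birth year
df = pd.DataFrame({'survey_time': [2015] * 6,
                   'birth': [1998, 1997, 1989, 1952, 1951, 1910]})
df['age'] = df['survey_time'] - df['birth']   # 17, 18, 26, 63, 64, 105

bins = [0, 17, 26, 34, 50, 63, 100]
# Intervals are (0,17], (17,26], (26,34], (34,50], (50,63], (63,100]
df['age_bin'] = pd.cut(df['age'], bins, labels=[0, 1, 2, 3, 4, 5])
print(df)
```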

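Another approach, and the one I used most in this step, is to fill values subjectively based on real-life experience. For example, a negative value in "religion" is taken to mean "no religious belief". The "frequency of attending religious activities" is then set to 1, i.e. never attended. It is as if I were filling in the questionnaire myself: all of these defaults come from my own judgement.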

```python
# Religion
data.loc[data['religion'] < 0, 'religion'] = 1            # 1 = no religious belief
data.loc[data['religion_freq'] < 0, 'religion_freq'] = 1  # 1 = never attended
# Education
data.loc[data['edu'] < 0, 'edu'] = 4  # junior high school
data.loc[data['edu_status'] < 0, 'edu_status'] = 0
data.loc[data['edu_yr'] < 0, 'edu_yr'] = 0
# Personal income
data.loc[data['income'] < 0, 'income'] = 0  # assume no income
# Political status
data.loc[data['political'] < 0, 'political'] = 1  # assume an ordinary citizen (not a party member)
# Weight (in jin, 1 jin = 0.5 kg): implausibly small weights are doubled
data.loc[(data['weight_jin'] <= 80) & (data['height_cm'] >= 160), 'weight_jin'] = data['weight_jin'] * 2
data.loc[data['weight_jin'] <= 60, 'weight_jin'] = data['weight_jin'] * 2  # my own guess: no adult weighs 60 jin
# Height
data.loc[data['height_cm'] < 150, 'height_cm'] = 150  # realistic lower bound for adults
# Health
data.loc[data['health'] < 0, 'health'] = 4  # assume fairly healthy
data.loc[data['health_problem'] < 0, 'health_problem'] = 4
# Depression
data.loc[data['depression'] < 0, 'depression'] = 4  # most people rarely feel depressed
# Media use: assume "never" for all six media
for i in range(1, 7):
    col = 'media_' + str(i)
    data.loc[data[col] < 0, col] = 1
# Leisure activities: values based on my own judgement
data.loc[data['leisure_1'] < 0, 'leisure_1'] = 1
data.loc[data['leisure_2'] < 0, 'leisure_2'] = 5
data.loc[data['leisure_3'] < 0, 'leisure_3'] = 3
```
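The rule-based fills above all follow the same pattern, so they could be driven by a mapping from column to default value. Below is a sketch on made-up data. The `NEGATIVE_DEFAULTS` dictionary and the `fill_negative_codes` helper are hypothetical, and the dictionary lists only a few of the rules used above.

```python
import pandas as pd

# Hypothetical subset of the rules above: column -> value used for negative codes
NEGATIVE_DEFAULTS = {
    'religion': 1,   # no religious belief
    'edu': 4,        # junior high school
    'political': 1,  # not a party member
    'health': 4,     # fairly healthy
}

def fill_negative_codes(df, defaults):
    """Replace negative survey codes with a per-column default; returns a new frame."""
    out = df.copy()
    for col, value in defaults.items():
        out[col] = out[col].mask(out[col] < 0, value)
    return out

df = pd.DataFrame({'religion': [1, -8, 0], 'edu': [-1, 6, 3],
                   'political': [-2, 1, 4], 'health': [5, -8, 3]})
print(fill_negative_codes(df, NEGATIVE_DEFAULTS))
```

Returning a copy rather than modifying in place keeps the raw codes available, for example for the negative-count features computed earlier.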

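For the remaining leisure activities, abnormal values are replaced with the mode (mode() in the code). These features are leisure-activity frequencies, so filling with the most common answer is reasonable. The code is as follows: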

```python
# Leisure activities 4-12: replace negative codes with the column mode.
# .mode() returns a Series, so take its first element: assigning the Series itself
# aligns on the index and writes NaN instead of the mode.
for i in range(4, 13):
    col = 'leisure_' + str(i)
    data.loc[data[col] < 0, col] = data[col].mode().iloc[0]
data.loc[data['socialize'] < 0, 'socialize'] = 2  # rarely
data.loc[data['relax'] < 0, 'relax'] = 4          # often
data.loc[data['learn'] < 0, 'learn'] = 1          # never (or study time went to something else, haha)
# Social life
data.loc[data['social_neighbor'] < 0, 'social_neighbor'] = 0
data.loc[data['social_friend'] < 0, 'social_friend'] = 0
data.loc[data['socia_outing'] < 0, 'socia_outing'] = 1
data.loc[data['neighbor_familiarity'] < 0, 'neighbor_familiarity'] = 4
# Perceived social equity
data.loc[data['equity'] < 0, 'equity'] = 4
# Social class
data.loc[data['class_10_before'] < 0, 'class_10_before'] = 3
data.loc[data['class'] < 0, 'class'] = 5
data.loc[data['class_10_after'] < 0, 'class_10_after'] = 5
data.loc[data['class_14'] < 0, 'class_14'] = 2
# Work
for col in ['work_status', 'work_yr', 'work_manage', 'work_type']:
    data.loc[data[col] < 0, col] = 0
# Social insurance
for i in range(1, 5):
    col = 'insur_' + str(i)
    data.loc[data[col] < 0, col] = 1
```
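Whether `.mode()` is followed by `.iloc[0]` matters here. `.mode()` returns a Series indexed 0, 1, and so on. When that Series is assigned through `.loc`, pandas aligns it on the index, so masked rows whose labels are not in that index receive NaN. Below is a small demonstration on a made-up column.

```python
import pandas as pd

df = pd.DataFrame({'leisure_4': [3.0, -8.0, 3.0, 2.0, -1.0]})
mask = df['leisure_4'] < 0

# Pitfall: the mode Series has index [0], so rows 1 and 4 are aligned to nothing
bad = df.copy()
bad.loc[mask, 'leisure_4'] = bad['leisure_4'].mode()

# Fix: take the scalar mode first
good = df.copy()
good.loc[mask, 'leisure_4'] = good['leisure_4'].mode().iloc[0]

print(bad['leisure_4'].tolist())   # rows 1 and 4 are NaN
print(good['leisure_4'].tolist())  # [3.0, 3.0, 3.0, 2.0, 3.0]
```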

Filling with the mean (mean() in the code): family income is continuous, so the mode is not appropriate and the mean is used instead. The code is as follows:

```python
# Family: fill negative family income with the mean of the valid (non-negative) values
family_income_mean = data.loc[data['family_income'] >= 0, 'family_income'].mean()
data.loc[data['family_income'] < 0, 'family_income'] = family_income_mean
data.loc[data['family_m'] < 0, 'family_m'] = 2
data.loc[data['family_status'] < 0, 'family_status'] = 3
data.loc[data['house'] < 0, 'house'] = 1
data.loc[data['car'] < 0, 'car'] = 0
data.loc[data['car'] == 2, 'car'] = 0  # 2 = no car, recoded to 0
data.loc[data['son'] < 0, 'son'] = 1
data.loc[data['daughter'] < 0, 'daughter'] = 0
data.loc[data['minor_child'] < 0, 'minor_child'] = 0
# Marriage
data.loc[data['marital_1st'] < 0, 'marital_1st'] = 0
data.loc[data['marital_now'] < 0, 'marital_now'] = 0
# Spouse
for col in ['s_birth', 's_edu', 's_political', 's_hukou', 's_income',
            's_work_type', 's_work_status', 's_work_exper']:
    data.loc[data[col] < 0, col] = 0
# Parents
data.loc[data['f_birth'] < 0, 'f_birth'] = 1945
data.loc[data['f_edu'] < 0, 'f_edu'] = 1
data.loc[data['f_political'] < 0, 'f_political'] = 1
data.loc[data['f_work_14'] < 0, 'f_work_14'] = 2
data.loc[data['m_birth'] < 0, 'm_birth'] = 1940
data.loc[data['m_edu'] < 0, 'm_edu'] = 1
data.loc[data['m_political'] < 0, 'm_political'] = 1
data.loc[data['m_work_14'] < 0, 'm_work_14'] = 2
# Socio-economic status compared with peers of the same age
data.loc[data['status_peer'] < 0, 'status_peer'] = 2
# Socio-economic status compared with 3 years ago
data.loc[data['status_3_before'] < 0, 'status_3_before'] = 2
# Views
data.loc[data['view'] < 0, 'view'] = 4
# Expected annual income
data.loc[data['inc_ability'] <= 0, 'inc_ability'] = 2
inc_exp_mean = data.loc[data['inc_exp'] > 0, 'inc_exp'].mean()
data.loc[data['inc_exp'] <= 0, 'inc_exp'] = inc_exp_mean  # fill with the mean
# Public services (1-9) and trust (1-13): fill negative codes with the mode
for i in range(1, 9 + 1):
    col = 'public_service_' + str(i)
    data.loc[data[col] < 0, col] = data[col].dropna().mode().iloc[0]
for i in range(1, 13 + 1):
    col = 'trust_' + str(i)
    data.loc[data[col] < 0, col] = data[col].dropna().mode().iloc[0]
```
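The means and modes above are computed on the concatenated train and test rows. Section 2 notes that this concatenation risks leakage. One option is to compute the statistic from the training rows only and then apply it to both parts. The sketch below assumes, as in this post, that the first `n_train` rows of the combined frame are the training set. The helper name `fill_negatives_with_train_mean` is hypothetical.

```python
import pandas as pd

def fill_negatives_with_train_mean(data, col, n_train):
    """Replace negative codes in `col` with the mean of valid training values.

    Assumes the first n_train rows of `data` are training rows.
    Modifies `data` in place and returns the mean that was used.
    """
    train_part = data[col].iloc[:n_train]
    mean = train_part[train_part >= 0].mean()   # ignore negative codes
    data.loc[data[col] < 0, col] = mean         # fill train and test rows alike
    return mean

# Made-up example: 3 training rows followed by 2 test rows
data = pd.DataFrame({'family_income': [10.0, -1.0, 20.0, 30.0, -8.0]})
used = fill_negatives_with_train_mean(data, 'family_income', n_train=3)
print(used)                            # 15.0
print(data['family_income'].tolist())  # [10.0, 15.0, 20.0, 30.0, 15.0]
```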

4. Feature Augmentation

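In this step we analyze the relationships between features further in order to construct new ones. After some thought, I added the following features:

- age at first marriage and age at the most recent marriage;
- whether the respondent remarried;
- spouse's age and the age difference with the spouse;
- various income ratios, such as income relative to the spouse's and current income relative to expected income;
- income relative to housing floor area;
- changes in social class, comparing the current class with ten years ago, with the expected class ten years from now, and with the family's class when the respondent was 14;
- a leisure index, a satisfaction index and a trust index.

I also normalized within the same province, city and county. For example, I computed the mean income within a province, and how each individual's indicators compare with those of others in the same province, city or county. Finally, I compared people of the same age, i.e. income, health and so on relative to one's peers. The code is as follows: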

```python
# Age at first marriage (147)
data['marital_1stbir'] = data['marital_1st'] - data['birth']
# Age at the most recent marriage (148)
data['marital_nowtbir'] = data['marital_now'] - data['birth']
# Remarried? (149): nonzero when the two marriage ages differ
data['mar'] = data['marital_nowtbir'] - data['marital_1stbir']
# Spouse's age (150)
data['marital_sbir'] = data['marital_now'] - data['s_birth']
# Age difference with the spouse (151)
data['age_'] = data['marital_nowtbir'] - data['marital_sbir']

# Income ratios: 151 + 7 = 158
data['income/s_income'] = data['income'] / (data['s_income'] + 1)
data['income+s_income'] = data['income'] + (data['s_income'] + 1)
data['income/family_income'] = data['income'] / (data['family_income'] + 1)
data['all_income/family_income'] = (data['income'] + data['s_income']) / (data['family_income'] + 1)
data['income/inc_exp'] = data['income'] / (data['inc_exp'] + 1)
data['family_income/m'] = data['family_income'] / (data['family_m'] + 0.01)
data['income/m'] = data['income'] / (data['family_m'] + 0.01)

# Income / floor area: 158 + 4 = 162
data['income/floor_area'] = data['income'] / (data['floor_area'] + 0.01)
data['all_income/floor_area'] = (data['income'] + data['s_income']) / (data['floor_area'] + 0.01)
data['family_income/floor_area'] = data['family_income'] / (data['floor_area'] + 0.01)
data['floor_area/m'] = data['floor_area'] / (data['family_m'] + 0.01)

# Class changes: 162 + 3 = 165
data['class_10_diff'] = data['class_10_after'] - data['class']
data['class_diff'] = data['class'] - data['class_10_before']
data['class_14_diff'] = data['class'] - data['class_14']

# Leisure index (166), satisfaction index (167), trust index (168)
leisure_fea_lis = ['leisure_' + str(i) for i in range(1, 13)]
data['leisure_sum'] = data[leisure_fea_lis].sum(axis=1)  # skew
public_service_fea_lis = ['public_service_' + str(i) for i in range(1, 10)]
data['public_service_sum'] = data[public_service_fea_lis].sum(axis=1)  # skew
trust_fea_lis = ['trust_' + str(i) for i in range(1, 14)]
data['trust_sum'] = data[trust_fea_lis].sum(axis=1)  # skew

# The 13 indicators that are compared within each group
group_feas = ['income', 'family_income', 'equity', 'depression', 'floor_area',
              'health', 'class_10_diff', 'class', 'health_problem',
              'family_status', 'leisure_sum', 'public_service_sum', 'trust_sum']

# Regions first: province/city/county means 168+39 = 207, ratios 207+39 = 246;
# then age: means 246+13 = 259, ratios 259+13 = 272
for keys in (['province', 'city', 'county'], ['age']):
    # Group means, e.g. 'province_income_mean'
    for key in keys:
        for fea in group_feas:
            data[f'{key}_{fea}_mean'] = data.groupby(key)[fea].transform('mean')
    # Individual value relative to the group mean, e.g. 'income/province'
    for key in keys:
        for fea in group_feas:
            denom = data[f'{key}_{fea}_mean']
            if key == 'province' and fea == 'trust_sum':
                denom = denom + 1  # this ratio alone uses mean + 1 as the denominator
            data[f'{fea}/{key}'] = data[fea] / denom
```
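All of the group features above follow one pattern. `groupby(...).transform('mean')` broadcasts each group's mean back to every row, and dividing by it gives the individual's value relative to the group. The sketch below applies this to made-up data. A group mean of 0, which is possible for difference features such as `class_10_diff`, makes the ratio infinite. The later `fillna(0)` does not touch infinities, so replacing them with NaN first is one option.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'province': [1, 1, 2, 2],
    'income': [10.0, 30.0, 50.0, 50.0],
    'class_10_diff': [1.0, -1.0, 2.0, 0.0],
})

for fea in ['income', 'class_10_diff']:
    # Group mean broadcast back to each row
    df[f'province_{fea}_mean'] = df.groupby('province')[fea].transform('mean')
    # Value relative to the group mean
    df[f'{fea}/province'] = df[fea] / df[f'province_{fea}_mean']

# Province 1 has a mean class_10_diff of 0, so its two ratios are +inf and -inf
df = df.replace([np.inf, -np.inf], np.nan)
print(df)
```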

After these steps, the features grow from the original 139 to 272 dimensions. Next come feature engineering, model training and model ensembling.

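We should also drop features with very few valid samples, for example those with too many negative codes or missing values, such as the free-text "other" answer to the highest-education question (edu_other). Identifiers and columns whose information has already been used (id, survey_time, province, city, county) are dropped as well. In total 9 columns are removed, leaving the final 263 features.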

```python
# 272 - 9 = 263
# Drop features with very few valid values and those already used above
del_list = ['id', 'survey_time', 'edu_other', 'invest_other', 'property_other',
            'join_party', 'province', 'city', 'county']
use_feature = [clo for clo in data.columns if clo not in del_list]
data.fillna(0, inplace=True)  # fill the remaining missing values with 0
train_shape = train.shape[0]  # number of training rows
features = data[use_feature].columns  # all remaining features
X_train_263 = data[:train_shape][use_feature].values
y_train = target
X_test_263 = data[train_shape:][use_feature].values
X_train_263.shape  # 263 features in total
```
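The nine columns above were picked by hand. An automated alternative is to measure, per column, the share of values that are missing or negative codes, and to drop the columns above a threshold. The helper `low_information_columns` and its 0.5 threshold are hypothetical choices for illustration, not what this post used.

```python
import numpy as np
import pandas as pd

def low_information_columns(df, threshold=0.5):
    """Return columns whose share of missing or negative values exceeds `threshold`."""
    numeric = df.select_dtypes(include='number')
    invalid_share = (numeric.isna() | (numeric < 0)).mean()
    # Non-numeric (e.g. free-text) columns: only missing values count as invalid
    other = df.drop(columns=numeric.columns)
    invalid_share = pd.concat([invalid_share, other.isna().mean()])
    return invalid_share[invalid_share > threshold].index.tolist()

df = pd.DataFrame({
    'edu_other': [np.nan, np.nan, 'x', np.nan],  # mostly empty free text
    'join_party': [-1, -1, -1, 1985],            # mostly negative codes
    'health': [4, 5, 3, -8],                     # mostly valid
})
print(low_information_columns(df))  # ['join_party', 'edu_other']
```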

Besides the 263 features above, I also hand-picked the 49 most important features as a second feature set.

```python
# 49 hand-picked important features
imp_fea_49 = ['equity', 'depression', 'health', 'class', 'family_status', 'health_problem', 'class_10_after',
              'equity/province', 'equity/city', 'equity/county',
              'depression/province', 'depression/city', 'depression/county',
              'health/province', 'health/city', 'health/county',
              'class/province', 'class/city', 'class/county',
              'family_status/province', 'family_status/city', 'family_status/county',
              'family_income/province', 'family_income/city', 'family_income/county',
              'floor_area/province', 'floor_area/city', 'floor_area/county',
              'leisure_sum/province', 'leisure_sum/city', 'leisure_sum/county',
              'public_service_sum/province', 'public_service_sum/city', 'public_service_sum/county',
              'trust_sum/province', 'trust_sum/city', 'trust_sum/county',
              'income/m', 'public_service_sum', 'class_diff', 'status_3_before',
              'age_income_mean', 'age_floor_area_mean', 'weight_jin', 'height_cm',
              'health/age', 'depression/age', 'equity/age', 'leisure_sum/age']
train_shape = train.shape[0]
X_train_49 = data[:train_shape][imp_fea_49].values
X_test_49 = data[train_shape:][imp_fea_49].values
X_train_49.shape  # the 49 most important features
```

The discrete variables that call for it are one-hot encoded and combined with the other features into a third feature set of 383 dimensions.

```python
cat_fea = ['survey_type', 'gender', 'nationality', 'edu_status', 'political', 'hukou',
           'hukou_loc', 'work_exper', 'work_status', 'work_type', 'work_manage',
           'marital', 's_political', 's_hukou', 's_work_exper', 's_work_status',
           's_work_type', 'f_political', 'f_work_14', 'm_political', 'm_work_14']
noc_fea = [clo for clo in use_feature if clo not in cat_fea]  # non-categorical features

onehot_data = data[cat_fea].values
enc = preprocessing.OneHotEncoder(categories='auto')
oh_data = enc.fit_transform(onehot_data).toarray()
oh_data.shape  # after one-hot encoding
X_train_oh = oh_data[:train_shape, :]
X_test_oh = oh_data[train_shape:, :]
X_train_oh.shape  # the training part

# Non-categorical columns first, then the one-hot columns
X_train_383 = np.column_stack([data[:train_shape][noc_fea].values, X_train_oh])
X_test_383 = np.column_stack([data[train_shape:][noc_fea].values, X_test_oh])
X_train_383.shape
# (7988, 383)
```

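With this we have built three feature sets (training matrices):

1. The 49 most important features selected above, including health, social class and income relative to one's peers.
2. The expanded 263-dimensional features, which can be regarded as the base features.
3. The one-hot encoded features.

One-hot encoding is needed because some features are categorical codes whose numeric values carry no order or magnitude. Gender, for instance, is coded as 1 for male and 2 for female; one-hot encoding replaces it with a 0/1 indicator column for each category. Ethnicity is another example: its integer codes (one per ethnic group) would be treated as magnitudes if fed in directly, which makes the model less robust. One-hot encoding therefore splits it into 0/1 indicator columns, one per category value.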
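Below is a small sketch of what `OneHotEncoder` does to such codes, on made-up values (the ethnicity codes here are arbitrary). Each input column is expanded into one indicator per distinct value seen during fitting, so the number of output columns depends on the data rather than on the code range. In this post the encoder is fitted on train and test together, so no unseen category can appear later.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Made-up rows: gender (1 = male, 2 = female) and an ethnicity code
X = np.array([[1, 1],
              [2, 3],
              [1, 8]])

enc = OneHotEncoder(categories='auto')
X_oh = enc.fit_transform(X).toarray()  # fit_transform returns a sparse matrix by default

print(enc.categories_)  # one array of observed values per input column
print(X_oh)
# gender -> 2 columns, ethnicity -> 3 columns (only 1, 3 and 8 were observed), 5 in total
```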

5. Modeling

We start with the original 263-dimensional features and model them with LightGBM, using 5-fold cross-validation.
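Every model in this section uses the same 5-fold out-of-fold (OOF) pattern. Each training row is predicted by the fold model that did not see it during training, and the test predictions are averaged over the five fold models. Below is a model-agnostic sketch of that loop. It uses scikit-learn's `Ridge` on synthetic data as a stand-in for LightGBM, so it runs without the competition files.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold

rng = np.random.default_rng(0)
X_train = rng.normal(size=(200, 5))
y_train = X_train @ np.array([0.5, -0.2, 0.0, 0.3, 0.1]) + rng.normal(scale=0.1, size=200)
X_test = rng.normal(size=(50, 5))

folds = KFold(n_splits=5, shuffle=True, random_state=4)
oof = np.zeros(len(X_train))         # out-of-fold predictions for the training rows
predictions = np.zeros(len(X_test))  # test predictions averaged over the folds

for trn_idx, val_idx in folds.split(X_train, y_train):
    model = Ridge(alpha=1.0).fit(X_train[trn_idx], y_train[trn_idx])
    oof[val_idx] = model.predict(X_train[val_idx])         # rows this model never saw
    predictions += model.predict(X_test) / folds.n_splits  # average of the 5 fold models

cv_mse = mean_squared_error(y_train, oof)
print("CV score: {:<8.8f}".format(cv_mse))
```

The OOF predictions give an honest CV score, and they can later serve as inputs to a second-level model when blending.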

5.1 lightGBM

```python
##### lgb_263 #####
# LightGBM (gradient-boosted decision trees)
lgb_263_param = {
    'num_leaves': 7,
    'min_data_in_leaf': 20,    # minimum number of records a leaf may have
    'objective': 'regression',
    'max_depth': -1,           # tree depth, -1 means no limit
    'learning_rate': 0.003,
    'boosting': 'gbdt',        # use the GBDT algorithm
    'feature_fraction': 0.18,  # e.g. 0.18: randomly pick 18% of the features for each tree
    'bagging_freq': 1,
    'bagging_fraction': 0.55,  # fraction of the data used in each iteration
    'bagging_seed': 14,
    'metric': 'mse',
    'lambda_l1': 0.1005,
    'lambda_l2': 0.1996,
    'verbosity': -1,
}

folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=4)  # 5 folds
oof_lgb_263 = np.zeros(len(X_train_263))
predictions_lgb_263 = np.zeros(len(X_test_263))

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_ + 1))
    # train : val = 4 : 1
    trn_data = lgb.Dataset(X_train_263[trn_idx], y_train[trn_idx])
    val_data = lgb.Dataset(X_train_263[val_idx], y_train[val_idx])
    num_round = 10000
    # Early stopping and logging are passed as callbacks (available since LightGBM 3.3);
    # older releases accepted early_stopping_rounds=800 and verbose_eval=500 directly
    lgb_263 = lgb.train(lgb_263_param, trn_data, num_round,
                        valid_sets=[trn_data, val_data],
                        callbacks=[lgb.early_stopping(800), lgb.log_evaluation(500)])
    oof_lgb_263[val_idx] = lgb_263.predict(X_train_263[val_idx], num_iteration=lgb_263.best_iteration)
    predictions_lgb_263 += lgb_263.predict(X_test_263, num_iteration=lgb_263.best_iteration) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(oof_lgb_263, target)))
```

:::success

fold n°1

Training until validation scores don’t improve for 800 rounds

[500] training’s l2: 0.499759 valid_1’s l2: 0.532511

[1000] training’s l2: 0.451529 valid_1’s l2: 0.499127

[1500] training’s l2: 0.425443 valid_1’s l2: 0.485366

[2000] training’s l2: 0.407395 valid_1’s l2: 0.479303

[2500] training’s l2: 0.393001 valid_1’s l2: 0.475556

[3000] training’s l2: 0.380761 valid_1’s l2: 0.473666

[3500] training’s l2: 0.370005 valid_1’s l2: 0.472563

[4000] training’s l2: 0.360215 valid_1’s l2: 0.471631

[4500] training’s l2: 0.351235 valid_1’s l2: 0.470938

[5000] training’s l2: 0.342828 valid_1’s l2: 0.470683

[5500] training’s l2: 0.334901 valid_1’s l2: 0.470155

[6000] training’s l2: 0.327402 valid_1’s l2: 0.470052

[6500] training’s l2: 0.320161 valid_1’s l2: 0.470037

[7000] training’s l2: 0.313321 valid_1’s l2: 0.469874

[7500] training’s l2: 0.306768 valid_1’s l2: 0.469917

[8000] training’s l2: 0.30036 valid_1’s l2: 0.469934

Early stopping, best iteration is:

[7456] training’s l2: 0.307369 valid_1’s l2: 0.469776

fold n°2

Training until validation scores don’t improve for 800 rounds

[500] training’s l2: 0.504322 valid_1’s l2: 0.513628

[1000] training’s l2: 0.454889 valid_1’s l2: 0.47926

[1500] training’s l2: 0.428783 valid_1’s l2: 0.465975

[2000] training’s l2: 0.410928 valid_1’s l2: 0.459213

[2500] training’s l2: 0.39726 valid_1’s l2: 0.455058

[3000] training’s l2: 0.385428 valid_1’s l2: 0.45243

[3500] training’s l2: 0.374844 valid_1’s l2: 0.45074

[4000] training’s l2: 0.365256 valid_1’s l2: 0.449344

[4500] training’s l2: 0.356342 valid_1’s l2: 0.448434

[5000] training’s l2: 0.348007 valid_1’s l2: 0.447479

[5500] training’s l2: 0.339992 valid_1’s l2: 0.446721

[6000] training’s l2: 0.332347 valid_1’s l2: 0.44621

[6500] training’s l2: 0.325096 valid_1’s l2: 0.446006

[7000] training’s l2: 0.318201 valid_1’s l2: 0.445802

[7500] training’s l2: 0.311493 valid_1’s l2: 0.445323

[8000] training’s l2: 0.305194 valid_1’s l2: 0.444907

[8500] training’s l2: 0.29904 valid_1’s l2: 0.444854

[9000] training’s l2: 0.293065 valid_1’s l2: 0.44465

[9500] training’s l2: 0.287296 valid_1’s l2: 0.444403

[10000] training’s l2: 0.281761 valid_1’s l2: 0.444107

Did not meet early stopping. Best iteration is:

[10000] training’s l2: 0.281761 valid_1’s l2: 0.444107

fold n°3

Training until validation scores don’t improve for 800 rounds

[500] training’s l2: 0.50317 valid_1’s l2: 0.518027

[1000] training’s l2: 0.455063 valid_1’s l2: 0.480538

[1500] training’s l2: 0.429865 valid_1’s l2: 0.46407

[2000] training’s l2: 0.412416 valid_1’s l2: 0.455411

[2500] training’s l2: 0.398181 valid_1’s l2: 0.449859

[3000] training’s l2: 0.386273 valid_1’s l2: 0.446564

[3500] training’s l2: 0.375498 valid_1’s l2: 0.444636

[4000] training’s l2: 0.36571 valid_1’s l2: 0.442973

[4500] training’s l2: 0.356725 valid_1’s l2: 0.442256

[5000] training’s l2: 0.348308 valid_1’s l2: 0.441686

[5500] training’s l2: 0.340182 valid_1’s l2: 0.441066

[6000] training’s l2: 0.332496 valid_1’s l2: 0.440792

[6500] training’s l2: 0.325103 valid_1’s l2: 0.440478

[7000] training’s l2: 0.318144 valid_1’s l2: 0.440624

Early stopping, best iteration is:

[6645] training’s l2: 0.323029 valid_1’s l2: 0.440355

fold n°4

Training until validation scores don’t improve for 800 rounds

[500] training’s l2: 0.504279 valid_1’s l2: 0.512194

[1000] training’s l2: 0.455536 valid_1’s l2: 0.477492

[1500] training’s l2: 0.429192 valid_1’s l2: 0.465315

[2000] training’s l2: 0.411059 valid_1’s l2: 0.459402

[2500] training’s l2: 0.396766 valid_1’s l2: 0.455938

[3000] training’s l2: 0.384721 valid_1’s l2: 0.453697

[3500] training’s l2: 0.374098 valid_1’s l2: 0.452265

[4000] training’s l2: 0.364292 valid_1’s l2: 0.451164

[4500] training’s l2: 0.355251 valid_1’s l2: 0.450266

[5000] training’s l2: 0.346795 valid_1’s l2: 0.449724

[5500] training’s l2: 0.338917 valid_1’s l2: 0.449109

[6000] training’s l2: 0.331386 valid_1’s l2: 0.448832

[6500] training’s l2: 0.324066 valid_1’s l2: 0.448557

[7000] training’s l2: 0.317231 valid_1’s l2: 0.448274

[7500] training’s l2: 0.310604 valid_1’s l2: 0.448261

Early stopping, best iteration is:

[7138] training’s l2: 0.315372 valid_1’s l2: 0.448119

fold n°5

Training until validation scores don’t improve for 800 rounds

[500] training’s l2: 0.503075 valid_1’s l2: 0.519874

[1000] training’s l2: 0.454635 valid_1’s l2: 0.484867

[1500] training’s l2: 0.42871 valid_1’s l2: 0.471137

[2000] training’s l2: 0.410716 valid_1’s l2: 0.464987

[2500] training’s l2: 0.396241 valid_1’s l2: 0.46153

[3000] training’s l2: 0.383972 valid_1’s l2: 0.459225

[3500] training’s l2: 0.372947 valid_1’s l2: 0.458011

[4000] training’s l2: 0.362992 valid_1’s l2: 0.457355

[4500] training’s l2: 0.353769 valid_1’s l2: 0.45729

[5000] training’s l2: 0.345122 valid_1’s l2: 0.457312

[5500] training’s l2: 0.33702 valid_1’s l2: 0.457019

[6000] training’s l2: 0.329492 valid_1’s l2: 0.456984

[6500] training’s l2: 0.322041 valid_1’s l2: 0.457141

Early stopping, best iteration is:

[5850] training’s l2: 0.33172 valid_1’s l2: 0.456869

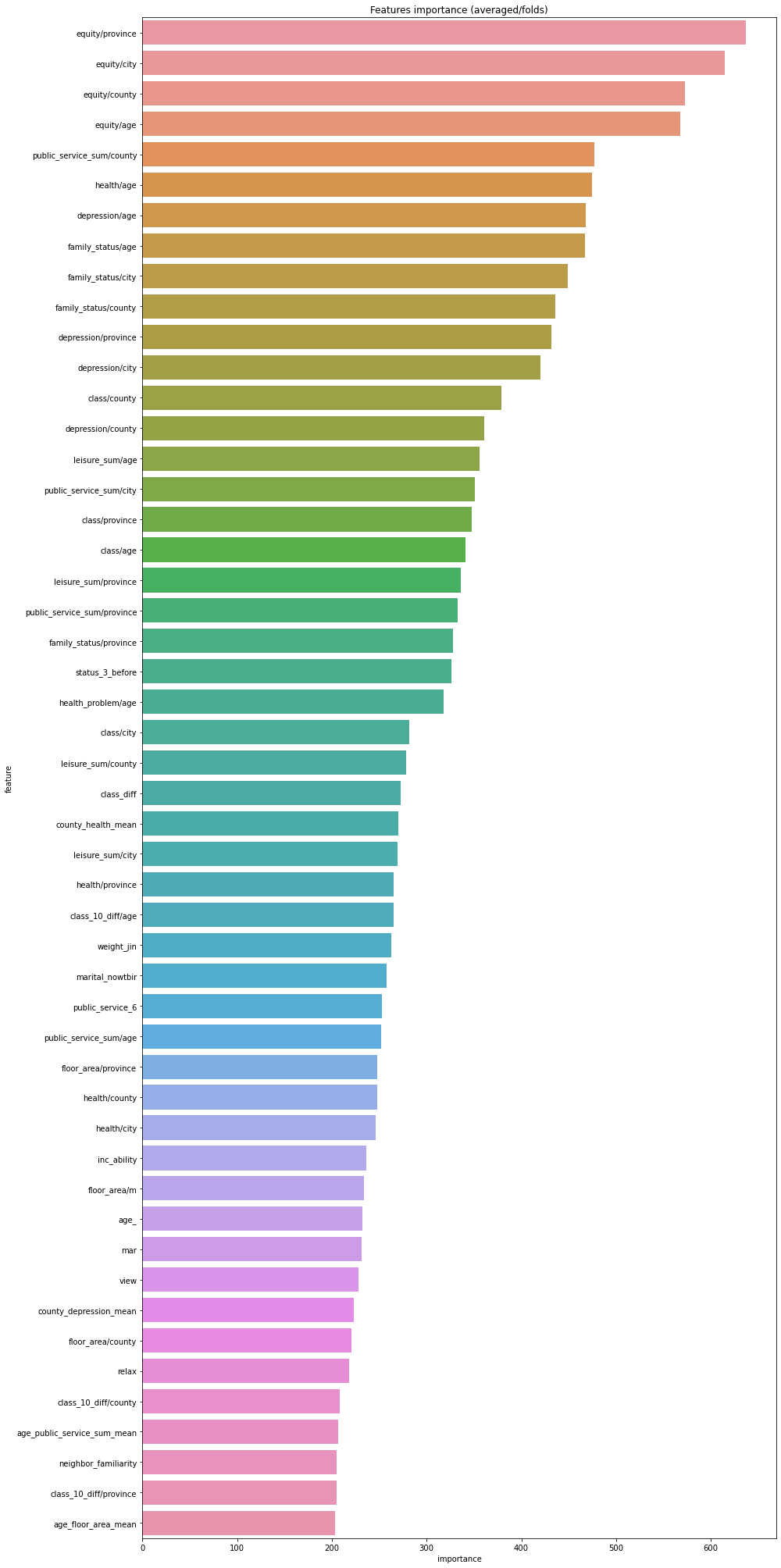

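CV score: 0.45184501

:::

Next, I use the trained LightGBM model to compute and visualize feature importance. The result shows that the most important feature is health/age, which reflects how healthy a respondent is compared with people of the same age. This broadly agrees with intuition.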

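```python
# --------------- Feature importance
pd.set_option('display.max_columns', None)  # show all columns
pd.set_option('display.max_rows', None)     # show all rows
pd.set_option('display.max_colwidth', 100)  # show up to 100 characters per cell (default 50)

# Note: lgb_263 is the booster trained in the last fold only, not an average over folds
df = pd.DataFrame(data[use_feature].columns.tolist(), columns=['feature'])
df['importance'] = list(lgb_263.feature_importance())  # default importance_type='split'
df = df.sort_values(by='importance', ascending=False)

plt.figure(figsize=(14, 28))
sns.barplot(x="importance", y="feature", data=df.head(50))
plt.title('Feature importance (top 50, last-fold model)')
plt.tight_layout()
plt.show()
```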
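The importance values above come from `lgb_263`. That object is only the booster left over from the last fold of the training loop, so the chart reflects a single fold rather than an average over folds. To get a fold-averaged ranking, one option is to collect each fold's `feature_importance(importance_type='gain')` vector in a list during training and average the vectors afterwards. The original loop does not do this, so treat it as an assumption. The helper `average_importance` below is hypothetical and is shown on toy numbers:

```python
import numpy as np
import pandas as pd


def average_importance(importances, feature_names):
    """Average per-fold importance vectors into one sorted DataFrame.

    importances: list of 1-D arrays (one per fold), aligned with feature_names,
    e.g. collected from booster.feature_importance(importance_type='gain').
    """
    stacked = np.vstack(importances)            # shape (n_folds, n_features)
    df = pd.DataFrame({
        'feature': list(feature_names),
        'importance': stacked.mean(axis=0),     # mean over folds
        'std': stacked.std(axis=0),             # spread across folds
    })
    return df.sort_values('importance', ascending=False).reset_index(drop=True)


# Toy example: three hypothetical folds, three features
fold_imps = [np.array([10.0, 2.0, 5.0]),
             np.array([12.0, 1.0, 6.0]),
             np.array([11.0, 3.0, 4.0])]
imp_df = average_importance(fold_imps, ['health/age', 'f_b', 'f_c'])
print(imp_df)
```

The `std` column is useful for spotting features whose rank is unstable from fold to fold.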

Next, we model the 263-dimensional feature set with several other common machine learning methods:
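Every model in the following subsections repeats the same loop:

1. Split the training data with K-fold cross-validation.
2. Store out-of-fold (OOF) predictions for the training rows.
3. Average the test-set predictions over the folds.

The printed CV score is computed from the OOF vector, which is also reused later as input for stacking. Below is a minimal sketch of that pattern. It uses scikit-learn's `Ridge` on synthetic data as a stand-in, and the helper name `kfold_oof` is hypothetical:

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold


def kfold_oof(model_factory, X_train, y_train, X_test, n_splits=5, seed=2019):
    """Return OOF predictions for X_train and fold-averaged predictions for X_test."""
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    oof = np.zeros(len(X_train))
    test_pred = np.zeros(len(X_test))
    for trn_idx, val_idx in folds.split(X_train, y_train):
        model = model_factory()                          # fresh model for every fold
        model.fit(X_train[trn_idx], y_train[trn_idx])
        oof[val_idx] = model.predict(X_train[val_idx])   # each row predicted exactly once
        test_pred += model.predict(X_test) / n_splits    # average over the folds
    return oof, test_pred


# Synthetic stand-in data (not the CGSS survey)
rng = np.random.default_rng(0)
X_tr = rng.normal(size=(200, 10))
y_tr = 2 * X_tr[:, 0] + rng.normal(scale=0.1, size=200)
X_te = rng.normal(size=(50, 10))

oof, test_pred = kfold_oof(lambda: Ridge(alpha=1.0), X_tr, y_tr, X_te)
print("CV MSE:", mean_squared_error(y_tr, oof))
```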

5.2 xgboost

```python
##### xgb_263
# XGBoost
xgb_263_params = {
    'eta': 0.02,                      # learning rate
    'max_depth': 6,
    'min_child_weight': 3,            # minimum sum of instance weights (hessian) in a child
    'gamma': 0,                       # minimum loss reduction required to split a node
    'subsample': 0.7,                 # fraction of rows sampled for each tree
    'colsample_bytree': 0.3,          # fraction of columns (features) sampled for each tree
    'lambda': 2,                      # L2 regularization on leaf weights
    'objective': 'reg:squarederror',  # replaces the deprecated 'reg:linear' (same loss)
    'eval_metric': 'rmse',
    'nthread': -1,
}
# The log below was produced with 'objective': 'reg:linear' and 'silent': True,
# which is why it contains deprecation / unused-parameter warnings.

folds = KFold(n_splits=5, shuffle=True, random_state=2019)
oof_xgb_263 = np.zeros(len(X_train_263))
predictions_xgb_263 = np.zeros(len(X_test_263))

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_ + 1))
    trn_data = xgb.DMatrix(X_train_263[trn_idx], y_train[trn_idx])
    val_data = xgb.DMatrix(X_train_263[val_idx], y_train[val_idx])
    watchlist = [(trn_data, 'train'), (val_data, 'valid_data')]
    xgb_263 = xgb.train(dtrain=trn_data, num_boost_round=3000, evals=watchlist,
                        early_stopping_rounds=600, verbose_eval=500, params=xgb_263_params)
    # Predict with trees up to the best iteration
    # (iteration_range replaces the deprecated ntree_limit in XGBoost >= 1.4)
    best_range = (0, xgb_263.best_iteration + 1)
    oof_xgb_263[val_idx] = xgb_263.predict(xgb.DMatrix(X_train_263[val_idx]),
                                           iteration_range=best_range)
    predictions_xgb_263 += xgb_263.predict(xgb.DMatrix(X_test_263),
                                           iteration_range=best_range) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_xgb_263)))
```

:::success

fold n°1

[10:13:29] WARNING: C:/Users/Administrator/workspace/xgboost-win64_release_1.4.0/src/objective/regression_obj.cu:171: reg:linear is now deprecated in favor of reg:squarederror.

[10:13:29] WARNING: C:/Users/Administrator/workspace/xgboost-win64_release_1.4.0/src/learner.cc:573:

Parameters: { “silent” } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

[0] train-rmse:3.40431 valid_data-rmse:3.38325

[500] train-rmse:0.40605 valid_data-rmse:0.70569

[1000] train-rmse:0.27191 valid_data-rmse:0.70849

[1117] train-rmse:0.24721 valid_data-rmse:0.70913

fold n°2

[0] train-rmse:3.39807 valid_data-rmse:3.40794

[500] train-rmse:0.40656 valid_data-rmse:0.69137

[1000] train-rmse:0.27393 valid_data-rmse:0.69203

[1185] train-rmse:0.23678 valid_data-rmse:0.69250

fold n°3

[0] train-rmse:3.40186 valid_data-rmse:3.39310

[500] train-rmse:0.41178 valid_data-rmse:0.66068

[1000] train-rmse:0.27470 valid_data-rmse:0.66375

[1091] train-rmse:0.25623 valid_data-rmse:0.66438

fold n°4

[0] train-rmse:3.40240 valid_data-rmse:3.39014

[500] train-rmse:0.41224 valid_data-rmse:0.66538

[1000] train-rmse:0.27442 valid_data-rmse:0.66683

[1188] train-rmse:0.23671 valid_data-rmse:0.66777

fold n°5

[0] train-rmse:3.39344 valid_data-rmse:3.42628

[500] train-rmse:0.41812 valid_data-rmse:0.65074

[1000] train-rmse:0.28203 valid_data-rmse:0.65237

[1366] train-rmse:0.21046 valid_data-rmse:0.65408

CV score: 0.45502827

:::
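The XGBoost log reports RMSE (`'eval_metric': 'rmse'`), but the CV score line is a mean squared error, so the two numbers are on different scales. As a rough sanity check, square each fold's validation RMSE and average the results. Because the five folds are of nearly equal size, this gives approximately the reported CV score. The sketch uses the round-500 values copied from the log. These are only close to, not equal to, each fold's best-iteration values:

```python
import numpy as np

# Validation RMSE at round 500 of each fold, copied from the log above.
# Training stopped about 600 rounds after each fold's best iteration,
# so these values only approximate the best-iteration RMSE.
valid_rmse_500 = np.array([0.70569, 0.69137, 0.66068, 0.66538, 0.65074])

fold_mse = valid_rmse_500 ** 2      # RMSE -> MSE for each fold
print(fold_mse.round(4))
print(round(fold_mse.mean(), 4))    # ≈ 0.4557, near the reported CV score 0.45502827
```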

5.3 RandomForestRegressor (random forest)

```python
# RandomForestRegressor (random forest)
folds = KFold(n_splits=5, shuffle=True, random_state=2019)
oof_rfr_263 = np.zeros(len(X_train_263))
predictions_rfr_263 = np.zeros(len(X_test_263))

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_ + 1))
    tr_x = X_train_263[trn_idx]
    tr_y = y_train[trn_idx]
    # verbose=0: no log output; higher values print more progress messages
    # while the trees are built and used for prediction (a forest has no epochs)
    rfr_263 = rfr(n_estimators=1600, max_depth=9, min_samples_leaf=9,
                  min_weight_fraction_leaf=0.0, max_features=0.25,
                  verbose=1, n_jobs=-1)
    rfr_263.fit(tr_x, tr_y)
    oof_rfr_263[val_idx] = rfr_263.predict(X_train_263[val_idx])
    predictions_rfr_263 += rfr_263.predict(X_test_263) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_rfr_263)))
```

:::success

CV score: 0.47836001

:::
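In these tree models, the `max_features` values are fractions of the feature count, not counts. For a float, scikit-learn considers `max(1, int(max_features * n_features))` candidate features at each split. With 263 features, `max_features=0.25` therefore means 65 candidate features per split. By the same rule, 0.22 in the GBR section gives 57 and 0.4 in the ExtraTrees section gives 105. Below is a small check on random data of the same width; the data and the tiny forest are illustrative only:

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Random matrix with the same width as the 263-feature set
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 263))
y = rng.normal(size=100)

# Tiny forest, only used to inspect how a fractional max_features is resolved
forest = RandomForestRegressor(n_estimators=3, max_depth=3, max_features=0.25,
                               random_state=0).fit(X, y)
print(forest.estimators_[0].max_features_)  # int(0.25 * 263) = 65 features per split
```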

5.4 GradientBoostingRegressor (gradient boosting decision trees)

```python
# GradientBoostingRegressor (gradient boosting decision trees)
# StratifiedKFold works here because the target takes integer values 1-5 (class labels)
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=2018)
oof_gbr_263 = np.zeros(train_shape)
predictions_gbr_263 = np.zeros(len(X_test_263))

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_ + 1))
    tr_x = X_train_263[trn_idx]
    tr_y = y_train[trn_idx]
    # subsample < 1 enables the "OOB Improve" column in the verbose log below
    gbr_263 = gbr(n_estimators=400, learning_rate=0.01, subsample=0.65, max_depth=7,
                  min_samples_leaf=20, max_features=0.22, verbose=1)
    gbr_263.fit(tr_x, tr_y)
    oof_gbr_263[val_idx] = gbr_263.predict(X_train_263[val_idx])
    predictions_gbr_263 += gbr_263.predict(X_test_263) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_gbr_263)))
```

:::success

fold n°1

Iter Train Loss OOB Improve Remaining Time

1 0.6673 0.0033 1.55m

2 0.6640 0.0035 1.05m

3 0.6636 0.0030 57.81s

4 0.6584 0.0030 51.86s

5 0.6587 0.0029 47.07s

6 0.6278 0.0028 44.25s

7 0.6360 0.0026 41.93s

8 0.6423 0.0030 40.12s

9 0.6291 0.0027 38.87s

10 0.6553 0.0029 37.70s

20 0.6006 0.0022 32.04s

30 0.5507 0.0022 28.76s

40 0.5410 0.0016 26.46s

50 0.5245 0.0012 24.77s

60 0.4794 0.0012 23.64s

70 0.4654 0.0010 22.50s

80 0.4789 0.0007 21.47s

90 0.4606 0.0006 20.53s

100 0.4265 0.0005 19.68s

200 0.3370 0.0000 12.76s

300 0.2966 -0.0000 6.81s

400 0.2706 -0.0000 0.00s

fold n°2

Iter Train Loss OOB Improve Remaining Time

1 0.6723 0.0035 23.94s

2 0.6611 0.0035 24.67s

3 0.6557 0.0033 29.50s

4 0.6406 0.0032 28.50s

5 0.6553 0.0026 27.48s

6 0.6371 0.0033 26.65s

7 0.6574 0.0028 26.43s

8 0.6330 0.0029 26.30s

9 0.6350 0.0031 26.01s

10 0.6464 0.0026 25.58s

20 0.5872 0.0025 33.64s

30 0.5815 0.0018 29.55s

40 0.5334 0.0016 26.87s

50 0.5079 0.0012 24.86s

60 0.4937 0.0010 23.38s

70 0.4693 0.0009 22.28s

80 0.4465 0.0007 21.21s

90 0.4428 0.0007 20.24s

100 0.4309 0.0004 19.32s

200 0.3603 0.0002 12.11s

300 0.2949 0.0000 5.87s

400 0.2640 0.0000 0.00s

fold n°3

Iter Train Loss OOB Improve Remaining Time

1 0.6731 0.0034 22.74s

2 0.6926 0.0033 22.08s

3 0.6474 0.0035 21.70s

4 0.6554 0.0031 21.38s

5 0.6383 0.0029 20.93s

6 0.6499 0.0029 20.81s

7 0.6146 0.0034 20.65s

8 0.6170 0.0031 20.53s

9 0.6436 0.0029 20.41s

10 0.6328 0.0024 20.27s

20 0.6040 0.0023 19.53s

30 0.5580 0.0020 18.88s

40 0.5333 0.0016 18.33s

50 0.5007 0.0015 17.77s

60 0.4889 0.0012 17.36s

70 0.4648 0.0010 16.84s

80 0.4494 0.0007 16.30s

90 0.4325 0.0008 15.78s

100 0.4399 0.0005 15.28s

200 0.3478 0.0001 10.55s

300 0.2896 -0.0000 5.25s

400 0.2623 -0.0000 0.00s

fold n°4

Iter Train Loss OOB Improve Remaining Time

1 0.6641 0.0032 25.92s

2 0.6575 0.0034 25.26s

3 0.6495 0.0033 25.31s

4 0.6664 0.0030 24.97s

5 0.6430 0.0031 25.22s

6 0.6691 0.0030 24.97s

7 0.6380 0.0030 24.83s

8 0.6320 0.0030 24.56s

9 0.6509 0.0028 24.42s

10 0.6174 0.0030 24.26s

20 0.6087 0.0022 24.55s

30 0.5536 0.0021 24.22s

40 0.5415 0.0017 22.93s

50 0.5207 0.0013 22.44s

60 0.5072 0.0009 22.50s

70 0.4670 0.0010 21.50s

80 0.4685 0.0007 20.55s

90 0.4464 0.0007 19.66s

100 0.4196 0.0006 18.86s

200 0.3501 0.0001 12.16s

300 0.3021 0.0001 6.15s

400 0.2634 -0.0001 0.00s

fold n°5

Iter Train Loss OOB Improve Remaining Time

1 0.6622 0.0034 23.93s

2 0.6839 0.0031 23.08s

3 0.6485 0.0034 22.62s

4 0.6581 0.0031 22.86s

5 0.6486 0.0033 22.90s

6 0.6417 0.0031 22.78s

7 0.6440 0.0027 22.51s

8 0.6525 0.0028 22.29s

9 0.6471 0.0029 22.19s

10 0.6456 0.0030 22.07s

20 0.5927 0.0022 21.79s

30 0.5568 0.0021 21.11s

40 0.5466 0.0016 20.40s

50 0.5120 0.0013 19.64s

60 0.4836 0.0011 18.96s

70 0.4712 0.0010 18.27s

80 0.4518 0.0007 17.66s

90 0.4363 0.0005 17.04s

100 0.4395 0.0005 16.44s

200 0.3429 0.0001 10.85s

300 0.3021 0.0000 5.44s

400 0.2721 -0.0001 0.00s

CV score: 0.45467480

:::
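The GBR section splits the data with `StratifiedKFold`, a splitter designed for classification, even though the model is a regressor. This works because the happiness target only takes the integer values 1 to 5, which scikit-learn treats as class labels. Each fold therefore keeps roughly the same share of every happiness level. The sketch below uses hypothetical labels to show both this behaviour and the fact that a continuous target would be rejected with a `ValueError`:

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical, deliberately imbalanced labels on the 1-5 happiness scale
y = np.repeat([1, 2, 3, 4, 5], [100, 200, 300, 250, 150])
X = np.zeros((len(y), 1))  # features play no role in how folds are assigned

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=2018)
fold_counts = [np.bincount(y[val_idx], minlength=6)[1:]  # samples of labels 1..5
               for _, val_idx in skf.split(X, y)]
for fold, counts in enumerate(fold_counts, start=1):
    print(f"fold {fold}: {counts}")  # each fold keeps the overall label proportions

# A continuous (non-integer) target is rejected rather than silently binned
y_continuous = y + 0.5 * np.random.default_rng(0).random(len(y))
try:
    next(skf.split(X, y_continuous))
    rejected = False
except ValueError:
    rejected = True
print("continuous target rejected:", rejected)
```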

5.5 ExtraTreesRegressor (extremely randomized trees regression)

```python
# ExtraTreesRegressor (extremely randomized trees regression)
folds = KFold(n_splits=5, shuffle=True, random_state=13)
oof_etr_263 = np.zeros(train_shape)
predictions_etr_263 = np.zeros(len(X_test_263))

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_ + 1))
    tr_x = X_train_263[trn_idx]
    tr_y = y_train[trn_idx]
    etr_263 = etr(n_estimators=1000, max_depth=8, min_samples_leaf=12,
                  min_weight_fraction_leaf=0.0, max_features=0.4,
                  verbose=1, n_jobs=-1)
    etr_263.fit(tr_x, tr_y)
    oof_etr_263[val_idx] = etr_263.predict(X_train_263[val_idx])
    predictions_etr_263 += etr_263.predict(X_test_263) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_etr_263)))
```

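:::success

CV score: 0.48623824

:::

We now have the predictions of the five models above, together with their model settings and parameters. To combine them, we stack the five models' out-of-fold predictions column-wise. These stacked predictions become the input features of a second-level model, Kernel Ridge Regression. That model is trained with 5-fold cross-validation repeated twice. The same procedure is applied to each feature-engineering variant, giving one stacked result per feature set.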

```python
# Stack the five models' out-of-fold predictions column-wise:
# np.vstack gives shape (5, n_samples); .transpose() turns it into (n_samples, 5)
train_stack2 = np.vstack([oof_lgb_263, oof_xgb_263, oof_gbr_263,
                          oof_rfr_263, oof_etr_263]).transpose()
test_stack2 = np.vstack([predictions_lgb_263, predictions_xgb_263, predictions_gbr_263,
                         predictions_rfr_263, predictions_etr_263]).transpose()

# Cross-validation: 5 folds, repeated 2 times
folds_stack = RepeatedKFold(n_splits=5, n_repeats=2, random_state=7)
oof_stack2 = np.zeros(train_stack2.shape[0])
predictions_lr2 = np.zeros(test_stack2.shape[0])

for fold_, (trn_idx, val_idx) in enumerate(folds_stack.split(train_stack2, target)):
    print("fold {}".format(fold_))
    trn_data, trn_y = train_stack2[trn_idx], target.iloc[trn_idx].values
    val_data, val_y = train_stack2[val_idx], target.iloc[val_idx].values
    # Kernel Ridge Regression (defaults: alpha=1.0, linear kernel)
    lr2 = kr()
    lr2.fit(trn_data, trn_y)
    # Each row is validated once per repeat, so the second repeat overwrites the first
    oof_stack2[val_idx] = lr2.predict(val_data)
    # get_n_splits() = n_splits * n_repeats = 10 fitted models in total
    predictions_lr2 += lr2.predict(test_stack2) / folds_stack.get_n_splits()

mean_squared_error(target.values, oof_stack2)
```

:::success

0.44746512119368353

:::
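The stacking step can be reproduced end to end on synthetic data. The sketch below illustrates the idea under simplifying assumptions and is not the original pipeline:

- Two scikit-learn regressors stand in for the five base models.
- Their out-of-fold predictions form the level-2 features.
- `KernelRidge` with its defaults (`alpha=1.0`, linear kernel) is fitted with `RepeatedKFold(n_splits=5, n_repeats=2)`.

The original loop overwrites the first repeat's out-of-fold predictions with the second's. This version averages the two repeats instead.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.kernel_ridge import KernelRidge
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, RepeatedKFold

# Synthetic data (not the CGSS survey)
rng = np.random.default_rng(7)
X = rng.normal(size=(300, 8))
y = X[:, 0] + 0.5 * X[:, 1] ** 2 + rng.normal(scale=0.3, size=300)
X_test = rng.normal(size=(60, 8))

# Level 1: OOF predictions from stand-in base models (in place of lgb/xgb/gbr/rfr/etr)
base_models = [lambda: Ridge(alpha=1.0),
               lambda: RandomForestRegressor(n_estimators=50, random_state=0)]
kf = KFold(n_splits=5, shuffle=True, random_state=2019)
oof = np.zeros((len(X), len(base_models)))
test_meta = np.zeros((len(X_test), len(base_models)))
for j, make in enumerate(base_models):
    for trn, val in kf.split(X):
        m = make().fit(X[trn], y[trn])
        oof[val, j] = m.predict(X[val])
        test_meta[:, j] += m.predict(X_test) / kf.get_n_splits()

# Level 2: kernel ridge on the stacked predictions, 5-fold CV repeated twice
rkf = RepeatedKFold(n_splits=5, n_repeats=2, random_state=7)
oof_stack = np.zeros(len(X))
pred_stack = np.zeros(len(X_test))
for trn, val in rkf.split(oof):
    meta = KernelRidge().fit(oof[trn], y[trn])                # alpha=1.0, linear kernel
    oof_stack[val] += meta.predict(oof[val]) / rkf.n_repeats  # average the two repeats
    pred_stack += meta.predict(test_meta) / rkf.get_n_splits()

for j in range(oof.shape[1]):
    print(f"base model {j} OOF MSE: {mean_squared_error(y, oof[:, j]):.4f}")
print(f"stacked OOF MSE: {mean_squared_error(y, oof_stack):.4f}")
```

With a linear kernel, `KernelRidge` amounts to ridge regression without an intercept term. The level-2 model therefore learns a weighted blend of the base-model predictions.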