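- 1. Introduction

- 2. The basic idea of Boosting

- 3. The Adaboost algorithm

- Data preprocessing

- Consider only class 2 and class 3 wines, dropping class 1

- Encode the class labels as binary values:

- Split into training and test sets at 8:2

- 4. Forward stagewise algorithm

- 5. Gradient boosting decision trees (GBDT)

- 6. The XGBoost algorithm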

- 6.1 Exact greedy split algorithm

- 6.2 Histogram-based approximate algorithm

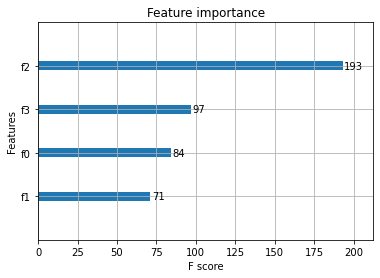

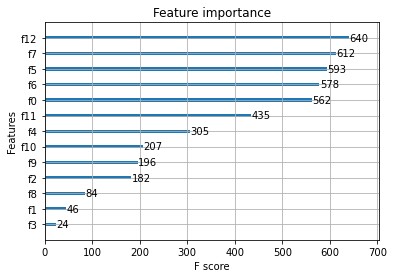

- 2. XGBoost case study

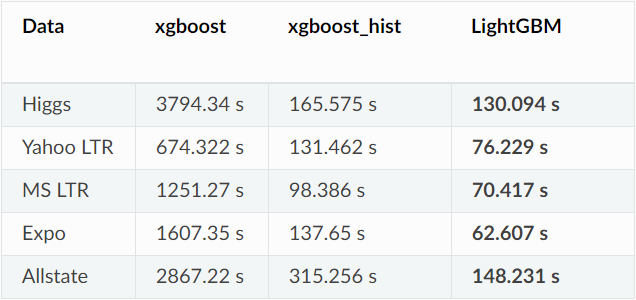

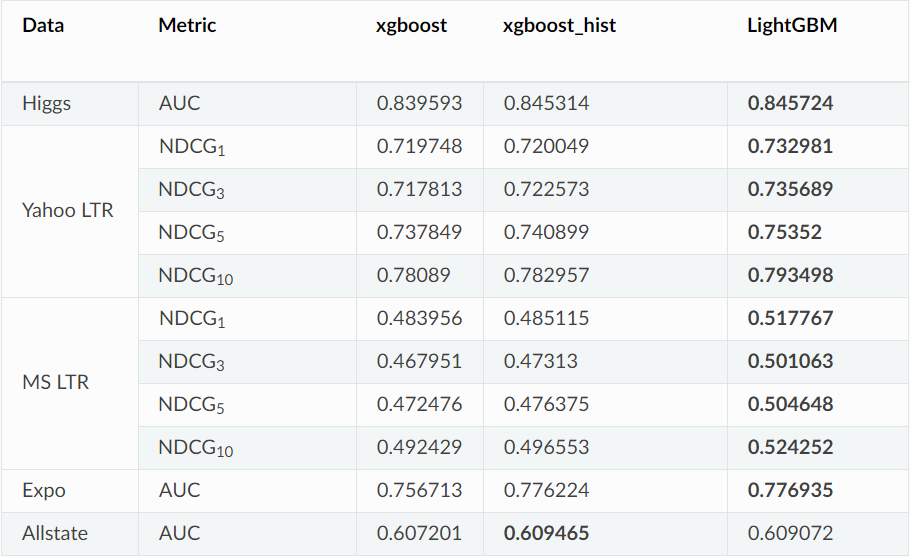

- 3. The LightGBM algorithm

- 4. Conclusion

- 5. Chapter exercises

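1. Introduction

In the previous chapters we explored a series of simple, practical regression and classification models, and we saw how the Bagging idea from the ensemble learning family can be used to improve the final model.

The essence of Bagging is this: draw subsets from the full dataset by bootstrap sampling, fit the same type of base model to each subset, and then combine the fitted models by voting to obtain the final prediction.

We also know from the earlier discussion that Bagging reduces prediction error mainly by reducing variance. Boosting, the subject of this chapter, is a very different idea: it learns repeatedly from the same dataset to obtain a sequence of simple models, and then combines these models into a single learner with strong predictive performance.

Boosting therefore improves the final prediction mainly by successively reducing bias, which is fundamentally different from Bagging. Within the broad family of Boosting methods, we focus on two common approaches, Adaptive Boosting and Gradient Boosting, together with their variants XGBoost, LightGBM and CatBoost.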

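2. The basic idea of Boosting

Boosting is a machine learning technique that can be used to reduce bias in supervised learning. It addresses a question posed by Michael Kearns: can a set of "weak learners" be combined into a "strong learner"? A weak learner usually means a classifier whose results are only slightly better than random guessing; a strong learner is a classifier whose results are very close to the truth. In the probably approximately correct (PAC) learning framework:

- Weak learning: a learning algorithm whose error rate is below 1/2, i.e. whose accuracy is only slightly better than random guessing.

- Strong learning: a learning algorithm that achieves high accuracy and runs in polynomial time.

Within the PAC framework, strong learnability and weak learnability are equivalent.

The question then becomes: if a weak learning algorithm has been found, can it be boosted into a strong learning algorithm? This matters because weak learning algorithms are much easier to find than strong ones. A boosting method starts from a weak learning algorithm, learns repeatedly to obtain a sequence of weak classifiers (also called base classifiers), and then combines these weak classifiers in some way to form a strong classifier.

Most Boosting methods work by changing the probability distribution over the training data (the weights of the individual training samples) and calling the weak learning algorithm on each reweighted dataset to learn a sequence of weak classifiers.

A Boosting method has to answer two questions:

- How should the probability distribution over the data be changed in each round?

- How should the weak classifiers be combined?

Different Boosting algorithms answer these two questions differently. Next we introduce the most classic Boosting algorithm, Adaboost. We need to understand how Adaboost handles these two questions and why it handles them that way.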

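3. The Adaboost algorithm

3.1 Basic principle of Adaboost

Adaboost answers the two questions above as follows:

- Increase the weights of the samples misclassified by the previous round's classifier and decrease the weights of the samples it classified correctly. The samples that were not classified correctly in the previous round then receive more attention in the next round of training because of their larger weights.

- Combine the weak classifiers by weighted majority vote. Specifically, give larger weights to weak classifiers with low classification error rates, because they do the classification job better, and smaller weights to weak classifiers with high error rates, so that they play a smaller role in the vote.

We now describe the Adaboost algorithm in detail (following Li Hang's *Statistical Learning Methods*).

Suppose we are given a binary classification training set $T=\left\{\left(x_{1}, y_{1}\right),\left(x_{2}, y_{2}\right), \cdots,\left(x_{N}, y_{N}\right)\right\}$, where each sample consists of features and a class label, with features $x_{i} \in \mathcal{X} \subseteq \mathbf{R}^{n}$ and labels $y_{i} \in \mathcal{Y}=\{-1,+1\}$. Here $\mathcal{X}$ is the feature space and $\mathcal{Y}$ is the set of classes. The output is the final classifier $G(x)$. The Adaboost algorithm is as follows:

(1) Initialize the weight distribution of the training data:

$$D_{1}=\left(w_{11}, \cdots, w_{1 i}, \cdots, w_{1 N}\right), \quad w_{1 i}=\frac{1}{N}, \quad i=1,2, \cdots, N$$

(2) For m = 1, 2, …, M:

- Learn from the training set weighted by $D_m$ to obtain the base classifier $G_{m}(x): \mathcal{X} \rightarrow\{-1,+1\}$

- Compute the classification error rate of $G_m(x)$ on the training set: $e_{m}=\sum_{i=1}^{N} P\left(G_{m}\left(x_{i}\right) \neq y_{i}\right)=\sum_{i=1}^{N} w_{m i} I\left(G_{m}\left(x_{i}\right) \neq y_{i}\right)$

- Compute the coefficient of $G_m(x)$: $\alpha_{m}=\frac{1}{2} \log \frac{1-e_{m}}{e_{m}}$, where log is the natural logarithm ln

- Update the weight distribution of the training set:

$$D_{m+1}=\left(w_{m+1,1}, \cdots, w_{m+1, i}, \cdots, w_{m+1, N}\right), \quad w_{m+1, i}=\frac{w_{m i}}{Z_{m}} \exp \left(-\alpha_{m} y_{i} G_{m}\left(x_{i}\right)\right), \quad i=1,2, \cdots, N$$

Here $Z_m$ is the normalization factor that makes $D_{m+1}$ a probability distribution: $Z_{m}=\sum_{i=1}^{N} w_{m i} \exp \left(-\alpha_{m} y_{i} G_{m}\left(x_{i}\right)\right)$

(3) Build the linear combination of base classifiers $f(x)=\sum_{m=1}^{M} \alpha_{m} G_{m}(x)$ and obtain the final classifier

$$G(x)=\operatorname{sign}(f(x))=\operatorname{sign}\left(\sum_{m=1}^{M} \alpha_{m} G_{m}(x)\right)$$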
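Steps (1) to (3) can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: it assumes scikit-learn decision stumps as the base classifier, the function names `adaboost_fit` and `adaboost_predict` are hypothetical, and the toy data are made up for the demonstration.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def adaboost_fit(X, y, n_rounds=20):
    """Train Adaboost on labels y in {-1, +1}; returns (stumps, alphas)."""
    n_samples = len(y)
    weights = np.full(n_samples, 1.0 / n_samples)  # step (1): uniform D_1
    stumps, alphas = [], []
    for _ in range(n_rounds):
        # Step (2): fit a base classifier on the weighted training set.
        stump = DecisionTreeClassifier(max_depth=1)
        stump.fit(X, y, sample_weight=weights)
        pred = stump.predict(X)
        # Weighted error rate e_m: total weight of misclassified samples.
        err = np.sum(weights[pred != y])
        if err >= 0.5:  # no better than chance: stop adding classifiers
            break
        err = max(err, 1e-10)  # guard against log(0) for a perfect stump
        # Coefficient alpha_m = 0.5 * ln((1 - e_m) / e_m).
        alpha = 0.5 * np.log((1.0 - err) / err)
        # Reweight the samples and normalize (division by Z_m).
        weights = weights * np.exp(-alpha * y * pred)
        weights /= weights.sum()
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, np.array(alphas)


def adaboost_predict(stumps, alphas, X):
    """Step (3): sign of the weighted vote f(x) = sum_m alpha_m G_m(x)."""
    votes = sum(a * s.predict(X) for a, s in zip(alphas, stumps))
    return np.sign(votes)


# Toy data (an assumption for illustration): the positive class is an interval.
rng = np.random.default_rng(0)
X_demo = rng.uniform(0, 10, size=(200, 1))
y_demo = np.where((X_demo[:, 0] > 3) & (X_demo[:, 0] < 7), 1, -1)

stumps, alphas = adaboost_fit(X_demo, y_demo, n_rounds=50)
train_acc = np.mean(adaboost_predict(stumps, alphas, X_demo) == y_demo)
print(f"rounds used: {len(stumps)}, training accuracy: {train_acc:.3f}")
```

No single stump can separate an interval from its complement, so the example shows how the weighted vote of several stumps can do what one stump cannot.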

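Some remarks on the Adaboost algorithm:

In step (1), the weight distribution of the training data is assumed to be uniform so that, with no prior information available in the first round, every sample plays the same role in learning the base classifier.

In step (2), the classification error rate of the base classifier produced in each iteration on the weighted training set, $e_{m}=\sum_{i=1}^{N} w_{m i} I\left(G_{m}\left(x_{i}\right) \neq y_{i}\right)=\sum_{G_{m}\left(x_{i}\right) \neq y_{i}} w_{m i}$, is the total weight of the samples misclassified by $G_m(x)$. This shows directly that the weight distribution $D_m$ and the classification error rate $e_m$ of $G_m(x)$ are tied together. Also in step (2), the coefficient of the base classifier $G_m(x)$ is $\alpha_{m}=\frac{1}{2} \log \frac{1-e_{m}}{e_{m}}$, which expresses the importance of $G_m(x)$ in the final classifier. The value of $\alpha_m$ is determined by the error rate of $G_m(x)$: when $e_{m} \leqslant \frac{1}{2}$ we have $\alpha_{m} \geqslant 0$, and $\alpha_m$ increases as $e_m$ decreases, so base classifiers with smaller error rates play a larger role in the final classifier.

Most importantly, the sample weight update in step (2) can be written as:

$$w_{m+1, i}=\left\{\begin{array}{ll}
\frac{w_{m i}}{Z_{m}} \mathrm{e}^{-\alpha_{m}}, & G_{m}\left(x_{i}\right)=y_{i} \\
\frac{w_{m i}}{Z_{m}} \mathrm{e}^{\alpha_{m}}, & G_{m}\left(x_{i}\right) \neq y_{i}
\end{array}\right.$$

This shows that the weights of samples misclassified by the base classifier are enlarged and the weights of correctly classified samples are reduced; relative to each other they differ by a factor of $\mathrm{e}^{2 \alpha_{m}}=\frac{1-e_{m}}{e_{m}}$. In step (3), the linear combination $f(x)=\sum_{m=1}^{M} \alpha_{m} G_{m}(x)$ implements a weighted vote of the M base classifiers, and the coefficient $\alpha_m$ indicates the importance of the base classifier $G_m(x)$. Note that the $\alpha_m$ do not sum to 1. The sign of $f(x)$ determines which class the sample x belongs to.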
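To make the relationship between the error rate and the classifier coefficient concrete, the short sketch below tabulates $\alpha_m = \frac{1}{2}\ln\frac{1-e_m}{e_m}$ and the relative reweighting factor $e^{2\alpha_m}$ between misclassified and correctly classified samples for a few error rates. The helper name `alpha_from_error` and the chosen error rates are illustrative assumptions.

```python
import numpy as np


def alpha_from_error(err):
    """Coefficient of a base classifier with weighted error rate err."""
    return 0.5 * np.log((1.0 - err) / err)


for err in [0.5, 0.4, 0.3, 0.1, 0.01]:
    alpha = alpha_from_error(err)
    # Misclassified weights grow by e^{alpha}, correct ones shrink by
    # e^{-alpha}, so their ratio changes by e^{2 alpha} = (1 - err) / err.
    ratio = np.exp(2 * alpha)
    print(f"e_m = {err:.2f}  alpha_m = {alpha:.4f}  reweighting ratio = {ratio:.2f}")
```

A classifier at chance level ($e_m = 0.5$) gets a coefficient of zero and leaves the weights unchanged, while a nearly perfect classifier gets a large coefficient and shifts almost all weight onto its few mistakes.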

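Adaboost by hand

Below we use a small dataset to work through the Adaboost algorithm by hand (example source: http://www.csie.edu.tw).

The training data are shown in the table below. Assume each base classifier is a split of the form $x<v$ or $x>v$, where the threshold $v$ is chosen so that the base classifier has the lowest classification error rate $e_m$ on the training set.

| No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| y | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |

Solution:

Initialize the sample weight distribution: $D_{1}=\left(w_{11}, w_{12}, \cdots, w_{110}\right)$, $w_{1 i}=0.1$, $i=1,2, \cdots, 10$

For m=1:

- On the training set with weight distribution $D_1$, scan every candidate split point and compute the classification error rate $e_1$. The threshold v=2.5 gives the lowest error rate, so the base classifier is $G_{1}(x)=\left\{\begin{array}{ll} 1, & x<2.5 \\ -1, & x>2.5 \end{array}\right.$

- The error rate of $G_1(x)$ on the training set is $e_{1}=P\left(G_{1}\left(x_{i}\right) \neq y_{i}\right)=0.3$

- Compute the coefficient of $G_1(x)$: $\alpha_{1}=\frac{1}{2} \log \frac{1-e_{1}}{e_{1}}=0.4236$

- Update the weight distribution of the training data: $w_{2 i}=\frac{w_{1 i}}{Z_{1}} \exp \left(-\alpha_{1} y_{i} G_{1}\left(x_{i}\right)\right)$, which gives $D_{2}=(0.07143,0.07143,0.07143,0.07143,0.07143,0.07143,0.16667,0.16667,0.16667,0.07143)$ and $f_{1}(x)=0.4236\, G_{1}(x)$

For m=2:

- On the training set with weight distribution $D_2$, scan every candidate split point and compute the classification error rate $e_2$. The threshold v=8.5 gives the lowest error rate, so the base classifier is $G_{2}(x)=\left\{\begin{array}{ll} 1, & x<8.5 \\ -1, & x>8.5 \end{array}\right.$

- The error rate of $G_2(x)$ on the training set is $e_{2}=0.2143$

- Compute the coefficient of $G_2(x)$: $\alpha_{2}=0.6496$

- Update the weight distribution of the training data: $D_{3}=(0.0455,0.0455,0.0455,0.1667,0.1667,0.1667,0.1060,0.1060,0.1060,0.0455)$ and $f_{2}(x)=0.4236\, G_{1}(x)+0.6496\, G_{2}(x)$

For m=3:

- On the training set with weight distribution $D_3$, scan every candidate split point and compute the classification error rate $e_3$. The threshold v=5.5 gives the lowest error rate, so the base classifier is $G_{3}(x)=\left\{\begin{array}{ll} 1, & x>5.5 \\ -1, & x<5.5 \end{array}\right.$

- The error rate of $G_3(x)$ on the training set is $e_{3}=0.1818$

- Compute the coefficient of $G_3(x)$: $\alpha_{3}=0.7520$

- Update the weight distribution of the training data: $D_{4}=(0.125,0.125,0.125,0.102,0.102,0.102,0.065,0.065,0.065,0.125)$

We therefore obtain $f_{3}(x)=0.4236\, G_{1}(x)+0.6496\, G_{2}(x)+0.7520\, G_{3}(x)$, and the classifier $G(x)=\operatorname{sign}\left[f_{3}(x)\right]$ misclassifies 0 points on the training set.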
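The hand calculation can be checked with a short script. The sketch below assumes the ten-point dataset of Example 8.1 in Li Hang's *Statistical Learning Methods* (x = 0, …, 9), which is consistent with the thresholds 2.5, 8.5 and 5.5 quoted above; the helper `best_stump` is a hypothetical name, and ties between equally good thresholds are broken in favor of the smaller one.

```python
import numpy as np

# Assumed training data (Example 8.1 in Li Hang's book).
x = np.arange(10)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])


def best_stump(x, y, w):
    """Scan thresholds 0.5, 1.5, ..., 8.5 and both orientations."""
    best = None
    for v in np.arange(0.5, 9.5, 1.0):
        for sign in (1, -1):
            # sign = +1 predicts +1 for x < v; sign = -1 is the mirror image.
            pred = np.where(x < v, sign, -sign)
            err = w[pred != y].sum()
            if best is None or err < best[0] - 1e-12:
                best = (err, v, sign)
    return best


w = np.full(len(x), 0.1)   # D_1
f = np.zeros(len(x))       # running weighted vote f_m(x_i)
history = []
for m in range(1, 4):
    err, v, sign = best_stump(x, y, w)
    alpha = 0.5 * np.log((1 - err) / err)
    pred = np.where(x < v, sign, -sign)
    w = w * np.exp(-alpha * y * pred)
    w /= w.sum()           # normalize by Z_m to get D_{m+1}
    f += alpha * pred
    n_wrong = int(np.sum(np.sign(f) != y))
    history.append((v, err, alpha, n_wrong))
    print(f"m={m}: v={v}, e_m={err:.4f}, alpha_m={alpha:.4f}, "
          f"misclassified by sign(f_m)={n_wrong}")
    print("   next weights:", np.round(w, 4))
```

Under these assumptions the script selects the thresholds 2.5, 8.5 and 5.5 in turn, and the combined classifier makes no training errors after the third round.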

3.2 Adaboost实战

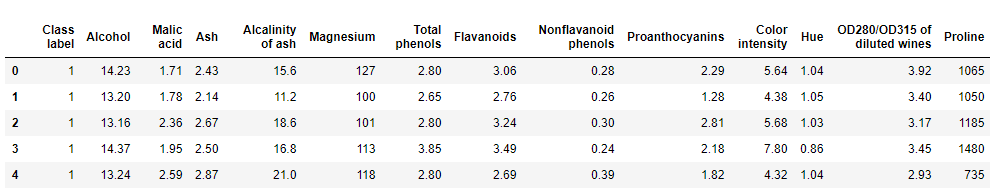

本次案例我们使用一份UCI的机器学习库里的开源数据集:葡萄酒数据集,该数据集可以在 ( https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data )上获得。该数据集包含了178个样本和13个特征,从不同的角度对不同的化学特性进行描述,我们的任务是根据这些数据预测红酒属于哪一个类别。(案例来源《python机器学习(第二版》)

# 引入数据科学相关工具包:import numpy as npimport pandas as pdimport matplotlib.pyplot as pltplt.style.use("ggplot")%matplotlib inlineimport seaborn as sns

加载训练数据:

wine = pd.read_csv("https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data",header=None)wine.columns = ['Class label', 'Alcohol', 'Malic acid', 'Ash', 'Alcalinity of ash','Magnesium', 'Total phenols','Flavanoids', 'Nonflavanoid phenols','Proanthocyanins','Color intensity', 'Hue','OD280/OD315 of diluted wines','Proline']

数据查看:

print("Class labels",np.unique(wine["Class label"]))# Class labels [1 2 3]wine.head()

下面对数据做简单解读:

- Class label:分类标签

- Alcohol:酒精

- Malic acid:苹果酸

- Ash:灰

- Alcalinity of ash:灰的碱度

- Magnesium:镁

- Total phenols:总酚

- Flavanoids:黄酮类化合物

- Nonflavanoid phenols:非黄烷类酚类

- Proanthocyanins:原花青素

- Color intensity:色彩强度

- Hue:色调

- OD280/OD315 of diluted wines:稀释酒OD280 OD350

- Proline:脯氨酸

```python

数据预处理

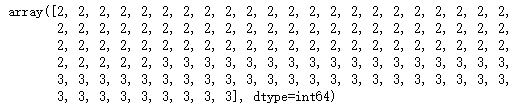

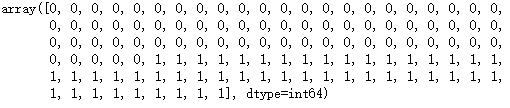

仅仅考虑2,3类葡萄酒,去除1类

wine = wine[wine[‘Class label’] != 1] y = wine[‘Class label’].values X = wine[[‘Alcohol’,’OD280/OD315 of diluted wines’]].values

将分类标签变成二进制编码:

from sklearn.preprocessing import LabelEncoder le = LabelEncoder() y = le.fit_transform(y)

按8:2分割训练集和测试集

from sklearn.model_selection import train_test_split X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.2,random_state=1,stratify=y) # stratify参数代表了按照y的类别等比例抽样

> wine['Class label'].values> > 处理之后的y> ```python# 使用单一决策树建模from sklearn.tree import DecisionTreeClassifiertree = DecisionTreeClassifier(criterion='entropy',random_state=1,max_depth=1)from sklearn.metrics import accuracy_scoretree = tree.fit(X_train,y_train)y_train_pred = tree.predict(X_train)y_test_pred = tree.predict(X_test)tree_train = accuracy_score(y_train,y_train_pred)tree_test = accuracy_score(y_test,y_test_pred)print('Decision tree train/test accuracies %.3f/%.3f' % (tree_train,tree_test))# Decision tree train/test accuracies 0.916/0.875

# 使用sklearn实现Adaboost(基分类器为决策树)'''AdaBoostClassifier相关参数:base_estimator:基本分类器,默认为DecisionTreeClassifier(max_depth=1)n_estimators:终止迭代的次数learning_rate:学习率algorithm:训练的相关算法,{'SAMME','SAMME.R'},默认='SAMME.R'random_state:随机种子'''from sklearn.ensemble import AdaBoostClassifierada = AdaBoostClassifier(base_estimator=tree,n_estimators=500,learning_rate=0.1,random_state=1)ada = ada.fit(X_train,y_train)y_train_pred = ada.predict(X_train)y_test_pred = ada.predict(X_test)ada_train = accuracy_score(y_train,y_train_pred)ada_test = accuracy_score(y_test,y_test_pred)print('Adaboost train/test accuracies %.3f/%.3f' % (ada_train,ada_test))# Adaboost train/test accuracies 1.000/0.917

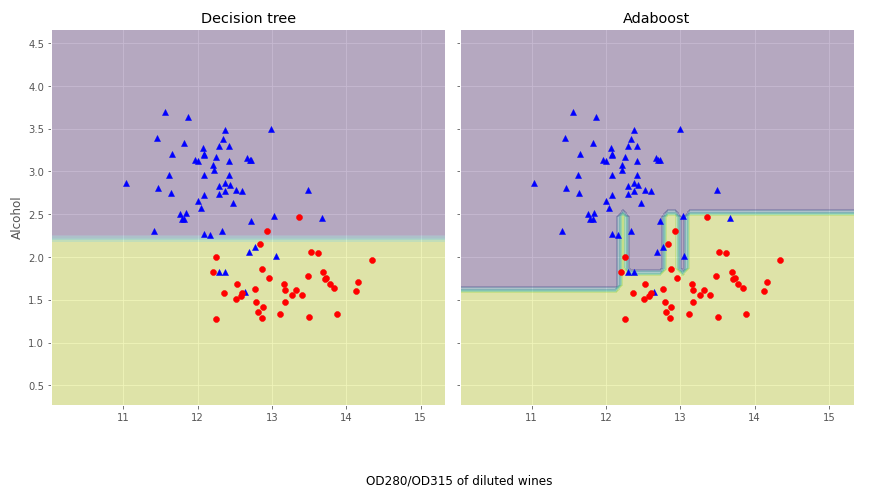

结果分析:单层决策树似乎对训练数据欠拟合,而Adaboost模型正确地预测了训练数据的所有分类标签,而且与单层决策树相比,Adaboost的测试性能也略有提高。然而,为什么模型在训练集和测试集的性能相差这么大呢?我们使用图像来简单说明下这个道理!

# 画出单层决策树与Adaboost的决策边界:x_min = X_train[:, 0].min() - 1x_max = X_train[:, 0].max() + 1y_min = X_train[:, 1].min() - 1y_max = X_train[:, 1].max() + 1xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),np.arange(y_min, y_max, 0.1))f, axarr = plt.subplots(nrows=1, ncols=2,sharex='col',sharey='row',figsize=(12, 6))for idx, clf, tt in zip([0, 1],[tree, ada],['Decision tree', 'Adaboost']):clf.fit(X_train, y_train)Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])Z = Z.reshape(xx.shape)axarr[idx].contourf(xx, yy, Z, alpha=0.3)axarr[idx].scatter(X_train[y_train==0, 0],X_train[y_train==0, 1],c='blue', marker='^')axarr[idx].scatter(X_train[y_train==1, 0],X_train[y_train==1, 1],c='red', marker='o')axarr[idx].set_title(tt)axarr[0].set_ylabel('Alcohol', fontsize=12)plt.tight_layout()plt.text(0, -0.2,s='OD280/OD315 of diluted wines',ha='center',va='center',fontsize=12,transform=axarr[1].transAxes)plt.show()

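The decision boundary plots above show that the Adaboost model's decision boundary is much more complex than that of the decision stump. In other words, Adaboost tries to reduce the total error by increasing model complexity to lower the bias, but in doing so it introduces variance and may overfit, which is why there is a large gap between training and test performance. This briefly answers the question raised above. It is worth noting that, compared with a single classifier, Boosting models such as Adaboost increase the computational cost, so in practice we need to consider carefully whether the relative gain in predictive performance is worth the extra computation. Moreover, the boosting rounds cannot be trained in parallel, because each iteration builds on the base classifier from the previous step.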
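One way to look at the gap between training and test performance is to trace both accuracies as base classifiers are added. The sketch below is an illustration under stated assumptions: it uses the copy of the Wine data bundled with scikit-learn (`load_wine`, whose classes are numbered 0 to 2 and whose feature names are lowercase) instead of downloading the UCI file, relies on the default decision stump as base classifier, and uses fewer boosting rounds than the case study to keep it quick. `staged_predict` yields the ensemble's predictions after each boosting round.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

data = load_wine()
names = list(data.feature_names)
cols = [names.index("alcohol"), names.index("od280/od315_of_diluted_wines")]

mask = data.target != 0            # drop the first class, as in the case study
X = data.data[mask][:, cols]
y = data.target[mask] - 1          # relabel the two remaining classes as 0 and 1

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=1, stratify=y)

# The default base classifier is a depth-1 decision tree (a stump).
ada = AdaBoostClassifier(n_estimators=200, learning_rate=0.1, random_state=1)
ada.fit(X_train, y_train)

# Accuracy of the partial ensemble after each boosting round.
train_curve = [np.mean(p == y_train) for p in ada.staged_predict(X_train)]
test_curve = [np.mean(p == y_test) for p in ada.staged_predict(X_test)]

for n in (1, 10, 50, 100, 200):
    if n <= len(train_curve):
        print(f"{n:3d} estimators: train={train_curve[n - 1]:.3f}, "
              f"test={test_curve[n - 1]:.3f}")
```

If training accuracy keeps rising while test accuracy levels off, the additional rounds are mostly fitting noise, which is the variance effect described above.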

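4. Forward stagewise algorithm

Looking back at Adaboost, we have to compute M base classifiers, together with each classifier's error rate, the sample weights, and the model weight. We can say that in each round Adaboost learns a single classifier and that classifier's parameter (its weight). Next we abstract the overall logic of Adaboost into a very important framework of ensemble learning, the forward stagewise algorithm. With this framework we can solve not only classification problems but also regression problems.

4.1 Additive model

In Adaboost, the base classifiers are combined into a complex classifier as a weighted sum: $f(x)=\sum_{m=1}^{M} \beta_{m} b\left(x ; \gamma_{m}\right)$, where $b\left(x ; \gamma_{m}\right)$ is a base classifier, $\gamma_m$ are the parameters of the base classifier, and $\beta_m$ is the weight of the base classifier. This is clearly an additive model. Given the training data and a loss function $L(y, f(x))$, learning the additive model $f(x)$ amounts to:

$$\min _{\beta_{m}, \gamma_{m}} \sum_{i=1}^{N} L\left(y_{i}, \sum_{m=1}^{M} \beta_{m} b\left(x_{i} ; \gamma_{m}\right)\right)$$

This is usually a complex optimization problem that is hard to solve with simple convex optimization techniques. The forward stagewise algorithm can be used to solve problems of this form. Its basic idea is this: because the model being learned is additive, we can work from front to back, optimizing only one basis function and its coefficient in each step and approaching the objective gradually, which reduces the complexity of the optimization. Concretely, with the previously fitted terms $f_{m-1}(x)$ held fixed, each step only needs to optimize:

$$\min _{\beta, \gamma} \sum_{i=1}^{N} L\left(y_{i}, f_{m-1}\left(x_{i}\right)+\beta b\left(x_{i} ; \gamma\right)\right)$$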

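4.2 Forward stagewise algorithm

Given a dataset $T=\left\{\left(x_{1}, y_{1}\right),\left(x_{2}, y_{2}\right), \cdots,\left(x_{N}, y_{N}\right)\right\}$ with $x_{i} \in \mathcal{X} \subseteq \mathbf{R}^{n}$ and $y_{i} \in \mathcal{Y}$, a loss function $L(y, f(x))$, and a set of basis functions $\{b(x ; \gamma)\}$, we need to output the additive model $f(x)$.

- Initialize: $f_{0}(x)=0$

- For m = 1, 2, …, M:

  - (a) Minimize the loss function: $\left(\beta_{m}, \gamma_{m}\right)=\arg \min _{\beta, \gamma} \sum_{i=1}^{N} L\left(y_{i}, f_{m-1}\left(x_{i}\right)+\beta b\left(x_{i} ; \gamma\right)\right)$, which gives the parameters $\beta_m$ and $\gamma_m$.

  - (b) Update: $f_{m}(x)=f_{m-1}(x)+\beta_{m} b\left(x ; \gamma_{m}\right)$

- Obtain the additive model: $f(x)=f_{M}(x)=\sum_{m=1}^{M} \beta_{m} b\left(x ; \gamma_{m}\right)$

In this way, the forward stagewise algorithm reduces the problem of solving for all the parameters $\beta_m$, $\gamma_m$ from m=1 to M simultaneously to solving for each pair $\beta_m$, $\gamma_m$ in turn.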
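The following sketch shows the forward stagewise procedure for the special case of squared loss, where the best coefficient for a given basis function has a closed form. It is an illustration under assumptions that are not in the text: the basis set is a small hypothetical dictionary (a constant and a few step functions), the data are synthetic, and `forward_stagewise` is a made-up name.

```python
import numpy as np


def forward_stagewise(X, y, basis_functions, n_steps=30):
    """Greedy additive fit under squared loss.

    Returns the chosen (beta_m, basis index) pairs and the training loss
    after every step.
    """
    # Evaluate every candidate basis function b(x; gamma) once: shape (N, K).
    B = np.column_stack([b(X) for b in basis_functions])
    sq_norms = np.sum(B * B, axis=0)
    sq_norms = np.where(sq_norms > 0, sq_norms, 1.0)  # avoid division by zero

    f = np.zeros(len(y))  # f_0(x) = 0
    model, losses = [], []
    for _ in range(n_steps):
        r = y - f  # squared loss depends on f_{m-1} only through the residual
        betas = (B.T @ r) / sq_norms       # best beta for each candidate gamma
        step_losses = np.sum((r[:, None] - B * betas) ** 2, axis=0)
        k = int(np.argmin(step_losses))    # step (a): best (beta_m, gamma_m)
        f = f + betas[k] * B[:, k]         # step (b): f_m = f_{m-1} + beta_m * b
        model.append((betas[k], k))
        losses.append(float(step_losses[k]))
    return model, losses


rng = np.random.default_rng(0)
X_demo = np.sort(rng.uniform(0, 1, 100))
y_demo = np.sin(2 * np.pi * X_demo) + 0.1 * rng.normal(size=100)

# Hypothetical basis set: a constant plus step functions 1[x > t].
thresholds = np.linspace(0.1, 0.9, 9)
basis = [lambda x: np.ones_like(x)] + [
    (lambda x, t=t: (x > t).astype(float)) for t in thresholds
]

model, losses = forward_stagewise(X_demo, y_demo, basis)
print(f"loss after step 1: {losses[0]:.3f}, after step {len(losses)}: {losses[-1]:.3f}")
```

Each step touches only one basis function and one coefficient, yet the training loss never increases, because choosing $\beta = 0$ is always an option.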

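4.3 The relationship between the forward stagewise algorithm and Adaboost

Since this is not our main focus, we only state the conclusion and omit the proof; for the details, see Section 8.3.2 of Li Hang's *Statistical Learning Methods*. Adaboost is a special case of the forward stagewise algorithm: it is an additive model built from base classifiers, with the exponential loss as its loss function.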

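5. Gradient boosting decision trees (GBDT)

5.1 Boosting tree algorithm based on residual learning:

So far we have only discussed classification, as in the Adaboost algorithm, and have not touched on regression. In the previous section we introduced the framework of an additive model plus the forward stagewise algorithm. Can this framework be used to solve regression problems? The answer is yes. Next we explore how to handle regression within this framework.

Before applying the framework, we first need to decide which basis function it uses. Here we use decision trees, specifically regression trees.

Chapter 2 covered the basic principles of regression trees. The most important part of a tree algorithm is finding the best split point. Classification trees judge split points by purity, using information gain (ID3), information gain ratio (C4.5) or the Gini index (CART classification trees). In a regression tree, however, the sample labels are continuous values and the candidate split points are all attainable values of all features, so entropy-like criteria are no longer suitable. Squared error is used instead, as it measures goodness of fit well.

With the basis function fixed, we need to decide the criterion for each boosting round. Recall that Adaboost uses the classification error rate both to update the sample weights and to compute each base classifier's weight. A regression problem has no classification error rate, so that approach cannot be used here and we need another way. By analogy with the classification error rate, we use each sample's residual to represent the part of the problem that the current basis function's prediction has not yet solved. This leads to the following algorithm: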

Input: the dataset $T=\left\{\left(x_{1}, y_{1}\right),\left(x_{2}, y_{2}\right), \cdots,\left(x_{N}, y_{N}\right)\right\}, x_{i} \in \mathcal{X} \subseteq \mathbf{R}^{n}, y_{i} \in \mathcal{Y} \subseteq \mathbf{R}$. Output: the final boosting tree $f_{M}(x)$.

- Initialize $f_0(x) = 0$

- For m = 1, 2, …, M:

  - Compute the residual of each sample: $r_{m i}=y_{i}-f_{m-1}\left(x_{i}\right), \quad i=1,2, \cdots, N$

  - Fit the residuals $r_{mi}$ to learn a regression tree, obtaining $T\left(x ; \Theta_{m}\right)$

  - Update $f_{m}(x)=f_{m-1}(x)+T\left(x ; \Theta_{m}\right)$

- Obtain the final boosting tree for the regression problem: $f_{M}(x)=\sum_{m=1}^{M} T\left(x ; \Theta_{m}\right)$
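The residual-fitting loop above can be sketched with scikit-learn regression trees. This is an illustrative sketch, not the chapter's own code: the function names are hypothetical, the trees are stumps (`max_depth=1`), and the ten data points are toy values assumed for the demonstration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosting_tree(X, y, n_trees=6, max_depth=1):
    """Boosting tree for regression: every tree fits the current residuals."""
    f = np.zeros(len(y))              # f_0(x) = 0
    trees, losses = [], []
    for _ in range(n_trees):
        residual = y - f              # r_mi = y_i - f_{m-1}(x_i)
        tree = DecisionTreeRegressor(max_depth=max_depth)
        tree.fit(X, residual)         # learn T(x; Theta_m) on the residuals
        f = f + tree.predict(X)       # f_m = f_{m-1} + T(x; Theta_m)
        trees.append(tree)
        losses.append(float(np.sum((y - f) ** 2)))
    return trees, losses


def predict_boosting_tree(trees, X):
    """f_M(x) is the plain sum of all fitted trees."""
    return np.sum([tree.predict(X) for tree in trees], axis=0)


# Toy data, assumed for illustration only.
X_demo = np.arange(1, 11, dtype=float).reshape(-1, 1)
y_demo = np.array([5.56, 5.70, 5.91, 6.40, 6.80, 7.05, 8.90, 8.70, 9.00, 9.05])

trees, losses = fit_boosting_tree(X_demo, y_demo)
for m, loss in enumerate(losses, start=1):
    print(f"after tree {m}: squared-error loss = {loss:.4f}")
```

Because each stump is fitted to whatever the previous trees left unexplained, the squared-error loss on the training set can only stay the same or shrink from one round to the next.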

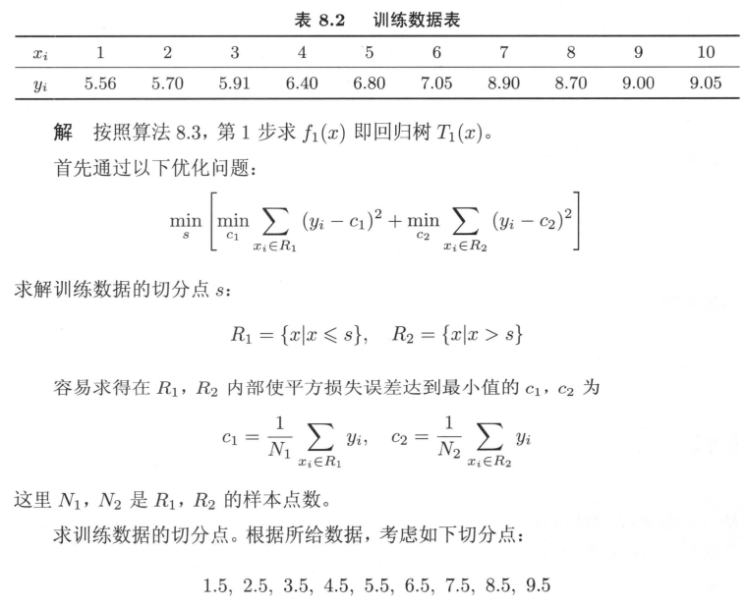

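Below we apply this algorithm to a concrete case (case source: Li Hang, *Statistical Learning Methods*).

The training data are shown in the table below. We learn a boosting tree model for this regression problem, using only stumps as basis functions.

At this point we have built an algorithm that solves regression problems within the framework of an additive model plus the forward stagewise algorithm; it is called the boosting tree algorithm. Is there still room to improve it?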

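5.2 Gradient boosting decision tree algorithm (GBDT)

Boosting trees learn by means of an additive model and the forward stagewise algorithm. When the loss function is the squared loss or the exponential loss, each optimization step is quite simple; these are the boosting tree algorithm and the Adaboost algorithm discussed above. For a general loss function, however, each step is often not so easy to optimize. To address this we need to analyze the root of the problem, namely what makes learning difficult under a general loss function. Compare the following loss functions: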

| Setting | Loss function | $-\partial L\left(y_{i}, f\left(x_{i}\right)\right) / \partial f\left(x_{i}\right)$ |
|---|---|---|
| Regression | $\frac{1}{2}\left[y_{i}-f\left(x_{i}\right)\right]^{2}$ | $y_{i}-f\left(x_{i}\right)$ |
| Regression | $\left\lvert y_{i}-f\left(x_{i}\right)\right\rvert$ | $\operatorname{sign}\left[y_{i}-f\left(x_{i}\right)\right]$ |
| Regression | Huber | $y_{i}-f\left(x_{i}\right)$ for $\left\lvert y_{i}-f\left(x_{i}\right)\right\rvert \leq \delta_{m}$; $\delta_{m} \operatorname{sign}\left[y_{i}-f\left(x_{i}\right)\right]$ for $\left\lvert y_{i}-f\left(x_{i}\right)\right\rvert>\delta_{m}$, where $\delta_{m}=\alpha$th-quantile$\left\{\left\lvert y_{i}-f\left(x_{i}\right)\right\rvert\right\}$ |
| Classification | Deviance | $k$th component: $I\left(y_{i}=\mathcal{G}_{k}\right)-p_{k}\left(x_{i}\right)$ |

Consider the Huber loss function:

$$L_{\delta}(y, f(x))=\left\{\begin{array}{ll}
\frac{1}{2}(y-f(x))^{2} & \text { for }|y-f(x)| \leq \delta \\
\delta|y-f(x)|-\frac{1}{2} \delta^{2} & \text { otherwise }
\end{array}\right.$$

To address the problem above, Friedman proposed the gradient boosting algorithm. It is an approximation based on the method of steepest descent: the value of the negative gradient of the loss function at the current model, $-\left[\frac{\partial L\left(y, f\left(x_{i}\right)\right)}{\partial f\left(x_{i}\right)}\right]_{f(x)=f_{m-1}(x)}$, is used as an approximation of the residual in the boosting tree algorithm for regression, and a regression tree is fitted to it. Rather than saying that the negative gradient approximates the residual, it is more accurate to say that the residual is a special case of the negative gradient.
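The negative gradients of the three regression losses in the comparison can be written down directly and checked numerically. The sketch below is an illustration under assumptions: the function names are hypothetical, `delta` is passed as a fixed number rather than computed as a quantile, and the check uses a central finite difference on the Huber loss.

```python
import numpy as np


def neg_grad_squared(y, f):
    """Squared loss 0.5 * (y - f)^2: the negative gradient is the residual."""
    return y - f


def neg_grad_absolute(y, f):
    """Absolute loss |y - f|: the negative gradient is the residual's sign."""
    return np.sign(y - f)


def huber_loss(y, f, delta):
    r = np.abs(y - f)
    return np.where(r <= delta, 0.5 * r ** 2, delta * r - 0.5 * delta ** 2)


def neg_grad_huber(y, f, delta):
    """Residual inside the band, clipped to +/- delta outside it."""
    r = y - f
    return np.where(np.abs(r) <= delta, r, delta * np.sign(r))


y = np.array([1.0, 2.0, 3.0, 10.0])
f = np.array([1.5, 1.2, 3.2, 4.0])
delta = 1.0

# Numerical check: -dL/df via a central finite difference.
h = 1e-6
numeric = -(huber_loss(y, f + h, delta) - huber_loss(y, f - h, delta)) / (2 * h)

print("residuals          :", neg_grad_squared(y, f))
print("sign of residuals  :", neg_grad_absolute(y, f))
print("Huber neg. gradient:", neg_grad_huber(y, f, delta))
print("finite difference  :", np.round(numeric, 6))
```

For the squared loss the negative gradient is exactly the residual, which is the sense in which residual fitting is a special case of gradient boosting; the Huber version behaves the same way for small residuals and caps the influence of the outlier in the last sample.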

5.2.1 梯度提升法的算法流程

以下开始具体介绍梯度提升算法:

输入训练数据集%2C%5Cleft(x%7B2%7D%2C%20y%7B2%7D%5Cright)%2C%20%5Ccdots%2C%5Cleft(x%7BN%7D%2C%20y%7BN%7D%5Cright)%5Cright%5C%7D%2C%20x%7Bi%7D%20%5Cin%20%5Cmathcal%7BX%7D%20%5Csubseteq%20%5Cmathbf%7BR%7D%5E%7Bn%7D%2C%20y%7Bi%7D%20%5Cin%20%5Cmathcal%7BY%7D%20%5Csubseteq%20%5Cmathbf%7BR%7D#card=math&code=T%3D%5Cleft%5C%7B%5Cleft%28x%7B1%7D%2C%20y%7B1%7D%5Cright%29%2C%5Cleft%28x%7B2%7D%2C%20y%7B2%7D%5Cright%29%2C%20%5Ccdots%2C%5Cleft%28x%7BN%7D%2C%20y%7BN%7D%5Cright%29%5Cright%5C%7D%2C%20x%7Bi%7D%20%5Cin%20%5Cmathcal%7BX%7D%20%5Csubseteq%20%5Cmathbf%7BR%7D%5E%7Bn%7D%2C%20y%7Bi%7D%20%5Cin%20%5Cmathcal%7BY%7D%20%5Csubseteq%20%5Cmathbf%7BR%7D&id=Y6uUu)和损失函数

)#card=math&code=L%28y%2C%20f%28x%29%29&id=WDxnr),输出回归树

#card=math&code=%5Chat%7Bf%7D%28x%29&id=zOaCp)

- 初始化

%3D%5Carg%20%5Cmin%20%7Bc%7D%20%5Csum%7Bi%3D1%7D%5E%7BN%7D%20L%5Cleft(y%7Bi%7D%2C%20c%5Cright)#card=math&code=f%7B0%7D%28x%29%3D%5Carg%20%5Cmin%20%7Bc%7D%20%5Csum%7Bi%3D1%7D%5E%7BN%7D%20L%5Cleft%28y_%7Bi%7D%2C%20c%5Cright%29&id=PPKhN)

对于m=1,2,…,M:

- 对每个样本i = 1,2,…,N计算负梯度,即残差:

%5Cright)%7D%7B%5Cpartial%20f%5Cleft(x%7Bi%7D%5Cright)%7D%5Cright%5D%7Bf(x)%3Df%7Bm-1%7D(x)%7D%3C%2Ftitle%3E%0A%3Cdefs%20aria-hidden%3D%22true%22%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-72%22%20d%3D%22M21%20287Q22%20290%2023%20295T28%20317T38%20348T53%20381T73%20411T99%20433T132%20442Q161%20442%20183%20430T214%20408T225%20388Q227%20382%20228%20382T236%20389Q284%20441%20347%20441H350Q398%20441%20422%20400Q430%20381%20430%20363Q430%20333%20417%20315T391%20292T366%20288Q346%20288%20334%20299T322%20328Q322%20376%20378%20392Q356%20405%20342%20405Q286%20405%20239%20331Q229%20315%20224%20298T190%20165Q156%2025%20151%2016Q138%20-11%20108%20-11Q95%20-11%2087%20-5T76%207T74%2017Q74%2030%20114%20189T154%20366Q154%20405%20128%20405Q107%20405%2092%20377T68%20316T57%20280Q55%20278%2041%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-6D%22%20d%3D%22M21%20287Q22%20293%2024%20303T36%20341T56%20388T88%20425T132%20442T175%20435T205%20417T221%20395T229%20376L231%20369Q231%20367%20232%20367L243%20378Q303%20442%20384%20442Q401%20442%20415%20440T441%20433T460%20423T475%20411T485%20398T493%20385T497%20373T500%20364T502%20357L510%20367Q573%20442%20659%20442Q713%20442%20746%20415T780%20336Q780%20285%20742%20178T704%2050Q705%2036%20709%2031T724%2026Q752%2026%20776%2056T815%20138Q818%20149%20821%20151T837%20153Q857%20153%20857%20145Q857%20144%20853%20130Q845%20101%20831%2073T785%2017T716%20-10Q669%20-10%20648%2017T627%2073Q627%2092%20663%20193T700%20345Q700%20404%20656%20404H651Q565%20404%20506%20303L499%20291L466%20157Q433%2026%20428%2016Q415%20-11%20385%20-11Q372%20-11%20364%20-4T353%208T350%2018Q350%2029%20384%20161L420%20307Q423%20322%20423%20345Q423%20404%20379%20404H374Q288%20404%20229%20303L222%20291L189%20157Q156%2026%20151%2016Q138%20-11%20108%20-11Q95%20-11%2087%20-5T76%207T74%2017Q74%2030%20112%20181Q151%20335%20151%20342Q154%20357%20154%20369Q154%20405%20129%20405Q107%20405%2092%20377T69%20316T57%20280Q55%2
0278%2041%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-69%22%20d%3D%22M184%20600Q184%20624%20203%20642T247%20661Q265%20661%20277%20649T290%20619Q290%20596%20270%20577T226%20557Q211%20557%20198%20567T184%20600ZM21%20287Q21%20295%2030%20318T54%20369T98%20420T158%20442Q197%20442%20223%20419T250%20357Q250%20340%20236%20301T196%20196T154%2083Q149%2061%20149%2051Q149%2026%20166%2026Q175%2026%20185%2029T208%2043T235%2078T260%20137Q263%20149%20265%20151T282%20153Q302%20153%20302%20143Q302%20135%20293%20112T268%2061T223%2011T161%20-11Q129%20-11%20102%2010T74%2074Q74%2091%2079%20106T122%20220Q160%20321%20166%20341T173%20380Q173%20404%20156%20404H154Q124%20404%2099%20371T61%20287Q60%20286%2059%20284T58%20281T56%20279T53%20278T49%20278T41%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3D%22%20d%3D%22M56%20347Q56%20360%2070%20367H707Q722%20359%20722%20347Q722%20336%20708%20328L390%20327H72Q56%20332%2056%20347ZM56%20153Q56%20168%2072%20173H708Q722%20163%20722%20153Q722%20140%20707%20133H70Q56%20140%2056%20153Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2212%22%20d%3D%22M84%20237T84%20250T98%20270H679Q694%20262%20694%20250T679%20230H98Q84%20237%2084%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-5B%22%20d%3D%22M118%20-250V750H255V710H158V-210H255V-250H118Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2202%22%20d%3D%22M202%20508Q179%20508%20169%20520T158%20547Q158%20557%20164%20577T185%20624T230%20675T301%20710L333%20715H345Q378%20715%20384%20714Q447%20703%20489%20661T549%20568T566%20457Q566%20362%20519%20240T402%2053Q321%20-22%20223%20-22Q123%20-22%2073%2056Q42%20102%2042%20148V159Q42%20276%20129%20370T322%20465Q383%20465%20414%20434T455%20367L458%20378Q478%20461%20478%20515Q478%20603%20437%20639T344%20676Q266%20676%20223%20612Q264%20606%20264%20572Q264%20547%20246%20528T20
2%20508ZM430%20306Q430%20372%20401%20400T333%20428Q270%20428%20222%20382Q197%20354%20183%20323T150%20221Q132%20149%20132%20116Q132%2021%20232%2021Q244%2021%20250%2022Q327%2035%20374%20112Q389%20137%20409%20196T430%20306Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-4C%22%20d%3D%22M228%20637Q194%20637%20192%20641Q191%20643%20191%20649Q191%20673%20202%20682Q204%20683%20217%20683Q271%20680%20344%20680Q485%20680%20506%20683H518Q524%20677%20524%20674T522%20656Q517%20641%20513%20637H475Q406%20636%20394%20628Q387%20624%20380%20600T313%20336Q297%20271%20279%20198T252%2088L243%2052Q243%2048%20252%2048T311%2046H328Q360%2046%20379%2047T428%2054T478%2072T522%20106T564%20161Q580%20191%20594%20228T611%20270Q616%20273%20628%20273H641Q647%20264%20647%20262T627%20203T583%2083T557%209Q555%204%20553%203T537%200T494%20-1Q483%20-1%20418%20-1T294%200H116Q32%200%2032%2010Q32%2017%2034%2024Q39%2043%2044%2045Q48%2046%2059%2046H65Q92%2046%20125%2049Q139%2052%20144%2061Q147%2065%20216%20339T285%20628Q285%20635%20228%20637Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-28%22%20d%3D%22M94%20250Q94%20319%20104%20381T127%20488T164%20576T202%20643T244%20695T277%20729T302%20750H315H319Q333%20750%20333%20741Q333%20738%20316%20720T275%20667T226%20581T184%20443T167%20250T184%2058T225%20-81T274%20-167T316%20-220T333%20-241Q333%20-250%20318%20-250H315H302L274%20-226Q180%20-141%20137%20-14T94%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-79%22%20d%3D%22M21%20287Q21%20301%2036%20335T84%20406T158%20442Q199%20442%20224%20419T250%20355Q248%20336%20247%20334Q247%20331%20231%20288T198%20191T182%20105Q182%2062%20196%2045T238%2027Q261%2027%20281%2038T312%2061T339%2094Q339%2095%20344%20114T358%20173T377%20247Q415%20397%20419%20404Q432%20431%20462%20431Q475%20431%20483%20424T494%20412T496%20403Q496%20390%20447%20193T391%20-23Q363%20-106%20294%20-155T156%20-205Q111%20-205%2077%20-183T43%20-117Q43%20-95%2050%20-80T69%20-58T
89%20-48T106%20-45Q150%20-45%20150%20-87Q150%20-107%20138%20-122T115%20-142T102%20-147L99%20-148Q101%20-153%20118%20-160T152%20-167H160Q177%20-167%20186%20-165Q219%20-156%20247%20-127T290%20-65T313%20-9T321%2021L315%2017Q309%2013%20296%206T270%20-6Q250%20-11%20231%20-11Q185%20-11%20150%2011T104%2082Q103%2089%20103%20113Q103%20170%20138%20262T173%20379Q173%20380%20173%20381Q173%20390%20173%20393T169%20400T158%20404H154Q131%20404%20112%20385T82%20344T65%20302T57%20280Q55%20278%2041%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2C%22%20d%3D%22M78%2035T78%2060T94%20103T137%20121Q165%20121%20187%2096T210%208Q210%20-27%20201%20-60T180%20-117T154%20-158T130%20-185T117%20-194Q113%20-194%20104%20-185T95%20-172Q95%20-168%20106%20-156T131%20-126T157%20-76T173%20-3V9L172%208Q170%207%20167%206T161%203T152%201T140%200Q113%200%2096%2017Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-66%22%20d%3D%22M118%20-162Q120%20-162%20124%20-164T135%20-167T147%20-168Q160%20-168%20171%20-155T187%20-126Q197%20-99%20221%2027T267%20267T289%20382V385H242Q195%20385%20192%20387Q188%20390%20188%20397L195%20425Q197%20430%20203%20430T250%20431Q298%20431%20298%20432Q298%20434%20307%20482T319%20540Q356%20705%20465%20705Q502%20703%20526%20683T550%20630Q550%20594%20529%20578T487%20561Q443%20561%20443%20603Q443%20622%20454%20636T478%20657L487%20662Q471%20668%20457%20668Q445%20668%20434%20658T419%20630Q412%20601%20403%20552T387%20469T380%20433Q380%20431%20435%20431Q480%20431%20487%20430T498%20424Q499%20420%20496%20407T491%20391Q489%20386%20482%20386T428%20385H372L349%20263Q301%2015%20282%20-47Q255%20-132%20212%20-173Q175%20-205%20139%20-205Q107%20-205%2081%20-186T55%20-132Q55%20-95%2076%20-78T118%20-61Q162%20-61%20162%20-103Q162%20-122%20151%20-136T127%20-157L118%20-162Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-78%22%20d%3D%22M52%20289Q59%20331%20106%20386T222%20442Q257%20442%20286%20424T32
9%20379Q371%20442%20430%20442Q467%20442%20494%20420T522%20361Q522%20332%20508%20314T481%20292T458%20288Q439%20288%20427%20299T415%20328Q415%20374%20465%20391Q454%20404%20425%20404Q412%20404%20406%20402Q368%20386%20350%20336Q290%20115%20290%2078Q290%2050%20306%2038T341%2026Q378%2026%20414%2059T463%20140Q466%20150%20469%20151T485%20153H489Q504%20153%20504%20145Q504%20144%20502%20134Q486%2077%20440%2033T333%20-11Q263%20-11%20227%2052Q186%20-10%20133%20-10H127Q78%20-10%2057%2016T35%2071Q35%20103%2054%20123T99%20143Q142%20143%20142%20101Q142%2081%20130%2066T107%2046T94%2041L91%2040Q91%2039%2097%2036T113%2029T132%2026Q168%2026%20194%2071Q203%2087%20217%20139T245%20247T261%20313Q266%20340%20266%20352Q266%20380%20251%20392T217%20404Q177%20404%20142%20372T93%20290Q91%20281%2088%20280T72%20278H58Q52%20284%2052%20289Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-29%22%20d%3D%22M60%20749L64%20750Q69%20750%2074%20750H86L114%20726Q208%20641%20251%20514T294%20250Q294%20182%20284%20119T261%2012T224%20-76T186%20-143T145%20-194T113%20-227T90%20-246Q87%20-249%2086%20-250H74Q66%20-250%2063%20-250T58%20-247T55%20-238Q56%20-237%2066%20-225Q221%20-64%20221%20250T66%20725Q56%20737%2055%20738Q55%20746%2060%20749Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-5D%22%20d%3D%22M22%20710V750H159V-250H22V-210H119V710H22Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJSZ3-5B%22%20d%3D%22M247%20-949V1450H516V1388H309V-887H516V-949H247Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJSZ3-5D%22%20d%3D%22M11%201388V1450H280V-949H11V-887H218V1388H11Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-31%22%20d%3D%22M213%20578L200%20573Q186%20568%20160%20563T102%20556H83V602H102Q149%20604%20189%20617T245%20641T273%20663Q275%20666%20285%20666Q294%20666%20302%20660V361L303%2061Q310%2054%20315%2052T339%2048T401%2046H427V0H416Q395%203%20257%203Q121%203%20100%200H88V46H114Q136%2046%20152
%2046T177%2047T193%2050T201%2052T207%2057T213%2061V578Z%22%3E%3C%2Fpath%3E%0A%3C%2Fdefs%3E%0A%3Cg%20stroke%3D%22currentColor%22%20fill%3D%22currentColor%22%20stroke-width%3D%220%22%20transform%3D%22matrix(1%200%200%20-1%200%200)%22%20aria-hidden%3D%22true%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-72%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(451%2C-150)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-6D%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22878%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%221694%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%222751%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(3529%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJSZ3-5B%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(528%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(120%2C0)%22%3E%0A%3Crect%20stroke%3D%22none%22%20width%3D%226007%22%20height%3D%2260%22%20x%3D%220%22%20y%3D%22220%22%3E%3C%2Frect%3E%0A%3Cg%20transform%3D%22translate(60%2C770)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2202%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-4C%22%20x%3D%22567%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1415%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(389%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22693%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%221224%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlin
k%3Ahref%3D%22%23E1-MJMATHI-66%22%20x%3D%221669%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(2386%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(389%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22809%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%221306%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%224082%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(1513%2C-771)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2202%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-66%22%20x%3D%22567%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1284%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(389%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22809%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%221306%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJSZ3-5D%22%20x%3D%226776%22%20y%3D%22-1%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(7304%2C-1057)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-66%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%22550%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20tr
ansform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%22940%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%221512%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%221902%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1895%2C0)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-66%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(346%2C-175)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-6D%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%22878%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%221657%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%225022%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-78%22%20x%3D%225412%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%225984%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=r%7Bm%20i%7D%3D-%5Cleft%5B%5Cfrac%7B%5Cpartial%20L%5Cleft%28y%7Bi%7D%2C%20f%5Cleft%28x%7Bi%7D%5Cright%29%5Cright%29%7D%7B%5Cpartial%20f%5Cleft%28x%7Bi%7D%5Cright%29%7D%5Cright%5D%7Bf%28x%29%3Df_%7Bm-1%7D%28x%29%7D&id=s2Eq0)

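- Fit a regression tree using the residuals $r_{mi}$ as the samples' new target values, which yields the leaf regions $R_{mj},\ j=1,2,\dots,J$ of the $m$-th tree, where $J$ is the number of leaf nodes of that tree.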

- For each leaf region $j=1,2,\dots,J$, compute the best fitted value:

$$c_{mj}=\arg\min_{c}\sum_{x_i\in R_{mj}} L\left(y_i,\ f_{m-1}(x_i)+c\right)$$

- Update the strong learner:

$$f_m(x)=f_{m-1}(x)+\sum_{j=1}^{J} c_{mj}\, I\left(x\in R_{mj}\right)$$

- Recall that every round $m$ begins by computing, for each sample $i = 1,2,\dots,N$, the negative gradient $r_{mi}$ (the residual) given by the formula above.

After $M$ rounds we obtain the final regression tree model:

$$\hat{f}(x)=f_M(x)=f_0(x)+\sum_{m=1}^{M}\sum_{j=1}^{J} c_{mj}\, I\left(x\in R_{mj}\right)$$
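The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation. It assumes the squared loss $L=\frac{1}{2}(y-f)^2$, so the negative gradient is the plain residual and the best leaf value $c_{mj}$ is the mean residual in the leaf, which is what sklearn's `DecisionTreeRegressor` predicts. The function names `fit_gbdt` and `predict_gbdt`, the synthetic data, and the `learning_rate` argument (shrinkage; a value of 1.0 reproduces the update rule exactly as listed) are assumptions made for this example.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gbdt(X, y, n_trees=50, learning_rate=1.0, max_depth=2):
    """Gradient boosting with squared loss L = (y - f)^2 / 2 (illustrative sketch)."""
    f0 = float(np.mean(y))                 # f_0: the constant that minimizes the squared loss
    pred = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        residual = y - pred                # negative gradient r_mi
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)              # leaves play the role of R_mj, leaf means of c_mj
        pred += learning_rate * tree.predict(X)   # f_m = f_{m-1} + (shrunken) tree output
        trees.append(tree)
    return {"f0": f0, "trees": trees, "learning_rate": learning_rate}


def predict_gbdt(model, X):
    """Evaluate f_M(x) = f_0 + learning_rate * sum of tree outputs."""
    out = np.full(X.shape[0], model["f0"])
    for tree in model["trees"]:
        out += model["learning_rate"] * tree.predict(X)
    return out


# Hypothetical toy data: a noisy sine curve
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

model = fit_gbdt(X, y, n_trees=50, learning_rate=0.1, max_depth=2)
mse_const = np.mean((y - np.mean(y)) ** 2)           # error of f_0 alone
mse_boost = np.mean((y - predict_gbdt(model, X)) ** 2)
print(f"training MSE: constant model {mse_const:.4f}, boosted model {mse_boost:.4f}")
```

For losses other than the squared loss, the leaf means would have to be replaced by the loss-specific minimizer $c_{mj}$.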

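5.2.2 How gradient boosting works

Next, we use a concrete example to show how GBDT works (example source: https://blog.csdn.net/zpalyq110/article/details/79527653 ):

Data:

As the table below shows, the dataset uses age and weight as features and height as the label. There are 5 records: the first four are training samples and the last one is the sample to predict.

| No. | Age (years) | Weight (kg) | Height (m) (label) |
|---|---|---|---|
| 0 | 5 | 20 | 1.1 |
| 1 | 7 | 30 | 1.3 |
| 2 | 21 | 70 | 1.7 |
| 3 | 30 | 60 | 1.8 |
| 4 (to predict) | 25 | 65 | ? |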

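Training phase:

Parameter settings:

- Learning rate: learning_rate=0.1

- Number of iterations: n_trees=5

- Tree depth: max_depth=3

(1) Initialize the weak learner:

$$f_0(x)=\arg\min_{c}\sum_{i=1}^{N} L\left(y_i, c\right)$$

The loss function is the squared loss. Because the squared loss is convex, we can differentiate directly and set the derivative to zero to obtain $c$:

$$\sum_{i=1}^{N}\frac{\partial L(y_i,c)}{\partial c}=\sum_{i=1}^{N}\frac{\partial\,\frac{1}{2}(y_i-c)^2}{\partial c}=\sum_{i=1}^{N}(c-y_i)$$

Setting the derivative to 0, i.e. $\sum_{i=1}^{N}(c-y_i)=0$, gives:

$$c=\frac{1}{N}\sum_{i=1}^{N}y_i$$

So at initialization $c$ is the mean of the labels of all training samples:

$$c=(1.1+1.3+1.7+1.8)/4=1.475$$

This gives the initial learner:

$$f_0(x)=c=1.475$$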

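(2) For m=1,2,…,M:

Since we set the number of iterations to n_trees=5, here $M=5$.

Compute the negative gradient. As shown above, with squared loss the negative gradient is simply the residual, or put more plainly, the difference between the true label $y$ and the prediction of the learner from the previous round, $f_{m-1}(x)$. In the first round this is $y-f_0(x)$:

| No. | Age (years) | Weight (kg) | Height (m) ($y$) | $f_0(x)$ | Residual ($y-f_0(x)$) |
|---|---|---|---|---|---|
| 0 | 5 | 20 | 1.1 | 1.475 | -0.375 |
| 1 | 7 | 30 | 1.3 | 1.475 | -0.175 |
| 2 | 21 | 70 | 1.7 | 1.475 | 0.225 |
| 3 | 30 | 60 | 1.8 | 1.475 | 0.325 |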
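The initialization and the first round of residuals can be reproduced with NumPy. The snippet below is a small sketch that assumes the squared loss discussed above; the variable names are chosen for this example only.

```python
import numpy as np

heights = np.array([1.1, 1.3, 1.7, 1.8])    # labels of training samples 0..3

f0 = heights.mean()                          # the constant that minimizes the squared loss
residuals = heights - f0                     # negative gradient of the squared loss

print(f"f0 = {f0:.3f}")
print("residuals:", np.round(residuals, 3))

# Sanity check: no other constant on a fine grid gives a lower squared loss
grid = np.linspace(1.0, 2.0, 1001)
losses = [np.sum((heights - c) ** 2) for c in grid]
print(f"grid minimizer = {grid[int(np.argmin(losses))]:.3f}")
```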

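Learn the decision tree and split the nodes:

The residuals are now used as the samples' target values to train the weak learner. The training data is shown in the table below:

| No. | Age (years) | Weight (kg) | Residual (used as the label) |
|---|---|---|---|
| 0 | 5 | 20 | -0.375 |
| 1 | 7 | 30 | -0.175 |
| 2 | 21 | 70 | 0.225 |
| 3 | 30 | 60 | 0.325 |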

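Next, we look for the best split point of the regression tree by going through every possible value of every feature. Starting from 5 for the age feature and ending at 70 for the weight feature, we compute the squared error (SE) of the two groups produced by each split: the left-node squared error $SE_l$ and the right-node squared error $SE_r$. The split point that minimizes the total $SE_{sum}=SE_l+SE_r$ is the best split point.

For example, take age 7 as the split point: samples with age less than 7 go to the left node and samples with age greater than or equal to 7 go to the right node. The left node contains $x_0$ and the right node contains $x_1, x_2, x_3$, so $SE_l=0$, $SE_r=0.140$ and $SE_{sum}=0.140$.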

All candidate split points are listed in the table below:

| Split point | Samples below the split point | Samples at or above the split point | $SE_l$ | $SE_r$ | $SE_{sum}$ |
|---|---|---|---|---|---|
| Age 5 | / | 0,1,2,3 | 0 | 0.327 | 0.327 |
| Age 7 | 0 | 1,2,3 | 0 | 0.140 | 0.140 |
| Age 21 | 0,1 | 2,3 | 0.020 | 0.005 | 0.025 |
| Age 30 | 0,1,2 | 3 | 0.187 | 0 | 0.187 |
| Weight 20 | / | 0,1,2,3 | 0 | 0.327 | 0.327 |
| Weight 30 | 0 | 1,2,3 | 0 | 0.140 | 0.140 |
| Weight 60 | 0,1 | 2,3 | 0.020 | 0.005 | 0.025 |
| Weight 70 | 0,1,3 | 2 | 0.260 | 0 | 0.260 |

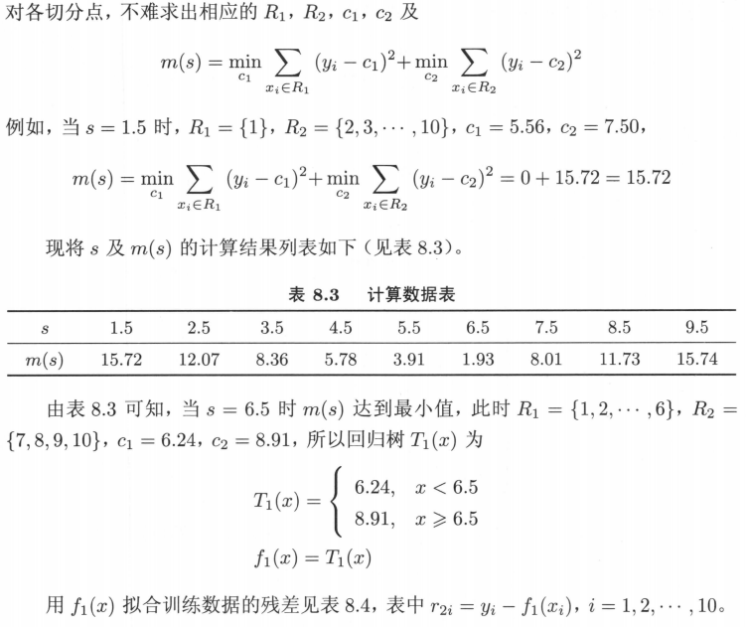

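Among the split points above, the smallest total squared error is 0.025, and it is reached by two split points, age 21 and weight 60 (both produce the same partition of the samples). We therefore pick one of them at random; here we choose age 21.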
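The split search can be checked with a short script. This is a sketch under the conventions used in this example (samples strictly below the split point go left, the rest go right, and the squared error of a node is the sum of squared deviations from the node mean); the helper names `squared_error` and `enumerate_splits` are made up for illustration.

```python
import numpy as np

ages = np.array([5, 7, 21, 30])
weights = np.array([20, 30, 70, 60])
residuals = np.array([-0.375, -0.175, 0.225, 0.325])


def squared_error(values):
    """Sum of squared deviations from the mean (0 for an empty node)."""
    return float(np.sum((values - values.mean()) ** 2)) if len(values) else 0.0


def enumerate_splits(features, targets):
    """Return (feature name, split point, SE_l, SE_r, SE_sum) for every candidate split."""
    rows = []
    for name, column in features.items():
        for threshold in sorted(column):
            left = targets[column < threshold]      # samples below the split point
            right = targets[column >= threshold]    # samples at or above the split point
            se_l, se_r = squared_error(left), squared_error(right)
            rows.append((name, int(threshold), se_l, se_r, se_l + se_r))
    return rows


splits = enumerate_splits({"age": ages, "weight": weights}, residuals)
for name, threshold, se_l, se_r, se_sum in splits:
    print(f"{name:>6} {threshold:>3}  SE_l={se_l:.3f}  SE_r={se_r:.3f}  SE_sum={se_sum:.3f}")

# min() keeps the first of several tied candidates, so the tie between
# age 21 and weight 60 is resolved in favor of age 21 here
best = min(splits, key=lambda row: row[4])
print("best split:", best[0], best[1])
```

Resolving the tie by taking the first candidate is a choice made for reproducibility in this sketch; the text above picks one of the tied split points at random.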

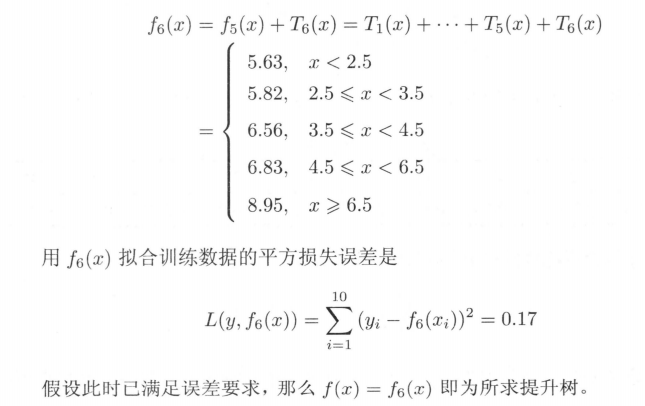

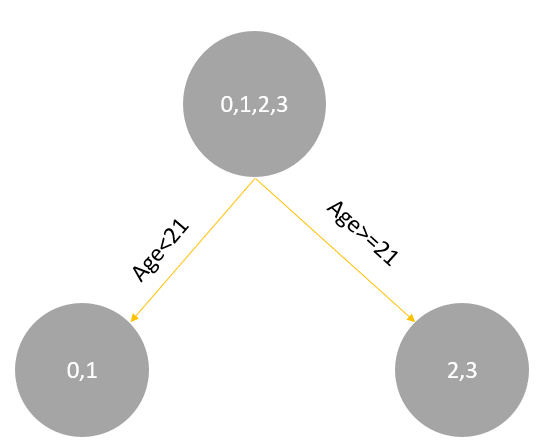

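Our first tree now looks like this:

The configured tree depth is max_depth=3 (counting the root node as level 1), but the tree currently has a depth of only 2, so one more round of splitting is needed. This time the left and right nodes are split separately:

For the left node, which contains only samples 0 and 1, the table below leads us to split on age 7:

| Split point | Samples below the split point | Samples at or above the split point | $SE_l$ | $SE_r$ | $SE_{sum}$ |
|---|---|---|---|---|---|
| Age 5 | / | 0,1 | 0 | 0.020 | 0.020 |
| Age 7 | 0 | 1 | 0 | 0 | 0 |
| Weight 20 | / | 0,1 | 0 | 0.020 | 0.020 |
| Weight 30 | 0 | 1 | 0 | 0 | 0 |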

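For the right node, which contains only samples 2 and 3, the table below leads us to split on age 30 (weight 70 would work equally well):

| Split point | Samples below the split point | Samples at or above the split point | $SE_l$ | $SE_r$ | $SE_{sum}$ |
|---|---|---|---|---|---|
| Age 21 | / | 2,3 | 0 | 0.005 | 0.005 |
| Age 30 | 2 | 3 | 0 | 0 | 0 |
| Weight 60 | / | 2,3 | 0 | 0.005 | 0.005 |
| Weight 70 | 3 | 2 | 0 | 0 | 0 |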

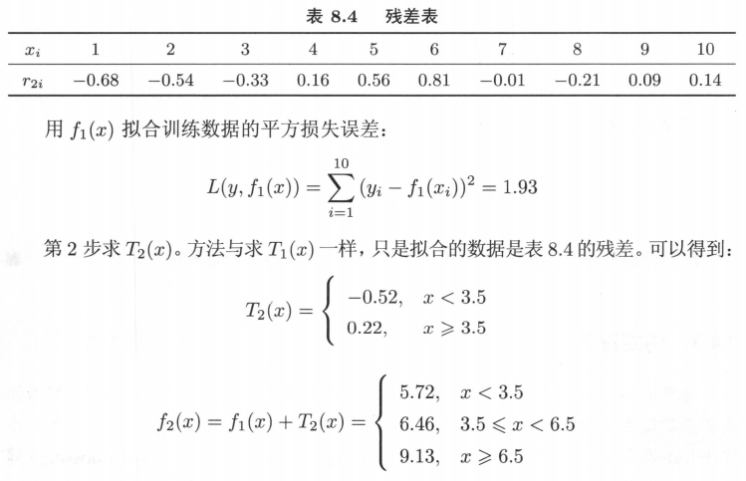

Our first tree now looks like this:

The tree has now reached the configured depth. One thing remains: assign each leaf node a parameter $\Upsilon$ that fits the residuals:

$$\Upsilon_{j1}=\underbrace{\arg\min}_{\Upsilon}\sum_{x_i\in R_{j1}} L\left(y_i,\ f_0(x_i)+\Upsilon\right)$$

The reasoning is the same as for initializing the learner above: with squared loss, differentiate, set the derivative to zero and simplify. The parameter of each leaf node turns out to be the mean of the label values in that leaf. Note that the label values here are not the original $y$ but the residuals $y-f_0(x)$ being fitted in this round.

Based on the splits above, and numbering the leaf nodes 1, 2, 3, 4 from left to right for convenience:

$$\begin{array}{l}
\left(x_0\in R_{11}\right),\quad \Upsilon_{11}=-0.375 \\
\left(x_1\in R_{21}\right),\quad \Upsilon_{21}=-0.175 \\
\left(x_2\in R_{31}\right),\quad \Upsilon_{31}=0.225 \\
\left(x_3\in R_{41}\right),\quad \Upsilon_{41}=0.325
\end{array}$$
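The statement that each leaf parameter equals the mean residual in its leaf can be verified in two lines. The derivation below is a sketch that assumes the squared loss is written as $L(y,f)=\frac{1}{2}(y-f)^2$; the factor $\frac{1}{2}$ is a convention adopted here and does not change the minimizer.

```latex
\begin{aligned}
\frac{\partial}{\partial \Upsilon}\sum_{x_i\in R_{j1}}\tfrac{1}{2}\bigl(y_i-f_0(x_i)-\Upsilon\bigr)^2
  &= -\sum_{x_i\in R_{j1}}\bigl(y_i-f_0(x_i)-\Upsilon\bigr)=0 \\
\Longrightarrow\quad
\Upsilon_{j1} &= \frac{1}{|R_{j1}|}\sum_{x_i\in R_{j1}}\bigl(y_i-f_0(x_i)\bigr)
\end{aligned}
```

In this example every leaf holds exactly one training sample, so each $\Upsilon_{j1}$ is just that sample's residual.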

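The tree model now has the following structure:

We can now update the strong learner, which requires the learning rate parameter learning_rate=0.1, denoted $lr$.

Why use a learning rate? This is the idea of shrinkage: if the full output of every tree were added (a learning rate of 1), the model could fit the training data in a single step, which easily leads to overfitting.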

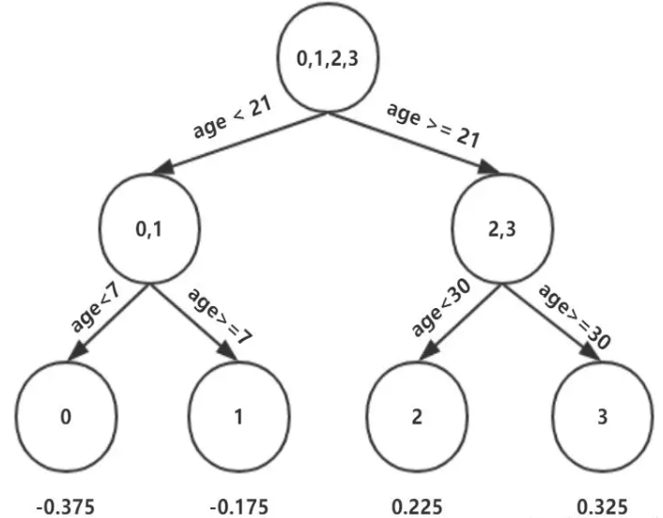

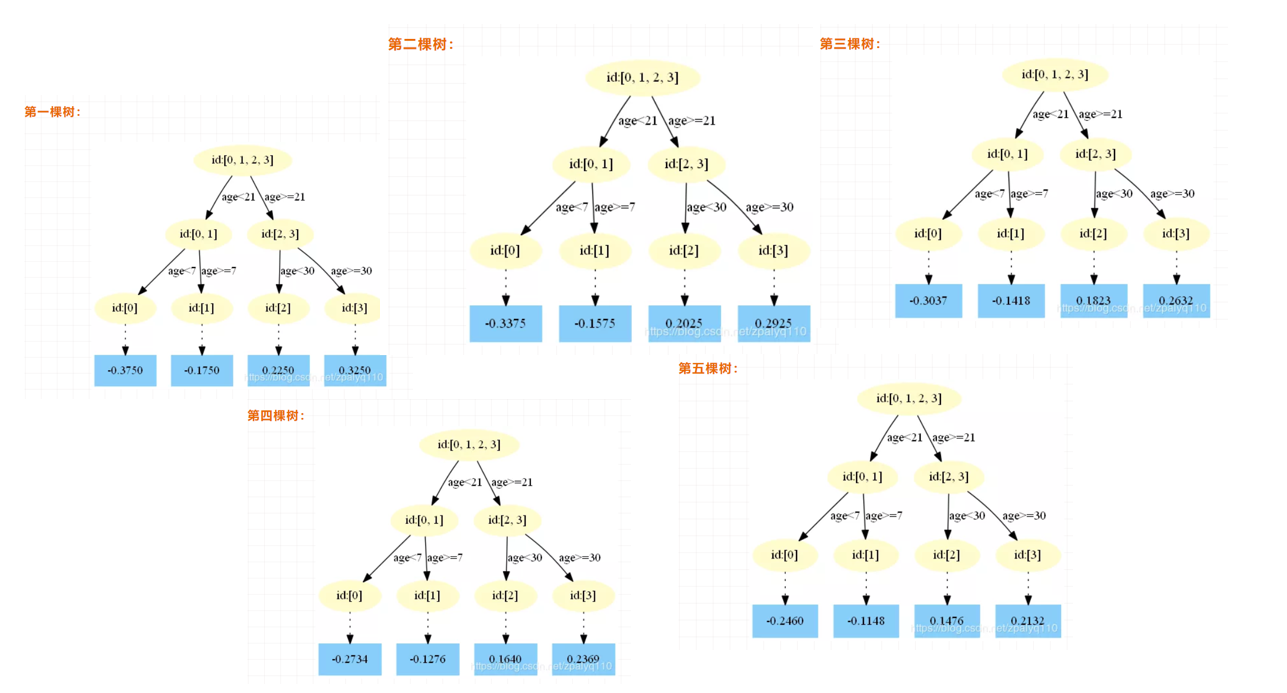

After all five rounds of iteration, the final strong learner is:

$$f(x)=f_5(x)=f_0(x)+lr\sum_{m=1}^{5}\sum_{j=1}^{4}\Upsilon_{jm}\, I\left(x\in R_{jm}\right)$$

where $lr=0.1$ is the learning rate. For the sample to predict (No. 4), $f_0(x)$ is the initial value and $f_m(x)$, $m=1,\dots,5$, denotes the output of the $m$-th tree:

$$\begin{array}{lll}
f_0(x)=1.475 & f_1(x)=0.2250 & f_2(x)=0.2025 \\
f_3(x)=0.1823 & f_4(x)=0.1640 & f_5(x)=0.1476
\end{array}$$

The prediction is:

$$f(x)=1.475+0.1\times(0.2250+0.2025+0.1823+0.164+0.1476)=1.56714$$
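The whole worked example can be replayed with a small from-scratch implementation. The sketch below makes several assumptions to match the text: samples strictly below a split point go left, ties between equally good splits are resolved by taking the first candidate (age before weight), and max_depth=3 with the root counted as level 1 corresponds to two rounds of splitting. The helper names `sse`, `build_tree` and `predict_tree` are made up for this example. sklearn's own trees use midpoint thresholds and a different tie-breaking rule, so they are not used here.

```python
import numpy as np

X = np.array([[5, 20], [7, 30], [21, 70], [30, 60]], dtype=float)  # age, weight
y = np.array([1.1, 1.3, 1.7, 1.8])                                  # height
x_new = np.array([25.0, 65.0])                                      # sample No. 4


def sse(v):
    """Sum of squared deviations from the mean (0 for an empty node)."""
    return float(np.sum((v - v.mean()) ** 2)) if len(v) else 0.0


def build_tree(X, r, levels):
    """Grow a regression tree on residuals r with `levels` rounds of splitting."""
    best = None
    if levels > 0:
        for feature in range(X.shape[1]):
            for threshold in np.unique(X[:, feature]):
                left = X[:, feature] < threshold        # '<' goes left, '>=' goes right
                if not left.any():
                    continue                            # ignore splits with an empty side
                loss = sse(r[left]) + sse(r[~left])
                if best is None or loss < best[0]:      # strict '<': first of tied splits wins
                    best = (loss, feature, threshold, left)
    if best is None:
        return float(r.mean())                          # leaf value: mean residual
    _, feature, threshold, left = best
    return (feature, threshold,
            build_tree(X[left], r[left], levels - 1),
            build_tree(X[~left], r[~left], levels - 1))


def predict_tree(node, x):
    """Walk from the root to a leaf and return the leaf value."""
    while isinstance(node, tuple):
        feature, threshold, left, right = node
        node = left if x[feature] < threshold else right
    return node


learning_rate, n_trees, split_levels = 0.1, 5, 2
f0 = y.mean()
pred_train = np.full(len(y), f0)
pred_new = f0
for m in range(1, n_trees + 1):
    residual = y - pred_train                           # negative gradient of squared loss
    tree = build_tree(X, residual, split_levels)
    tree_out = predict_tree(tree, x_new)
    pred_train += learning_rate * np.array([predict_tree(tree, x) for x in X])
    pred_new += learning_rate * tree_out
    print(f"tree {m}: output for sample 4 = {tree_out:.4f}")
print(f"prediction for sample 4: {pred_new:.5f}")
```

Because each training sample ends up in its own leaf, every round shrinks all residuals by the factor $1-lr=0.9$, which explains the geometric sequence of tree outputs in the text.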

5.3 An example of using GBDT in sklearn

Next, let us use GBDT through sklearn:

GradientBoostingRegressor parameters:

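- loss: {'squared_error', 'absolute_error', 'huber', 'quantile'}, default='squared_error' (older scikit-learn releases name the first two 'ls' and 'lad'). 'squared_error' is least-squares regression. 'absolute_error' (least absolute deviation) is a highly robust loss function based solely on the order information of the input variables. 'huber' is a combination of the two. 'quantile' allows quantile regression (use alpha to specify the quantile).

- learning_rate: the learning rate shrinks the contribution of each tree by learning_rate. There is a trade-off between learning_rate and n_estimators.

- n_estimators: the number of boosting stages to perform.

- subsample: the fraction of samples used for fitting the individual base learners. If smaller than 1.0, this results in stochastic gradient boosting. subsample interacts with the parameter n_estimators. Choosing subsample < 1.0 leads to a reduction of variance and an increase in bias.

- criterion: {'friedman_mse', 'squared_error'}, default='friedman_mse' (older releases also accept 'mse' and 'mae'). 'squared_error' is the mean squared error. The default 'friedman_mse' is generally the best choice, as it can provide a better approximation in some cases.

- min_samples_split: the minimum number of samples required to split an internal node.

- min_samples_leaf: the minimum number of samples required at a leaf node.

- min_weight_fraction_leaf: the minimum weighted fraction of the total sum of weights (of all input samples) required at a leaf node. Samples have equal weight when sample_weight is not provided.

- max_depth: the maximum depth of the individual regression estimators. The maximum depth limits the number of nodes in the tree. Tune this parameter for best performance; the best value depends on the interaction of the input variables.

- min_impurity_decrease: a node is split if the split induces a decrease of the impurity greater than or equal to this value.

- min_impurity_split: threshold for early stopping of tree growth. A node splits if its impurity is above the threshold. This parameter was deprecated and is no longer available in recent scikit-learn releases; use min_impurity_decrease instead.

- max_features: {'auto', 'sqrt', 'log2'}, int or float. The number of features to consider when looking for the best split:

  - If int, consider max_features features at each split.

  - If float, max_features is a fraction and int(max_features * n_features) features are considered at each split.

  - If 'auto', max_features=n_features (this option has been removed in recent releases; use None instead).

  - If 'sqrt', max_features=sqrt(n_features).

  - If 'log2', max_features=log2(n_features).

  - If None, max_features=n_features.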

```python
from sklearn.metrics import mean_squared_error
from sklearn.datasets import make_friedman1
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic Friedman #1 regression problem: the first 200 samples train, the rest test
X, y = make_friedman1(n_samples=1200, random_state=0, noise=1.0)
X_train, X_test = X[:200], X[200:]
y_train, y_test = y[:200], y[200:]

# 100 depth-1 trees (stumps); 'squared_error' is the current name of the former 'ls' loss
est = GradientBoostingRegressor(n_estimators=100, learning_rate=0.1,
                                max_depth=1, random_state=0,
                                loss='squared_error').fit(X_train, y_train)
mean_squared_error(y_test, est.predict(X_test))
```

5.009154859960321

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

X, y = make_regression(random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
reg = GradientBoostingRegressor(random_state=0)   # all parameters left at their defaults
reg.fit(X_train, y_train)
reg.score(X_test, y_test)                          # coefficient of determination R^2 on the test set
```

0.4233836905173889

Exercise

As a short exercise, summarize the meaning of each parameter of GradientBoostingRegressor and GradientBoostingClassifier. Reference documentation:

GradientBoostingRegressor: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingRegressor.html#sklearn.ensemble.GradientBoostingRegressor

GradientBoostingClassifier: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingClassifier.html?highlight=gra#sklearn.ensemble.GradientBoostingClassifier

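6. The XGBoost Algorithm

XGBoost is an open-source machine learning project developed by Tianqi Chen and collaborators. It implements GBDT efficiently, adds many algorithmic and engineering improvements, and has been widely used, with good results, in Kaggle and many other machine learning competitions.

XGBoost is still a GBDT at its core, but it pushes speed and efficiency to the extreme, hence the name X (Extreme) Gradient Boosting. As noted earlier, both are boosting methods.

XGBoost is an optimized distributed gradient boosting library designed to be efficient, flexible, and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems quickly and accurately. The same code runs on the major distributed environments (Hadoop, SGE, MPI) and can handle problems with billions of examples.

XGBoost uses out-of-core computation, which lets data scientists process hundreds of millions of examples on a single machine. These techniques are combined into an end-to-end system that scales to even larger datasets with minimal cluster resources. XGBoost uses CART decision trees as its base model and ensembles multiple CART trees through Gradient Tree Boosting to obtain the final model. Let us now look at how the final XGBoost model is built: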

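Following Tianqi Chen's paper, the dataset is $\mathcal{D}=\{(\mathbf{x}_i, y_i)\}$ with $|\mathcal{D}|=n$, $\mathbf{x}_i \in \mathbb{R}^m$, $y_i \in \mathbb{R}$.

(1) Constructing the objective function:

Suppose there are K trees. The output for the i-th sample is

$$\hat{y}_i=\phi(\mathbf{x}_i)=\sum_{k=1}^{K} f_k(\mathbf{x}_i), \quad f_k \in \mathcal{F},$$

where $\mathcal{F}=\{f(\mathbf{x})=w_{q(\mathbf{x})}\}$ with $q: \mathbb{R}^m \rightarrow \{1,\dots,T\}$ and $w \in \mathbb{R}^T$ is the space of regression trees.

The objective function is therefore

$$\mathcal{L}(\phi)=\sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right)+\sum_{k=1}^{K} \Omega\left(f_k\right),$$

where $\sum_{i=1}^{n} l(y_i, \hat{y}_i)$ is the loss function and $\sum_{k=1}^{K} \Omega(f_k)$ is the regularization term.

(2) Additive Training:

Given a sample $\mathbf{x}_i$, start from

$\hat{y}_i^{(0)}=0$ (the initial prediction),

$\hat{y}_i^{(1)}=\hat{y}_i^{(0)}+f_1(\mathbf{x}_i)$,

$\hat{y}_i^{(2)}=\hat{y}_i^{(1)}+f_2(\mathbf{x}_i)$,

and so on, which gives

$$\hat{y}_i^{(K)}=\hat{y}_i^{(K-1)}+f_K(\mathbf{x}_i),$$

where $\hat{y}_i^{(K-1)}$ is the prediction of the first K-1 trees and $f_K(\mathbf{x}_i)$ is the prediction of the K-th tree.

The objective function can therefore be decomposed as

$$\mathcal{L}^{(K)}=\sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(K-1)}+f_K(\mathbf{x}_i)\right)+\sum_{k=1}^{K} \Omega\left(f_k\right).$$

The regularization term also splits into the complexity of the first K-1 trees plus the complexity of the K-th tree:

$$\mathcal{L}^{(K)}=\sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(K-1)}+f_K(\mathbf{x}_i)\right)+\sum_{k=1}^{K-1} \Omega\left(f_k\right)+\Omega\left(f_K\right).$$

By the time the K-th tree is built, $\sum_{k=1}^{K-1} \Omega(f_k)$ is already fixed and cannot change. It is a known constant that can be dropped during optimization, so

$$\mathcal{L}^{(K)}=\sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(K-1)}+f_K(\mathbf{x}_i)\right)+\Omega\left(f_K\right).$$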
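The additive update, where the prediction after K trees equals the prediction after K-1 trees plus the output of the K-th tree, can be illustrated with a minimal sketch. The three "trees" below are hypothetical stand-ins written as plain functions with made-up leaf values; no fitting takes place.

```python
# Hypothetical stand-ins for fitted trees: each maps a feature value to a leaf value.
trees = [
    lambda x: 2.0 if x < 3 else 5.0,
    lambda x: -0.5 if x < 1.5 else 0.4,
    lambda x: 0.1 if x < 4 else -0.2,
]
xs = [1.0, 2.0, 3.5, 5.0]

preds = [0.0] * len(xs)          # initial prediction y_hat^(0) = 0
history = [list(preds)]
for f_k in trees:
    # y_hat^(k) = y_hat^(k-1) + f_k(x): earlier trees are never modified.
    preds = [p + f_k(x) for p, x in zip(preds, xs)]
    history.append(list(preds))

# The final prediction equals the sum of all tree outputs.
direct = [sum(f(x) for f in trees) for x in xs]
for k, stage in enumerate(history):
    print(f"after {k} trees:", stage)
```

Because each stage only adds a new function, the earlier stages act as constants when the K-th tree is chosen, which is why their complexity terms drop out of the optimization.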

(3) Approximating the objective with a Taylor series:

$$\mathcal{L}^{(K)} \simeq \sum_{i=1}^{n}\left[l\left(y_i, \hat{y}_i^{(K-1)}\right)+g_i f_K\left(\mathbf{x}_i\right)+\frac{1}{2} h_i f_K^{2}\left(\mathbf{x}_i\right)\right]+\Omega\left(f_K\right)$$

where $g_i=\partial_{\hat{y}_i^{(K-1)}} l\left(y_i, \hat{y}_i^{(K-1)}\right)$ and $h_i=\partial_{\hat{y}_i^{(K-1)}}^{2} l\left(y_i, \hat{y}_i^{(K-1)}\right)$ are the first and second derivatives of the loss with respect to the current prediction.

In mathematics, a Taylor series represents a function as an infinite sum of terms computed from the derivatives of the function at a single point:

$$f(x)=\frac{f\left(x_0\right)}{0!}+\frac{f^{\prime}\left(x_0\right)}{1!}\left(x-x_0\right)+\frac{f^{\prime \prime}\left(x_0\right)}{2!}\left(x-x_0\right)^{2}+\ldots+\frac{f^{(n)}\left(x_0\right)}{n!}\left(x-x_0\right)^{n}+\ldots$$

The approximation above is the expansion of $l$ around $\hat{y}_i^{(K-1)}$, truncated after the second-order term, with $f_K(\mathbf{x}_i)$ playing the role of $x-x_0$.

Since $\sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(K-1)}\right)$ is already fixed by the time the K-th tree is built, it is a known constant that can be dropped during optimization, so

$$\tilde{\mathcal{L}}^{(K)}=\sum_{i=1}^{n}\left[g_i f_K\left(\mathbf{x}_i\right)+\frac{1}{2} h_i f_K^{2}\left(\mathbf{x}_i\right)\right]+\Omega\left(f_K\right)$$
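To make the first-order statistic g and the second-order statistic h concrete, here is a small sketch for two common losses. The choice of losses (squared error and logistic loss on raw scores) and all numeric values are assumptions for illustration; the function names are hypothetical.

```python
import math


def squared_error(y, y_hat):
    return (y - y_hat) ** 2


def squared_error_grad_hess(y, y_hat):
    # First and second derivative of (y - y_hat)^2 with respect to y_hat.
    return 2.0 * (y_hat - y), 2.0


def logistic_loss(y, y_hat):
    # y is 0 or 1; y_hat is a raw score (log-odds).
    return math.log(1.0 + math.exp(y_hat)) - y * y_hat


def logistic_grad_hess(y, y_hat):
    p = 1.0 / (1.0 + math.exp(-y_hat))
    return p - y, p * (1.0 - p)


def taylor2(loss_prev, g, h, step):
    # Second-order approximation of the loss after adding `step` to the prediction.
    return loss_prev + g * step + 0.5 * h * step ** 2


y, y_prev, step = 1.0, 0.3, 0.2   # step plays the role of the new tree's output

g, h = squared_error_grad_hess(y, y_prev)
sq_exact = squared_error(y, y_prev + step)
sq_approx = taylor2(squared_error(y, y_prev), g, h, step)

g, h = logistic_grad_hess(y, y_prev)
lg_exact = logistic_loss(y, y_prev + step)
lg_approx = taylor2(logistic_loss(y, y_prev), g, h, step)

print("squared error:", sq_exact, sq_approx)
print("logistic loss:", lg_exact, lg_approx)
```

For squared error the expansion is exact because the loss is itself quadratic; for logistic loss the two values differ only by a small third-order remainder.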

(4) How to define a tree:

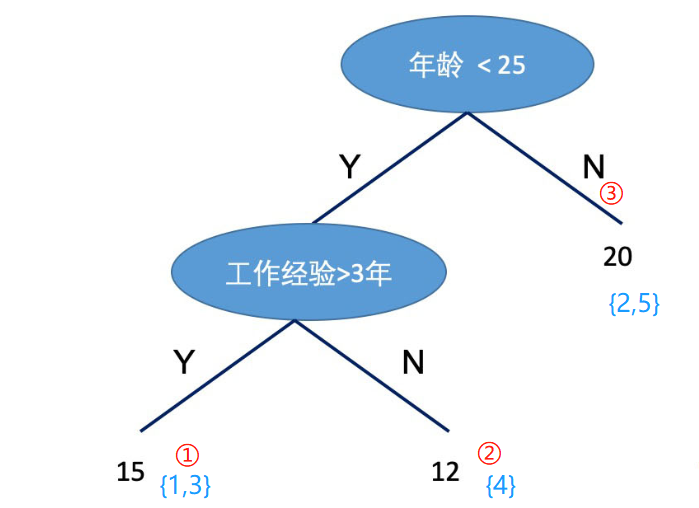

To define a tree we need four concepts. The first is the leaf a sample falls into, $q(\mathbf{x})$. The second is the set of samples that fall into leaf j, $I_j=\left\{i \mid q\left(\mathbf{x}_i\right)=j\right\}$. The third is the predicted value of each leaf, $w_{q(\mathbf{x})}$. The fourth is the model complexity $\Omega\left(f_K\right)$, which is built from the number of leaves $T$ and the leaf values:

$$\Omega\left(f_K\right)=\gamma T+\frac{1}{2} \lambda \sum_{j=1}^{T} w_j^{2}$$

In the example shown in the figure:

$q(x_1)=1,\ q(x_2)=3,\ q(x_3)=1,\ q(x_4)=2,\ q(x_5)=3$,

$I_1=\{1,3\},\ I_2=\{4\},\ I_3=\{2,5\}$,

$w=(15,12,20)$
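The example can be written out directly: the structure q maps each sample to a leaf, w stores one value per leaf, and the leaf sets follow from q. This is a minimal sketch; the values of gamma and lambda passed to the complexity function are assumptions chosen only to produce a number.

```python
# Tree structure q: sample index -> leaf index (1-based, as in the example).
q = {1: 1, 2: 3, 3: 1, 4: 2, 5: 3}
# Leaf values w_j.
w = {1: 15.0, 2: 12.0, 3: 20.0}

# I_j: the samples that fall into leaf j.
leaf_members = {}
for i, j in q.items():
    leaf_members.setdefault(j, []).append(i)

# The tree's prediction for sample i is w_{q(x_i)}.
predictions = {i: w[j] for i, j in q.items()}


def complexity(w, gamma, lam):
    """Omega(f) = gamma * T + 0.5 * lambda * sum_j w_j^2."""
    T = len(w)
    return gamma * T + 0.5 * lam * sum(v * v for v in w.values())


print("I_j:", dict(sorted(leaf_members.items())))
print("predictions:", predictions)
print("Omega with gamma=1, lambda=1 (assumed):", complexity(w, 1.0, 1.0))
```

The complexity grows with both the number of leaves and the magnitude of the leaf values, so the regularizer favors small trees with moderate outputs.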

Substituting this notation, and using $f_K(\mathbf{x}_i)=w_{q(\mathbf{x}_i)}$ to group the samples by leaf, the objective becomes:

$$\begin{aligned}
\tilde{\mathcal{L}}^{(K)} &=\sum_{i=1}^{n}\left[g_i f_K\left(\mathbf{x}_i\right)+\frac{1}{2} h_i f_K^{2}\left(\mathbf{x}_i\right)\right]+\gamma T+\frac{1}{2} \lambda \sum_{j=1}^{T} w_j^{2} \\
&=\sum_{j=1}^{T}\left[\left(\sum_{i \in I_j} g_i\right) w_j+\frac{1}{2}\left(\sum_{i \in I_j} h_i+\lambda\right) w_j^{2}\right]+\gamma T
\end{aligned}$$

Our goal is to minimize the objective, which is now a quadratic function of each $w_j$. Recall the extremum of a quadratic function:

for $y=a x^{2}+b x+c$, the axis of symmetry is at $x=-\frac{b}{2 a}$ and the extreme value is $y=\frac{4 a c-b^{2}}{4 a}$.

Applying this to each leaf with $a=\frac{1}{2}\left(\sum_{i \in I_j} h_i+\lambda\right)$, $b=\sum_{i \in I_j} g_i$ and $c=0$ gives

$$w_j^{*}=-\frac{\sum_{i \in I_j} g_i}{\sum_{i \in I_j} h_i+\lambda}$$

and

$$\tilde{\mathcal{L}}^{(K)}(q)=-\frac{1}{2} \sum_{j=1}^{T} \frac{\left(\sum_{i \in I_j} g_i\right)^{2}}{\sum_{i \in I_j} h_i+\lambda}+\gamma T$$
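The closed-form leaf weight and the resulting tree score can be checked numerically. The sketch below assumes each leaf is summarized by its gradient sums G_j (sum of g_i) and H_j (sum of h_i); the leaf statistics and the values of lambda and gamma are made up for illustration.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf value w_j* = -G_j / (H_j + lambda)."""
    return -G / (H + lam)


def structure_score(leaves, lam, gamma):
    """Minimum of the objective for a fixed tree shape; leaves is a list of (G_j, H_j)."""
    return -0.5 * sum(G * G / (H + lam) for G, H in leaves) + gamma * len(leaves)


def objective(leaves, weights, lam, gamma):
    """Quadratic objective evaluated at arbitrary leaf weights."""
    total = sum(G * w + 0.5 * (H + lam) * w * w for (G, H), w in zip(leaves, weights))
    return total + gamma * len(leaves)


leaves = [(-4.0, 3.0), (2.0, 1.0)]   # assumed (G_j, H_j) for two leaves
lam, gamma = 1.0, 0.5                # assumed regularization constants

best_weights = [leaf_weight(G, H, lam) for G, H in leaves]
score = structure_score(leaves, lam, gamma)
at_optimum = objective(leaves, best_weights, lam, gamma)
perturbed = objective(leaves, [w + 0.1 for w in best_weights], lam, gamma)

print("optimal weights:", best_weights)
print("structure score:", score, "objective at optimum:", at_optimum)
print("objective after perturbing the weights:", perturbed)
```

Any change to the optimal weights increases the objective, which confirms that the structure score is the minimum attainable for this tree shape.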

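(5) How to find the shape of the tree:

So far we have computed $w$ and $\tilde{\mathcal{L}}$ under the assumption that the shape of the tree is already known. In practice we have to find the shape, just as in ordinary decision tree learning. We therefore borrow the decision tree approach and use the change in the objective function as the criterion for splitting a node. An example illustrates this: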

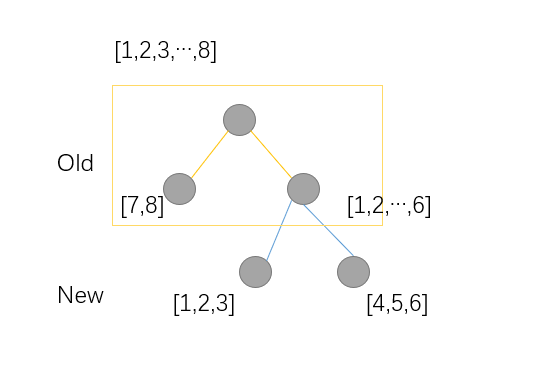

The example has 8 samples. Before the split, one leaf holds samples 7 and 8 and another holds samples 1 to 6; the candidate split divides the second leaf into samples 1 to 3 and samples 4 to 6. Therefore:

$$\begin{aligned}
\tilde{\mathcal{L}}^{(old)} &= -\frac{1}{2}\left[\frac{(g_7+g_8)^2}{h_7+h_8+\lambda}+\frac{(g_1+\ldots+g_6)^2}{h_1+\ldots+h_6+\lambda}\right]+2\gamma \\
\tilde{\mathcal{L}}^{(new)} &= -\frac{1}{2}\left[\frac{(g_7+g_8)^2}{h_7+h_8+\lambda}+\frac{(g_1+\ldots+g_3)^2}{h_1+\ldots+h_3+\lambda}+\frac{(g_4+\ldots+g_6)^2}{h_4+\ldots+h_6+\lambda}\right]+3\gamma \\
\tilde{\mathcal{L}}^{(old)}-\tilde{\mathcal{L}}^{(new)} &= \frac{1}{2}\left[\frac{(g_1+\ldots+g_3)^2}{h_1+\ldots+h_3+\lambda}+\frac{(g_4+\ldots+g_6)^2}{h_4+\ldots+h_6+\lambda}-\frac{(g_1+\ldots+g_6)^2}{h_1+\ldots+h_6+\lambda}\right]-\gamma
\end{aligned}$$

The example shows that the criterion for splitting a node is $\max\{\tilde{\mathcal{L}}^{(old)}-\tilde{\mathcal{L}}^{(new)}\}$, that is, the split that maximizes

$$\mathcal{L}_{\text{split}}=\frac{1}{2}\left[\frac{\left(\sum_{i \in I_L} g_i\right)^{2}}{\sum_{i \in I_L} h_i+\lambda}+\frac{\left(\sum_{i \in I_R} g_i\right)^{2}}{\sum_{i \in I_R} h_i+\lambda}-\frac{\left(\sum_{i \in I} g_i\right)^{2}}{\sum_{i \in I} h_i+\lambda}\right]-\gamma$$

where $I_L$ and $I_R$ are the samples in the left and right children and $I=I_L \cup I_R$ is the node being split.
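The split gain is straightforward to compute once the gradient sums of the two children are known. In the sketch below the per-sample values of g and h, as well as lambda and gamma, are invented for illustration; the function name `split_gain` is hypothetical.

```python
def split_gain(G_L, H_L, G_R, H_R, lam, gamma):
    """Reduction in the objective when a node is split into left and right children."""
    def term(G, H):
        return G * G / (H + lam)
    return 0.5 * (term(G_L, H_L) + term(G_R, H_R) - term(G_L + G_R, H_L + H_R)) - gamma


# Assumed gradients for six samples in the node being split; every h_i is 1.
g = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]
lam, gamma = 1.0, 0.5

# Split A separates the negative gradients from the positive ones.
good = split_gain(sum(g[:3]), 3.0, sum(g[3:]), 3.0, lam, gamma)

# Split B mixes them: left = samples {1, 4, 6}, right = samples {2, 3, 5}.
left = [g[0], g[3], g[5]]
right = [g[1], g[2], g[4]]
poor = split_gain(sum(left), 3.0, sum(right), 3.0, lam, gamma)

print("gain of split A:", good)
print("gain of split B:", poor)
```

A negative gain, as in split B, means the improvement in fit does not pay for the extra leaf penalty gamma, so the node would be left unsplit.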

6.1 Exact Greedy Split Algorithm

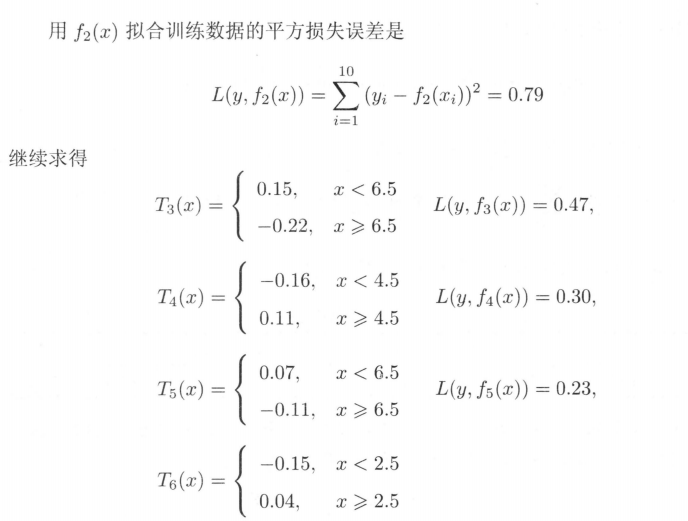

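When XGBoost grows a new tree, the most basic operation is node splitting. The key step is finding the best feature and the best split point, and then splitting the leaf node accordingly. One approach is to enumerate all candidate features and all candidate split points, compute $\mathcal{L}_{\text{split}}$ for each, and choose the feature and split point with the largest $\mathcal{L}_{\text{split}}$. We call this the exact greedy algorithm. It is a heuristic, because each node split takes the best choice available at that moment rather than the globally best splitting strategy. In outline, for each feature the algorithm sorts the samples by feature value and scans them once, accumulating the gradient statistics of the left child to score every candidate split point.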
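A minimal pure-Python sketch of the exact greedy search follows. It is not XGBoost's implementation: the function name is hypothetical, the threshold is taken as the midpoint between neighboring values by assumption, and the toy data uses the gradients of the squared error (y - y_hat)^2 with all previous predictions set to 0.

```python
def exact_greedy_split(X, g, h, lam=1.0, gamma=0.0):
    """Return (best_gain, feature_index, threshold) over all features and split points."""
    n, m = len(X), len(X[0])
    G, H = sum(g), sum(h)
    parent = G * G / (H + lam)
    best = (0.0, None, None)          # only splits with positive gain are accepted
    for k in range(m):
        order = sorted(range(n), key=lambda i: X[i][k])
        G_L = H_L = 0.0
        for pos in range(n - 1):
            i, nxt = order[pos], order[pos + 1]
            G_L += g[i]               # running sums for the left child
            H_L += h[i]
            if X[i][k] == X[nxt][k]:
                continue              # no threshold separates equal values
            G_R, H_R = G - G_L, H - H_L
            gain = 0.5 * (G_L ** 2 / (H_L + lam) + G_R ** 2 / (H_R + lam) - parent) - gamma
            if gain > best[0]:
                best = (gain, k, 0.5 * (X[i][k] + X[nxt][k]))
    return best


X = [[1.0, 5.0], [2.0, 1.0], [3.0, 4.0], [4.0, 2.0]]
y = [1.0, 1.0, 3.0, 3.0]
g = [2.0 * (0.0 - yi) for yi in y]    # first derivative at y_hat = 0
h = [2.0] * len(y)                    # second derivative

gain, feature, threshold = exact_greedy_split(X, g, h)
print(f"best split: feature {feature} at {threshold}, gain {gain:.4f}")
```

Feature 0 orders the samples the same way as the targets, so the search picks it and places the threshold between the two groups of targets.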


6.2 Histogram-Based Approximate Algorithm

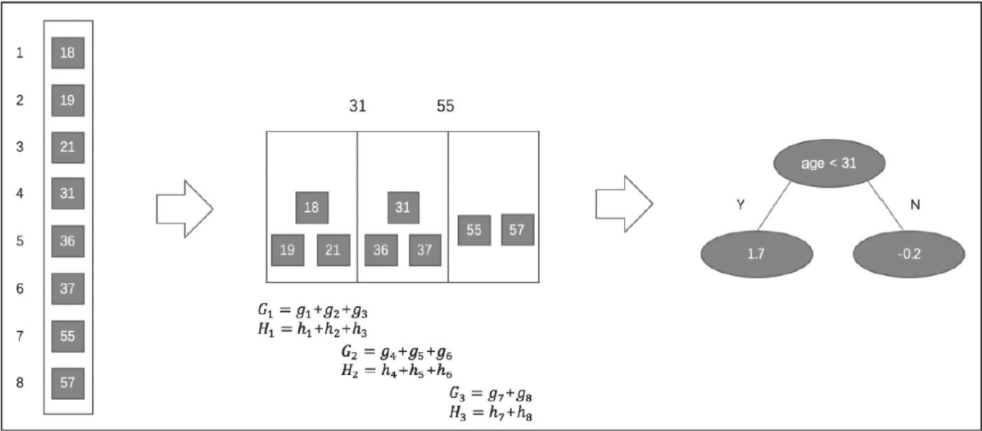

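The exact greedy algorithm is very effective at choosing the best feature and split point: it evaluates the gain of every split point of every feature and picks the best, so the model fits the training data well. However, when the data cannot be loaded entirely into memory, the exact greedy algorithm becomes very inefficient, because it has to keep swapping data between memory and disk, which is time-consuming. The same problem arises in distributed environments. To choose the best feature and split point more efficiently, XGBoost proposes an approximate algorithm.

The main idea of the histogram-based approximate algorithm is as follows. When searching for the best split point of a feature, first bucket the feature's values by quantiles (for example percentiles) to obtain a set of candidate split points; every value of the feature falls into one of the buckets. Then, for each bucket, compute the gradient statistics G and H to form a histogram, where G is the sum of the first-order statistics g of all samples in the bucket and H is the sum of the second-order statistics h. Finally, among all candidate features and candidate split points, choose the one whose bucket statistics give the largest gain. The procedure is: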

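- For each feature $k=1,2,\ldots,m$, bucket the values of feature $k$ by quantiles to obtain the candidate split points $S_k=\{s_{k1}, s_{k2}, \ldots, s_{kl}\}$.

- For each feature $k=1,2,\ldots,m$, accumulate the statistics of each bucket: $G_{kv}=\sum_{j \in\{j \mid s_{k,v} \geq x_{jk}>s_{k,v-1}\}} g_j$ and $H_{kv}=\sum_{j \in\{j \mid s_{k,v} \geq x_{jk}>s_{k,v-1}\}} h_j$.

- As in the exact greedy algorithm, use these gradient statistics to find the candidate split point with the largest gain.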

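The following example illustrates the histogram-based approximate algorithm.

Suppose there is an age feature with the values 18, 19, 21, 31, 36, 37, 55, 57, and we want to find the best split point for this feature using the approximate algorithm.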
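The age example can be sketched in a few lines of Python. Several parts are assumptions: the gradient values g and h are invented, three equal-count buckets are used, and the quantiles are unweighted (XGBoost itself uses a weighted quantile sketch). All function names are hypothetical.

```python
import math

ages = [18, 19, 21, 31, 36, 37, 55, 57]
g = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -2.0, -2.0]   # assumed first-order statistics
h = [1.0] * len(ages)                               # assumed second-order statistics


def quantile_candidates(values, n_buckets):
    """Upper edges of equal-count buckets, excluding the last bucket."""
    s = sorted(values)
    cands = []
    for b in range(1, n_buckets):
        edge = s[math.ceil(b * len(s) / n_buckets) - 1]
        if edge not in cands:
            cands.append(edge)
    return cands


def build_histogram(values, g, h, candidates):
    """Sum g and h per bucket; bucket v holds s_{v-1} < x <= s_v."""
    G = [0.0] * (len(candidates) + 1)
    H = [0.0] * (len(candidates) + 1)
    for x, gi, hi in zip(values, g, h):
        b = sum(1 for s in candidates if x > s)
        G[b] += gi
        H[b] += hi
    return G, H


def best_split(candidates, G, H, lam=1.0, gamma=0.0):
    """Scan the bucket boundaries only, instead of every distinct value."""
    G_tot, H_tot = sum(G), sum(H)
    parent = G_tot ** 2 / (H_tot + lam)
    best_point, best_gain = None, float("-inf")
    G_L = H_L = 0.0
    for v, point in enumerate(candidates):
        G_L += G[v]
        H_L += H[v]
        G_R, H_R = G_tot - G_L, H_tot - H_L
        gain = 0.5 * (G_L ** 2 / (H_L + lam) + G_R ** 2 / (H_R + lam) - parent) - gamma
        if gain > best_gain:
            best_point, best_gain = point, gain
    return best_point, best_gain


candidates = quantile_candidates(ages, n_buckets=3)
G, H = build_histogram(ages, g, h, candidates)
point, gain = best_split(candidates, G, H)
print("candidates:", candidates)
print("bucket G:", G, "bucket H:", H)
print(f"best split: age <= {point}, gain {gain:.4f}")
```

With three buckets only two thresholds are evaluated instead of seven, which is the source of the speedup; the price is that the chosen threshold can only be one of the bucket edges.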

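The approximate algorithm implements two strategies for constructing candidate split points: a global strategy and a local strategy. The global strategy fixes a set of candidate split points for each feature at the start of tree construction and uses that set for the node splits at every level of the tree; the set does not change during the whole process. The local strategy recomputes the candidate split points at every node split. The global strategy needs finer buckets to reach the accuracy of the local strategy, but selecting the candidate set is simpler under the global strategy. In XGBoost, users are free to choose the exact greedy algorithm, the approximate algorithm with the global strategy, or the approximate algorithm with the local strategy; all of them can be configured through parameters.

That covers the theory behind XGBoost. Next, we look at the XGBoost system in detail: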

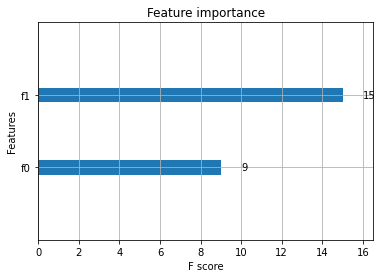

Official documentation: https://xgboost.readthedocs.io/en/latest/python/python_intro.html The author's own summary: https://zhuanlan.zhihu.com/p/143009353