支持向量机(SVM)

硬间隔SVM

考虑感知机问题,当数据集线性可分时,可以用感知机算法求出一个完美切分样本的超平面,但是这个超平面有无数条。硬间隔SVM的思想就是找到其中最好的一个超平面。最好的超平面就是样本到其的距离最大的超平面

给定数据集%2C(X_2%2Cy_2)%2C%5Ccdots%2C(X_N%2Cy_N)%5C%7D#card=math&code=D%3D%5C%7B%28X_1%2Cy_1%29%2C%28X_2%2Cy_2%29%2C%5Ccdots%2C%28X_N%2Cy_N%29%5C%7D),其中

%5ET%3D%0A%5Cbegin%7Bpmatrix%7D%0Ax%7B11%7D%26x%7B12%7D%26%5Ccdots%26x%7B1p%7D%5C%5C%0Ax%7B21%7D%26x%7B22%7D%26%5Ccdots%26x%7B2p%7D%5C%5C%0A%5Cvdots%26%5Cvdots%26%5Cddots%26%5Cvdots%5C%5C%0Ax%7BN1%7D%26x%7BN2%7D%26%5Ccdots%26x%7BNp%7D%0A%5Cend%7Bpmatrix%7D%7BN%5Ctimes%20p%7D%5C%5C%0AY%3D%5Cbegin%7Bpmatrix%7Dy1%5C%5Cy_2%5C%5C%5Cvdots%5C%5Cy_N%5Cend%7Bpmatrix%7D%5C%5C%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0AX%3D%28X_1%2CX_2%2C%5Ccdots%2CX_N%29%5ET%3D%0A%5Cbegin%7Bpmatrix%7D%0Ax%7B11%7D%26x%7B12%7D%26%5Ccdots%26x%7B1p%7D%5C%5C%0Ax%7B21%7D%26x%7B22%7D%26%5Ccdots%26x%7B2p%7D%5C%5C%0A%5Cvdots%26%5Cvdots%26%5Cddots%26%5Cvdots%5C%5C%0Ax%7BN1%7D%26x%7BN2%7D%26%5Ccdots%26x%7BNp%7D%0A%5Cend%7Bpmatrix%7D_%7BN%5Ctimes%20p%7D%5C%5C%0AY%3D%5Cbegin%7Bpmatrix%7Dy_1%5C%5Cy_2%5C%5C%5Cvdots%5C%5Cy_N%5Cend%7Bpmatrix%7D%5C%5C%0A%5Cend%7Bgathered%7D%0A)

设超平面为

函数间隔和几何间隔

函数间隔

我们可以用一个样本点到超平面的距离来表示分类预测的确信度,即距离越大就更确信分类是正确的。显然如果给定一个超平面可以用

来相对的刻画样本

#card=math&code=%28X_i%2Cy_i%29)到超平面的距离,于是

#card=math&code=y_i%28W%5ETX_i%2Bb%3D0%29)可以完整的表示分类正确性以及确信度。对于样本

#card=math&code=%28X_i%2Cy_i%29)称

#card=math&code=y_i%28W%5ETX_i%2Bb%3D0%29)为函数间隔

几何间隔

超平面的系数实际是可以线性放缩的,比如

和

表示的是同一个超平面,但是对于这同一个超平面函数间隔却变成了2倍,所以样本的函数间隔是可以放缩的,因此需要给超平面的

加一些约束条件使间隔是确定的。对于样本

#card=math&code=%28X_i%2Cy_i%29),将

单位化后得到的函数距离称为几何间隔,实际就是样本

#card=math&code=%28X_i%2Cy_i%29)到超平面的欧氏距离

%0A#card=math&code=y_i%28%5Cfrac%7BW%7D%7B%7C%7CW%7C%7C%7D%5Ccdot%20X_i%2B%5Cfrac%7Bb%7D%7B%7C%7CW%7C%7C%7D%29%0A)

模型构建

样本到超平面的距离最大,其实就是指几何间隔最大,所以又称为间隔最大化。要使所有样本到超平面的距离最大,那么只需要到超平面最近的样本的几何间隔最大即可,即如下优化问题

%3D%5Cmax%7BW%2Cb%7D%5Cfrac%7B1%7D%7B%7C%7CW%7C%7C%7D%5Cmin%7BXi%2Cy_i%7Dy_i(W%5ETX_i%2Bb)%5C%5C%0As.t.%5Cquad%20y_i(W%5ETX_i%2Bb)%5Cgt0%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmax%7BW%2Cb%7D%5Cmin%7BX_i%2Cy_i%7Dy_i%28%5Cfrac%7BW%7D%7B%7C%7CW%7C%7C%7D%5Ccdot%20X_i%2B%5Cfrac%7Bb%7D%7B%7C%7CW%7C%7C%7D%29%3D%5Cmax%7BW%2Cb%7D%5Cfrac%7B1%7D%7B%7C%7CW%7C%7C%7D%5Cmin_%7BX_i%2Cy_i%7Dy_i%28W%5ETX_i%2Bb%29%5C%5C%0As.t.%5Cquad%20y_i%28W%5ETX_i%2Bb%29%5Cgt0%0A%5Cend%7Bgathered%7D%0A)

显然%3D%5Cgamma#card=math&code=%5Cexists%5Cgamma%5Cgt0%2Cs.t.%5Cquad%5Cmin_%7BX_i%2Cy_i%7Dy_i%28W%5ETX_i%2Bb%29%3D%5Cgamma),则

%5Cge%5Cgamma#card=math&code=y_i%28W%5ETX_i%2Bb%29%5Cge%5Cgamma),又因为函数间隔是可以放缩的,即

是可以放缩的,那么为了简化计算可令

,则可以转化为如下优化问题

%5Cge1%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmax_%7BW%2Cb%7D%5Cfrac%7B1%7D%7B%7C%7CW%7C%7C%7D%5C%5C%0As.t.%5Cquad%20y_i%28W%5ETX_i%2Bb%29%5Cge1%0A%5Cend%7Bgathered%7D%0A)

注意到最大化和最小化

等价,因此最终写为如下优化问题

%5Cle0%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin_%7BW%2Cb%7D%7B1%5Cover2%7DW%5ETW%5C%5C%0As.t.%5Cquad%201-y_i%28W%5ETX_i%2Bb%29%5Cle0%0A%5Cend%7Bgathered%7D%0A)

解的存在且唯一性

下面证明间隔最大化的超平面是存在且唯一的,即该优化问题只有一个最优解

- 存在性:因为数据集线性可分,所以这个优化问题必有解

- 唯一性:设存在两个最优解

%2C(W_2%2Cb_2)#card=math&code=%28W_1%2Cb_1%29%2C%28W_2%2Cb_2%29),显然

,

为一个常数。令

%5Cle0%5C%5C%0A1-y_i(W_2%5ETX_i%2Bb_2)%5Cle0%0A%5Cend%7Bcases%7D%0A%5CRightarrow1-y_i%5Cleft(%5Cfrac%7BW_1%2BW_2%7D%7B2%7D%5Cright)%5ETX_i%2B%5Cfrac%7Bb_1%2Bb_2%7D%7B2%7D%5Cle0%0A#card=math&code=%5Cbegin%7Bcases%7D%0A1-y_i%28W_1%5ETX_i%2Bb_1%29%5Cle0%5C%5C%0A1-y_i%28W_2%5ETX_i%2Bb_2%29%5Cle0%0A%5Cend%7Bcases%7D%0A%5CRightarrow1-y_i%5Cleft%28%5Cfrac%7BW_1%2BW_2%7D%7B2%7D%5Cright%29%5ETX_i%2B%5Cfrac%7Bb_1%2Bb_2%7D%7B2%7D%5Cle0%0A)

因此%5Cle0#card=math&code=1-y_i%28W_0%5ETX_i%2Bb_0%29%5Cle0),

#card=math&code=%28W_0%2Cb_0%29)为优化问题的一个可行解,则有

因此共线,可令

。若

则

,不能完全满足

%5Cle0#card=math&code=1-yi%28W_0%5ETX_i%2Bb_0%29%5Cle0)的约束条件,与前述讨论矛盾;若

则

,即

的最优解是唯一的。此时将两个最优解写为%2C(W%7Bopt%7D%2Cb2)#card=math&code=%28W%7Bopt%7D%2Cb1%29%2C%28W%7Bopt%7D%2Cb2%29)

设是

中分别使%2C(W%7Bopt%7D%2Cb2)#card=math&code=%28W%7Bopt%7D%2Cb1%29%2C%28W%7Bopt%7D%2Cb2%29)约束条件等号成立的点,

对应

中分别使%2C(W%7Bopt%7D%2Cb2)#card=math&code=%28W%7Bopt%7D%2Cb1%29%2C%28W%7Bopt%7D%2Cb2%29)约束条件等号成立的点,则有%3D0%5C%5C%0A1%2B(W%7Bopt%7D%5ETX1%5E%7B’’%7D%2Bb_1)%3D0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20b_1%3D-%7B1%5Cover2%7D(W%7Bopt%7D%5ETX1%5E%7B’%7D%2BW%7Bopt%7D%5ETX1%5E%7B’’%7D)%5C%5C%0A%5Cbegin%7Bcases%7D%0A1-(W%7Bopt%7D%5ETX2%5E%7B’%7D%2Bb_2)%3D0%5C%5C%0A1%2B(W%7Bopt%7D%5ETX2%5E%7B’’%7D%2Bb_2)%3D0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20b_2%3D-%7B1%5Cover2%7D(W%7Bopt%7D%5ETX2%5E%7B’%7D%2BW%7Bopt%7D%5ETX2%5E%7B’’%7D)%5C%5C%0A%5C%5C%0A%5CRightarrow%20b_1-b_2%3D-%7B1%5Cover2%7D%5BW%7Bopt%7D%5ET(X1%5E%7B’%7D-X_2%5E%7B’%7D)%2BW%7Bopt%7D%5ET(X1%5E%7B’’%7D-X_2%5E%7B’’%7D)%5D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cbegin%7Bcases%7D%0A1-%28W%7Bopt%7D%5ETX1%5E%7B%27%7D%2Bb_1%29%3D0%5C%5C%0A1%2B%28W%7Bopt%7D%5ETX1%5E%7B%27%27%7D%2Bb_1%29%3D0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20b_1%3D-%7B1%5Cover2%7D%28W%7Bopt%7D%5ETX1%5E%7B%27%7D%2BW%7Bopt%7D%5ETX1%5E%7B%27%27%7D%29%5C%5C%0A%5Cbegin%7Bcases%7D%0A1-%28W%7Bopt%7D%5ETX2%5E%7B%27%7D%2Bb_2%29%3D0%5C%5C%0A1%2B%28W%7Bopt%7D%5ETX2%5E%7B%27%27%7D%2Bb_2%29%3D0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20b_2%3D-%7B1%5Cover2%7D%28W%7Bopt%7D%5ETX2%5E%7B%27%7D%2BW%7Bopt%7D%5ETX2%5E%7B%27%27%7D%29%5C%5C%0A%5C%5C%0A%5CRightarrow%20b_1-b_2%3D-%7B1%5Cover2%7D%5BW%7Bopt%7D%5ET%28X1%5E%7B%27%7D-X_2%5E%7B%27%7D%29%2BW%7Bopt%7D%5ET%28X1%5E%7B%27%27%7D-X_2%5E%7B%27%27%7D%29%5D%0A%5Cend%7Bgathered%7D%0A)

%5Cle0%5C%5C%0A1-(W%7Bopt%7D%5ETX1%5E%7B’%7D%2Bb_2)%5Cle0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%0A%5Cbegin%7Bcases%7D%0AW%7Bopt%7D%5ETX2%5E%7B’%7D%2Bb_1%5Cge1%3DW%7Bopt%7D%5ETX1%5E%7B’%7D%2Bb_1%5C%5C%0AW%7Bopt%7D%5ETX1%5E%7B’%7D%2Bb_2%5Cge1%3DW%7Bopt%7D%5ETX2%5E%7B’%7D%2Bb_2%0A%5Cend%7Bcases%7D%5C%5C%0A%5CRightarrow%0A%5Cbegin%7Bcases%7D%0AW%7Bopt%7D%5ET(X1%5E%7B’%7D-X_2%5E%7B’%7D)%5Cle0%5C%5C%0AW%7Bopt%7D%5ET(X1%5E%7B’%7D-X_2%5E%7B’%7D)%5Cge0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20W%7Bopt%7D%5ET(X1%5E%7B’%7D-X_2%5E%7B’%7D)%3D0%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cbegin%7Bcases%7D%0A1-%28W%7Bopt%7D%5ETX2%5E%7B%27%7D%2Bb_1%29%5Cle0%5C%5C%0A1-%28W%7Bopt%7D%5ETX1%5E%7B%27%7D%2Bb_2%29%5Cle0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%0A%5Cbegin%7Bcases%7D%0AW%7Bopt%7D%5ETX2%5E%7B%27%7D%2Bb_1%5Cge1%3DW%7Bopt%7D%5ETX1%5E%7B%27%7D%2Bb_1%5C%5C%0AW%7Bopt%7D%5ETX1%5E%7B%27%7D%2Bb_2%5Cge1%3DW%7Bopt%7D%5ETX2%5E%7B%27%7D%2Bb_2%0A%5Cend%7Bcases%7D%5C%5C%0A%5CRightarrow%0A%5Cbegin%7Bcases%7D%0AW%7Bopt%7D%5ET%28X1%5E%7B%27%7D-X_2%5E%7B%27%7D%29%5Cle0%5C%5C%0AW%7Bopt%7D%5ET%28X1%5E%7B%27%7D-X_2%5E%7B%27%7D%29%5Cge0%0A%5Cend%7Bcases%7D%0A%5CRightarrow%20W%7Bopt%7D%5ET%28X_1%5E%7B%27%7D-X_2%5E%7B%27%7D%29%3D0%0A%5Cend%7Bgathered%7D%0A)

同理%3D0#card=math&code=W_%7Bopt%7D%5ET%28X_1%5E%7B%27%27%7D-X_2%5E%7B%27%27%7D%29%3D0),故

即

,所以

的最优解是唯一的

综上所述该优化问题的最优解是存在且唯一的,因此间隔最大化的超平面是存在且唯一的

求解方法

直接想到可以用拉格朗日乘子法,于是令拉格朗日函数

%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5B1-y_i(W%5ETX_i%2Bb)%5D%5C%5C%0As.t.%5Cquad%5Clambda_i%5Cge0%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0AL%28W%2Cb%2C%5Clambda%29%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5B1-y_i%28W%5ETX_i%2Bb%29%5D%5C%5C%0As.t.%5Cquad%5Clambda_i%5Cge0%0A%5Cend%7Bgathered%7D%0A)

根据拉格朗日对偶性可将原始问题转化为对偶问题

%0A#card=math&code=%5Cmax%7B%5Clambda%7D%5Cmin%7BW%2Cb%7DL%28W%2Cb%2C%5Clambda%29%0A)

首先来计算#card=math&code=%5Cmin%5Climits_%7BW%2Cb%7DL%28W%2Cb%2C%5Clambda%29)

将代入

#card=math&code=L%28W%2Cb%2C%5Clambda%29)

%26%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambda_i-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iW%5ETX_i-b%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_i%5C%5C%0A%26%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iW%5ETX_i%0A%5Cend%7Baligned%7D%5C%5C%0A%5C%5C%0A%5Cfrac%7B%5Cpartial%20L%7D%7B%5Cpartial%20W%7D%3DW-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%3D0%5C%5C%0A%5CRightarrow%20W%3D%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cbegin%7Baligned%7D%0AL%28W%2Cb%2C%5Clambda%29%26%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iW%5ETX_i-b%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_i%5C%5C%0A%26%3D%7B1%5Cover2%7DW%5ETW%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iW%5ETX_i%0A%5Cend%7Baligned%7D%5C%5C%0A%5C%5C%0A%5Cfrac%7B%5Cpartial%20L%7D%7B%5Cpartial%20W%7D%3DW-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%3D0%5C%5C%0A%5CRightarrow%20W%3D%5Csum%7Bi%3D1%7D%5EN%5Clambda_iy_iX_i%0A%5Cend%7Bgathered%7D%0A)

将代入

#card=math&code=L%28W%2Cb%2C%5Clambda%29)

%26%3D%7B1%5Cover2%7D%5Cleft(%5Csum%7Bi%3D1%7D%5EN%5Clambda_iy_iX_i%5Cright)%5ET%5Cleft(%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright)%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_i%5Cleft(%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright)%5ETX_i%5C%5C%0A%26%3D%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%5ET%5Cleft(%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright)-%5Csum%7Bi%3D1%7D%5EN%5Cleft(%5Csum%7Bj%3D1%7D%5EN%5Clambda_jy_jX_j%5ET%5Cright)%5Clambda_iy_iX_i%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai%5C%5C%0A%26%3D%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_i%5ETX_j-%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_j%5ETX_i%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai%5C%5C%0A%26%3D-%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_i%5ETX_j%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0AL%28W%2Cb%2C%5Clambda%29%26%3D%7B1%5Cover2%7D%5Cleft%28%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%5Cright%29%5ET%5Cleft%28%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright%29%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai-%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_i%5Cleft%28%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright%29%5ETX_i%5C%5C%0A%26%3D%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Clambdaiy_iX_i%5ET%5Cleft%28%5Csum%7Bj%3D1%7D%5EN%5Clambdajy_jX_j%5Cright%29-%5Csum%7Bi%3D1%7D%5EN%5Cleft%28%5Csum%7Bj%3D1%7D%5EN%5Clambda_jy_jX_j%5ET%5Cright%29%5Clambda_iy_iX_i%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai%5C%5C%0A%26%3D%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_i%5ETX_j-%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_j%5ETX_i%2B%5Csum%7Bi%3D1%7D%5EN%5Clambdai%5C%5C%0A%26%3D-%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Clambda_i%5Clambda_jy_iy_jX_i%5ETX_j%2B%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%0A%5Cend%7Baligned%7D%0A)

于是原始问题的对偶问题转化为如下优化问题

这个优化问题是一个二次规划问题,求解比原始问题要简单

设最优解为#card=math&code=W%5E%2A%2Cb%5E%2A%2C%5Clambda%5E%2A%3D%28%5Clambda_1%5E%2A%2C%5Clambda_2%5E%2A%2C%5Ccdots%2C%5Clambda_N%5E%2A%29),根据KKT条件应该满足

%3DW%5E-%5Csum_%7Bi%3D1%7D%5EN%5Clambda_i%5EyiX_i%3D0%5C%5C%0A%5Cnabla_bL(W%5E%2Cb%5E%2C%5Clambda%5E*)%3D-%5Csum%7Bi%3D1%7D%5ENyi%5Clambda_i%5E%3D0%5C%5C%0A%5Clambda_i%5E(1-y_i(W%5EX_i%2Bb%5E))%3D0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%5C%5C%0A1-y_i(W%5EX_i%2Bb%5E)%5Cle0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%5C%5C%0A%5Clambda_i%5E*%5Cge0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cnabla_WL%28W%5E%2A%2Cb%5E%2A%2C%5Clambda%5E%2A%29%3DW%5E%2A-%5Csum%7Bi%3D1%7D%5EN%5Clambdai%5E%2Ay_iX_i%3D0%5C%5C%0A%5Cnabla_bL%28W%5E%2A%2Cb%5E%2A%2C%5Clambda%5E%2A%29%3D-%5Csum%7Bi%3D1%7D%5ENy_i%5Clambda_i%5E%2A%3D0%5C%5C%0A%5Clambda_i%5E%2A%281-y_i%28W%5E%2AX_i%2Bb%5E%2A%29%29%3D0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%5C%5C%0A1-y_i%28W%5E%2AX_i%2Bb%5E%2A%29%5Cle0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%5C%5C%0A%5Clambda_i%5E%2A%5Cge0%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A)

支持向量

根据KKT条件,显然当%5Clt0#card=math&code=1-y_i%28W%5E%2AX_i%2Bb%5E%2A%29%5Clt0)时

,当

%3D0#card=math&code=1-y_i%28W%5E%2AX_i%2Bb%5E%2A%29%3D0)时

可以取其他值。而

%3D0#card=math&code=1-y_i%28W%5E%2AX_i%2Bb%5E%2A%29%3D0)的样本即距离超平面最近的样本,也就是说只有距离超平面最近的样本对

有影响,其他的样本点对超平面的确定没有影响,如图所示

只有A、B、C、D四个点决定超平面,其他样本点并无影响。这样的样本点被称为支持向量,正负样本支持向量之间形成一个以超平面为中心的长带,这个长带的宽度即称为间隔

在对偶问题的解中找一个,它对应一个支持向量

,有

%3D0#card=math&code=1-y_k%28W%5E%2A%5Ccdot%20X_k%2Bb_k%29%3D0),那么

%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0AW%5E%2A%3D%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5E%2Ay_iX_i%5C%5C%0Ab%5E%2A%3Dy_k-W%5E%2A%5Ccdot%20X_k%3Dy_k-%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5E%2Ay_i%28X_i%5Ccdot%20X_k%29%0A%5Cend%7Bgathered%7D%0A)

最终决策函数为

%3Dsign%5Cleft(%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5Ey_i(X_i%5Ccdot%20X)%2Bb%5E%5Cright)%0A#card=math&code=f%28X%29%3Dsign%5Cleft%28%5Csum%7Bi%3D1%7D%5EN%5Clambda_i%5E%2Ay_i%28X_i%5Ccdot%20X%29%2Bb%5E%2A%5Cright%29%0A)

软间隔SVM

在实际使用中,几乎不可能会出现数据集线性可分,因此需要算法对实际情况有一些容忍度,允许一部分样本进入到间隔之内甚至分类错误,也就是说不必对所有样本都严格要求函数间隔为1,如此便引申出软间隔支持向量机。线性不可分就意味着某些样本点不能满足函数间隔的约束,即对于进入间隔或者分类错误的点有%5Cgt0#card=math&code=1-yi%28W%5ETX_i%2Bb%29%5Cgt0)。我们的目标是容忍一部分样本点不满足约束但是又不能让它们造成的损失过大导致分类效果不好,这就相当于在目标函数上施加一个损失,在保证尽量满足最大间隔的情况下损失最小,即,下面的问题就是损失如何表示。最简单的是0-1损失函数但是其数学性质差。考虑到对不满足约束的样本有

%5Cgt0#card=math&code=1-y_i%28W%5ETX_i%2Bb%29%5Cgt0),我们可以直接将这个值作为损失,其本质就是不满足约束的样本点到支持向量所在超平面的距离;当然满足约束的样本点损失为零,于是对于某个样本

#card=math&code=%28X_i%2Cy_i%29)的损失

%5Cle0%5C%5C%0A%5Cxi_i%3D1-y_i(W%5ETX_i%2Bb)%2C%261-y_i(W%5ETX_i%2Bb)%5Cgt0%0A%5Cend%7Bcases%7D%0A#card=math&code=%5Cbegin%7Bcases%7D%0A%5Cxi_i%3D0%2C%261-y_i%28W%5ETX_i%2Bb%29%5Cle0%5C%5C%0A%5Cxi_i%3D1-y_i%28W%5ETX_i%2Bb%29%2C%261-y_i%28W%5ETX_i%2Bb%29%5Cgt0%0A%5Cend%7Bcases%7D%0A)

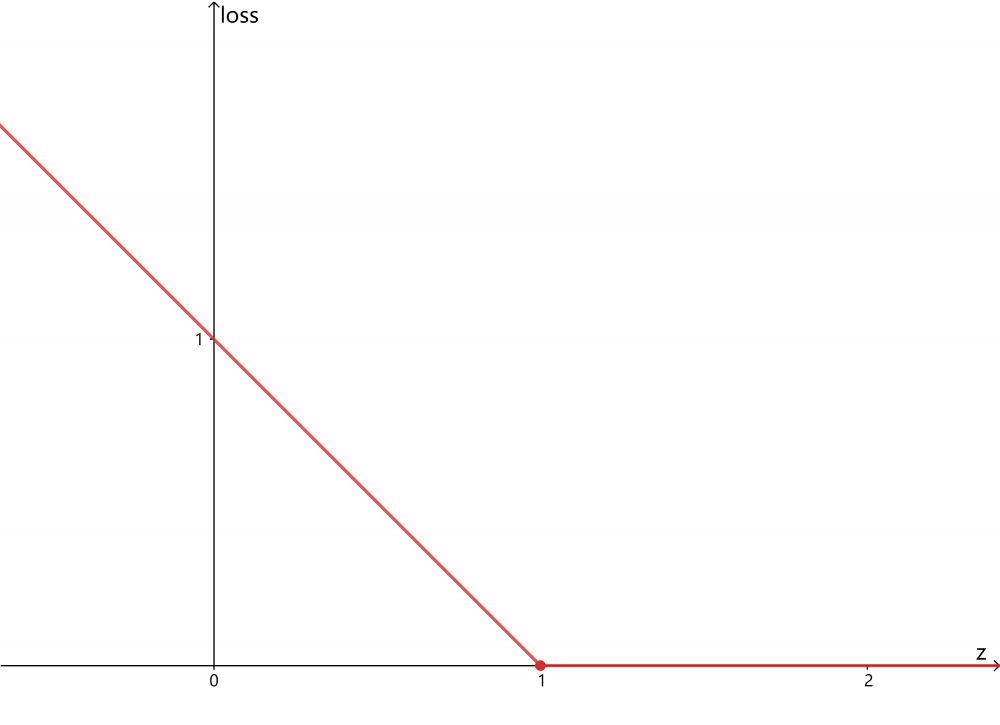

令#card=math&code=zi%3Dy_i%28W%5ETX_i%2Bb%29),则上述损失可统一写为

,其图像为

这样的损失函数称为合页损失函数(hinge loss),总的损失为

对于原先满足约束条件的样本,约束条件可写为

%5Cge1%3D1-%5Cxi_i#card=math&code=y_i%28W%5ETX_i%2Bb%29%5Cge1%3D1-%5Cxi_i);对于原先不满足约束条件的样本

%3D1-%5Cxi_i#card=math&code=y_i%28W%5ETX_i%2Bb%29%3D1-%5Cxi_i),所以对

的总约束为

%5Cge1-%5Cxi_i#card=math&code=y_i%28W%5ETX_i%2Bb%29%5Cge1-%5Cxi_i)。综上新的优化问题为

%5Cge1-%5Cxii%5C%5C%0A%26%5Cxi_i%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin%7BW%2Cb%7D%7B1%5Cover2%7DW%5ETW%2BC%5Csum_%7Bi%3D1%7D%5EN%5Cxi_i%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26y_i%28W%5ETX_i%2Bb%29%5Cge1-%5Cxi_i%5C%5C%0A%26%5Cxi_i%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A)

其中称为惩罚参数,是人为指定的,显然

增大时对误分类的惩罚增大,减小时对误分类惩罚减小,用它来控制我们对误分类的容忍度。

又称为松弛变量,表示误分类的样本犯错误程度。这个最小化目标从直观来看就是在最大化间隔的同时,最小化误分类损失

显然这个优化问题可以等价看作

%5C%7D%2B%7B1%5Cover2C%7DW%5ETW%0A#card=math&code=%5Cmin%7BW%2Cb%7D%5Csum%7Bi%3D1%7D%5EN%5Cmax%5C%7B0%2C1-y_i%28W%5ETX_i%2Bb%29%5C%7D%2B%7B1%5Cover2C%7DW%5ETW%0A)

所以软间隔支持向量机相当于带L2正则化的hinge损失函数优化问题

对偶问题

同硬间隔支持向量方法,转化为拉格朗日对偶问题

%3D%7B1%5Cover2%7DW%5ETW%2BC%5Csum%7Bi%3D1%7D%5EN%5Cxi_i%2B%5Csum%7Bi%3D1%7D%5EN%5Calphai%5B1-%5Cxi_i-y_i(W%5ETX_i%2Bb)%5D-%5Csum%7Bi%3D1%7D%5EN%5Cbetai%5Cxi_i%5C%5C%0A%5C%5C%0A%5Cmax%7B%5Calpha%2C%5Cbeta%3A%5Calphai%5Cge0%2C%5Cbeta_i%5Cge0%7D%5Cmin%7BW%2Cb%2C%5Cxi%7DL(W%2Cb%2C%5Cxi%2C%5Calpha%2C%5Cbeta)%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0AL%28W%2Cb%2C%5Cxi%2C%5Calpha%2C%5Cbeta%29%3D%7B1%5Cover2%7DW%5ETW%2BC%5Csum%7Bi%3D1%7D%5EN%5Cxi_i%2B%5Csum%7Bi%3D1%7D%5EN%5Calphai%5B1-%5Cxi_i-y_i%28W%5ETX_i%2Bb%29%5D-%5Csum%7Bi%3D1%7D%5EN%5Cbetai%5Cxi_i%5C%5C%0A%5C%5C%0A%5Cmax%7B%5Calpha%2C%5Cbeta%3A%5Calphai%5Cge0%2C%5Cbeta_i%5Cge0%7D%5Cmin%7BW%2Cb%2C%5Cxi%7DL%28W%2Cb%2C%5Cxi%2C%5Calpha%2C%5Cbeta%29%0A%5Cend%7Bgathered%7D%0A)

最终转化为

设最优解为%2C%5Calpha%5E%3D(%5Calpha_1%5E%2C%5Calpha_2%5E%2C%5Ccdots%2C%5Calpha_N%5E)%2C%5Cbeta%5E%3D(%5Cbeta_1%5E%2C%5Cbeta_2%5E%2C%5Ccdots%2C%5Cbeta_N%5E)#card=math&code=W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%3D%28%5Cxi_1%5E%2A%2C%5Cxi_2%5E%2A%2C%5Ccdots%2C%5Cxi_N%5E%2A%29%2C%5Calpha%5E%2A%3D%28%5Calpha_1%5E%2A%2C%5Calpha_2%5E%2A%2C%5Ccdots%2C%5Calpha_N%5E%2A%29%2C%5Cbeta%5E%2A%3D%28%5Cbeta_1%5E%2A%2C%5Cbeta_2%5E%2A%2C%5Ccdots%2C%5Cbeta_N%5E%2A%29),符合KKT条件

%3DW%5E-%5Csum_%7Bi%3D1%7D%5EN%5Calpha_i%5EyiX_i%3D0%5C%5C%0A%5Cnabla_bL(W%5E%2Cb%5E%2C%5Cxi%5E%2C%5Calpha%5E%2C%5Cbeta%5E*)%3D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E*y_i%3D0%5C%5C%0A%5Cnabla%7B%5Cxii%7DL(W%5E%2Cb%5E%2C%5Cxi%5E%2C%5Calpha%5E%2C%5Cbeta%5E)%3DC-%5Calpha_i%5E-%5Cbeta_i%5E%3D0%5C%5C%0A%5Calpha_i%5E%5B1-%5Cxi_i%5E-y_i(W%5E%5Ccdot%20X_i%2Bb%5E)%5D%3D0%5C%5C%0A%5Cbeta_i%5E%5Cxi_i%5E%3D0%5C%5C%0A1-%5Cxi_i%5E-y_i(W%5E%5Ccdot%20X_i%2Bb%5E)%5Cle0%5C%5C%0A%5Cxi_i%5E%5Cge0%5C%5C%0A%5Calpha_i%5E%5Cge0%5C%5C%0A%5Cbeta_i%5E*%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cnabla_WL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3DW%5E%2A-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E%2Ay_iX_i%3D0%5C%5C%0A%5Cnabla_bL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E%2Ay_i%3D0%5C%5C%0A%5Cnabla%7B%5Cxi_i%7DL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3DC-%5Calpha_i%5E%2A-%5Cbeta_i%5E%2A%3D0%5C%5C%0A%5Calpha_i%5E%2A%5B1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%5D%3D0%5C%5C%0A%5Cbeta_i%5E%2A%5Cxi_i%5E%2A%3D0%5C%5C%0A1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%5Cle0%5C%5C%0A%5Cxi_i%5E%2A%5Cge0%5C%5C%0A%5Calpha_i%5E%2A%5Cge0%5C%5C%0A%5Cbeta_i%5E%2A%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A)

在对偶问题的解中,选择一个,对应一个

#card=math&code=%28X_k%2Cy_k%29)有

%3D0#card=math&code=1-y_k%28W%5E%2A%5Ccdot%20X_k%2Bb%5E%2A%29%3D0)

样本点分析

从KKT条件可以看出只有%3D0#card=math&code=1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0)的样本对超平面的确定有影响,与硬间隔一样这些样本被称为支持向量。但是因为软间隔时不要求支持向量到超平面的函数间隔为1,因此支持向量就可能会在间隔边界上,可能在间隔边界内部,也可能在分离超平面误分类的一侧

根据KKT条件可以推导出以下结论

- 若

,则

,由

得

,故

%5Cle0#card=math&code=1-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%5Cle0),即样本点在间隔边界上或者远离间隔边界

- 若

可得

且

%3D0#card=math&code=1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0),即

%3D0#card=math&code=1-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0),此时的样本点为间隔边界上的支持向量

若

,则

%3D0#card=math&code=1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0),

需要分情况讨论

,则

%3D0#card=math&code=1-y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0),此时的样本点为间隔边界上的支持向量

,则

,即

%5Clt1#card=math&code=0%5Clt%20y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%5Clt1),此时的样本点分类正确,在自己所属类的间隔边界和分离超平面之间

,则

%3D0#card=math&code=y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%3D0),此时样本点无法被分类,在分离超平面上

,则

,即

%5Clt0#card=math&code=y_i%28W%5E%2A%5Ccdot%20X_i%2Bb%5E%2A%29%5Clt0),此时样本点分类错误,在超平面不属于自己类的一侧

核方法SVM

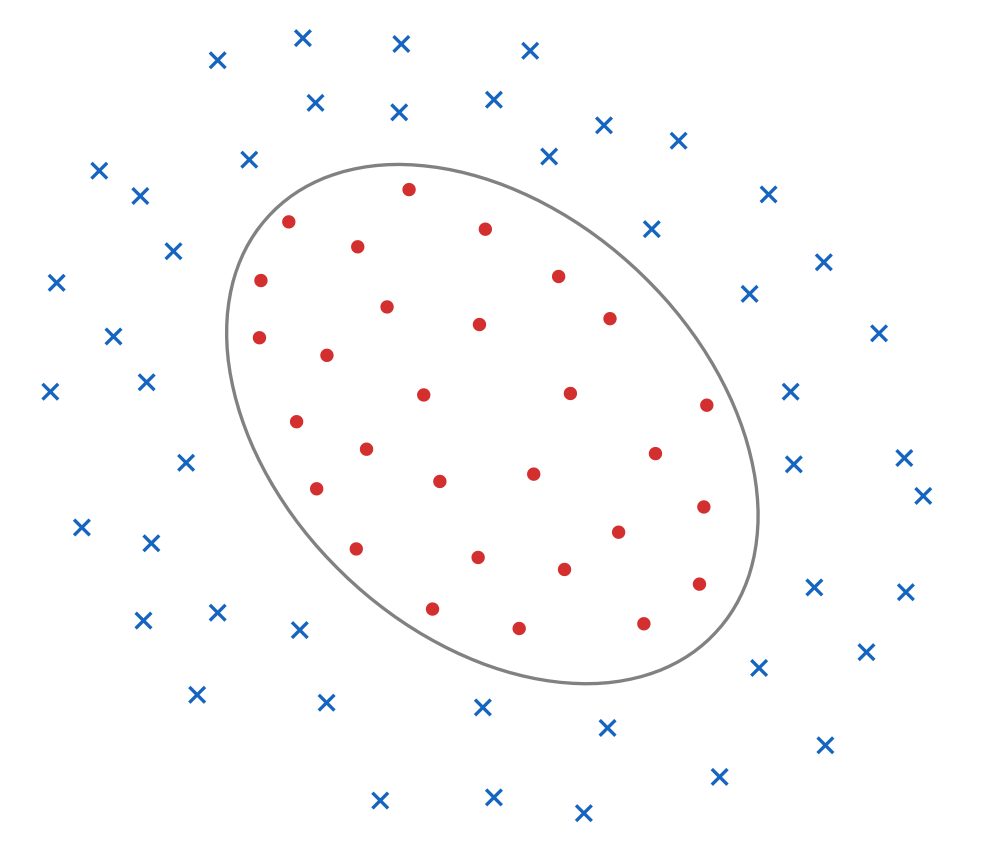

硬间隔对应的是数据完全线性可分的情况,软间隔对应的是数据不完全线性可分但是其分离超平面仍然是线性的。但是实际情况里,数据的分离超平面是线性的情况都很难满足,如图,下面这个二维数据集的分离面明显不是线性的,而是一个曲线

如果一个数据集可以用超曲面分开,就称为非线性可分问题。对于非线性可分问题,直接求解比较困难,所以我们理所应当希望转化为线性问题。解决这个问题的一个方法就是非线性变换,将非线性可分的问题映射到一个新空间使其线性可分,然后在新空间中运用线性的方法去学习模型。核方法就属于这种方法

核函数

按照上述说法,定义一个从输入空间到新空间

的函数映射,这个映射使数据在新空间变为线性问题

%3A%5Cmathcal%7BX%7D%5Cto%5Cmathcal%7BH%7D%0A#card=math&code=%5Cphi%28x%29%3A%5Cmathcal%7BX%7D%5Cto%5Cmathcal%7BH%7D%0A)

对应于支持向量机,样本变换后为

#card=math&code=%5Cphi%28X%29),那么针对新的空间运用SVM即可得最终对偶问题

%5Ccdot%5Cphi(Xj)%5D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calphaiy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin%5Calpha%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Calphai%5Calpha_jy_iy_j%5B%5Cphi%28X_i%29%5Ccdot%5Cphi%28X_j%29%5D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calpha_iy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A)

对这个问题进行求解即可。但是实际上会有两个问题

- 合适的

#card=math&code=%5Cphi%28x%29)不容易找到,而且因为数据往往在高维时更容易线性可分,所以映射以后的数据一般是高维的,甚至是无穷维的

- 找到

#card=math&code=%5Cphi%28x%29)后去求

%5Ccdot%5Cphi(X_j)#card=math&code=%5Cphi%28X_i%29%5Ccdot%5Cphi%28X_j%29)计算量非常大

考虑到在最终求解的对偶问题中,我们其实只关心变换后的新空间中样本的内积,那么实际上只需要找到如下一个函数使得对于所有的都满足

%3D%5Cphi%5ET(X_i)%5Cphi(X_j)%3D%5Cphi(X_i)%5Ccdot%5Cphi(X_j)%0A#card=math&code=K%28X_i%2CX_j%29%3D%5Cphi%5ET%28X_i%29%5Cphi%28X_j%29%3D%5Cphi%28X_i%29%5Ccdot%5Cphi%28X_j%29%0A)

这样一来就免去了求#card=math&code=%5Cphi%28x%29)以及新空间内积的过程,减少了计算量。我们称这样的函数

#card=math&code=K%28x%2Cy%29)为核函数,从这个角度来看核技巧在很多算法中都能够运用,只要算法关注的是样本的内积。引入了核函数的SVM就转化为如下优化问题

-%5Csum%7Bi%3D1%7D%5EN%5Calpha_i%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calphaiy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin%5Calpha%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Calphai%5Calpha_jy_iy_jK%28X_i%2CX_j%29-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calpha_iy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A)

同样满足KKT条件

%3DW%5E-%5Csum_%7Bi%3D1%7D%5EN%5Calpha_i%5Eyi%5Cphi(X_i)%3D0%5C%5C%0A%5Cnabla_bL(W%5E%2Cb%5E%2C%5Cxi%5E%2C%5Calpha%5E%2C%5Cbeta%5E*)%3D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E*y_i%3D0%5C%5C%0A%5Cnabla%7B%5Cxii%7DL(W%5E%2Cb%5E%2C%5Cxi%5E%2C%5Calpha%5E%2C%5Cbeta%5E)%3DC-%5Calpha_i%5E-%5Cbeta_i%5E%3D0%5C%5C%0A%5Calpha_i%5E%5B1-%5Cxi_i%5E-y_i(W%5E%5Ccdot%5Cphi(X_i)%2Bb%5E)%5D%3D0%5C%5C%0A%5Cbeta_i%5E%5Cxi_i%5E%3D0%5C%5C%0A1-%5Cxi_i%5E-y_i(W%5E%5Ccdot%5Cphi(X_i)%2Bb%5E)%5Cle0%5C%5C%0A%5Cxi_i%5E%5Cge0%5C%5C%0A%5Calpha_i%5E%5Cge0%5C%5C%0A%5Cbeta_i%5E*%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cnabla_WL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3DW%5E%2A-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E%2Ay_i%5Cphi%28X_i%29%3D0%5C%5C%0A%5Cnabla_bL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3D-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5E%2Ay_i%3D0%5C%5C%0A%5Cnabla%7B%5Cxi_i%7DL%28W%5E%2A%2Cb%5E%2A%2C%5Cxi%5E%2A%2C%5Calpha%5E%2A%2C%5Cbeta%5E%2A%29%3DC-%5Calpha_i%5E%2A-%5Cbeta_i%5E%2A%3D0%5C%5C%0A%5Calpha_i%5E%2A%5B1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%5Cphi%28X_i%29%2Bb%5E%2A%29%5D%3D0%5C%5C%0A%5Cbeta_i%5E%2A%5Cxi_i%5E%2A%3D0%5C%5C%0A1-%5Cxi_i%5E%2A-y_i%28W%5E%2A%5Ccdot%5Cphi%28X_i%29%2Bb%5E%2A%29%5Cle0%5C%5C%0A%5Cxi_i%5E%2A%5Cge0%5C%5C%0A%5Calpha_i%5E%2A%5Cge0%5C%5C%0A%5Cbeta_i%5E%2A%5Cge0%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Bgathered%7D%0A)

此时分离超平面可以写为核函数形式

%3D%5Csum%7Bi%3D1%7D%5EN%5Calpha_i%5Ey_iK(X_i%2CX)%2Bb%5E%0A#card=math&code=f%28X%29%3D%5Csum%7Bi%3D1%7D%5EN%5Calpha_i%5E%2Ay_iK%28X_i%2CX%29%2Bb%5E%2A%0A)

正定核函数

这里存在一个问题:我们如何判断找到的一个函数#card=math&code=K%28x%2Cy%29)能否作为一个核函数,或者说一个函数要满足什么条件才能作为核函数。根据Mercer定理,任何正定函数都可以作为核函数,所以下面讨论如何判断一个函数是否为正定核函数

定义

令为输入空间,

为希尔伯特空间。如果对于

,存在一个映射

%5Cin%5Cmathcal%7BH%7D#card=math&code=%5Cphi%28x%29%5Cin%5Cmathcal%7BH%7D),使得

%3D%5Cphi(x)%5Ccdot%5Cphi(y)%5Cin%5Cmathbb%7BR%7D#card=math&code=K%28x%2Cy%29%3D%5Cphi%28x%29%5Ccdot%5Cphi%28y%29%5Cin%5Cmathbb%7BR%7D),那么称这个

#card=math&code=K%28x%2Cy%29)为正定核函数

充要条件

从定义去判断一个函数是否为正定核是比较困难的,用如下充分必要条件更为简便

正定核的充要条件:对任意,函数

#card=math&code=K%28x%2Cy%29)满足

- 对称性:

#card=math&code=K%28x%2Cy%29)是对称函数,

%3DK(y%2Cx)#card=math&code=K%28x%2Cy%29%3DK%28y%2Cx%29)

- 正定性:对于任意

都有对应的Gram矩阵

%5D%7Bm%5Ctimes%20m%7D%0A#card=math&code=K%3D%5BK%28X_i%2CX_j%29%5D%7Bm%5Ctimes%20m%7D%0A)

是半正定矩阵

常用核函数

- 线性核函数

%3Dx%5ETy%0A#card=math&code=K%28x%2Cy%29%3Dx%5ETy%0A)

这个核函数其实对应的就是线性支持向量机

- 多项式核函数

%3D(%5Calpha%20x%5ETy%2B%5Cbeta)%5E%5Cgamma%0A#card=math&code=K%28x%2Cy%29%3D%28%5Calpha%20x%5ETy%2B%5Cbeta%29%5E%5Cgamma%0A)

其中参数需要自己调参定义

- 高斯核函数

又称为径向基(RBF)函数,是最主流的非线性核函数%3D%5Cexp%5Cleft(-%5Cfrac%7B%7C%7Cx-y%7C%7C%5E2%7D%7B2%5Csigma%5E2%7D%5Cright)%0A#card=math&code=K%28x%2Cy%29%3D%5Cexp%5Cleft%28-%5Cfrac%7B%7C%7Cx-y%7C%7C%5E2%7D%7B2%5Csigma%5E2%7D%5Cright%29%0A)

称为高斯核的带宽,需要自己调参定义

- 拉普拉斯核函数

常用核函数%3D%5Cexp%5Cleft(-%5Cfrac%7B%7C%7Cx-y%7C%7C%7D%7B%5Csigma%7D%5Cright)%0A#card=math&code=K%28x%2Cy%29%3D%5Cexp%5Cleft%28-%5Cfrac%7B%7C%7Cx-y%7C%7C%7D%7B%5Csigma%7D%5Cright%29%0A)

,需要自己调参定义

- Sigmoid核函数

常用核函数%3D%5Ctanh(%5Calpha%20x%5ETy%2B%5Cbeta)%0A#card=math&code=K%28x%2Cy%29%3D%5Ctanh%28%5Calpha%20x%5ETy%2B%5Cbeta%29%0A)

,需要自己调参定义

序列最小最优化算法

重新审视对偶优化问题

-%5Csum%7Bi%3D1%7D%5EN%5Calpha_i%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calphaiy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin%5Calpha%7B1%5Cover2%7D%5Csum%7Bi%3D1%7D%5EN%5Csum%7Bj%3D1%7D%5EN%5Calphai%5Calpha_jy_iy_jK%28X_i%2CX_j%29-%5Csum%7Bi%3D1%7D%5EN%5Calphai%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Csum%7Bi%3D1%7D%5EN%5Calpha_iy_i%3D0%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%2C%5Ccdots%2CN%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A)

这是一个凸二次规划优化问题,是可解且必有全局最优解的,求解的方法也非常多,但是当样本量非常大时,很多方法的求解效率十分低下,因此需要找一种更简便的算法来求解。其中,序列最小最优化算法(SMO)是常用的一种

基本思想

在上述问题中,一共有N个变量,和样本量一致,如果按普通方法求解,在样本量非常大时计算量很大。SMO是一种启发式算法,基本想法是:如果所有变量的解都满足此优化问题的KKT条件最优解就得到了,一次对所有变量进行优化很困难,那么选择一次对其中两个变量,固定其他的变量就变成了一个二次规划问题,对这个二次规划进行优化使选择的两个变量的解离最优解更接近。上述问题叫做SMO的子问题,然后对这个子问题一次一次迭代最终得到最优解

如何选取两个变量呢?思路也很简单,首先肯定选取违反KKT条件最严重的变量,然后注意到,在固定其他变量时需要优化的两个变量的关系其实是确定的,所以另一个变量根据约束条件可以自由选取

求解方法

为书写方便,设#card=math&code=K_%7Bij%7D%3DK%28X_i%2CX_j%29)

不失一般性,设选择要优化的两个变量为,其他变量都是固定的,那么子问题最优化问题可写为

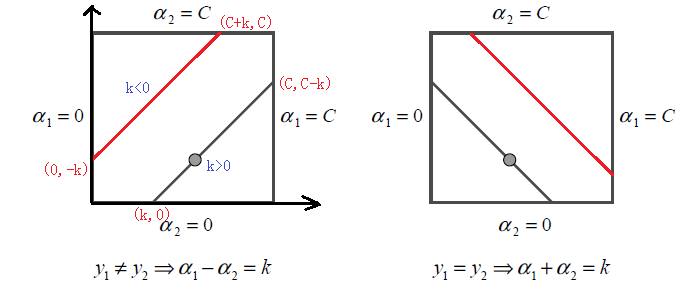

%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Calpha1y_1%2B%5Calpha_2y_2%3D-%5Csum%7B1%3D3%7D%5EN%5Calphaiy_i%3Dk%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0A%5Cmin%7B%5Calpha1%2C%5Calpha_2%7D%7B1%5Cover2%7D%5Calpha_1%5E2K%7B11%7D%2B%7B1%5Cover2%7D%5Calpha2%5E2K%7B22%7D%2B%5Calpha1%5Calpha_2y_1y_2K%7B12%7D%2B%5Calpha1y_1%5Csum%7Bi%3D3%7D%5EN%5Calphaiy_iK%7Bi1%7D%2B%5Calpha2y_2%5Csum%7Bi%3D3%7D%5EN%5Calphaiy_iK%7Bi2%7D-%28%5Calpha1%2B%5Calpha_2%29%5C%5C%0A%5Cbegin%7Baligned%7D%0As.t.%5Cquad%26%5Calpha_1y_1%2B%5Calpha_2y_2%3D-%5Csum%7B1%3D3%7D%5EN%5Calpha_iy_i%3Dk%5C%5C%0A%260%5Cle%5Calpha_i%5Cle%20C%2C%5Cquad%20i%3D1%2C2%0A%5Cend%7Baligned%7D%0A%5Cend%7Bgathered%7D%0A)

其中为一个常数,式中还省略了固定变量组成的常数项,因为常数对最小化没有影响。这个最优化问题转化为了只包含两个变量的二次规划问题,而且这两个变量存在线性关系,求解就变得方便快速

子问题求解

根据约束条件,首先限制在

的框内;其次由

得它们在一条斜率为

的直线上,于是得到下图关系

实际只能在一条线段上取,那么两个变量的优化问题转化为只有一个变量的优化问题。不妨设最终是

的优化问题,设在上一轮迭代中的解为

#card=math&code=%28%5Calpha_1%5E%7Bold%7D%2C%5Calpha_2%5E%7Bold%7D%29),本轮优化的解为

#card=math&code=%28%5Calpha_1%5E%7Bnew%7D%2C%5Calpha_2%5E%7Bnew%7D%29),那么显然有

从上图中知道最优值有一个取值范围即线段的两个端点,设为

,那么可得

在当前迭代可由得到当前的

,则分离超平面为

%3D%5Csum%7Bi%3D1%7D%5EN%5Calpha_iy_iK(X_i%2CX)%2Bb%0A#card=math&code=g%28X%29%3D%5Csum%7Bi%3D1%7D%5EN%5Calpha_iy_iK%28X_i%2CX%29%2Bb%0A)

其中的实际为

。那么可得

-%5Csum%7Bj%3D1%7D%5E2%5Calpha_jy_jK%7Bij%7D-b%2C%5Cquad%20i%3D1%2C2%0A#card=math&code=vi%3D%5Csum%7Bj%3D3%7D%5EN%5Calphajy_jK%7Bij%7D%3Dg%28Xi%29-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7Bij%7D-b%2C%5Cquad%20i%3D1%2C2%0A)

其中的实际为

于是目标函数可以表示为

%3D%7B1%5Cover2%7D%5Calpha1%5E2K%7B11%7D%2B%7B1%5Cover2%7D%5Calpha2%5E2K%7B22%7D%2B%5Calpha1%5Calpha_2y_1y_2K%7B12%7D%2B%5Calpha1y_1v_1%2B%5Calpha_2y_2v_2-(%5Calpha_1%2B%5Calpha_2)%0A#card=math&code=L%28%5Calpha_1%2C%5Calpha_2%29%3D%7B1%5Cover2%7D%5Calpha_1%5E2K%7B11%7D%2B%7B1%5Cover2%7D%5Calpha2%5E2K%7B22%7D%2B%5Calpha1%5Calpha_2y_1y_2K%7B12%7D%2B%5Calpha_1y_1v_1%2B%5Calpha_2y_2v_2-%28%5Calpha_1%2B%5Calpha_2%29%0A)

又根据,可写为只包含

的目标函数

%3D%7B1%5Cover2%7DK%7B11%7D(k-%5Calpha_2y_2)%5E2%2B%7B1%5Cover2%7DK%7B22%7D%5Calpha2%5E2%2BK%7B12%7Dy2(k-%5Calpha_2y_2)%5Calpha_2%2B(k-%5Calpha_2y_2)v_1%2B%5Calpha_2y_2v_2-(k-%5Calpha_2y_2)y_1-%5Calpha_2%0A#card=math&code=L%28%5Calpha_2%29%3D%7B1%5Cover2%7DK%7B11%7D%28k-%5Calpha2y_2%29%5E2%2B%7B1%5Cover2%7DK%7B22%7D%5Calpha2%5E2%2BK%7B12%7Dy_2%28k-%5Calpha_2y_2%29%5Calpha_2%2B%28k-%5Calpha_2y_2%29v_1%2B%5Calpha_2y_2v_2-%28k-%5Calpha_2y_2%29y_1-%5Calpha_2%0A)

对其求导且令之为零得

%2BK%7B22%7D%5Calpha_2%2BK%7B12%7Dy2(k-2%5Calpha_2y_2)-y_2v_1-y_2v_2%2By_1y_2-1%5C%5C%0A%26%3DK%7B11%7D%5Calpha2%2BK%7B22%7D%5Calpha2-2K%7B12%7D%5Calpha2%2BK%7B12%7Dy2k-K%7B11%7Dy2k%2By_1y_2%2By_2v_2-y_2v_1-1%5C%5C%0A%26%3D0%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0A%5Cfrac%7B%5Cpartial%20L%7D%7B%5Cpartial%5Calpha_2%7D%26%3D-y_2K%7B11%7D%28k-%5Calpha2y_2%29%2BK%7B22%7D%5Calpha2%2BK%7B12%7Dy2%28k-2%5Calpha_2y_2%29-y_2v_1-y_2v_2%2By_1y_2-1%5C%5C%0A%26%3DK%7B11%7D%5Calpha2%2BK%7B22%7D%5Calpha2-2K%7B12%7D%5Calpha2%2BK%7B12%7Dy2k-K%7B11%7Dy_2k%2By_1y_2%2By_2v_2-y_2v_1-1%5C%5C%0A%26%3D0%0A%5Cend%7Baligned%7D%0A)

%5Calpha2%26%3Dy_2(y_2-y_1%2BkK%7B11%7D-kK%7B12%7D%2Bv_1-v_2)%5C%5C%0A%26%3Dy_2%5Cleft%5By_2-y_1%2BkK%7B11%7D-kK%7B12%7D%2B%5Cleft(g(X_1)-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B1j%7D-b%5Cright)-%5Cleft(g(X2)-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B2j%7D-b%5Cright)%5Cright%5D%5C%5C%0A%26%3Dy2%5Cleft(%5Bg(X_1)-y_1%5D-%5Bg(X_2)-y_2%5D%2BkK%7B11%7D-kK%7B12%7D-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B1j%7D%2B%5Csum%7Bj%3D1%7D%5E2%5Calpha_jy_jK%7B2j%7D%5Cright)%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0A%5CRightarrow%28K%7B11%7D%2BK%7B22%7D-2K%7B12%7D%29%5Calpha_2%26%3Dy_2%28y_2-y_1%2BkK%7B11%7D-kK%7B12%7D%2Bv_1-v_2%29%5C%5C%0A%26%3Dy_2%5Cleft%5By_2-y_1%2BkK%7B11%7D-kK%7B12%7D%2B%5Cleft%28g%28X_1%29-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B1j%7D-b%5Cright%29-%5Cleft%28g%28X2%29-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B2j%7D-b%5Cright%29%5Cright%5D%5C%5C%0A%26%3Dy2%5Cleft%28%5Bg%28X_1%29-y_1%5D-%5Bg%28X_2%29-y_2%5D%2BkK%7B11%7D-kK%7B12%7D-%5Csum%7Bj%3D1%7D%5E2%5Calphajy_jK%7B1j%7D%2B%5Csum%7Bj%3D1%7D%5E2%5Calpha_jy_jK%7B2j%7D%5Cright%29%0A%5Cend%7Baligned%7D%0A)

将代入计算可得

%5Calpha2%3D(K%7B11%7D%2BK%7B22%7D-2K%7B12%7D)%5Calpha2%5E%7Bold%7D%2By_2(%5Bg(X_1)-y_1%5D-%5Bg(X_2)-y_2%5D)%0A#card=math&code=%28K%7B11%7D%2BK%7B22%7D-2K%7B12%7D%29%5Calpha2%3D%28K%7B11%7D%2BK%7B22%7D-2K%7B12%7D%29%5Calpha_2%5E%7Bold%7D%2By_2%28%5Bg%28X_1%29-y_1%5D-%5Bg%28X_2%29-y_2%5D%29%0A)

令

-yi%2C%5Cquad%20i%3D1%2C2%5C%5C%0A%5Ceta%3DK%7B11%7D%2BK%7B22%7D-2K%7B12%7D%0A%5Cend%7Bgathered%7D%0A#card=math&code=%5Cbegin%7Bgathered%7D%0AEi%3Dg%28X_i%29-y_i%2C%5Cquad%20i%3D1%2C2%5C%5C%0A%5Ceta%3DK%7B11%7D%2BK%7B22%7D-2K%7B12%7D%0A%5Cend%7Bgathered%7D%0A)

于是得出

%7D%7B%5Ceta%7D%0A#card=math&code=%5Chat%7B%5Calpha_2%7D%3D%5Calpha_2%5E%7Bold%7D%2B%5Cfrac%7By_2%28E_1-E_2%29%7D%7B%5Ceta%7D%0A)

但是是没有加完整约束条件的解,还要考虑

,因此最终解为

再根据求得

观察一下,可以发现其实就是上一轮计算出的分离超平面

#card=math&code=g%28X%29)对

的预测值与其真实分类

的差

根据计算结果,在每一轮迭代里面需要计算以下值

- 当前轮选择的

对应的

以及

- 根据上一轮的分离超平面

#card=math&code=g%28X%29)计算当前轮的

- 最终优化得到的

对应的分离超平面

#card=math&code=g%28X%29),用于下一轮计算

变量的选择方法

每一轮需要对选择的两个变量进行优化,如何选择合适的变量呢?

- 第一个变量的选择(外层循环)

根据违反KKT条件最严重的原则,先检验样本的KKT条件%5Cge1%5C%5C%0A0%5Clt%5Calpha_i%5Clt%20C%26%5CLeftrightarrow%20y_ig(X_i)%3D1%5C%5C%0A%5Calpha_i%3DC%26%5CLeftrightarrow%20y_ig(X_i)%5Cle1%5C%5C%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0A%5Calpha_i%3D0%26%5CLeftrightarrow%20y_ig%28X_i%29%5Cge1%5C%5C%0A0%5Clt%5Calpha_i%5Clt%20C%26%5CLeftrightarrow%20y_ig%28X_i%29%3D1%5C%5C%0A%5Calpha_i%3DC%26%5CLeftrightarrow%20y_ig%28X_i%29%5Cle1%5C%5C%0A%5Cend%7Baligned%7D%0A)

- 因为一般会设算法初始的

,所以首先遍历整个样本,不满足KKT条件的

可作为第一个变量

- 然后遍历

的样本点(称为non-bound样本),即检测在间隔边界上的支持向量点是否满足KKT条件,检测到不满足则选为第一个变量。一直重复这一步并优化直到所有non-bound样本都满足KKT条件

- 不断交替重复步骤1,2。直到所有样本都满足KKT条件算法终止

%26%5Cge1-%5Cvarepsilon%26%5CLeftrightarrow%26%26%20y_iE_i%26%5Cge-%5Cvarepsilon%5C%5C%0A0%5Clt%5Calpha_i%5Clt%20C%26%5CRightarrow%26%201-%5Cvarepsilon%5Cle%20y_ig(X_i)%26%5Cle1%2B%5Cvarepsilon%26%5CLeftrightarrow%26%26-%5Cvarepsilon%5Cle%20y_iE_i%26%5Cle%5Cvarepsilon%5C%5C%0A%5Calpha_i%3DC%26%5CRightarrow%26%20y_ig(X_i)%26%5Cle1%2B%5Cvarepsilon%26%5CLeftrightarrow%26%26y_iE_i%26%5Cle%5Cvarepsilon%5C%5C%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0A%5Calpha_i%3D0%26%5CRightarrow%26%20y_ig%28X_i%29%26%5Cge1-%5Cvarepsilon%26%5CLeftrightarrow%26%26%20y_iE_i%26%5Cge-%5Cvarepsilon%5C%5C%0A0%5Clt%5Calpha_i%5Clt%20C%26%5CRightarrow%26%201-%5Cvarepsilon%5Cle%20y_ig%28X_i%29%26%5Cle1%2B%5Cvarepsilon%26%5CLeftrightarrow%26%26-%5Cvarepsilon%5Cle%20y_iE_i%26%5Cle%5Cvarepsilon%5C%5C%0A%5Calpha_i%3DC%26%5CRightarrow%26%20y_ig%28X_i%29%26%5Cle1%2B%5Cvarepsilon%26%5CLeftrightarrow%26%26y_iE_i%26%5Cle%5Cvarepsilon%5C%5C%0A%5Cend%7Baligned%7D%0A)

%5C%26%5C%26(%5Calpha_i%5Cgt%200%5C%26%5C%26y_iE_i%5Cle%5Cvarepsilon)%0A#card=math&code=%28%5Calpha_i%5Clt%20C%5C%26%5C%26y_iE_i%5Cge-%5Cvarepsilon%29%5C%26%5C%26%28%5Calpha_i%5Cgt%200%5C%26%5C%26y_iE_i%5Cle%5Cvarepsilon%29%0A)

%7C%7C(%5Calpha_i%5Cgt%200%5C%26%5C%26y_iE_i%5Cgt%5Cvarepsilon)%0A#card=math&code=%28%5Calpha_i%5Clt%20C%5C%26%5C%26y_iE_i%5Clt-%5Cvarepsilon%29%7C%7C%28%5Calpha_i%5Cgt%200%5C%26%5C%26y_iE_i%5Cgt%5Cvarepsilon%29%0A)

#card=math&code=g%28X%29)

- 第二个样本的选择(内层循环)

在第一个样本确定之后,选择第二个样本的原则是让计算速度更快,即目标函数值下降的更快,或者说让有更大的变化。根据前面的迭代公式即选择

最大的样本点

在特殊的情况下会出现按以上方法选择的不能使目标函数值下降,那么采用以下启发式方法继续选择:遍历在间隔边界上即

的支持向量点,依次作为

试用,直到目标函数能够下降,若找不到合适的

,再遍历整数据集。若仍然找不到合适的

,则放弃找到的

,重新选择另外的

超平面的参数计算

每一轮都要计算对应的分离超平面,即。下面讨论如何更新

更新,一般合写为

,这样可以表示为核函数的形式

更新,需要分情况来看

- 若

根据KKT条件%3Dy1(%5Csum%7Bi%3D1%7D%5EN%5Calphaiy_iK(X_i%2CX_1)%2Bb)%3D1#card=math&code=y_1g%28X_1%29%3Dy_1%28%5Csum%7Bi%3D1%7D%5EN%5Calphaiy_iK%28X_i%2CX_1%29%2Bb%29%3D1)得

-y1%3D%5Csum%7Bj%3D1%7D%5EN%5Calphajy_jK%7B1j%7D%2Bb%5E%7Bold%7D-y1%5C%5C%0A%26%3D%5Calpha_1%5E%7Bold%7Dy_1K%7B11%7D%2B%5Calpha2%5E%7Bold%7Dy_2K%7B12%7D%2B%5Csum%7Bi%3D3%7D%5EN%5Calpha_i%5E%7Bold%7Dy_iK%7Bi1%7D%2Bb%5E%7Bold%7D-y1%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0AE_1%26%3Dg%28X_1%29-y_1%3D%5Csum%7Bj%3D1%7D%5EN%5Calphajy_jK%7B1j%7D%2Bb%5E%7Bold%7D-y1%5C%5C%0A%26%3D%5Calpha_1%5E%7Bold%7Dy_1K%7B11%7D%2B%5Calpha2%5E%7Bold%7Dy_2K%7B12%7D%2B%5Csum%7Bi%3D3%7D%5EN%5Calpha_i%5E%7Bold%7Dy_iK%7Bi1%7D%2Bb%5E%7Bold%7D-y1%0A%5Cend%7Baligned%7D%0A)

y_1K%7B11%7D%2B(%5Calpha2%5E%7Bold%7D-%5Calpha_2%5E%7Bnew%7D)y_2K%7B12%7D%2Bb%5E%7Bold%7D%0A#card=math&code=b1%5E%7Bnew%7D%3D-E_1%2B%28%5Calpha_1%5E%7Bold%7D-%5Calpha_1%5E%7Bnew%7D%29y_1K%7B11%7D%2B%28%5Calpha2%5E%7Bold%7D-%5Calpha_2%5E%7Bnew%7D%29y_2K%7B12%7D%2Bb%5E%7Bold%7D%0A)

- 若

,同理可得

y2K%7B22%7D%2B(%5Calpha1%5E%7Bold%7D-%5Calpha_1%5E%7Bnew%7D)y_1K%7B12%7D%2Bb%5E%7Bold%7D%0A#card=math&code=b2%5E%7Bnew%7D%3D-E_2%2B%28%5Calpha_2%5E%7Bold%7D-%5Calpha_2%5E%7Bnew%7D%29y_2K%7B22%7D%2B%28%5Calpha1%5E%7Bold%7D-%5Calpha_1%5E%7Bnew%7D%29y_1K%7B12%7D%2Bb%5E%7Bold%7D%0A)

遵循如下规则:

- 若

且

,上述

,直接作为

- 若

,

,则

- 若

,

,则

- 若

且

且

,

- 若

,直接跳过这个子问题选取新的两个变量组