GPU training

Method 1: call .cuda() on three kinds of objects

- The model

- The loss function

- The data (imgs, target = data)

    # Excerpt from the training script; Mymodule and test_dataloader are defined elsewhere.
    import torch
    from torch import nn

    mymodule = Mymodule()
    # ======================== cuda ========================
    if torch.cuda.is_available():
        mymodule = mymodule.cuda()

    # 3. Loss function and optimizer
    loss_fn = nn.CrossEntropyLoss()
    # ======================== cuda ========================
    if torch.cuda.is_available():
        loss_fn = loss_fn.cuda()

    with torch.no_grad():
        for data in test_dataloader:
            imgs, target = data
            # ======================== cuda ========================
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                target = target.cuda()

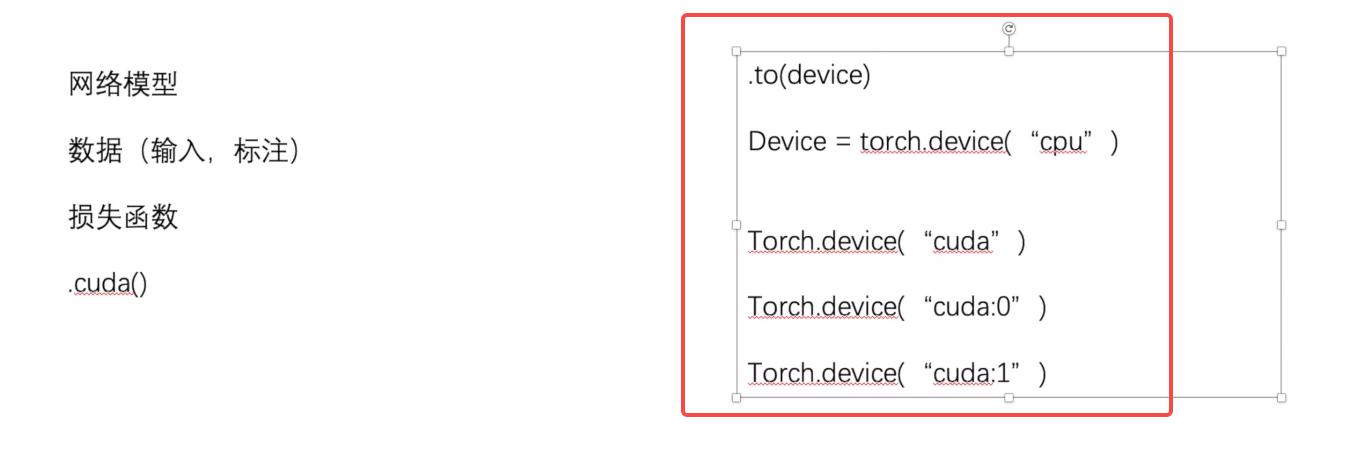

Method 2: to(device)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Define the training device
    # device = torch.device("cpu")
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print("Device:", device)

    mymodule = Mymodule()
    # ======================== cuda ========================
    # if torch.cuda.is_available():
    #     mymodule = mymodule.cuda()
    mymodule = mymodule.to(device)  # reassignment is optional for modules; mymodule.to(device) alone also works

    # 3. Loss function and optimizer
    loss_fn = nn.CrossEntropyLoss()
    # ======================== cuda ========================
    # if torch.cuda.is_available():
    #     loss_fn = loss_fn.cuda()
    loss_fn = loss_fn.to(device)  # reassignment is optional here too; loss_fn.to(device) alone also works

    # Training step begins
    mymodule.train()  # optional; only affects layers such as Dropout and BatchNorm
    for data in train_dataloader:
        imgs, target = data
        # ======================== cuda ========================
        # if torch.cuda.is_available():
        #     imgs = imgs.cuda()
        #     target = target.cuda()
        imgs = imgs.to(device)      # tensors must be reassigned
        target = target.to(device)  # tensors must be reassigned
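To make the to(device) pattern above concrete, here is a minimal self-contained sketch that runs on either CPU or GPU. TinyNet, the random tensors, the batch size, and the SGD optimizer are placeholders assumed for this example; they stand in for Mymodule and the real dataloader used in these notes.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


class TinyNet(nn.Module):
    """Hypothetical stand-in for Mymodule: a single linear classifier."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(8, 3)

    def forward(self, x):
        return self.fc(x)


def train_one_epoch(device):
    # Synthetic data (assumption): 16 samples, 8 features, 3 classes.
    dataset = TensorDataset(torch.randn(16, 8), torch.randint(0, 3, (16,)))
    loader = DataLoader(dataset, batch_size=4)

    model = TinyNet().to(device)                # modules are moved in place
    loss_fn = nn.CrossEntropyLoss().to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

    model.train()
    last_loss = None
    for imgs, target in loader:
        # Tensor.to() does not move the tensor in place, so reassign the result.
        imgs = imgs.to(device)
        target = target.to(device)

        loss = loss_fn(model(imgs), target)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        last_loss = loss.item()
    return model, last_loss


if __name__ == "__main__":
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model, last_loss = train_one_epoch(device)
    print("device:", device, "last loss:", last_loss)
```

Because the device is chosen once and passed around, the same script runs unchanged on a machine without a GPU, which is the main advantage of this method over scattering torch.cuda.is_available() checks.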

Configuring multiple GPUs

    import os

    # Choose which GPUs are visible to this process
    # os.environ["CUDA_VISIBLE_DEVICES"] = "5,6,7"
    # os.environ["CUDA_VISIBLE_DEVICES"] = "0"
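CUDA_VISIBLE_DEVICES only takes effect if it is set before CUDA is initialized in the process, and the visible GPUs are then renumbered from 0. The sketch below wraps the assignment in a small helper; select_gpus is a hypothetical name introduced for illustration, not part of PyTorch.

```python
import os


def select_gpus(gpu_ids):
    """Restrict this process to the given physical GPU ids (hypothetical helper)."""
    value = ",".join(str(i) for i in gpu_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


# Call this before the first CUDA call; doing it before `import torch` is safest.
select_gpus([5, 6, 7])

# With this setting, physical GPUs 5, 6, 7 appear in the process as
# cuda:0, cuda:1, cuda:2.
print(os.environ["CUDA_VISIBLE_DEVICES"])
```

The same effect can be had without touching the code by setting the variable in the shell when launching the script, for example CUDA_VISIBLE_DEVICES=5,6,7 python train.py.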

torch.distributed.launch (run from a shell script)

    #!/usr/bin/env bash

    CONFIG=$1
    GPUS=$2
    PORT=${PORT:-29500}

    PYTHONPATH="$(dirname $0)/..":$PYTHONPATH \
    python -m torch.distributed.launch --nproc_per_node=$GPUS --master_port=$PORT \
        $(dirname "$0")/train.py $CONFIG --launcher pytorch ${@:3}

    # Basic form of the torch.distributed.launch command:
    #   python -m torch.distributed.launch main.py

https://zhuanlan.zhihu.com/p/86441879

python -m torch.distributed.launch --nproc_per_node=4 train.py --a b --c d

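- python -m torch.distributed.launch runs the launch.py file in torch.distributed, which starts the distributed training processes.

- -m tells Python to load the following name, torch.distributed.launch, as a module and run it.

- --nproc_per_node is usually set to the number of GPUs on the machine.

- train.py is the actual training script; everything after it is passed to that script as its arguments.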
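On the receiving side, train.py has to accept the local rank that the launcher hands to each process. The sketch below assumes the launcher appends a --local_rank argument (spelled --local-rank in some PyTorch versions) and falls back to the LOCAL_RANK environment variable when it is absent; the --a and --c options are placeholders matching the example command above, and parse_args is a hypothetical helper.

```python
import argparse
import os


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="hypothetical train.py arguments")
    # The launcher appends the local rank; accept both spellings because the
    # flag name differs between PyTorch versions (assumption for this sketch).
    parser.add_argument(
        "--local_rank", "--local-rank",
        dest="local_rank",
        type=int,
        default=int(os.environ.get("LOCAL_RANK", 0)),
    )
    # Placeholder user arguments matching `--a b --c d` in the command above.
    parser.add_argument("--a", type=str, default=None)
    parser.add_argument("--c", type=str, default=None)
    return parser.parse_args(argv)


if __name__ == "__main__":
    args = parse_args()
    print(f"local_rank={args.local_rank} a={args.a} c={args.c}")
```

Each process would typically use args.local_rank to pick its own GPU, for example torch.device("cuda", args.local_rank), so that the processes started by --nproc_per_node=4 each train on a different card.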