视频:https://www.bilibili.com/video/BV1Y7411d7Ys?p=6

博客:

视频中截图

说明:

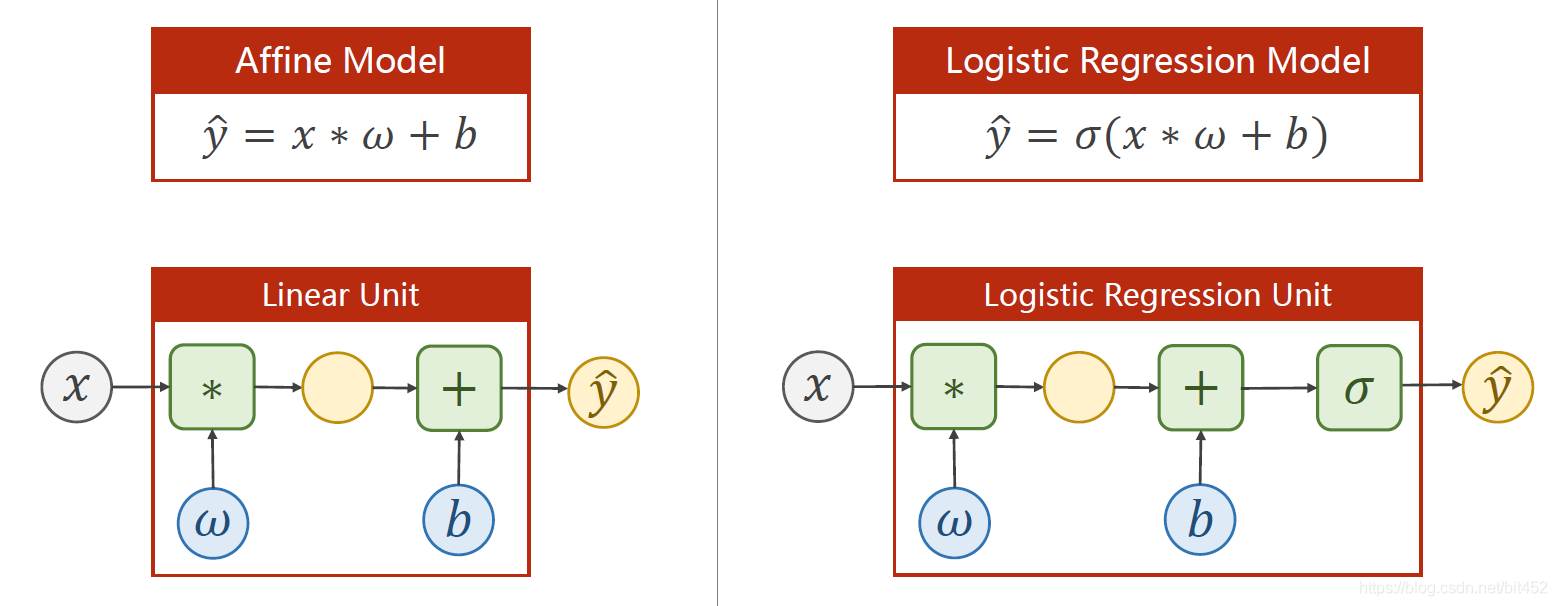

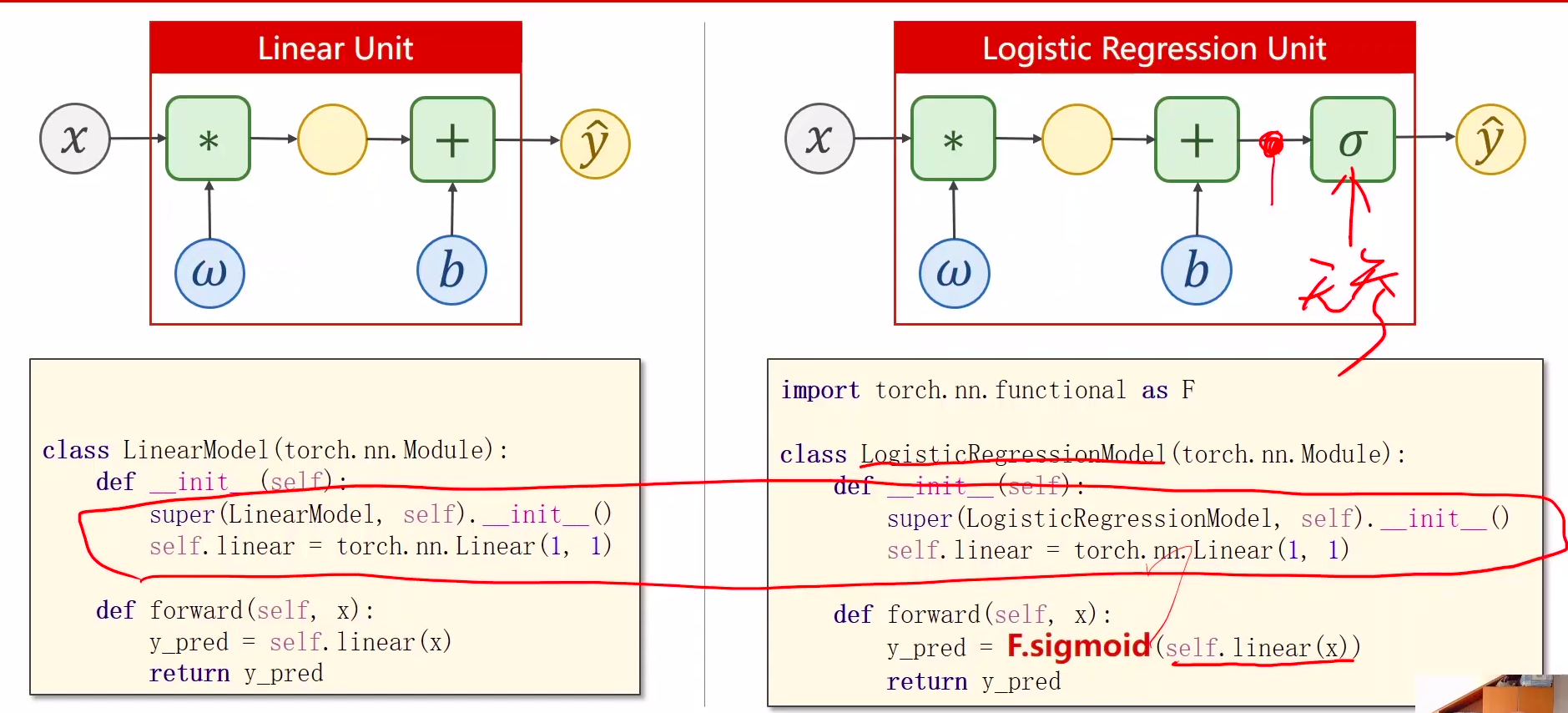

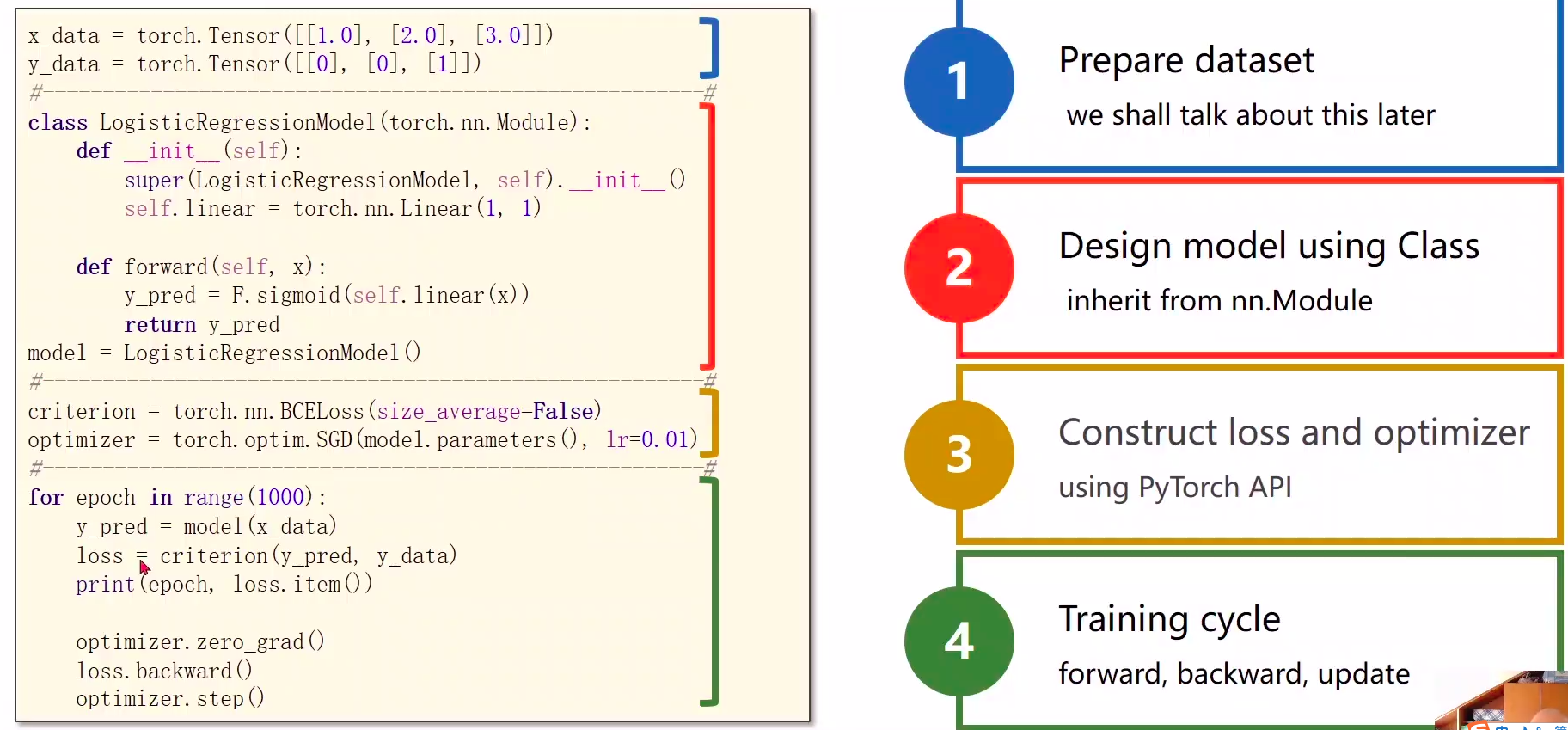

1、 逻辑斯蒂回归和线性模型的明显区别是在线性模型的后面,添加了激活函数(非线性变换)

2、

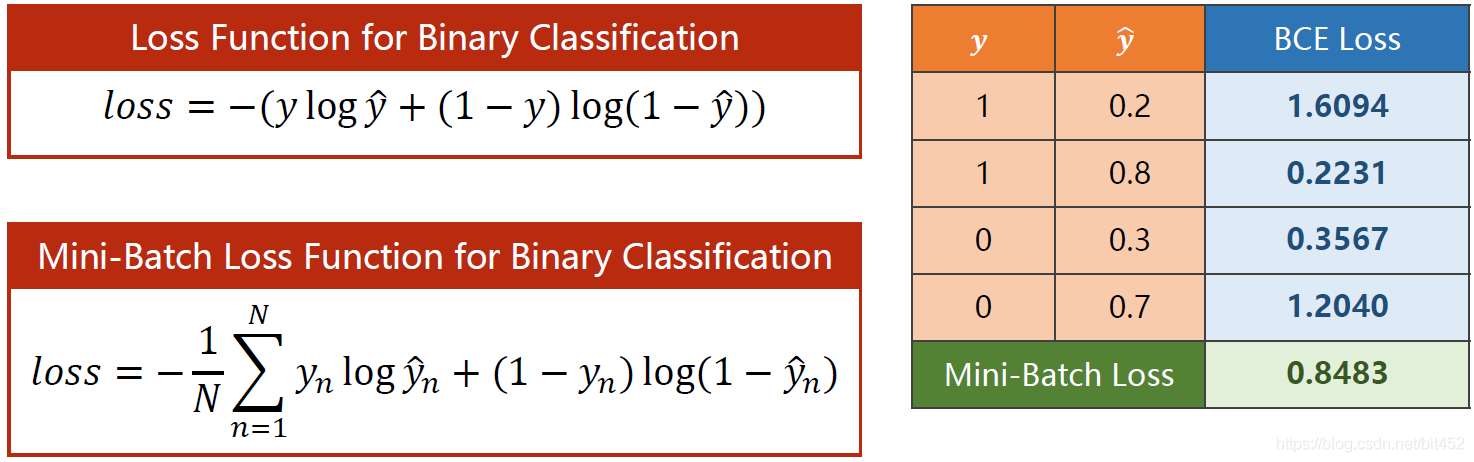

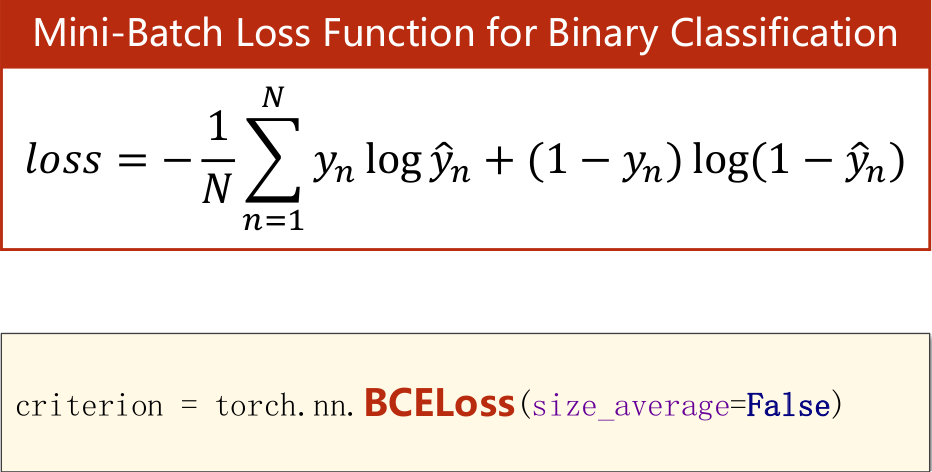

- 线性loss: 比较两个数值的差异

- Mini-Batch Loss:比较分布的差异:KL散度,cross-entropy交叉熵

说明:预测与标签越接近,BCE损失越小。

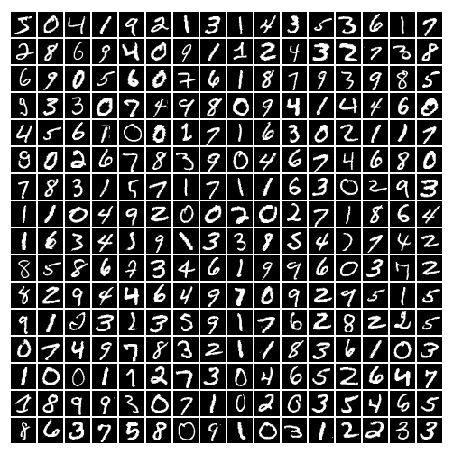

Dataset数据集

MNIST

The database of handwritten digits

- Training set: 60,000 examples,

- Test set: 10,000 examples.

- Classes: 10

import torchvisiontrain_set = torchvision.datasets.MNIST(root='../dataset/mnist', train=True, download=True)test_set = torchvision.datasets.MNIST(root='../dataset/mnist', train=False, download=True)

CIFAR-10

32x32小图片

- Training set: 50,000 examples,

- Test set: 10,000 examples.

- Classes: 10

import torchvision train_set = torchvision.datasets.CIFAR10(...) test_set = torchvision.datasets.CIFAR10(...)

代码说明:

1、视频中代码F.sigmoid(self.linear(x))会引发warning,此处更改为torch.sigmoid(self.linear(x))

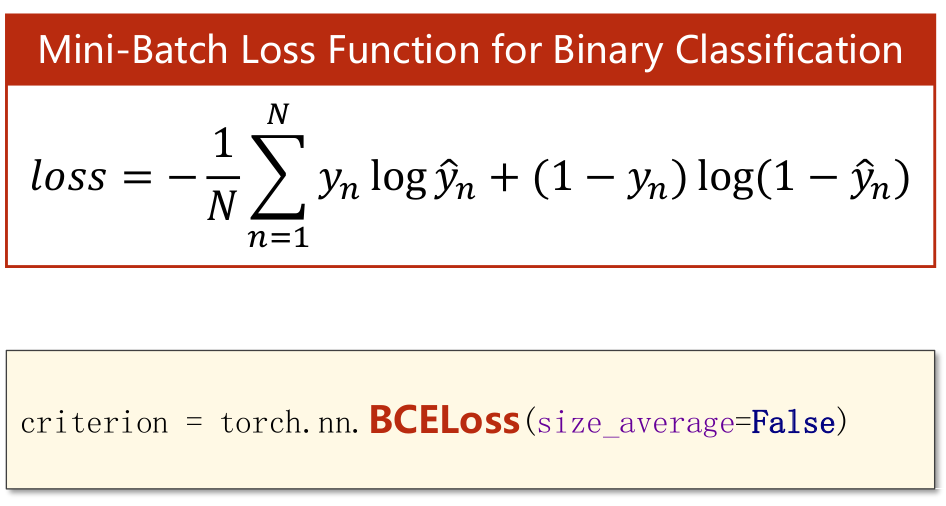

2、BCELoss - Binary CrossEntropyLoss

BCELoss 是CrossEntropyLoss的一个特例,只用于二分类问题,而CrossEntropyLoss可以用于二分类,也可以用于多分类。

如果是二分类问题,建议BCELoss

3、损失函数(BCELoss) BCE和CE交叉熵损失函数的区别

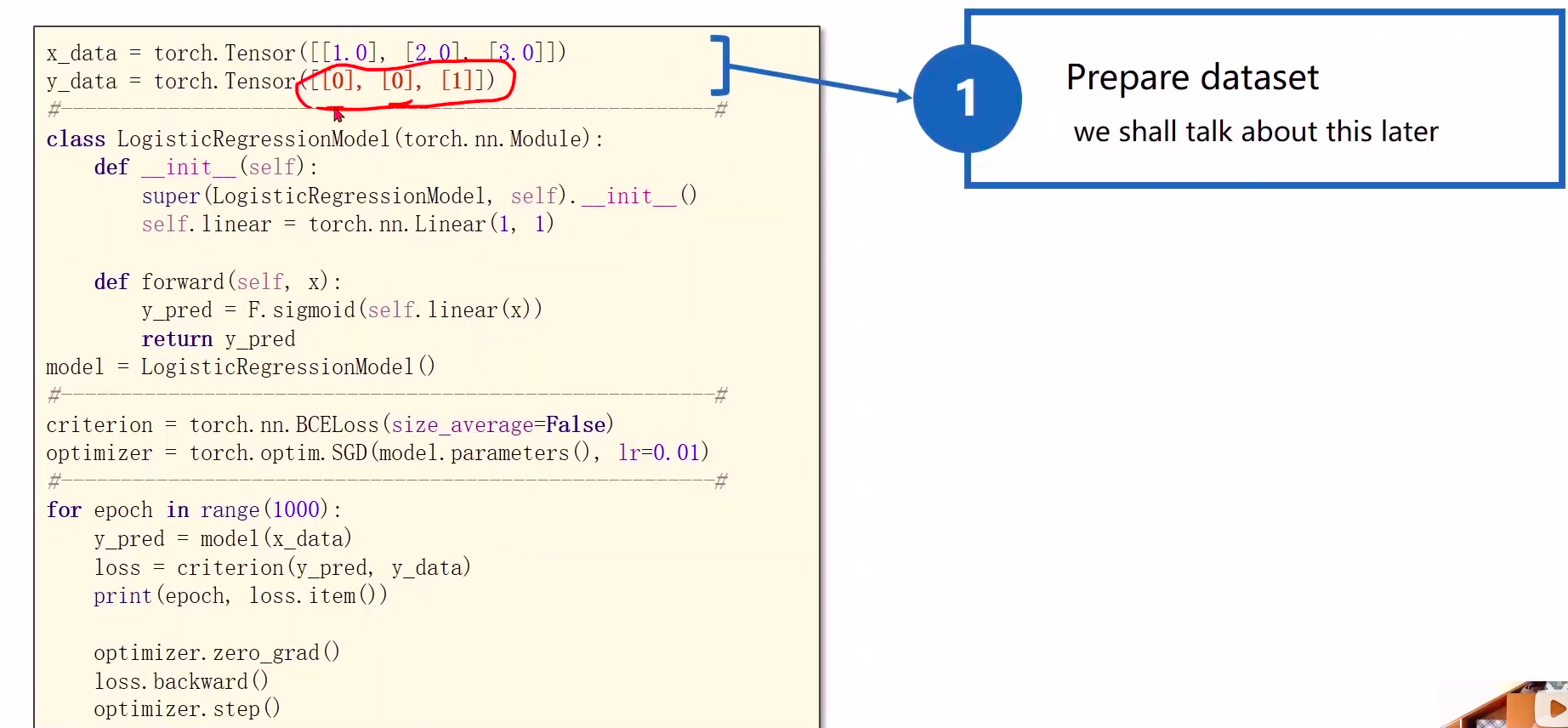

数据准备

原来:y_data = torch.Tensor([[2.0], [4.0], [6.0]])

现在:y_data = torch.Tensor([[0], [0], [1]]) 分类使用

逻辑线性单元Logistic Regression Unit

BCELoss交叉熵

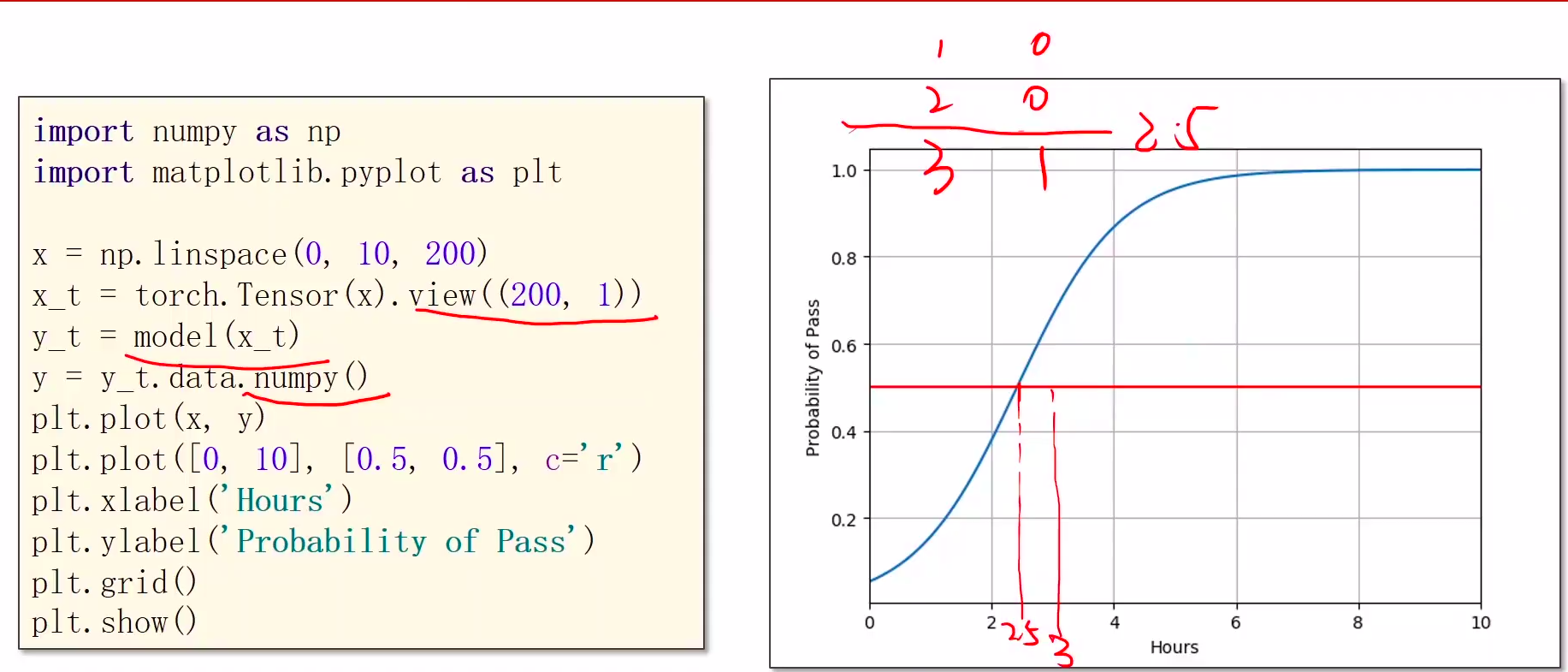

可视化

# 可视化

import numpy as np

import matplotlib.pyplot as plt

x = np.linspace(0, 10, 200)

x_t = torch.Tensor(x).view((200, 1))

y_t = model(x_t) # 使用训练好的模型

y = y_t.data.numpy()

plt.plot(x, y)

plt.plot([0, 10], [0.5, 0.5], c='r')

plt.xlabel('Hours')

plt.ylabel('Probability of Pass')

plt.grid()

plt.show()

代码

'''

Description: 逻辑回归

视频:https://www.bilibili.com/video/BV1Y7411d7Ys?p=6

博客: https://blog.csdn.net/bit452/article/details/109680909

Author: HCQ

Company(School): UCAS

Email: 1756260160@qq.com

Date: 2020-12-05 16:53:13

LastEditTime: 2020-12-05 17:12:56

FilePath: /pytorch/PyTorch深度学习实践/06逻辑回归.py

'''

import torch

# import torch.nn.functional as F

# 1 prepare dataset

x_data = torch.Tensor([[1.0], [2.0], [3.0]])

y_data = torch.Tensor([[0], [0], [1]]) # =============================[0], [0], [1]]=========================

# 2 design model using class

class LogisticRegressionModel(torch.nn.Module):

def __init__(self):

super(LogisticRegressionModel, self).__init__()

self.linear = torch.nn.Linear(1,1)

def forward(self, x):

# y_pred = F.sigmoid(self.linear(x))

y_pred = torch.sigmoid(self.linear(x)) # ========================sigmoid=============================

return y_pred

model = LogisticRegressionModel()

# 3 construct loss and optimizer

# 默认情况下,loss会基于element平均,如果size_average=False的话,loss会被累加。

criterion = torch.nn.BCELoss(size_average = False) # ==================BCELoss===========================

optimizer = torch.optim.SGD(model.parameters(), lr = 0.01)

# 4 training cycle forward, backward, update

for epoch in range(1000):

y_pred = model(x_data)

loss = criterion(y_pred, y_data)

print(epoch, loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

print('w = ', model.linear.weight.item())

print('b = ', model.linear.bias.item())

x_test = torch.Tensor([[4.0]])

y_test = model(x_test)

print('y_pred = ', y_test.data)

# 可视化

import numpy as np

import matplotlib.pyplot as plt

x = np.linspace(0, 10, 200)

x_t = torch.Tensor(x).view((200, 1))

y_t = model(x_t) # 使用训练好的模型

y = y_t.data.numpy()

plt.plot(x, y)

plt.plot([0, 10], [0.5, 0.5], c='r')

plt.xlabel('Hours')

plt.ylabel('Probability of Pass')

plt.grid()

plt.show()