Basic Information

0. Notes

1. Basic Concepts of Convolution

Convolution kernel

Cross-correlation and convolution

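The computation places the kernel over a window of the input, multiplies the overlapping elements pairwise, sums the products, and writes that sum into the corresponding position of the output. The kernel then slides to the next window.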

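Put simply, the difference between cross-correlation and convolution is that convolution first flips the kernel vertically and horizontally. For training a neural network this makes no real difference: the kernel is learned from data, so a network that uses cross-correlation simply learns the flipped version of the kernel that a convolution-based network would learn.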
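A minimal sketch of both operations in PyTorch, assuming plain 2D tensors with no padding and stride 1. `corr2d` is a straightforward loop implementation of cross-correlation; `conv2d_flipped` is a hypothetical helper that performs a true convolution by flipping the kernel first. The input and kernel values are made up for illustration.

```python
import torch

def corr2d(X, K):
    """2D cross-correlation: slide K over X, multiply pairwise, and sum."""
    h, w = K.shape
    Y = torch.zeros(X.shape[0] - h + 1, X.shape[1] - w + 1)
    for i in range(Y.shape[0]):
        for j in range(Y.shape[1]):
            Y[i, j] = (X[i:i + h, j:j + w] * K).sum()
    return Y

def conv2d_flipped(X, K):
    """True convolution: flip K vertically and horizontally, then cross-correlate."""
    return corr2d(X, torch.flip(K, dims=(0, 1)))

X = torch.tensor([[0., 1., 2.], [3., 4., 5.], [6., 7., 8.]])
K = torch.tensor([[0., 1.], [2., 3.]])
print(corr2d(X, K))          # values [[19, 25], [37, 43]]
print(conv2d_flipped(X, K))  # values [[5, 11], [23, 29]]

# Convolving with a flipped kernel reproduces cross-correlation with the original one
print(torch.equal(conv2d_flipped(X, torch.flip(K, dims=(0, 1))), corr2d(X, K)))  # True
```

The last line is the point of the paragraph above: whichever operation a layer uses, training can reach the same outputs by learning the kernel in the other orientation.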

3. Pooling

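Pooling represents each block (window) of data by a single value. Its main purpose is to reduce the convolutional layer's excessive sensitivity to the exact position of features; by shrinking the feature maps it also cuts computation and can help against overfitting.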

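The pooling layers covered so far are max pooling and average pooling. As the names suggest, they output the maximum or the mean of each window.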
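A small sketch of both pooling modes, assuming a 2D input, stride 1, and no padding (`pool2d` is an illustrative helper, not a library function). The last line checks the max-pooling result against PyTorch's built-in `F.max_pool2d`.

```python
import torch
import torch.nn.functional as F

def pool2d(X, pool_size, mode='max'):
    """Pool a 2D tensor with a window of pool_size, stride 1, no padding."""
    p_h, p_w = pool_size
    Y = torch.zeros(X.shape[0] - p_h + 1, X.shape[1] - p_w + 1)
    for i in range(Y.shape[0]):
        for j in range(Y.shape[1]):
            window = X[i:i + p_h, j:j + p_w]
            Y[i, j] = window.max() if mode == 'max' else window.mean()
    return Y

X = torch.tensor([[0., 1., 2.], [3., 4., 5.], [6., 7., 8.]])
print(pool2d(X, (2, 2)))         # max:  [[4, 5], [7, 8]]
print(pool2d(X, (2, 2), 'avg'))  # mean: [[2, 3], [5, 6]]

# Built-in equivalent (expects a (batch, channels, H, W) tensor)
builtin = F.max_pool2d(X.view(1, 1, 3, 3), kernel_size=2, stride=1).view(2, 2)
print(torch.equal(builtin, pool2d(X, (2, 2))))  # True
```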

4. Convolutional networks

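A convolutional network is usually built from repeated combinations of convolutional layers, activation functions, and pooling layers.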

At the end of the network, fully connected layers can map the features to the output dimension.

Connecting the convolutional layers to the fully connected layers:

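The output of the convolutional block has shape (batch size, channels, height, width). When this output is passed to the fully connected block, the fully connected block flattens each sample in the mini-batch. In other words, the input to the fully connected layers becomes two-dimensional: the first dimension indexes the samples in the mini-batch, and the second is the flattened vector representation of each sample, whose length is the product of channels, height, and width. In LeNet, the fully connected block has 3 fully connected layers with 120, 84, and 10 outputs respectively, where 10 is the number of output classes.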

See the code below for a concrete example: `conv` is the convolutional block and the final `fc` is the fully connected block.

```python
import torch
from torch import nn

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 6, 5),  # in_channels, out_channels, kernel_size
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2),  # kernel_size, stride
            nn.Conv2d(6, 16, 5),
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2)
        )
        self.fc = nn.Sequential(
            nn.Linear(16*4*4, 120),
            nn.Sigmoid(),
            nn.Linear(120, 84),
            nn.Sigmoid(),
            nn.Linear(84, 10)
        )

    def forward(self, img):
        feature = self.conv(img)
        # Flatten (batch, 16, 4, 4) into (batch, 256) before the fully connected block
        output = self.fc(feature.view(img.shape[0], -1))
        return output
```
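To see where `16*4*4` comes from, here is a shape trace through the same convolutional stack as `LeNet.conv`, rebuilt so the snippet runs on its own. It assumes single-channel 28×28 inputs (the Fashion-MNIST image size) and a made-up batch of 2 random images.

```python
import torch
from torch import nn

# Same layers as LeNet.conv
conv = nn.Sequential(
    nn.Conv2d(1, 6, 5), nn.Sigmoid(), nn.MaxPool2d(2, 2),
    nn.Conv2d(6, 16, 5), nn.Sigmoid(), nn.MaxPool2d(2, 2),
)

X = torch.rand(2, 1, 28, 28)  # assumed: batch of 2 single-channel 28x28 images
for layer in conv:
    X = layer(X)
    print(type(layer).__name__, tuple(X.shape))
# Conv2d (2, 6, 24, 24) -> Sigmoid -> MaxPool2d (2, 6, 12, 12)
# Conv2d (2, 16, 8, 8)  -> Sigmoid -> MaxPool2d (2, 16, 4, 4)

flat = X.view(X.shape[0], -1)  # flatten each sample: 16 * 4 * 4 = 256 features
print(tuple(flat.shape))       # (2, 256)
```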

5. Understanding convolution and the 1×1 kernel

A convolutional layer made of 1×1 kernels can be viewed as a variant of a fully connected layer.

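The reasoning is a bit convoluted, but it does hold: at every pixel position, a 1×1 convolution computes a linear combination of the input channels using the same weights. That is exactly a fully connected layer applied independently to each pixel, with the pixels treated as samples and the channels treated as features.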
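A quick numerical check of that claim, as a sketch with made-up sizes: copy the weights of a 1×1 `nn.Conv2d` into an `nn.Linear`, apply the linear layer to every pixel's channel vector, and compare.

```python
import torch
from torch import nn

torch.manual_seed(0)
c_in, c_out, h, w = 3, 2, 4, 4
X = torch.rand(1, c_in, h, w)

conv1x1 = nn.Conv2d(c_in, c_out, kernel_size=1)
fc = nn.Linear(c_in, c_out)
# Share the weights: conv weight is (c_out, c_in, 1, 1), linear weight is (c_out, c_in)
with torch.no_grad():
    fc.weight.copy_(conv1x1.weight.view(c_out, c_in))
    fc.bias.copy_(conv1x1.bias)

Y_conv = conv1x1(X)  # (1, c_out, h, w)
# Treat each pixel as a sample and the channels as its features
pixels = X.permute(0, 2, 3, 1).reshape(-1, c_in)  # (h*w, c_in)
Y_fc = fc(pixels).reshape(1, h, w, c_out).permute(0, 3, 1, 2)
print(torch.allclose(Y_conv, Y_fc, atol=1e-6))  # True
```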

In general, convolution can be understood as using kernels to extract features from the input data.

6. Convolution hyperparameters

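The hyperparameters include the kernel size, the padding applied to the input, the numbers of input and output channels, and the stride.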

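I don't fully understand these yet, but an intuitive reading of what I looked up is: a larger kernel extracts information from a larger region of the input; a small stride extracts overlapping regions repeatedly, which increases computation, while too large a stride can skip parts of the data.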
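These hyperparameters fix the output size. Along one spatial dimension, with input size n, kernel size k, padding p on each side, and stride s (dilation 1, the `nn.Conv2d` default), the output size is floor((n + 2p − k) / s) + 1. A sketch that checks the formula against `nn.Conv2d` on a made-up 8×8 input:

```python
import torch
from torch import nn

def conv_out_size(n, k, p=0, s=1):
    """Output size along one spatial dimension; p is the padding on EACH side."""
    return (n + 2 * p - k) // s + 1

X = torch.rand(1, 1, 8, 8)
for k, p, s in [(3, 0, 1), (3, 1, 1), (5, 2, 2), (3, 0, 3)]:
    conv = nn.Conv2d(1, 1, kernel_size=k, padding=p, stride=s)
    actual = conv(X).shape[-1]
    print(f"k={k} p={p} s={s}: formula={conv_out_size(8, k, p, s)}, actual={actual}")
# k=3 p=0 s=1 -> 6;  k=3 p=1 s=1 -> 8 (size preserved);  k=5 p=2 s=2 -> 4;  k=3 p=0 s=3 -> 2
```

The last case illustrates the "stride too large" concern: with k=3 and s=3 on 8 columns, the windows start at columns 0 and 3 only, so the last two columns never enter any window.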

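The 2D array output by a 2D convolutional layer can be viewed as a representation of the input at some level along the spatial dimensions (width and height), and is also called a feature map. All possible input regions (possibly larger than the actual size of the input) that affect the forward computation of an element x are called the receptive field of x. Taking Figure 5.1 as an example, the four shaded elements of the input form the receptive field of the shaded element of the output. Denote the 2×2 output in Figure 5.1 by Y, and consider a deeper convolutional neural network: cross-correlate Y with another 2×2 kernel array to produce a single element z. Then the receptive field of z on Y contains all four elements of Y, and its receptive field on the input contains all 9 input elements. So a deeper convolutional network can widen the receptive field of a single feature-map element and thereby capture larger features in the input. We often use the word "element" for a member of an array or matrix; in neural-network terminology these elements are also called "units". When the meaning is clear, the book does not strictly distinguish between the two terms.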
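The receptive-field argument can be checked with autograd, as a sketch with random made-up kernels: chain two 2×2 cross-correlations on a 3×3 input, backpropagate from the single output z, and count how many input elements receive a nonzero gradient. The kernels are shifted by 0.1 so no weight is exactly zero.

```python
import torch

def corr2d(X, K):
    """Plain 2D cross-correlation, no padding, stride 1 (built with stack to stay autograd-friendly)."""
    h, w = K.shape
    rows = []
    for i in range(X.shape[0] - h + 1):
        rows.append(torch.stack([(X[i:i + h, j:j + w] * K).sum()
                                 for j in range(X.shape[1] - w + 1)]))
    return torch.stack(rows)

X = torch.rand(3, 3, requires_grad=True)  # 3x3 input
K1 = torch.rand(2, 2) + 0.1               # first 2x2 kernel  -> Y is 2x2
K2 = torch.rand(2, 2) + 0.1               # second 2x2 kernel -> z is 1x1
Y = corr2d(X, K1)
z = corr2d(Y, K2)
print(Y.shape, z.shape)  # torch.Size([2, 2]) torch.Size([1, 1])

z.sum().backward()
print((X.grad != 0).sum().item())  # 9: z's receptive field is the whole 3x3 input
```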

Convolutional Networks

1. Le_Net

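LeNet combines convolutional layers, sigmoid activations, and max-pooling layers into its convolutional block, then builds the fully connected block from linear layers and sigmoid activations.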

```python
import torch
from torch import nn

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 6, 5),  # in_channels, out_channels, kernel_size
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2),  # kernel_size, stride
            nn.Conv2d(6, 16, 5),
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2)
        )
        self.fc = nn.Sequential(
            nn.Linear(16*4*4, 120),
            nn.Sigmoid(),
            nn.Linear(120, 84),
            nn.Sigmoid(),
            nn.Linear(84, 10)
        )

    def forward(self, img):
        feature = self.conv(img)
        output = self.fc(feature.view(img.shape[0], -1))
        return output

# Output of print(LeNet()):
"""
LeNet(
  (conv): Sequential(
    (0): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))
    (1): Sigmoid()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
    (4): Sigmoid()
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc): Sequential(
    (0): Linear(in_features=256, out_features=120, bias=True)
    (1): Sigmoid()
    (2): Linear(in_features=120, out_features=84, bias=True)
    (3): Sigmoid()
    (4): Linear(in_features=84, out_features=10, bias=True)
  )
)
"""
```

2. Alex_Net

AlexNet first uses a large kernel to extract features, then gradually reduces the kernel size.

Differences from LeNet, quoted from the source I am learning from (https://tangshusen.me/Dive-into-DL-PyTorch/#/chapter05_CNN/5.6_alexnet):

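First, compared with the relatively small LeNet, AlexNet consists of 8 layers of transformations: 5 convolutional layers, 2 fully connected hidden layers, and 1 fully connected output layer. The design of these layers is described in detail below.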

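The convolution window in AlexNet's first layer is 11×11. Because the height and width of most ImageNet images are more than 10 times those of MNIST images, objects in ImageNet images occupy more pixels, so a larger convolution window is needed to capture them. The window shrinks to 5×5 in the second layer and is 3×3 from then on. In addition, the first, second, and fifth convolutional layers are each followed by a max-pooling layer with a 3×3 window and stride 2. AlexNet also uses tens of times more convolution channels than LeNet.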

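The last convolutional layer is followed by two fully connected layers with 4096 outputs each. These two huge fully connected layers account for nearly 1 GB of model parameters. Because of the limited GPU memory at the time, the original AlexNet used a dual data-stream design so that each GPU only had to process half of the model. Fortunately, GPU memory has grown considerably over the past few years, so such special designs are usually no longer needed.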

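Second, AlexNet replaced the sigmoid activation function with the simpler ReLU activation function. On the one hand, ReLU is cheaper to compute: for example, it has none of the exponentiation found in sigmoid. On the other hand, ReLU makes the model easier to train under different parameter initialization methods. This is because when the sigmoid output is very close to 0 or 1, the gradient in those regions is almost 0, so backpropagation can no longer update some of the model parameters, whereas the gradient of ReLU on the positive interval is always 1. Therefore, with an improper parameter initialization, sigmoid may yield nearly zero gradients on the positive interval, preventing the model from being trained effectively.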

Third, AlexNet uses dropout (see Section 3.13) to control the model complexity of the fully connected layers, whereas LeNet does not use dropout.

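Fourth, AlexNet introduces extensive image augmentation, such as flipping, cropping, and color changes, to further enlarge the dataset and mitigate overfitting. This method is covered in detail later in Section 9.1 (Image Augmentation).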
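Two of these points can be seen directly in PyTorch. The sketch below (made-up inputs) compares sigmoid and ReLU gradients obtained by autograd, then shows `nn.Dropout` behaving differently in training and evaluation mode: in training it zeroes each element with probability p and scales the survivors by 1/(1 − p), in evaluation it is the identity.

```python
import torch
from torch import nn

# 1) Gradients of sigmoid vs ReLU at a few inputs
x = torch.tensor([-10.0, -1.0, 0.5, 1.0, 10.0], requires_grad=True)
torch.sigmoid(x).sum().backward()
sigmoid_grad = x.grad.clone()
x.grad.zero_()
torch.relu(x).sum().backward()
relu_grad = x.grad.clone()
print(sigmoid_grad)  # about 4.5e-05 at x = -10 and x = 10: sigmoid is saturated there
print(relu_grad)     # tensor([0., 0., 1., 1., 1.]): exactly 1 on the positive interval

# 2) Dropout in training vs evaluation mode
torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
ones = torch.ones(2, 8)
drop.train()
y_train = drop(ones)  # every element is either 0 or 1 / (1 - 0.5) = 2
drop.eval()
y_eval = drop(ones)   # unchanged
print(y_train)
print(torch.equal(y_eval, ones))  # True
```

The 1/(1 − p) scaling keeps the expected value of each activation the same in both modes, which is why no extra rescaling is needed at test time.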

```python
import torch
from torch import nn

class AlexNet(nn.Module):
    def __init__(self):
        super(AlexNet, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 96, 11, 4),  # in_channels, out_channels, kernel_size, stride, padding
            nn.ReLU(),
            nn.MaxPool2d(3, 2),  # kernel_size, stride
            # Smaller window; padding 2 keeps height and width unchanged; more output channels
            nn.Conv2d(96, 256, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(3, 2),
            # Three consecutive conv layers with an even smaller window. Except for the last one,
            # they further increase the number of output channels.
            # No pooling after the first two, so height and width are not reduced there
            nn.Conv2d(256, 384, 3, 1, 1),
            nn.ReLU(),
            nn.Conv2d(384, 384, 3, 1, 1),
            nn.ReLU(),
            nn.Conv2d(384, 256, 3, 1, 1),
            nn.ReLU(),
            nn.MaxPool2d(3, 2)
        )
        # The fully connected layers have several times more outputs than LeNet's; dropout mitigates overfitting
        self.fc = nn.Sequential(
            nn.Linear(256*5*5, 4096),  # 256*5*5 assumes 224x224 input images
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(),
            nn.Dropout(0.5),
            # Output layer: 10 classes because Fashion-MNIST is used here, instead of the paper's 1000
            nn.Linear(4096, 10),
        )

    def forward(self, img):
        feature = self.conv(img)
        output = self.fc(feature.view(img.shape[0], -1))
        return output

"""Net Struct as follows:
AlexNet(
  (conv): Sequential(
    (0): Conv2d(1, 96, kernel_size=(11, 11), stride=(4, 4))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (4): ReLU()
    (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (7): ReLU()
    (8): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (9): ReLU()
    (10): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU()
    (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc): Sequential(
    (0): Linear(in_features=6400, out_features=4096, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5, inplace=False)
    (3): Linear(in_features=4096, out_features=4096, bias=True)
    (4): ReLU()
    (5): Dropout(p=0.5, inplace=False)
    (6): Linear(in_features=4096, out_features=10, bias=True)
  )
)"""
```
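Where `256*5*5 = 6400` comes from: a shape trace through the same convolutional layers, assuming the Fashion-MNIST images are resized to 224×224 (the input size is an assumption; a different size changes the final 5×5).

```python
import torch
from torch import nn

# Same layers as AlexNet.conv (1 input channel)
conv = nn.Sequential(
    nn.Conv2d(1, 96, 11, 4), nn.ReLU(), nn.MaxPool2d(3, 2),
    nn.Conv2d(96, 256, 5, 1, 2), nn.ReLU(), nn.MaxPool2d(3, 2),
    nn.Conv2d(256, 384, 3, 1, 1), nn.ReLU(),
    nn.Conv2d(384, 384, 3, 1, 1), nn.ReLU(),
    nn.Conv2d(384, 256, 3, 1, 1), nn.ReLU(),
    nn.MaxPool2d(3, 2),
)

X = torch.rand(1, 1, 224, 224)  # assumed input size
for layer in conv:
    X = layer(X)
    if not isinstance(layer, nn.ReLU):
        print(type(layer).__name__, tuple(X.shape))
# Conv2d (1, 96, 54, 54), MaxPool2d (1, 96, 26, 26), Conv2d (1, 256, 26, 26), MaxPool2d (1, 256, 12, 12),
# Conv2d (1, 384, 12, 12), Conv2d (1, 384, 12, 12), Conv2d (1, 256, 12, 12), MaxPool2d (1, 256, 5, 5)
print(X[0].numel())  # 6400 = 256 * 5 * 5
```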

3. VGG_Net

VGG connects a stack of VGG blocks to fully connected layers.

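A VGG block follows this pattern: several identical convolutional layers in a row, each with padding 1 and a 3×3 window, followed by a max-pooling layer with stride 2 and a 2×2 window. The convolutional layers keep the input height and width unchanged, while the pooling layer halves them. We use the `vgg_block` function to implement this basic VGG block; it lets us specify the number of convolutional layers and the numbers of input and output channels. For a given receptive field (the local region of the input image that affects an output), stacking small kernels is better than using one large kernel: it increases the network depth, so more complex patterns can be learned, at a lower cost (fewer parameters). For example, VGG uses three 3×3 kernels in place of one 7×7 kernel and two 3×3 kernels in place of one 5×5 kernel. The main goal is to increase the depth of the network while keeping the same receptive field, which improves the network's performance to some extent.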
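The "fewer parameters" claim is easy to count. A sketch assuming C input and output channels (C = 64 is an arbitrary choice) and ignoring biases: one k×k layer has k·k·C·C weights, so three 3×3 layers have 27·C² against 49·C² for one 7×7, and two 3×3 layers have 18·C² against 25·C² for one 5×5. Stacking n stride-1 3×3 layers gives a receptive field of 2n + 1, which is why 3 layers match 7×7 and 2 layers match 5×5.

```python
from torch import nn

C = 64  # assumed channel count (same for input and output)

def n_params(module):
    return sum(p.numel() for p in module.parameters())

one_7x7 = nn.Conv2d(C, C, kernel_size=7, padding=3, bias=False)
three_3x3 = nn.Sequential(*[nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False) for _ in range(3)])
one_5x5 = nn.Conv2d(C, C, kernel_size=5, padding=2, bias=False)
two_3x3 = nn.Sequential(*[nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False) for _ in range(2)])

print(n_params(one_7x7), n_params(three_3x3))  # 200704 vs 110592  (49*C*C vs 27*C*C)
print(n_params(one_5x5), n_params(two_3x3))    # 102400 vs 73728   (25*C*C vs 18*C*C)
```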

```python
import torch
from torch import nn
from d2lzh_pytorch import FlattenLayer  # helper from the Dive-into-DL-PyTorch repo

def vgg_block(num_convs, in_channels, out_channels):
    blk = []
    for i in range(num_convs):
        if i == 0:
            blk.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        else:
            blk.append(nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1))
        blk.append(nn.ReLU())
    blk.append(nn.MaxPool2d(kernel_size=2, stride=2))  # halves height and width
    return nn.Sequential(*blk)

def vgg(conv_arch, fc_features, fc_hidden_units=4096):
    net = nn.Sequential()
    # Convolutional part
    for i, (num_convs, in_channels, out_channels) in enumerate(conv_arch):
        # Each vgg_block halves height and width
        net.add_module("vgg_block_" + str(i+1), vgg_block(num_convs, in_channels, out_channels))
    # Fully connected part
    net.add_module("fc", nn.Sequential(
        FlattenLayer(),
        nn.Linear(fc_features, fc_hidden_units),
        nn.ReLU(),
        nn.Dropout(0.5),
        nn.Linear(fc_hidden_units, fc_hidden_units),
        nn.ReLU(),
        nn.Dropout(0.5),
        nn.Linear(fc_hidden_units, 10)
    ))
    return net

"""Net Struct as follows:
Sequential(
  (vgg_block_1): Sequential(
    (0): Conv2d(1, 8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (vgg_block_2): Sequential(
    (0): Conv2d(8, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (vgg_block_3): Sequential(
    (0): Conv2d(16, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU()
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (vgg_block_4): Sequential(
    (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU()
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (vgg_block_5): Sequential(
    (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU()
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc): Sequential(
    (0): FlattenLayer()
    (1): Linear(in_features=3136, out_features=512, bias=True)
    (2): ReLU()
    (3): Dropout(p=0.5, inplace=False)
    (4): Linear(in_features=512, out_features=512, bias=True)
    (5): ReLU()
    (6): Dropout(p=0.5, inplace=False)
    (7): Linear(in_features=512, out_features=10, bias=True)
  )
)"""
```
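A check of `in_features=3136` in the printed structure. The sketch below rebuilds the five blocks with the channel configuration read off that printout (the `conv_arch` tuple is reconstructed from it, not taken from the original code) and assumes 224×224 inputs: five halvings give 224 / 2^5 = 7, and 64 × 7 × 7 = 3136.

```python
import torch
from torch import nn

# (num_convs, in_channels, out_channels), read off the printed structure
conv_arch = ((1, 1, 8), (1, 8, 16), (2, 16, 32), (2, 32, 64), (2, 64, 64))
layers = []
for num_convs, in_c, out_c in conv_arch:
    for i in range(num_convs):
        # padded 3x3 convolutions keep height and width unchanged
        layers += [nn.Conv2d(in_c if i == 0 else out_c, out_c, kernel_size=3, padding=1), nn.ReLU()]
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))  # halves height and width
features = nn.Sequential(*layers)

X = torch.rand(1, 1, 224, 224)  # assumed input size
out = features(X)
print(tuple(out.shape))  # (1, 64, 7, 7)
print(out[0].numel())    # 3136
```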

4. NiN_Net

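NiN can be understood as combining a convolutional layer with "fully connected" layers into one block, and then chaining several such blocks.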

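As noted earlier, a convolutional layer with 1×1 kernels acts like a fully connected layer, so the "fully connected" part of each NiN block is built from 1×1 convolutions.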

There is one more difference:

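Besides using NiN blocks, NiN differs noticeably from AlexNet in one more design choice: NiN removes AlexNet's last 3 fully connected layers. Instead, it uses a NiN block whose number of output channels equals the number of label classes, followed by a global average pooling layer that averages all elements of each channel and uses the result directly for classification. The global average pooling layer here is an average pooling layer whose window shape equals the spatial shape of its input. The benefit of this design is that it significantly reduces the number of model parameters, which mitigates overfitting. However, it sometimes increases the training time needed to obtain an effective model.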

```python
import torch
from torch import nn
import torch.nn.functional as F
import d2lzh_pytorch as d2l  # helper package from the Dive-into-DL-PyTorch repo (provides FlattenLayer)

def nin_block(in_channels, out_channels, kernel_size, stride, padding):
    blk = nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding),
        nn.ReLU(),
        nn.Conv2d(out_channels, out_channels, kernel_size=1),  # 1x1 conv: per-pixel "fully connected" layer
        nn.ReLU(),
        nn.Conv2d(out_channels, out_channels, kernel_size=1),
        nn.ReLU()
    )
    return blk

class GlobalAvgPool2d(nn.Module):
    # Global average pooling: set the pooling window to the input's height and width
    def __init__(self):
        super(GlobalAvgPool2d, self).__init__()

    def forward(self, x):
        return F.avg_pool2d(x, kernel_size=x.size()[2:])

net = nn.Sequential(
    nin_block(1, 96, kernel_size=11, stride=4, padding=0),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nin_block(96, 256, kernel_size=5, stride=1, padding=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nin_block(256, 384, kernel_size=3, stride=1, padding=1),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Dropout(0.5),
    # There are 10 label classes
    nin_block(384, 10, kernel_size=3, stride=1, padding=1),
    GlobalAvgPool2d(),
    # Convert the 4D output into a 2D output of shape (batch_size, 10)
    d2l.FlattenLayer()
)

"""Net Struct as follows:
Sequential(
  (0): Sequential(
    (0): Conv2d(1, 96, kernel_size=(11, 11), stride=(4, 4))
    (1): ReLU()
    (2): Conv2d(96, 96, kernel_size=(1, 1), stride=(1, 1))
    (3): ReLU()
    (4): Conv2d(96, 96, kernel_size=(1, 1), stride=(1, 1))
    (5): ReLU()
  )
  (1): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  (2): Sequential(
    (0): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (1): ReLU()
    (2): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))
    (3): ReLU()
    (4): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))
    (5): ReLU()
  )
  (3): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  (4): Sequential(
    (0): Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(384, 384, kernel_size=(1, 1), stride=(1, 1))
    (3): ReLU()
    (4): Conv2d(384, 384, kernel_size=(1, 1), stride=(1, 1))
    (5): ReLU()
  )
  (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  (6): Dropout(p=0.5, inplace=False)
  (7): Sequential(
    (0): Conv2d(384, 10, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(10, 10, kernel_size=(1, 1), stride=(1, 1))
    (3): ReLU()
    (4): Conv2d(10, 10, kernel_size=(1, 1), stride=(1, 1))
    (5): ReLU()
  )
  (8): GlobalAvgPool2d()
  (9): FlattenLayer()
)"""
```
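Global average pooling simply averages each channel over its whole feature map, so with one channel per class it produces one score per class and has no parameters at all. A sketch checking that `F.avg_pool2d` with the full spatial window (as in `GlobalAvgPool2d`) matches a plain mean and PyTorch's `F.adaptive_avg_pool2d(x, 1)`; the tensor sizes are made up.

```python
import torch
import torch.nn.functional as F

X = torch.rand(2, 10, 5, 5)  # assumed: batch of 2, 10 channels (one per class), 5x5 maps

gap = F.avg_pool2d(X, kernel_size=X.size()[2:])  # window = whole feature map -> (2, 10, 1, 1)
print(gap.shape)
print(torch.allclose(gap.view(2, 10), X.mean(dim=(2, 3))))  # True: one average per channel
print(torch.allclose(gap, F.adaptive_avg_pool2d(X, 1)))     # True
```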

5. GoogLeNet

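An Inception block has 4 parallel paths. The first 3 paths use convolutional layers with 1×1, 3×3, and 5×5 windows to extract information at different spatial scales; the middle 2 paths first apply a 1×1 convolution to the input to reduce the number of input channels, which lowers model complexity. The fourth path uses a 3×3 max-pooling layer followed by a 1×1 convolutional layer to change the number of channels. All 4 paths use suitable padding so that the output has the same height and width as the input. Finally, the outputs of all paths are concatenated along the channel dimension and passed to the next layer.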

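My understanding is that this network hands the choice over to the network itself: because it runs several kernel sizes in parallel, training decides how the parameters of each path are optimized, and whichever path works better ends up contributing more.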

```python
import torch
from torch import nn
import torch.nn.functional as F

class Inception(nn.Module):
    # c1 - c4 are the numbers of output channels of the layers in each path
    def __init__(self, in_c, c1, c2, c3, c4):
        super(Inception, self).__init__()
        # Path 1: a single 1 x 1 conv layer
        self.p1_1 = nn.Conv2d(in_c, c1, kernel_size=1)
        # Path 2: 1 x 1 conv layer followed by a 3 x 3 conv layer
        self.p2_1 = nn.Conv2d(in_c, c2[0], kernel_size=1)
        self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)
        # Path 3: 1 x 1 conv layer followed by a 5 x 5 conv layer
        self.p3_1 = nn.Conv2d(in_c, c3[0], kernel_size=1)
        self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)
        # Path 4: 3 x 3 max-pooling layer followed by a 1 x 1 conv layer
        self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.p4_2 = nn.Conv2d(in_c, c4, kernel_size=1)

    def forward(self, x):
        p1 = F.relu(self.p1_1(x))
        p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))
        p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))
        p4 = F.relu(self.p4_2(self.p4_1(x)))
        return torch.cat((p1, p2, p3, p4), dim=1)  # concatenate the outputs on the channel dimension
```

```python
from torch import nn
import d2lzh_pytorch as d2l  # helper package from the Dive-into-DL-PyTorch repo (GlobalAvgPool2d, FlattenLayer)

# Uses the Inception class defined above
b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1),
                   nn.Conv2d(64, 192, kernel_size=3, padding=1),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),
                   Inception(256, 128, (128, 192), (32, 96), 64),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),
                   Inception(512, 160, (112, 224), (24, 64), 64),
                   Inception(512, 128, (128, 256), (24, 64), 64),
                   Inception(512, 112, (144, 288), (32, 64), 64),
                   Inception(528, 256, (160, 320), (32, 128), 128),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),
                   Inception(832, 384, (192, 384), (48, 128), 128),
                   d2l.GlobalAvgPool2d())
net = nn.Sequential(b1, b2, b3, b4, b5,
                    d2l.FlattenLayer(), nn.Linear(1024, 10))

"""Net Struct as follows:
Sequential(
  (0): Sequential(
    (0): Conv2d(1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  )
  (1): Sequential(
    (0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))
    (1): Conv2d(64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (2): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  )
  (2): Sequential(
    (0): Inception(
      (p1_1): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(96, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(192, 16, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1))
    )
    (1): Inception(
      (p1_1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(128, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(256, 32, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(32, 96, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))
    )
    (2): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  )
  (3): Sequential(
    (0): Inception(
      (p1_1): Conv2d(480, 192, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(480, 96, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(96, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(480, 16, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(16, 48, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(480, 64, kernel_size=(1, 1), stride=(1, 1))
    )
    (1): Inception(
      (p1_1): Conv2d(512, 160, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(512, 112, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(112, 224, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(512, 24, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(24, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))
    )
    (2): Inception(
      (p1_1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(512, 24, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(24, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))
    )
    (3): Inception(
      (p1_1): Conv2d(512, 112, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(512, 144, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(144, 288, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(512, 32, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))
    )
    (4): Inception(
      (p1_1): Conv2d(528, 256, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(528, 160, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(160, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(528, 32, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(32, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(528, 128, kernel_size=(1, 1), stride=(1, 1))
    )
    (5): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  )
  (4): Sequential(
    (0): Inception(
      (p1_1): Conv2d(832, 256, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(832, 160, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(160, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(832, 32, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(32, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1))
    )
    (1): Inception(
      (p1_1): Conv2d(832, 384, kernel_size=(1, 1), stride=(1, 1))
      (p2_1): Conv2d(832, 192, kernel_size=(1, 1), stride=(1, 1))
      (p2_2): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (p3_1): Conv2d(832, 48, kernel_size=(1, 1), stride=(1, 1))
      (p3_2): Conv2d(48, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (p4_1): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)
      (p4_2): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1))
    )
    (2): GlobalAvgPool2d()
  )
  (5): FlattenLayer()
  (6): Linear(in_features=1024, out_features=10, bias=True)
)"""
```

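Another key point here is the choice of how the channels are split among the paths; these ratios are presumably hyperparameters as well.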

Either way, extensive experiments showed that this configuration works well, so I'll just use it.
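The channel bookkeeping behind those ratios can be checked with plain arithmetic: because the four paths are concatenated, an Inception block outputs c1 + c2[1] + c3[1] + c4 channels, and that number must equal the `in_c` of the next block (max pooling does not change channels). A sketch using the configurations from b3, b4 and b5 in the GoogLeNet code:

```python
# (in_c, c1, c2, c3, c4) for each Inception block in b3, b4 and b5
configs = [
    (192, 64, (96, 128), (16, 32), 32),
    (256, 128, (128, 192), (32, 96), 64),
    (480, 192, (96, 208), (16, 48), 64),
    (512, 160, (112, 224), (24, 64), 64),
    (512, 128, (128, 256), (24, 64), 64),
    (512, 112, (144, 288), (32, 64), 64),
    (528, 256, (160, 320), (32, 128), 128),
    (832, 256, (160, 320), (32, 128), 128),
    (832, 384, (192, 384), (48, 128), 128),
]

def inception_out_channels(c1, c2, c3, c4):
    # The four paths are concatenated on the channel dimension
    return c1 + c2[1] + c3[1] + c4

outs = [inception_out_channels(*cfg[1:]) for cfg in configs]
print(outs)  # [256, 480, 512, 512, 512, 528, 832, 832, 1024]
print(all(out == nxt[0] for out, nxt in zip(outs, configs[1:])))  # True: each output feeds the next in_c
```

The final 1024 is why the classifier is `nn.Linear(1024, 10)`.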

5. Batch_Normalization

The original book explains this in detail, but the following article explains it in even more detail:

https://zhuanlan.zhihu.com/p/89422962

Excerpt:

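Let us first consider a question: why do neural networks need standardized inputs? Because a neural network is essentially learning the distribution of the data. If the training set and the test set have different distributions, the generalization of the learned network drops sharply. Likewise, when we train with mini-batches, different batches may have different distributions, so the network has to adapt to a different distribution at every iteration, which greatly slows training. That is why we standardize the input data.

Training a deep network is a complicated process: a small change in the first few layers is amplified cumulatively in the later layers. Once the distribution of some layer's input changes, that layer has to adapt to the new distribution, so if the distribution of the training data keeps changing during training, training slows down.

Once the network starts training, its parameters keep being updated. Apart from the input layer (whose data we have already normalized for every sample), the distribution of every later layer's input keeps changing, because updates to the parameters of earlier layers change the inputs of later layers. This change in the distribution of intermediate-layer data during training is called Internal Covariate Shift. Batch Normalization was introduced to address it.

The BN computation includes not only the standardization but also a final step called scale-and-shift (transform and reconstruct). Why add this step? If we only normalized the output of some layer A (subtracting the mean and dividing by the standard deviation) before sending it to the next layer B, this would damage the features that layer A has learned. For example, suppose a middle layer has learned features that lie on both sides of the S-shaped (sigmoid) activation function. Forcing them to be normalized, with the standard deviation fixed at 1, moves the data into the middle part of the sigmoid, which destroys the feature distribution this layer has learned. The scale-and-shift step is therefore added to preserve the features the network has learned.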
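A sketch of the two steps on a made-up mini-batch shaped like a conv-layer output (N, C, H, W): standardize each channel over the batch and spatial dimensions, then scale and shift with gamma and beta. The gamma/beta values are arbitrary stand-ins for learned parameters. The result is compared with `nn.BatchNorm2d` in training mode, which normalizes with the biased batch variance. The last check illustrates the excerpt's argument: if training learns gamma = sqrt(var + eps) and beta = mean, the scale-and-shift step undoes the normalization entirely, so nothing the layer learned has to be lost.

```python
import torch
from torch import nn

torch.manual_seed(0)
X = torch.randn(8, 3, 4, 4) * 5 + 2  # made-up batch, far from mean 0 / variance 1
eps = 1e-5

# Step 1: standardize each channel over (N, H, W); biased variance, as BN uses in training
mean = X.mean(dim=(0, 2, 3), keepdim=True)
var = ((X - mean) ** 2).mean(dim=(0, 2, 3), keepdim=True)
X_hat = (X - mean) / torch.sqrt(var + eps)

# Step 2: scale and shift (hypothetical gamma and beta values)
gamma = torch.full((1, 3, 1, 1), 2.0)
beta = torch.full((1, 3, 1, 1), 0.5)
Y_manual = gamma * X_hat + beta

bn = nn.BatchNorm2d(3, eps=eps)
with torch.no_grad():
    bn.weight.fill_(2.0)
    bn.bias.fill_(0.5)
    bn.train()
    Y_builtin = bn(X)
print(torch.allclose(Y_manual, Y_builtin, atol=1e-4))  # True

# gamma = sqrt(var + eps), beta = mean restores the original input
X_restored = torch.sqrt(var + eps) * X_hat + mean
print(torch.allclose(X_restored, X, atol=1e-4))  # True
```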

Code:

```python
import torch
from torch import nn
from d2lzh_pytorch import FlattenLayer  # helper from the Dive-into-DL-PyTorch repo

def batch_norm(is_training, X, gamma, beta, moving_mean, moving_var, eps, momentum):
    # Check whether we are in training mode or prediction mode
    if not is_training:
        # In prediction mode, use the moving-average mean and variance passed in
        X_hat = (X - moving_mean) / torch.sqrt(moving_var + eps)
    else:
        assert len(X.shape) in (2, 4)
        if len(X.shape) == 2:
            # Fully connected layer: mean and variance of each feature over the batch
            mean = X.mean(dim=0)
            var = ((X - mean) ** 2).mean(dim=0)
        else:
            # 2D convolutional layer: mean and variance per channel (axis=1).
            # Keep X's shape (keepdim=True) so that broadcasting works later
            mean = X.mean(dim=0, keepdim=True).mean(dim=2, keepdim=True).mean(dim=3, keepdim=True)
            var = ((X - mean) ** 2).mean(dim=0, keepdim=True).mean(dim=2, keepdim=True).mean(dim=3, keepdim=True)
        # In training mode, standardize with the current batch's mean and variance
        X_hat = (X - mean) / torch.sqrt(var + eps)
        # Update the moving averages of the mean and variance
        moving_mean = momentum * moving_mean + (1.0 - momentum) * mean
        moving_var = momentum * moving_var + (1.0 - momentum) * var
    Y = gamma * X_hat + beta  # scale and shift
    # Detach the moving statistics so they do not keep extending the autograd graph across iterations
    return Y, moving_mean.detach(), moving_var.detach()

class BatchNorm(nn.Module):
    def __init__(self, num_features, num_dims):
        super(BatchNorm, self).__init__()
        if num_dims == 2:
            shape = (1, num_features)
        else:
            shape = (1, num_features, 1, 1)
        # Scale and shift parameters that take part in gradient updates, initialized to 1 and 0 respectively
        self.gamma = nn.Parameter(torch.ones(shape))
        self.beta = nn.Parameter(torch.zeros(shape))
        # Variables that do not take part in gradient updates, initialized to 0 in main memory
        self.moving_mean = torch.zeros(shape)
        self.moving_var = torch.zeros(shape)

    def forward(self, X):
        # If X is not in main memory, copy moving_mean and moving_var to the device holding X
        if self.moving_mean.device != X.device:
            self.moving_mean = self.moving_mean.to(X.device)
            self.moving_var = self.moving_var.to(X.device)
        # Save the updated moving_mean and moving_var. A Module's training attribute is True by default
        # and is set to False by calling .eval()
        Y, self.moving_mean, self.moving_var = batch_norm(self.training,
            X, self.gamma, self.beta, self.moving_mean,
            self.moving_var, eps=1e-5, momentum=0.9)
        return Y

# LeNet with the hand-written BatchNorm
net = nn.Sequential(
    nn.Conv2d(1, 6, 5),  # in_channels, out_channels, kernel_size
    BatchNorm(6, num_dims=4),
    nn.Sigmoid(),
    nn.MaxPool2d(2, 2),  # kernel_size, stride
    nn.Conv2d(6, 16, 5),
    BatchNorm(16, num_dims=4),
    nn.Sigmoid(),
    nn.MaxPool2d(2, 2),
    FlattenLayer(),
    nn.Linear(16*4*4, 120),
    BatchNorm(120, num_dims=2),
    nn.Sigmoid(),
    nn.Linear(120, 84),
    BatchNorm(84, num_dims=2),
    nn.Sigmoid(),
    nn.Linear(84, 10)
)

# The same network with PyTorch's built-in BatchNorm layers.
# Note: PyTorch's momentum=0.1 corresponds to momentum=0.9 in the hand-written version above.
net = nn.Sequential(
    nn.Conv2d(1, 6, 5),  # in_channels, out_channels, kernel_size
    nn.BatchNorm2d(6),
    nn.Sigmoid(),
    nn.MaxPool2d(2, 2),  # kernel_size, stride
    nn.Conv2d(6, 16, 5),
    nn.BatchNorm2d(16),
    nn.Sigmoid(),
    nn.MaxPool2d(2, 2),
    FlattenLayer(),
    nn.Linear(16*4*4, 120),
    nn.BatchNorm1d(120),
    nn.Sigmoid(),
    nn.Linear(120, 84),
    nn.BatchNorm1d(84),
    nn.Sigmoid(),
    nn.Linear(84, 10)
)

"""Net Struct as follows:
Sequential(
  (0): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))
  (1): BatchNorm2d(6, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (2): Sigmoid()
  (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (4): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
  (5): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (6): Sigmoid()
  (7): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (8): FlattenLayer()
  (9): Linear(in_features=256, out_features=120, bias=True)
  (10): BatchNorm1d(120, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (11): Sigmoid()
  (12): Linear(in_features=120, out_features=84, bias=True)
  (13): BatchNorm1d(84, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (14): Sigmoid()
  (15): Linear(in_features=84, out_features=10, bias=True)
)"""
```

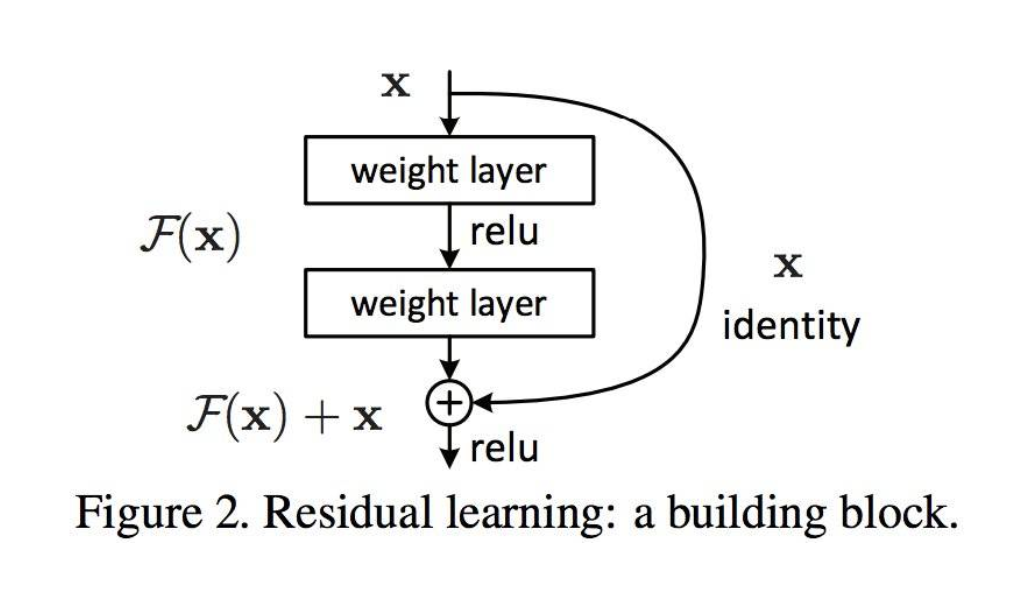

6. ResNet

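Residual networks were designed to address the problem that making a network deeper can make its training results worse instead of better.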

A good reference:

https://zhuanlan.zhihu.com/p/42706477

The principle is roughly as follows:

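The output of the convolutional layers is combined with (added to) the original input, so that the block's output still retains the features of its input. The convolutional layers then only need to learn the difference (the residual) between the desired output and the input.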

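In the implementation, the output of two convolutional layers is added to the original input and then passed through ReLU. If the convolutional layers change the number of channels (or, with a stride of 2, the height and width), the input must first go through a 1×1 convolutional layer so that the shapes match before the addition.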

```python
import torch
from torch import nn
import torch.nn.functional as F
from d2lzh_pytorch import GlobalAvgPool2d, FlattenLayer  # helpers from the Dive-into-DL-PyTorch repo

class Residual(nn.Module):  # this class is saved in the d2lzh_pytorch package for later use
    def __init__(self, in_channels, out_channels, use_1x1conv=False, stride=1):
        super(Residual, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1, stride=stride)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1)
        if use_1x1conv:
            # 1x1 conv on the shortcut so the input matches the output's channels and size
            self.conv3 = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride)
        else:
            self.conv3 = None
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, X):
        Y = F.relu(self.bn1(self.conv1(X)))
        Y = self.bn2(self.conv2(Y))
        if self.conv3 is not None:
            X = self.conv3(X)
        return F.relu(Y + X)

net = nn.Sequential(
    nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

def resnet_block(in_channels, out_channels, num_residuals, first_block=False):
    if first_block:
        assert in_channels == out_channels  # the first module keeps the input channel count
    blk = []
    for i in range(num_residuals):
        if i == 0 and not first_block:
            blk.append(Residual(in_channels, out_channels, use_1x1conv=True, stride=2))
        else:
            blk.append(Residual(out_channels, out_channels))
    return nn.Sequential(*blk)

net.add_module("resnet_block1", resnet_block(64, 64, 2, first_block=True))
net.add_module("resnet_block2", resnet_block(64, 128, 2))
net.add_module("resnet_block3", resnet_block(128, 256, 2))
net.add_module("resnet_block4", resnet_block(256, 512, 2))
net.add_module("global_avg_pool", GlobalAvgPool2d())  # GlobalAvgPool2d output: (Batch, 512, 1, 1)
net.add_module("fc", nn.Sequential(FlattenLayer(), nn.Linear(512, 10)))

"""Net Struct as follows:
Sequential(
  (0): Conv2d(1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
  (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (2): ReLU()
  (3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (resnet_block1): Sequential(
    (0): Residual(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): Residual(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (resnet_block2): Sequential(
    (0): Residual(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv3): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): Residual(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (resnet_block3): Sequential(
    (0): Residual(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv3): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): Residual(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (resnet_block4): Sequential(
    (0): Residual(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv3): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): Residual(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (global_avg_pool): GlobalAvgPool2d()
  (fc): Sequential(
    (0): FlattenLayer()
    (1): Linear(in_features=512, out_features=10, bias=True)
  )
)"""
```

7. Dense_Net

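One difference between DenseNet and the previous network (ResNet) is that it does not add the two data sources together; instead, it concatenates them along the channel dimension.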

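As a result, the number of channels grows quickly as data passes through the convolutional layers, so DenseNet also has a transition layer that compresses the data (fewer channels, smaller height and width) to keep the amount of computation down.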

```python
import torch
from torch import nn
from d2lzh_pytorch import GlobalAvgPool2d, FlattenLayer  # helpers from the Dive-into-DL-PyTorch repo

def conv_block(in_channels, out_channels):
    blk = nn.Sequential(nn.BatchNorm2d(in_channels),
                        nn.ReLU(),
                        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
    return blk

# Each conv_block in the loop adds out_channels channels
class DenseBlock(nn.Module):
    def __init__(self, num_convs, in_channels, out_channels):
        super(DenseBlock, self).__init__()
        net = []
        for i in range(num_convs):
            in_c = in_channels + i * out_channels
            net.append(conv_block(in_c, out_channels))
        self.net = nn.ModuleList(net)
        self.out_channels = in_channels + num_convs * out_channels  # number of output channels

    def forward(self, X):
        for blk in self.net:
            Y = blk(X)
            X = torch.cat((X, Y), dim=1)  # concatenate input and output on the channel dimension
        return X

# Transition block: a 1x1 convolution reduces the channels,
# and stride-2 average pooling halves the height and width
def transition_block(in_channels, out_channels):
    blk = nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.ReLU(),
        nn.Conv2d(in_channels, out_channels, kernel_size=1),
        nn.AvgPool2d(kernel_size=2, stride=2))
    return blk

net = nn.Sequential(
    nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

num_channels, growth_rate = 64, 32  # num_channels is the current number of channels
num_convs_in_dense_blocks = [4, 4, 4, 4]

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    DB = DenseBlock(num_convs, num_channels, growth_rate)
    net.add_module("DenseBlosk_%d" % i, DB)
    # Output channels of the previous dense block
    num_channels = DB.out_channels
    # Between dense blocks, add a transition layer that halves the number of channels
    if i != len(num_convs_in_dense_blocks) - 1:
        net.add_module("transition_block_%d" % i, transition_block(num_channels, num_channels // 2))
        num_channels = num_channels // 2

net.add_module("BN", nn.BatchNorm2d(num_channels))
net.add_module("relu", nn.ReLU())
net.add_module("global_avg_pool", GlobalAvgPool2d())  # GlobalAvgPool2d output: (Batch, num_channels, 1, 1)
net.add_module("fc", nn.Sequential(FlattenLayer(), nn.Linear(num_channels, 10)))

'''Net Struct as follows:
Sequential(
  (0): Conv2d(1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
  (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (2): ReLU()
  (3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (DenseBlosk_0): DenseBlock(
    (net): ModuleList(
      (0): Sequential(
        (0): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(64, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (1): Sequential(
        (0): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(96, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (2): Sequential(
        (0): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (3): Sequential(
        (0): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(160, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
    )
  )
  (transition_block_0): Sequential(
    (0): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (1): ReLU()
    (2): Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1))
    (3): AvgPool2d(kernel_size=2, stride=2, padding=0)
  )
  (DenseBlosk_1): DenseBlock(
    (net): ModuleList(
      (0): Sequential(
        (0): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(96, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (1): Sequential(
        (0): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (2): Sequential(
        (0): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(160, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (3): Sequential(
        (0): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(192, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
    )
  )
  (transition_block_1): Sequential(
    (0): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (1): ReLU()
    (2): Conv2d(224, 112, kernel_size=(1, 1), stride=(1, 1))
    (3): AvgPool2d(kernel_size=2, stride=2, padding=0)
  )
  (DenseBlosk_2): DenseBlock(
    (net): ModuleList(
      (0): Sequential(
        (0): BatchNorm2d(112, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(112, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (1): Sequential(
        (0): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(144, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (2): Sequential(
        (0): BatchNorm2d(176, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(176, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (3): Sequential(
        (0): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(208, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
    )
  )
  (transition_block_2): Sequential(
    (0): BatchNorm2d(240, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (1): ReLU()
    (2): Conv2d(240, 120, kernel_size=(1, 1), stride=(1, 1))
    (3): AvgPool2d(kernel_size=2, stride=2, padding=0)
  )
  (DenseBlosk_3): DenseBlock(
    (net): ModuleList(
      (0): Sequential(
        (0): BatchNorm2d(120, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(120, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (1): Sequential(
        (0): BatchNorm2d(152, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(152, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (2): Sequential(
        (0): BatchNorm2d(184, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(184, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (3): Sequential(
        (0): BatchNorm2d(216, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): ReLU()
        (2): Conv2d(216, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
    )
  )
  (BN): BatchNorm2d(248, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU()
  (global_avg_pool): GlobalAvgPool2d()
  (fc): Sequential(
    (0): FlattenLayer()
    (1): Linear(in_features=248, out_features=10, bias=True)
  )
)'''
```
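A sketch of the two ideas behind DenseNet's channel counts, using made-up tensors. Concatenation stacks channels (it only needs N, H, W to match), whereas ResNet's addition needs identical shapes. The bookkeeping loop then reproduces the channel numbers in the printed structure above: each dense block adds 4 × 32 channels, and each transition block halves them.

```python
import torch

# Concatenation (DenseNet) vs addition (ResNet)
X = torch.rand(1, 64, 8, 8)
Y = torch.rand(1, 32, 8, 8)       # output of one conv_block with growth rate 32
cat = torch.cat((X, Y), dim=1)
print(cat.shape)                  # torch.Size([1, 96, 8, 8]): channel counts add up
# X + Y would raise an error here: addition needs identical shapes

# Channel bookkeeping: 4 dense blocks of 4 convs, growth rate 32, transitions halve channels
num_channels, growth_rate = 64, 32
history = []
for i, num_convs in enumerate([4, 4, 4, 4]):
    num_channels += num_convs * growth_rate  # each conv_block appends growth_rate channels
    history.append(num_channels)
    if i != 3:
        num_channels //= 2                   # transition block
        history.append(num_channels)
print(history)  # [192, 96, 224, 112, 240, 120, 248]
```

The final 248 matches `BatchNorm2d(248)` and `Linear(in_features=248, ...)` in the printout.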