Using Arthas

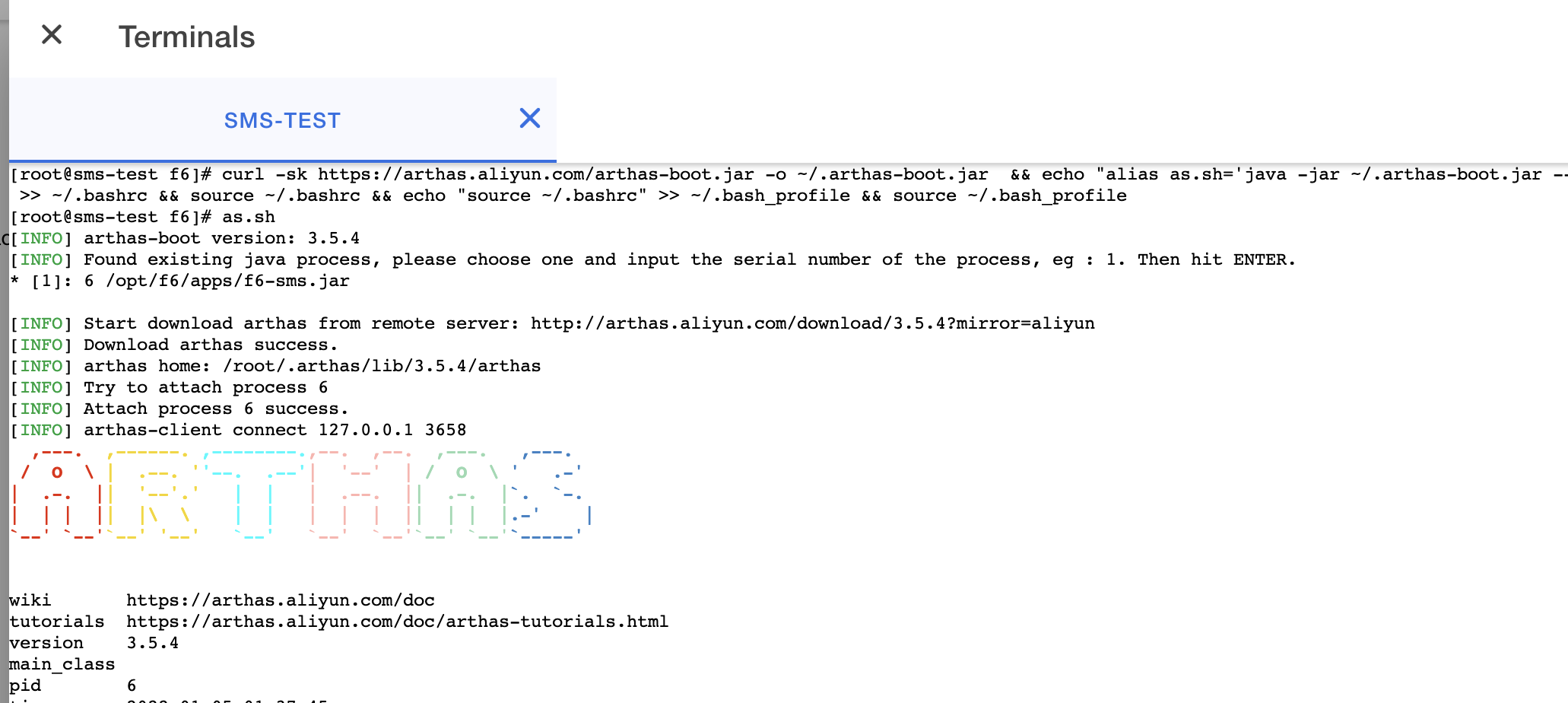

Starting Arthas

Use kubenav to open a console inside the container, then run the following commands to install and start Arthas:

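```shell
curl -sk https://arthas.aliyun.com/arthas-boot.jar -o ~/.arthas-boot.jar \
  && echo "alias as.sh='java -jar ~/.arthas-boot.jar --repo-mirror aliyun --use-http 2>&1'" >> ~/.bashrc \
  && source ~/.bashrc \
  && echo "source ~/.bashrc" >> ~/.bash_profile \
  && source ~/.bash_profile
as.sh
```

Run `as.sh` as a separate command. Bash does not expand an alias that was defined earlier on the same input line.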

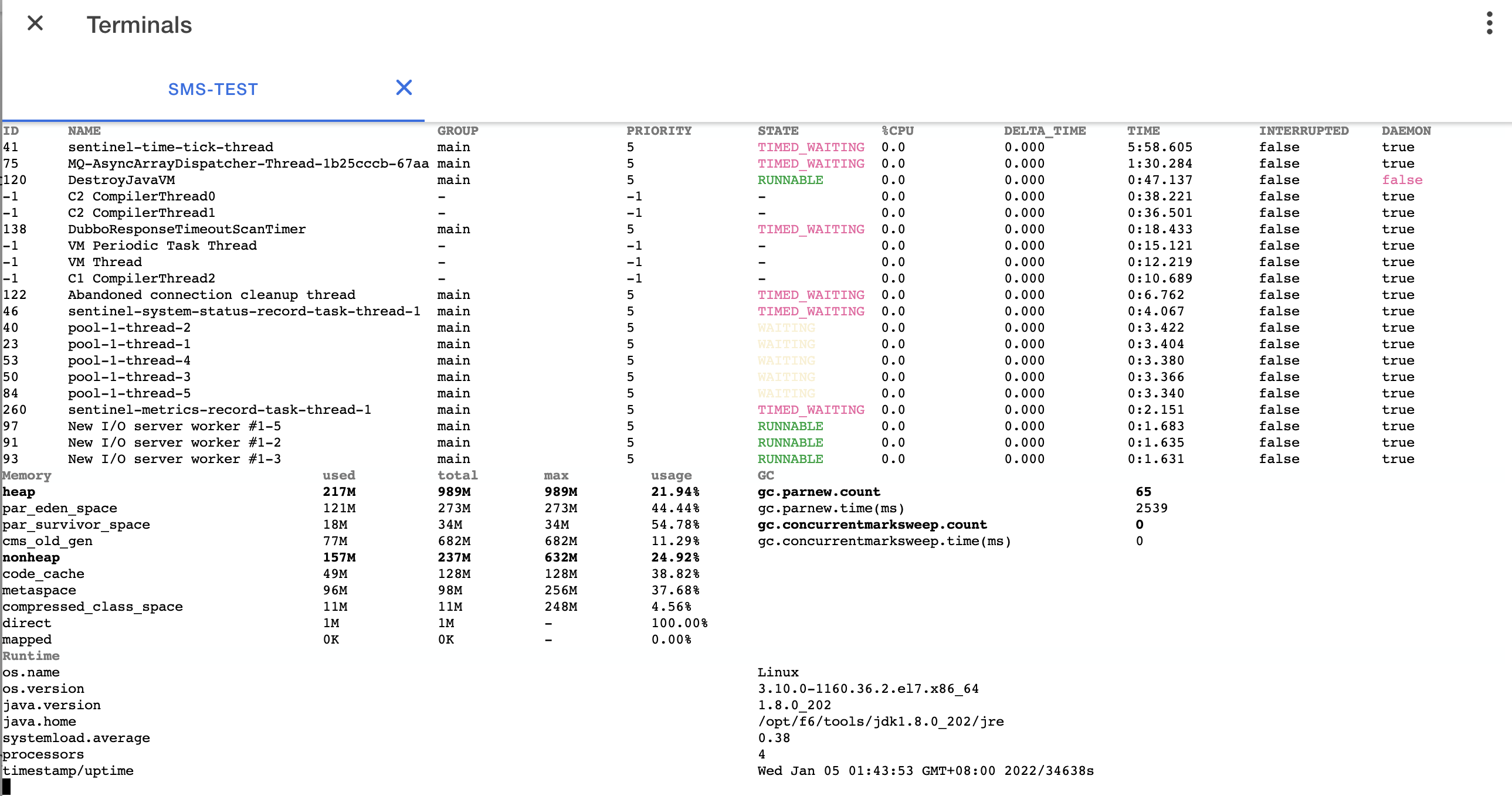

Viewing the dashboard

Type `dashboard` and press Enter. It displays threads, memory, GC and other JVM information and keeps refreshing. Press Ctrl+C to stop it.

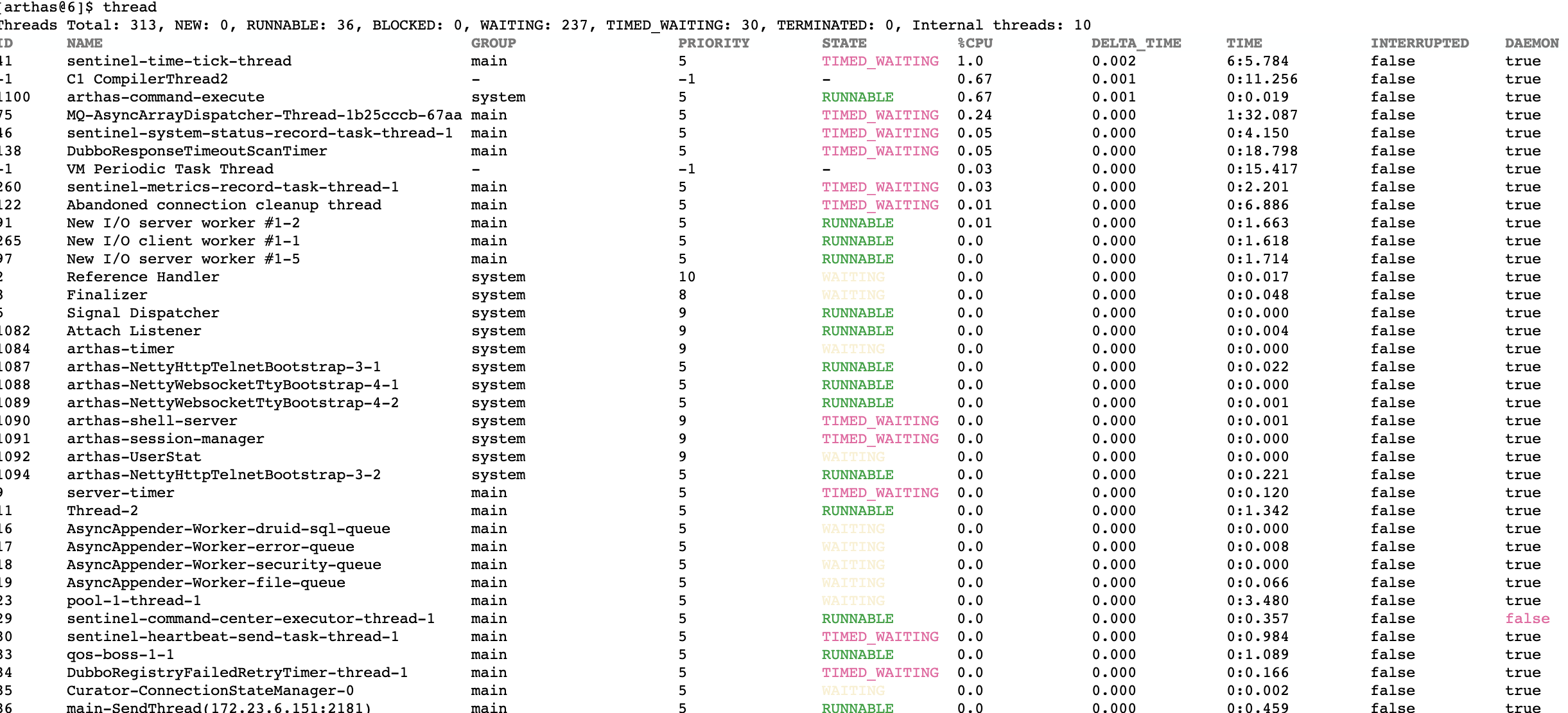

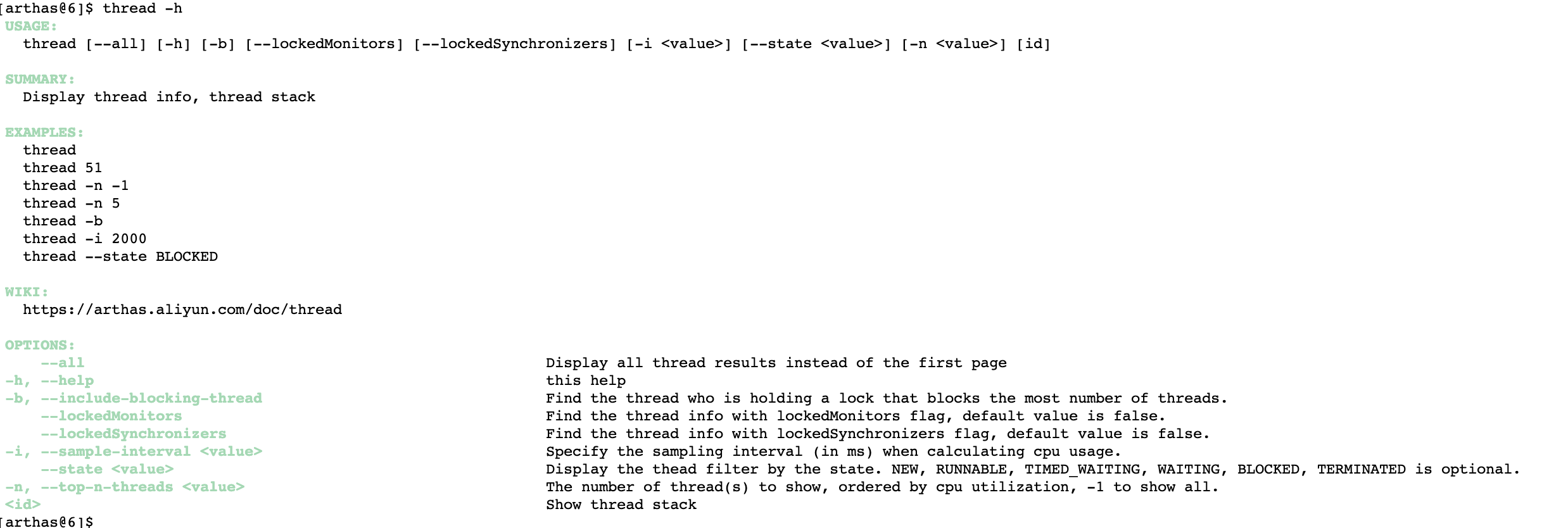
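The dashboard shows thread counts, heap usage, and GC counts and times. The JVM exposes all of these through its standard JMX platform MXBeans. The minimal sketch below reads them in-process to illustrate where such data can come from. It is not Arthas's implementation, and the class name `DashboardSnapshot` is hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import java.lang.management.ThreadMXBean;

/** Hypothetical helper: a one-shot text snapshot of thread, heap and GC statistics. */
public class DashboardSnapshot {

    public static String snapshot() {
        StringBuilder sb = new StringBuilder();

        // Thread counts for this JVM: live, daemon and peak.
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        sb.append(String.format("threads: live=%d daemon=%d peak=%d%n",
                threads.getThreadCount(), threads.getDaemonThreadCount(), threads.getPeakThreadCount()));

        // Heap usage in MiB; getMax() returns -1 when no maximum is defined.
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        sb.append(String.format("heap: used=%dM committed=%dM max=%dM%n",
                heap.getUsed() >> 20, heap.getCommitted() >> 20, heap.getMax() >> 20));

        // One line per collector: cumulative collection count and time.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(String.format("gc %s: count=%d time=%dms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(snapshot());
    }
}
```

Unlike `dashboard`, this prints a single snapshot and does not refresh.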

thread

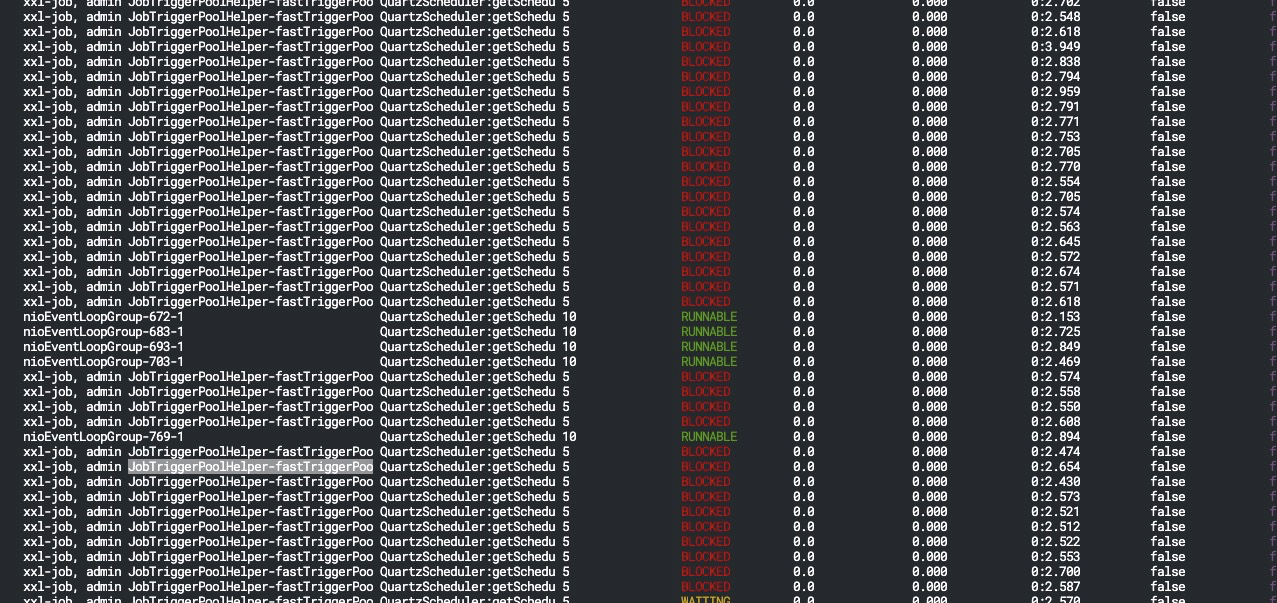

`thread` lists the threads.

`thread <thread id>` shows the stack of that thread.

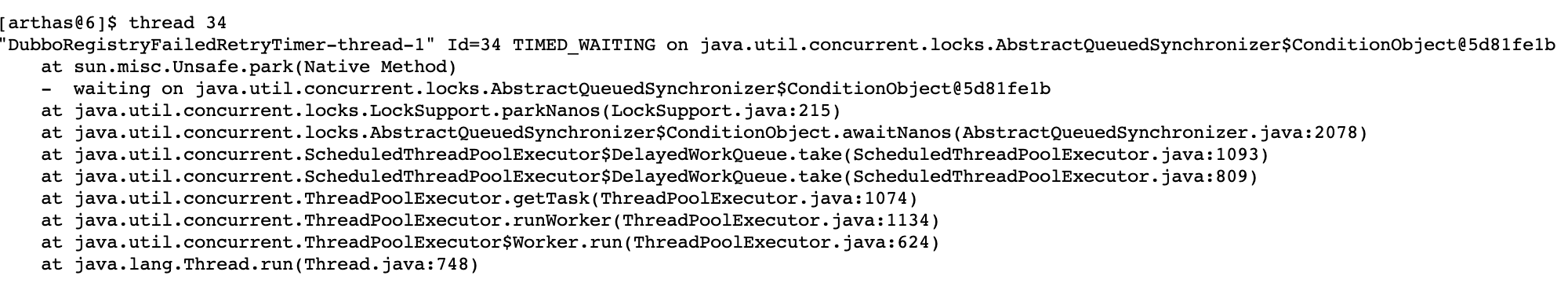
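The sketch below makes concrete what `thread` and `thread <thread id>` report. It gathers similar data in-process through `java.lang.management.ThreadMXBean`: one line per thread with id, state and name, and the full stack for a given id. It only illustrates the idea under that assumption and is not how Arthas is implemented. `ThreadInspector` and its methods are hypothetical names.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

/** Hypothetical helper: list threads and print one thread's stack via JMX. */
public class ThreadInspector {

    /** One line per live thread: "id<TAB>state<TAB>name". */
    public static String listThreads() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        StringBuilder sb = new StringBuilder();
        // getThreadInfo(long[]) yields null for threads that died after getAllThreadIds().
        for (ThreadInfo info : mx.getThreadInfo(mx.getAllThreadIds())) {
            if (info != null) {
                sb.append(info.getThreadId()).append('\t')
                  .append(info.getThreadState()).append('\t')
                  .append(info.getThreadName()).append('\n');
            }
        }
        return sb.toString();
    }

    /** Full stack of one thread, or null if no live thread has that id. */
    public static String stackOf(long threadId) {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        // maxDepth = Integer.MAX_VALUE requests the complete stack.
        ThreadInfo info = mx.getThreadInfo(threadId, Integer.MAX_VALUE);
        if (info == null) {
            return null;
        }
        StringBuilder sb = new StringBuilder();
        sb.append('"').append(info.getThreadName()).append("\" Id=")
          .append(info.getThreadId()).append(' ').append(info.getThreadState()).append('\n');
        for (StackTraceElement frame : info.getStackTrace()) {
            sb.append("    at ").append(frame).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(listThreads());
        System.out.print(stackOf(Thread.currentThread().getId()));
    }
}
```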

As an example, take a recent XXL-JOB outage in the sst environment.

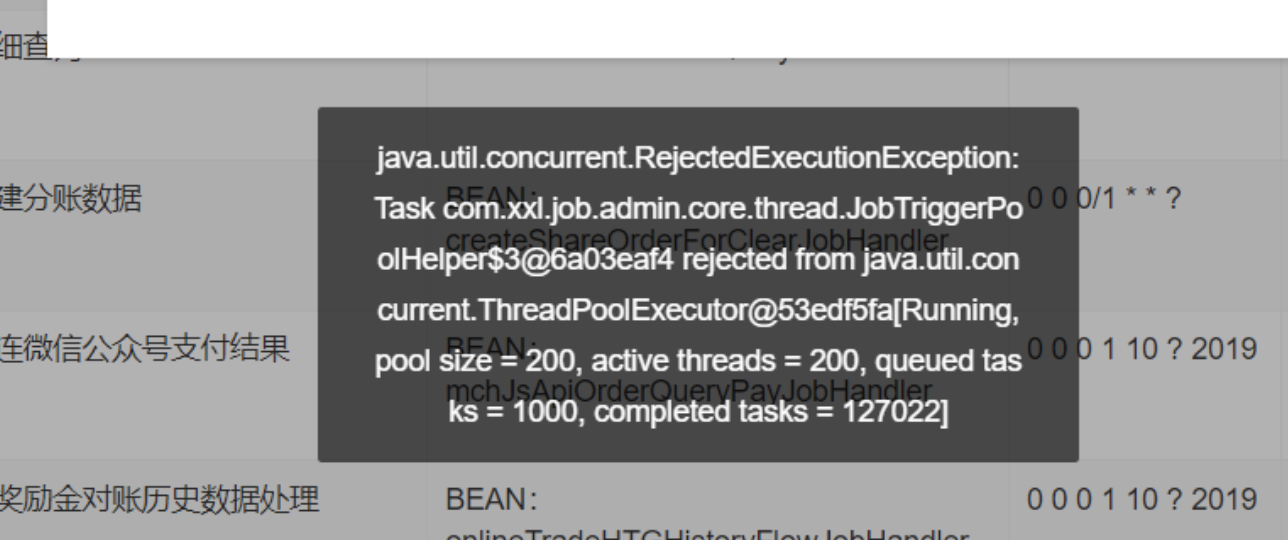

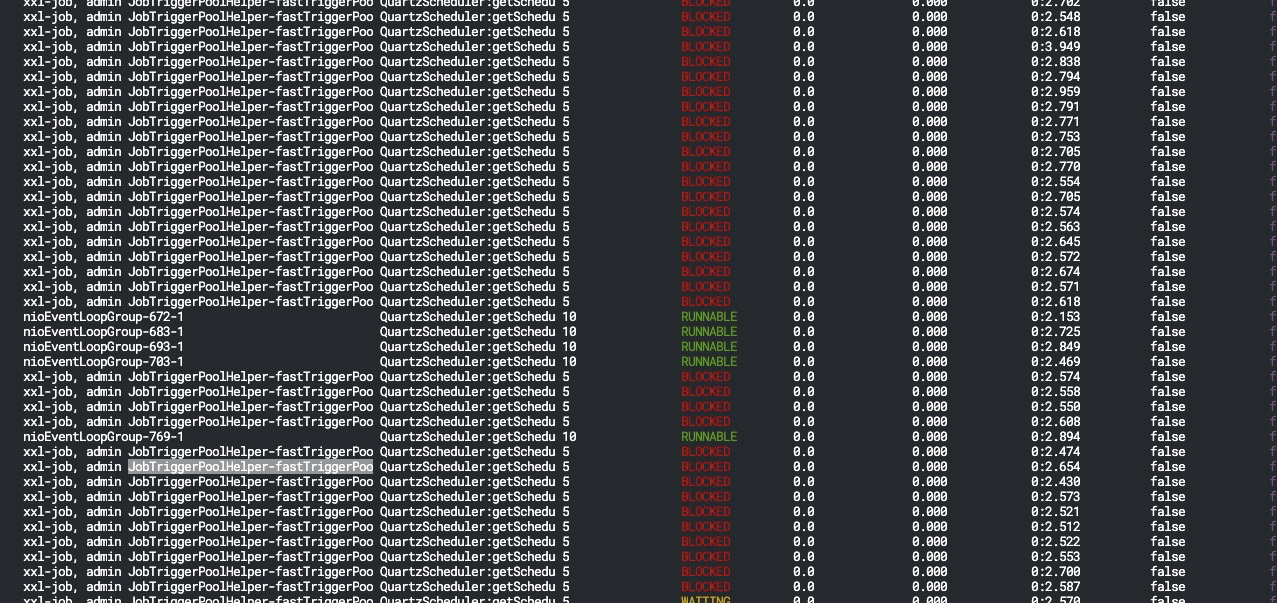

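Symptoms: no job could be scheduled. Triggers either failed immediately with an error, or were reported as triggered successfully but did not actually run until much later.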

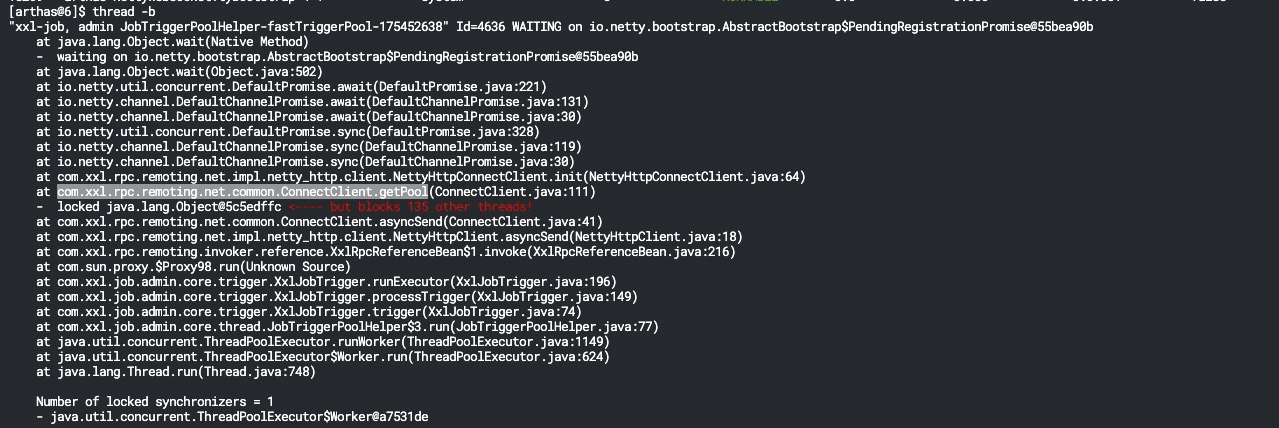

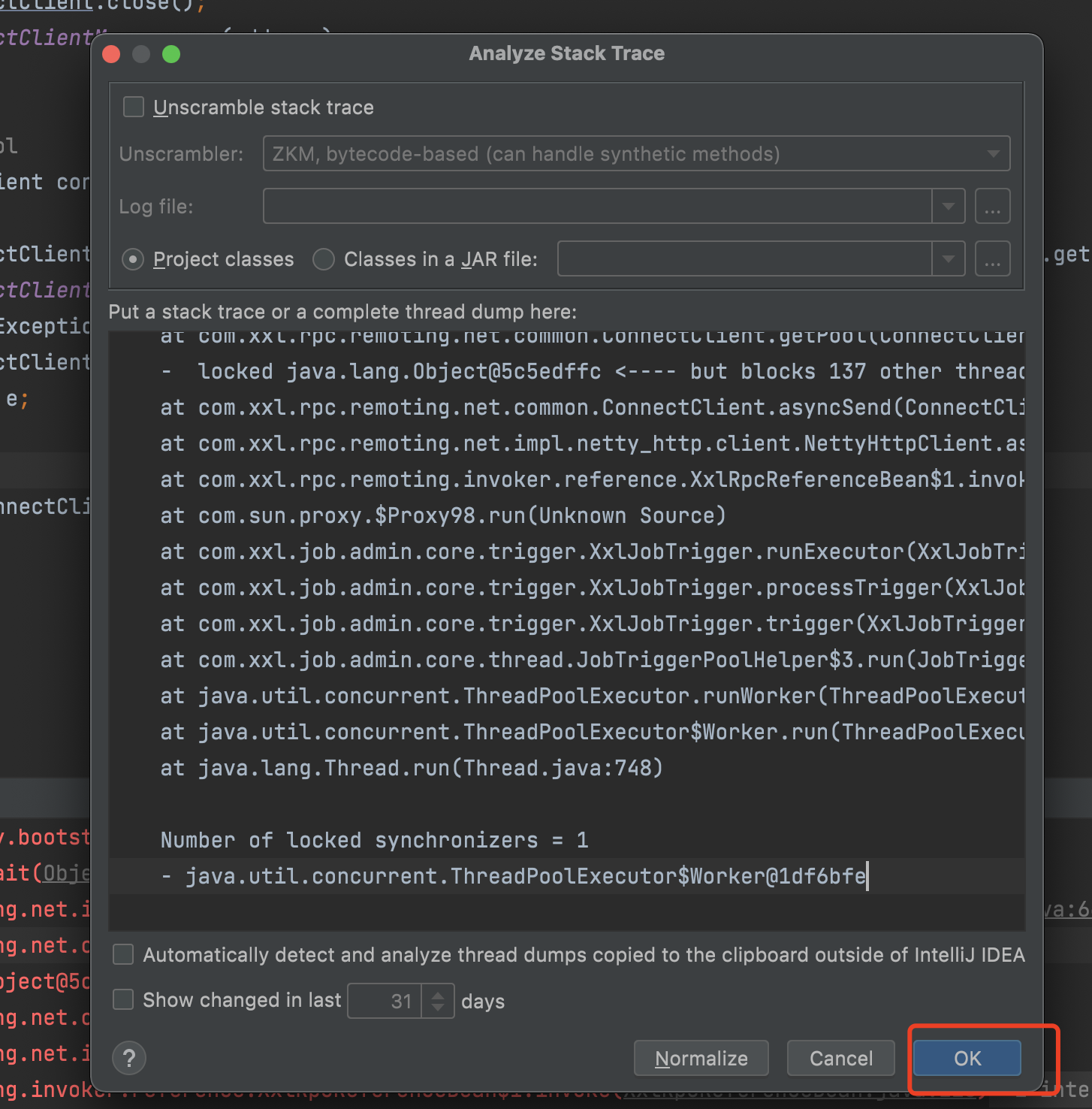

```
"xxl-job, admin JobTriggerPoolHelper-fastTriggerPool-31419390" Id=7462 WAITING on io.netty.bootstrap.AbstractBootstrap$PendingRegistrationPromise@319e5ba0
    at java.lang.Object.wait(Native Method)
    - waiting on io.netty.bootstrap.AbstractBootstrap$PendingRegistrationPromise@319e5ba0
    at java.lang.Object.wait(Object.java:502)
    at io.netty.util.concurrent.DefaultPromise.await(DefaultPromise.java:221)
    at io.netty.channel.DefaultChannelPromise.await(DefaultChannelPromise.java:131)
    at io.netty.channel.DefaultChannelPromise.await(DefaultChannelPromise.java:30)
    at io.netty.util.concurrent.DefaultPromise.sync(DefaultPromise.java:328)
    at io.netty.channel.DefaultChannelPromise.sync(DefaultChannelPromise.java:119)
    at io.netty.channel.DefaultChannelPromise.sync(DefaultChannelPromise.java:30)
    at com.xxl.rpc.remoting.net.impl.netty_http.client.NettyHttpConnectClient.init(NettyHttpConnectClient.java:64)
    at com.xxl.rpc.remoting.net.common.ConnectClient.getPool(ConnectClient.java:111)
    - locked java.lang.Object@5c5edffc <---- but blocks 137 other threads!
    at com.xxl.rpc.remoting.net.common.ConnectClient.asyncSend(ConnectClient.java:41)
    at com.xxl.rpc.remoting.net.impl.netty_http.client.NettyHttpClient.asyncSend(NettyHttpClient.java:18)
    at com.xxl.rpc.remoting.invoker.reference.XxlRpcReferenceBean$1.invoke(XxlRpcReferenceBean.java:216)
    at com.sun.proxy.$Proxy98.run(Unknown Source)
    at com.xxl.job.admin.core.trigger.XxlJobTrigger.runExecutor(XxlJobTrigger.java:196)
    at com.xxl.job.admin.core.trigger.XxlJobTrigger.processTrigger(XxlJobTrigger.java:149)
    at com.xxl.job.admin.core.trigger.XxlJobTrigger.trigger(XxlJobTrigger.java:74)
    at com.xxl.job.admin.core.thread.JobTriggerPoolHelper$3.run(JobTriggerPoolHelper.java:77)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)

    Number of locked synchronizers = 1
    - java.util.concurrent.ThreadPoolExecutor$Worker@1df6bfe
```

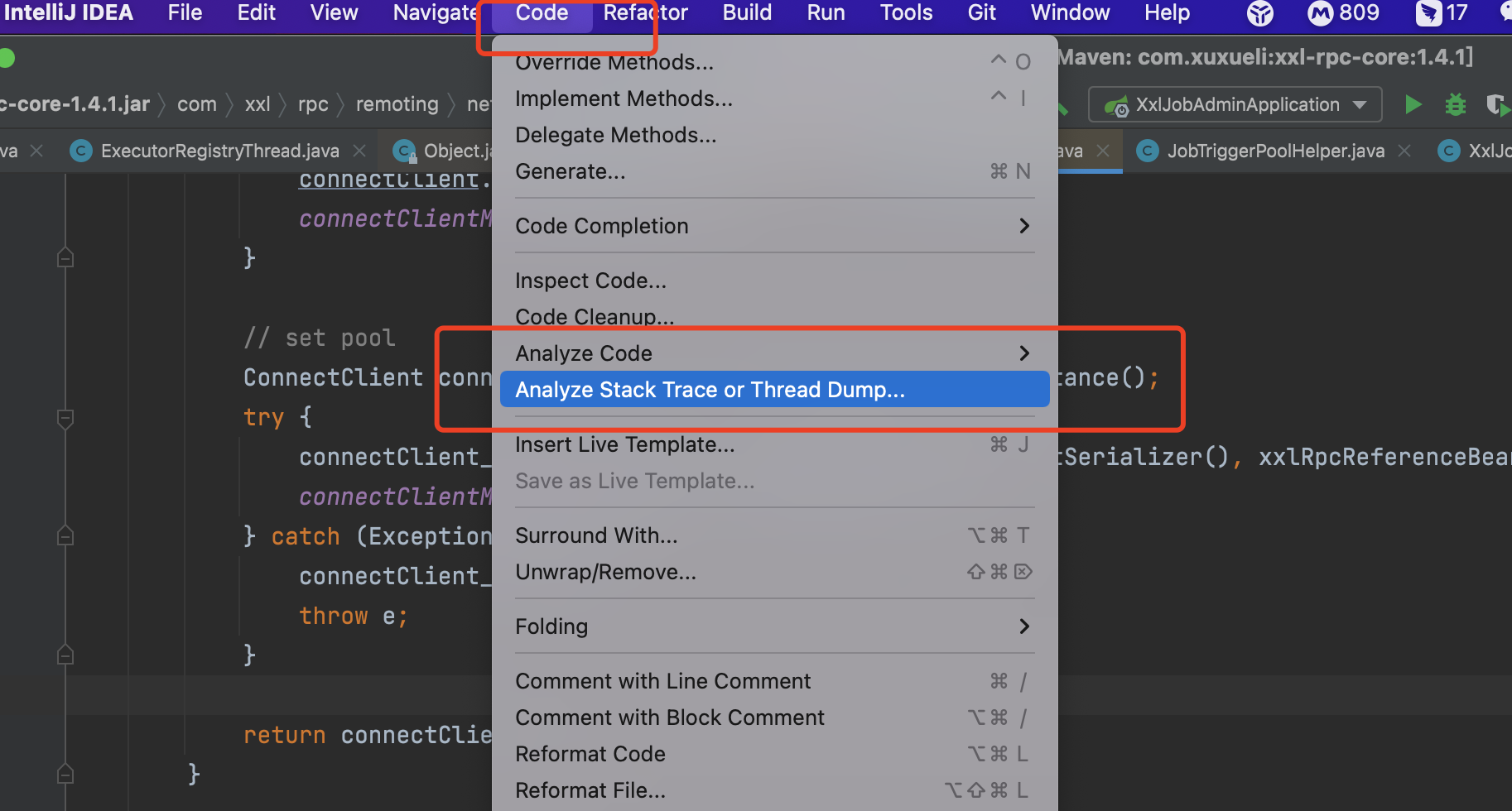

Tip: paste the stack trace into IntelliJ IDEA (its Analyze Stack Trace feature) to jump from each frame straight to the source.

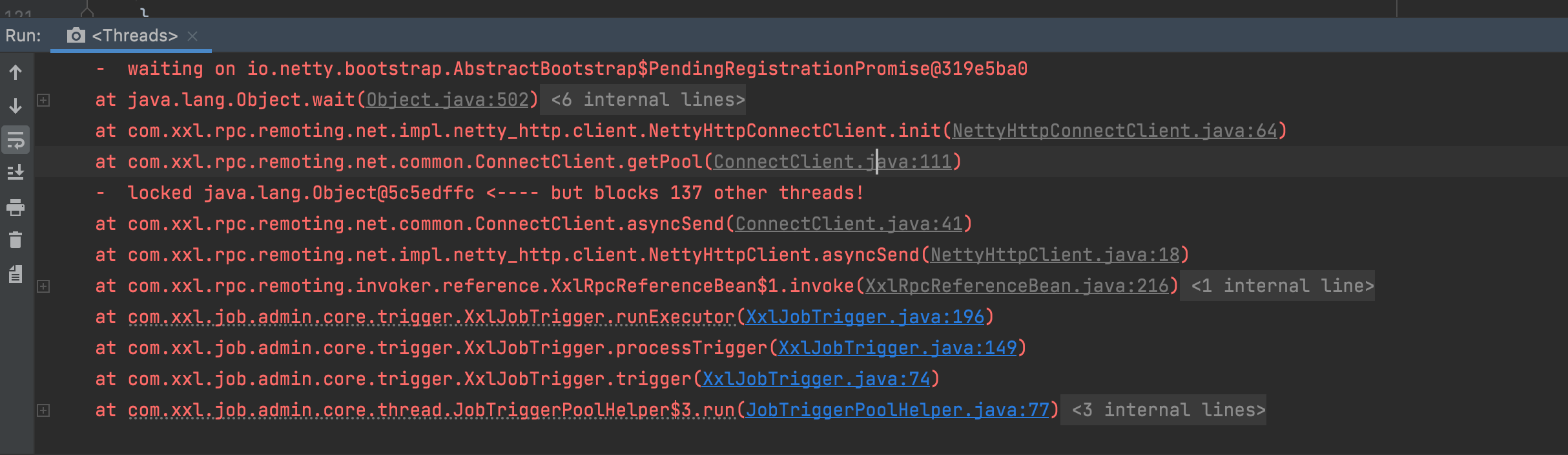

The stack trace leads to `ConnectClient.asyncSend` and `ConnectClient.getPool` in xxl-rpc:

```java
// ---------------------- client pool map ----------------------

/**
 * async send
 */
public static void asyncSend(XxlRpcRequest xxlRpcRequest, String address,
                             Class<? extends ConnectClient> connectClientImpl,
                             final XxlRpcReferenceBean xxlRpcReferenceBean) throws Exception {

    // client pool [tips03 : may save 35ms/100invoke if move it to constructor, but it is necessary. cause by ConcurrentHashMap.get]
    ConnectClient clientPool = ConnectClient.getPool(address, connectClientImpl, xxlRpcReferenceBean);

    try {
        // do invoke
        clientPool.send(xxlRpcRequest);
    } catch (Exception e) {
        throw e;
    }
}
```

```java
// Excerpt from ConnectClient.getPool(): a new client is created entirely under clientLock

// remove-create new client
synchronized (clientLock) {

    // get-valid client, avoid repeat
    connectClient = connectClientMap.get(address);
    if (connectClient != null && connectClient.isValidate()) {
        return connectClient;
    }

    // remove old
    if (connectClient != null) {
        connectClient.close();
        connectClientMap.remove(address);
    }

    // set pool
    ConnectClient connectClient_new = connectClientImpl.newInstance();
    try {
        // init() connects to the executor; if the executor is unreachable it waits for the
        // connection attempt to fail, and clientLock stays held the whole time
        connectClient_new.init(address, xxlRpcReferenceBean.getSerializer(), xxlRpcReferenceBean.getInvokerFactory());
        connectClientMap.put(address, connectClient_new);
    } catch (Exception e) {
        connectClient_new.close();
        throw e;
    }

    return connectClient_new;
}
```

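The code shows what happens when the XXL-JOB admin triggers a job. The trigger thread first gets a client from the connection pool, and a missing or invalid client is created inside `synchronized (clientLock)`. The logs contained many connection-timeout errors for triggers, so the admin clearly could not reach the executor.

The thread holding the lock sat in `init()` waiting for the connection to time out. Every other trigger thread that needed the same lock was blocked behind it. Each trigger sent to the unreachable executor therefore ties up one admin trigger thread, and the triggers queue up. Once every thread in the trigger pool is blocked, no job can be scheduled at all.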
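The failure mode can be reproduced in miniature with the hypothetical sketch below; `LockContentionDemo` and its methods are made up for illustration. The stand-ins are:

- `getPool` plays the role of `ConnectClient.getPool`.
- A `CountDownLatch` that is not released until the end plays the role of a connection to an unreachable executor.
- A fixed thread pool plays the role of the admin's trigger pool.

The sketch simplifies to a single lock object shared by all triggers for that executor.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical reproduction: a slow connect performed while holding a shared lock. */
public class LockContentionDemo {

    // Plays the role of clientLock: every trigger for the unreachable address needs it.
    private static final Object CLIENT_LOCK = new Object();

    /** What one run observed: workers stuck on the lock, and triggers still waiting in the queue. */
    public static final class Result {
        public final int blockedThreads;
        public final int queuedTriggers;

        Result(int blockedThreads, int queuedTriggers) {
            this.blockedThreads = blockedThreads;
            this.queuedTriggers = queuedTriggers;
        }
    }

    /** Stand-in for getPool(): the slow "connect" runs while the lock is held. */
    static void getPool(CountDownLatch connectFinished) throws InterruptedException {
        synchronized (CLIENT_LOCK) {
            // Simulates init() waiting on a connection to an executor that never answers.
            connectFinished.await();
        }
    }

    /** Submits `triggers` tasks to a pool of `poolSize` threads while the connect hangs. */
    public static Result run(int poolSize, int triggers) {
        CountDownLatch connectFinished = new CountDownLatch(1);
        List<Thread> workers = new CopyOnWriteArrayList<>();
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(poolSize, task -> {
            Thread t = new Thread(task);
            t.setDaemon(true); // never keep the JVM alive because of the demo
            workers.add(t);
            return t;
        });
        for (int i = 0; i < triggers; i++) {
            pool.submit(() -> {
                getPool(connectFinished);
                return null;
            });
        }
        // Poll (for at most 5 s) until every worker except the lock holder is BLOCKED.
        int blocked = 0;
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
        try {
            while (System.nanoTime() < deadline) {
                blocked = 0;
                for (Thread t : workers) {
                    if (t.getState() == Thread.State.BLOCKED) {
                        blocked++;
                    }
                }
                if (blocked == poolSize - 1) {
                    break;
                }
                Thread.sleep(10);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt; report what was observed
        }
        int queued = pool.getQueue().size();
        // Let the "connect" complete so the pool can drain, then shut it down.
        connectFinished.countDown();
        pool.shutdown();
        return new Result(blocked, queued);
    }

    public static void main(String[] args) {
        Result r = run(4, 10);
        System.out.println("blocked=" + r.blockedThreads + " queued=" + r.queuedTriggers);
    }
}
```

Running `main` should print `blocked=3 queued=6`. One worker holds the lock while it waits, the other three are BLOCKED behind it, and the six remaining triggers never leave the queue. This mirrors "blocks 137 other threads" in the stack above on a small scale.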

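Further analysis found the root cause: someone had registered their local machine with the sst environment. When an application initializes its XXL-JOB registration, it reads the local IP only once. After that it sends a heartbeat every 30 seconds. If the machine's IP later changes, the heartbeat still reports the IP captured at startup. Because the heartbeats keep arriving, the admin keeps that unreachable address registered and keeps sending triggers to it, which leads to the problem described above.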
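The stale-address behaviour can be modelled in a few lines. This is a hypothetical sketch, not XXL-JOB's registry code. `StaleRegistrationDemo` resolves its address once in the constructor, as described above. Every `heartbeat()` call (every 30 seconds in the real scenario) then re-sends that same address, whatever the machine's IP is now. The IPs and port 9999 are made-up example values.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

/** Hypothetical model: address captured once at init, reused by every heartbeat. */
public class StaleRegistrationDemo {

    // Resolved once at startup and never refreshed.
    private final String registeredAddress;
    // Addresses reported so far; stands in for the heartbeat requests sent to the admin.
    private final List<String> sentHeartbeats = new ArrayList<>();

    public StaleRegistrationDemo(Supplier<String> localIp, int port) {
        this.registeredAddress = localIp.get() + ":" + port;
    }

    /** One heartbeat: always reports the startup address, even if the IP has changed since. */
    public synchronized void heartbeat() {
        sentHeartbeats.add(registeredAddress);
    }

    public synchronized List<String> sentHeartbeats() {
        return Collections.unmodifiableList(new ArrayList<>(sentHeartbeats));
    }

    public static void main(String[] args) {
        String[] currentIp = {"10.0.0.5"};                  // hypothetical IP at startup
        StaleRegistrationDemo executor = new StaleRegistrationDemo(() -> currentIp[0], 9999);
        executor.heartbeat();
        currentIp[0] = "192.168.1.20";                      // the machine's IP changes
        executor.heartbeat();
        System.out.println(executor.sentHeartbeats());      // [10.0.0.5:9999, 10.0.0.5:9999]
    }
}
```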

Improvement: we plan to add an IP whitelist in nginx so that only IPs from the sst environment can register with XXL-JOB.
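The proposed fix is an nginx IP whitelist. The check such a rule performs is IPv4 CIDR matching. The sketch below shows that matching logic in Java rather than as nginx configuration, purely to illustrate it. The class name `CidrAllowlist` and the example range `10.20.0.0/16` are hypothetical; the real sst subnets are not given here.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical IPv4 allowlist: an address passes if it falls inside any configured CIDR range. */
public class CidrAllowlist {

    // Each entry is {network, mask} for one IPv4 CIDR range.
    private final List<int[]> ranges = new ArrayList<>();

    public CidrAllowlist(String... cidrs) {
        for (String cidr : cidrs) {
            String[] parts = cidr.split("/");
            if (parts.length != 2) {
                throw new IllegalArgumentException("expected a.b.c.d/prefix: " + cidr);
            }
            int prefix = Integer.parseInt(parts[1]);
            if (prefix < 0 || prefix > 32) {
                throw new IllegalArgumentException("bad prefix length: " + cidr);
            }
            // Java reduces int shift distances mod 32, so a /0 prefix needs a special case.
            int mask = prefix == 0 ? 0 : -1 << (32 - prefix);
            ranges.add(new int[] {toInt(parts[0]) & mask, mask});
        }
    }

    /** True if the IPv4 address lies inside any configured range. */
    public boolean isAllowed(String ip) {
        int address = toInt(ip);
        for (int[] range : ranges) {
            if ((address & range[1]) == range[0]) {
                return true;
            }
        }
        return false;
    }

    // Parses dotted-quad IPv4 text into a 32-bit int, without any DNS lookup.
    private static int toInt(String ipv4) {
        String[] octets = ipv4.trim().split("\\.");
        if (octets.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + ipv4);
        }
        int value = 0;
        for (String octet : octets) {
            int b = Integer.parseInt(octet);
            if (b < 0 || b > 255) {
                throw new IllegalArgumentException("not an IPv4 address: " + ipv4);
            }
            value = (value << 8) | b;
        }
        return value;
    }
}
```

For example, `new CidrAllowlist("10.20.0.0/16").isAllowed("10.20.3.4")` is `true`, while a developer laptop at `192.168.1.20` would be rejected.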

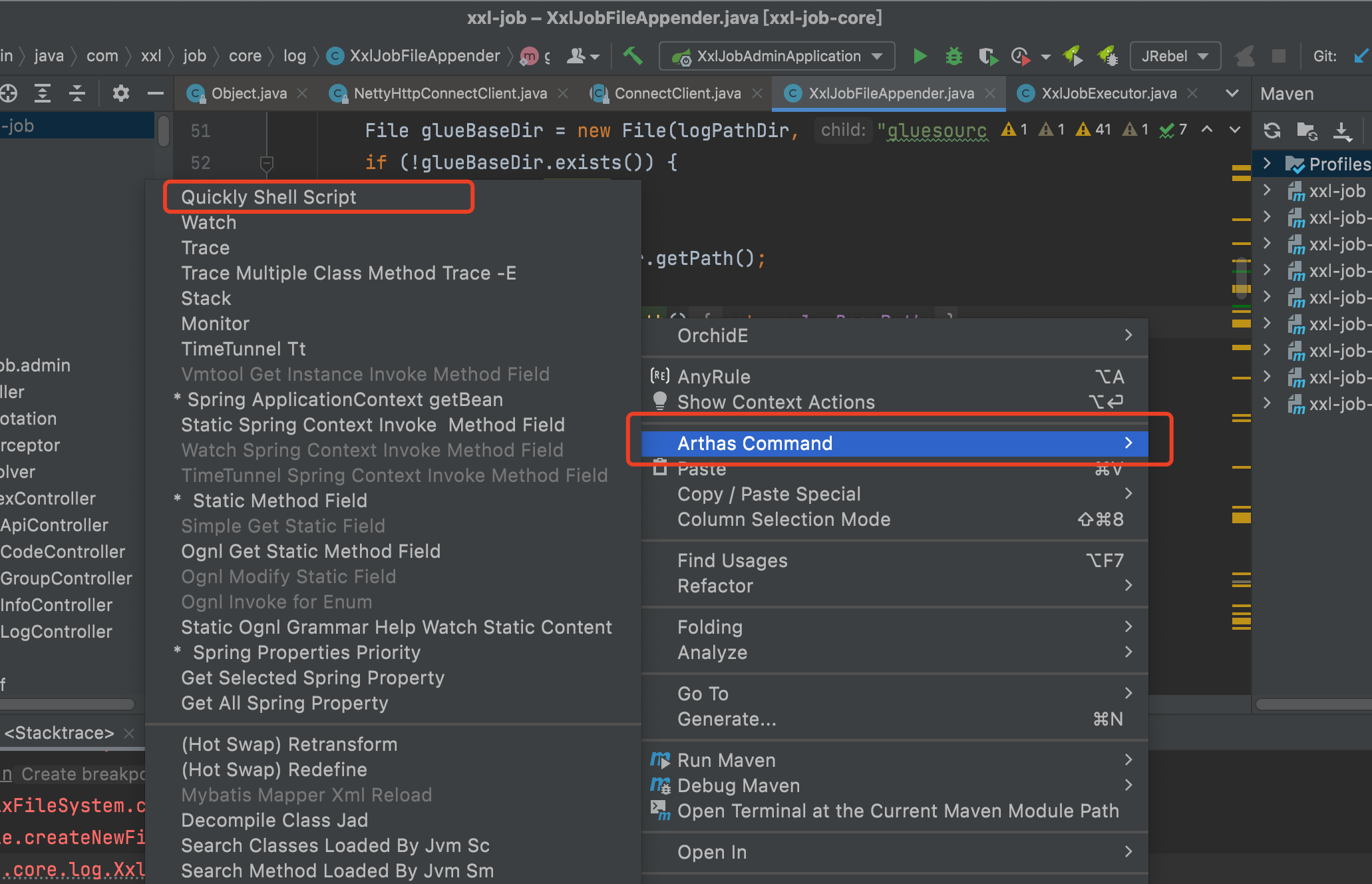

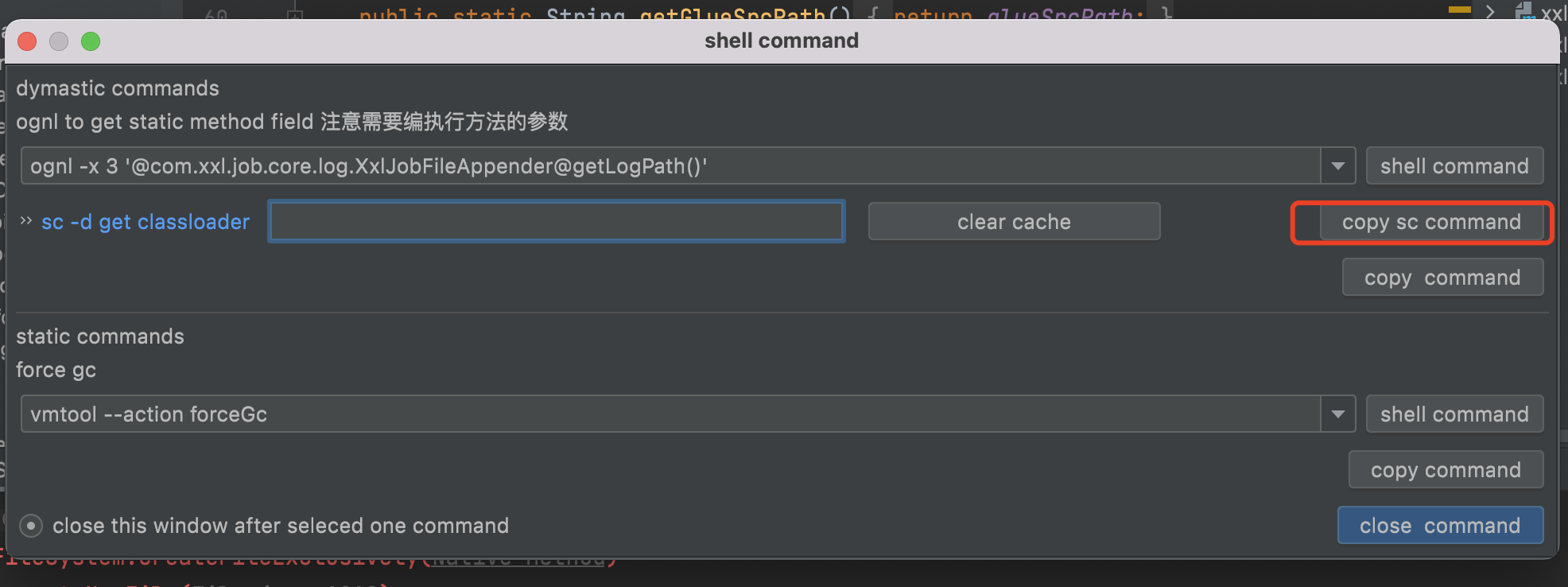

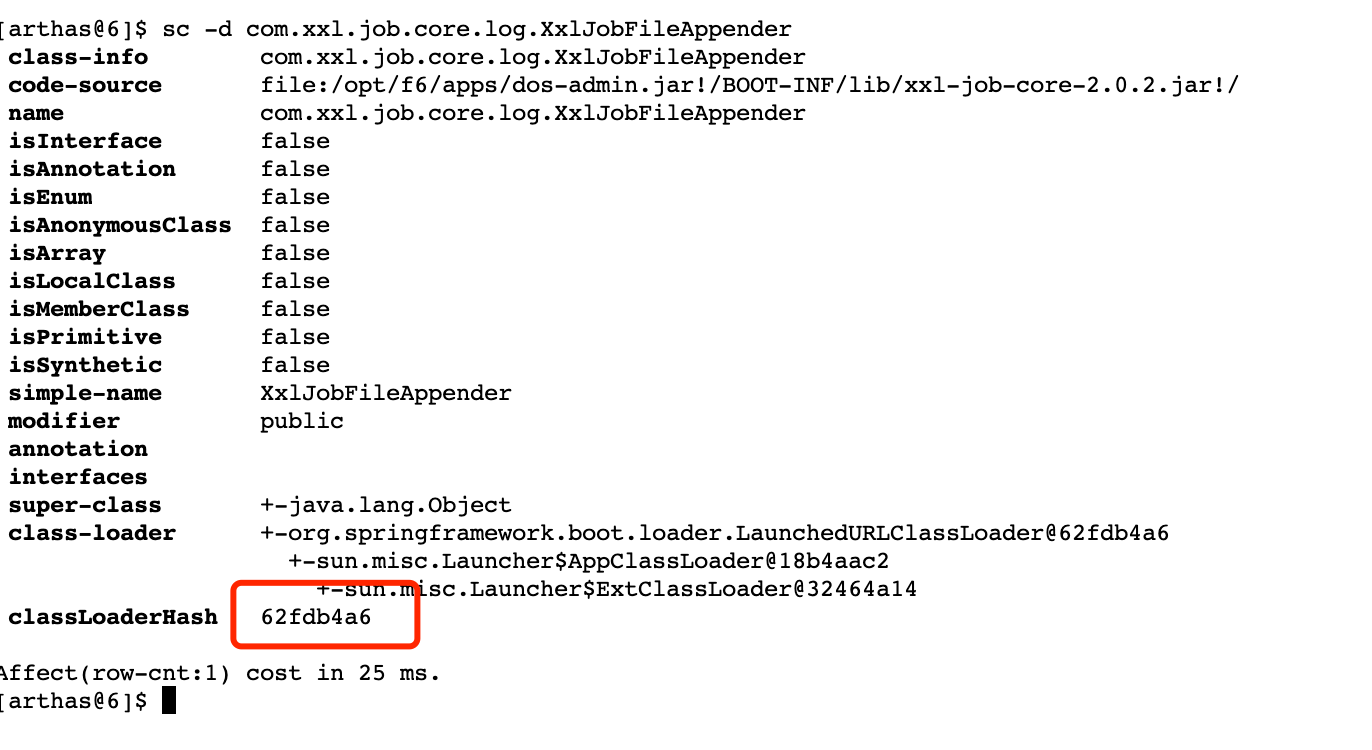

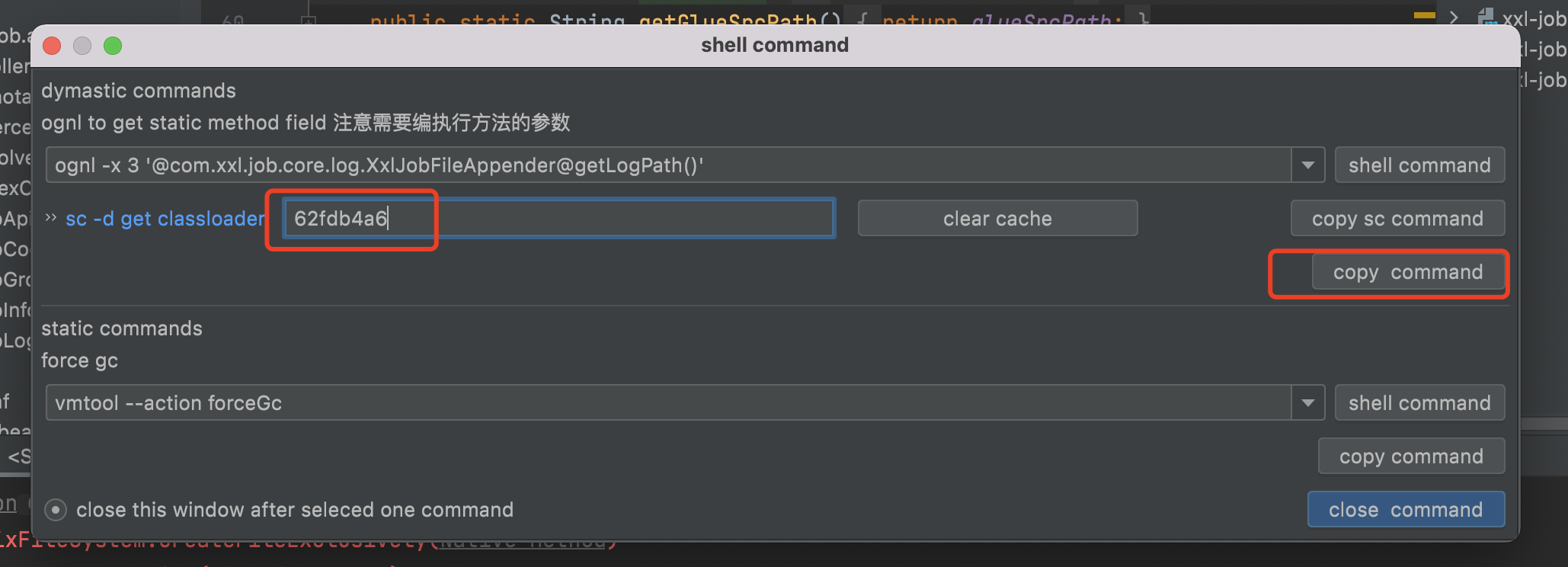

arthas-idea-plugin

search class

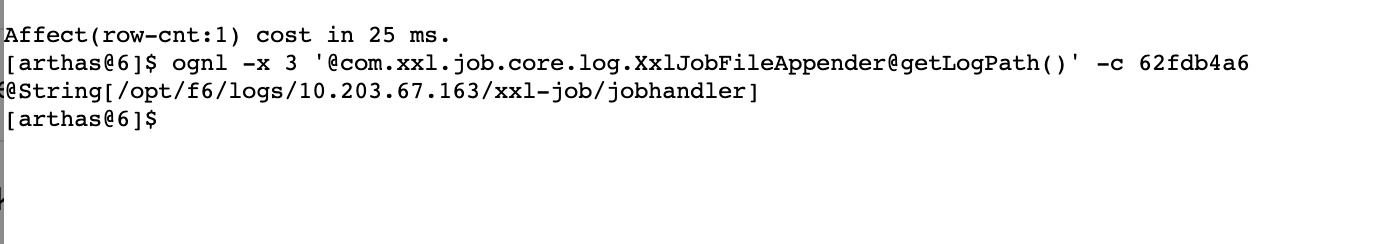

Invoking static methods
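Arthas can call a static method in the target JVM through its `ognl` command, for example `ognl '@java.lang.System@getProperty("java.version")'`. For comparison, the sketch below does the plain-reflection equivalent inside a single JVM. This is a different mechanism (Java reflection, not OGNL, and not what the plugin does). `StaticInvoker.invokeStatic` is a hypothetical helper.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

/** Hypothetical helper: call a public static method by class name, method name and parameter types. */
public class StaticInvoker {

    public static Object invokeStatic(String className, String methodName,
                                      Class<?>[] parameterTypes, Object... args) {
        try {
            Class<?> clazz = Class.forName(className);
            Method method = clazz.getMethod(methodName, parameterTypes);
            if (!Modifier.isStatic(method.getModifiers())) {
                throw new IllegalArgumentException(methodName + " is not static");
            }
            // A null receiver is what makes this a static call.
            return method.invoke(null, args);
        } catch (InvocationTargetException e) {
            // The invoked method itself threw; surface its exception as the cause.
            throw new IllegalStateException("invocation failed", e.getCause());
        } catch (ReflectiveOperationException e) {
            // Class or method not found, or not accessible.
            throw new IllegalArgumentException("cannot invoke " + className + "." + methodName, e);
        }
    }

    public static void main(String[] args) {
        Object max = invokeStatic("java.lang.Math", "max", new Class<?>[] {int.class, int.class}, 3, 7);
        System.out.println(max); // 7
        System.out.println(invokeStatic("java.lang.System", "getProperty", new Class<?>[] {String.class}, "java.version"));
    }
}
```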

For more details, see the official documentation on Yuque:

https://www.yuque.com/arthas-idea-plugin