Problem description:

A Node has gone NotReady.

Cluster information:

kubernetes:v1.17.2

docker:19.03.11

kernel:4.4.230-1.el7.elrepo.x86_64

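Diagnosis:

Seeing NotReady, my first reaction was that something was wrong with the network, so I checked the flannel logs and found many lines like the following: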

E1204 17:25:56.167738 1 iptables.go:115] Failed to ensure iptables rules: Error checking rule existence: failed to check rule existence: fork/exec /sbin/iptables: resource temporarily unavailable

I then checked the kube-proxy logs and found abnormal entries there as well:

E1208 02:56:13.175427 1 proxier.go:1835] Failed to execute iptables-save, syncing all rules: fork/exec /usr/sbin/iptables-save: resource temporarily unavailable

E1208 02:56:13.175634 1 proxier.go:1835] Failed to execute iptables-save, syncing all rules: fork/exec /usr/sbin/iptables-save: resource temporarily unavailable

E1208 02:56:13.175767 1 proxier.go:1776] Failed to ensure that nat chain KUBE-SERVICES exists: error creating chain "KUBE-SERVICES": fork/exec /usr/sbin/iptables: resource temporarily unavailable:

E1208 02:56:13.175964 1 ipset.go:178] Failed to make sure ip set: &{{KUBE-EXTERNAL-IP hash:ip,port inet 1024 65536 0-65535 Kubernetes service external ip + port for masquerade and filter purpose} map[] 0xc0003040a0} exist, error: error creating ipset KUBE-EXTERNAL-IP, error: fork/exec /sbin/ipset: resource temporarily unavailable

I1225 01:53:48.321632 1 trace.go:116] Trace[497065658]: "iptables save" (started: 2020-12-25 01:53:39.132708528 +0000 UTC m=+9419409.059395424) (total time: 8.452921929s):

Trace[497065658]: [8.452921929s] [8.452921929s] END

I1225 01:55:41.327722 1 trace.go:116] Trace[1926803068]: "iptables save" (started: 2020-12-25 01:53:48.84925046 +0000 UTC m=+9419418.775937233) (total time: 1m52.478315467s):

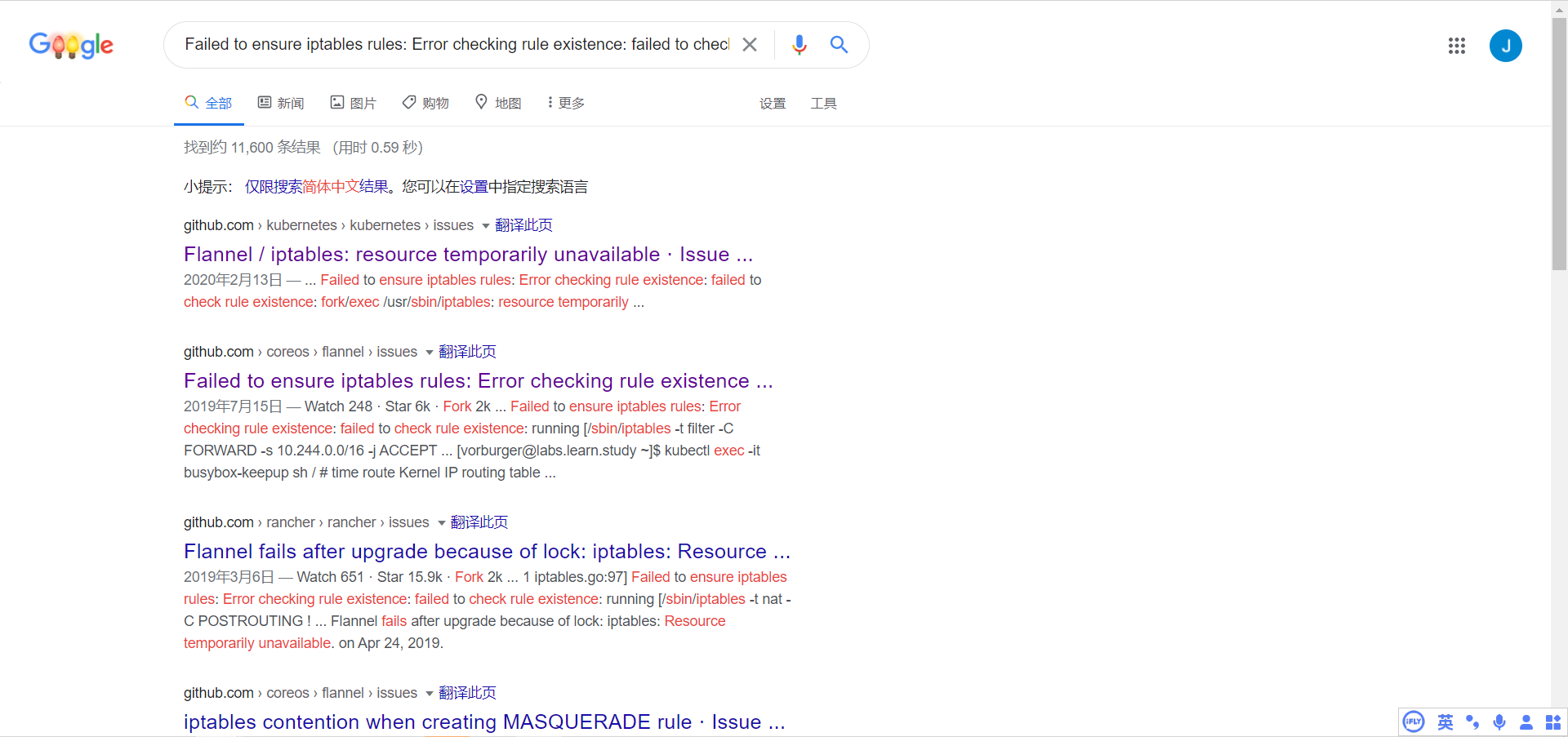
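With this much log noise, it can help to tally which binaries failed to start. Below is a minimal sketch, not something I ran during the incident: the function name `summarize_fork_failures` is made up for illustration, and it only assumes the log text arrives on stdin in the same format as the lines above.

```shell
#!/bin/sh
# Summarise "fork/exec <binary>: resource temporarily unavailable" errors
# from log text on stdin. Prints one "<count> <binary>" line per binary.
summarize_fork_failures() {
  # Keep only the failing fork/exec fragment of each line.
  grep -o 'fork/exec [^:]*: resource temporarily unavailable' \
    | awk '{print $2}' \
    | sed 's/:$//' \
    | sort | uniq -c \
    | awk '{print $1, $2}'   # normalise the padding that uniq -c adds
}
```

To use it, pipe the output of `kubectl logs` for the flannel or kube-proxy pod on the affected node into the function; the pod name and namespace depend on how your cluster was deployed.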

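At first glance this looks like an iptables problem. In the humanitarian spirit of "Google it when there's trouble, Baidu it when there isn't", I searched around, and quite a lot turned up.

I then found that people had filed issues about this upstream: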

- https://github.com/kubernetes/kubernetes/issues/88148

- https://github.com/coreos/flannel/issues/950

- https://github.com/kubernetes/kubernetes/issues/57428

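Roughly, they say:

- During an upgrade, some service touched or modified iptables on every host but did not fully release it.

- On hosts that have not been rebooted, Flannel can no longer lock iptables, because something else is still holding it.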
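Separately from the lock explanation in those issues, the error text itself, "resource temporarily unavailable", is the EAGAIN that fork returns when the system cannot create another process or thread. So before rebooting, it may be worth recording how close the node is to the kernel-wide task limits. This is only a sketch under that assumption, not part of my original investigation: `report_task_pressure` is a hypothetical name, and it does not cover per-user limits (`ulimit -u`) or cgroup pids limits, which can also cause the same error.

```shell
#!/bin/sh
# Compare the number of live tasks (threads) with kernel-wide limits.
# Sticks to shell builtins (read, echo, arithmetic) so it should not need
# to start a child process on a node where fork is already failing.
# Optional argument: alternative proc root (defaults to /proc).
report_task_pressure() {
  proc="${1:-/proc}"
  # Fourth field of loadavg is "<runnable>/<total scheduling entities>".
  read -r load1 load5 load15 tasks lastpid < "$proc/loadavg"
  total="${tasks#*/}"
  read -r pid_max < "$proc/sys/kernel/pid_max"
  read -r threads_max < "$proc/sys/kernel/threads-max"
  echo "tasks=$total pid_max=$pid_max ($(( $total * 100 / $pid_max ))%)"
  echo "tasks=$total threads_max=$threads_max ($(( $total * 100 / $threads_max ))%)"
}
```

Run `report_task_pressure` on the affected node itself. A percentage close to 100 would suggest the node is running out of task slots; a low one makes that explanation less likely.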

The fix is to reboot the Node.

Surprised? Didn't see that coming?

Let's try it before judging.

First, put the node into maintenance mode:

kubectl cordon k8s-node03-137

kubectl drain k8s-node03-137
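One caveat: flannel and kube-proxy usually run as DaemonSets, and `kubectl drain` refuses to proceed past DaemonSet-managed pods unless `--ignore-daemonsets` is given. A hedged sketch of the same two steps as a reusable function follows; `enter_maintenance` and the `KUBECTL` override variable are names I made up for illustration, and I am assuming no other drain flags are needed on your cluster.

```shell
#!/bin/sh
# Override to preview the commands, e.g. KUBECTL="echo kubectl".
KUBECTL="${KUBECTL:-kubectl}"

# Mark a node unschedulable, then evict its pods.
# Usage: enter_maintenance <node-name>
enter_maintenance() {
  node="$1"
  # --ignore-daemonsets: DaemonSet pods cannot be evicted; without this
  # flag drain stops with an error when it finds them on the node.
  $KUBECTL cordon "$node" &&
    $KUBECTL drain "$node" --ignore-daemonsets
}
```

For this node that would be `enter_maintenance k8s-node03-137`. The explicit cordon is harmless but redundant, since drain also marks the node unschedulable before evicting.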

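Then reboot the node.

Sure enough, after the reboot the problem was gone. Can you believe it?

Believe it or not, I'll keep an eye on it for a while...

For now, bring the node back into service: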

kubectl uncordon k8s-node03-137
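If you would rather not uncordon until the kubelet has reported back in, the step can be gated on the node's Ready condition. This is a sketch only: `leave_maintenance`, the `KUBECTL` override variable, and the 300s default timeout are my own illustrative choices.

```shell
#!/bin/sh
# Override to preview the commands, e.g. KUBECTL="echo kubectl".
KUBECTL="${KUBECTL:-kubectl}"

# Wait until the node reports Ready, then make it schedulable again.
# Usage: leave_maintenance <node-name> [timeout, default 300s]
leave_maintenance() {
  node="$1"
  timeout="${2:-300s}"
  # kubectl wait exits non-zero on timeout, so uncordon is skipped then.
  $KUBECTL wait --for=condition=Ready "node/$node" --timeout="$timeout" &&
    $KUBECTL uncordon "$node"
}
```

Here that would be `leave_maintenance k8s-node03-137`. If the wait times out, the node stays cordoned and you can go back to the logs.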