What is SkyWalking, and why should you add it to your application?

Before introducing SkyWalking, let's first look at what an APM (Application Performance Management) system is.

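1. What is an APM system

APM (Application Performance Management) is a systematic approach to managing application performance and faults through real-time monitoring of enterprise systems. It focuses on monitoring and optimizing an enterprise's key business applications in order to improve their reliability and quality, ensure that users get good service, and lower the total cost of ownership of IT.

An APM system is a tool for understanding system behavior and analyzing performance problems, so that faults can be located and resolved quickly when they occur.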

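Put simply, with the rise of microservices, traditional monolithic applications have been split into small applications with distinct functions. A single user request now passes through several systems, the calls between services are complex, and a failure in any one system can affect the result of the whole request. To address this problem, Google built a distributed tracing system called Dapper, and many internet companies later built their own distributed tracing systems based on Dapper's ideas. These systems are the APM systems of the distributed world.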

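Many APM systems are available today, such as SkyWalking, Pinpoint, and Zipkin:

- Zipkin: an open-source distributed tracing system from Twitter. It collects timing data from services to help troubleshoot latency problems in microservice architectures, and covers data collection, storage, lookup, and presentation.

- Pinpoint: an APM tool for large-scale distributed systems written in Java, open-sourced by Naver in South Korea.

- SkyWalking: an excellent APM component that originated in China. It traces, alerts on, and analyzes the runtime behavior of distributed Java application clusters.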

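2. What is SkyWalking

SkyWalking is an open-source APM project under the Apache Software Foundation, designed for microservice and cloud-native architectures. It uses agents to collect the required metrics automatically and performs distributed tracing. From these call chains and metrics, SkyWalking works out the relationships between applications and between services and computes the corresponding statistics. Its tracing and monitoring cover most mainstream frameworks and containers, such as the Chinese RPC frameworks Dubbo and Motan as well as the internationally used Spring Boot and Spring Cloud. Official website: http://skywalking.apache.org/

SkyWalking has the following features:

- Automatic agents for multiple languages: Java, .NET Core, and Node.js.

- Multiple monitoring approaches: language agents and service mesh.

- Lightweight and efficient. No separate big-data platform is required.

- Modular architecture. Several options are available for the UI, storage, and cluster management.

- Alerting support.

- Good visualization.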

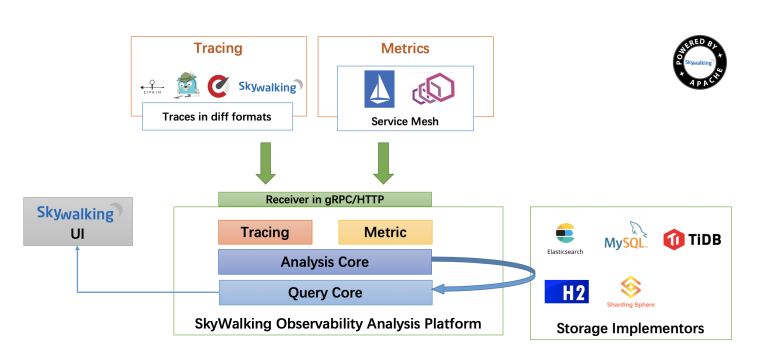

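The figure shows SkyWalking's overall architecture, which consists of the following three parts:

1. The agent (probe) collects data, including Tracing and Metrics data. It is installed on the server where the service runs so that the data is easy to obtain.

2. The Observability Analysis Platform (OAP) receives the data sent by the agents, aggregates and computes it in memory with its analysis engine (Analysis Core), and then writes the results to the configured storage, such as Elasticsearch, MySQL, or H2. The OAP also exposes an HTTP query interface through its query engine (Query Core).

3. SkyWalking provides a separate UI for viewing the data. The UI calls the interfaces exposed by the OAP, retrieves the data, and displays it.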

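3. Setup and usage

Setup is fairly simple, and the official project provides deployment examples.

As mentioned above, SkyWalking's backend storage can be Elasticsearch, MySQL, H2, and so on. I use Elasticsearch here, and to make it easy to scale and to collect logs from other applications, I deploy Elasticsearch separately.

3.1 Setting up Elasticsearch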

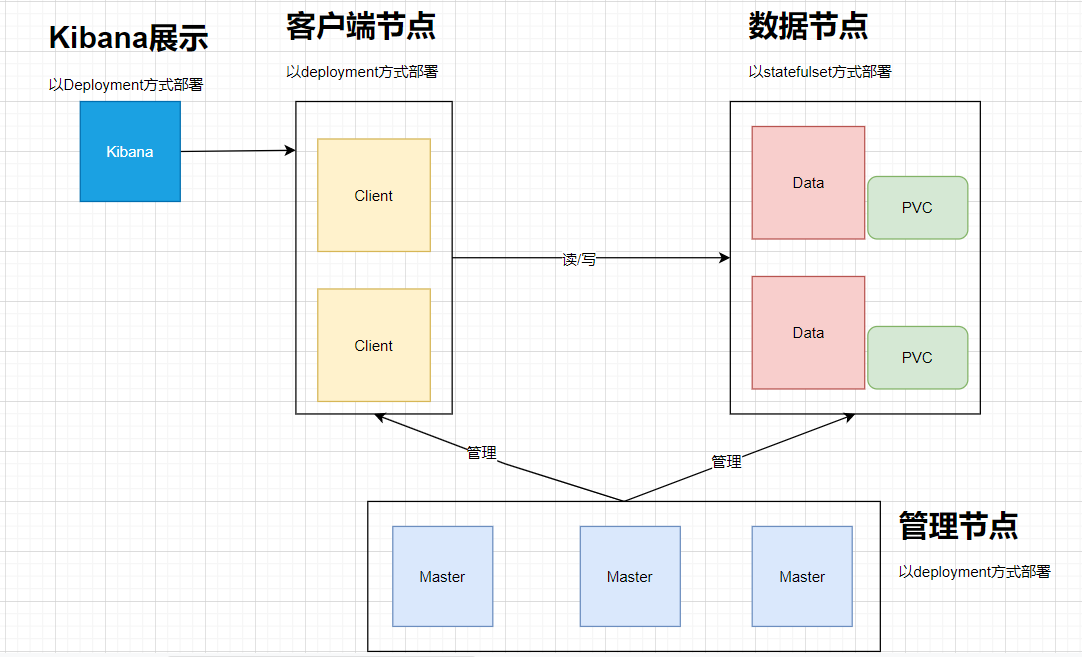

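To make ES easier to scale, the nodes are split by role into master nodes, data nodes, and client nodes. The overall architecture is shown in the figure, where:

- The Elasticsearch data node Pods are deployed as a StatefulSet

- The Elasticsearch master node Pods are deployed as a Deployment

- The Elasticsearch client node Pods are deployed as a Deployment, and their internal Service gives read/write requests access to the data nodes

- Kibana is deployed as a Deployment, and its Service is reachable from outside the Kubernetes cluster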

(1) First create the elastic namespace (es-ns.yaml):

    apiVersion: v1
    kind: Namespace
    metadata:
      name: elastic

Run kubectl apply -f es-ns.yaml.

(2) Deploy the ES master node

The manifest is as follows (es-master.yaml):

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: elastic
      name: elasticsearch-master-config
      labels:
        app: elasticsearch
        role: master
    data:
      elasticsearch.yml: |-
        cluster.name: ${CLUSTER_NAME}
        node.name: ${NODE_NAME}
        discovery.seed_hosts: ${NODE_LIST}
        cluster.initial_master_nodes: ${MASTER_NODES}
        network.host: 0.0.0.0
        node:
          master: true
          data: false
          ingest: false
        xpack.security.enabled: true
        xpack.monitoring.collection.enabled: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: elastic
      name: elasticsearch-master
      labels:
        app: elasticsearch
        role: master
    spec:
      ports:
      - port: 9300
        name: transport
      selector:
        app: elasticsearch
        role: master
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: elastic
      name: elasticsearch-master
      labels:
        app: elasticsearch
        role: master
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: elasticsearch
          role: master
      template:
        metadata:
          labels:
            app: elasticsearch
            role: master
        spec:
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: elasticsearch-master
            image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
            env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-master
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms512m -Xmx512m"
            ports:
            - containerPort: 9300
              name: transport
            volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: storage
              mountPath: /data
          volumes:
          - name: config
            configMap:
              name: elasticsearch-master-config
          - name: "storage"
            emptyDir:
              medium: ""

Then run kubectl apply -f es-master.yaml to create the resources. The deployment has succeeded once the pod is in the Running state.

    # kubectl get pod -n elastic
    NAME                                    READY   STATUS    RESTARTS   AGE
    elasticsearch-master-77d5d6c9db-xt5kq   1/1     Running   0          67s

(3) Deploy the ES data node

The manifest is as follows (es-data.yaml):

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: elastic
      name: elasticsearch-data-config
      labels:
        app: elasticsearch
        role: data
    data:
      elasticsearch.yml: |-
        cluster.name: ${CLUSTER_NAME}
        node.name: ${NODE_NAME}
        discovery.seed_hosts: ${NODE_LIST}
        cluster.initial_master_nodes: ${MASTER_NODES}
        network.host: 0.0.0.0
        node:
          master: false
          data: true
          ingest: false
        xpack.security.enabled: true
        xpack.monitoring.collection.enabled: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: elastic
      name: elasticsearch-data
      labels:
        app: elasticsearch
        role: data
    spec:
      ports:
      - port: 9300
        name: transport
      selector:
        app: elasticsearch
        role: data
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      namespace: elastic
      name: elasticsearch-data
      labels:
        app: elasticsearch
        role: data
    spec:
      serviceName: "elasticsearch-data"
      selector:
        matchLabels:
          app: elasticsearch
          role: data
      template:
        metadata:
          labels:
            app: elasticsearch
            role: data
        spec:
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: elasticsearch-data
            image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
            env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-data
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms1024m -Xmx1024m"
            ports:
            - containerPort: 9300
              name: transport
            volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: elasticsearch-data-persistent-storage
              mountPath: /data/db
          volumes:
          - name: config
            configMap:
              name: elasticsearch-data-config
      volumeClaimTemplates:
      - metadata:
          name: elasticsearch-data-persistent-storage
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 20Gi

Run kubectl apply -f es-data.yaml to create the resources. The deployment has succeeded once the pod is in the Running state.

    # kubectl get pod -n elastic
    NAME                                    READY   STATUS    RESTARTS   AGE
    elasticsearch-data-0                    1/1     Running   0          4s
    elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          2m35s
    elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          2m35s
    elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          2m35s

(4) Deploy the ES client node

The manifest is as follows (es-client.yaml):

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: elastic
      name: elasticsearch-client-config
      labels:
        app: elasticsearch
        role: client
    data:
      elasticsearch.yml: |-
        cluster.name: ${CLUSTER_NAME}
        node.name: ${NODE_NAME}
        discovery.seed_hosts: ${NODE_LIST}
        cluster.initial_master_nodes: ${MASTER_NODES}
        network.host: 0.0.0.0
        node:
          master: false
          data: false
          ingest: true
        xpack.security.enabled: true
        xpack.monitoring.collection.enabled: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: elastic
      name: elasticsearch-client
      labels:
        app: elasticsearch
        role: client
    spec:
      ports:
      - port: 9200
        name: client
      - port: 9300
        name: transport
      selector:
        app: elasticsearch
        role: client
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: elastic
      name: elasticsearch-client
      labels:
        app: elasticsearch
        role: client
    spec:
      selector:
        matchLabels:
          app: elasticsearch
          role: client
      template:
        metadata:
          labels:
            app: elasticsearch
            role: client
        spec:
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: elasticsearch-client
            image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
            env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-client
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms256m -Xmx256m"
            ports:
            - containerPort: 9200
              name: client
            - containerPort: 9300
              name: transport
            volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: storage
              mountPath: /data
          volumes:
          - name: config
            configMap:
              name: elasticsearch-client-config
          - name: "storage"
            emptyDir:
              medium: ""

Run kubectl apply -f es-client.yaml to create the resources. The deployment has succeeded once the pod is in the Running state.

    # kubectl get pod -n elastic
    NAME                                    READY   STATUS    RESTARTS   AGE
    elasticsearch-client-f79cf4f7b-pbz9d    1/1     Running   0          5s
    elasticsearch-data-0                    1/1     Running   0          3m11s
    elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          5m42s
    elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          5m42s
    elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          5m42s
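Checking the STATUS column by eye gets tedious as the number of pods grows. As a small sketch, the table printed by kubectl get pod can be filtered with awk so that only pods that are neither Running nor Completed are listed. The function name pods_not_ready is hypothetical and not part of kubectl.

```shell
# pods_not_ready: reads `kubectl get pod` table output on stdin and prints
# the name and status of every pod whose STATUS column is neither Running
# nor Completed. Hypothetical helper, for illustration only.
pods_not_ready() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage (requires access to the cluster):
#   kubectl get pod -n elastic | pods_not_ready
```

Empty output means every pod in the namespace has reached Running or Completed.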

(5) Generate passwords

We enabled the xpack security module to protect the cluster, so we need initial passwords. Run the bin/elasticsearch-setup-passwords command inside the client node container, as shown below, to generate passwords for the built-in users:

    # kubectl exec $(kubectl get pods -n elastic | grep elasticsearch-client | sed -n 1p | awk '{print $1}') \
        -n elastic \
        -- bin/elasticsearch-setup-passwords auto -b
    Changed password for user apm_system
    PASSWORD apm_system = QNSdaanAQ5fvGMrjgYnM
    Changed password for user kibana_system
    PASSWORD kibana_system = UFPiUj0PhFMCmFKvuJuc
    Changed password for user kibana
    PASSWORD kibana = UFPiUj0PhFMCmFKvuJuc
    Changed password for user logstash_system
    PASSWORD logstash_system = Nqes3CCxYFPRLlNsuffE
    Changed password for user beats_system
    PASSWORD beats_system = Eyssj5NHevFjycfUsPnT
    Changed password for user remote_monitoring_user
    PASSWORD remote_monitoring_user = 7Po4RLQQZ94fp7F31ioR
    Changed password for user elastic
    PASSWORD elastic = n816QscHORFQMQWQfs4U
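The password of the elastic user is needed in the steps that follow. A minimal sketch, assuming the output keeps the "PASSWORD user = password" line format shown above, pulls a single user's password out of saved output instead of copying it by hand. The helper name extract_password and the file name passwords.txt are hypothetical.

```shell
# extract_password USER: reads elasticsearch-setup-passwords output on stdin
# and prints the password from the matching "PASSWORD USER = ..." line.
# The user name is compared exactly, so "kibana" does not match "kibana_system".
extract_password() {
  awk -v user="$1" '$1 == "PASSWORD" && $2 == user && $3 == "=" { print $4 }'
}

# Usage, assuming the command output was saved to passwords.txt:
#   extract_password elastic < passwords.txt
```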

Note that the password of the elastic user also needs to be stored in a Kubernetes Secret object:

    kubectl create secret generic elasticsearch-pw-elastic \
      -n elastic \
      --from-literal password=n816QscHORFQMQWQfs4U
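To confirm that the Secret holds the expected value, it can be read back and decoded. The sketch below only wraps standard kubectl and base64 calls; the function name read_es_password is hypothetical.

```shell
# read_es_password: prints the decoded "password" key of the
# elasticsearch-pw-elastic Secret in the elastic namespace.
# Secret data is stored base64-encoded, hence the decode step.
read_es_password() {
  kubectl get secret elasticsearch-pw-elastic -n elastic \
    -o jsonpath='{.data.password}' | base64 -d
}

# Usage (requires access to the cluster):
#   read_es_password
```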

(6) Verify the cluster status

    kubectl exec -n elastic \
      $(kubectl get pods -n elastic | grep elasticsearch-client | sed -n 1p | awk '{print $1}') \
      -- curl -u elastic:n816QscHORFQMQWQfs4U "http://elasticsearch-client.elastic:9200/_cluster/health?pretty"
    {
      "cluster_name" : "elasticsearch",
      "status" : "green",
      "timed_out" : false,
      "number_of_nodes" : 3,
      "number_of_data_nodes" : 1,
      "active_primary_shards" : 2,
      "active_shards" : 2,
      "relocating_shards" : 0,
      "initializing_shards" : 0,
      "unassigned_shards" : 0,
      "delayed_unassigned_shards" : 0,
      "number_of_pending_tasks" : 0,
      "number_of_in_flight_fetch" : 0,
      "task_max_waiting_in_queue_millis" : 0,
      "active_shards_percent_as_number" : 100.0
    }
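If this check is to be scripted, the status field can be extracted from the health response and compared with green. This is a rough sketch that relies on the JSON layout shown above and uses sed rather than a real JSON parser. The function names cluster_status and require_green are hypothetical.

```shell
# cluster_status: reads the JSON returned by _cluster/health on stdin and
# prints the value of its "status" field (green, yellow, or red).
cluster_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# require_green: succeeds (exit status 0) only when the status read from
# stdin is green, so it can gate later steps of a script.
require_green() {
  [ "$(cluster_status)" = "green" ]
}

# Usage: pipe the curl output from the command above into require_green, e.g.
#   ... curl -s -u elastic:<password> <health-url> | require_green && echo "cluster OK"
```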

The status above is green, which means the cluster is healthy. The ES cluster is now set up. To make it easier to work with, you can also deploy a Kibana service (kibana.yaml), as follows:

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: elastic
      name: kibana-config
      labels:
        app: kibana
    data:
      kibana.yml: |-
        server.host: 0.0.0.0
        elasticsearch:
          hosts: ${ELASTICSEARCH_HOSTS}
          username: ${ELASTICSEARCH_USER}
          password: ${ELASTICSEARCH_PASSWORD}
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: elastic
      name: kibana
      labels:
        app: kibana
    spec:
      ports:
      - port: 5601
        name: webinterface
      selector:
        app: kibana
    ---
    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      annotations:
        prometheus.io/http-probe: 'true'
        prometheus.io/scrape: 'true'
      name: kibana
      namespace: elastic
    spec:
      rules:
      - host: kibana.coolops.cn
        http:
          paths:
          - backend:
              serviceName: kibana
              servicePort: 5601
            path: /
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: elastic
      name: kibana
      labels:
        app: kibana
    spec:
      selector:
        matchLabels:
          app: kibana
      template:
        metadata:
          labels:
            app: kibana
        spec:
          containers:
          - name: kibana
            image: docker.elastic.co/kibana/kibana:7.8.0
            ports:
            - containerPort: 5601
              name: webinterface
            env:
            - name: ELASTICSEARCH_HOSTS
              value: "http://elasticsearch-client.elastic.svc.cluster.local:9200"
            - name: ELASTICSEARCH_USER
              value: "elastic"
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-pw-elastic
                  key: password
            volumeMounts:
            - name: config
              mountPath: /usr/share/kibana/config/kibana.yml
              readOnly: true
              subPath: kibana.yml
          volumes:
          - name: config
            configMap:
              name: kibana-config

Then run kubectl apply -f kibana.yaml to create Kibana, and check that the pod is in the Running state.

    # kubectl get pod -n elastic
    NAME                                    READY   STATUS    RESTARTS   AGE
    elasticsearch-client-f79cf4f7b-pbz9d    1/1     Running   0          30m
    elasticsearch-data-0                    1/1     Running   0          33m
    elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          36m
    elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          36m
    elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          36m
    kibana-6b9947fccb-4vp29                 1/1     Running   0          3m51s

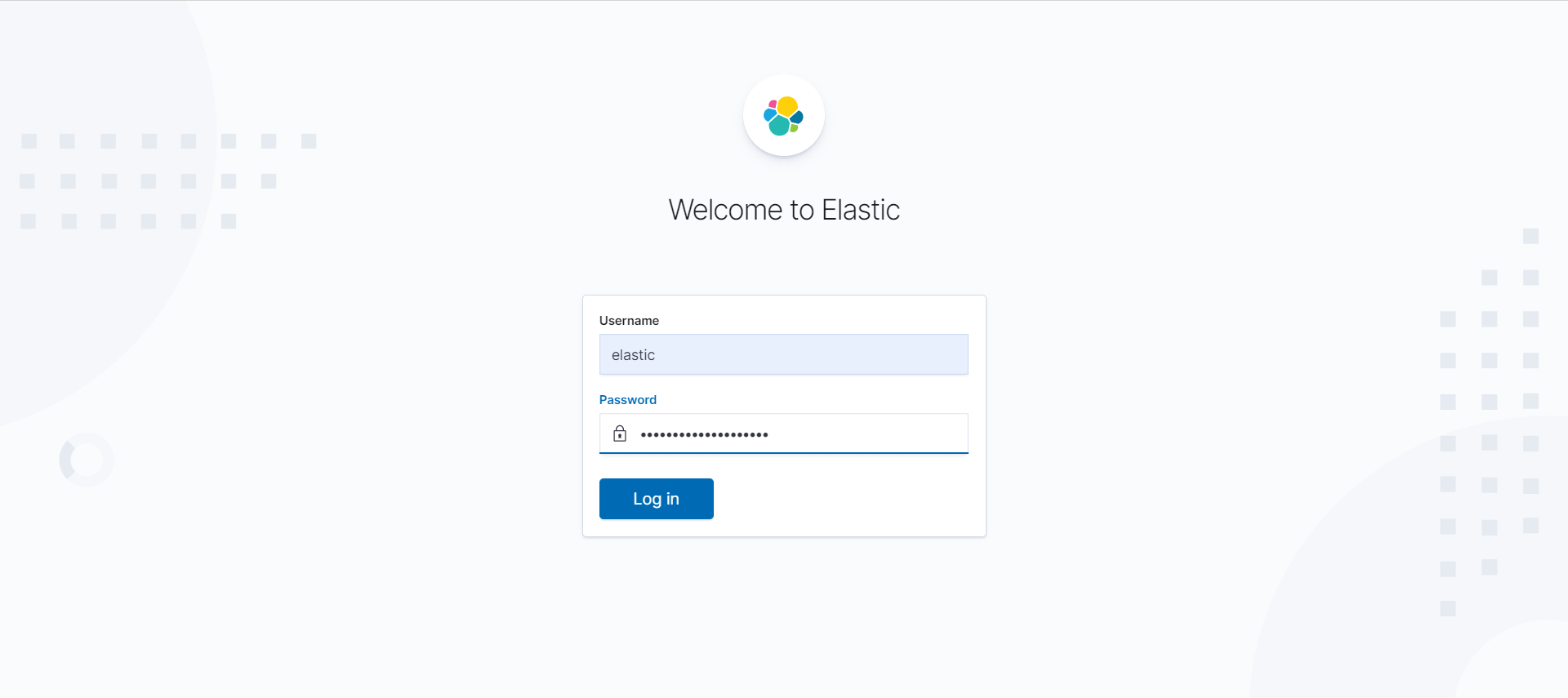

As shown in the figure, you can log in with the elastic user and the generated password that we stored in the Secret object above:

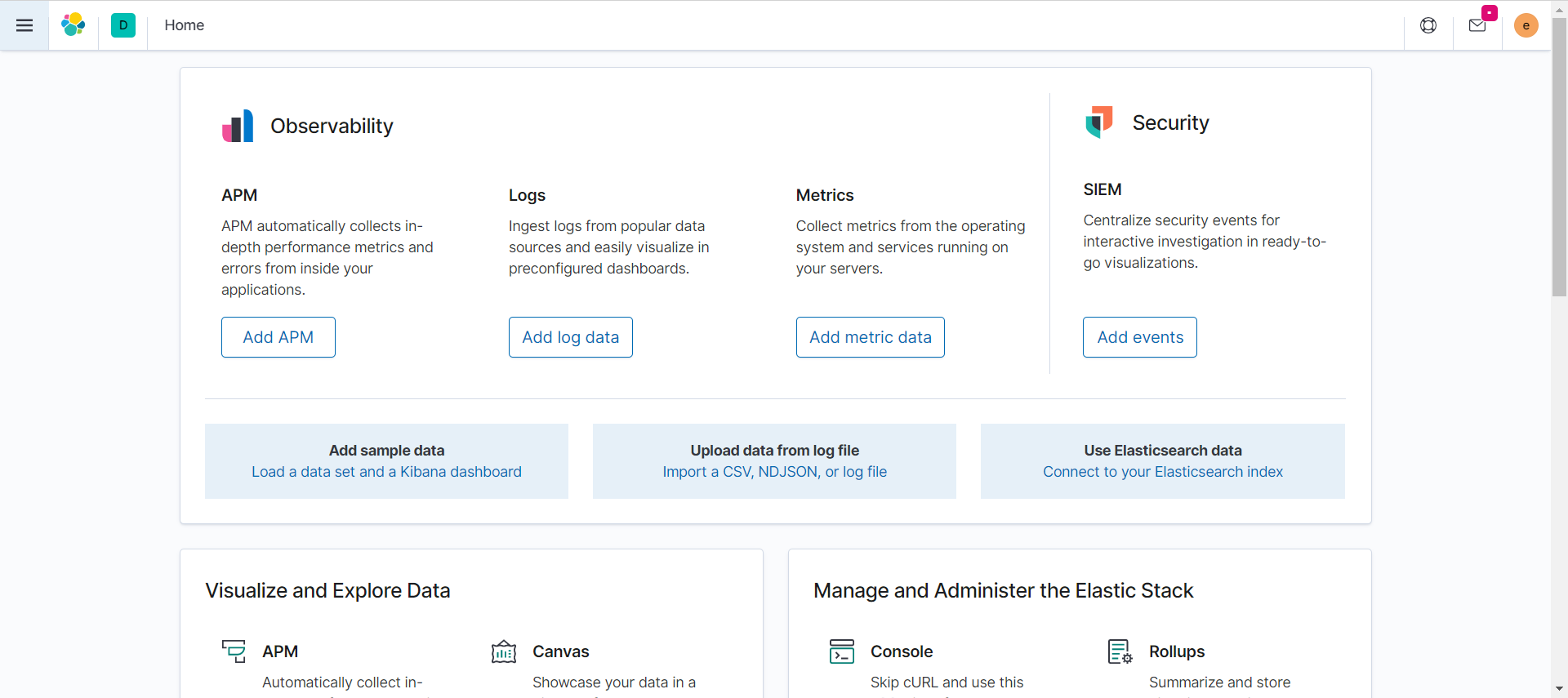

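After logging in, the interface looks like this:

3.2 Setting up the SkyWalking server

I use Helm for the installation.

(1) Install Helm. Helm 3 is used here.

    wget https://get.helm.sh/helm-v3.0.0-linux-amd64.tar.gz
    tar zxvf helm-v3.0.0-linux-amd64.tar.gz
    mv linux-amd64/helm /usr/bin/

Note: Helm 3 no longer has the Tiller server-side component and talks to the cluster directly using kubeconfig, so it is best installed on a machine that already has a working kubeconfig, such as a master node.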

(2) Download the SkyWalking chart code

    mkdir /home/install/package -p
    cd /home/install/package
    git clone https://github.com/apache/skywalking-kubernetes.git

(3) Enter the chart directory and install

The skywalking namespace must exist before the install, so create it first with kubectl create ns skywalking, then run:

    cd skywalking-kubernetes/chart
    helm repo add elastic https://helm.elastic.co
    helm dep up skywalking
    helm install my-skywalking skywalking -n skywalking \
      --set elasticsearch.enabled=false \
      --set elasticsearch.config.host=elasticsearch-client.elastic.svc.cluster.local \
      --set elasticsearch.config.port.http=9200 \
      --set elasticsearch.config.user=elastic \
      --set elasticsearch.config.password=n816QscHORFQMQWQfs4U

(4) Check that all pods are in the Running state

    # kubectl get pod -n skywalking
    NAME                                 READY   STATUS      RESTARTS   AGE
    my-skywalking-es-init-x89pr          0/1     Completed   0          15h
    my-skywalking-oap-694fc79d55-2dmgr   1/1     Running     0          16h
    my-skywalking-oap-694fc79d55-bl5hk   1/1     Running     4          16h
    my-skywalking-ui-6bccffddbd-d2xhs    1/1     Running     0          16h

You can also inspect the installed chart with the following command.

    # helm list --all-namespaces
    NAME            NAMESPACE    REVISION   UPDATED                                   STATUS     CHART              APP VERSION
    my-skywalking   skywalking   1          2020-09-29 14:42:10.952238898 +0800 CST   deployed   skywalking-3.1.0   8.1.0

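To change the configuration, edit values.yaml directly. For example, to change the my-skywalking-ui Service to NodePort, modify it as follows:

    .....
    ui:
      name: ui
      replicas: 1
      image:
        repository: apache/skywalking-ui
        tag: 8.1.0
        pullPolicy: IfNotPresent
    ....
      service:
        type: NodePort
        # clusterIP: None
        externalPort: 80
        internalPort: 8080
    ....

Then upgrade the release with the following command. The release name must match the one used at install time, my-skywalking.

    helm upgrade my-skywalking ../skywalking -n skywalking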
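As an alternative to editing values.yaml, the same change can be passed on the command line. When new --set flags are given on upgrade, Helm does not merge them with the values supplied at install time unless --reuse-values is passed, so the sketch below includes it to keep the external Elasticsearch settings. It assumes the release is named my-skywalking as in the install command and that the chart exposes ui.service.type as in the values.yaml excerpt above. The wrapper function name is hypothetical.

```shell
# upgrade_ui_nodeport CHART_DIR: upgrades the my-skywalking release in the
# skywalking namespace, keeping the values supplied at install time and
# switching the UI Service type to NodePort.
upgrade_ui_nodeport() {
  helm upgrade my-skywalking "$1" -n skywalking \
    --reuse-values \
    --set ui.service.type=NodePort
}

# Usage, run from a directory where ../skywalking is the chart:
#   upgrade_ui_nodeport ../skywalking
```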

Then check whether the Service type has changed to NodePort.

    # kubectl get svc -n skywalking
    NAME                TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)               AGE
    my-skywalking-oap   ClusterIP   10.109.109.131   <none>        12800/TCP,11800/TCP   88s
    my-skywalking-ui    NodePort    10.102.247.110   <none>        80:32563/TCP          88s
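The node port is the number after the colon in the PORT(S) column (32563 in this output). A small sketch, assuming the table layout shown above, extracts it for a named NodePort Service so it can be used to build the UI address. The function name node_port_of is hypothetical.

```shell
# node_port_of SERVICE: reads `kubectl get svc` table output on stdin and
# prints the node port of SERVICE, taken from a PORT(S) entry such as
# 80:32563/TCP. Only rows whose TYPE is NodePort are considered, and only
# the first port mapping of the Service is read.
node_port_of() {
  awk -v svc="$1" '$1 == svc && $2 == "NodePort" { split($5, p, "[:/]"); print p[2] }'
}

# Usage (requires access to the cluster):
#   kubectl get svc -n skywalking | node_port_of my-skywalking-ui
```

The UI is then reachable at the address of any cluster node on that port.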

You can now view SkyWalking through its UI, which looks like this:

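3.3 Connecting applications to the SkyWalking agent

The SkyWalking server is now installed, so the next step is to connect applications to it. Connecting an application simply means loading the SkyWalking agent when the application starts. I describe two ways of attaching the agent in a container:

- Package the files and libraries the agent needs into the application image when building it

- Attach the agent to the application container as a sidecar

First, download the agent package:

    wget https://mirrors.tuna.tsinghua.edu.cn/apache/skywalking/8.1.0/apache-skywalking-apm-8.1.0.tar.gz
    tar xf apache-skywalking-apm-8.1.0.tar.gz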

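(1) Package the agent files and libraries into the image when building it

Write a Dockerfile like the one below and build the image directly. This is the simpler approach.

    FROM harbor-test.coolops.com/coolops/jdk:8u144_test
    RUN mkdir -p /usr/skywalking/agent/
    ADD apache-skywalking-apm-bin/agent/ /usr/skywalking/agent/

Note: this Dockerfile builds the base image that our applications are packaged on; it is not the application's own Dockerfile.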

(2) Add the agent package as a sidecar

First build an image that contains only the agent, as follows:

    FROM busybox:latest
    ENV LANG=C.UTF-8
    RUN set -eux && mkdir -p /usr/skywalking/agent/
    ADD apache-skywalking-apm-bin/agent/ /usr/skywalking/agent/
    WORKDIR /

Then write the Deployment manifest as shown below. The init container copies the agent into a shared emptyDir volume, and the application container mounts that volume at /usr/skywalking/agent.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: demo-sw
      name: demo-sw
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: demo-sw
      template:
        metadata:
          labels:
            name: demo-sw
        spec:
          initContainers:
          - image: innerpeacez/sw-agent-sidecar:latest
            name: sw-agent-sidecar
            imagePullPolicy: IfNotPresent
            command: ['sh']
            args: ['-c','mkdir -p /skywalking/agent && cp -r /usr/skywalking/agent/* /skywalking/agent']
            volumeMounts:
            - mountPath: /skywalking/agent
              name: sw-agent
          containers:
          - image: harbor.coolops.cn/skywalking-java:1.7.9
            name: demo
            # Run through a shell so that the command line is split into
            # arguments and ${SW_AGENT_NAME} is expanded from the environment
            command: ['sh', '-c', 'java -javaagent:/usr/skywalking/agent/skywalking-agent.jar -Dskywalking.agent.service_name=${SW_AGENT_NAME} -jar demo.jar']
            volumeMounts:
            - mountPath: /usr/skywalking/agent
              name: sw-agent
            ports:
            - containerPort: 80
            env:
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: 'my-skywalking-oap.skywalking.svc.cluster.local:11800'
            - name: SW_AGENT_NAME
              value: cartechfin-open-platform-skywalking
          volumes:
          - name: sw-agent
            emptyDir: {}

When starting the application, all that is needed is to load the SkyWalking javaagent, as follows:

    java -javaagent:/path/to/skywalking-agent/skywalking-agent.jar -Dskywalking.agent.service_name=${SW_AGENT_NAME} -jar yourApp.jar
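In a container entrypoint this command line is usually assembled from environment variables. The sketch below shows one way to do that. The AGENT_HOME variable, the fallback service name demo-app, and the function name agent_java_cmd are assumptions made for illustration, while SW_AGENT_NAME and the agent path match the Deployment above.

```shell
# agent_java_cmd JAR: prints the java command line that starts JAR with the
# SkyWalking agent attached.
#   AGENT_HOME     directory containing skywalking-agent.jar
#                  (assumed default: /usr/skywalking/agent)
#   SW_AGENT_NAME  service name reported to SkyWalking
#                  (assumed fallback: demo-app)
agent_java_cmd() {
  agent_home="${AGENT_HOME:-/usr/skywalking/agent}"
  service="${SW_AGENT_NAME:-demo-app}"
  printf 'java -javaagent:%s/skywalking-agent.jar -Dskywalking.agent.service_name=%s -jar %s\n' \
    "$agent_home" "$service" "$1"
}

# Usage in an entrypoint script, provided none of the values contain spaces:
#   exec $(agent_java_cmd demo.jar)
```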

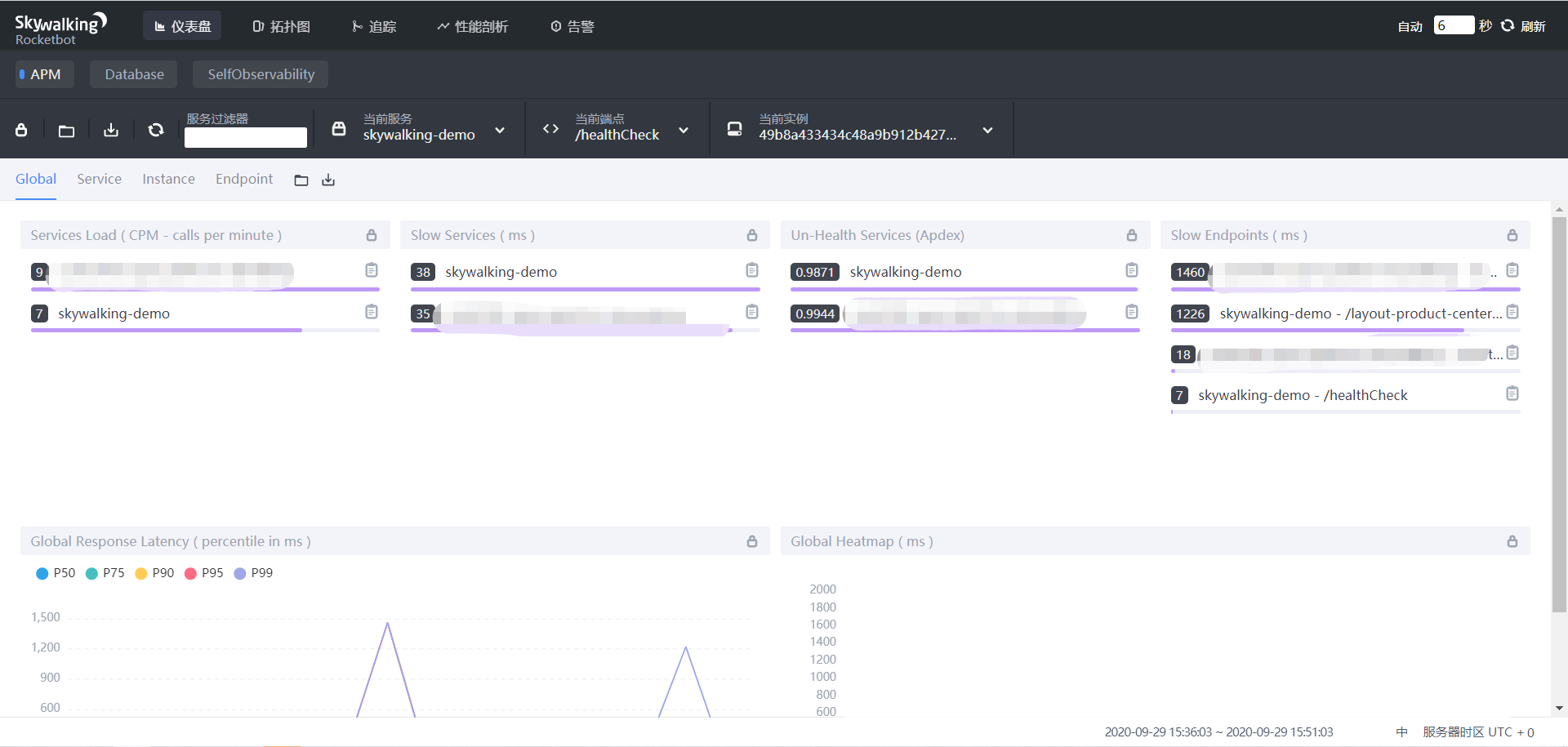

然后我们就可以在UI界面看到注册上来的应用了,如下:

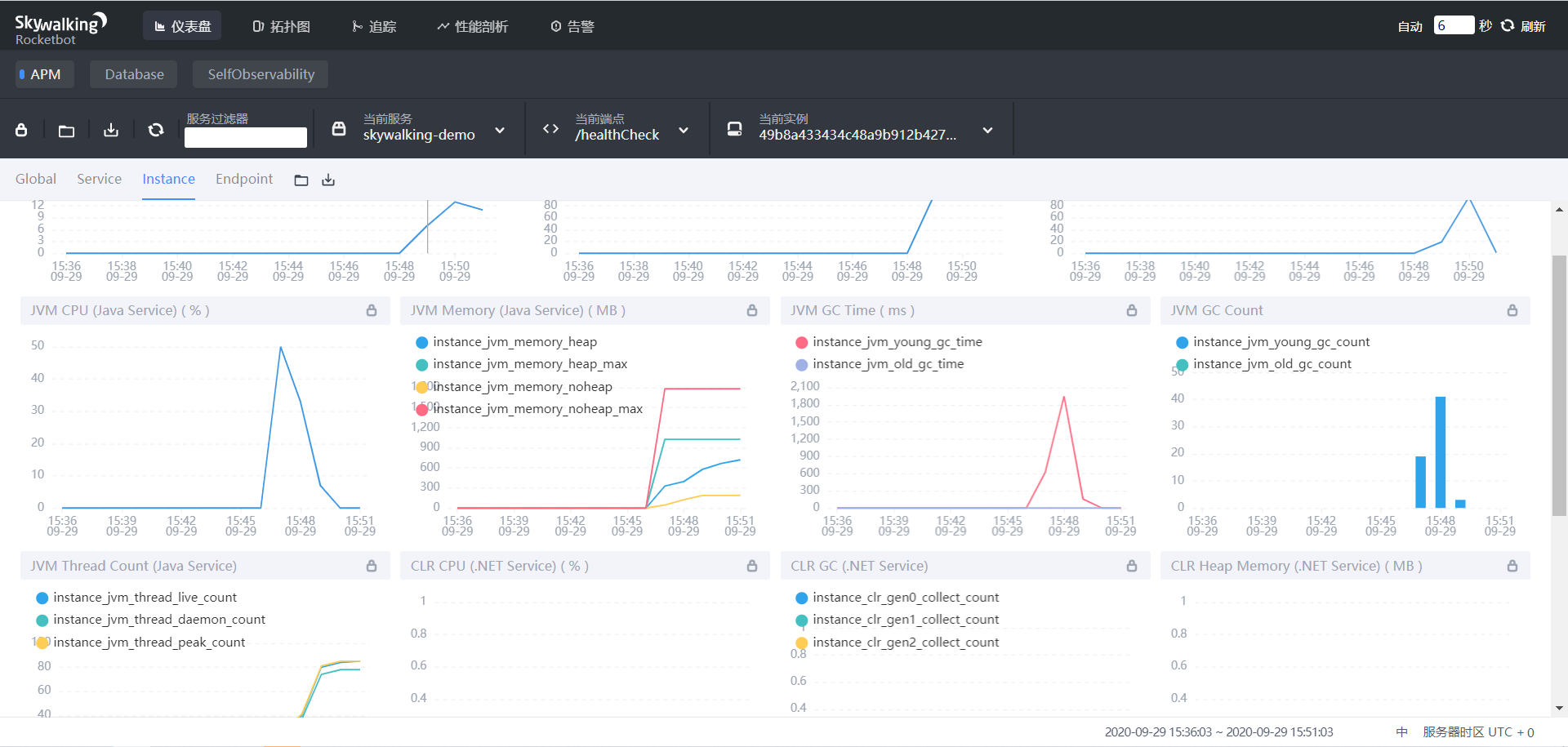

可以查看JVM数据,如下:

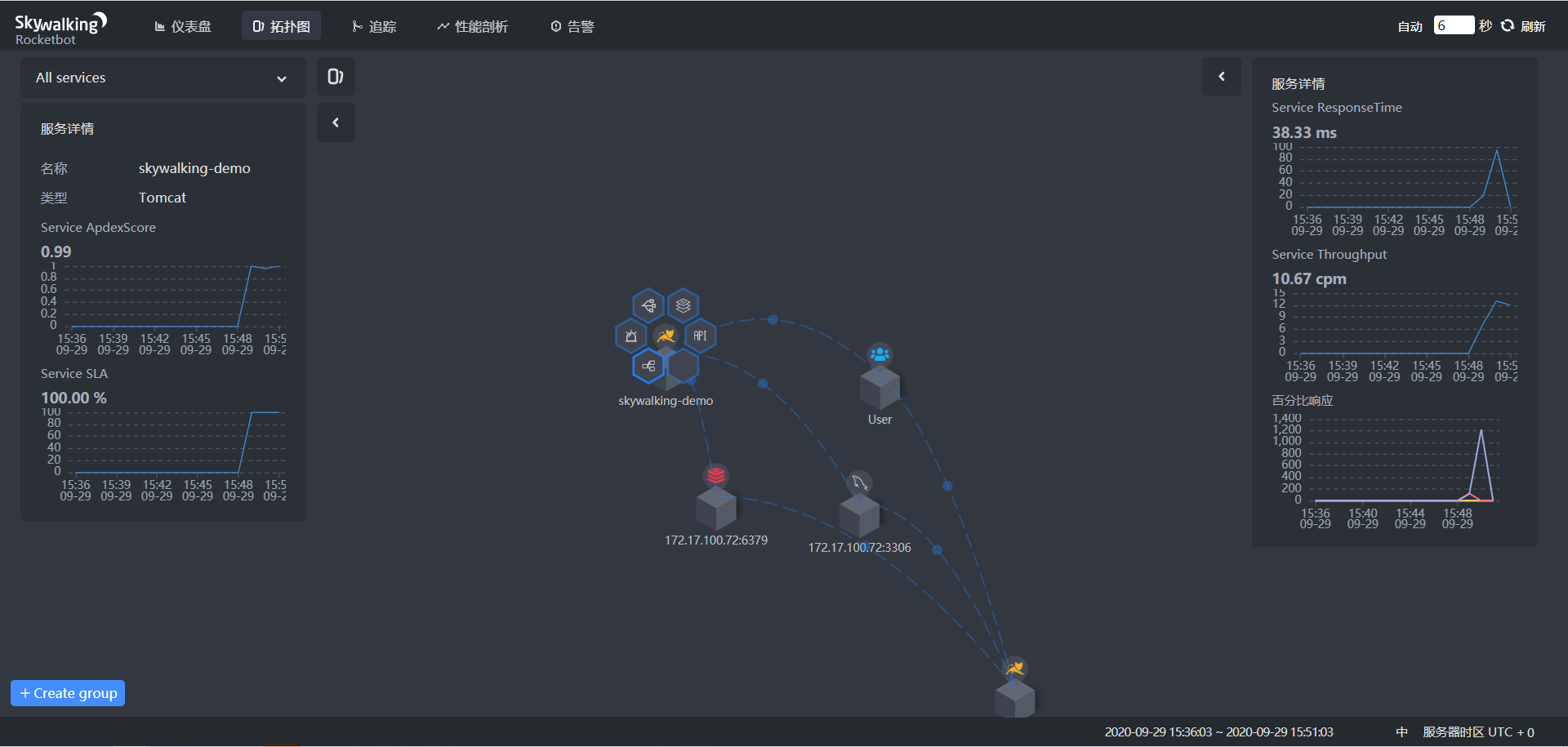

也可以查看其拓扑图,如下:

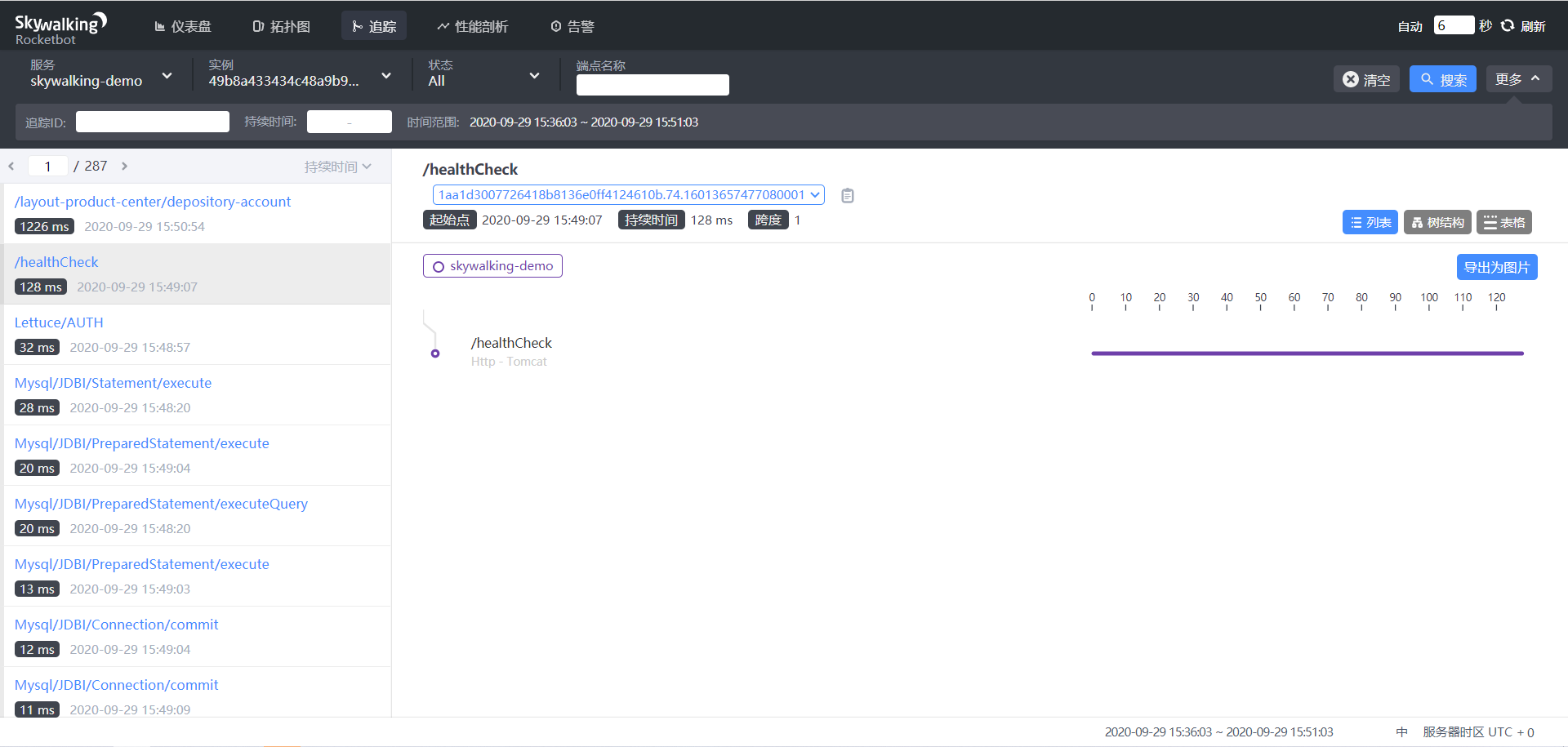

还可以追踪不同的uri,如下:

到这里整个服务就搭建完了,你也可以试一下。

References:

1. https://github.com/apache/skywalking-kubernetes

2. http://skywalking.apache.org/zh/blog/2019-08-30-how-to-use-Skywalking-Agent.html

3. https://github.com/apache/skywalking/blob/5.x/docs/cn/Deploy-skywalking-agent-CN.md