Configuration manifests

- es.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging
---
# RBAC authn and authz
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "services"
  - "namespaces"
  - "endpoints"
  verbs:
  - "get"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kube-system
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: elasticsearch-logging
  namespace: kube-system
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""
---
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v6.2.5
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  serviceName: elasticsearch-logging
  replicas: 1
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging
      version: v6.2.5
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v6.2.5
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: harbor.querycap.com/rk-infra/elasticsearch:6.8.8-v0.4
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data/
        - mountPath: /usr/share/elasticsearch/config/elastic-certificates.p12
          name: keystore
          readOnly: true
          subPath: elastic-certificates.p12
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: "xpack.security.enabled"
          value: "true"
        - name: "xpack.security.transport.ssl.enabled"
          value: "true"
        - name: xpack.security.transport.ssl.verification_mode
          value: "certificate"
        - name: xpack.security.transport.ssl.keystore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
        - name: xpack.security.transport.ssl.truststore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
        - name: "xpack.ml.enabled"
          value: "false"
        - name: "xpack.monitoring.collection.enabled"
          value: "true"
        - name: "xpack.license.self_generated.type"
          value: "basic"
      volumes:
      - name: elasticsearch-logging
        hostPath:
          path: /data/es/
      - name: keystore
        secret:
          secretName: es-keystore
          defaultMode: 0444
      nodeSelector:
        es: data
      tolerations:
      - key: "dedicated"
        operator: "Equal"
        value: "es"
        effect: "NoExecute"
      # Elasticsearch requires vm.max_map_count to be at least 262144.
      # If your OS already sets up this number to a higher value, feel free
      # to remove this init container.
      initContainers:
      - name: elasticsearch-logging-init
        image: alpine:3.6
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: elasticsearch-volume-init
        image: alpine:3.6
        command:
        - chmod
        - -R
        - "777"
        - /usr/share/elasticsearch/data/
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data/
```

- es-secret.yaml

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: es-keystore
  namespace: kube-system
data:
  elastic-certificates.p12: MIINdwIBAzCCDTAGCSqGSIb3DQEHAaCCDSEEgg0dMIINGTCCBW0GCSqGSIb3DQEHAaCCBV4EggVaMIIFVjCCBVIGCyqGSIb3DQEMCgECoIIE+zCCBPcwKQYKKoZIhvcNAQwBAzAbBBQs3hsugdvGL7FWl+IJ6pxClg251AIDAMNQBIIEyF81+gPdcBuo0evFTyLXRtMD+YbLyJb7o3xzzS5BwZb+kvSCSw+lGkGybsL5iglxcBogmjTUbaSfjUbzCQ0xxisKVf1yR9DeP1e6Dm6lzF1lhOKYnxEtR813TXABy85ZEtczIDZkM5C+VKUoz/k4vuSLacaRaoEw4VKvXNo9mX+quzKajWKauZwDV9iP5Kf+4UZw6cQCPT4Xv2t7rab/l6e7peHL/r8hKDMmZAfRNgzjTMV1CrhIRHLPc9b5eOTUfk0uwIdjDhEE9ElB3dhbZFzbMItFPA2L2cCFi42eY1rQKATcoDpifF9NMjWJ8GtSnRmvc0irsJku8yoVZWDyghNgXAQT8JxGy9daV3WAMMbUIsqaV0r09WyH8kAikj/C+Cz9HwyctQs3vJc3fBMq5Dh7cxqaVctvVkxzcTPTv/OnCRg8dXto07xdsnTE37/qvrzw1cwyv5oZ2eBsem+490ulyWCIMrYN+owX1RScnPnI5RjOun2fRwN9G18LJrS6iJuSZVd4cQcWvMO/zmrsKga0KW3VFtJvTE+Pxad0wqN5h0Tx0DWhTJ8iqhMwPL+uHnhAQQUFvFPFSJEWwAYZgbGxYYRXkiZSML3KMWC57+2UYPKgSQHAVDc/RI+AJlMYfRw6QD174OZBG7PiBWJEiRtLqvH2dhcC9mGJEtOgrJyn/CDtSKgBnQm/beHG47BD4esX6A+RUgbvwsn4urYVMPy2qOlefAnSoru58bueQlt+j5CcsiQtaRlw3rzS+5V6U+VSFgVMZxsUzl8n3uV7uMfDSOx6abFGBMw8QNSMQhfaC9qqyU45QkyDeEvpyXY4ThDMV8Cw75g99+LpLfz1BHS2p/iG/6056Qmt7MC/3N6VVrvh5LCWQ6uFjGebqH9HKLDNHq31V7k50W0uZZdQLR0hFfnAX02zb4Rsen1vXvWH5kpu90tNe43IIzvFCD/dHM6L7tNzdNd3ke2bSmqsqp2bDAQew3Wpj3aWdI+HzjTmwONY7S5V88MQoQTeWXcjB8snSQkXYH4fh/vGfL+MpV+pSVtylIauDnMrj2JFOETGmQGHUuk9A1ofjzGdqcMIOGalzDrOb14eC5BVgfnKPOtR4bXar+UMmIIJwAaMQRqVO5D7mNhSPBwGPEW8SQQBbSfELaYHMi9q/Ii6B6D4knxt9eoWlDj7o4/M9omTuX53/HtWB1/4FbDinBNltr55dyazxPjRgzwAIr8mqUxxQoitWVys0oXos7yhizwdKn5/EaLJMo9ii9yIW058qvwpJ+juO36NCW1YK645X/fvu8+1UVC9VVHTxJSGei+RmHXJwRCVqSVG4ckHWPdlhKYPj3auMwkbWNqDOVipT13fwpTws2aGsxld4K6iWT9urbtxxhx8Hg0q35NMEILUODdjF3BbgsXZW40Hj3FN6ALvgFHolU7Homgna+Euvs/TJM62jrr02qZsRnZun5TvYRS9W5FVMU5Vjicfnp+ieCFM2Rl8iasd+h0t3DPBgv9m/xvYqpgD5aUyO+xyL0ikC8se0Nm+IxVKKV1ZATcLaXzZE3EkKu1Fk7T66ODEpkYmzkWzyP+XexqnDUEiQ35iR1yRyoKViArHgxmVx3u3TDo+Frt7c+wP2c/VkTFEMB8GCSqGSIb3DQEJFDESHhAAaQBuAHMAdABhAG4AYwBlMCEGCSqGSIb3DQEJFTEUBBJUaW1lIDE1ODkyNTQzODI2MjkwggekBgkqhkiG9w0BBwagggeVMIIHkQIBADCCB4oGCSqGSIb3DQEHATApBgoqhkiG9w0BDAEGMBsEFLzuguhJknOppoNt0cNAu/Yg9N7IAgMAw1CAggdQwSkHPuMWczUu39ME4ABWIYkp4Wk9MJ4HfM3ovhE+gskOjX6DG+o7H4sZ0okF0kcBoLAOo6Sgr/s9ZuVgYHTi5xtkGQaqbQUSMK03cpWUfue5f1ruRu93KVll6mMAz9z/s6/hG27hhanQUFDcZ4md9dFQHLv5ncBeMwrseeP2sUy/my1qYetlTIdskjfeNh2Dc2uZPTmDv6B/dj+nBHPd4B6zlqHB73JCvE8Qq6zWjjb6/d0geYMNKbdpbQjKbKxNgjzatand27Bh0Bpuk+OeqXF4+TrbwCyaiTnYYQPKbve/zsM7xVzvb5p9sdHmhOdI54+SuImIMuEQSikPmQk4MdPQaS27r7JAvsabdNm9jT1nzIMzINAj3b6LBTckbd94gP2ST2vizvq0maH2AtpZT5twX7AnfTnC2xuuKlZ1sJHsfl2Uw9JyvzNdMtZUaDO+0obesE1dBjH+nI5z75DzFLLbDl65HCJxL1qtvhSCXF676cQ2N4Mq2Sqs+nhzyVy+/NgRcfN/YtagWyYAzxdQmaW0lIQfdDcbYgW+1w+8RUht5imx5G/A0foKCcW1mGW2ocXzUkMxAqspHu3Tb3lazUpSS8BNWELtC+d3H8qx0qsPMenxe0sUxB/pUW5OFqcZCAC2hlUThtUD4E51joKJha0+DraiqmwaaUNFy2CcgqCd52WrwnpRV5gyS+Bk24d/4Dm3ezEZnMQxGiBwB55nHKWlgwAzTqnJWXmM/wYEkEVLsnN9bYBU2e8yM8Q9Qv6Vszg+8GqfIeXs4hjCAIJ8YWCDGtL/rM9+L0lsMfh73jo9Hj0UnvqLgIEaV6pqpk6O7NgdhiWEFDyIbbEWPEpFiLsGclpnELxZbxlWdDlcAM3dpU7hAMZ5AxSv6or7U7WkSfj/K8EzDjpQ66/klbsZiob/EEehdeBRv0ZFTR1uOLY+h5kJub0b/tflw36F0BA2wqxr6pCG8/zdKpET7CWu7TWKtX1Uw7/z16s3uJ6zYIyJJNXwB4c1bbdHiAjAfwh2SgVqGuRjQpN2/vKcwCRC/xWSa9ME/rAA6HhvtNgn0s5dTyKbbTKBkEvsWwfwEFAE3mlRO7t9waAmtWabNdJKSO2z70Qc3Irr+wJw+zDDD7+hfKcRxTFHZ65tlR75QgmWXRMMFMQW9nuKN9hY1k0XRv7qppD2jQRV19sR87Aq+5TbihzmbitYmeqBu87oi82HOu6/gReFGRgz1cGaS6y7+v00xoUiPU5Pc6k4bS0E2/1C/CUARN0K4ELCx+MqlWz01tDMnSzAOvDtB+EmgBIrCPCmVqwvoH2DS5hOoslYRx8LtQtQtb+DeFvxCRHCNzEi4+CwWC0GzWOW2Lz7feCyhwoDGkubRyESKbJ7VKWB4A5s5iyLp2Wc/ewFbBwaywpQ2AFezS8Ka2DiYPFLlf0shfycWjlQih6KYkxVHzd1i2E4mau0FWMNMirqRWkQ2rl5j7FoyNOqtfcy/K2N+FlzT6Y1Sjkw3imeqdxa+CWJAyC2W1dahDgnbR7JbLoKY5P8a++iJ6mbiHTRs8NcWtadcF0bbG1ycdcz/7jNzU7tDQODyfWH43Nf4GzcsPzv+WbDUmuUBuv8PrSeKIhMPc6XjmYV7Qgd8BEwwTpU3DTobvnO60HyoGBRJFsLfd7w0iXPUNqLWK4u1RSCyMjf1m+MfBHPunccqYaY4zgzj4cFkUMpAnpa6IziRDdUE0Vc3ePJSBAQ9WtA66sIIXm7lmwPzENBcUNcFLxX3H1PzWZzKuwBzTVsj7D+ECFL2jnjbq5HmvBKrC05YkT9bTonCWKYDwKwxheEZYSzexfJrHos98PZ28YiLA5F8ifQ/9Lc+T1prWuJ26hA8fUoicQHIaUS/rx3BuibnlfVroZUqsqC4zpYn3hKZDmO+jLrH3kxRTwHcS2Yo/qcseJy4OGseocvH5NDN5LnjHpEw+mqW7Qz7S9ZxmNxU9PwsYBq2Q39TKj0KjnGtIQjGYI4j+Ql6icC0Z3WbSCZseB4rEkG18LLpvdD/U0p6AwZ3MXPFYZOJn3k9TSs30Wbg4+bLUM9OT9LE8XLOFSEQCxgQyVAFpuQyfL3YnAThUoVJoWDr5DktNQ9pwtNhAo8xbQGdkAWUtKBkESRc4tekqSrxI0Zt0iD8VqluaTbt4RMJbtu2q+4vNLuJV6f3PeS0hzEsALwN3ZcNDHNRlVKhctDr0W2K1rVl+p+uGyDTvX7Yx6jEwghs0xoRhU3yU7f/FGhMhQZlZc/xLL/AJLXOAaTXIKO6PsySurWZrzHrboSk+65WmymdBOlEzNf+R/oriqeTNV/RoNVTglLyDdDmycYonbaexz03gHC/g40ypo7jECE3V130kApgnDvt6QRC/yd6NiNh1V4E8gVbm4J7k+gE++4tVE7KFGOSvWwPjkSbXe5PDejENuVX2v4UPuaMoUXAPUZSNNFao4bM4eS50U/g67pnl4PYbdtG/yrjCNnXCaRZRapDVD/MD4wITAJBgUrDgMCGgUABBR9QitEdRrOhirFpeeXYxRCiDXobgQUgdxm0RxqdfUMrM7V9NYJh5DmvWsCAwGGoA==
---
# Source: filebeat/templates/filebeat-configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    # max_procs and queue.* are top-level Filebeat settings,
    # so they are placed outside the filebeat.config namespace.
    max_procs: 1
    queue.mem.events: 16
    queue.mem.flush.min_events: 8
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: true
        enabled: true
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: true
    #output.kafka:
    #  hosts: ["kafka-log-01:9092", "kafka-log-02:9092", "kafka-log-03:9092"]
    #  topic: 'topic-test-log'
    #  version: 2.0.0
    output.logstash:
      hosts: ["logstash:5044"]
      enabled: true
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      #harvester_buffer_size: 4096000
      close_inactive: 15m
      scan_frequency: 30s
      containers.ids:
      - "*"
      enabled: true
      processors:
      - add_kubernetes_metadata:
          in_cluster: true
---
# Source: filebeat/templates/filebeat-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---
# Source: filebeat/templates/filebeat-role.yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
# Source: filebeat/templates/filebeat-role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
# Source: filebeat/templates/filebeat-daemonset.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      nodeSelector:
        beta.kubernetes.io/filebeat-ds-ready: "true"
      containers:
      - name: filebeat
        image: "harbor.querycap.com/rk-infra/filebeat:6.6.2"
        args: ["-c", "/etc/filebeat.yml","-e","-httpprof","0.0.0.0:6060"]
        #ports:
        #  - containerPort: 6060
        #    hostPort: 6068
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 1000Mi
            cpu: 1000m
          requests:
            memory: 100Mi
            cpu: 100m
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: timezone
          mountPath: /etc/localtime
      imagePullSecrets:
      - name: qingcloud-registry
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /data/var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /data/filebeat-data
          type: DirectoryOrCreate
      - name: timezone
        hostPath:
          path: /etc/localtime
      tolerations:
      - effect: NoExecute
        key: dedicated
        operator: Equal
        value: gpu
      - effect: NoSchedule
        operator: Exists
```

- kibana.yaml

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: a.kibana.rockontrol.com
    http:
      paths:
      - backend:
          serviceName: kibana
          servicePort: 5601
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-system
  labels:
    k8s-app: kibana
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Kibana"
spec:
  type: ClusterIP
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-system
  labels:
    k8s-app: kibana
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      imagePullSecrets:
      - name: qingcloud-registry
      containers:
      - name: kibana
        image: harbor.querycap.com/rk-infra/kibana:6.8.8
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        - name: ELASTICSEARCH_URL
          value: http://elasticsearch-logging:9200
        - name: XPACK_SECURITY_ENABLED
          value: "true"
        - name: ELASTICSEARCH_PASSWORD
          value: M50zLpCI0EeDcOh7
        - name: ELASTICSEARCH_USERNAME
          value: kibana
        - name: XPACK_ML_ENABLED
          value: "false"
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
```

- [**logstash.yaml**](https://git.querycap.com/infra/k8s-elk/-/blob/master/logstash.yaml)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: logstash
  namespace: kube-system
spec:
  ports:
  - port: 5044
    targetPort: beats
  selector:
    type: logstash
  clusterIP: None
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: logstash
  namespace: kube-system
spec:
  template:
    metadata:
      labels:
        type: logstash
    spec:
      containers:
      - image: harbor.querycap.com/rk-infra/logstash:v6.6.2
        name: logstash
        ports:
        - containerPort: 5044
          name: beats
        command:
        - logstash
        - '-f'
        - '/etc/logstash_c/logstash.conf'
        env:
        - name: "XPACK_MONITORING_ELASTICSEARCH_HOSTS"
          value: "http://elasticsearch-logging:9200"
        volumeMounts:
        - name: config-volume
          mountPath: /etc/logstash_c/
        - name: config-yml-volume
          mountPath: /usr/share/logstash/config/
        - name: timezone
          mountPath: /etc/localtime
        resources:
          limits:
            cpu: 1000m
            memory: 2048Mi
          # requests:
          #   cpu: 512m
          #   memory: 512Mi
      imagePullSecrets:
      - name: qingcloud-registry
      volumes:
      - name: config-volume
        configMap:
          name: logstash-conf
          items:
          - key: logstash.conf
            path: logstash.conf
      - name: timezone
        hostPath:
          path: /etc/localtime
      - name: config-yml-volume
        configMap:
          name: logstash-yml
          items:
          - key: logstash.yml
            path: logstash.yml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-conf
  namespace: kube-system
  labels:
    type: logstash
data:
  logstash.conf: |-
    input {
      beats {
        port => 5044
      }
    }
    filter {
      if [kubernetes][container][name] == "nginx-ingress-controller" {
        json {
          source => "message"
          target => "ingress_log"
        }
        if [ingress_log][requesttime] {
          mutate {
            convert => ["[ingress_log][requesttime]", "float"]
          }
        }
        if [ingress_log][upstremtime] {
          mutate {
            convert => ["[ingress_log][upstremtime]", "float"]
          }
        }
        if [ingress_log][status] {
          mutate {
            convert => ["[ingress_log][status]", "float"]
          }
        }
        if [ingress_log][httphost] and [ingress_log][uri] {
          mutate {
            add_field => {"[ingress_log][entry]" => "%{[ingress_log][httphost]}%{[ingress_log][uri]}"}
          }
          mutate {
            split => ["[ingress_log][entry]","/"]
          }
          if [ingress_log][entry][1] {
            mutate {
              add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/%{[ingress_log][entry][1]}"}
              remove_field => "[ingress_log][entry]"
            }
          }
          else {
            mutate {
              add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/"}
              remove_field => "[ingress_log][entry]"
            }
          }
        }
      }
      if [kubernetes][container][name] =~ /^srv*/ {
        json {
          source => "message"
          target => "tmp"
        }
        if [kubernetes][namespace] == "kube-system" {
          drop{}
        }
        if [tmp][level] {
          mutate {
            add_field => {"[applog][level]" => "%{[tmp][level]}"}
          }
          if [applog][level] == "debug" {
            drop{}
          }
        }
        if [tmp][msg] {
          mutate {
            add_field => {"[applog][msg]" => "%{[tmp][msg]}"}
          }
        }
        if [tmp][func] {
          mutate {
            add_field => {"[applog][func]" => "%{[tmp][func]}"}
          }
        }
        if [tmp][cost] {
          if "ms" in [tmp][cost] {
            mutate {
              split => ["[tmp][cost]","m"]
              add_field => {"[applog][cost]" => "%{[tmp][cost][0]}"}
              convert => ["[applog][cost]", "float"]
            }
          }
          else {
            mutate {
              add_field => {"[applog][cost]" => "%{[tmp][cost]}"}
            }
          }
        }
        if [tmp][method] {
          mutate {
            add_field => {"[applog][method]" => "%{[tmp][method]}"}
          }
        }
        if [tmp][request_url] {
          mutate {
            add_field => {"[applog][request_url]" => "%{[tmp][request_url]}"}
          }
        }
        if [tmp][meta._id] {
          mutate {
            add_field => {"[applog][traceId]" => "%{[tmp][meta._id]}"}
          }
        }
        if [tmp][project] {
          mutate {
            add_field => {"[applog][project]" => "%{[tmp][project]}"}
          }
        }
        if [tmp][time] {
          mutate {
            add_field => {"[applog][time]" => "%{[tmp][time]}"}
          }
        }
        if [tmp][status] {
          mutate {
            add_field => {"[applog][status]" => "%{[tmp][status]}"}
            convert => ["[applog][status]", "float"]
          }
        }
      }
      mutate {
        rename => ["kubernetes", "k8s"]
        remove_field => "beat"
        remove_field => "tmp"
        remove_field => "[k8s][labels][app]"
      }
    }
    output {
      elasticsearch {
        hosts => ["http://elasticsearch-logging:9200"]
        codec => json
        index => "logstash-%{+YYYY.MM.dd}"
        user => 'elastic'
        password => 'P9IKmhwuYEEej52U'
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-yml
  namespace: kube-system
  labels:
    type: logstash
data:
  logstash.yml: |-
    http.host: "0.0.0.0"
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.username: logstash_system
    xpack.monitoring.elasticsearch.password: hOsocygbtFEs1qty
    xpack.monitoring.elasticsearch.url: http://elasticsearch-logging:9200
```

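- An elastic-certificates.p12 file is also required; you can generate it yourself with the elasticsearch-certutil binary:

      /usr/share/elasticsearch/bin/elasticsearch-certutil ca
      /usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca elastic-certificates.p12
      # For both commands, just press Enter at every prompt; there is no need to add a password to the key.
      # Once created, the certificates land in the ES data directory by default; here they are all placed under etc:
      ls elastic-*
      elastic-certificates.p12 elastic-certificates-ca.p12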
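The `data` field of es-secret.yaml holds the base64-encoded contents of this .p12 file. The following is a minimal sketch of how such a manifest could be rendered from a freshly generated certificate; `render_es_secret` is a hypothetical helper name, while the Secret name, namespace and key are taken from es-secret.yaml.

```shell
# Hypothetical helper: print a Secret manifest like es-secret.yaml
# for the PKCS#12 file given as the first argument.
render_es_secret() {
  p12_file="$1"
  # Strip the line wrapping that some base64 implementations add.
  encoded="$(base64 < "$p12_file" | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: es-keystore
  namespace: kube-system
data:
  elastic-certificates.p12: ${encoded}
EOF
}

# Usage (in the directory that contains the certificate):
#   render_es_secret elastic-certificates.p12 > es-secret.yaml
```

Regenerating the manifest this way avoids pasting the encoded value by hand, where a single wrapped or dropped character would corrupt the keystore.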

Deployment steps

Label the node that will run Elasticsearch

    kubectl label node <node> es=data
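The Filebeat DaemonSet in the manifests also carries a nodeSelector (`beta.kubernetes.io/filebeat-ds-ready: "true"`), so nodes whose logs should be collected presumably need that label as well. A minimal sketch that applies both labels; `label_elk_nodes` is a hypothetical helper name and the node names are placeholders.

```shell
# Hypothetical helper: the first argument is the Elasticsearch node,
# every further argument is a node that should run Filebeat.
label_elk_nodes() {
  es_node="$1"
  shift
  kubectl label node "$es_node" es=data
  for node in "$@"; do
    kubectl label node "$node" beta.kubernetes.io/filebeat-ds-ready=true
  done
}

# Usage:
#   label_elk_nodes <es-node> <log-node-1> <log-node-2>
```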

Deploy

    kubectl apply -f .
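After applying, it can help to confirm that the pods of each component were scheduled. A minimal sketch, assuming the label selectors used in the manifests (`k8s-app=...` for Elasticsearch, Kibana and Filebeat, `type=logstash` for Logstash); `list_elk_pods` is a hypothetical helper name.

```shell
# Hypothetical helper: list the pods of every component in kube-system.
list_elk_pods() {
  for selector in \
    k8s-app=elasticsearch-logging \
    k8s-app=kibana \
    k8s-app=filebeat \
    type=logstash
  do
    kubectl -n kube-system get pods -l "$selector" -o wide
  done
}

# Usage:
#   list_elk_pods
```

Pods that stay in Pending are often a hint that the node labels from the previous step are missing.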

Create users

    kubectl -n kube-system exec -it elasticsearch-logging-0 -- ./init-user.sh

This script was packaged into a custom image beforehand; the official image is not used.

    cat init-user.sh
    #!/usr/bin/expect
    set timeout -1
    spawn bin/elasticsearch-setup-passwords interactive
    expect "\[y/N\]"
    send "y\r"
    expect "\[elastic\]"
    send "P9IKmhwuYEEej52U\r"
    expect "\[elastic\]"
    send "P9IKmhwuYEEej52U\r"
    expect "\[apm_system\]"
    send "1UmdUStBiKYqR0IJ\r"
    expect "\[apm_system\]"
    send "1UmdUStBiKYqR0IJ\r"
    expect "\[kibana\]"
    send "M50zLpCI0EeDcOh7\r"
    expect "\[kibana\]"
    send "M50zLpCI0EeDcOh7\r"
    expect "\[logstash_system\]"
    send "hOsocygbtFEs1qty\r"
    expect "\[logstash_system\]"
    send "hOsocygbtFEs1qty\r"
    expect "\[beats_system\]"
    send "xy28upiAPDNGfmgw\r"
    expect "\[beats_system\]"
    send "xy28upiAPDNGfmgw\r"
    expect "\[remote_monitoring_user\]"
    send "jooKJoYHlMiZwTXX\r"
    expect "\[remote_monitoring_user\]"
    send "jooKJoYHlMiZwTXX\r"
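Once the passwords are set, a login can be checked against the X-Pack authenticate endpoint. This is a sketch under assumptions: `check_es_login` is a hypothetical helper name, and `ES_URL` must point at an address from which Elasticsearch is reachable (for example a local port-forward of the elasticsearch-logging Service).

```shell
# Assumption: Elasticsearch is reachable at ES_URL, e.g. after
#   kubectl -n kube-system port-forward svc/elasticsearch-logging 9200:9200
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: $1 is the user name, $2 the password.
# A JSON description of the user means the credentials were accepted;
# an HTTP 401 error body means they were rejected.
check_es_login() {
  curl -sS -u "$1:$2" "$ES_URL/_xpack/security/_authenticate?pretty"
}

# Usage:
#   check_es_login elastic '<password>'
```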

Users

    # Regular user
    username: kibana
    password: M50zLpCI0EeDcOh7
    # Read-only user
    username: viewer
    password: WBQ3p4ZZ7WappBfEb

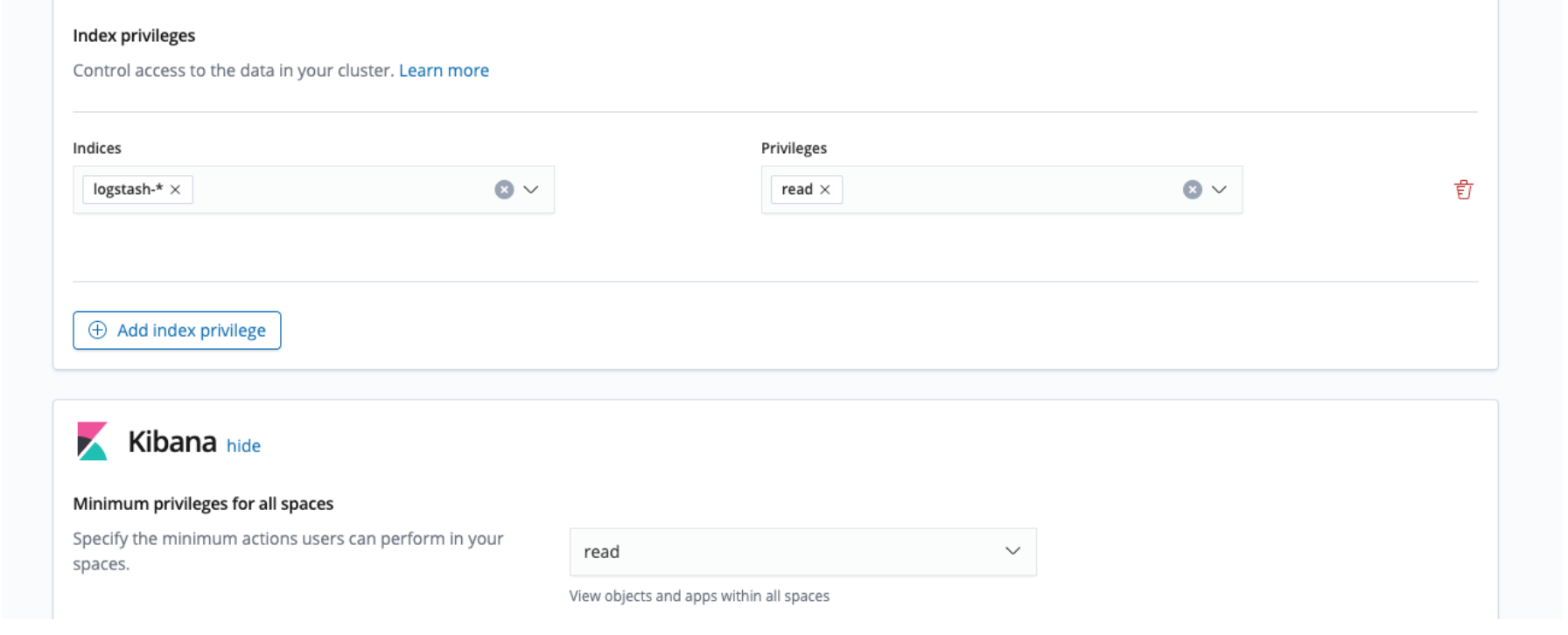

Permission configuration for the viewer user

- Create a viewer role with the permissions shown below.

- Create a viewer account and assign it the viewer role.
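The two steps above can also be scripted against the X-Pack security API instead of the Kibana UI. The sketch below is illustrative only: the role's privileges (`read` and `view_index_metadata` on `logstash-*`) are an assumption, since the actual permissions are only shown in the screenshot, and `create_viewer_role` / `create_viewer_user` are hypothetical helper names.

```shell
# Assumption: Elasticsearch is reachable at ES_URL from where this runs.
ES_URL="${ES_URL:-http://elasticsearch-logging:9200}"

# $1: password of the elastic superuser.
# The privileges below are assumed, not taken from the original setup.
create_viewer_role() {
  curl -sS -u "elastic:$1" -H 'Content-Type: application/json' \
    -X PUT "$ES_URL/_xpack/security/role/viewer" \
    -d '{"indices":[{"names":["logstash-*"],"privileges":["read","view_index_metadata"]}]}'
}

# $1: password of the elastic superuser, $2: password for the viewer account.
create_viewer_user() {
  curl -sS -u "elastic:$1" -H 'Content-Type: application/json' \
    -X PUT "$ES_URL/_xpack/security/user/viewer" \
    -d "{\"password\":\"$2\",\"roles\":[\"viewer\"]}"
}

# Usage:
#   create_viewer_role '<elastic-password>'
#   create_viewer_user '<elastic-password>' '<viewer-password>'
```

Depending on how Kibana access is granted, the account may need an additional Kibana-related role; adjust the `roles` array to match the role shown in the screenshot.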