Method 1: Set the proxy in meta

The proxy can be set directly in the spider itself: add a meta argument with a proxy key when constructing each Request:

    # -*- coding: utf-8 -*-
    import scrapy


    class Ip138Spider(scrapy.Spider):
        name = 'ip138'
        allowed_domains = ['ip138.com']
        start_urls = ['http://2020.ip138.com']

        def start_requests(self):
            for url in self.start_urls:
                # Route this request through the proxy given in meta['proxy']
                yield scrapy.Request(url, meta={'proxy': 'http://163.125.69.29:8888'}, callback=self.parse)

        def parse(self, response):
            print("response text: %s" % response.text)
            print("response headers: %s" % response.headers)
            print("response meta: %s" % response.meta)
            print("request headers: %s" % response.request.headers)
            print("request cookies: %s" % response.request.cookies)
            print("request meta: %s" % response.request.meta)

Method 2: Set the proxy in a middleware

The middleware in middlewares.py looks like this:

    # -*- coding: utf-8 -*-


    class ProxyMiddleware(object):

        def process_request(self, request, spider):
            request.meta['proxy'] = "http://proxy.your_proxy:8888"

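There are two things to note here:

- First, the proxy value must include the `http://` prefix; otherwise you get the error `to_bytes must receive a unicode, str or bytes object, got NoneType`.
- Second, the official documentation says that `process_request` must return a Request object, a Response object, or None. In practice you do not need to write an explicit `return`, because a Python function without a return statement returns None.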
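The first point can be guarded against with a small helper. This is a minimal sketch, not part of Scrapy: `normalize_proxy` and its `default_scheme` parameter are hypothetical names introduced here, and it assumes that an address without a scheme should default to `http://`.

```python
def normalize_proxy(address, default_scheme="http"):
    """Return the proxy address with a scheme prefix.

    Hypothetical helper: adds default_scheme only when the address
    does not already contain a scheme such as http:// or https://.
    """
    address = address.strip()
    if "://" in address:
        # Already has a scheme, leave it untouched
        return address
    return "%s://%s" % (default_scheme, address)
```

Inside `process_request`, it could be used as `request.meta['proxy'] = normalize_proxy("163.125.69.29:8888")`.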

If the proxy requires a username and password, a few more lines are needed after the proxy is set:

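    # Requires "import base64" at the top of middlewares.py
    # Use the following lines if your proxy requires authentication
    proxy_user_pass = "USERNAME:PASSWORD"
    # Set up basic authentication for the proxy.
    # base64.encodestring was removed in Python 3.9; b64encode takes bytes
    # and returns bytes, so encode first and decode the result back to str.
    encoded_user_pass = base64.b64encode(proxy_user_pass.encode('utf-8')).decode('ascii')
    request.headers['Proxy-Authorization'] = 'Basic ' + encoded_user_pass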
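To show where the authentication lines sit, here is a sketch of a complete middleware for Python 3. The class name `AuthProxyMiddleware` and the two class attributes are hypothetical names chosen for illustration; the proxy address and the credentials are placeholders.

```python
import base64


class AuthProxyMiddleware(object):
    # Placeholder values (assumptions): replace with your own proxy and credentials
    proxy_url = "http://proxy.your_proxy:8888"
    proxy_user_pass = "USERNAME:PASSWORD"

    def process_request(self, request, spider):
        # Send the request through the proxy
        request.meta['proxy'] = self.proxy_url
        # Build the Basic credentials: encode to bytes, base64-encode,
        # then decode back to str so it can be concatenated
        token = base64.b64encode(self.proxy_user_pass.encode('utf-8')).decode('ascii')
        request.headers['Proxy-Authorization'] = 'Basic ' + token
        # No explicit return: the method returns None and processing continues
```

The class only touches `request.meta` and `request.headers`, so it can be checked with a simple stand-in object before it is wired into a project.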

To configure multiple proxies, add a proxy list to the settings file:

    PROXIES = ['163.125.69.29:8888']

Then import it in the middleware:

    # -*- coding: utf-8 -*-
    import random

    from ip138_proxy.settings import PROXIES


    class ProxyMiddleware(object):

        def process_request(self, request, spider):
            # Pick a random proxy from the list for each request
            request.meta['proxy'] = "http://%s" % random.choice(PROXIES)
            return None
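As an alternative to importing the settings module directly, the list can be read through Scrapy's `from_crawler` hook and `crawler.settings.getlist`, which avoids hard-coding the project package name. This is a sketch under assumptions: the class name `SettingsProxyMiddleware` is hypothetical, and it expects `PROXIES` entries in `host:port` form without a scheme.

```python
import random


class SettingsProxyMiddleware(object):

    def __init__(self, proxies):
        # Keep a private copy of the proxy list
        self.proxies = list(proxies)

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this hook to build the middleware;
        # getlist returns an empty list when PROXIES is not set
        return cls(crawler.settings.getlist('PROXIES'))

    def process_request(self, request, spider):
        if not self.proxies:
            # No proxies configured: leave the request unchanged
            return None
        # Pick a random proxy for each request and add the http:// prefix
        request.meta['proxy'] = "http://%s" % random.choice(self.proxies)
        return None
```

With this variant the middleware no longer depends on the import path of settings.py, so the same class can be reused across projects.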

Enable the middleware in DOWNLOADER_MIDDLEWARES in settings.py:

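    # The module path must match your own project package
    # (ip138_proxy is the package used in the settings import above)
    DOWNLOADER_MIDDLEWARES = {
        'ip138_proxy.middlewares.ProxyMiddleware': 1,
    }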

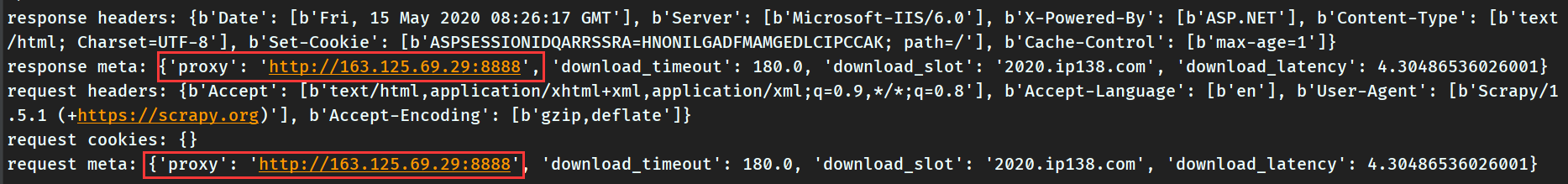

运行程序,可以看到设置的代理生效了:

If you are looking for free proxies, you can find some on Kuaidaili (快代理).