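Setting up an Elasticsearch cluster

Master node (cluster metadata node)

Handles cluster-wide operations: creating and deleting indices, tracking which nodes belong to the cluster, and deciding which shards are allocated to which nodes.

node.master: true

Data node (stores index data)

Stores the index data and executes document-level create, read, update, and delete operations as well as searches and aggregations. All of the cluster's data lives on these nodes.

node.data: true

Coordinating node

A coordinating node only receives client requests, forwards them to the other nodes, and merges the results those nodes return. (In Elasticsearch 7.x the ingest role is also on by default, so a strictly coordinating-only node additionally sets node.ingest: false.)

node.data: false

node.master: false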
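As a rough illustration of how the two flags combine, the sketch below prints the role flags for a few node types. The function name and the role labels (`master`, `data`, `master-data`, `coordinating`) are made up for this example, and treating `master` as a dedicated master with `node.data: false` is an assumption; only `node.master` and `node.data` come from the settings above.

```shell
# Print the 7.x-style role flags for a named node type.
# The role labels are hypothetical names used only in this sketch.
es_role_settings() {
  case "$1" in
    master)       printf 'node.master: true\nnode.data: false\n' ;;   # dedicated master (assumption)
    data)         printf 'node.master: false\nnode.data: true\n' ;;
    master-data)  printf 'node.master: true\nnode.data: true\n' ;;    # both roles on one node
    # A strictly coordinating-only 7.x node would also set node.ingest: false.
    coordinating) printf 'node.master: false\nnode.data: false\n' ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}
```

For example, `es_role_settings master-data` prints the combination used by the three nodes deployed in the rest of this guide.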

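Preparation

We deploy onto three Kubernetes nodes:

- node01 (runs es-m1, which also acts as a data node)

- node02 (runs es-m2, which also acts as a data node)

- node03 (runs es-m3, which also acts as a data node)

Node setup (run the following steps on every Kubernetes node)

Raise vm.max_map_count to 262144. With a lower value Elasticsearch fails its bootstrap check and does not start.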

echo 'vm.max_map_count=262144' >> /etc/sysctl.conf && sysctl -p
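Running the command above twice appends the same line twice. A minimal sketch of an idempotent variant follows; the helper name `ensure_sysctl_line` is hypothetical, and it assumes a plain `key=value` file such as /etc/sysctl.conf.

```shell
# Append "key=value" to a sysctl-style file unless that exact setting is already there.
# Dots in the key are treated as regex wildcards, which is close enough for this check.
ensure_sysctl_line() {
  file=$1 key=$2 value=$3
  if grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*${value}[[:space:]]*$" "$file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  printf '%s=%s\n' "$key" "$value" >> "$file"
}
```

As root, it could be used as `ensure_sysctl_line /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p`. If the key is already present with a different value, the new line is appended after it.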

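Create the Elasticsearch data directory and give ownership to UID/GID 1000 (the Elasticsearch image runs as UID 1000).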

mkdir /es-data

chown -R 1000:1000 /es-data

The local PVs select their node by label, so label each node:

kubectl label node node01 local-pv=node01

kubectl label node node02 local-pv=node02

kubectl label node node03 local-pv=node03
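The three label commands differ only in the node name, so they can be generated in a loop. The sketch below only prints the commands and does not run them; the function name `label_commands` is hypothetical, and `--overwrite` is added so that re-running is harmless.

```shell
# Print one "kubectl label" command per node name given as an argument.
# Pipe the output to sh to actually apply the labels.
label_commands() {
  for node in "$@"; do
    printf 'kubectl label node %s local-pv=%s --overwrite\n' "$node" "$node"
  done
}
```

Usage: `label_commands node01 node02 node03 | sh`, then `kubectl get nodes -L local-pv` to confirm that each node shows its label.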

The es.yaml file

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
  namespace: kube-system
provisioner: kubernetes.io/no-provisioner
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-1
  namespace: kube-system
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /es-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: local-pv
          operator: In
          values:
          - node01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-2
  namespace: kube-system
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /es-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: local-pv
          operator: In
          values:
          - node02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-3
  namespace: kube-system
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /es-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: local-pv
          operator: In
          values:
          - node03
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: kube-system
spec:
  replicas: 3
  serviceName: elasticsearch
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      securityContext:
        fsGroup: 1000
      containers:
      - name: elasticsearch
        image: elasticsearch:7.3.1
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
        ports:
        - containerPort: 9200
          protocol: TCP
        - containerPort: 9300
          protocol: TCP
        env:
        - name: cluster.name
          value: "es_cluster"
        - name: node.master
          value: "true"
        - name: node.data
          value: "true"
        - name: discovery.seed_hosts
          value: "elasticsearch-discovery"
        - name: cluster.initial_master_nodes
          value: "elasticsearch-0,elasticsearch-1,elasticsearch-2"
        - name: ES_JAVA_OPTS
          value: "-Xms1g -Xmx1g"
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: es-data
  volumeClaimTemplates:
  - metadata:
      name: es-data
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: local-storage
      resources:
        requests:
          storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-discovery
  namespace: kube-system
spec:
  publishNotReadyAddresses: true
  ports:
  - name: transport
    port: 9300
    targetPort: 9300
  selector:
    app: elasticsearch
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: kube-system
spec:
  type: ClusterIP
  ports:
  - name: http
    protocol: TCP
    port: 9200
  selector:
    app: elasticsearch

kubectl apply -f es.yaml
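Once the manifests are applied, it is worth checking that the three pods actually formed one cluster. The helper below is a small sketch that pulls the `status` field out of the JSON returned by the `_cluster/health` API; the function name `health_status` is hypothetical, and the usage shown in the comments assumes that `curl` is available inside the Elasticsearch image.

```shell
# Extract the "status" value (green, yellow or red) from _cluster/health JSON on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Possible usage against the cluster deployed above:
#   kubectl -n kube-system get pods -l app=elasticsearch
#   kubectl -n kube-system exec elasticsearch-0 -- \
#     curl -s http://localhost:9200/_cluster/health | health_status
```

A result of `green` or `yellow` means a master was elected; the `number_of_nodes` field in the same JSON should read 3 once all pods have joined.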

The filebeat.yaml file

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false

    # To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:
    #filebeat.autodiscover:
    #  providers:
    #    - type: kubernetes
    #      hints.enabled: true

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
        - add_kubernetes_metadata:
            in_cluster: true
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      containers:
      - name: filebeat
        image: elastic/filebeat:7.3.1
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch
        - name: ELASTICSEARCH_PORT
          value: "9200"
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat

kubectl apply -f filebeat.yaml
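Filebeat runs as a DaemonSet, so it should end up with one ready pod per schedulable node. A minimal sketch of that check follows: it compares the desired and ready pod counts reported in the DaemonSet status. The function name `daemonset_ready` is hypothetical; the two jsonpath fields in the usage comment are standard DaemonSet status fields.

```shell
# Succeed only when at least one pod is desired and all desired pods are ready.
# $1 = desiredNumberScheduled, $2 = numberReady
daemonset_ready() {
  desired=$1 ready=$2
  [ -n "$desired" ] && [ -n "$ready" ] || return 1
  [ "$desired" -gt 0 ] && [ "$desired" -eq "$ready" ]
}

# Possible usage against the DaemonSet deployed above:
#   set -- $(kubectl -n kube-system get ds filebeat \
#     -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')
#   daemonset_ready "$1" "$2" && echo "filebeat is ready on every node"
```

`kubectl -n kube-system rollout status ds/filebeat` gives a similar answer interactively; the function form is easier to drop into a script.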

The kibana.yaml file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-system
  labels:
    k8s-app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.3.1
        resources:
          limits:
            cpu: 1
            memory: 500Mi
          requests:
            cpu: 0.5
            memory: 200Mi
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-system
spec:
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: kibana
---
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters
# need networking.k8s.io/v1, whose backend syntax differs.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana
  namespace: kube-system
spec:
  rules:
  - host: kibana.tk8s.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

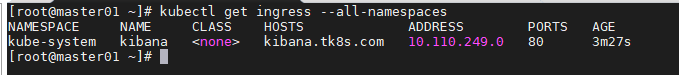

kubectl apply -f kibana.yaml

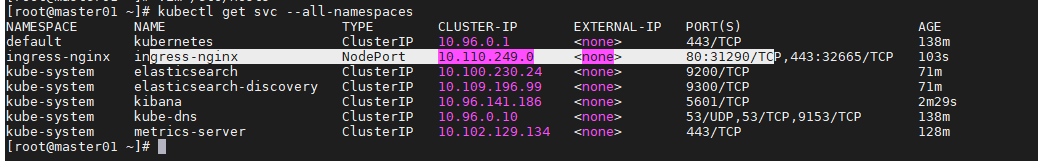

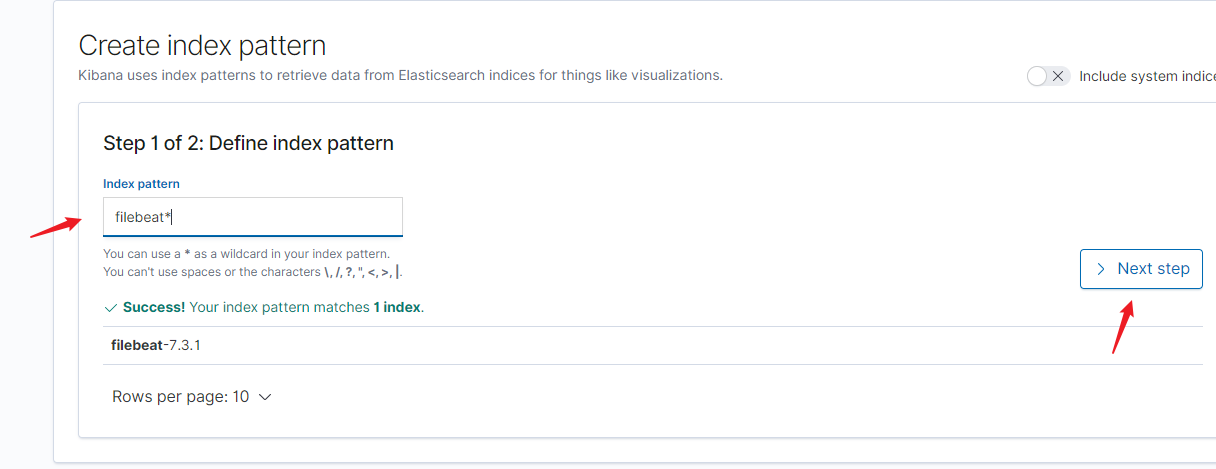

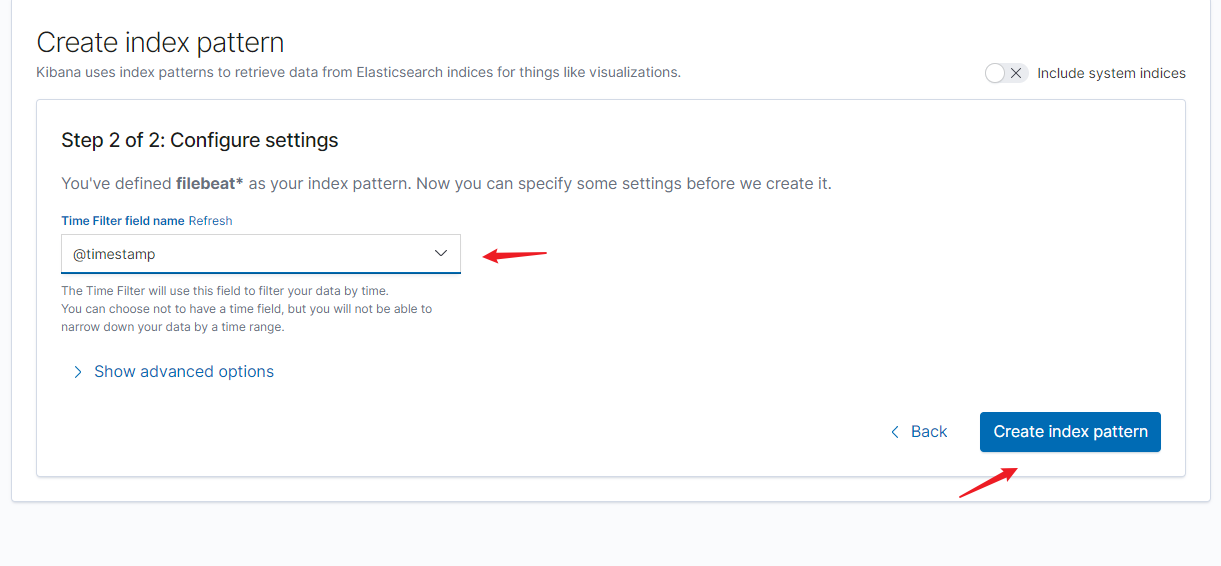

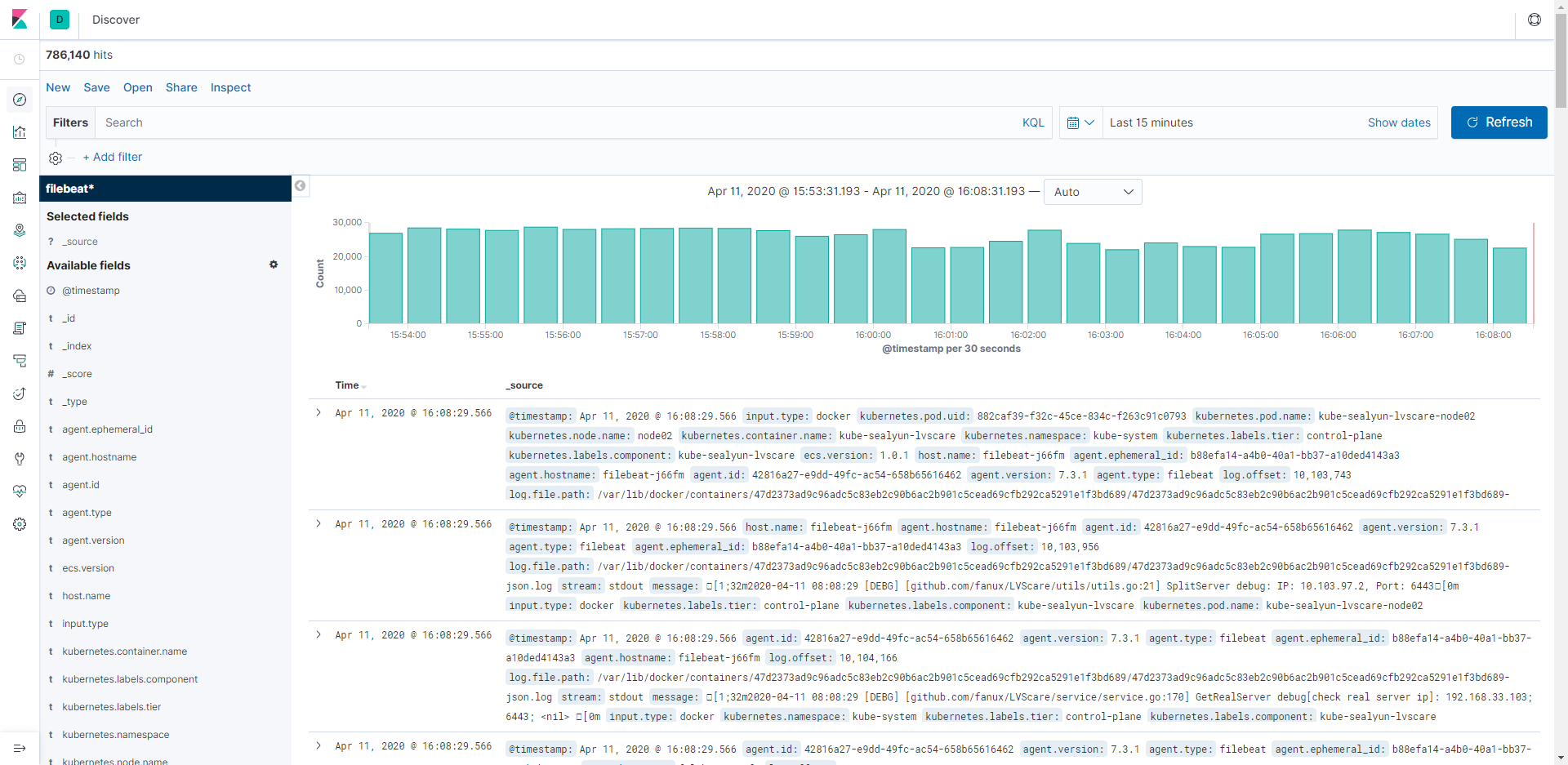

Access Kibana through the Ingress

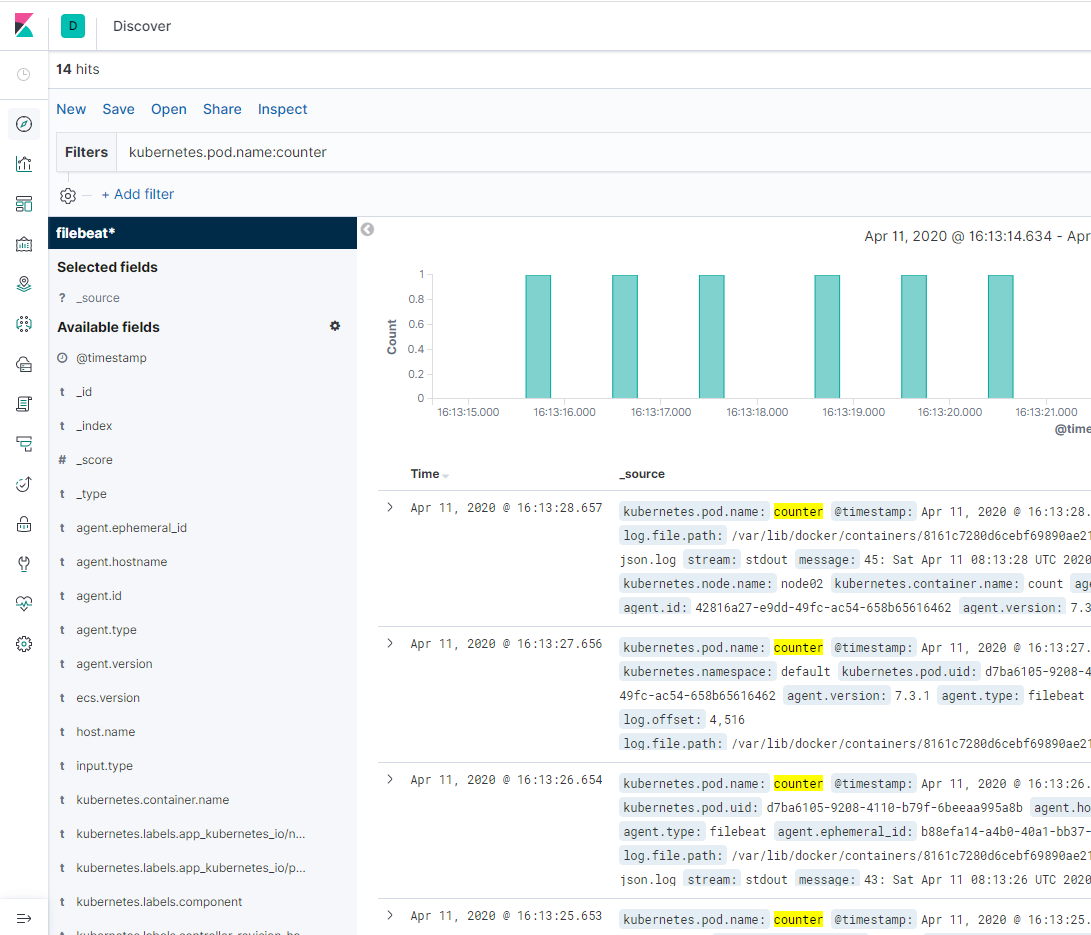

Use filebeat* as the index pattern

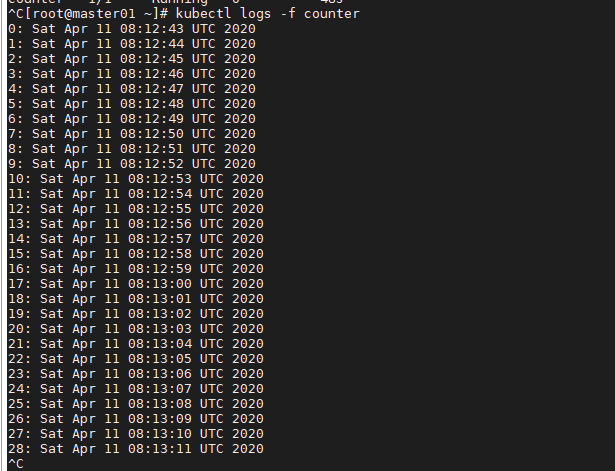

As a test, run a pod that writes a log line every second and check that its output shows up in Kibana:

apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
  - name: count
    image: busybox:1.28.4
    args: [/bin/sh, -c, 'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

Filter on kubernetes.pod.name:counter
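The same filter can be run against Elasticsearch directly, without going through Kibana. The sketch below builds a search body for one pod name; the function name `pod_query` and the `size` of 5 are arbitrary choices for this example, and the pod name is inserted without escaping, which is fine for Kubernetes pod names but not for arbitrary strings.

```shell
# Build a search body that matches log documents from a single pod name.
pod_query() {
  printf '{"query":{"match_phrase":{"kubernetes.pod.name":"%s"}},"size":5}\n' "$1"
}

# Possible usage from a pod inside the cluster:
#   curl -s -H 'Content-Type: application/json' \
#     'http://elasticsearch:9200/filebeat*/_search' -d "$(pod_query counter)"
```

If the response's `hits` array contains the counter lines, Filebeat is shipping container logs and the Kubernetes metadata processor is attaching pod names.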