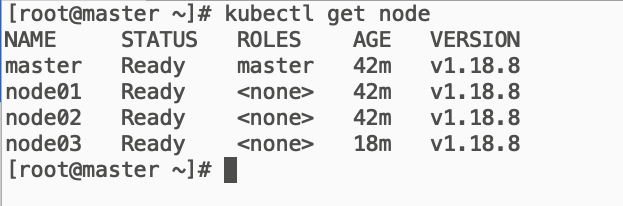

Environment preparation

| IP | Hostname | Kernel | CPU | Memory |
|---|---|---|---|---|
| 10.240.0.3 | master | 3.10.0-1062 | 2 | 4G |
| 10.240.0.4 | node01 | 3.10.0-1062 | 2 | 4G |
| 10.240.0.5 | node02 | 3.10.0-1062 | 2 | 4G |
| 10.240.0.6 | node01 | 3.10.0-1062 | 2 | 4G |

Prepare the Kubernetes cluster

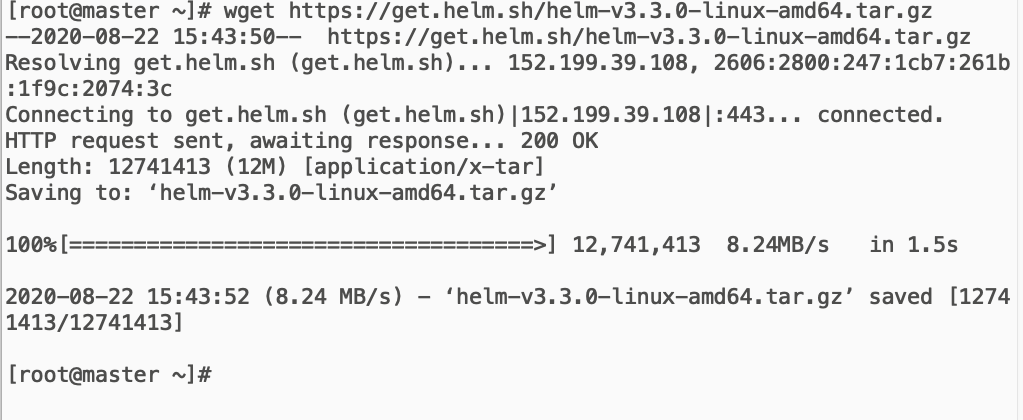

Install Helm 3

wget https://get.helm.sh/helm-v3.3.0-linux-amd64.tar.gz

tar xvf helm-v3.3.0-linux-amd64.tar.gz

chmod +x linux-amd64/helm

mv linux-amd64/helm /usr/bin/

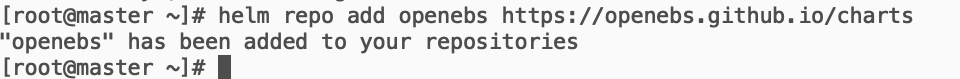

Install OpenEBS

OpenEBS can dynamically provision local PVs.

https://github.com/openebs/charts/tree/openebs-2.0.0/charts/openebs

helm repo add openebs https://openebs.github.io/charts

kubectl create ns openebs

helm install openebs --namespace openebs openebs/openebs

Set openebs-hostpath as the default StorageClass

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
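Before moving on, it can help to confirm that the patch took effect. The sketch below is one way to do that, assuming `kubectl` is pointed at this cluster; the function names `default_storageclass` and `check_default_storageclass` are made up for illustration.

```shell
# Print the name of every StorageClass annotated as the cluster default.
default_storageclass() {
  kubectl get storageclass \
    -o jsonpath='{.items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")].metadata.name}'
}

# Succeed only when the expected class (openebs-hostpath unless overridden)
# is among the default classes reported by the cluster.
check_default_storageclass() {
  expected="${1:-openebs-hostpath}"
  case " $(default_storageclass) " in
    *" $expected "*) echo "default StorageClass: $expected" ;;
    *) echo "$expected is not the default StorageClass" >&2; return 1 ;;
  esac
}
```

Running `check_default_storageclass` after the patch should report openebs-hostpath; a non-zero exit status means the annotation is missing.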

Install ZooKeeper

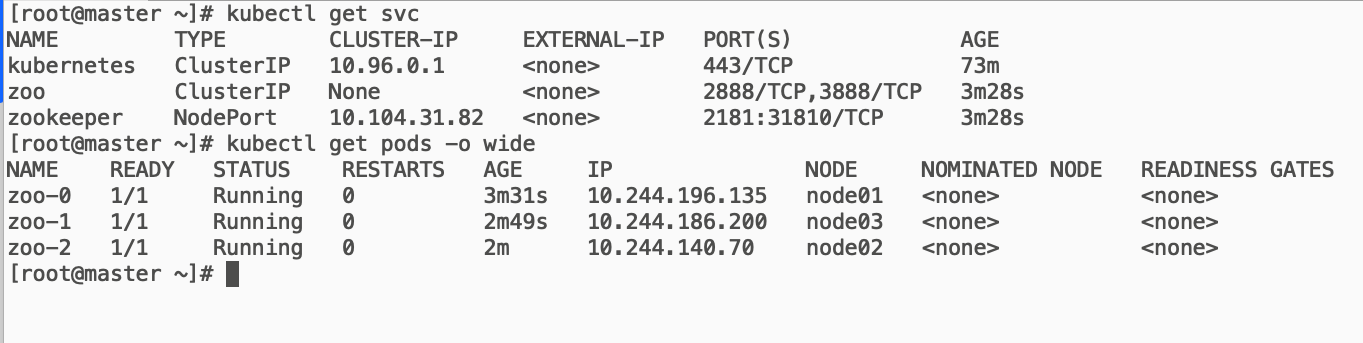

Clients connect on nodeip:31810.

    cat > zk-statefulset.yaml << EOF
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: zoo
    spec:
      serviceName: "zoo"
      replicas: 3
      selector:
        matchLabels:
          app: zookeeper
      template:
        metadata:
          labels:
            app: zookeeper
        spec:
          terminationGracePeriodSeconds: 10
          containers:
          - name: zookeeper
            image: registry.cn-shenzhen.aliyuncs.com/pyker/zookeeper:3.5.5
            imagePullPolicy: IfNotPresent
            readinessProbe:
              httpGet:
                path: /commands/ruok
                port: 8080
              initialDelaySeconds: 10
              timeoutSeconds: 5
              periodSeconds: 3
            livenessProbe:
              httpGet:
                path: /commands/ruok
                port: 8080
              initialDelaySeconds: 30
              timeoutSeconds: 5
              periodSeconds: 3
            env:
            - name: ZOO_SERVERS
              value: server.1=zoo-0.zoo:2888:3888;2181 server.2=zoo-1.zoo:2888:3888;2181 server.3=zoo-2.zoo:2888:3888;2181
            ports:
            - containerPort: 2181
              name: client
            - containerPort: 2888
              name: peer
            - containerPort: 3888
              name: leader-election
            volumeMounts:
            - name: datadir
              mountPath: /data
      volumeClaimTemplates:
      - metadata:
          name: datadir
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: "openebs-hostpath"
          resources:
            requests:
              storage: 1Gi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper
    spec:
      type: NodePort
      ports:
      - port: 2181
        name: client
        targetPort: 2181
        nodePort: 31810
      selector:
        app: zookeeper
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zoo
    spec:
      ports:
      - port: 2888
        name: peer
      - port: 3888
        name: leader-election
      clusterIP: None
      selector:
        app: zookeeper
    EOF

kubectl apply -f zk-statefulset.yaml
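The ZOO_SERVERS value in the manifest follows a fixed pattern: pod ordinals start at 0 (zoo-0, zoo-1, ...), ZooKeeper server ids start at 1, and each pod is reached through the headless Service as `<pod>.<service>`. As a rough sketch, a small helper (the name `zoo_servers` is hypothetical) could generate the string for a different replica count, assuming the same ports as above:

```shell
# Build a ZOO_SERVERS value for a StatefulSet whose name matches its headless Service.
#   $1 = replica count, $2 = StatefulSet/Service name (defaults to "zoo")
zoo_servers() {
  replicas="$1"
  name="${2:-zoo}"
  servers=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # Pod ordinal i maps to ZooKeeper server id i+1.
    entry="server.$((i + 1))=${name}-${i}.${name}:2888:3888;2181"
    servers="${servers:+$servers }$entry"
    i=$((i + 1))
  done
  printf '%s\n' "$servers"
}
```

Calling `zoo_servers 3` reproduces the three-server value used in the manifest; if replicas is changed, the env value has to be regenerated to match.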

Install the ZooKeeper web UI

    cat > zkui.yaml << EOF
    apiVersion: v1
    kind: Service
    metadata:
      name: zkui
      labels:
        app: zkui
    spec:
      type: NodePort
      ports:
      - port: 9090
        protocol: TCP
        targetPort: 9090
        nodePort: 30080
      selector:
        app: zkui
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: zkui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zkui
      template:
        metadata:
          labels:
            app: zkui
        spec:
          containers:
          - name: zkui
            image: registry.cn-shenzhen.aliyuncs.com/pyker/zkui:latest
            imagePullPolicy: IfNotPresent
            env:
            - name: ZK_SERVER
              value: "zoo-1.zoo:2181,zoo-2.zoo:2181,zoo-0.zoo:2181"
    EOF

kubectl apply -f zkui.yaml

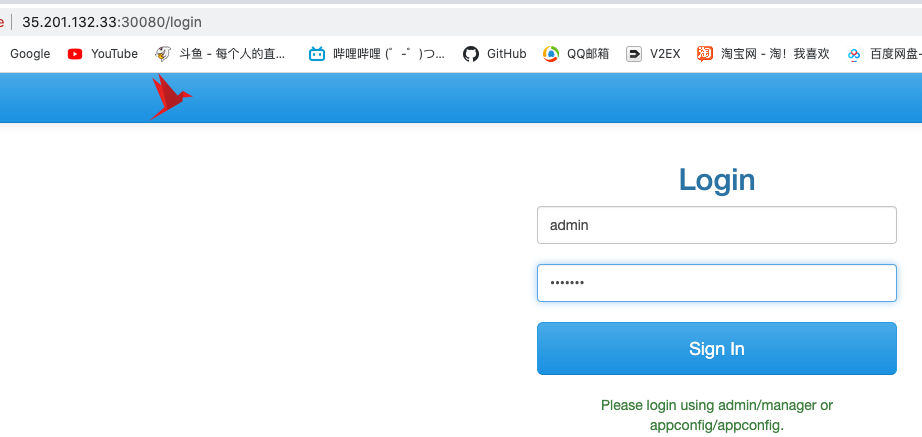

Access the ZooKeeper web UI

Open nodeip:30080 in a browser.

The username is admin and the password is manager.

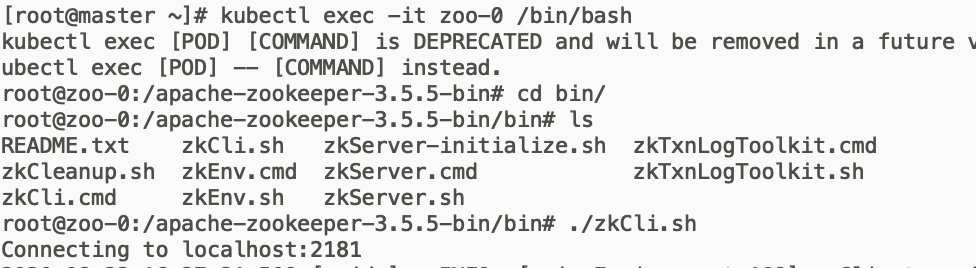

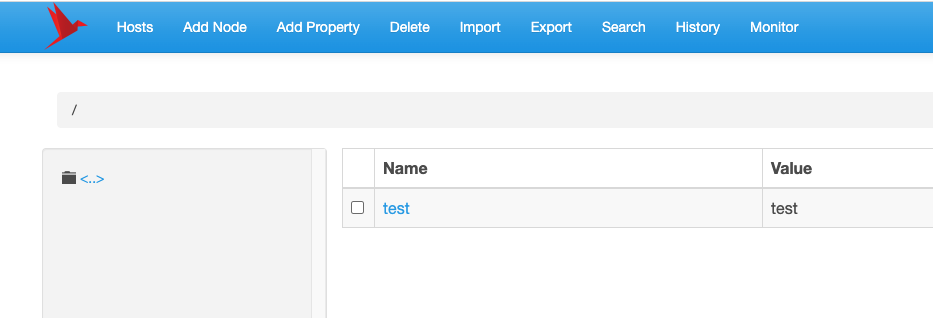

First, test from a client by creating an entry named test with the value test.

kubectl exec -it zoo-0 -- /bin/bash
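The same check can also be run without an interactive shell. This is only a sketch: it assumes `zkCli.sh` is on the PATH inside the zookeeper image (not confirmed for this image) and that the test entry lives at `/test`; the helper names `zk_cli` and `zk_smoke_test` are hypothetical.

```shell
# Run a single zkCli command inside a ZooKeeper pod.
#   $1 = pod name, remaining arguments = zkCli command and its arguments
zk_cli() {
  pod="$1"
  shift
  kubectl exec "$pod" -- zkCli.sh -server localhost:2181 "$@"
}

# Create /test with the data "test", then read it back.
zk_smoke_test() {
  pod="${1:-zoo-0}"
  zk_cli "$pod" create /test test && zk_cli "$pod" get /test
}
```

Reading the entry from another pod, for example `zk_cli zoo-1 get /test`, is a quick way to see that the write was replicated across the ensemble.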

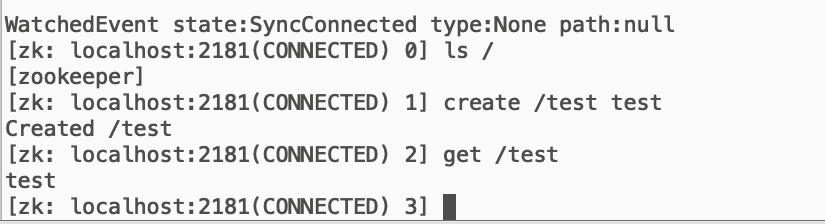

Open the web UI and confirm that the test entry was created.

Deploy dubbo-admin

Clone the repository with git

git clone https://github.com/apache/dubbo-admin.git

cd dubbo-admin

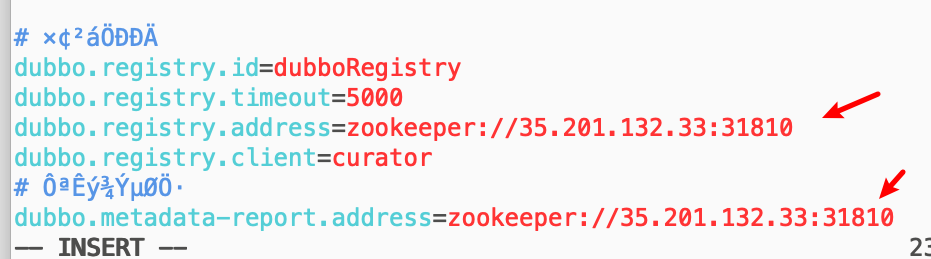

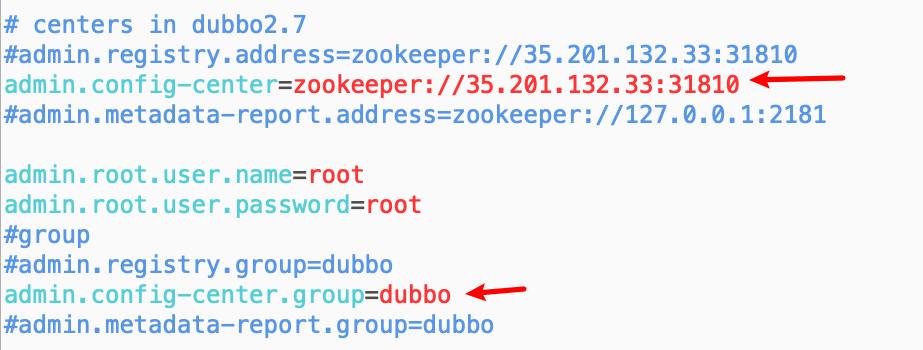

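Only the ZooKeeper address needs to change: set it to the client address of the ZooKeeper ensemble deployed above.

Because ZooKeeper is exposed through a NodePort, the client address is nodeip:31810.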

vim /root/dubbo-admin/dubbo-admin-server/src/main/resources/application.properties

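Note: the following four settings are disabled here, so the configuration file must be created in ZooKeeper first; otherwise the jar fails to start.

    admin.registry.address=zookeeper://xxxx:2181
    admin.metadata-report.address=zookeeper://xxxx:2181
    admin.registry.group=dubbo
    admin.metadata-report.group=dubbo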

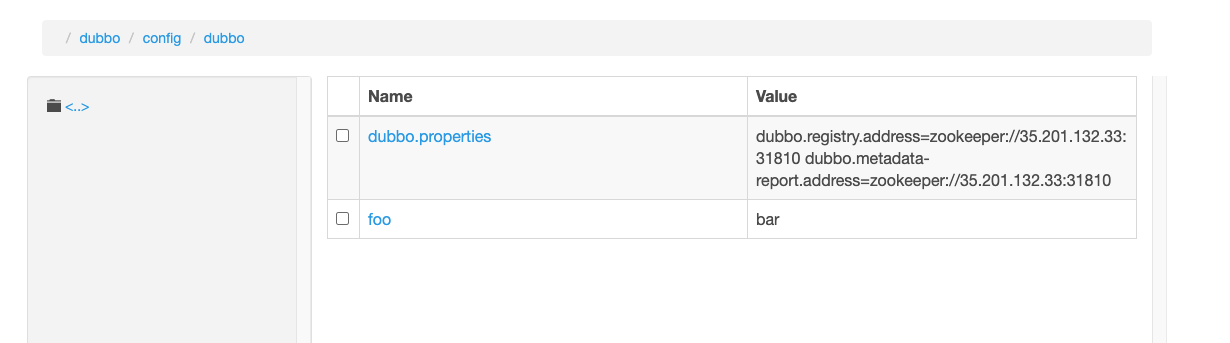

In ZooKeeper, create the file /dubbo/config/dubbo/dubbo.properties with the following content:

    dubbo.registry.address=zookeeper://xxxx:2181
    dubbo.metadata-report.address=zookeeper://xxxx:2181
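When the file is created from the command line rather than the web UI, note that a plain zkCli `create` does not create missing parent znodes, so every ancestor of the path has to exist first. A minimal sketch that lists the paths in the order they need to be created (the helper name `znode_chain` is hypothetical):

```shell
# Print each ancestor of a znode path, shallowest first, ending with the path itself.
znode_chain() {
  path="$1"
  current=""
  old_ifs="$IFS"
  IFS="/"
  # Splitting on "/" yields one field per path segment (plus an empty leading field).
  for segment in $path; do
    if [ -n "$segment" ]; then
      current="$current/$segment"
      printf '%s\n' "$current"
    fi
  done
  IFS="$old_ifs"
}
```

Each printed path can then be created in order, with the two property lines stored as the data of the last one.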

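The ZooKeeper web UI shows that the file has been created.

After updating the ZooKeeper address, package the project as a Docker image.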

tar zcvf dubbo-admin.tar.gz dubbo-admin

    FROM tanmgweiwow/jdkenv:v1.0 as BUILD
    RUN mkdir /app
    ADD dubbo-admin.tar.gz /app
    WORKDIR /app/dubbo-admin
    RUN ./mvnw clean package -Dmaven.test.skip=true
    FROM tanmgweiwow/jdkenv:v1.0
    COPY --from=BUILD /app/dubbo-admin/dubbo-admin-distribution/target/dubbo-admin-0.2.0-SNAPSHOT.jar /app.jar
    ENTRYPOINT ["java","-XX:+UnlockExperimentalVMOptions","-XX:+UseCGroupMemoryLimitForHeap","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]
    EXPOSE 8080

docker build -t tanmgweiwow/dubbo-admin:v1.0 .

Push the image to Docker Hub

docker push tanmgweiwow/dubbo-admin:v1.0

Write the Deployment

    cat > dubbo-admin.yaml << EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: dubbo-admin
    spec:
      selector:
        matchLabels:
          app: dubbo-admin
      replicas: 1
      template:
        metadata:
          labels:
            app: dubbo-admin
        spec:
          containers:
          - name: dubbo-admin
            image: tanmgweiwow/dubbo-admin:v1.0
            ports:
            - containerPort: 8080
    ---
    # service
    apiVersion: v1
    kind: Service
    metadata:
      name: dubbo-admin
    spec:
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
        nodePort: 31811
      selector:
        app: dubbo-admin
      type: NodePort
    EOF

kubectl apply -f dubbo-admin.yaml

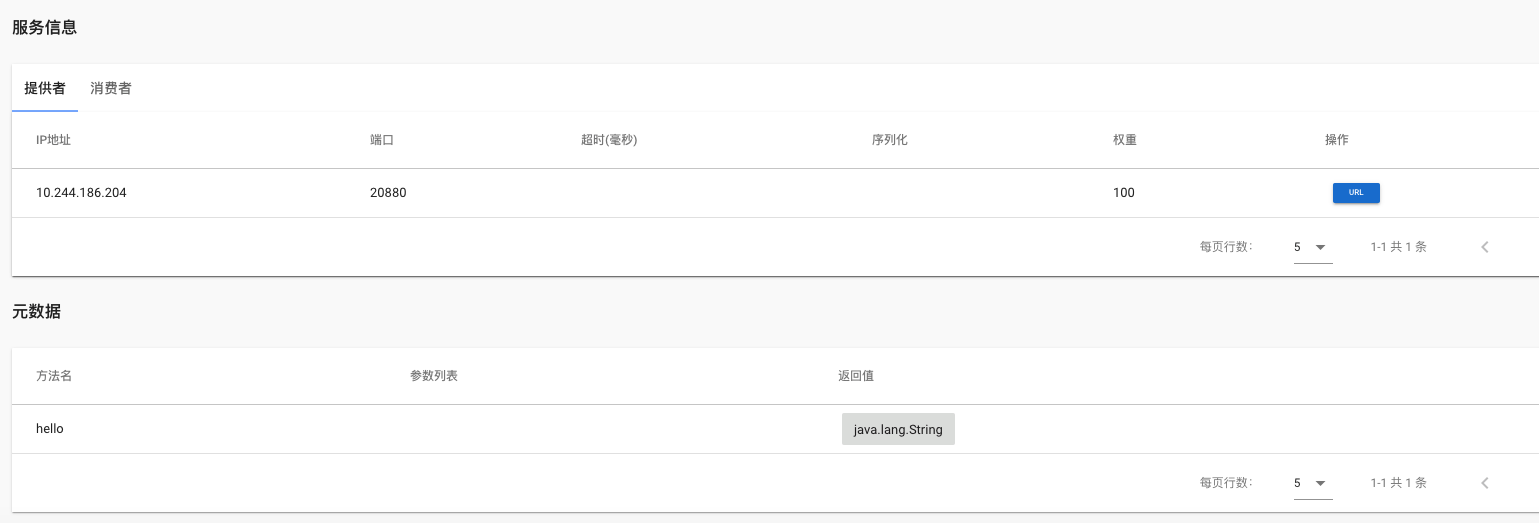

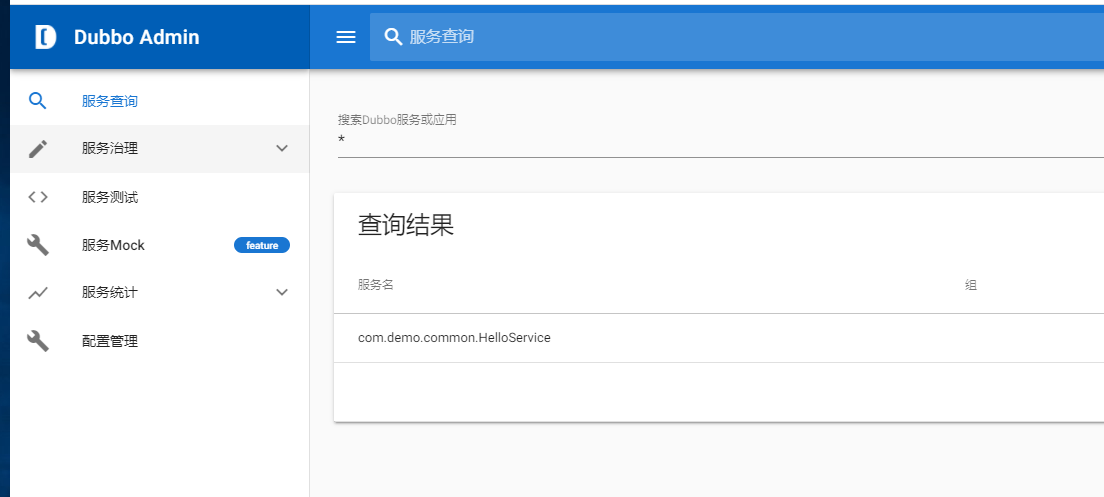

Open nodeip:31811; the username and password are root/root.

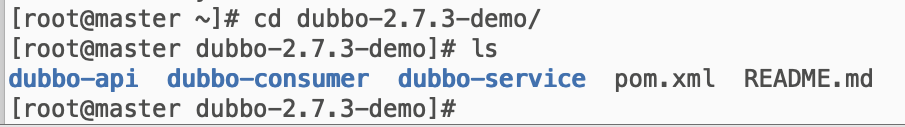

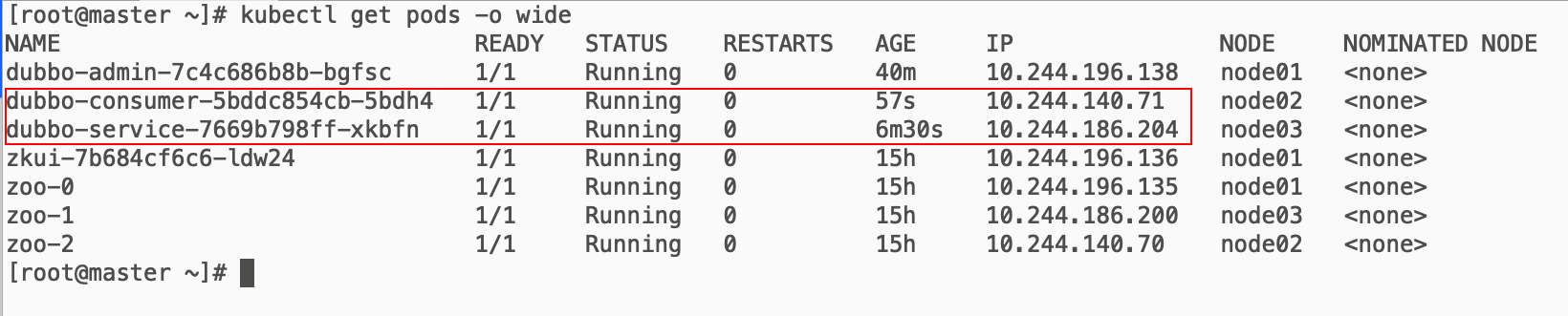

Deploy dubbo-demo-2.7.3

git clone https://github.com/Mysakura/dubbo-2.7.3-demo.git

Update the ZooKeeper address

vim dubbo-consumer/src/main/resources/application.properties

vim dubbo-service/src/main/resources/application.properties

Build the images

tar zcvf dubbo-2.7.3-demo.tar.gz dubbo-2.7.3-demo

dubbo-service image

    FROM tanmgweiwow/jdkenv:v1.0 as BUILD
    RUN mkdir /app
    ADD dubbo-2.7.3-demo.tar.gz /app
    WORKDIR /app/dubbo-2.7.3-demo
    RUN mvn clean package -Dmaven.test.skip=true
    FROM tanmgweiwow/jdkenv:v1.0
    COPY --from=BUILD /app/dubbo-2.7.3-demo/dubbo-service/target/dubbo-service-1.0-SNAPSHOT.jar /app.jar
    ENTRYPOINT ["java","-XX:+UnlockExperimentalVMOptions","-XX:+UseCGroupMemoryLimitForHeap","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]
    EXPOSE 9999

docker build -t tanmgweiwow/dubbo-service:v1.0 .

docker push tanmgweiwow/dubbo-service:v1.0

    cat > dubbo-service.yaml << EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: dubbo-service
    spec:
      selector:
        matchLabels:
          app: dubbo-service
      replicas: 1
      template:
        metadata:
          labels:
            app: dubbo-service
        spec:
          containers:
          - name: dubbo-service
            image: tanmgweiwow/dubbo-service:v1.0
            ports:
            - containerPort: 9999
    EOF

kubectl apply -f dubbo-service.yaml

dubbo-consumer image

    FROM tanmgweiwow/jdkenv:v1.0 as BUILD
    RUN mkdir /app
    ADD dubbo-2.7.3-demo.tar.gz /app
    WORKDIR /app/dubbo-2.7.3-demo
    RUN mvn clean package -Dmaven.test.skip=true
    FROM tanmgweiwow/jdkenv:v1.0
    COPY --from=BUILD /app/dubbo-2.7.3-demo/dubbo-consumer/target/dubbo-consumer-0.0.1-SNAPSHOT.jar /app.jar
    ENTRYPOINT ["java","-XX:+UnlockExperimentalVMOptions","-XX:+UseCGroupMemoryLimitForHeap","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]
    EXPOSE 9990

docker build -t tanmgweiwow/dubbo-consumer:v1.0 .

docker push tanmgweiwow/dubbo-consumer:v1.0

    cat > dubbo-consumer.yaml << EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: dubbo-consumer
    spec:
      selector:
        matchLabels:
          app: dubbo-consumer
      replicas: 1
      template:
        metadata:
          labels:
            app: dubbo-consumer
        spec:
          containers:
          - name: dubbo-consumer
            image: tanmgweiwow/dubbo-consumer:v1.0
            ports:
            - containerPort: 9990
    ---
    # service
    apiVersion: v1
    kind: Service
    metadata:
      name: dubbo-consumer
    spec:
      ports:
      - port: 9990
        protocol: TCP
        targetPort: 9990
        nodePort: 31890
      selector:
        app: dubbo-consumer
      type: NodePort
    EOF

kubectl apply -f dubbo-consumer.yaml
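Before calling the demo, it is worth waiting until both Deployments have finished rolling out. A minimal sketch, assuming the Deployment names used above; the function name `wait_for_rollouts` and the `ROLLOUT_TIMEOUT` variable are hypothetical.

```shell
# Wait for each named Deployment to finish rolling out; stop at the first failure.
# ROLLOUT_TIMEOUT (default 120s) bounds the wait per Deployment.
wait_for_rollouts() {
  timeout="${ROLLOUT_TIMEOUT:-120s}"
  for name in "$@"; do
    kubectl rollout status "deployment/$name" --timeout="$timeout" || return 1
  done
}
```

For this walkthrough the call would be `wait_for_rollouts dubbo-service dubbo-consumer`. If it fails, `kubectl logs` on the affected pod usually shows whether the application could reach ZooKeeper.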

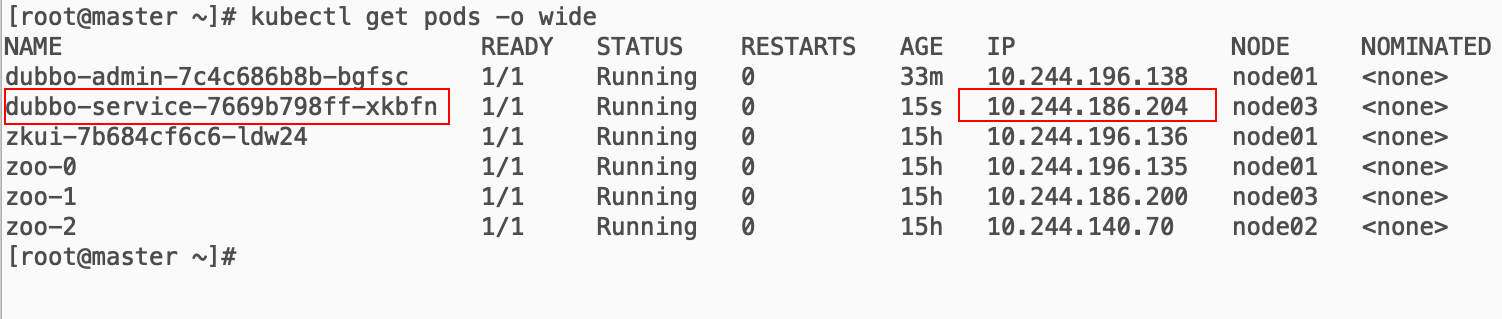

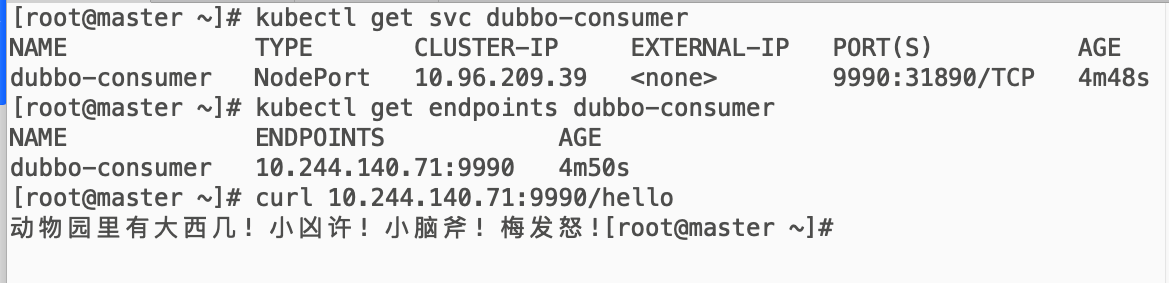

Call the dubbo-service interface

Internal call: access pod IP:port/interface

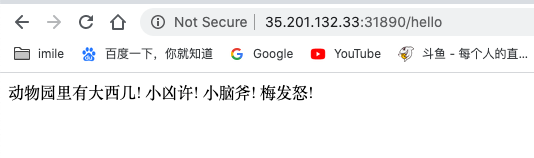

External call: access node IP:nodeport/interface

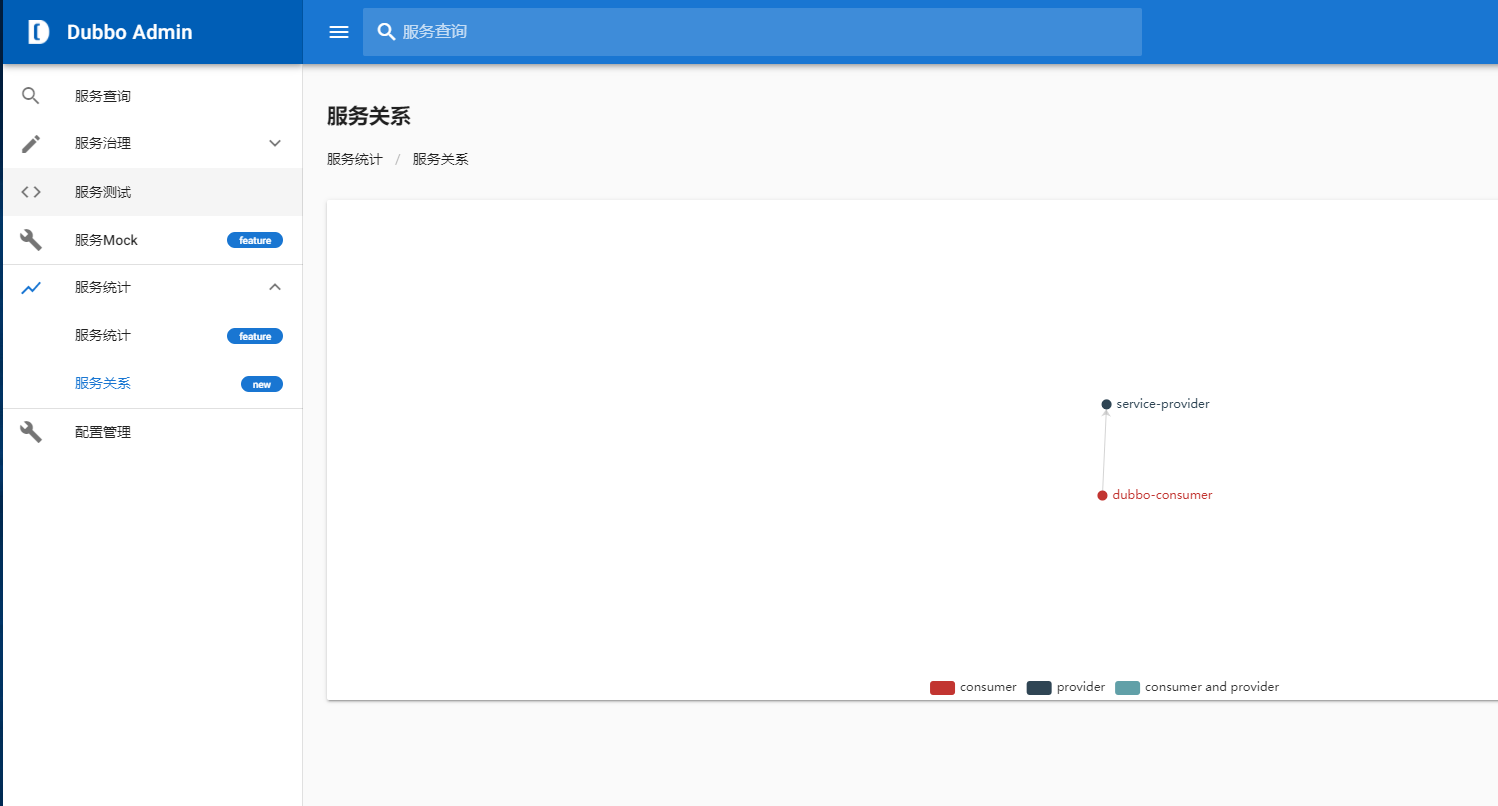
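The external address can also be assembled from the cluster state instead of being typed by hand. The sketch below assumes the dubbo-consumer Service defined earlier and simply picks the first node's internal IP; the function name `consumer_url` is hypothetical, and the interface path has to be supplied by the caller because it depends on the demo's controller.

```shell
# Print the external URL of the dubbo-consumer NodePort Service.
#   $1 = interface path exposed by the consumer (defaults to "/")
consumer_url() {
  api_path="${1:-/}"
  # Internal IP of the first node; any node in the cluster serves the NodePort.
  node_ip="$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')"
  # NodePort assigned to the Service (31890 in the manifest above).
  node_port="$(kubectl get service dubbo-consumer -o jsonpath='{.spec.ports[0].nodePort}')"
  printf 'http://%s:%s%s\n' "$node_ip" "$node_port" "$api_path"
}
```

The result can be passed straight to curl, for example `curl "$(consumer_url /your-interface)"`, where `/your-interface` stands in for the actual path.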

View the service relationships in dubbo-admin