烹饪指南

本节列出了一些短小精悍的 Pandas 实例与链接。

我们希望 Pandas 用户能积极踊跃地为本文档添加更多内容。为本节添加实用示例的链接或代码,是 Pandas 用户提交第一个 Pull Request 最好的选择。

本节列出了简单、精练、易上手的实例代码,以及 Stack Overflow 或 GitHub 上的链接,这些链接包含实例代码的更多详情。

pd 与 np 是 Pandas 与 Numpy 的缩写。为了让新手易于理解,其它模块是显式导入的。

下列实例均为 Python 3 代码,简单修改即可用于 Python 早期版本。

惯用语

以下是 Pandas 的惯用语。

对一列数据执行 if-then / if-then-else 操作,把计算结果赋值给一列或多列:

In [1]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],...: 'BBB': [10, 20, 30, 40],...: 'CCC': [100, 50, -30, -50]})...:In [2]: dfOut[2]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50

if-then…

在一列上执行 if-then 操作:

In [3]: df.loc[df.AAA >= 5, 'BBB'] = -1In [4]: dfOut[4]:AAA BBB CCC0 4 10 1001 5 -1 502 6 -1 -303 7 -1 -50

在两列上执行 if-then 操作:

In [5]: df.loc[df.AAA >= 5, ['BBB', 'CCC']] = 555In [6]: dfOut[6]:AAA BBB CCC0 4 10 1001 5 555 5552 6 555 5553 7 555 555

再添加一行代码,执行 -else 操作:

In [7]: df.loc[df.AAA < 5, ['BBB', 'CCC']] = 2000In [8]: dfOut[8]:AAA BBB CCC0 4 2000 20001 5 555 5552 6 555 5553 7 555 555

或用 Pandas 的 where 设置掩码(mask):

In [9]: df_mask = pd.DataFrame({'AAA': [True] * 4,...: 'BBB': [False] * 4,...: 'CCC': [True, False] * 2})...:In [10]: df.where(df_mask, -1000)Out[10]:AAA BBB CCC0 4 -1000 20001 5 -1000 -10002 6 -1000 5553 7 -1000 -1000

用 NumPy where() 函数实现 if-then-else

In [11]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [12]: dfOut[12]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [13]: df['logic'] = np.where(df['AAA'] > 5, 'high', 'low')In [14]: dfOut[14]:AAA BBB CCC logic0 4 10 100 low1 5 20 50 low2 6 30 -30 high3 7 40 -50 high

切割

In [15]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [16]: dfOut[16]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [17]: df[df.AAA <= 5]Out[17]:AAA BBB CCC0 4 10 1001 5 20 50In [18]: df[df.AAA > 5]Out[18]:AAA BBB CCC2 6 30 -303 7 40 -50

设置条件

In [19]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [20]: dfOut[20]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50

和(&),不赋值,直接返回 Series:

In [21]: df.loc[(df['BBB'] < 25) & (df['CCC'] >= -40), 'AAA']Out[21]:0 41 5Name: AAA, dtype: int64

或(|),不赋值,直接返回 Series:

In [22]: df.loc[(df['BBB'] > 25) | (df['CCC'] >= -40), 'AAA']Out[22]:0 41 52 63 7Name: AAA, dtype: int64

或(|),赋值,修改 DataFrame:

In [23]: df.loc[(df['BBB'] > 25) | (df['CCC'] >= 75), 'AAA'] = 0.1In [24]: dfOut[24]:AAA BBB CCC0 0.1 10 1001 5.0 20 502 0.1 30 -303 0.1 40 -50

In [25]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [26]: dfOut[26]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [27]: aValue = 43.0In [28]: df.loc[(df.CCC - aValue).abs().argsort()]Out[28]:AAA BBB CCC1 5 20 500 4 10 1002 6 30 -303 7 40 -50

In [29]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [30]: dfOut[30]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [31]: Crit1 = df.AAA <= 5.5In [32]: Crit2 = df.BBB == 10.0In [33]: Crit3 = df.CCC > -40.0

硬编码方式为:

In [34]: AllCrit = Crit1 & Crit2 & Crit3

生成动态条件列表:

In [35]: import functoolsIn [36]: CritList = [Crit1, Crit2, Crit3]In [37]: AllCrit = functools.reduce(lambda x, y: x & y, CritList)In [38]: df[AllCrit]Out[38]:AAA BBB CCC0 4 10 100

选择

DataFrames

更多信息,请参阅索引文档。

In [39]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [40]: dfOut[40]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [41]: df[(df.AAA <= 6) & (df.index.isin([0, 2, 4]))]Out[41]:AAA BBB CCC0 4 10 1002 6 30 -30

In [42]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]},....: index=['foo', 'bar', 'boo', 'kar'])....:

前 2 个是显式切片方法,第 3 个是通用方法:

- 位置切片,Python 切片风格,不包括结尾数据;

- 标签切片,非 Python 切片风格,包括结尾数据;

- 通用切片,支持两种切片风格,取决于切片用的是标签还是位置。

In [43]: df.loc['bar':'kar'] # LabelOut[43]:AAA BBB CCCbar 5 20 50boo 6 30 -30kar 7 40 -50# GenericIn [44]: df.iloc[0:3]Out[44]:AAA BBB CCCfoo 4 10 100bar 5 20 50boo 6 30 -30In [45]: df.loc['bar':'kar']Out[45]:AAA BBB CCCbar 5 20 50boo 6 30 -30kar 7 40 -50

包含整数,且不从 0 开始的索引,或不是逐步递增的索引会引发歧义。

In [46]: data = {'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]}....:In [47]: df2 = pd.DataFrame(data=data, index=[1, 2, 3, 4]) # Note index starts at 1.In [48]: df2.iloc[1:3] # Position-orientedOut[48]:AAA BBB CCC2 5 20 503 6 30 -30In [49]: df2.loc[1:3] # Label-orientedOut[49]:AAA BBB CCC1 4 10 1002 5 20 503 6 30 -30

In [50]: df = pd.DataFrame({'AAA': [4, 5, 6, 7],....: 'BBB': [10, 20, 30, 40],....: 'CCC': [100, 50, -30, -50]})....:In [51]: dfOut[51]:AAA BBB CCC0 4 10 1001 5 20 502 6 30 -303 7 40 -50In [52]: df[~((df.AAA <= 6) & (df.index.isin([0, 2, 4])))]Out[52]:AAA BBB CCC1 5 20 503 7 40 -50

生成新列

In [53]: df = pd.DataFrame({'AAA': [1, 2, 1, 3],....: 'BBB': [1, 1, 2, 2],....: 'CCC': [2, 1, 3, 1]})....:In [54]: dfOut[54]:AAA BBB CCC0 1 1 21 2 1 12 1 2 33 3 2 1In [55]: source_cols = df.columns # Or some subset would work tooIn [56]: new_cols = [str(x) + "_cat" for x in source_cols]In [57]: categories = {1: 'Alpha', 2: 'Beta', 3: 'Charlie'}In [58]: df[new_cols] = df[source_cols].applymap(categories.get)In [59]: dfOut[59]:AAA BBB CCC AAA_cat BBB_cat CCC_cat0 1 1 2 Alpha Alpha Beta1 2 1 1 Beta Alpha Alpha2 1 2 3 Alpha Beta Charlie3 3 2 1 Charlie Beta Alpha

In [60]: df = pd.DataFrame({'AAA': [1, 1, 1, 2, 2, 2, 3, 3],....: 'BBB': [2, 1, 3, 4, 5, 1, 2, 3]})....:In [61]: dfOut[61]:AAA BBB0 1 21 1 12 1 33 2 44 2 55 2 16 3 27 3 3

方法1:用 idxmin() 提取每组最小值的索引

In [62]: df.loc[df.groupby("AAA")["BBB"].idxmin()]Out[62]:AAA BBB1 1 15 2 16 3 2

方法 2:先排序,再提取每组的第一个值

In [63]: df.sort_values(by="BBB").groupby("AAA", as_index=False).first()Out[63]:AAA BBB0 1 11 2 12 3 2

注意,提取的数据一样,但索引不一样。

多层索引

更多信息,请参阅多层索引文档。

In [64]: df = pd.DataFrame({'row': [0, 1, 2],....: 'One_X': [1.1, 1.1, 1.1],....: 'One_Y': [1.2, 1.2, 1.2],....: 'Two_X': [1.11, 1.11, 1.11],....: 'Two_Y': [1.22, 1.22, 1.22]})....:In [65]: dfOut[65]:row One_X One_Y Two_X Two_Y0 0 1.1 1.2 1.11 1.221 1 1.1 1.2 1.11 1.222 2 1.1 1.2 1.11 1.22# 设置索引标签In [66]: df = df.set_index('row')In [67]: dfOut[67]:One_X One_Y Two_X Two_Yrow0 1.1 1.2 1.11 1.221 1.1 1.2 1.11 1.222 1.1 1.2 1.11 1.22# 多层索引的列In [68]: df.columns = pd.MultiIndex.from_tuples([tuple(c.split('_'))....: for c in df.columns])....:In [69]: dfOut[69]:One TwoX Y X Yrow0 1.1 1.2 1.11 1.221 1.1 1.2 1.11 1.222 1.1 1.2 1.11 1.22# 先 stack,然后 Reset 索引In [70]: df = df.stack(0).reset_index(1)In [71]: dfOut[71]:level_1 X Yrow0 One 1.10 1.200 Two 1.11 1.221 One 1.10 1.201 Two 1.11 1.222 One 1.10 1.202 Two 1.11 1.22# 修整标签,注意自动添加了标签 `level_1`In [72]: df.columns = ['Sample', 'All_X', 'All_Y']In [73]: dfOut[73]:Sample All_X All_Yrow0 One 1.10 1.200 Two 1.11 1.221 One 1.10 1.201 Two 1.11 1.222 One 1.10 1.202 Two 1.11 1.22

运算

In [74]: cols = pd.MultiIndex.from_tuples([(x, y) for x in ['A', 'B', 'C']....: for y in ['O', 'I']])....:In [75]: df = pd.DataFrame(np.random.randn(2, 6), index=['n', 'm'], columns=cols)In [76]: dfOut[76]:A B CO I O I O In 0.469112 -0.282863 -1.509059 -1.135632 1.212112 -0.173215m 0.119209 -1.044236 -0.861849 -2.104569 -0.494929 1.071804In [77]: df = df.div(df['C'], level=1)In [78]: dfOut[78]:A B CO I O I O In 0.387021 1.633022 -1.244983 6.556214 1.0 1.0m -0.240860 -0.974279 1.741358 -1.963577 1.0 1.0

切片

In [79]: coords = [('AA', 'one'), ('AA', 'six'), ('BB', 'one'), ('BB', 'two'),....: ('BB', 'six')]....:In [80]: index = pd.MultiIndex.from_tuples(coords)In [81]: df = pd.DataFrame([11, 22, 33, 44, 55], index, ['MyData'])In [82]: dfOut[82]:MyDataAA one 11six 22BB one 33two 44six 55

提取第一层与索引第一个轴的交叉数据:

# 注意:level 与 axis 是可选项,默认为 0In [83]: df.xs('BB', level=0, axis=0)Out[83]:MyDataone 33two 44six 55

……现在是第 1 个轴的第 2 层

In [84]: df.xs('six', level=1, axis=0)Out[84]:MyDataAA 22BB 55

In [85]: import itertoolsIn [86]: index = list(itertools.product(['Ada', 'Quinn', 'Violet'],....: ['Comp', 'Math', 'Sci']))....:In [87]: headr = list(itertools.product(['Exams', 'Labs'], ['I', 'II']))In [88]: indx = pd.MultiIndex.from_tuples(index, names=['Student', 'Course'])In [89]: cols = pd.MultiIndex.from_tuples(headr) # Notice these are un-namedIn [90]: data = [[70 + x + y + (x * y) % 3 for x in range(4)] for y in range(9)]In [91]: df = pd.DataFrame(data, indx, cols)In [92]: dfOut[92]:Exams LabsI II I IIStudent CourseAda Comp 70 71 72 73Math 71 73 75 74Sci 72 75 75 75Quinn Comp 73 74 75 76Math 74 76 78 77Sci 75 78 78 78Violet Comp 76 77 78 79Math 77 79 81 80Sci 78 81 81 81In [93]: All = slice(None)In [94]: df.loc['Violet']Out[94]:Exams LabsI II I IICourseComp 76 77 78 79Math 77 79 81 80Sci 78 81 81 81In [95]: df.loc[(All, 'Math'), All]Out[95]:Exams LabsI II I IIStudent CourseAda Math 71 73 75 74Quinn Math 74 76 78 77Violet Math 77 79 81 80In [96]: df.loc[(slice('Ada', 'Quinn'), 'Math'), All]Out[96]:Exams LabsI II I IIStudent CourseAda Math 71 73 75 74Quinn Math 74 76 78 77In [97]: df.loc[(All, 'Math'), ('Exams')]Out[97]:I IIStudent CourseAda Math 71 73Quinn Math 74 76Violet Math 77 79In [98]: df.loc[(All, 'Math'), (All, 'II')]Out[98]:Exams LabsII IIStudent CourseAda Math 73 74Quinn Math 76 77Violet Math 79 80

排序

In [99]: df.sort_values(by=('Labs', 'II'), ascending=False)Out[99]:Exams LabsI II I IIStudent CourseViolet Sci 78 81 81 81Math 77 79 81 80Comp 76 77 78 79Quinn Sci 75 78 78 78Math 74 76 78 77Comp 73 74 75 76Ada Sci 72 75 75 75Math 71 73 75 74Comp 70 71 72 73

层级

缺失数据

缺失数据 文档。

向前填充逆序时间序列。

In [100]: df = pd.DataFrame(np.random.randn(6, 1),.....: index=pd.date_range('2013-08-01', periods=6, freq='B'),.....: columns=list('A')).....:In [101]: df.loc[df.index[3], 'A'] = np.nanIn [102]: dfOut[102]:A2013-08-01 0.7215552013-08-02 -0.7067712013-08-05 -1.0395752013-08-06 NaN2013-08-07 -0.4249722013-08-08 0.567020In [103]: df.reindex(df.index[::-1]).ffill()Out[103]:A2013-08-08 0.5670202013-08-07 -0.4249722013-08-06 -0.4249722013-08-05 -1.0395752013-08-02 -0.7067712013-08-01 0.721555

替换

分组

分组 文档。

与聚合不同,传递给 DataFrame 子集的 apply 可回调,可以访问所有列。

In [104]: df = pd.DataFrame({'animal': 'cat dog cat fish dog cat cat'.split(),.....: 'size': list('SSMMMLL'),.....: 'weight': [8, 10, 11, 1, 20, 12, 12],.....: 'adult': [False] * 5 + [True] * 2}).....:In [105]: dfOut[105]:animal size weight adult0 cat S 8 False1 dog S 10 False2 cat M 11 False3 fish M 1 False4 dog M 20 False5 cat L 12 True6 cat L 12 True# 提取 size 列最重的动物列表In [106]: df.groupby('animal').apply(lambda subf: subf['size'][subf['weight'].idxmax()])Out[106]:animalcat Ldog Mfish Mdtype: object

In [107]: gb = df.groupby(['animal'])In [108]: gb.get_group('cat')Out[108]:animal size weight adult0 cat S 8 False2 cat M 11 False5 cat L 12 True6 cat L 12 True

In [109]: def GrowUp(x):.....: avg_weight = sum(x[x['size'] == 'S'].weight * 1.5).....: avg_weight += sum(x[x['size'] == 'M'].weight * 1.25).....: avg_weight += sum(x[x['size'] == 'L'].weight).....: avg_weight /= len(x).....: return pd.Series(['L', avg_weight, True],.....: index=['size', 'weight', 'adult']).....:In [110]: expected_df = gb.apply(GrowUp)In [111]: expected_dfOut[111]:size weight adultanimalcat L 12.4375 Truedog L 20.0000 Truefish L 1.2500 True

In [112]: S = pd.Series([i / 100.0 for i in range(1, 11)])In [113]: def cum_ret(x, y):.....: return x * (1 + y).....:In [114]: def red(x):.....: return functools.reduce(cum_ret, x, 1.0).....:In [115]: S.expanding().apply(red, raw=True)Out[115]:0 1.0100001 1.0302002 1.0611063 1.1035504 1.1587285 1.2282516 1.3142297 1.4193678 1.5471109 1.701821dtype: float64

In [116]: df = pd.DataFrame({'A': [1, 1, 2, 2], 'B': [1, -1, 1, 2]})In [117]: gb = df.groupby('A')In [118]: def replace(g):.....: mask = g < 0.....: return g.where(mask, g[~mask].mean()).....:In [119]: gb.transform(replace)Out[119]:B0 1.01 -1.02 1.53 1.5

In [120]: df = pd.DataFrame({'code': ['foo', 'bar', 'baz'] * 2,.....: 'data': [0.16, -0.21, 0.33, 0.45, -0.59, 0.62],.....: 'flag': [False, True] * 3}).....:In [121]: code_groups = df.groupby('code')In [122]: agg_n_sort_order = code_groups[['data']].transform(sum).sort_values(by='data')In [123]: sorted_df = df.loc[agg_n_sort_order.index]In [124]: sorted_dfOut[124]:code data flag1 bar -0.21 True4 bar -0.59 False0 foo 0.16 False3 foo 0.45 True2 baz 0.33 False5 baz 0.62 True

In [125]: rng = pd.date_range(start="2014-10-07", periods=10, freq='2min')In [126]: ts = pd.Series(data=list(range(10)), index=rng)In [127]: def MyCust(x):.....: if len(x) > 2:.....: return x[1] * 1.234.....: return pd.NaT.....:In [128]: mhc = {'Mean': np.mean, 'Max': np.max, 'Custom': MyCust}In [129]: ts.resample("5min").apply(mhc)Out[129]:Mean 2014-10-07 00:00:00 12014-10-07 00:05:00 3.52014-10-07 00:10:00 62014-10-07 00:15:00 8.5Max 2014-10-07 00:00:00 22014-10-07 00:05:00 42014-10-07 00:10:00 72014-10-07 00:15:00 9Custom 2014-10-07 00:00:00 1.2342014-10-07 00:05:00 NaT2014-10-07 00:10:00 7.4042014-10-07 00:15:00 NaTdtype: objectIn [130]: tsOut[130]:2014-10-07 00:00:00 02014-10-07 00:02:00 12014-10-07 00:04:00 22014-10-07 00:06:00 32014-10-07 00:08:00 42014-10-07 00:10:00 52014-10-07 00:12:00 62014-10-07 00:14:00 72014-10-07 00:16:00 82014-10-07 00:18:00 9Freq: 2T, dtype: int64

In [131]: df = pd.DataFrame({'Color': 'Red Red Red Blue'.split(),.....: 'Value': [100, 150, 50, 50]}).....:In [132]: dfOut[132]:Color Value0 Red 1001 Red 1502 Red 503 Blue 50In [133]: df['Counts'] = df.groupby(['Color']).transform(len)In [134]: dfOut[134]:Color Value Counts0 Red 100 31 Red 150 32 Red 50 33 Blue 50 1

In [135]: df = pd.DataFrame({'line_race': [10, 10, 8, 10, 10, 8],.....: 'beyer': [99, 102, 103, 103, 88, 100]},.....: index=['Last Gunfighter', 'Last Gunfighter',.....: 'Last Gunfighter', 'Paynter', 'Paynter',.....: 'Paynter']).....:In [136]: dfOut[136]:line_race beyerLast Gunfighter 10 99Last Gunfighter 10 102Last Gunfighter 8 103Paynter 10 103Paynter 10 88Paynter 8 100In [137]: df['beyer_shifted'] = df.groupby(level=0)['beyer'].shift(1)In [138]: dfOut[138]:line_race beyer beyer_shiftedLast Gunfighter 10 99 NaNLast Gunfighter 10 102 99.0Last Gunfighter 8 103 102.0Paynter 10 103 NaNPaynter 10 88 103.0Paynter 8 100 88.0

In [139]: df = pd.DataFrame({'host': ['other', 'other', 'that', 'this', 'this'],.....: 'service': ['mail', 'web', 'mail', 'mail', 'web'],.....: 'no': [1, 2, 1, 2, 1]}).set_index(['host', 'service']).....:In [140]: mask = df.groupby(level=0).agg('idxmax')In [141]: df_count = df.loc[mask['no']].reset_index()In [142]: df_countOut[142]:host service no0 other web 21 that mail 12 this mail 2

In [143]: df = pd.DataFrame([0, 1, 0, 1, 1, 1, 0, 1, 1], columns=['A'])In [144]: df.A.groupby((df.A != df.A.shift()).cumsum()).groupsOut[144]:{1: Int64Index([0], dtype='int64'),2: Int64Index([1], dtype='int64'),3: Int64Index([2], dtype='int64'),4: Int64Index([3, 4, 5], dtype='int64'),5: Int64Index([6], dtype='int64'),6: Int64Index([7, 8], dtype='int64')}In [145]: df.A.groupby((df.A != df.A.shift()).cumsum()).cumsum()Out[145]:0 01 12 03 14 25 36 07 18 2Name: A, dtype: int64

扩展数据

分割

按指定逻辑,将不同的行,分割成 DataFrame 列表。

In [146]: df = pd.DataFrame(data={'Case': ['A', 'A', 'A', 'B', 'A', 'A', 'B', 'A',.....: 'A'],.....: 'Data': np.random.randn(9)}).....:In [147]: dfs = list(zip(*df.groupby((1 * (df['Case'] == 'B')).cumsum().....: .rolling(window=3, min_periods=1).median())))[-1].....:In [148]: dfs[0]Out[148]:Case Data0 A 0.2762321 A -1.0874012 A -0.6736903 B 0.113648In [149]: dfs[1]Out[149]:Case Data4 A -1.4784275 A 0.5249886 B 0.404705In [150]: dfs[2]Out[150]:Case Data7 A 0.5770468 A -1.715002

透视表

透视表 文档。

In [151]: df = pd.DataFrame(data={'Province': ['ON', 'QC', 'BC', 'AL', 'AL', 'MN', 'ON'],.....: 'City': ['Toronto', 'Montreal', 'Vancouver',.....: 'Calgary', 'Edmonton', 'Winnipeg',.....: 'Windsor'],.....: 'Sales': [13, 6, 16, 8, 4, 3, 1]}).....:In [152]: table = pd.pivot_table(df, values=['Sales'], index=['Province'],.....: columns=['City'], aggfunc=np.sum, margins=True).....:In [153]: table.stack('City')Out[153]:SalesProvince CityAL All 12.0Calgary 8.0Edmonton 4.0BC All 16.0Vancouver 16.0... ...All Montreal 6.0Toronto 13.0Vancouver 16.0Windsor 1.0Winnipeg 3.0[20 rows x 1 columns]

In [154]: grades = [48, 99, 75, 80, 42, 80, 72, 68, 36, 78]In [155]: df = pd.DataFrame({'ID': ["x%d" % r for r in range(10)],.....: 'Gender': ['F', 'M', 'F', 'M', 'F',.....: 'M', 'F', 'M', 'M', 'M'],.....: 'ExamYear': ['2007', '2007', '2007', '2008', '2008',.....: '2008', '2008', '2009', '2009', '2009'],.....: 'Class': ['algebra', 'stats', 'bio', 'algebra',.....: 'algebra', 'stats', 'stats', 'algebra',.....: 'bio', 'bio'],.....: 'Participated': ['yes', 'yes', 'yes', 'yes', 'no',.....: 'yes', 'yes', 'yes', 'yes', 'yes'],.....: 'Passed': ['yes' if x > 50 else 'no' for x in grades],.....: 'Employed': [True, True, True, False,.....: False, False, False, True, True, False],.....: 'Grade': grades}).....:In [156]: df.groupby('ExamYear').agg({'Participated': lambda x: x.value_counts()['yes'],.....: 'Passed': lambda x: sum(x == 'yes'),.....: 'Employed': lambda x: sum(x),.....: 'Grade': lambda x: sum(x) / len(x)}).....:Out[156]:Participated Passed Employed GradeExamYear2007 3 2 3 74.0000002008 3 3 0 68.5000002009 3 2 2 60.666667

跨列表创建年月:

In [157]: df = pd.DataFrame({'value': np.random.randn(36)},.....: index=pd.date_range('2011-01-01', freq='M', periods=36)).....:In [158]: pd.pivot_table(df, index=df.index.month, columns=df.index.year,.....: values='value', aggfunc='sum').....:Out[158]:2011 2012 20131 -1.039268 -0.968914 2.5656462 -0.370647 -1.294524 1.4312563 -1.157892 0.413738 1.3403094 -1.344312 0.276662 -1.1702995 0.844885 -0.472035 -0.2261696 1.075770 -0.013960 0.4108357 -0.109050 -0.362543 0.8138508 1.643563 -0.006154 0.1320039 -1.469388 -0.923061 -0.82731710 0.357021 0.895717 -0.07646711 -0.674600 0.805244 -1.18767812 -1.776904 -1.206412 1.130127

Apply 函数

In [159]: df = pd.DataFrame(data={'A': [[2, 4, 8, 16], [100, 200], [10, 20, 30]],.....: 'B': [['a', 'b', 'c'], ['jj', 'kk'], ['ccc']]},.....: index=['I', 'II', 'III']).....:In [160]: def SeriesFromSubList(aList):.....: return pd.Series(aList).....:In [161]: df_orgz = pd.concat({ind: row.apply(SeriesFromSubList).....: for ind, row in df.iterrows()}).....:In [162]: df_orgzOut[162]:0 1 2 3I A 2 4 8 16.0B a b c NaNII A 100 200 NaN NaNB jj kk NaN NaNIII A 10 20 30 NaNB ccc NaN NaN NaN

Rolling Apply to multiple columns where function calculates a Series before a Scalar from the Series is returned

In [163]: df = pd.DataFrame(data=np.random.randn(2000, 2) / 10000,.....: index=pd.date_range('2001-01-01', periods=2000),.....: columns=['A', 'B']).....:In [164]: dfOut[164]:A B2001-01-01 -0.000144 -0.0001412001-01-02 0.000161 0.0001022001-01-03 0.000057 0.0000882001-01-04 -0.000221 0.0000972001-01-05 -0.000201 -0.000041... ... ...2006-06-19 0.000040 -0.0002352006-06-20 -0.000123 -0.0000212006-06-21 -0.000113 0.0001142006-06-22 0.000136 0.0001092006-06-23 0.000027 0.000030[2000 rows x 2 columns]In [165]: def gm(df, const):.....: v = ((((df.A + df.B) + 1).cumprod()) - 1) * const.....: return v.iloc[-1].....:In [166]: s = pd.Series({df.index[i]: gm(df.iloc[i:min(i + 51, len(df) - 1)], 5).....: for i in range(len(df) - 50)}).....:In [167]: sOut[167]:2001-01-01 0.0009302001-01-02 0.0026152001-01-03 0.0012812001-01-04 0.0011172001-01-05 0.002772...2006-04-30 0.0032962006-05-01 0.0026292006-05-02 0.0020812006-05-03 0.0042472006-05-04 0.003928Length: 1950, dtype: float64

Rolling Apply to multiple columns where function returns a Scalar (Volume Weighted Average Price) 对多列执行滚动 Apply,函数返回标量值(成交价加权平均价)

In [168]: rng = pd.date_range(start='2014-01-01', periods=100)In [169]: df = pd.DataFrame({'Open': np.random.randn(len(rng)),.....: 'Close': np.random.randn(len(rng)),.....: 'Volume': np.random.randint(100, 2000, len(rng))},.....: index=rng).....:In [170]: dfOut[170]:Open Close Volume2014-01-01 -1.611353 -0.492885 12192014-01-02 -3.000951 0.445794 10542014-01-03 -0.138359 -0.076081 13812014-01-04 0.301568 1.198259 12532014-01-05 0.276381 -0.669831 1728... ... ... ...2014-04-06 -0.040338 0.937843 11882014-04-07 0.359661 -0.285908 18642014-04-08 0.060978 1.714814 9412014-04-09 1.759055 -0.455942 10652014-04-10 0.138185 -1.147008 1453[100 rows x 3 columns]In [171]: def vwap(bars):.....: return ((bars.Close * bars.Volume).sum() / bars.Volume.sum()).....:In [172]: window = 5In [173]: s = pd.concat([(pd.Series(vwap(df.iloc[i:i + window]),.....: index=[df.index[i + window]])).....: for i in range(len(df) - window)]).....:In [174]: s.round(2)Out[174]:2014-01-06 0.022014-01-07 0.112014-01-08 0.102014-01-09 0.072014-01-10 -0.29...2014-04-06 -0.632014-04-07 -0.022014-04-08 -0.032014-04-09 0.342014-04-10 0.29Length: 95, dtype: float64

时间序列

把以小时为列,天为行的矩阵转换为连续的时间序列。 如何重排 DataFrame?

为 DatetimeIndex 里每条记录计算当月第一天

In [175]: dates = pd.date_range('2000-01-01', periods=5)In [176]: dates.to_period(freq='M').to_timestamp()Out[176]:DatetimeIndex(['2000-01-01', '2000-01-01', '2000-01-01', '2000-01-01','2000-01-01'],dtype='datetime64[ns]', freq=None)

重采样

重采样 文档。

用 Grouper 代替 TimeGrouper 处理时间分组的值

用 TimeGrouper 与另一个分组创建子分组,再 Apply 自定义函数

合并

模拟 R 的 rbind:追加两个重叠索引的 DataFrame

In [177]: rng = pd.date_range('2000-01-01', periods=6)In [178]: df1 = pd.DataFrame(np.random.randn(6, 3), index=rng, columns=['A', 'B', 'C'])In [179]: df2 = df1.copy()

基于 df 构建器,需要ignore_index。

In [180]: df = df1.append(df2, ignore_index=True)In [181]: dfOut[181]:A B C0 -0.870117 -0.479265 -0.7908551 0.144817 1.726395 -0.4645352 -0.821906 1.597605 0.1873073 -0.128342 -1.511638 -0.2898584 0.399194 -1.430030 -0.6397605 1.115116 -2.012600 1.8106626 -0.870117 -0.479265 -0.7908557 0.144817 1.726395 -0.4645358 -0.821906 1.597605 0.1873079 -0.128342 -1.511638 -0.28985810 0.399194 -1.430030 -0.63976011 1.115116 -2.012600 1.810662

In [182]: df = pd.DataFrame(data={'Area': ['A'] * 5 + ['C'] * 2,.....: 'Bins': [110] * 2 + [160] * 3 + [40] * 2,.....: 'Test_0': [0, 1, 0, 1, 2, 0, 1],.....: 'Data': np.random.randn(7)}).....:In [183]: dfOut[183]:Area Bins Test_0 Data0 A 110 0 -0.4339371 A 110 1 -0.1605522 A 160 0 0.7444343 A 160 1 1.7542134 A 160 2 0.0008505 C 40 0 0.3422436 C 40 1 1.070599In [184]: df['Test_1'] = df['Test_0'] - 1In [185]: pd.merge(df, df, left_on=['Bins', 'Area', 'Test_0'],.....: right_on=['Bins', 'Area', 'Test_1'],.....: suffixes=('_L', '_R')).....:Out[185]:Area Bins Test_0_L Data_L Test_1_L Test_0_R Data_R Test_1_R0 A 110 0 -0.433937 -1 1 -0.160552 01 A 160 0 0.744434 -1 1 1.754213 02 A 160 1 1.754213 0 2 0.000850 13 C 40 0 0.342243 -1 1 1.070599 0

可视化

可视化 文档。

用 Pandas、Vincent、xlsxwriter 生成 Excel 文件里的嵌入可视图

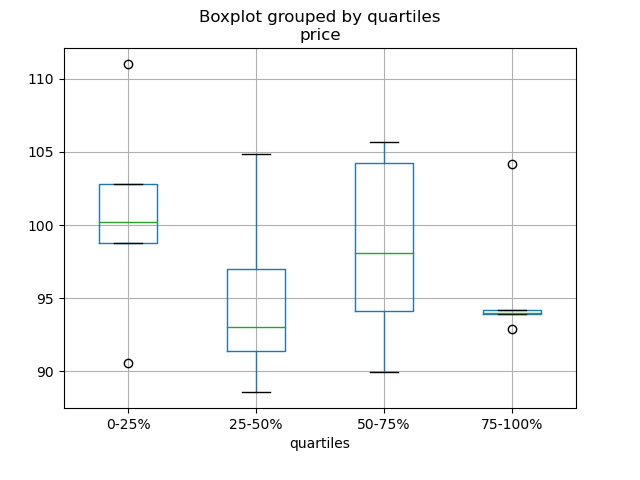

In [186]: df = pd.DataFrame(.....: {'stratifying_var': np.random.uniform(0, 100, 20),.....: 'price': np.random.normal(100, 5, 20)}).....:In [187]: df['quartiles'] = pd.qcut(.....: df['stratifying_var'],.....: 4,.....: labels=['0-25%', '25-50%', '50-75%', '75-100%']).....:In [188]: df.boxplot(column='price', by='quartiles')Out[188]: <matplotlib.axes._subplots.AxesSubplot at 0x7efff077f910>

数据输入输出

CSV

CSV文档

读取不是 gzip 或 bz2 压缩(read_csv 可识别的内置压缩格式)的文件。本例在介绍如何读取 WinZip 压缩文件的同时,还介绍了在环境管理器里打开文件,并读取内容的通用操作方式。详见本链接

从多个文件读取数据,创建单个 DataFrame

最好的方式是先一个个读取单个文件,然后再把每个文件的内容存成列表,再用 pd.concat() 组合成一个 DataFrame:

In [189]: for i in range(3):.....: data = pd.DataFrame(np.random.randn(10, 4)).....: data.to_csv('file_{}.csv'.format(i)).....:In [190]: files = ['file_0.csv', 'file_1.csv', 'file_2.csv']In [191]: result = pd.concat([pd.read_csv(f) for f in files], ignore_index=True)

还可以用同样的方法读取所有匹配同一模式的文件,下面这个例子使用的是glob:

In [192]: import globIn [193]: import osIn [194]: files = glob.glob('file_*.csv')In [195]: result = pd.concat([pd.read_csv(f) for f in files], ignore_index=True)

最后,这种方式也适用于 io 文档 介绍的其它 pd.read_* 函数。

解析多列里的日期组件

用一种格式解析多列的日期组件,速度更快。

In [196]: i = pd.date_range('20000101', periods=10000)In [197]: df = pd.DataFrame({'year': i.year, 'month': i.month, 'day': i.day})In [198]: df.head()Out[198]:year month day0 2000 1 11 2000 1 22 2000 1 33 2000 1 44 2000 1 5In [199]: %timeit pd.to_datetime(df.year * 10000 + df.month * 100 + df.day, format='%Y%m%d').....: ds = df.apply(lambda x: "%04d%02d%02d" % (x['year'],.....: x['month'], x['day']), axis=1).....: ds.head().....: %timeit pd.to_datetime(ds).....:10.6 ms +- 698 us per loop (mean +- std. dev. of 7 runs, 100 loops each)3.21 ms +- 36.4 us per loop (mean +- std. dev. of 7 runs, 100 loops each)

跳过标题与数据之间的行

In [200]: data = """;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: ;;;;.....: date;Param1;Param2;Param4;Param5.....: ;m²;°C;m²;m.....: ;;;;.....: 01.01.1990 00:00;1;1;2;3.....: 01.01.1990 01:00;5;3;4;5.....: 01.01.1990 02:00;9;5;6;7.....: 01.01.1990 03:00;13;7;8;9.....: 01.01.1990 04:00;17;9;10;11.....: 01.01.1990 05:00;21;11;12;13.....: """.....:

选项 1:显式跳过行

In [201]: from io import StringIOIn [202]: pd.read_csv(StringIO(data), sep=';', skiprows=[11, 12],.....: index_col=0, parse_dates=True, header=10).....:Out[202]:Param1 Param2 Param4 Param5date1990-01-01 00:00:00 1 1 2 31990-01-01 01:00:00 5 3 4 51990-01-01 02:00:00 9 5 6 71990-01-01 03:00:00 13 7 8 91990-01-01 04:00:00 17 9 10 111990-01-01 05:00:00 21 11 12 13

选项 2:读取列名,然后再读取数据

In [203]: pd.read_csv(StringIO(data), sep=';', header=10, nrows=10).columnsOut[203]: Index(['date', 'Param1', 'Param2', 'Param4', 'Param5'], dtype='object')In [204]: columns = pd.read_csv(StringIO(data), sep=';', header=10, nrows=10).columnsIn [205]: pd.read_csv(StringIO(data), sep=';', index_col=0,.....: header=12, parse_dates=True, names=columns).....:Out[205]:Param1 Param2 Param4 Param5date1990-01-01 00:00:00 1 1 2 31990-01-01 01:00:00 5 3 4 51990-01-01 02:00:00 9 5 6 71990-01-01 03:00:00 13 7 8 91990-01-01 04:00:00 17 9 10 111990-01-01 05:00:00 21 11 12 13

SQL

SQL 文档

Excel

Excel 文档

HTML

从不能处理默认请求 header 的服务器读取 HTML 表格

HDFStore

按块对大规模数据存储去重的本质是递归还原操作。这里介绍了一个函数,可以从 CSV 文件里按块提取数据,解析日期后,再按块存储。

把属性存至分组节点

In [206]: df = pd.DataFrame(np.random.randn(8, 3))In [207]: store = pd.HDFStore('test.h5')In [208]: store.put('df', df)# 用 pickle 存储任意 Python 对象In [209]: store.get_storer('df').attrs.my_attribute = {'A': 10}In [210]: store.get_storer('df').attrs.my_attributeOut[210]: {'A': 10}

二进制文件

读取 C 结构体数组组成的二进制文件,Pandas 支持 NumPy 记录数组。 比如说,名为 main.c 的文件包含下列 C 代码,并在 64 位机器上用 gcc main.c -std=gnu99 进行编译。

#include <stdio.h>#include <stdint.h>typedef struct _Data{int32_t count;double avg;float scale;} Data;int main(int argc, const char *argv[]){size_t n = 10;Data d[n];for (int i = 0; i < n; ++i){d[i].count = i;d[i].avg = i + 1.0;d[i].scale = (float) i + 2.0f;}FILE *file = fopen("binary.dat", "wb");fwrite(&d, sizeof(Data), n, file);fclose(file);return 0;}

下列 Python 代码读取二进制二建 binary.dat,并将之存为 pandas DataFrame,每个结构体的元素对应 DataFrame 里的列:

names = 'count', 'avg', 'scale'# 注意:因为结构体填充,位移量比类型尺寸大offsets = 0, 8, 16formats = 'i4', 'f8', 'f4'dt = np.dtype({'names': names, 'offsets': offsets, 'formats': formats},align=True)df = pd.DataFrame(np.fromfile('binary.dat', dt))

::: tip 注意

不同机器上创建的文件因其架构不同,结构化元素的位移量也不同,原生二进制格式文件不能跨平台使用,因此不建议作为通用数据存储格式。建议用 Pandas IO 功能支持的 HDF5 或 msgpack 文件。

:::

计算

相关性

用 DataFrame.corr() 计算得出的相关矩阵的下(或上)三角形式一般都非常有用。下例通过把布尔掩码传递给 where 可以实现这一功能:

In [211]: df = pd.DataFrame(np.random.random(size=(100, 5)))In [212]: corr_mat = df.corr()In [213]: mask = np.tril(np.ones_like(corr_mat, dtype=np.bool), k=-1)In [214]: corr_mat.where(mask)Out[214]:0 1 2 3 40 NaN NaN NaN NaN NaN1 -0.018923 NaN NaN NaN NaN2 -0.076296 -0.012464 NaN NaN NaN3 -0.169941 -0.289416 0.076462 NaN NaN4 0.064326 0.018759 -0.084140 -0.079859 NaN

除了命名相关类型之外,DataFrame.corr 还接受回调,此处计算 DataFrame 对象的距离相关矩阵。

In [215]: def distcorr(x, y):.....: n = len(x).....: a = np.zeros(shape=(n, n)).....: b = np.zeros(shape=(n, n)).....: for i in range(n):.....: for j in range(i + 1, n):.....: a[i, j] = abs(x[i] - x[j]).....: b[i, j] = abs(y[i] - y[j]).....: a += a.T.....: b += b.T.....: a_bar = np.vstack([np.nanmean(a, axis=0)] * n).....: b_bar = np.vstack([np.nanmean(b, axis=0)] * n).....: A = a - a_bar - a_bar.T + np.full(shape=(n, n), fill_value=a_bar.mean()).....: B = b - b_bar - b_bar.T + np.full(shape=(n, n), fill_value=b_bar.mean()).....: cov_ab = np.sqrt(np.nansum(A * B)) / n.....: std_a = np.sqrt(np.sqrt(np.nansum(A**2)) / n).....: std_b = np.sqrt(np.sqrt(np.nansum(B**2)) / n).....: return cov_ab / std_a / std_b.....:In [216]: df = pd.DataFrame(np.random.normal(size=(100, 3)))In [217]: df.corr(method=distcorr)Out[217]:0 1 20 1.000000 0.199653 0.2148711 0.199653 1.000000 0.1951162 0.214871 0.195116 1.000000

时间差

时间差文档。

In [218]: import datetimeIn [219]: s = pd.Series(pd.date_range('2012-1-1', periods=3, freq='D'))In [220]: s - s.max()Out[220]:0 -2 days1 -1 days2 0 daysdtype: timedelta64[ns]In [221]: s.max() - sOut[221]:0 2 days1 1 days2 0 daysdtype: timedelta64[ns]In [222]: s - datetime.datetime(2011, 1, 1, 3, 5)Out[222]:0 364 days 20:55:001 365 days 20:55:002 366 days 20:55:00dtype: timedelta64[ns]In [223]: s + datetime.timedelta(minutes=5)Out[223]:0 2012-01-01 00:05:001 2012-01-02 00:05:002 2012-01-03 00:05:00dtype: datetime64[ns]In [224]: datetime.datetime(2011, 1, 1, 3, 5) - sOut[224]:0 -365 days +03:05:001 -366 days +03:05:002 -367 days +03:05:00dtype: timedelta64[ns]In [225]: datetime.timedelta(minutes=5) + sOut[225]:0 2012-01-01 00:05:001 2012-01-02 00:05:002 2012-01-03 00:05:00dtype: datetime64[ns]

In [226]: deltas = pd.Series([datetime.timedelta(days=i) for i in range(3)])In [227]: df = pd.DataFrame({'A': s, 'B': deltas})In [228]: dfOut[228]:A B0 2012-01-01 0 days1 2012-01-02 1 days2 2012-01-03 2 daysIn [229]: df['New Dates'] = df['A'] + df['B']In [230]: df['Delta'] = df['A'] - df['New Dates']In [231]: dfOut[231]:A B New Dates Delta0 2012-01-01 0 days 2012-01-01 0 days1 2012-01-02 1 days 2012-01-03 -1 days2 2012-01-03 2 days 2012-01-05 -2 daysIn [232]: df.dtypesOut[232]:A datetime64[ns]B timedelta64[ns]New Dates datetime64[ns]Delta timedelta64[ns]dtype: object

与 datetime 类似,用 np.nan 可以把值设为 NaT。

In [233]: y = s - s.shift()In [234]: yOut[234]:0 NaT1 1 days2 1 daysdtype: timedelta64[ns]In [235]: y[1] = np.nanIn [236]: yOut[236]:0 NaT1 NaT2 1 daysdtype: timedelta64[ns]

轴别名

设置全局轴别名,可以定义以下两个函数:

In [237]: def set_axis_alias(cls, axis, alias):.....: if axis not in cls._AXIS_NUMBERS:.....: raise Exception("invalid axis [%s] for alias [%s]" % (axis, alias)).....: cls._AXIS_ALIASES[alias] = axis.....:In [238]: def clear_axis_alias(cls, axis, alias):.....: if axis not in cls._AXIS_NUMBERS:.....: raise Exception("invalid axis [%s] for alias [%s]" % (axis, alias)).....: cls._AXIS_ALIASES.pop(alias, None).....:In [239]: set_axis_alias(pd.DataFrame, 'columns', 'myaxis2')In [240]: df2 = pd.DataFrame(np.random.randn(3, 2), columns=['c1', 'c2'],.....: index=['i1', 'i2', 'i3']).....:In [241]: df2.sum(axis='myaxis2')Out[241]:i1 -0.461013i2 2.040016i3 0.904681dtype: float64In [242]: clear_axis_alias(pd.DataFrame, 'columns', 'myaxis2')

创建示例数据

类似 R 的 expand.grid() 函数,用不同类型的值组生成 DataFrame,需要创建键是列名,值是数据值列表的字典:

In [243]: def expand_grid(data_dict):.....: rows = itertools.product(*data_dict.values()).....: return pd.DataFrame.from_records(rows, columns=data_dict.keys()).....:In [244]: df = expand_grid({'height': [60, 70],.....: 'weight': [100, 140, 180],.....: 'sex': ['Male', 'Female']}).....:In [245]: dfOut[245]:height weight sex0 60 100 Male1 60 100 Female2 60 140 Male3 60 140 Female4 60 180 Male5 60 180 Female6 70 100 Male7 70 100 Female8 70 140 Male9 70 140 Female10 70 180 Male11 70 180 Female