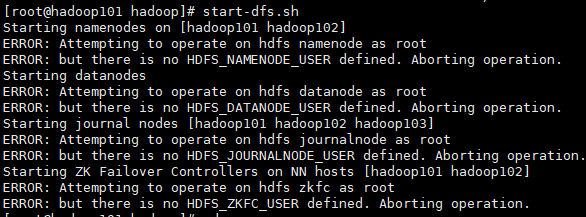

    [root@hadoop101 hadoop]# start-dfs.sh
    Starting namenodes on [hadoop101 hadoop102]
    ERROR: Attempting to operate on hdfs namenode as root
    ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.
    Starting datanodes
    ERROR: Attempting to operate on hdfs datanode as root
    ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.
    Starting journal nodes [hadoop101 hadoop102 hadoop103]
    ERROR: Attempting to operate on hdfs journalnode as root
    ERROR: but there is no HDFS_JOURNALNODE_USER defined. Aborting operation.
    Starting ZK Failover Controllers on NN hosts [hadoop101 hadoop102]
    ERROR: Attempting to operate on hdfs zkfc as root
    ERROR: but there is no HDFS_ZKFC_USER defined. Aborting operation.

Locate the sbin folder under the Hadoop installation directory.

Four files in that folder need to be modified.

For start-dfs.sh and stop-dfs.sh, add the following parameters at the top of the file, right after the shebang line:

    #!/usr/bin/env bash
    HDFS_DATANODE_USER=root
    HDFS_JOURNALNODE_USER=root
    HDFS_DATANODE_SECURE_USER=hdfs
    HDFS_NAMENODE_USER=root
    HDFS_ZKFC_USER=root

For start-yarn.sh and stop-yarn.sh, add the following parameters at the top of the file, right after the shebang line:

    #!/usr/bin/env bash
    YARN_RESOURCEMANAGER_USER=root
    HADOOP_SECURE_DN_USER=yarn
    YARN_NODEMANAGER_USER=root
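
The four edits can also be scripted rather than made by hand. The following is only a minimal sketch: the helper insert_after_shebang is a hypothetical name, and the SBIN_DIR default (/opt/hadoop/sbin) is an assumption, so point it at the sbin folder of your own installation. The helper places each VAR=VALUE line directly after the first line of a script and skips any variable the script already defines, so it should be safe to run more than once.

```shell
#!/usr/bin/env bash
# Assumption: SBIN_DIR is the sbin folder of the Hadoop installation.
# The /opt/hadoop default is a placeholder, not a path from the article.
SBIN_DIR="${SBIN_DIR:-${HADOOP_HOME:-/opt/hadoop}/sbin}"

# insert_after_shebang FILE VAR=VALUE...
# Hypothetical helper: writes each VAR=VALUE line right after line 1
# (the shebang) of FILE, unless FILE already defines VAR.
insert_after_shebang() {
  file="$1"; shift
  if [ ! -f "$file" ]; then
    echo "skip: $file not found" >&2
    return 0
  fi
  tmp="$(mktemp)"
  head -n 1 "$file" > "$tmp"                 # keep the shebang first
  for line in "$@"; do
    # ${line%%=*} is the variable name (text before the first '=')
    grep -q "^${line%%=*}=" "$file" || echo "$line" >> "$tmp"
  done
  tail -n +2 "$file" >> "$tmp"               # rest of the original script
  cat "$tmp" > "$file"                       # overwrite, keeping permissions
  rm -f "$tmp"
}

for f in start-dfs.sh stop-dfs.sh; do
  insert_after_shebang "$SBIN_DIR/$f" \
    HDFS_DATANODE_USER=root HDFS_JOURNALNODE_USER=root \
    HDFS_DATANODE_SECURE_USER=hdfs HDFS_NAMENODE_USER=root HDFS_ZKFC_USER=root
done

for f in start-yarn.sh stop-yarn.sh; do
  insert_after_shebang "$SBIN_DIR/$f" \
    YARN_RESOURCEMANAGER_USER=root HADOOP_SECURE_DN_USER=yarn \
    YARN_NODEMANAGER_USER=root
done
```

It is worth backing up the four scripts before running something like this, since the files are overwritten in place.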

Run start… again and the errors should be gone.