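XDP comes with many "methods" and "modes". Main references: https://isovalent.com/blog/post/2021-12-release-111 and https://www.tigera.io/learn/guides/ebpf/ebpf-xdp/

Note: Cilium is evolving quickly, so any discussion of its features has to name a version. Everything below is based on Cilium 1.11.

XDP hardware requirements:

- A NIC with multiple queues.
- The usual protocol offloads:
  - TX/RX checksum offload: the NIC computes checksums instead of the CPU.
  - Receive Side Scaling (RSS): a network driver technique that efficiently distributes received packets across multiple processors or processor cores for processing.
  - TCP Segmentation Offload (TSO): the NIC, rather than the CPU, splits large packets into segments, which reduces CPU load.
- Ideally, LRO and aRFS support as well. (Note that some drivers, mlx5 for example, refuse to attach an XDP program while LRO is enabled, so LRO is usually switched off on XDP interfaces.)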
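
RSS is the one item on this list that is easy to show in code. Below is a minimal user-space sketch of the Toeplitz hash, the hash function commonly used for RSS, followed by a queue-selection step. The function names are hypothetical. The plain `hash % nqueues` mapping is a simplification: real NICs look the low bits of the hash up in an indirection table.

```c
#include <stddef.h>
#include <stdint.h>

/* Toeplitz hash as commonly used for RSS (user-space sketch).
 * For every set bit of the input, taken MSB first, XOR the result with the
 * 32-bit window of the key that starts at that bit position.
 * Assumes key_len >= 4; key bits past the end of the key are treated as 0. */
uint32_t toeplitz_hash(const uint8_t *key, size_t key_len,
                       const uint8_t *input, size_t input_len)
{
    uint32_t result = 0;
    /* Current 32-bit key window, initialised to the first 4 key bytes. */
    uint32_t window = (uint32_t)key[0] << 24 | (uint32_t)key[1] << 16 |
                      (uint32_t)key[2] << 8 | (uint32_t)key[3];
    size_t next_bit = 32; /* index of the next key bit to shift in */

    for (size_t i = 0; i < input_len; i++) {
        for (int b = 7; b >= 0; b--) {
            if (input[i] & (1u << b))
                result ^= window;
            /* Slide the window left by one bit, pulling in the next key bit. */
            uint32_t in = 0;
            if (next_bit < key_len * 8)
                in = (key[next_bit / 8] >> (7 - next_bit % 8)) & 1u;
            window = window << 1 | in;
            next_bit++;
        }
    }
    return result;
}

/* Hypothetical queue selection: real NICs index an indirection table
 * with the low bits of the hash instead of taking a plain modulo. */
unsigned rss_pick_queue(uint32_t hash, unsigned nqueues)
{
    return nqueues ? hash % nqueues : 0;
}
```

For TCP over IPv4, the hash input is usually the source address, destination address, source port and destination port, concatenated in network byte order. The same flow always produces the same hash, so all of its packets land on the same RX queue. They are therefore typically handled by the same CPU, which runs the XDP program for that queue.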

- [x] **1.XDP Mode**

> XDP programs can be directly attached to a network interface. Whenever a new packet is received on the network interface, XDP programs receive a callback, and can perform operations on the packet very quickly. You can connect an XDP program to an interface using the following models:

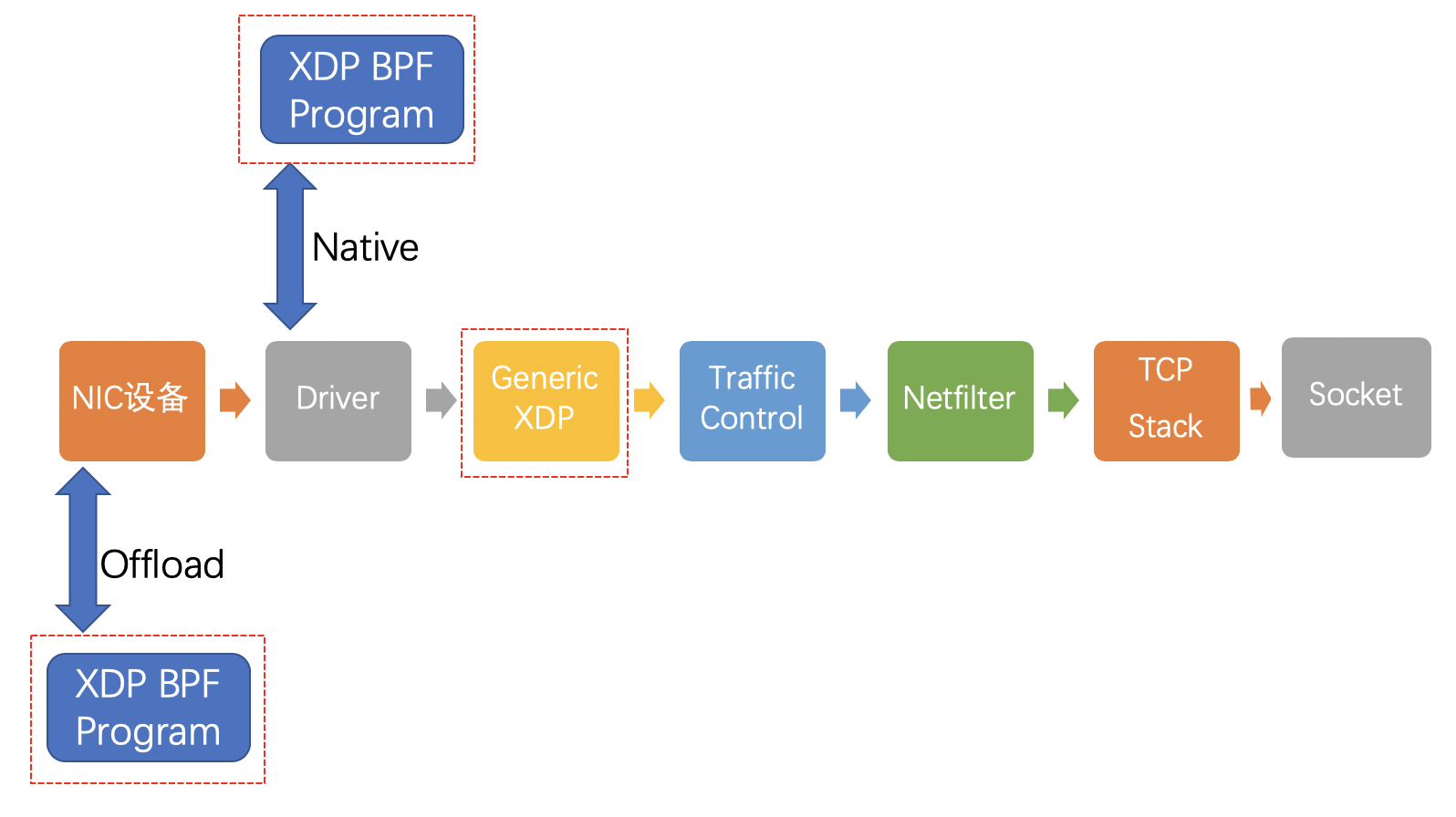

> 1. Generic XDP – XDP programs are loaded into the kernel as part of the ordinary network path. This does not provide full performance benefits, but is an easy way to test XDP programs or run them on generic hardware that does not provide specific support for XDP.
> 2. Native XDP – The XDP program is loaded by the network card driver as part of its initial receive path. This also requires support from the network card driver.
> 3. Offloaded XDP – The XDP program loads directly on the NIC, and executes without using the CPU. This requires support from the network interface device.

The above is the description of the currently available modes from the Calico (Tigera) website.

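Let's study this more closely. For the logic, refer to the datapath diagram of a regular Linux system with XDP added:

1. `xdpgeneric` means generic XDP. It is intended for experimental testing with drivers that do not yet support XDP natively. The generic XDP hook sits on the main receive path of the kernel network stack and receives packets as skbs. Because the hook sits much later on the ingress path, performance is noticeably lower than with native XDP. As a result, `xdpgeneric` is mostly used for experiments and rarely in production.

That said, claims like this need side-by-side comparisons backed by benchmarks. When one hook sits after another, it is tempting to assume they are "far apart." In practice, benchmarks often show the gap is smaller than expected. Measuring this is worth pursuing, but all things considered, we still recommend one mode for production use.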

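2. `xdpdrv` means native XDP. The BPF program runs directly on the driver's receive path, which in theory is the earliest point at which software can process a packet. This is the regular, traditional XDP mode. It requires the driver to implement XDP support, which the mainstream 10G/40G NIC drivers in the Linux kernel already do. Because the in-tree drivers already support it, adopting this mode should be relatively cheap, so it is the mode we normally use for traffic processing in our environment.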

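3. `xdpoffload`: some SmartNICs (for example, those using Netronome's nfp driver) implement the `xdpoffload` mode. It offloads the entire BPF/XDP program to hardware, so packets are processed on the NIC as soon as they arrive. This gives even higher performance than native XDP. However, some BPF map types and BPF helper functions are unavailable in this mode, and the BPF verifier rejects such programs with an error that says what is unsupported. Apart from those unsupported BPF features, it behaves the same as native XDP. This mode depends on the NIC's own capabilities, so adopting it is likely to cost more. SmartNICs have become increasingly common in recent years, though, so this space is worth watching closely.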
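
The three modes map onto attach flags defined in the kernel UAPI header `<linux/if_link.h>`. iproute2 exposes the same choice as `ip link set dev <ifname> xdpgeneric|xdpdrv|xdpoffload obj <file>`, and libbpf's `bpf_xdp_attach()` takes the flags directly. The sketch below copies the flag values locally so it compiles without kernel headers. The helper `xdp_mode_flags()` is hypothetical and exists only to show which flag each mode corresponds to.

```c
#include <stdint.h>
#include <string.h>

/* Local copies of the attach flags from <linux/if_link.h>;
 * the values match the upstream UAPI definitions. */
#define XDP_FLAGS_UPDATE_IF_NOEXIST (1U << 0) /* fail if a program is already attached */
#define XDP_FLAGS_SKB_MODE          (1U << 1) /* generic XDP   (xdpgeneric) */
#define XDP_FLAGS_DRV_MODE          (1U << 2) /* native XDP    (xdpdrv)     */
#define XDP_FLAGS_HW_MODE           (1U << 3) /* offloaded XDP (xdpoffload) */

/* Hypothetical helper: translate an iproute2-style mode keyword into the
 * flag word a loader would pass when attaching the program. An unknown
 * keyword sets no mode bit, leaving the choice of mode to the kernel. */
uint32_t xdp_mode_flags(const char *mode, int only_if_unattached)
{
    uint32_t flags = 0;

    if (strcmp(mode, "xdpgeneric") == 0)
        flags = XDP_FLAGS_SKB_MODE;
    else if (strcmp(mode, "xdpdrv") == 0)
        flags = XDP_FLAGS_DRV_MODE;
    else if (strcmp(mode, "xdpoffload") == 0)
        flags = XDP_FLAGS_HW_MODE;

    if (only_if_unattached)
        flags |= XDP_FLAGS_UPDATE_IF_NOEXIST;
    return flags;
}
```

Passing no mode flag at all, which is what iproute2's plain `xdp` keyword does, lets the kernel choose: native mode if the driver supports it, generic mode otherwise.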

- [x] **2.XDP Function**

> Here are some of the operations an XDP program can perform with the packets it receives, once it is connected to a network interface:
>
> XDP_DROP – Drops and does not process the packet. eBPF programs can analyze traffic patterns and use filters to update the XDP application in real time to drop specific types of packets (for example, malicious traffic).
>
> XDP_PASS – Indicates that the packet should be forwarded to the normal network stack for further processing. The XDP program can modify the content of the packet before this happens.
>
> XDP_TX – Forwards the packet (which may have been modified) to the same network interface that received it.
>
> XDP_REDIRECT – Bypasses the normal network stack and redirects the packet via another NIC to the network.
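
The following is a rough illustration of XDP_DROP and XDP_PASS: a user-space C sketch of the decision logic of a simple source-address blocklist filter. It does not use the real BPF API. `struct pkt_ctx` is a simplified stand-in for the kernel's `struct xdp_md`. The blocklist is a hard-coded array where a real program would use a BPF map, and `filter_packet()` is a hypothetical name. The return-code values match `enum xdp_action` in `<linux/bpf.h>`.

```c
#include <stddef.h>
#include <stdint.h>

/* Return codes, numbered as in enum xdp_action from <linux/bpf.h>. */
enum xdp_action { XDP_ABORTED = 0, XDP_DROP, XDP_PASS, XDP_TX, XDP_REDIRECT };

/* Simplified stand-in for struct xdp_md: only the [data, data_end) range. */
struct pkt_ctx {
    const uint8_t *data;
    const uint8_t *data_end;
};

#define ETH_HLEN      14     /* Ethernet header length */
#define IPV4_MIN_HLEN 20     /* IPv4 header without options */
#define ETHERTYPE_IP  0x0800

/* Hypothetical blocklist; a real program would keep this in a BPF map
 * that a user-space agent updates at run time. */
static const uint32_t blocked_saddrs[] = {
    0xC0000201u, /* 192.0.2.1 */
};

enum xdp_action filter_packet(const struct pkt_ctx *ctx)
{
    const uint8_t *p = ctx->data;

    /* Bounds-check before reading, as the BPF verifier would demand. */
    if ((size_t)(ctx->data_end - p) < (size_t)(ETH_HLEN + IPV4_MIN_HLEN))
        return XDP_PASS; /* too short for Ethernet + IPv4: leave it to the stack */
    if ((p[12] << 8 | p[13]) != ETHERTYPE_IP)
        return XDP_PASS; /* not IPv4 */

    const uint8_t *ip = p + ETH_HLEN;
    /* IPv4 source address: header bytes 12..15, network byte order. */
    uint32_t saddr = (uint32_t)ip[12] << 24 | (uint32_t)ip[13] << 16 |
                     (uint32_t)ip[14] << 8 | (uint32_t)ip[15];

    for (size_t i = 0; i < sizeof blocked_saddrs / sizeof blocked_saddrs[0]; i++)
        if (saddr == blocked_saddrs[i])
            return XDP_DROP; /* blocked source: drop before the stack sees it */
    return XDP_PASS;
}
```

In a real XDP program, equivalent logic would be compiled with clang for the BPF target and attached to a NIC. The length checks would still be needed, because the BPF verifier rejects any packet access it cannot prove stays below `data_end`.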

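XDP_DROP: drop the packet outright.

XDP_ABORTED: also drops the packet, but additionally signals an eBPF program error that can be observed with debugging tools (it fires the `xdp:xdp_exception` tracepoint).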

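XDP_TX: send the processed packet back out of the NIC it arrived on (the "same network interface that received it"). This is what allows a load balancer to run in hair-pinning mode.

// A quick note on hair-pinning: in one sentence, a packet is received on a port and sent back out of that same port. Before Cilium 1.11, this was the only mode in which Cilium's XDP-based load-balancer acceleration could run. Cilium 1.11 lifts that restriction, as described below.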

XDP_PASS: hand the processed packet to the kernel network stack.
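
The hair-pinning use of XDP_TX can be sketched as a minimal reflector. It swaps the Ethernet source and destination MAC addresses in place and returns XDP_TX, so the frame leaves through the NIC it arrived on. This is a user-space sketch that assumes what a real XDP program gets: a writable frame buffer and its length. `hairpin_reflect()` is a hypothetical name. A real load balancer would also rewrite or encapsulate the IP packet toward the chosen backend before returning XDP_TX.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Return codes, numbered as in enum xdp_action from <linux/bpf.h>. */
enum xdp_action { XDP_ABORTED = 0, XDP_DROP, XDP_PASS, XDP_TX, XDP_REDIRECT };

#define ETH_ALEN 6   /* MAC address length */
#define ETH_HLEN 14  /* dst MAC + src MAC + EtherType */

/* Hypothetical hair-pin reflector: swap the MACs, bounce the frame back. */
enum xdp_action hairpin_reflect(uint8_t *data, size_t len)
{
    if (len < ETH_HLEN)
        return XDP_PASS; /* not a complete Ethernet header */

    uint8_t tmp[ETH_ALEN];
    memcpy(tmp, data, ETH_ALEN);             /* save old destination MAC */
    memcpy(data, data + ETH_ALEN, ETH_ALEN); /* dst <- old source        */
    memcpy(data + ETH_ALEN, tmp, ETH_ALEN);  /* src <- old destination   */
    return XDP_TX;                           /* send out of the same NIC */
}
```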

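XDP_REDIRECT is a bit more involved. It needs an extra argument that specifies the redirect destination. The program sets that argument through a helper function (`bpf_redirect()` or `bpf_redirect_map()`) before it returns. This design makes redirect easy to extend: supporting a new destination only requires a new argument value, not a new return code. The currently supported destinations are: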

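- A different NIC, including virtual NICs attached to VMs or containers. This requires support in the NIC's Linux kernel driver. Today, though, the vast majority of 40G and 100G+ upstream NIC drivers support XDP_REDIRECT out of the box.
- A different CPU, for further processing.
- A specific user-space socket (AF_XDP). This lets XDP bypass the kernel network stack entirely and, combined with zero-copy, reduces packet-processing overhead even further.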

This is the AF_XDP mode. For details, see my earlier article: https://www.yuque.com/docs/share/eb731827-58f1-42d2-b3a4-59398340fb2c?# 《7.Why We Always Compare XDP with DPDK?》
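
The "extra argument set through a helper" design can be modelled in a few lines. In a real program the call is `bpf_redirect_map(&map, key, flags)`, and the program returns the helper's result. The three destinations listed above each use their own map type: `BPF_MAP_TYPE_DEVMAP`, `BPF_MAP_TYPE_CPUMAP` or `BPF_MAP_TYPE_XSKMAP`. The sketch below is a user-space model, not the kernel API. `struct redirect_map`, `struct redirect_state` and `model_redirect_map()` are hypothetical. The miss behaviour, which returns the low two bits of `flags` as a fallback action, follows the documented semantics of `bpf_redirect_map()` on recent kernels.

```c
#include <stdint.h>

/* Return codes, numbered as in enum xdp_action from <linux/bpf.h>. */
enum xdp_action { XDP_ABORTED = 0, XDP_DROP, XDP_PASS, XDP_TX, XDP_REDIRECT };

/* The three destination kinds, each backed by its own BPF map type. */
enum redirect_kind {
    REDIR_DEV, /* BPF_MAP_TYPE_DEVMAP: another (possibly virtual) NIC */
    REDIR_CPU, /* BPF_MAP_TYPE_CPUMAP: another CPU                     */
    REDIR_XSK  /* BPF_MAP_TYPE_XSKMAP: an AF_XDP socket                */
};

/* Hypothetical toy map: slot -> target (ifindex, CPU id or socket id).
 * A negative value marks an empty slot. */
struct redirect_map {
    enum redirect_kind kind;
    int slots[8];
};

/* State the helper records so that the destination can be acted on
 * after the program returns. */
struct redirect_state {
    enum redirect_kind kind;
    int target;
};

/* User-space model of bpf_redirect_map(): on a hit, record the destination
 * and return XDP_REDIRECT; on a miss, return the fallback action encoded
 * in the low two bits of flags. */
enum xdp_action model_redirect_map(const struct redirect_map *map, uint32_t key,
                                   uint64_t flags, struct redirect_state *st)
{
    if (key < sizeof map->slots / sizeof map->slots[0] && map->slots[key] >= 0) {
        st->kind = map->kind;
        st->target = map->slots[key];
        return XDP_REDIRECT;
    }
    return (enum xdp_action)(flags & 0x3);
}
```

Adding a new kind of destination then only means adding a new map type. The return code stays XDP_REDIRECT, which is the extensibility described above.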

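// One more point: current network-acceleration approaches fall into two broad camps. 1. Full kernel bypass, represented by DPDK (DPDK + VPP + SR-IOV + Multus). 2. The XDP-based approach, represented by AF_XDP.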

The following is from the Cilium 1.11 release announcement: https://isovalent.com/blog/post/2021-12-release-111

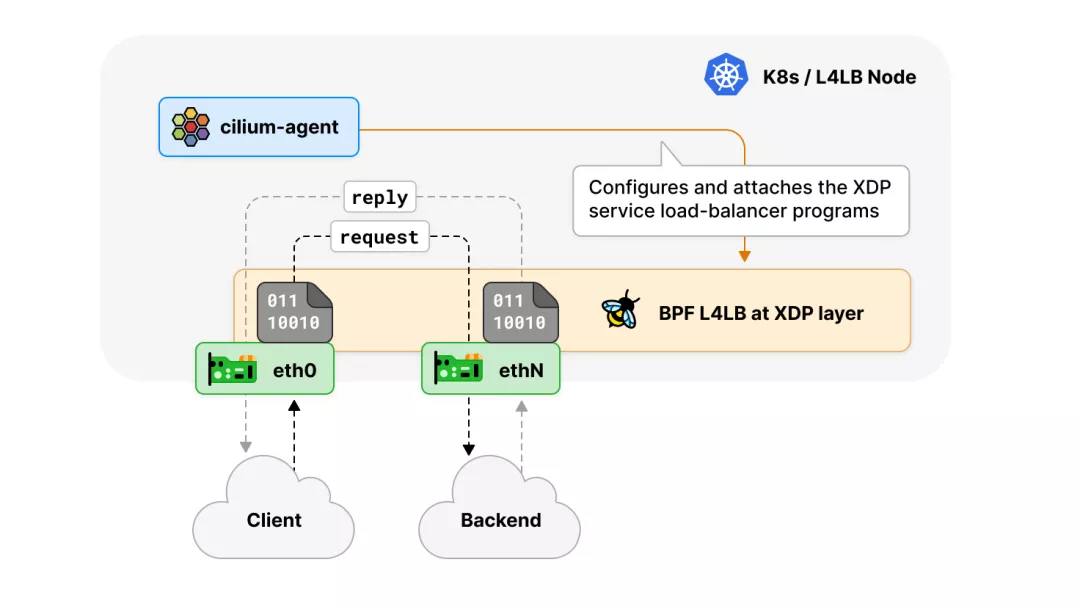

**XDP Multi-Device Load-Balancer Support**

Up until this release, the XDP-based load-balancer acceleration could only be enabled on a single networking device in order to operate as a hair-pinning load-balancer (where forwarded packets leave through the same device they arrived on). The reason for this initial constraint which was added with the XDP-based kube-proxy replacement acceleration was due to limited driver support for multi-device forwarding under XDP (XDP_REDIRECT) whereas same-device forwarding (XDP_TX) is part of every driver's initial XDP support in the Linux kernel.

This meant that environments with multiple networking devices had to use Cilium's regular kube-proxy replacement for the tc eBPF layer. One typical example of such an environment is a host with two network devices: One of which faces a public network (e.g., accepting requests to a Kubernetes service from the outside), while the other one faces a private network (e.g., used for in-cluster communication among Kubernetes nodes).

Given nowadays on modern LTS Linux kernels the vast majority of 40G and 100G+ upstream NIC drivers support XDP_REDIRECT out of the box, this constraint can finally be lifted, thus this release implements load-balancing support among multiple network devices in the XDP layer for Cilium's kube-proxy replacement as well as Cilium's standalone load balancer, which allows keeping packet processing performance high also for more complex environments.
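
The difference between the hair-pinning load balancer and the multi-device one comes down to the last step of the forwarding decision. The sketch below illustrates that step under stated assumptions and is not Cilium's code. Once a backend has been chosen (the service lookup itself is omitted), the packet is bounced with XDP_TX if the backend is reachable through the ingress device. Otherwise it is handed to another device with XDP_REDIRECT. `struct backend` and `lb_forward()` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Return codes, numbered as in enum xdp_action from <linux/bpf.h>. */
enum xdp_action { XDP_ABORTED = 0, XDP_DROP, XDP_PASS, XDP_TX, XDP_REDIRECT };

/* Hypothetical result of the service lookup. */
struct backend {
    uint32_t addr;      /* backend IPv4 address (host byte order) */
    int egress_ifindex; /* device whose route reaches the backend */
};

/* Hair-pin with XDP_TX when the backend sits behind the ingress device
 * (supported by every XDP driver); otherwise use XDP_REDIRECT, which
 * depends on the driver's XDP_REDIRECT support. */
enum xdp_action lb_forward(int ingress_ifindex, const struct backend *be,
                           int *redirect_ifindex)
{
    if (be == NULL)
        return XDP_PASS; /* not a service packet: let the stack handle it */
    if (be->egress_ifindex == ingress_ifindex)
        return XDP_TX;
    *redirect_ifindex = be->egress_ifindex;
    return XDP_REDIRECT;
}
```

Take the two-NIC example above. A request arrives on the public-facing device for a backend reachable via the private device, so it takes the XDP_REDIRECT branch. That is exactly the path that depends on the drivers' XDP_REDIRECT support.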