ENV:

    kubeadm init --kubernetes-version=v1.20.5 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --skip-phases=addon/kube-proxy --ignore-preflight-errors=Swap

    [root@dev3 ~]# kubectl get nodes -o wide
    NAME   STATUS   ROLES                  AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION               CONTAINER-RUNTIME
    dev1   Ready    control-plane,master   30d   v1.20.5   192.168.2.31   <none>        CentOS Linux 7 (Core)   5.15.8-1.el7.elrepo.x86_64   docker://20.10.12
    dev2   Ready    <none>                 30d   v1.20.5   192.168.2.32   <none>        CentOS Linux 7 (Core)   5.15.8-1.el7.elrepo.x86_64   docker://20.10.12
    dev3   Ready    <none>                 30d   v1.20.5   192.168.2.33   <none>        CentOS Linux 7 (Core)   5.15.8-1.el7.elrepo.x86_64   docker://20.10.12
    [root@dev3 ~]#

1. Add the repo:

        helm repo add cilium https://helm.cilium.io/

2. Generate the installation template:

        helm template cilium cilium/cilium --version 1.10.6 \
          --namespace kube-system \
          --set kubeProxyReplacement=strict \
          --set k8sServiceHost=192.168.2.31 \
          --set k8sServicePort=6443 > 1.10.6.yaml

3. Add the following parameters to set the log level to debug:

        debug-verbose: "datapath"
        debug: "true"
        monitor-aggregation: "none"

4. Check the Cilium status:

        root@dev1:/home/cilium# cilium status
        KVStore:                Ok   Disabled
        Kubernetes:             Ok   1.20 (v1.20.5) [linux/amd64]
        Kubernetes APIs:        ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1beta1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]
        KubeProxyReplacement:   Strict   [ens33 192.168.2.31 (Direct Routing)]
        Cilium:                 Ok   1.10.6 (v1.10.6-17d3d15)
        NodeMonitor:            Listening for events on 128 CPUs with 64x4096 of shared memory
        Cilium health daemon:   Ok
        IPAM:                   IPv4: 2/254 allocated from 10.244.0.0/24,
        BandwidthManager:       Disabled
        [Host Routing:          Legacy]
        Masquerading:           IPTables [IPv4: Enabled, IPv6: Disabled]
        Controller Status:      20/20 healthy
        Proxy Status:           OK, ip 10.244.0.146, 0 redirects active on ports 10000-20000
        Hubble:                 Ok   Current/Max Flows: 4095/4095 (100.00%), Flows/s: 23.85   Metrics: Disabled
        Encryption:             Disabled
        Cluster health:         3/3 reachable   (2022-01-16T10:34:13Z)
        root@dev1:/home/cilium#
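Before applying the manifest, it can be worth confirming that the three debug keys from step 3 are really in it. The helper below is a minimal sketch that assumes the keys were added to the rendered `1.10.6.yaml`; the source does not say exactly where they go. The name `check_debug_keys` is made up for illustration, and it does plain line matching rather than parsing YAML.

```shell
# Hypothetical helper: report whether the three debug keys from step 3
# appear in a rendered manifest with the values used in this walkthrough.
# Plain text matching only; this is not a YAML parser.
check_debug_keys() {
  local manifest="$1" missing=0 entry
  for entry in 'debug-verbose: "datapath"' 'debug: "true"' 'monitor-aggregation: "none"'; do
    # Allow leading indentation, but require the whole line to match.
    if grep -Eq "^[[:space:]]*${entry}[[:space:]]*\$" "$manifest"; then
      echo "ok: ${entry}"
    else
      echo "missing: ${entry}"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_debug_keys 1.10.6.yaml` prints one `ok:` or `missing:` line per key and returns non-zero if any key is absent.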

- 1. Pod-to-pod communication on the same node:

```properties

[root@dev1 kubernetes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-client-8497865645-j7dck 1/1 Running 0 11s 10.244.0.70 dev1

cni-same-7d599bc5d8-8xv54 1/1 Running 0 25s 10.244.0.228 dev1

[root@dev1 kubernetes]#

[root@dev1 kubernetes]# kubectl exec -it cni-client-8497865645-j7dck bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[1. Check the Pod's IP address:]

bash-5.1# ifconfig -a

eth0 Link encap:Ethernet HWaddr AE:89:8C:7F:1A:51

inet addr:10.244.0.70 Bcast:0.0.0.0 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:7 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:746 (746.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

[2. Check the routing table:]

bash-5.1# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.244.0.146 0.0.0.0 UG 0 0 0 eth0

10.244.0.146 0.0.0.0 255.255.255.255 UH 0 0 0 eth0

bash-5.1#

bash-5.1# ping 10.244.0.228

PING 10.244.0.228 (10.244.0.228): 56 data bytes

64 bytes from 10.244.0.228: seq=0 ttl=63 time=0.237 ms

^C

--- 10.244.0.228 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.237/0.237/0.237 ms

[3. Find the lxc interface that is the veth peer of eth0]

bash-5.1# ethtool -S eth0

NIC statistics:

peer_ifindex: 39

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_drops: 0

rx_queue_0_xdp_redirect: 0

rx_queue_0_xdp_drops: 0

rx_queue_0_xdp_tx: 0

rx_queue_0_xdp_tx_errors: 0

tx_queue_0_xdp_xmit: 0

tx_queue_0_xdp_xmit_errors: 0

bash-5.1#

39: lxcc61decd4948f@if38:

[4. Check the driver behind eth0]

bash-5.1# ethtool -i eth0

driver: veth

version: 1.0

firmware-version:

expansion-rom-version:

bus-info:

supports-statistics: yes

supports-test: no

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: no

bash-5.1#
```
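Step 3 resolves the host-side peer by hand: `ethtool -S eth0` inside the Pod prints `peer_ifindex`, and the host's interface list contains an entry with that index. The lookup can be sketched as follows. It assumes the `39: lxcc61decd4948f@if38:` line came from `ip link` on the host (the source does not show the command), and `peer_name` is a hypothetical helper name.

```shell
# Hypothetical helper: print the name of the interface whose index is $1,
# reading `ip -o link` style lines ("39: lxcc61decd4948f@if38: <...>") on stdin.
peer_name() {
  awk -v idx="$1" '
    {
      n = $1
      sub(/:$/, "", n)            # "39:" -> "39"
      if (n == idx) {
        name = $2
        sub(/:$/, "", name)       # drop the trailing colon
        sub(/@.*$/, "", name)     # drop the "@if38" peer suffix
        print name
        exit
      }
    }'
}
```

On the host this would be used as `ip -o link | peer_name 39`, where 39 is the `peer_ifindex` value reported inside the Pod.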

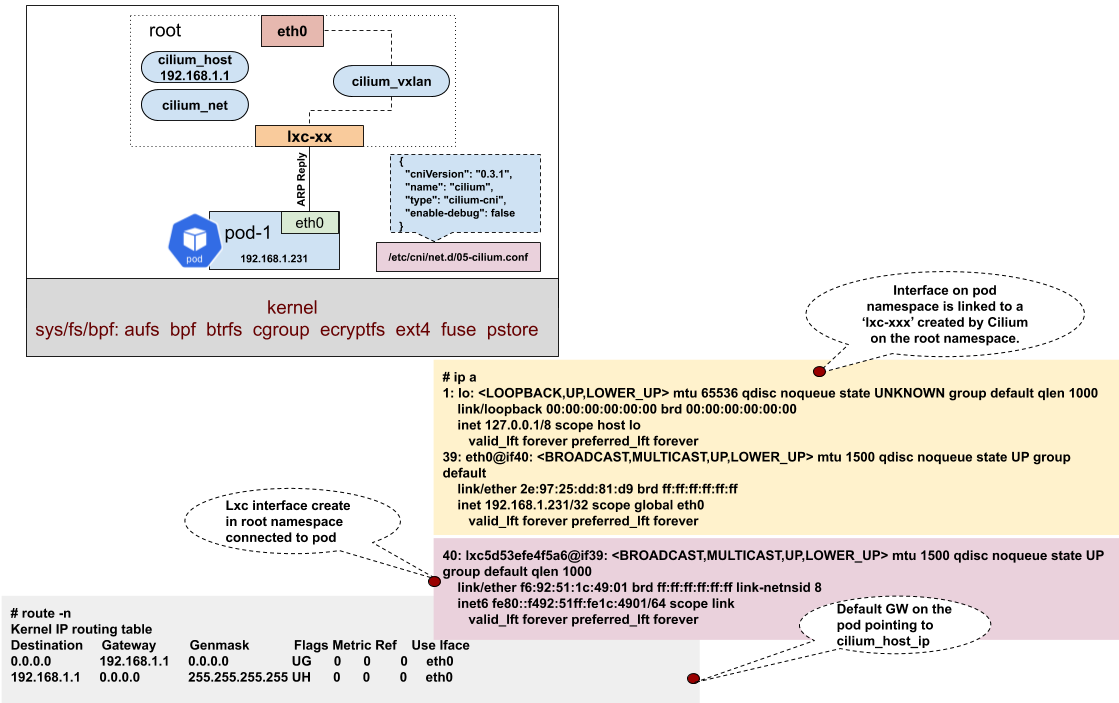

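At this point the packet leaves the Pod's network namespace through the veth pair and enters the Linux root namespace. [Note where the Dst_MAC comes from: the ARP reply is generated automatically by an eBPF program attached by the Cilium agent, and it returns the MAC address of the veth peer, which avoids ARP broadcasts in the virtual network.] The next question is how the packet reaches the peer Pod (Pod 2). The Dst_IP is that Pod's address: cni-same-7d599bc5d8-8xv54 1/1 Running 0 11m 10.244.0.228 dev1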

So we start, as usual, from the routing table:

[root@dev1 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.1 0.0.0.0 UG 0 0 0 ens33

10.0.0.126 0.0.0.0 255.255.255.255 UH 0 0 0 cilium_host

10.244.0.0 10.244.0.146 255.255.255.0 UG 0 0 0 cilium_host

10.244.0.146 0.0.0.0 255.255.255.255 UH 0 0 0 cilium_host

10.244.1.0 10.244.0.146 255.255.255.0 UG 0 0 0 cilium_host

10.244.2.0 10.244.0.146 255.255.255.0 UG 0 0 0 cilium_host

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 ens33

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

[root@dev1 ~]#

Looking at these routes, something does not seem to add up: every Pod route uses a seemingly unrelated outgoing interface, cilium_host. Yet when we run tcpdump -pne -i cilium_host during the ping, no packets show up at all.

What we do know is the host-side lxc interface that is the peer of the Pod's eth0:

[root@dev1 kubernetes]# tc filter show dev lxce8f4542fdaac ingress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 bpf_lxc.o:[from-container] direct-action not_in_hw tag a49dbfe948d9f3d4

[root@dev1 kubernetes]#

The hook loaded here is [from-container], and it is this program that is able to redirect packets.

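After the Pod sends traffic, it is handled by the eBPF program on the tc ingress hook of that Pod's host-side lxc interface. The eBPF program looks up the destination Pod, determines that it is on the same node, and uses redirect to bypass the kernel stack and hand the traffic straight to the destination Pod's lxc interface, from which it reaches the destination Pod.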
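To check which eBPF program sits on a given hook without reading the raw `tc` output, the object and section name can be pulled out of the filter line. This is a minimal sketch: `bpf_section` is a hypothetical name, and it assumes the output format shown above (`bpf_lxc.o:[from-container]`).

```shell
# Hypothetical helper: read `tc filter show dev <if> ingress|egress` output on
# stdin and print each attached BPF object and section as "object section".
bpf_section() {
  # Match tokens such as "bpf_lxc.o:[from-container]", then split them.
  grep -Eo '[A-Za-z0-9_]+\.o:\[[^]]+\]' | sed -E 's/^([^:]+):\[(.*)\]$/\1 \2/'
}
```

For example, `tc filter show dev lxce8f4542fdaac ingress | bpf_section` would reduce the output above to the object file and the section name.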

[One thing to be clear about here: how does this differ from the CNIs we studied earlier? In practice the main difference is iptables.]

[——————————————————————————————————————————————————]

[Cilium's logic: redirect to the peer's lxc interface, without going through iptables in the host NS]

[Calico's logic: plain routing, as if the two Pods sat behind router interfaces in different subnets, but the packets must be processed by iptables in the host NS]

[——————————————————————————————————————————————————]

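We verify this with the iptables TRACE target.

We have two environments: one running Cilium 1.10.6 and one running Calico 3.8.

Let's trace how iptables in the host NS handles the packets.

On the corresponding host, we look at the Cilium case first: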

modprobe nf_log_ipv4

iptables -t raw -I PREROUTING -p icmp -j TRACE

To delete this iptables rule:

iptables -t raw -D PREROUTING 1

Then watch the host log with tailf /var/log/messages

[root@dev1 kubernetes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-client-8497865645-j7dck 1/1 Running 0 11s 10.244.0.70 dev1

^C [root@dev1 ~]#
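Deleting by position (`-D PREROUTING 1`) removes whatever rule happens to be first at that moment. `iptables -D` also accepts the same rule specification that was used to insert the rule, which is a little safer when other rules may have been added in between. The sketch below only prints the commands so they can be reviewed before being run as root; `trace_rule` is a hypothetical helper, not part of iptables.

```shell
# Hypothetical helper: print the iptables command that adds or removes a
# TRACE rule for one protocol in raw/PREROUTING. It prints instead of running,
# so nothing changes until the output is executed as root.
trace_rule() {
  local action="$1" proto="$2" flag
  case "$action" in
    add) flag="-I" ;;
    del) flag="-D" ;;
    *) echo "usage: trace_rule add|del <proto>" >&2; return 2 ;;
  esac
  echo "iptables -t raw ${flag} PREROUTING -p ${proto} -j TRACE"
}
```

`trace_rule add icmp` prints the insert command used above, and `trace_rule del icmp` prints the matching delete by specification.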

The Calico case:

[root@k8s-1 ~]# kubectl get pods -o wide | grep k8s-2

cc 1/1 Running 0 44m 10.244.200.196 k8s-2

--- 10.244.200.195 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.488/0.488/0.488 ms

[root@k8s-1 ~]# kubectl exec -it cc -- ping -c 1 10.244.200.195

PING 10.244.200.195 (10.244.200.195): 56 data bytes

64 bytes from 10.244.200.195: seq=0 ttl=63 time=0.339 ms

--- 10.244.200.195 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.339/0.339/0.339 ms

Use the iptables TRACE target in this environment as well:

modprobe nf_log_ipv4

iptables -t raw -I PREROUTING -p icmp -j TRACE

To delete this iptables rule:

iptables -t raw -D PREROUTING 1

Then watch the host log with tailf /var/log/messages

Jan 18 20:19:22 k8s-2 kernel: TRACE: raw:PREROUTING:rule:2 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0

Jan 18 20:19:22 k8s-2 kernel: TRACE: raw:cali-PREROUTING:rule:1 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0

Jan 18 20:19:22 k8s-2 kernel: TRACE: raw:cali-PREROUTING:rule:2 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0

Jan 18 20:19:22 k8s-2 kernel: TRACE: raw:cali-PREROUTING:return:6 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0 MARK=0x40000

Jan 18 20:19:22 k8s-2 kernel: TRACE: raw:PREROUTING:policy:3 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0 MARK=0x40000

Jan 18 20:19:22 k8s-2 kernel: TRACE: mangle:PREROUTING:rule:1 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0 MARK=0x40000

Jan 18 20:19:22 k8s-2 kernel: TRACE: mangle:cali-PREROUTING:rule:3 IN=cali70648170f23 OUT= MAC=ee:ee:ee:ee:ee:ee:ee:93:0c:36:53:83:08:00 SRC=10.244.200.196 DST=10.244.200.195 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=43325 DF PROTO=ICMP TYPE=8 CODE=0 ID=5376 SEQ=0 MARK=0x40000

Here we can see that the packet is processed by iptables in the host NS. This is, of course, the most stable and most common way of handling packets on Linux.
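The TRACE lines are long, and the only part that changes from hop to hop is the `table:chain:type:rulenum` field right after `TRACE:`. A small filter that keeps just that field makes the path through the host's iptables easier to read. This is a sketch: `trace_path` is a hypothetical name, and it assumes the kernel log format shown above.

```shell
# Hypothetical helper: read kernel log lines on stdin and print the
# "table:chain:type:rulenum" field that follows "TRACE:", one per line.
# Lines without a TRACE: token are skipped.
trace_path() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "TRACE:") { print $(i + 1); break }
    }
  }'
}
```

For example, `grep TRACE /var/log/messages | trace_path` lists the chains a traced packet went through, in order.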

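From the packet capture we can see that, after leaving pod-A, the packet is handled by the from-container hook on pod-A's lxc interface, which redirects it directly to pod-B's lxc interface, from where it reaches pod-B's eth0.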

- [x] **2. Pod-to-pod communication across nodes:**

```properties

[root@dev1 kubernetes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-client-7ccd98bdb8-vbkq7 1/1 Running 0 64m 10.244.0.31 dev1 <none> <none>

cni-server-7559c58f9-cp8fh 1/1 Running 1 24h 10.244.2.47 dev3 <none> <none>

[root@dev1 kubernetes]#
```

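We again use packet capture and iptables TRACE:

There are two levels to analyze here:

1. First, the from-container hook on POD-A's lxc interface redirects the packet to cilium_vxlan for VXLAN encapsulation. We capture on POD-A's lxc interface to confirm that the packet passes through it:

Configure TRACE:

iptables -t raw -I PREROUTING -p icmp -j TRACE

iptables -t raw -I PREROUTING -p udp --dport 8472 -j TRACE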

[root@dev1 ~]# tcpdump -pne -i lxce8f4542fdaac

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on lxce8f4542fdaac, link-type EN10MB (Ethernet), capture size 262144 bytes

21:24:51.811033 ae:8b:3c:e0:79:c4 > 2e:0e:ba:44:5d:22, ethertype IPv4 (0x0800), length 98: 10.244.0.31 > 10.244.2.47: ICMP echo request, id 17408, seq 0, length 64

21:24:51.811857 2e:0e:ba:44:5d:22 > ae:8b:3c:e0:79:c4, ethertype IPv4 (0x0800), length 98: 10.244.2.47 > 10.244.0.31: ICMP echo reply, id 17408, seq 0, length 64

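If we now grep for icmp in the output of tailf /var/log/messages, nothing shows up, which means iptables did not process this ICMP packet.

Once the packet reaches the cilium_vxlan interface it has to be VXLAN-encapsulated, which should be familiar by now. The encapsulation is performed by the kernel's vxlan module.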
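The header sizes make it possible to predict what the encapsulated packet looks like on the wire. VXLAN wraps the whole inner Ethernet frame in a VXLAN header (8 bytes), a UDP header (8 bytes) and an outer IPv4 header (20 bytes, assuming no IP options). A sketch of the arithmetic, with `vxlan_lengths` as a hypothetical helper name:

```shell
# Hypothetical helper: given the inner Ethernet frame length in bytes,
# print the UDP length and the outer IPv4 total length after VXLAN
# encapsulation. Assumes an outer IPv4 header without options.
vxlan_lengths() {
  local inner_frame="$1"
  local vxlan_hdr=8 udp_hdr=8 ipv4_hdr=20
  local udp_len=$(( inner_frame + vxlan_hdr + udp_hdr ))
  local ip_len=$(( udp_len + ipv4_hdr ))
  echo "udp_len=${udp_len} ip_len=${ip_len}"
}
```

For the 98-byte frame that tcpdump reports on the lxc interface, `vxlan_lengths 98` prints `udp_len=114 ip_len=134`, which agrees with the `LEN=134 ... LEN=114` fields in the TRACE output for UDP port 8472 later in this section.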

So how is the encapsulated packet handled next?

Again there are two aspects: 1. the hooks on cilium_vxlan, and 2. how iptables in the host NS handles the packet.

1. cilium_vxlan hooks:

[root@dev1 ~]# tc filter show dev cilium_vxlan ingress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 bpf_overlay.o:[from-overlay] direct-action not_in_hw tag d69b69b1e9fcd92c

[root@dev1 ~]# tc filter show dev cilium_vxlan egress [this is the hook that applies here]

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 bpf_overlay.o:[to-overlay] direct-action not_in_hw tag 19425a935f3c7100

[root@dev1 ~]#

Nothing special actually happens here. Next, the encapsulated packet goes through the route lookup.

During the route lookup we need to check whether iptables in the host NS processes the packet:

tailf /var/log/messages:

Local end:

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.2.31 netmask 255.255.255.0 broadcast 192.168.2.255

inet6 fe80::20c:29ff:fe34:a373 prefixlen 64 scopeid 0x20<link>

ether [00:0c:29:34:a3:73] (this is the MAC address) txqueuelen 1000 (Ethernet)

RX packets 276868 bytes 33366183 (31.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 291234 bytes 81055632 (77.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Remote end:

[root@dev3 ~]# ifconfig ens33

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.2.33 netmask 255.255.255.0 broadcast 192.168.2.255

inet6 fe80::20c:29ff:fe9f:c816 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:9f:c8:16 txqueuelen 1000 (Ethernet)

RX packets 132454 bytes 36388582 (34.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 117747 bytes 14214296 (13.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@dev3 ~]#

2022-01-18T21:36:41.297783+08:00 dev1 kernel: TRACE: raw:PREROUTING:rule:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297863+08:00 dev1 kernel: TRACE: raw:CILIUM_PRE_raw:return:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: raw:PREROUTING:rule:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: raw:CILIUM_PRE_raw:return:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297882+08:00 dev1 kernel: TRACE: raw:PREROUTING:policy:4 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: raw:PREROUTING:policy:4 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297902+08:00 dev1 kernel: TRACE: mangle:PREROUTING:rule:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: mangle:PREROUTING:rule:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297924+08:00 dev1 kernel: TRACE: mangle:CILIUM_PRE_mangle:return:4 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297947+08:00 dev1 kernel: TRACE: mangle:PREROUTING:policy:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: mangle:CILIUM_PRE_mangle:return:4 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: mangle:PREROUTING:policy:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297972+08:00 dev1 kernel: TRACE: mangle:INPUT:policy:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: mangle:INPUT:policy:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.297985+08:00 dev1 kernel: TRACE: filter:INPUT:rule:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.298087+08:00 dev1 kernel: TRACE: filter:CILIUM_INPUT:return:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.298110+08:00 dev1 kernel: TRACE: filter:INPUT:rule:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.298125+08:00 dev1 kernel: TRACE: filter:KUBE-FIREWALL:return:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: filter:INPUT:rule:1 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: filter:CILIUM_INPUT:return:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: filter:INPUT:rule:2 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: filter:KUBE-FIREWALL:return:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

2022-01-18T21:36:41.298140+08:00 dev1 kernel: TRACE: filter:INPUT:policy:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

Jan 18 21:36:41 dev1 kernel: TRACE: filter:INPUT:policy:3 IN=ens33 OUT= MAC=00:0c:29:34:a3:73:00:0c:29:9f:c8:16:08:00 SRC=192.168.2.33 DST=192.168.2.31 LEN=134 TOS=0x00 PREC=0x00 TTL=64 ID=35501 PROTO=UDP SPT=39902 DPT=8472 LEN=114

^C

[root@dev1 ~]#

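What this shows:

Our VXLAN-encapsulated packets are processed by iptables in the host NS. We will also compare this behaviour with native routing mode.

This is an important point; for details see https://github.com/cilium/cilium/issues/16280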