When accessing a Kubernetes service from outside via NodePort, ExternalIPs or LoadBalancer, a Kubernetes worker node might redirect the request to another node. This happens when a service endpoint runs on a different node than the one the request was sent to. Before the redirect, the request is SNAT'd, which means that the backend won't see the source IP address of the client. Also, the reply is sent back to the client through the initial node, which introduces additional latency.

To avoid that, Kubernetes offers externalTrafficPolicy=Local, which preserves the client source IP address by dropping a request to a service if the receiving node does not run any service endpoint. However, this complicates load-balancer implementations and can lead to uneven load balancing.

To address the problem, we have implemented Direct Server Return for Kubernetes services with the help of eBPF. This not only preserves the client source IP address, but also allows us to avoid an extra hop when sending a reply back to the client, as shown in the figures below:

![20221015-Cilium DSR[Direct Server Return]-[N-S Traffic] - Figure 1](/uploads/projects/wei.luo@cilium/e2e7f0c9920462f059046a2945a06f7a.png)

Client_IP: 192.168.2.4

![20221015-Cilium DSR[Direct Server Return]-[N-S Traffic] - Figure 2](/uploads/projects/wei.luo@cilium/b2a389e35e5c9fa2a797f6eee4265614.png)
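The three behaviours described above can be summarised in a small model. This is an illustrative sketch only, not Cilium's datapath: `Mode`, `Result` and `forward` are hypothetical names, the addresses are those of the lab setup used in this article, and the model covers only the case the text discusses, where the backend runs on a different node than the one that received the request.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    SNAT = "snat"    # default: ingress node SNATs, then redirects
    LOCAL = "local"  # externalTrafficPolicy=Local
    DSR = "dsr"      # Direct Server Return


@dataclass
class Result:
    delivered: bool
    source_seen_by_backend: Optional[str]  # source IP the backend observes
    reply_sent_by: Optional[str]           # node that sends the reply to the client


def forward(mode: Mode, client: str, ingress_node: str, backend_node: str) -> Result:
    """Model one request that arrives at ingress_node for a backend on backend_node."""
    if ingress_node == backend_node:
        raise ValueError("local-backend case is not modelled in this sketch")
    if mode is Mode.SNAT:
        # The backend sees an address of the ingress node; the reply goes back through it.
        return Result(True, ingress_node, ingress_node)
    if mode is Mode.LOCAL:
        # No local endpoint on the receiving node: the request is dropped.
        return Result(False, None, None)
    # DSR: client IP is preserved and the backend node replies directly.
    return Result(True, client, backend_node)


if __name__ == "__main__":
    for mode in Mode:
        print(mode.value, forward(mode, "192.168.2.4", "192.168.2.61", "192.168.2.63"))
```

Only the DSR row combines a preserved client address with a reply that skips the ingress node, which is the property verified with packet captures later on.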

- [x] **1. Install Cilium With DSR Mode**

Install guide: https://docs.cilium.io/en/v1.11/gettingstarted/kubeproxy-free/#direct-server-return-dsr

```properties
helm template cilium cilium/cilium --version 1.11.1 \
  --namespace kube-system \
  --set tunnel=disabled \
  --set autoDirectNodeRoutes=true \
  --set kubeProxyReplacement=strict \
  --set loadBalancer.mode=dsr \
  --set k8sServiceHost=192.168.2.61 \
  --set k8sServicePort=6443 > dsr.yaml
```

Modify the following parameters in dsr.yaml:

```properties
debug-verbose: "datapath"
ipv4-native-routing-cidr: 10.0.0.0/8
debug: "true"
monitor-aggregation: "none"
enable-bpf-masquerade: "true"
enable-endpoint-routes: "false"
```

Create the demo:

```properties
kubectl create deploy dsr --image=burlyluo/nettoolbox
kubectl expose deployment dsr --port=80 --target-port=80 --type=NodePort
```
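Before applying the manifest, it can help to confirm that the edited parameters really ended up in dsr.yaml. The following is a minimal sketch, assuming the settings appear as plain `key: value` lines in the rendered file; `check_settings` is a hypothetical helper, and it deliberately uses simple line matching instead of a YAML parser (if a key occurs more than once, the last occurrence wins).

```python
# Values listed in the "Modify the following parameters" step above.
EXPECTED = {
    "debug-verbose": "datapath",
    "ipv4-native-routing-cidr": "10.0.0.0/8",
    "debug": "true",
    "monitor-aggregation": "none",
    "enable-bpf-masquerade": "true",
    "enable-endpoint-routes": "false",
}


def check_settings(text, expected=EXPECTED):
    """Return {key: found_value} for every expected key that is missing or differs."""
    found = {}
    for line in text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key in expected:
            # Drop surrounding whitespace and optional double quotes.
            found[key] = value.strip().strip('"')
    return {k: found.get(k) for k in expected if found.get(k) != expected[k]}


if __name__ == "__main__":
    # Inline sample instead of reading dsr.yaml, so the sketch runs anywhere.
    sample = """
      debug-verbose: "datapath"
      ipv4-native-routing-cidr: 10.0.0.0/8
      debug: "true"
      monitor-aggregation: "medium"
      enable-bpf-masquerade: "true"
      enable-endpoint-routes: "false"
    """
    print(check_settings(sample))
```

An empty dictionary means every listed parameter has the intended value; to check the real file, pass `open("dsr.yaml").read()` instead of the sample.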

- [x] **2. DSR DataPath Verify**

```properties
Client_IP: 192.168.2.4

root@bpf1:~# kubectl get nodes -o wide
NAME   STATUS   ROLES                  AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION          CONTAINER-RUNTIME
bpf1   Ready    control-plane,master   24h   v1.23.2   192.168.2.61   <none>        Ubuntu 20.04.3 LTS   5.11.0-051100-generic   docker://20.10.12
bpf2   Ready    <none>                 24h   v1.23.2   192.168.2.62   <none>        Ubuntu 20.04.3 LTS   5.11.0-051100-generic   docker://20.10.12
bpf3   Ready    <none>                 24h   v1.23.2   192.168.2.63   <none>        Ubuntu 20.04.3 LTS   5.11.0-051100-generic   docker://20.10.12
root@bpf1:~#
root@bpf1:~# kubectl get pods -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP          NODE   NOMINATED NODE   READINESS GATES
dsr-57565d4d4-mhf5l   1/1     Running   0          35m   10.0.1.23   bpf3   <none>           <none>
root@bpf1:~# kubectl get svc -o wide dsr
NAME   TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE   SELECTOR
dsr    NodePort   10.106.245.185   <none>        80:31406/TCP   33m   app=dsr
root@bpf1:~#

# The client (Client_IP) is a Windows machine; from it, run:
curl 192.168.2.61:31406
```

- [x] 1.1: Packet capture on Windows:

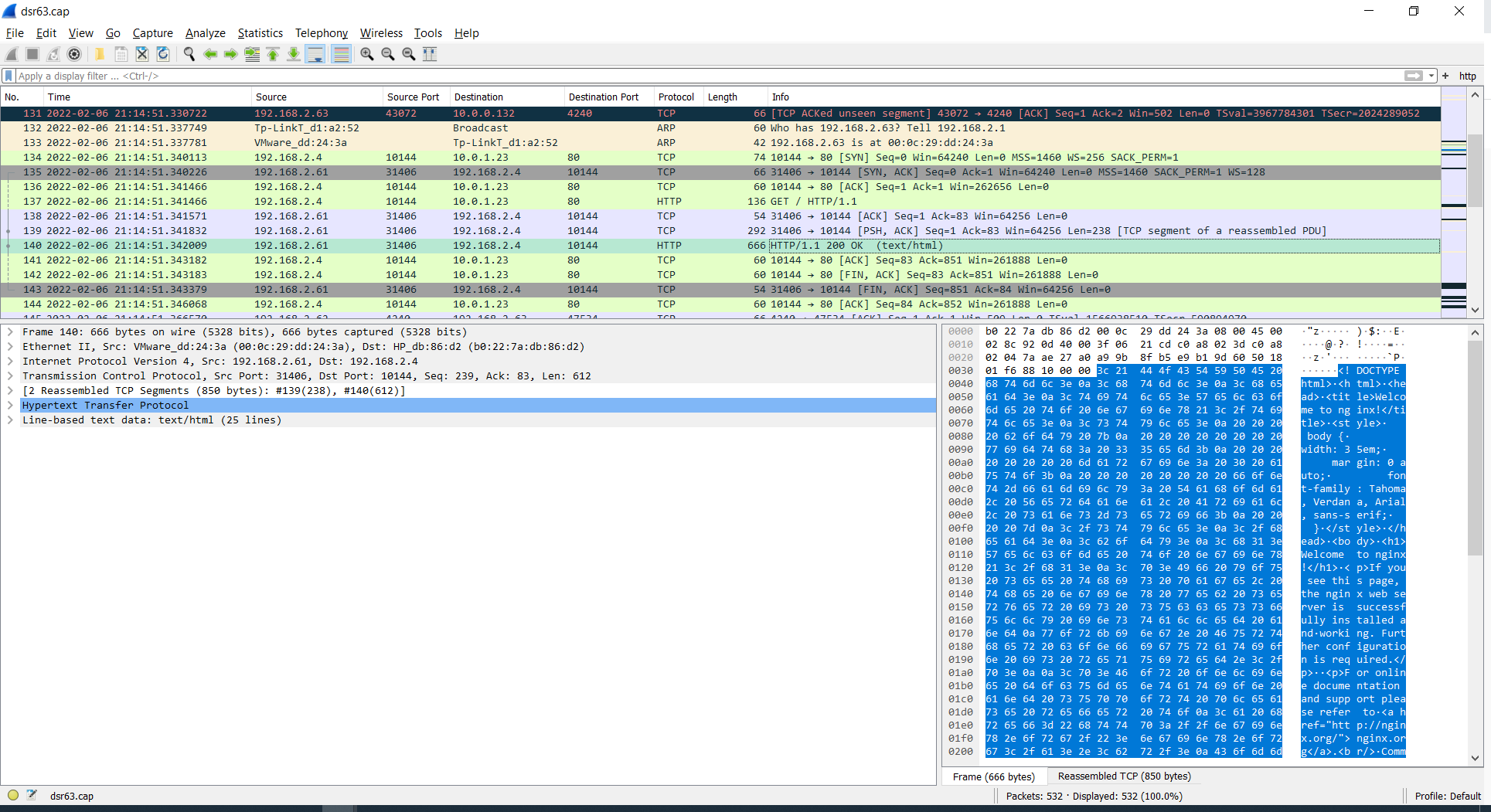

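1. Capture taken on the Windows client (192.168.2.4) with Wireshark, using the filter `tcp.stream eq 12`:

| No. | Time | Source | Src port | Destination | Dst port | Protocol | Length | Info |
|---|---|---|---|---|---|---|---|---|
| 179 | 2022-02-06 21:14:51.308437 | 192.168.2.4 | 10144 | 192.168.2.61 | 31406 | HTTP | 136 | GET / HTTP/1.1 |
| 182 | 2022-02-06 21:14:51.310085 | 192.168.2.61 | 31406 | 192.168.2.4 | 10144 | HTTP | 666 | HTTP/1.1 200 OK (text/html) |

From the client's point of view, both the request and the reply are exchanged with 192.168.2.61:31406.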

- [x] 1.2: Packet capture on 192.168.2.61:

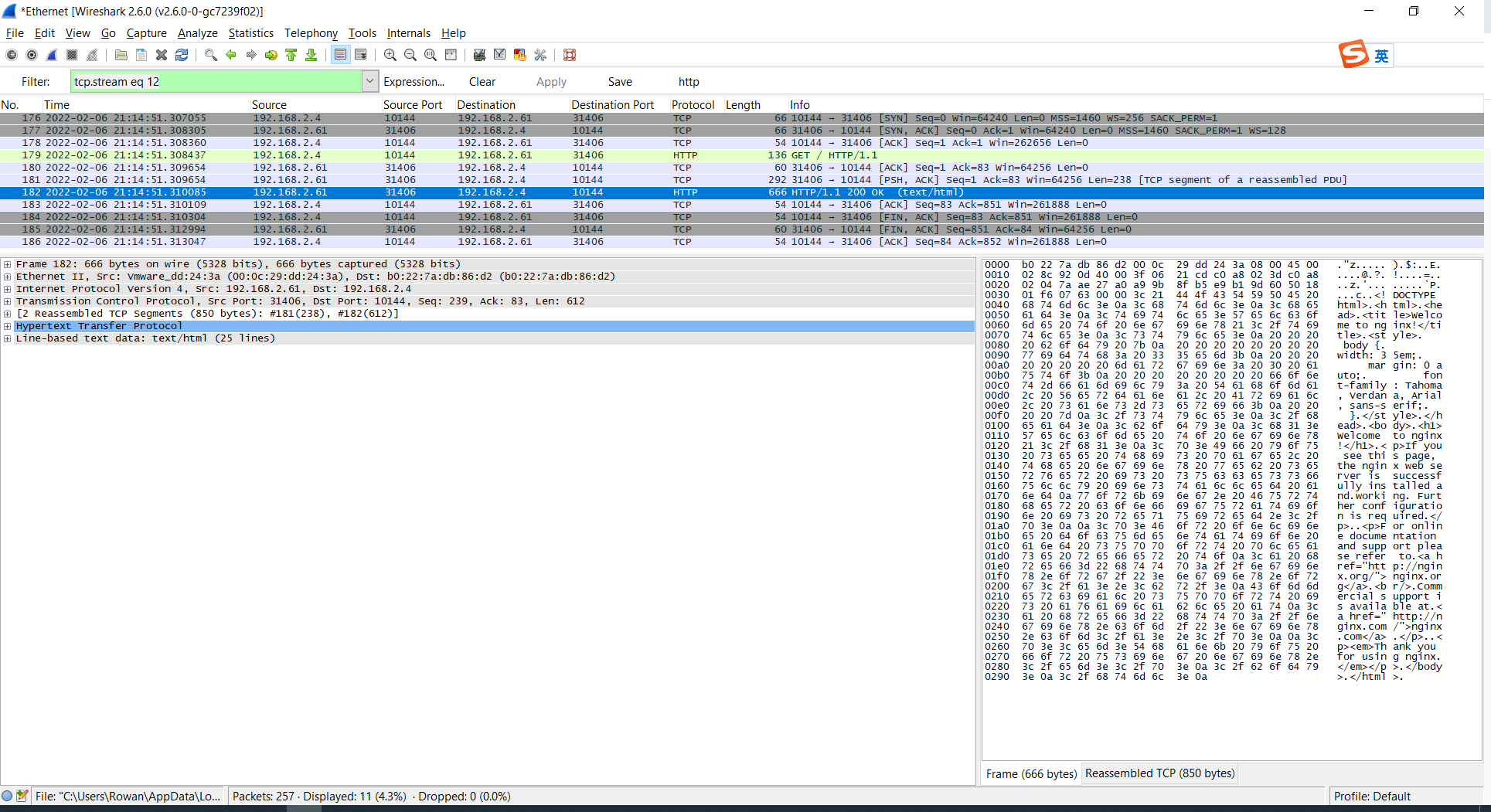

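| No. | Time | Source | Src port | Destination | Dst port | Protocol | Length | Info |
|---|---|---|---|---|---|---|---|---|
| 158 | 2022-02-06 21:14:51.341311 | 192.168.2.4 | 10144 | 192.168.2.61 | 31406 | HTTP | 136 | GET / HTTP/1.1 |
| 159 | 2022-02-06 21:14:51.341431 | 192.168.2.4 | 10144 | 10.0.1.23 | 80 | HTTP | 136 | GET / HTTP/1.1 |

Packet 158 is the HTTP GET from the client. Packet 159 is the same request after 192.168.2.61 forwarded it to the service's backend pod. Note that no 200 OK appears in this capture, which is the important point.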

- [x] 1.3: Packet capture on 192.168.2.63:

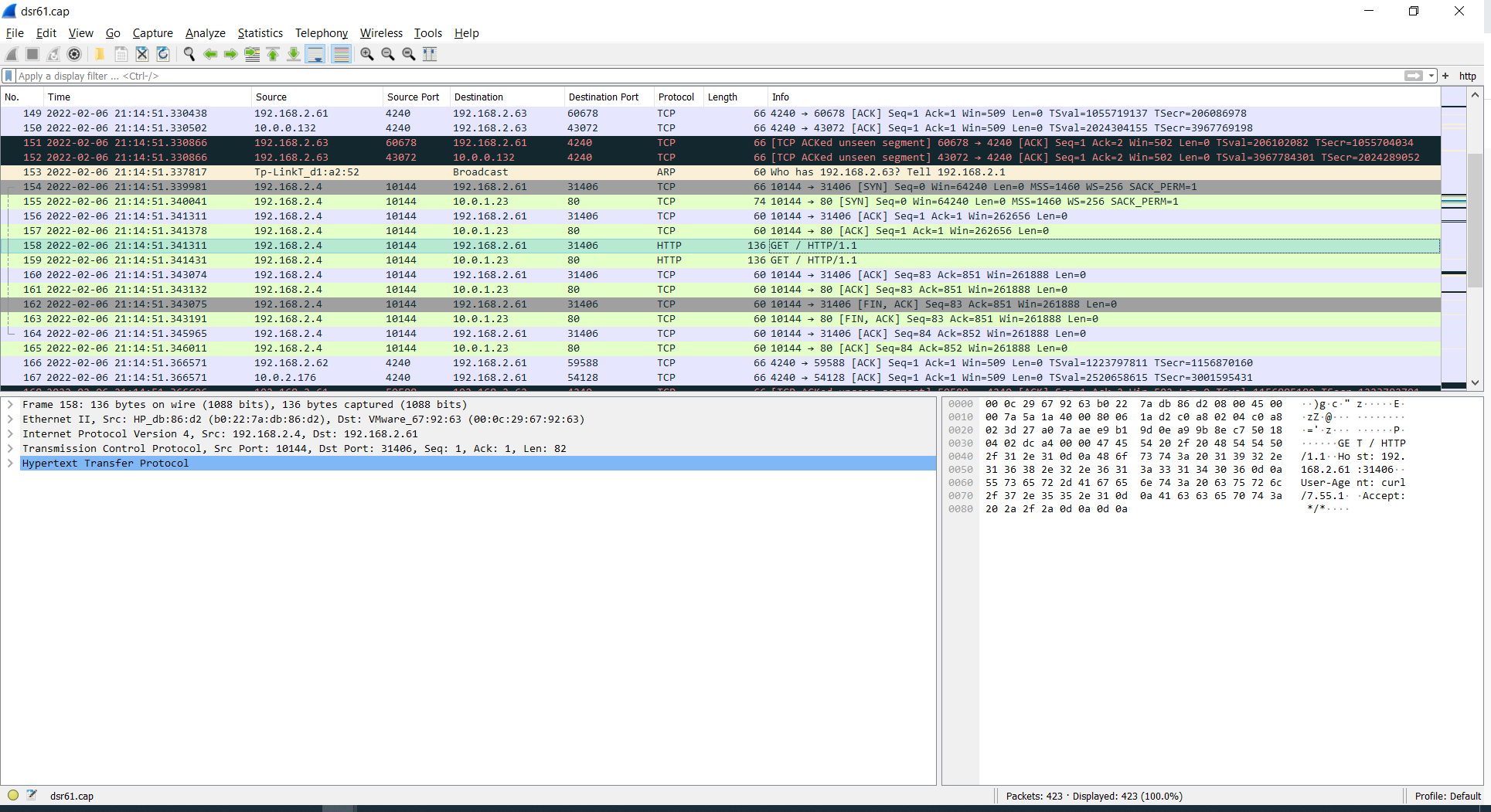

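| No. | Time | Source | Src port | Destination | Dst port | Protocol | Length | Info |
|---|---|---|---|---|---|---|---|---|
| 137 | 2022-02-06 21:14:51.341466 | 192.168.2.4 | 10144 | 10.0.1.23 | 80 | HTTP | 136 | GET / HTTP/1.1 |
| 140 | 2022-02-06 21:14:51.342009 | 192.168.2.61 | 31406 | 192.168.2.4 | 10144 | HTTP | 666 | HTTP/1.1 200 OK (text/html) |

Packet 137 is the request received from 192.168.2.61. Packet 140 is the reply sent from 192.168.2.63. Note that although it leaves from 192.168.2.63, it is constructed with S_IP 192.168.2.61 and Dst_IP 192.168.2.4.

This is not what we would usually expect, but it becomes clearer with a deeper understanding of how network interfaces work, for example how VLANs are implemented.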
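The captures on the two nodes can be cross-checked programmatically. The sketch below hard-codes the packets from the captures on 192.168.2.61 and 192.168.2.63 as address/port tuples and tests for the DSR signature: the forwarded request keeps the client address on both nodes, the ingress node never sees the reply, and the backend node emits the reply with the service address as its source. `Packet` and `looks_like_dsr` are hypothetical names for this illustration; they are not part of Cilium or Wireshark.

```python
from typing import List, NamedTuple


class Packet(NamedTuple):
    src: str
    sport: int
    dst: str
    dport: int


CLIENT = ("192.168.2.4", 10144)
SERVICE = ("192.168.2.61", 31406)  # NodePort on the node the client contacted
BACKEND = ("10.0.1.23", 80)        # backend pod running on bpf3 (192.168.2.63)

# Packets 158 and 159 from the capture on 192.168.2.61.
on_ingress_node = [
    Packet(*CLIENT, *SERVICE),
    Packet(*CLIENT, *BACKEND),
]

# Packets 137 and 140 from the capture on 192.168.2.63.
on_backend_node = [
    Packet(*CLIENT, *BACKEND),
    Packet(*SERVICE, *CLIENT),
]


def looks_like_dsr(ingress: List[Packet], backend: List[Packet]) -> bool:
    """True if the captures show a preserved client IP and a direct reply."""
    forwarded = Packet(*CLIENT, *BACKEND)
    reply = Packet(*SERVICE, *CLIENT)
    return (
        forwarded in ingress and forwarded in backend  # no SNAT on the redirect
        and reply not in ingress                       # reply bypasses the ingress node
        and reply in backend                           # reply leaves from the backend node
    )


if __name__ == "__main__":
    print(looks_like_dsr(on_ingress_node, on_backend_node))  # prints True
```

With SNAT instead of DSR, the forwarded request would carry an address of the ingress node as its source and the reply would show up in the capture on 192.168.2.61, so the check would fail.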