```shell
kubeadm init --kubernetes-version=v1.20.5 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --skip-phases=addon/kube-proxy --ignore-preflight-errors=Swap
```

```shell
[root@dev3 ~]# kubectl -nkube-system exec -it cilium-ftkmw bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@dev1:/home/cilium# cilium status
KVStore: Ok Disabled
Kubernetes: Ok 1.20 (v1.20.5) [linux/amd64]
Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1beta1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]
KubeProxyReplacement: Strict [ens33 192.168.2.31 (Direct Routing)]
Host firewall: Disabled
Cilium: Ok 1.11.0 (v1.11.0-27e0848)
NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory
Cilium health daemon: Ok
IPAM: IPv4: 3/254 allocated from 10.0.0.0/24,
BandwidthManager: Disabled
[Host Routing: Legacy]
Masquerading: IPTables [IPv4: Enabled, IPv6: Disabled]
Controller Status: 24/24 healthy
Proxy Status: OK, ip 10.0.0.126, 0 redirects active on ports 10000-20000
Hubble: Ok Current/Max Flows: 4095/4095 (100.00%), Flows/s: 0.78 Metrics: Disabled
Encryption: Disabled
Cluster health: 3/3 reachable (2022-01-15T12:05:05Z)
root@dev1:/home/cilium#
```

**[Host Routing: Legacy] vs. [Host Routing: BPF]**

```shell
[root@dev1 kubernetes]# kubectl -nkube-system exec -it cilium-v9n66 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@dev3:/home/cilium# cilium status
KVStore: Ok Disabled
Kubernetes: Ok 1.20 (v1.20.5) [linux/amd64]
Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1beta1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]
KubeProxyReplacement: Strict [ens33 192.168.2.33 (Direct Routing)]
Cilium: Ok 1.10.6 (v1.10.6-17d3d15)
NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory
Cilium health daemon: Ok
IPAM: IPv4: 4/254 allocated from 10.244.2.0/24,
BandwidthManager: Disabled
[Host Routing: BPF]
Masquerading: BPF [ens33] 10.0.0.0/16 [IPv4: Enabled, IPv6: Disabled]
Controller Status: 29/29 healthy
Proxy Status: OK, ip 10.244.2.85, 0 redirects active on ports 10000-20000
Hubble: Ok Current/Max Flows: 4095/4095 (100.00%), Flows/s: 2.66 Metrics: Disabled
Encryption: Disabled
Cluster health: 3/3 reachable (2022-01-16T09:00:29Z)
root@dev3:/home/cilium#
```

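- [x] **1.eBPF host-Routing Legacy**

With legacy eBPF host routing, packets follow the traditional encapsulation path. With VXLAN as the backend, as in this setup, the flow is essentially the same as in the Calico and Flannel modes we analyzed earlier. A detailed analysis still has to tell them apart, though, because their implementations differ somewhat. [Whatever the form, a result-oriented view is enough: every outgoing packet is VXLAN-encapsulated, so what we need to know is the VTEP IP address, the MAC address, and the VNI of the tunnel.] Different CNIs implement this in different ways; the real difference lies in how a packet is encapsulated into VXLAN, and how a VXLAN packet arriving from the peer is decapsulated and forwarded to its destination. Once that is clear, any of these variants is easy to follow; otherwise many details will be hard to understand.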

This is only a summary; we will analyze each variant in separate articles later.
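Since the takeaway above is that every outgoing packet carries a VXLAN header, here is a minimal C sketch of that 8-byte header as laid out in RFC 7348: a flags byte with the I bit (0x08) set, three reserved bytes, the 24-bit VNI in bytes 4 to 6, and a final reserved byte. The struct `vxlan_tunnel` and the function names are hypothetical, chosen only to mirror the three parameters listed above (VTEP IP, MAC, VNI); this is not Cilium, Calico, or Flannel code.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define VXLAN_HDR_LEN  8
#define VXLAN_FLAG_VNI 0x08  /* "I" flag: the VNI field is valid */

/* Hypothetical: the per-tunnel parameters the text says we need to know. */
struct vxlan_tunnel {
    uint32_t remote_vtep_ip;  /* outer destination IP (peer node), host byte order */
    uint8_t  remote_mac[6];   /* outer destination MAC (next hop) */
    uint32_t vni;             /* 24-bit VXLAN Network Identifier */
};

/* Writes an RFC 7348 VXLAN header carrying `vni` into `hdr`. */
void vxlan_build_header(uint8_t hdr[VXLAN_HDR_LEN], uint32_t vni)
{
    assert(vni <= 0xFFFFFF);          /* the VNI is only 24 bits wide */
    memset(hdr, 0, VXLAN_HDR_LEN);    /* reserved fields must be zero */
    hdr[0] = VXLAN_FLAG_VNI;
    hdr[4] = (uint8_t)(vni >> 16);    /* VNI in network byte order */
    hdr[5] = (uint8_t)(vni >> 8);
    hdr[6] = (uint8_t)vni;
}

/* Returns the VNI from a VXLAN header, or -1 if the I flag is not set. */
int32_t vxlan_parse_vni(const uint8_t hdr[VXLAN_HDR_LEN])
{
    if ((hdr[0] & VXLAN_FLAG_VNI) == 0)
        return -1;
    return (int32_t)(((uint32_t)hdr[4] << 16) | ((uint32_t)hdr[5] << 8) | hdr[6]);
}
```

For example, `vxlan_build_header(h, 0x123456)` produces the bytes `08 00 00 00 12 34 56 00`, and `vxlan_parse_vni(h)` returns `0x123456`. The outer Ethernet/IP/UDP headers, which would use the VTEP IP and MAC, are deliberately left out to keep the sketch short.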

- [x] **2.eBPF host-Routing BPF**


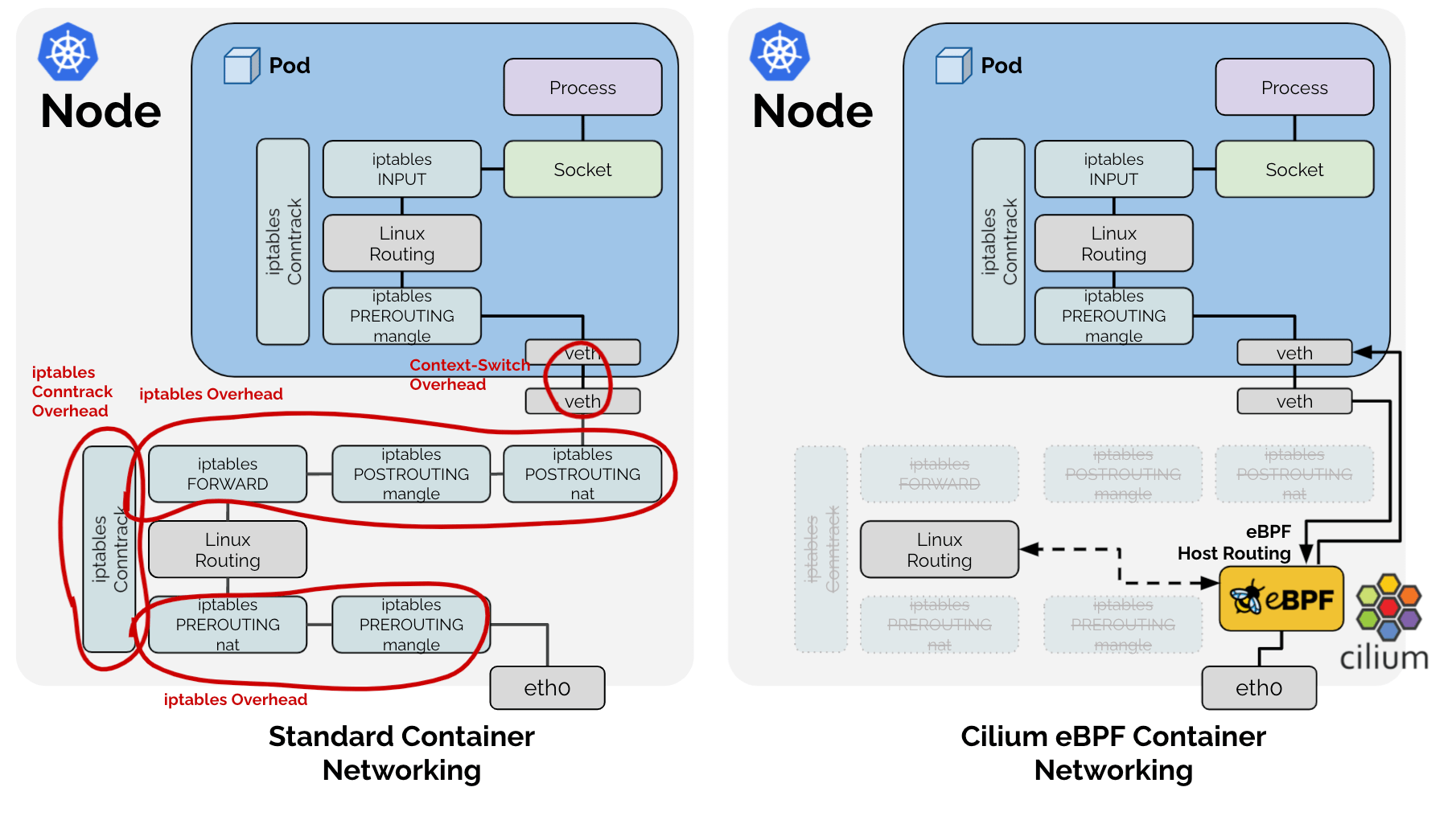

Because this mode is backed by eBPF host routing, its datapath differs from the legacy one. The main change is the use of two BPF helper functions:

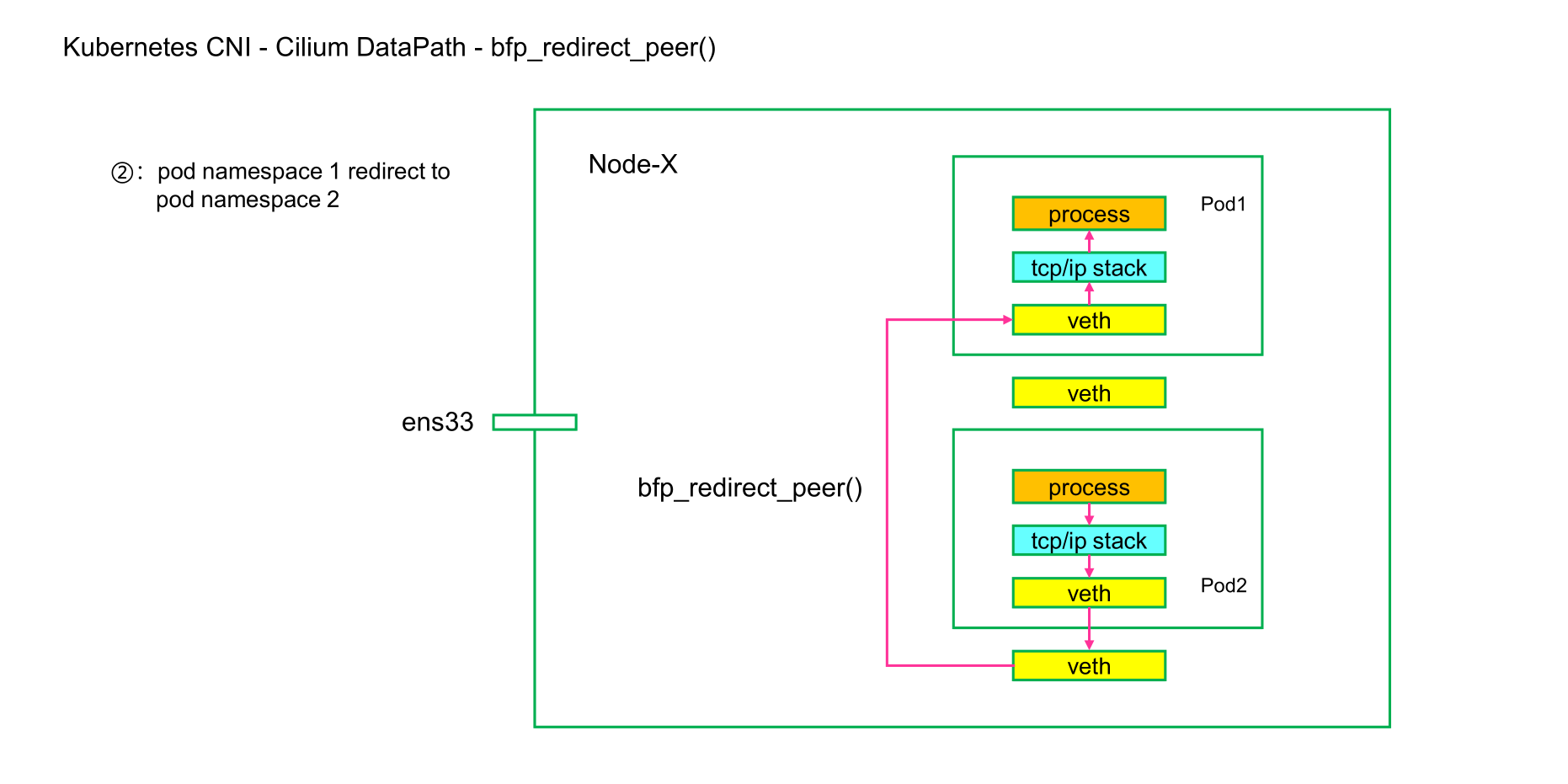

[bpf_redirect_peer()]

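In one sentence:

When Pods on the same node communicate, a packet leaving Pod-A through the lxc end of its veth pair is delivered directly to the eth0 end of Pod-B's veth pair, bypassing the lxc end of Pod-B's veth pair.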
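The same-node case can be pictured with a toy model in C that only records which devices a packet passes through. Device names such as `host:lxcB` and the `enum host_routing` values are hypothetical, and this is not Cilium source. As far as we understand it, in legacy mode Cilium's local delivery uses `bpf_redirect()` towards Pod-B's lxc device, whereas with BPF host routing it uses `bpf_redirect_peer()` (Linux 5.10+, tc ingress hook, veth peers in another netns), which lands the packet directly on the peer of that lxc device, i.e. Pod-B's eth0.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy model, not Cilium code: devices a same-node Pod-A -> Pod-B packet crosses. */
enum host_routing { HOST_ROUTING_LEGACY, HOST_ROUTING_BPF };

/* Fills `hops` with device names in traversal order; returns how many were written. */
size_t same_node_path(enum host_routing mode, const char *hops[], size_t max)
{
    size_t n = 0;
    assert(max >= 4);
    hops[n++] = "podA:eth0";   /* packet leaves Pod-A */
    hops[n++] = "host:lxcA";   /* from-container runs at tc ingress of lxcA */
    if (mode == HOST_ROUTING_LEGACY) {
        /* bpf_redirect() to lxcB: the packet egresses lxcB and then crosses the veth */
        hops[n++] = "host:lxcB";
    }
    /* Both modes end on Pod-B's eth0; in BPF mode bpf_redirect_peer() jumps here directly. */
    hops[n++] = "podB:eth0";
    return n;
}

/* Returns 1 if `dev` appears among the first `n` hops, 0 otherwise. */
int path_contains(const char *hops[], size_t n, const char *dev)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(hops[i], dev) == 0)
            return 1;
    return 0;
}
```

Calling `same_node_path(HOST_ROUTING_LEGACY, ...)` yields four hops including `host:lxcB`, while `HOST_ROUTING_BPF` yields three; that missing hop is exactly the lxc device the sentence above says is bypassed.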

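[bpf_redirect_neigh()]

In one sentence:

When Pods on different nodes communicate, a packet leaving Pod-A through the lxc end of its veth pair is handled by from-container, the program attached in the ingress direction of that lxc device (VXLAN encapsulation or native routing). It then tail-calls into the VXLAN handling, goes through bpf_redirect_neigh() to our physical NIC, and travels to the node where Pod-B runs, where the reverse processing takes place. Note the order: from-container ---> VXLAN encapsulation ---> bpf_redirect_neigh().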
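The cross-node order from-container ---> VXLAN encapsulation ---> bpf_redirect_neigh() can likewise be sketched as a toy C pipeline. The tunnel table (pod CIDR to node IP, similar in spirit to what `cilium bpf tunnel list` shows) and the neighbor table are hypothetical in-memory arrays, MACs are reduced to their last byte, and the nodes are assumed to share one L2 segment so the next hop is the remote node itself. The addresses reuse dev3's pod CIDR 10.244.2.0/24 and node IP 192.168.2.33 from the status output purely as sample data. The real `bpf_redirect_neigh()` asks the kernel's neighbor subsystem to fill in the L2 header and triggers resolution when no entry exists; this sketch simply fails instead.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define IPV4(a, b, c, d) \
    (((uint32_t)(a) << 24) | ((uint32_t)(b) << 16) | ((uint32_t)(c) << 8) | (uint32_t)(d))

/* Hypothetical tunnel map entry: remote pod CIDR -> remote node (VTEP) IP. */
struct tunnel_entry { uint32_t prefix; uint8_t plen; uint32_t node_ip; };
/* Hypothetical neighbor entry: next-hop IP -> MAC (last byte only, for brevity). */
struct neigh_entry { uint32_t ip; uint8_t mac_last; };

/* Minimal packet state carried through the pipeline. */
struct pkt {
    uint32_t inner_dst;  /* Pod-B's IP */
    uint32_t outer_dst;  /* remote VTEP IP, set by encapsulation */
    int encapsulated;
    int l2_resolved;     /* set once the next-hop MAC is known */
    uint8_t dmac_last;
};

static uint32_t prefix_mask(uint8_t plen)
{
    return plen == 0 ? 0 : 0xFFFFFFFFu << (32 - plen);
}

/* Steps 1-2: from-container finds Pod-B's node and wraps the packet in VXLAN. */
int encap_vxlan(struct pkt *p, const struct tunnel_entry *tbl, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if ((p->inner_dst & prefix_mask(tbl[i].plen)) == tbl[i].prefix) {
            p->outer_dst = tbl[i].node_ip;
            p->encapsulated = 1;
            return 0;
        }
    }
    return -1;  /* no tunnel endpoint known for this destination */
}

/* Step 3: mimic bpf_redirect_neigh() by resolving the next hop's MAC before transmit. */
int redirect_neigh(struct pkt *p, const struct neigh_entry *tbl, size_t n)
{
    assert(p->encapsulated);
    for (size_t i = 0; i < n; i++) {
        if (tbl[i].ip == p->outer_dst) {
            p->dmac_last = tbl[i].mac_last;
            p->l2_resolved = 1;
            return 0;
        }
    }
    return -1;  /* the real helper would trigger neighbor resolution instead */
}
```

A packet for 10.244.2.10 gets outer destination 192.168.2.33 from `encap_vxlan()` and then its next-hop MAC from `redirect_neigh()`; calling the two steps in the other order trips the assertion, which mirrors the ordering the text stresses.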

The two figures below illustrate what each of these functions does:

bpf_redirect_peer():

bpf_redirect_neigh():